前言:

现今,Oracle数据迁移MySQL的需求已经越来越普遍,主要的迁移场景大致可以分为三类,第一类是涉及小表以及少量表的一次性迁移,无需进行增量同步,第二类是涉及大表以及多表的一次性迁移,第三类是涉及增量实时同步,而对于数据的迁移方法,常见的方式有使用第三方的同步工具CDC进行Oracle到MySQL的数据迁移、使用开源的同步工具以及应用层面进行迁移同步。

基于作者的迁移实施经验,本文接下来将讲述三种操作相对简单,可落地的Oracle数据迁移MySQL的方法。

测试迁移方法:

| 迁移方法 | 适合场景 | 测试场景 |

| navicat | 操作简单,同步效率一般,适合小表的一次性迁移 | Oracle一次性同步表test.test1到MySQL下的db1.test1 |

| navicat+sqluldr+load data infile | 操作较复杂,同步效率高,适合少量大表的一次性迁移 | Oracle一次性同步表test.test1到MySQL下的db1.test1 |

| navicat+Oracle GoldenGate(OGG) | 操作复杂,同步效率较高,适合需要大批量的大表进行迁移以及需要实时增量同步 | Oracle全量+实时增量同步表test.test1,test2到MySQL下的db1.test1,test2 |

迁移方式一(navicat)

这种迁移方式主要适合小表的一次性迁移,navicat的同步效率速度一般。

迁移开始之前,我们需要先安装navicat,在一台能访问Oracle源端和MySQL目标端的机器上安装即可。

安装完navicat之后,配置源端Oracle连接

配置目标端MySQL连接

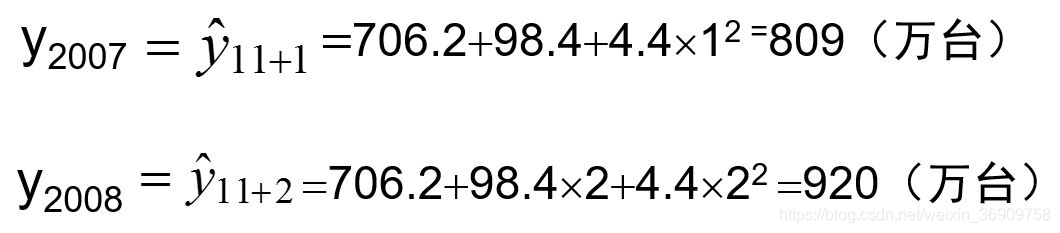

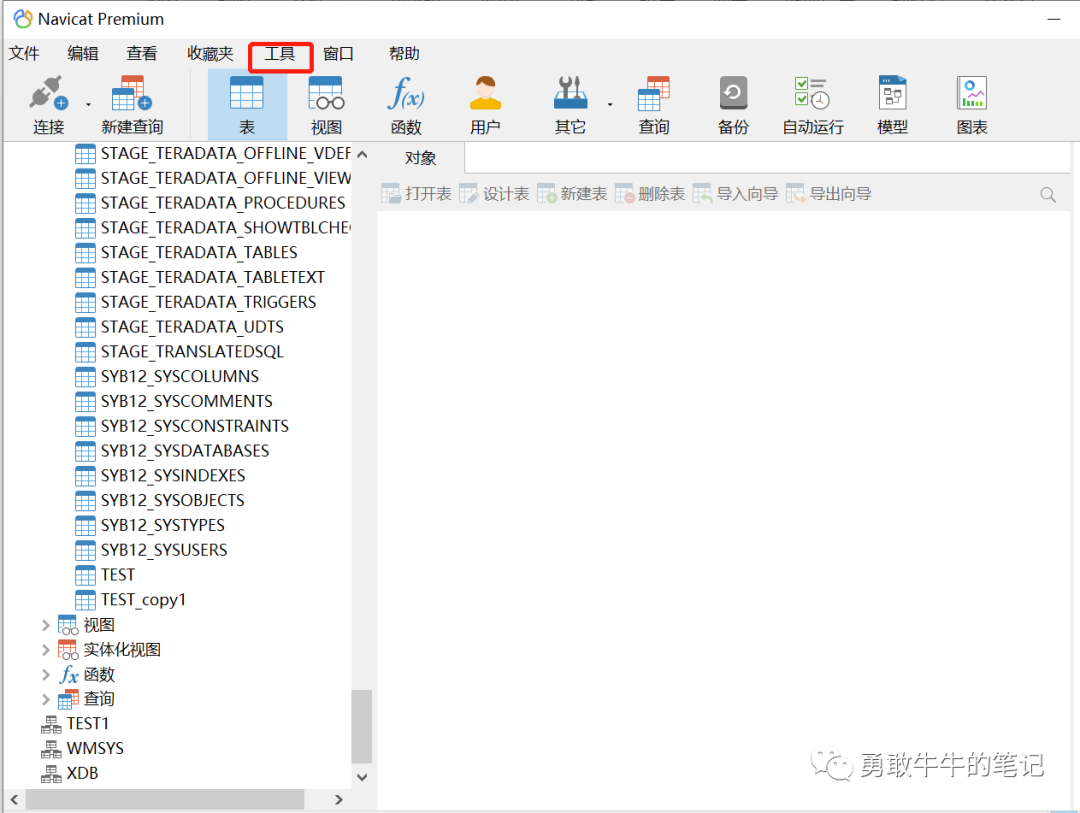

开始进行迁移,选择工具--->数据传输

选择源端Oracle以及目标端MySQL

下一步选择要同步的表TEST,也可以选择同步全部表

传输模式选择自动,点下一步开始进行同步

传输同步完成,整个同步的效率还是较慢的,7.2W的数据,用了1分钟。

迁移方式二(navicat+sqluldr+load data infile)

这种迁移方式主要适合少量大表的一次性迁移,通过navicat工具进行Oracle-->MySQL表结构转化,再通过sqluldr将Oracle数据导出到本地文件,最后再通过load data infile将数据导入MySQL。

注:navicat工具虽然有同步数据的功能,但在实际的操作过程中,同步数据的效率以及成功率都很低,所以这里只作为数据字典转化的工具。

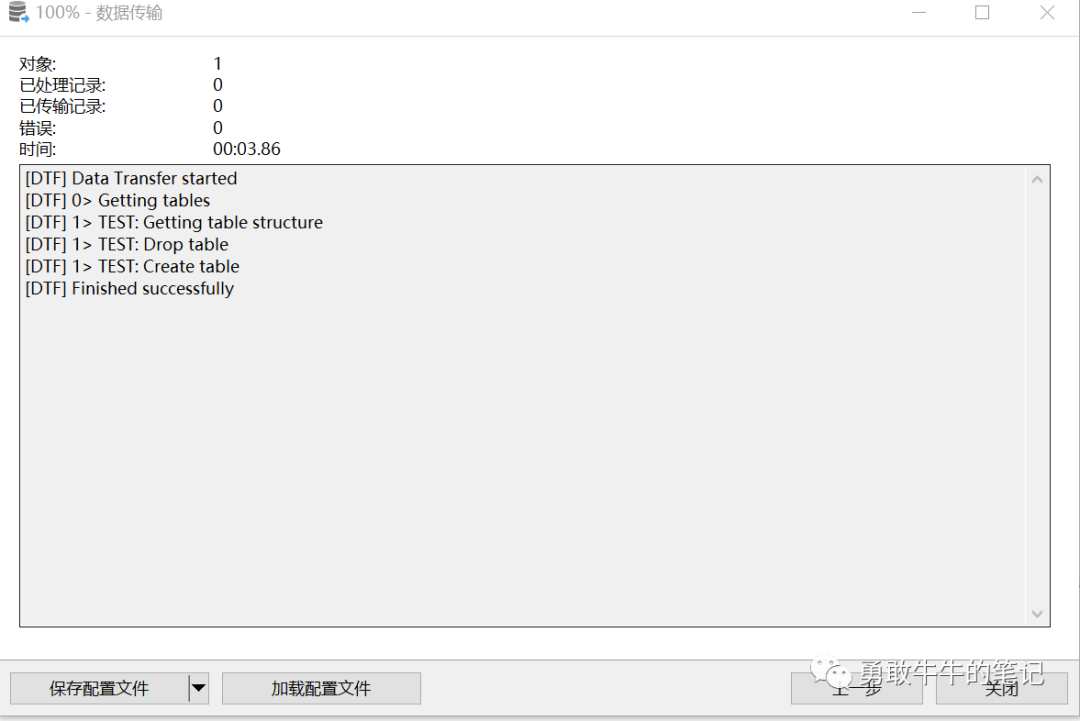

使用navicat工具进行表结构同步,步骤可以参考迁移方式一里面的操作,主要在数据传输同步时,选项里面只同步表结构,不创建记录。

点击开始,完成表结构同步

接下来进行数据的导出导入,先安装导出工具sqluldr

---解压安装包

unzip sqluldr2linux64.zip ./sqluldr2linux64.bin --help

---拷贝sqluldr2linux64.bin到$ORACLE_HOME的bin目录

cp -rp sqluldr2linux64.bin $ORACLE_HOME/bin

---重命名为sqluldr2.bin

mv sqluldr2linux64.bin sqluldr2.bin测试安装成功

[oracle@rac19a ~]$ sqluldr2.bin --help

SQL*UnLoader: Fast Oracle Text Unloader (GZIP, Parallel), Release 4.0.1

(@) Copyright Lou Fangxin (AnySQL.net) 2004 - 2010, all rights reserved.

License: Free for non-commercial useage, else 100 USD per server.

Usage: SQLULDR2 keyword=value [,keyword=value,...]

Valid Keywords:user = username/password@tnsnamesql = SQL file namequery = select statementfield = separator string between fieldsrecord = separator string between recordsrows = print progress for every given rows (default, 1000000) file = output file name(default: uldrdata.txt)log = log file name, prefix with + to append modefast = auto tuning the session level parameters(YES)text = output type (MYSQL, CSV, MYSQLINS, ORACLEINS, FORM, SEARCH).charset = character set name of the target database.ncharset= national character set name of the target database.parfile = read command option from parameter file

for field and record, you can use '0x' to specify hex character code,\r=0x0d \n=0x0a |=0x7c ,=0x2c, \t=0x09, :=0x3a, #=0x23, "=0x22 '=0x27使用sqluldr导出为文本类型为MYSQL

sqluldr2.bin user=test/oracle@pdb1 query="select * from test" text=MYSQL field=',' charset=AL32UTF8 head='NO' file=/home/oracle/test_001.csv log=test.log导出过程很快,58w的数据,只需要7秒

0 rows exported at 2022-10-12 22:18:14, size 0 MB.583680 rows exported at 2022-10-12 22:18:21, size 108 MB.output file /home/oracle/test_001.csv closed at 583680 rows, size 108 MB.再将从Oracle导出的MYSQL文件导入MySQL数据库

LOAD DATA INFILE '/tmp/test_001.csv' INTO TABLE test FIELDS TERMINATED BY ',' ENCLOSED BY '"';导入过程很快,58w的数据,只需要13秒

test@mysql.sock 22:38: [db1]>LOAD DATA INFILE '/tmp/test_001.csv' INTO TABLE test FIELDS TERMINATED BY ',' ENCLOSED BY '"';

Query OK, 583680 rows affected (13.43 sec)

Records: 583680 Deleted: 0 Skipped: 0 Warnings: 0整个数据同步过程还是较快的,但操作步骤较为繁琐,不太适合多表操作。

迁移方式三(navicat+Oracle GoldenGate(OGG))

这种迁移方式适合大批量的大表或者需要增量同步的表进行迁移,支持全量初始化+Oracle GoldenGate(OGG)增量同步,通过navicat工具进行Oracle-->MySQL表结构转化,再通过数据同步工具OGG进行全量表初始化以及后续的增量同步。

注意:使用增量方式同步的表都需要有主键,确保每行数据的唯一。

先使用navicat进行表结构的转化,具体参考迁移方式二里面的步骤。

Oracle源端配置OGG准备

1 数据库开启归档模式

---查看是否开启归档模式

archive log list

---开启归档模式

startup mount

alter database archvielog ;

alter database open;

2 数据库开启force_logging

---查看是否开启force logging

select force_logging from v$database;

----开启force logging

alter database force logging;

alter system switch logfile;

3 数据库开启补充日志supplemental logging

---查看补充日志

SELECT supplemental_log_data FROM v$database;

---开启补充日志

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA;

Alter system switch logfile;

4 开启ogg参数

alter system set enable_goldengate_replication=true scope=both;

5 配置stream_pool大小

(MAX_SGA_SIZE * # of integrated Extracts) + 25% head room

For example, using the default values for the MAX_SGA_SIZE with two integrated Extracts:

( 1GB * 2 ) * 1.25 = 2.50GB STREAMS_POOL_SIZE = 2560MMySQL目标端配置OGG准备

1 开启bin_log

---确认是否开启bin_log

show variables like 'log_bin';

2 开启bin_log(需要重启生效)

在my,cnf 中 [mysqld] 添加如下

[mysqld]

# binlog configuration

log-bin = /usr/local/var/mysql/logs/mysql-bin.log

expire-logs-days = 14

max-binlog-size = 500M

server-id = 1

2 确认binlog_format

----确认格式为row

show variables like 'binlog_format';

3 确认sql_mode

----确认包含STRICT_TRANS_TABLES

show variables like 'sql_mode';

4 确认版本

----确认版本,5.7.10之后才支持部分DDL

(CREATE TABLE, ALTER TABLE, and DROP TABLE operations are supported.)

select version();创建ogg同步用户

1 oracle源端同步用户创建

create tablespace ogg_tbs datafile size 1g;

create user ogg identified by "oggoracle";

grant resource,dba,connect to ogg;2 mysql目标端同步用户创建

CREATE USER ogg IDENTIFIED by "oggmysql";

GRANT ALL PRIVILEGES ON *.* TO 'ogg'@'%';Oracle源端安装ogg软件

1 配置环境变量

---/home/oracle/.bash_profile

export OGG_HOME=/u01/app/ogg

export PATH=$OGG_HOME:$PATH2 解压安装ogg软件

---解压安装ogg软件,安装包:p31766135_191004_Linux-x86-64.zip

cd /tmp/

unzip p31766135_191004_Linux-x86-64.zip

cd 31766135/

mv files/* /u01/app/ogg/

---验证

oracle@rac19b ~]$ ggsci

Oracle GoldenGate Command Interpreter for Oracle

Version 19.1.0.0.4 31637694_FBO

Linux, x64, 64bit (optimized), Oracle 19c on Aug 19 2020 20:08:53

Operating system character set identified as UTF-8.

Copyright (C) 1995, 2019, Oracle and/or its affiliates. All rights reserved.

GGSCI (rac19b) 1>3 创建ogg配置目录

GGSCI (rac19b) 10> create subdirs

Creating subdirectories under current directory /home/oracle

Parameter file /u01/app/ogg/dirprm: created.

Report file /u01/app/ogg/dirrpt: created.

Checkpoint file /u01/app/ogg/dirchk: created.

Process status files /u01/app/ogg/dirpcs: created.

SQL script files /u01/app/ogg/dirsql: created.

Database definitions files /u01/app/ogg/dirdef: created.

Extract data files /u01/app/ogg/dirdat: created.

Temporary files /u01/app/ogg/dirtmp: created.

Credential store files /u01/app/ogg/dircrd: created.

Masterkey wallet files /u01/app/ogg/dirwlt: created.

Dump files /u01/app/ogg/dirdmp: created.

GGSCI (rac19b) 11>4 启动MGR进程

---编辑mgr配置

cd /u01/app/ogg/

./ggsci

GGSCI (rac19b) 1> edit params mgr

---配置以下参数

PORT 7809

autorestart extract * ,waitminutes 2,resetminutes 5

PURGEOLDEXTRACTS /u01/app/ogg/dirdat/*,USECHECKPOINTS,MINKEEPDAYS 5

LAGREPORTHOURS 1

LAGINFOMINUTES 30

LAGCRITICALMINUTES 45

---启动mgr进程

GGSCI (rac19b) 1> start mgr

Manager started.

GGSCI (rac19b) 2> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

MySQL目标端安装ogg软件

1 配置环境变量

---/etc/profile

export OGG_HOME=/opt/ogg

export PATH=$OGG_HOME:$PATH2 解压安装ogg软件

---解压安装ogg软件,安装包:ggs_Linux_x64_MySQL_64bit.tar

cd ogg/

tar xvf /tmp/ggs_Linux_x64_MySQL_64bit.tar

---验证

[mysql@rac19a ~]$ ggsci

Oracle GoldenGate Command Interpreter for MySQL

Version 19.1.0.0.3 OGGCORE_19.1.0.0.0_PLATFORMS_190907.0144

Linux, x64, 64bit (optimized), MySQL Enterprise on Sep 7 2019 08:41:32

Operating system character set identified as UTF-8.

Copyright (C) 1995, 2019, Oracle and/or its affiliates. All rights reserved.3 创建ogg配置目录

GGSCI (rac19a) 1> create subdirs

Creating subdirectories under current directory /home/mysql

Parameter file /opt/ogg/dirprm: created.

Report file /opt/ogg/dirrpt: created.

Checkpoint file /opt/ogg/dirchk: created.

Process status files /opt/ogg/dirpcs: created.

SQL script files /opt/ogg/dirsql: created.

Database definitions files /opt/ogg/dirdef: created.

Extract data files /opt/ogg/dirdat: created.

Temporary files /opt/ogg/dirtmp: created.

Credential store files /opt/ogg/dircrd: created.

Masterkey wallet files /opt/ogg/dirwlt: created.

Dump files /opt/ogg/dirdmp: created.4 启动MGR进程

---编辑mgr配置

cd /opt/ogg/

./ggsci

GGSCI (rac19b) 1> edit params mgr

---配置以下参数

PORT 7809

AUTOSTART REPLICAT *

AUTORESTART REPLICAT *,RETRIES 5,WAITMINUTES 2,RESETMINUTES 10

PURGEOLDEXTRACTS /opt/ogg/dirdat/*,USECHECKPOINTS,MINKEEPDAYS 5

ACCESSRULE, PROG *, IPADDR 192.168.2.*, ALLOW

LAGREPORTHOURS 1

LAGINFOMINUTES 30

LAGCRITICALMINUTES 45

---启动mgr进程

GGSCI (rac19a) 2> start mgr

Manager started.

GGSCI (rac19a) 3> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING5 配置全局文件以及检查表

---ogg连接MySQL

GGSCI (rac19a DBLOGIN as ogg) 8> dblogin sourcedb db1@192.168.2.201:3306,userid ogg,password oggmysql

Successfully logged into database.

---创建检查表

GGSCI (rac19a DBLOGIN as ogg) 9> ADD CHECKPOINTTABLE db1.checkpoint

Successfully created checkpoint table db1.checkpoint.

GGSCI (rac19a DBLOGIN as ogg) 10>

---配置文件设置全局检查表

GGSCI (rac19a DBLOGIN as ogg) 10> edit params ./GLOBALS

---添加以下配置

CHECKPOINTTABLE db1.checkpointOracle源端配置抽取以及投递进程(增量进程)

1 对同步表添加补充日志

---ogg连接Oracle

GGSCI (rac19b) 3> dblogin userid ogg password oggoracle

Successfully logged into database.

---为表test.test1添加同步日志

GGSCI (rac19b as ogg@testdb) 4> add trandata test.test1

2022-10-13 13:08:58 INFO OGG-15132 Logging of supplemental redo data enabled for table TEST.TEST1.

2022-10-13 13:08:58 INFO OGG-15133 TRANDATA for scheduling columns has been added on table TEST.TEST1.

2022-10-13 13:08:58 INFO OGG-15135 TRANDATA for instantiation CSN has been added on table TEST.TEST1.

2022-10-13 13:08:59 INFO OGG-10471 ***** Oracle Goldengate support information on table TEST.TEST1 *****

Oracle Goldengate support native capture on table TEST.TEST1.

Oracle Goldengate marked following column as key columns on table TEST.TEST1: ID.

---为表test.test2添加同步日志

GGSCI (rac19b as ogg@testdb) 5> add trandata test.test2

2022-10-13 13:09:04 INFO OGG-15132 Logging of supplemental redo data enabled for table TEST.TEST2.

2022-10-13 13:09:04 INFO OGG-15133 TRANDATA for scheduling columns has been added on table TEST.TEST2.

2022-10-13 13:09:04 INFO OGG-15135 TRANDATA for instantiation CSN has been added on table TEST.TEST2.

2022-10-13 13:09:04 INFO OGG-10471 ***** Oracle Goldengate support information on table TEST.TEST2 *****

Oracle Goldengate support native capture on table TEST.TEST2.

Oracle Goldengate marked following column as key columns on table TEST.TEST2: ID.

GGSCI (rac19b as ogg@testdb) 6>2 创建EXTRACT抽取进程

GGSCI (rac19b as ogg@testdb) 6> add extract E_TEST tranlog,begin now,threads 1

EXTRACT added.

GGSCI (rac19b as ogg@testdb) 7> add exttrail ./dirdat/es,extract E_TEST,megabytes 1000

EXTTRAIL added.

GGSCI (rac19b as ogg@testdb) 8> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

EXTRACT STOPPED E_TEST 00:00:00 00:00:20

3 设置EXTRACT抽取进程参数

GGSCI (rac19b) 2> edit params e_test

extract E_TEST

SETENV (ORACLE_HOME = "/u01/app/oracle/product/19.0.0/dbhome_1")

SETENV (ORACLE_SID = "testdb")

SETENV (NLS_LANG = "AMERICAN_AMERICA.AL32UTF8")

userid ogg, password oggoracle

exttrail ./dirdat/es

gettruncates

TRANLOGOPTIONS INCLUDEREGIONID,EXCLUDEUSER ogg

TRANLOGOPTIONS BUFSIZE 2048000

TRANLOGOPTIONS DBLOGREADER,DBLOGREADERBUFSIZE 2048000

DISCARDFILE ./dirrpt/E_TEST.dsc,APPEND,MEGABYTES 1000

DISCARDROLLOVER AT 6:00

REPORTROLLOVER AT 6:00

REPORTCOUNT EVERY 1 HOURS,RATE

FETCHOPTIONS MISSINGROW ABEND

STATOPTIONS REPORTFETCH

WARNLONGTRANS 1H,CHECKINTERVAL 10m

DYNAMICRESOLUTION

TABLE TEST.TEST1;

TABLE TEST.TEST2;4 创建EXTRACT投递进程

GGSCI (rac19b) 3> add extract P_TEST,exttrailsource ./dirdat/es

EXTRACT added.

GGSCI (rac19b) 4> add RMTTRAIL ./dirdat/rs,ext P_TEST,megabytes 1000

RMTTRAIL added.

GGSCI (rac19b) 5>

5 设置EXTRACT投递进程参数

extract P_TEST

userid ogg, password oggoracle

rmthost 192.168.2.201, mgrport 7809

rmttrail /opt/ogg/dirdat/rs

passthru

DISCARDFILE ./dirrpt/P_TEST.dsc,APPEND,MEGABYTES 1000

DISCARDROLLOVER AT 6:00

REPORTROLLOVER AT 6:00

REPORTCOUNT EVERY 1 HOURS,RATE

TABLE TEST.TEST1;

TABLE TEST.TEST2;6 启动源端抽取以及投递进程

---启动抽取以及投递进程

GGSCI (rac19b) 8> start *test

Sending START request to MANAGER ...

EXTRACT E_TEST starting

Sending START request to MANAGER ...

EXTRACT P_TEST starting

---确认状态正常running

GGSCI (rac19b) 14> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

EXTRACT RUNNING E_TEST 00:00:02 00:00:07

EXTRACT RUNNING P_TEST 00:00:00 00:00:03

GGSCI (rac19b) 15>

---确认目标端能接收到队列文件

[mysql@rac19a dirdat]$ ls -rlth

total 20K

-rw-r----- 1 mysql mysql 19K Oct 13 13:24 rs000000000

[mysql@rac19a dirdat]$ MySQL目标端配置复制进程(增量进程)

1 添加复制进程

GGSCI (rac19a DBLOGIN as ogg) 11> add replicat r_test,exttrail /opt/ogg/dirdat/rs,checkpointtable db1.checkpoint

REPLICAT added.

GGSCI (rac19a DBLOGIN as ogg) 12> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

REPLICAT STOPPED R_TEST 00:00:00 00:00:042 配置复制进程参数

GGSCI (rac19a DBLOGIN as ogg) 13> edit params r_test

replicat r_test

setenv (MYSQL_HOME="/usr/local/mysql")

setenv (MYSQL_UNIX_PORT="/opt/mysql/data/mysql.sock")

dboptions host 192.168.2.201,connectionport 3306

targetdb db1,userid ogg, password oggmysql

discardfile /opt/ogg/dirrpt/r_test.dsc,append,megabytes 1000

DISCARDROLLOVER AT 6:00

REPERROR (DEFAULT, ABEND)

MAXTRANSOPS 5000HANDLECOLLISIONS

REPORTROLLOVER AT 6:00

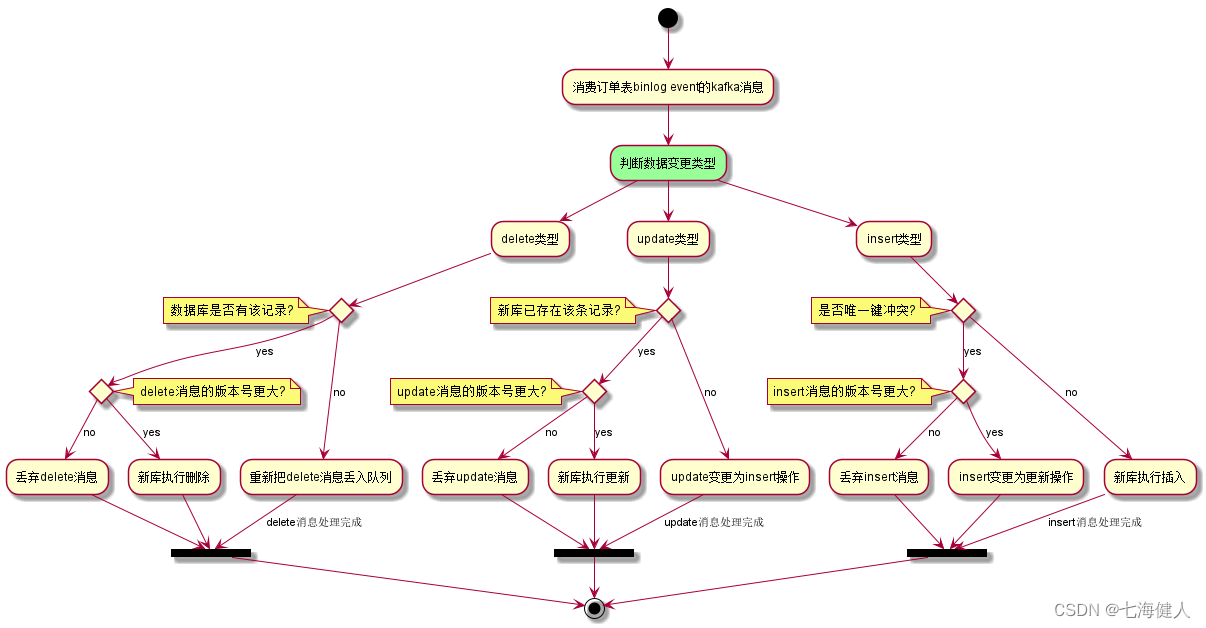

REPORTCOUNT EVERY 1 HOURS, RATEMAP test.test1, TARGET db1.test1; MAP test.test2, TARGET db1.test2;3 HANDLECOLLISIONS参数说明

参数是实现 OGG 全量数据与增量数据衔接的关键,其实现原理是在全量数据初始完成之后,开启增量抽取进程,应用全量数据初始化期间产生的 redo log。

当全量应用完成后,开启增量回放进程,应用全量期间的增量数据。可能会出现数据冲突的情况,这就是为什么表一定要有主键或者唯一键,使用该参数后增量回放 DML 语句时主要有以下冲突场景及处理逻辑:

1 目标端不存在 delete 语句的记录,忽略该问题并不记录到 discardfile。

2 目标端丢失 update 记录,更新的是主键值,update 转换成 insert,更新的键值是非主键,忽略该问题并不记录到 discardfile。

3 目标端重复 insert 已存在的主键值,这将被 replicat 进程转换为 UPDATE 现有主键值的。

4 在初始化数据,并追完增量数据之后,建议把HANDLECOLLISIONS参数去掉,Oracle官方建议不要一直使用该参数,这可能导致数据不准。

Oracle源端配置数据初始化进程(数据全量初始化进程)

1 添加初始化进程

GGSCI (rac19b) 17> add extract e_init,sourceistable

EXTRACT added.2 配置初始化进程

GGSCI (rac19b) 20> edit params e_init

extract e_init

SETENV (ORACLE_HOME = "/u01/app/oracle/product/19.0.0/dbhome_1")

SETENV (ORACLE_SID = "testdb")

SETENV (NLS_LANG = "AMERICAN_AMERICA.AL32UTF8")

userid ogg, password oggoracle

RMTHOST 192.168.2.201,MGRPORT 7809

RMTTASK REPLICAT,GROUP r_init

table test.test1;

table test.test2;MySQL目标端配置数据初始化进程(数据全量初始化进程)

1 添加初始化进程

GGSCI (rac19a DBLOGIN as ogg) 17> add replicat r_init,specialrun

REPLICAT added.2 配置初始化进程

GGSCI (rac19a DBLOGIN as ogg) 18> edit params r_init

replicat r_init

setenv (MYSQL_HOME="/usr/local/mysql")

setenv (MYSQL_UNIX_PORT="/opt/mysql/data/mysql.sock")

dboptions host 192.168.2.201,connectionport 3306

targetdb db1,userid ogg, password oggmysql

discardfile /opt/ogg/dirrpt/r_init.dsc,append,megabytes 1000

MAXTRANSOPS 5000MAP test.test1, TARGET db1.test1; MAP test.test2, TARGET db1.test2;全量数据初始化

数据初始化会将全表的数据通过创建的ogg进程e_init,r_init从Oracle源端同步到MySQL目标端。

1 启动Oracle源端的e_init初始化进程

GGSCI (rac19b) 22> start e_init

Sending START request to MANAGER ...

EXTRACT E_INIT starting2 目标端查看同步的进度

GGSCI (rac19a DBLOGIN as ogg) 56> view report r_init

2022-10-13 14:30:57 INFO OGG-06509 Using the following key columns for source table TEST.TEST1: ID.

2022-10-13 14:30:57 INFO OGG-06509 Using the following key columns for source table TEST.TEST2: ID.

2022-10-13 14:31:03 INFO OGG-02911 Processing table TEST.TEST1.

2022-10-13 14:33:14 INFO OGG-02911 Processing table TEST.TEST2.

***********************************************************************

* ** Run Time Statistics ** *

***********************************************************************

3 同步完成,会输出总的数量

Report at 2022-10-13 14:35:19 (activity since 2022-10-13 14:30:57)

Output to r_init:

From Table TEST.TEST1:# inserts: 999901# updates: 0# deletes: 0# upserts: 0# discards: 0

From Table TEST.TEST2:# inserts: 1000000# updates: 0# deletes: 0# upserts: 0# discards: 0

REDO Log StatisticsBytes parsed 0Bytes output 285986537增量数据同步

1 启动目标端复制进程r_test

GGSCI (rac19a DBLOGIN as ogg) 65> start r_test

Sending START request to MANAGER ...

REPLICAT R_TEST starting

GGSCI (rac19a DBLOGIN as ogg) 66> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

REPLICAT RUNNING R_TEST 00:00:00 00:00:012 查看增量同步信息

GGSCI (rac19a DBLOGIN as ogg) 67> stats r_test

Sending STATS request to REPLICAT R_TEST ...

Start of Statistics at 2022-10-13 14:45:24.

Replicating from TEST.TEST1 to db1.test1:

---collisions解决冲突数据的行数

*** Total statistics since 2022-10-13 14:45:17 ***Total inserts 0.00Total updates 0.00Total deletes 99.00Total upserts 0.00Total discards 0.00Total operations 99.00Total delete collisions 99.00

Replicating from TEST.TEST2 to db1.test2:

---增量update了10行

*** Total statistics since 2022-10-13 14:45:17 ***Total inserts 0.00Total updates 10.00Total deletes 0.00Total upserts 0.00Total discards 0.00Total operations 10.00

End of Statistics.

3 注释去除HANDLECOLLISIONS参数

注:要在增量同步进程应用完初始化期间产生的日志以及实时同步之后,再去除参数。

#编辑配置文件,注释---HANDLECOLLISIONS

GGSCI (rac19a DBLOGIN as ogg) 71> edit params r_test

replicat r_test

setenv (MYSQL_HOME="/usr/local/mysql")

setenv (MYSQL_UNIX_PORT="/opt/mysql/data/mysql.sock")

dboptions host 192.168.2.201,connectionport 3306

targetdb db1,userid ogg, password oggmysql

discardfile /opt/ogg/dirrpt/r_test.dsc,append,megabytes 1000

DISCARDROLLOVER AT 6:00

REPERROR (DEFAULT, ABEND)

MAXTRANSOPS 5000

---HANDLECOLLISIONS

REPORTROLLOVER AT 6:00

REPORTCOUNT EVERY 1 HOURS, RATEMAP test.test1, TARGET db1.test1;MAP test.test2, TARGET db1.test2;

#重启进程生效

GGSCI (rac19a DBLOGIN as ogg) 73> stop r_test

Sending STOP request to REPLICAT R_TEST ...

Request processed.

GGSCI (rac19a DBLOGIN as ogg) 74> start r_test

Sending START request to MANAGER ...

REPLICAT R_TEST starting

GGSCI (rac19a DBLOGIN as ogg) 75>4 测试数据同步情况

Oracle源端删除999行数据,当前数据99001

SQL> select count(*) from test.test2;

COUNT(*)

----------1000000

SQL> delete from test.test2 where rownum<1000;

999 rows deleted.

SQL> commit;

Commit complete.

SQL> select count(*) from test.test2;

COUNT(*)

----------999001

SQL>MySQL目标端同步删除的操作,数据 一致都为999001

root@mysql.sock 14:33: [db1]>select count(*) from db1.test2;

+----------+

| count(*) |

+----------+

| 999001 |

+----------+

1 row in set (0.17 sec)

root@mysql.sock 14:54: [db1]>

使用navicat+Oracle GoldenGate(OGG)的方式,操作步骤比较复杂,但如果需要迁移的表多,并且需要实时的增量同步,那么还是比较适合的。