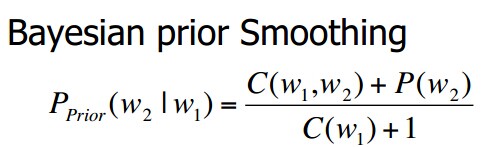

理论介绍可以参考 【Inception-v3】《Rethinking the Inception Architecture for Computer Vision》 中的 4.5 Model Regularization via Label Smoothing

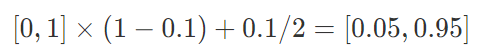

本质就是用右边(意会下就行)的标签替换左边的 one-hot 编码形式,让网络别那么愤青,成了二极管(非黑即白)

代码实现如下,来自 【Pytorch】label smoothing

class NMTCritierion(nn.Module):"""1. Add label smoothing"""def __init__(self, label_smoothing=0.0):super(NMTCritierion, self).__init__()self.label_smoothing = label_smoothingself.LogSoftmax = nn.LogSoftmax()if label_smoothing > 0:self.criterion = nn.KLDivLoss(size_average=False)else:self.criterion = nn.NLLLoss(size_average=False, ignore_index=100000)self.confidence = 1.0 - label_smoothingdef _smooth_label(self, num_tokens):# When label smoothing is turned on,# KL-divergence between q_{smoothed ground truth prob.}(w)# and p_{prob. computed by model}(w) is minimized.# If label smoothing value is set to zero, the loss# is equivalent to NLLLoss or CrossEntropyLoss.# All non-true labels are uniformly set to low-confidence.one_hot = torch.randn(1, num_tokens)one_hot.fill_(self.label_smoothing / (num_tokens - 1))return one_hotdef _bottle(self, v):return v.view(-1, v.size(2))def forward(self, dec_outs, labels):scores = self.LogSoftmax(dec_outs)num_tokens = scores.size(-1)# conduct label_smoothing modulegtruth = labels.view(-1)if self.confidence < 1:tdata = gtruth.detach()one_hot = self._smooth_label(num_tokens) # Do label smoothing, shape is [M]if labels.is_cuda:one_hot = one_hot.cuda()tmp_ = one_hot.repeat(gtruth.size(0), 1) # [N, M]tmp_.scatter_(1, tdata.unsqueeze(1), self.confidence) # after tdata.unsqueeze(1) , tdata shape is [N,1]gtruth = tmp_.detach()loss = self.criterion(scores, gtruth)return loss

使用方式如下:

loss_func = NMTCritierion(0.1) # label smoothing

loss = loss_func(out,label)

其中 out 是网络的输出,label 是输入对应的标签