这一此的博客我给大家介绍一下DCGAN的原理以及DCGAN的实战代码,今天我用最简单的语言给大家介绍DCGAN。

相信大家现在对深度学习有了一定的了解,对GAN也有了认识,如果不知道什么是GAN的可以去看我以前的博客,接下来我给大家介绍一下DCGAN的原理。

DCGAN

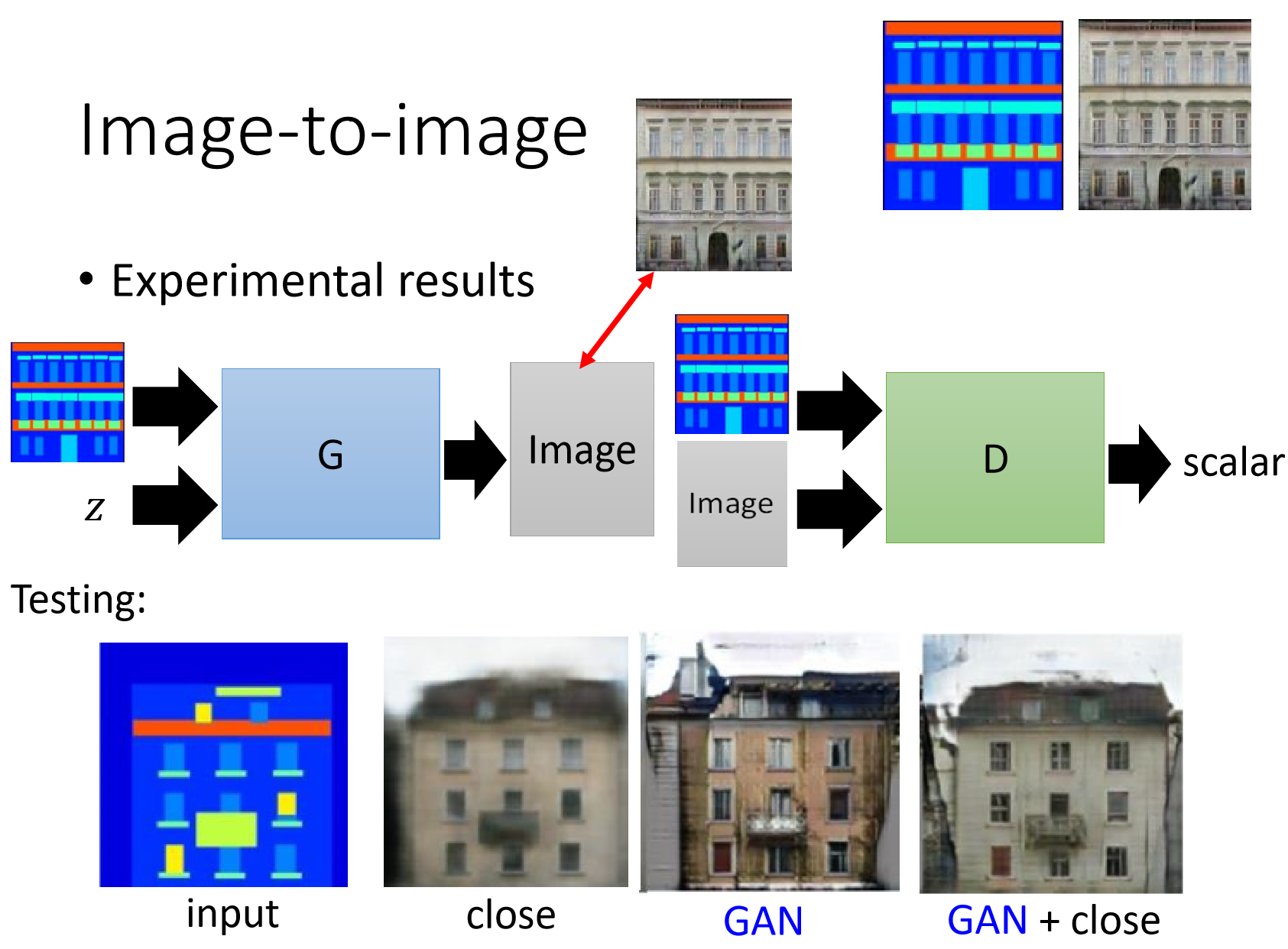

DCGAN的全称是Deep Convolution Generative Adversarial Networks(深度卷积生成对抗网络)顾名思义也就是在深度卷积的基础上加上了一个GAN,就构成了我们所说的DCGAN。

这是DCGAN中生成网络G的模型,通过随机输入的一个噪声,通过反卷积之后我们得到了一个64X64X3的RGB的图像.

这里我们要介绍一下反卷积:

卷积相信大家都很了解了把,反卷积其实就是卷积的一一个反向的过程。这里的反向的过程说的其实是还原输入图片的大小而不是原封不动地还原数据,这里要注意以下反卷积的意义。

例如:我们输入一个 3x3的一个灰度图经过一个卷积核为2x2 strides=2反卷积之后就变成了6x6的灰度图,也就是反卷积图片大小的变化和卷积过程是相反的。

DCGAN中我们要注意以下几点:

1.判别网络D判别完了之后我们把输出的结果要送入一个输出单元为一个的全连接网络

2.在判别网络的所有层上使用LeakyReLU激活函数。

3.在生成网络的所有层上使用RelU激活函数,除了输出层使用Tanh激活函数。

接下来我们来看一下实现代码:

# -*- coding: utf-8 -*-

//导入相应的模块

import tensorflow as tf

import numpy as np

import urllib

import tarfile

import os

import matplotlib.pyplot as plt

%matplotlib inline

from imageio import imread, imsave, mimsave

from scipy.misc import imresize

import glob

# 下载和处理LFW数据

url = 'http://vis-www.cs.umass.edu/lfw/lfw.tgz'

filename = 'lfw.tgz'

directory = 'lfw_imgs'

new_dir = 'lfw_new_imgs'

if not os.path.isdir(new_dir):os.mkdir(new_dir)

#判断是否存在该文件夹 if not os.path.isdir(directory):if not os.path.isfile(filename):urllib.request.urlretrieve(url, filename)tar = tarfile.open(filename, 'r:gz')tar.extractall(path=directory)tar.close()count = 0for dir_, _, files in os.walk(directory):#取出所有的图片重新命名for file_ in files:img = imread(os.path.join(dir_, file_))imsave(os.path.join(new_dir, '%d.png' % count), img)count += 1

# dataset = 'lfw_new_imgs' # LFW

dataset = 'celeba' # CelebA

images = glob.glob(os.path.join(dataset, '*.*'))

print(len(images))

batch_size = 100

z_dim = 100

WIDTH = 64

HEIGHT = 64OUTPUT_DIR = 'samples_' + dataset

if not os.path.exists(OUTPUT_DIR):os.mkdir(OUTPUT_DIR)

#设置图片保存路径X = tf.placeholder(dtype=tf.float32, shape=[None, HEIGHT, WIDTH, 3], name='X')

noise = tf.placeholder(dtype=tf.float32, shape=[None, z_dim], name='noise')

is_training = tf.placeholder(dtype=tf.bool, name='is_training')def lrelu(x, leak=0.2):return tf.maximum(x, leak * x)

#激活函数输出X和0.2X之间比较大的一个

def sigmoid_cross_entropy_with_logits(x, y):return tf.nn.sigmoid_cross_entropy_with_logits(logits=x, labels=y)

#损失函数

//定义判别器进行卷积操作

def discriminator(image, reuse=None, is_training=is_training):momentum = 0.9with tf.variable_scope('discriminator', reuse=reuse):#下面的每个变量名都带上discriminatorh0 = lrelu(tf.layers.conv2d(image, kernel_size=5, filters=64, strides=2, padding='same'))h1 = tf.layers.conv2d(h0, kernel_size=5, filters=128, strides=2, padding='same')h1 = lrelu(tf.contrib.layers.batch_norm(h1, is_training=is_training, decay=momentum))h2 = tf.layers.conv2d(h1, kernel_size=5, filters=256, strides=2, padding='same')h2 = lrelu(tf.contrib.layers.batch_norm(h2, is_training=is_training, decay=momentum))h3 = tf.layers.conv2d(h2, kernel_size=5, filters=512, strides=2, padding='same')h3 = lrelu(tf.contrib.layers.batch_norm(h3, is_training=is_training, decay=momentum))h4 = tf.contrib.layers.flatten(h3)h4 = tf.layers.dense(h4, units=1)//送入是由一个输出神经元的全连接网络,因为只需要一个真假的答案所以只要一个输出return tf.nn.sigmoid(h4), h4

//定义生成器进行反卷积的操作

def generator(z, is_training=is_training):momentum = 0.9with tf.variable_scope('generator', reuse=None):#下面的变量名都带上generatod = 4h0 = tf.layers.dense(z, units=d * d * 512)h0 = tf.reshape(h0, shape=[-1, d, d, 512])h0 = tf.nn.relu(tf.contrib.layers.batch_norm(h0, is_training=is_training, decay=momentum))h1 = tf.layers.conv2d_transpose(h0, kernel_size=5, filters=256, strides=2, padding='same')h1 = tf.nn.relu(tf.contrib.layers.batch_norm(h1, is_training=is_training, decay=momentum))h2 = tf.layers.conv2d_transpose(h1, kernel_size=5, filters=128, strides=2, padding='same')h2 = tf.nn.relu(tf.contrib.layers.batch_norm(h2, is_training=is_training, decay=momentum))h3 = tf.layers.conv2d_transpose(h2, kernel_size=5, filters=64, strides=2, padding='same')h3 = tf.nn.relu(tf.contrib.layers.batch_norm(h3, is_training=is_training, decay=momentum))h4 = tf.layers.conv2d_transpose(h3, kernel_size=5, filters=3, strides=2, padding='same', activation=tf.nn.tanh, name='g')//输出用tanh激活激活return h4

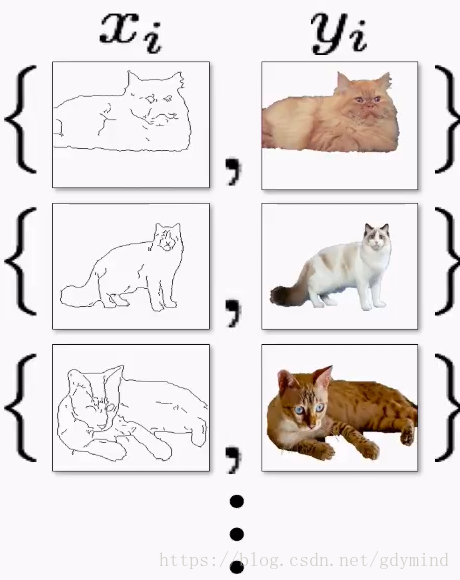

这里给大家图片变化的步骤

g = generator(noise)#输入随机噪声产生图片

d_real, d_real_logits = discriminator(X)

d_fake, d_fake_logits = discriminator(g, reuse=True)vars_g = [var for var in tf.trainable_variables() if var.name.startswith('generator')]#取出所有的生成器数据

vars_d = [var for var in tf.trainable_variables() if var.name.startswith('discriminator')]#取出所有的判别器的数据loss_d_real = tf.reduce_mean(sigmoid_cross_entropy_with_logits(d_real_logits, tf.ones_like(d_real)))

#真实数据对判别器产生的损失,要尽可能给高分

loss_d_fake = tf.reduce_mean(sigmoid_cross_entropy_with_logits(d_fake_logits, tf.zeros_like(d_fake)))

#生成的假数据对判别器产生的损失要尽可能给低分

loss_g = tf.reduce_mean(sigmoid_cross_entropy_with_logits(d_fake_logits, tf.ones_like(d_fake)))

#生成器的数据对生成器产生的损失要尽可能给高分

loss_d = loss_d_real + loss_d_fake#生成器的损失

update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)

with tf.control_dependencies(update_ops):optimizer_d = tf.train.AdamOptimizer(learning_rate=0.0002, beta1=0.5).minimize(loss_d, var_list=vars_d)optimizer_g = tf.train.AdamOptimizer(learning_rate=0.0002, beta1=0.5).minimize(loss_g, var_list=vars_g)

def read_image(path, height, width):image = imread(path)h = image.shape[0]w = image.shape[1]def read_image(path, height, width):image = imread(path)h = image.shape[0]w = image.shape[1]if h > w:image = image[h // 2 - w // 2: h // 2 + w // 2, :, :]#高度取中间部分宽度和深度全部都要else:image = image[:, w // 2 - h // 2: w // 2 + h // 2, :] image = imresize(image, (height, width))#改变图片的大小return image / 255.#把图片范围转到了0-1if h > w:image = image[h // 2 - w // 2: h // 2 + w // 2, :, :]#高度取中间部分宽度和深度全部都要else:image = image[:, w // 2 - h // 2: w // 2 + h // 2, :] image = imresize(image, (height, width))#改变图片的大小return image / 255.#把图片范围转到了0-1

#这段代码为一个辅助函数代码,大家可以不用看

def montage(images): if isinstance(images, list):images = np.array(images)img_h = images.shape[1]img_w = images.shape[2]n_plots = int(np.ceil(np.sqrt(images.shape[0])))if len(images.shape) == 4 and images.shape[3] == 3:m = np.ones((images.shape[1] * n_plots + n_plots + 1,images.shape[2] * n_plots + n_plots + 1, 3)) * 0.5elif len(images.shape) == 4 and images.shape[3] == 1:m = np.ones((images.shape[1] * n_plots + n_plots + 1,images.shape[2] * n_plots + n_plots + 1, 1)) * 0.5elif len(images.shape) == 3:m = np.ones((images.shape[1] * n_plots + n_plots + 1,images.shape[2] * n_plots + n_plots + 1)) * 0.5else:raise ValueError('Could not parse image shape of {}'.format(images.shape))for i in range(n_plots):for j in range(n_plots):this_filter = i * n_plots + jif this_filter < images.shape[0]:this_img = images[this_filter]m[1 + i + i * img_h:1 + i + (i + 1) * img_h,1 + j + j * img_w:1 + j + (j + 1) * img_w] = this_imgreturn m

ess = tf.Session()

sess.run(tf.global_variables_initializer())

z_samples = np.random.uniform(-1.0, 1.0, [batch_size, z_dim]).astype(np.float32)

samples = []

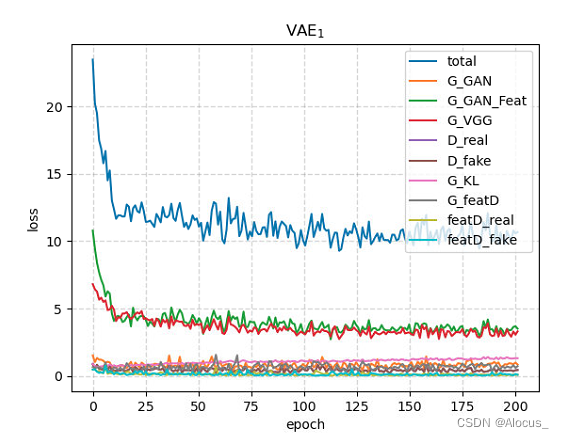

loss = {'d': [], 'g': []}offset = 0

for i in range(60000):n = np.random.uniform(-1.0, 1.0, [batch_size, z_dim]).astype(np.float32)offset = (offset + batch_size) % len(images)batch = np.array([read_image(img, HEIGHT, WIDTH) for img in images[offset: offset + batch_size]])batch = (batch - 0.5) * 2#把图片范围转到-1-1之间d_ls, g_ls = sess.run([loss_d, loss_g], feed_dict={X: batch, noise: n, is_training: True})loss['d'].append(d_ls)loss['g'].append(g_ls)sess.run(optimizer_d, feed_dict={X: batch损失, noise: n, is_training: True})sess.run(optimizer_g, feed_dict={X: batch, noise: n, is_training: True})sess.run(optimizer_g, feed_dict={X: batch, noise: n, is_training: True})if i % 500 == 0:print(i, d_ls, g_ls)gen_imgs = sess.run(g, feed_dict={noise: z_samples, is_training: False})gen_imgs = (gen_imgs + 1) / 2#再把图片转到0-1之间imgs = [img[:, :, :] for img in gen_imgs]gen_imgs = montage(imgs)plt.axis('off')plt.imshow(gen_imgs)imsave(os.path.join(OUTPUT_DIR, 'sample_%d.jpg' % i), gen_imgs)plt.show()samples.append(gen_imgs)plt.plot(loss['d'], label='Discriminator')

plt.plot(loss['g'], label='Generator')

plt.legend(loc='upper right')

plt.savefig(os.path.join(OUTPUT_DIR, 'Loss.png'))

plt.show()

mimsave(os.path.join(OUTPUT_DIR, 'samples.gif'), samples, fps=10)

#saver = tf.train.Saver()

#saver.save(sess, os.path.join(OUTPUT_DIR, 'dcgan_' + dataset), global_step=60000) 保存现有的模型

这段代码来自CSDN—张宏伦 , 代码注释原创

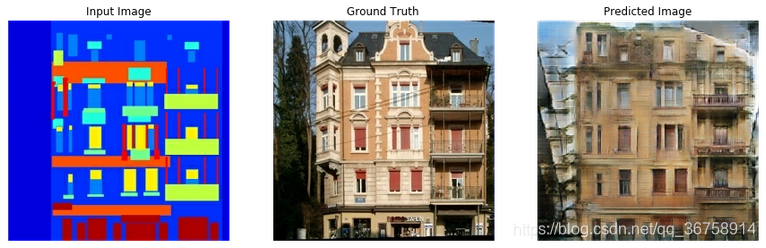

我用他的模型跑出来的结果为:

生成了人脸并把100张图片融合为一张图片