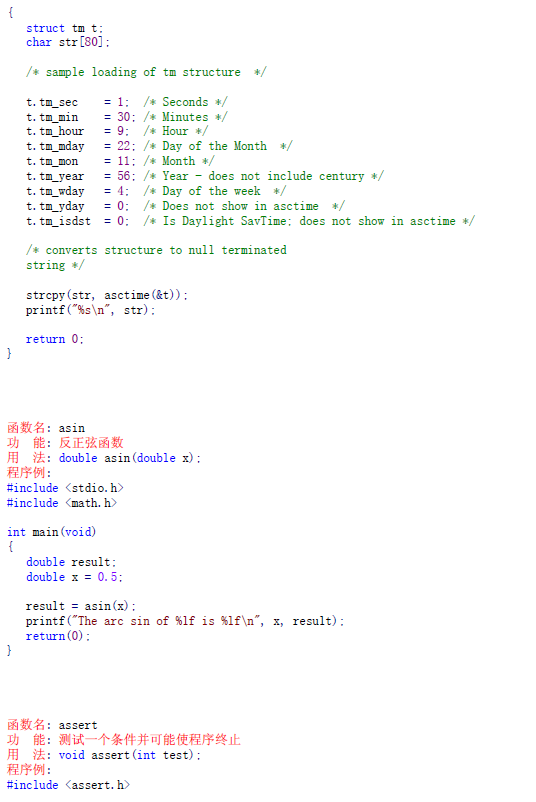

Experiment code

This post uses the gradient boosting quantile regression example from Python's scikit-learn library as its sample code. A step-by-step walkthrough follows:

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.ensemble import GradientBoostingRegressor

# %matplotlib inline  (IPython/Jupyter magic: uncomment when running in a notebook)

np.random.seed(1)  # fix the random seed so every run produces the same data


def f(x):
    """The function to predict."""
    return x * np.sin(x)  # f(x) = x * sin(x): x times the sine of x
```

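```python
# ----------------------------------------------------------------------
# Training data: 100 inputs drawn uniformly from [0, 10), with noisy targets
# np.random.uniform(low, high, size) samples from the half-open interval [low, high):
#   low is included, high is not.
# np.atleast_2d() turns the 1-D sample into a 2-D array of shape (1, 100);
# .T transposes it to shape (100, 1), one feature column as scikit-learn expects.
X = np.atleast_2d(np.random.uniform(0, 10.0, size=100)).T
X = X.astype(np.float32)  # cast to float32 (lower precision, half the memory of float64)

# Observations
y = f(X).ravel()  # flatten the (100, 1) result into a 1-D target vector

# Per-sample noise scale: np.random.random(y.shape) draws uniform numbers in [0, 1)
# with the same shape as y, so dy lies in [1.5, 2.5)
dy = 1.5 + 1.0 * np.random.random(y.shape)

# Gaussian noise with mean 0 and a per-sample standard deviation (width) of dy
noise = np.random.normal(0, dy)
y += noise
y = y.astype(np.float32)
```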
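The shape bookkeeping in the data-generation step is easy to lose track of. The small sketch below traces the array shapes and the range of `dy` using five points instead of 100. It also shows that the in-place `+=` keeps `y` as float32. The `*_demo` variable names and the seed are illustrative only.

```python
import numpy as np

np.random.seed(0)  # arbitrary seed for this demo only

rng_sample = np.random.uniform(0, 10.0, size=5)  # 1-D array, shape (5,)
X_demo = np.atleast_2d(rng_sample)               # 2-D row vector, shape (1, 5)
X_col = X_demo.T                                 # column vector, shape (5, 1)
print(rng_sample.shape, X_demo.shape, X_col.shape)  # (5,) (1, 5) (5, 1)

# f(X) keeps the (5, 1) shape; ravel() flattens it to the 1-D target sklearn expects
y_demo = (X_col * np.sin(X_col)).ravel()

# per-sample noise scale in [1.5, 2.5), then Gaussian noise with that standard deviation
dy_demo = 1.5 + 1.0 * np.random.random(y_demo.shape)
noise_demo = np.random.normal(0, dy_demo)        # one draw per sample, shape (5,)

# in-place += keeps the float32 dtype of the left operand
y32 = y_demo.astype(np.float32)
y32 += noise_demo
print(y_demo.shape, noise_demo.shape, y32.dtype)  # (5,) (5,) float32
```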

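```python
# Dense grid of 1000 evenly spaced points on [0, 10] (np.linspace), used to
# evaluate the true function and the fitted models
xx = np.atleast_2d(np.linspace(0, 10, 1000)).T
xx = xx.astype(np.float32)

alpha = 0.95  # quantile level: the model estimates the 95th percentile of y given x

# loss: loss function. The default is squared error (spelled 'ls' in older scikit-learn
#       releases, 'squared_error' since 1.0); 'quantile' selects quantile regression
# alpha: target quantile level, used only with loss='quantile' (or 'huber')
# n_estimators: number of boosting stages (weak learners), here 250
# max_depth: maximum depth of each regression tree, limiting its number of nodes (default 3)
# learning_rate: shrinks the contribution of each tree (default 0.1)
# min_samples_split: minimum number of samples required to split an internal node, here 9
# min_samples_leaf: minimum number of samples required at a leaf node, here 9
clf = GradientBoostingRegressor(loss='quantile', alpha=alpha,
                                n_estimators=250, max_depth=3,
                                learning_rate=.1, min_samples_leaf=9,
                                min_samples_split=9)
clf.fit(X, y)  # fit the upper (95%) quantile model
```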

```python
# Make the prediction on the meshed x-axis with the fitted 95% quantile model
y_upper = clf.predict(xx)

# Switch to alpha = 1 - 0.95 = 0.05 and train a second model for the lower bound
clf.set_params(alpha=1.0 - alpha)
clf.fit(X, y)
y_lower = clf.predict(xx)

# Switch to the squared-error loss (least-squares regression) to predict the conditional mean.
# Older scikit-learn versions spelled this loss='ls', which later releases no longer accept;
# use 'squared_error' on 1.0 and later.
clf.set_params(loss='squared_error')
clf.fit(X, y)
y_pred = clf.predict(xx)
```

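```python
# Plot the true function, the observations, the mean prediction and the band between
# the two quantile curves: y_upper (alpha=0.95) and y_lower (alpha=0.05) in black,
# the least-squares gradient boosting prediction (not a linear regression) in red.
# The band between the 5% and 95% quantiles is a 90% prediction interval.
fig = plt.figure()
plt.plot(xx, f(xx), 'g:', label=r'$f(x) = x\,\sin(x)$')
plt.plot(X, y, 'b.', markersize=10, label='Observations')
plt.plot(xx, y_pred, 'r-', label='Prediction')
plt.plot(xx, y_upper, 'k-')
plt.plot(xx, y_lower, 'k-')
# Fill the polygon formed by the upper curve (left to right) and the lower curve (right to left)
plt.fill(np.concatenate([xx, xx[::-1]]).ravel(),
         np.concatenate([y_upper, y_lower[::-1]]),
         alpha=.5, fc='b', ec='None', label='90% prediction interval')
plt.xlabel('$x$')
plt.ylabel('$f(x)$')
plt.ylim(-10, 20)
plt.legend(loc='upper left')
plt.show()
```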
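The `plt.fill` call draws the shaded band as one closed polygon. Its vertices run left to right along the upper curve, then right to left along the lower curve. The sketch below uses five made-up points (hypothetical values) to print those vertices. It then draws the same band with `fill_between`, which takes the two curves directly. The `Agg` backend and rendering into an in-memory PNG are assumptions so the snippet runs without a display.

```python
import io

import numpy as np
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless
import matplotlib.pyplot as plt

xx = np.linspace(0, 10, 5).reshape(-1, 1)     # column vector, like xx in the post
y_lower = np.array([0.0, 1.0, 2.0, 1.0, 0.0])  # made-up lower curve
y_upper = y_lower + 2.0                        # made-up upper curve

# polygon vertices: forward along the upper curve, then backward along the lower curve
poly_x = np.concatenate([xx, xx[::-1]]).ravel()
poly_y = np.concatenate([y_upper, y_lower[::-1]])
print(poly_x)
print(poly_y)

fig, (ax1, ax2) = plt.subplots(1, 2, sharey=True)
ax1.fill(poly_x, poly_y, alpha=0.5, fc="b", ec="None")
# fill_between expresses the same band directly; it expects 1-D x, hence ravel()
ax2.fill_between(xx.ravel(), y_lower, y_upper, alpha=0.5, color="b")

buf = io.BytesIO()
fig.savefig(buf, format="png")  # render to memory instead of a window
plt.close(fig)
```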

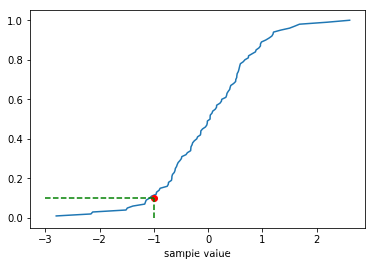

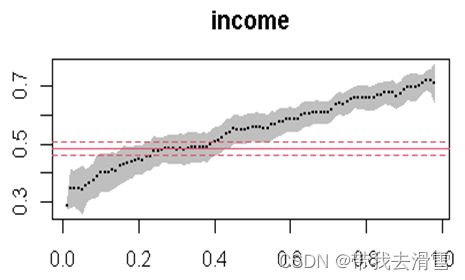

Resulting plot

Experiment link:

Official scikit-learn documentation:

https://scikit-learn.org/stable/auto_examples/ensemble/plot_gradient_boosting_quantile.html#sphx-glr-auto-examples-ensemble-plot-gradient-boosting-quantile-py

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingRegressor.html