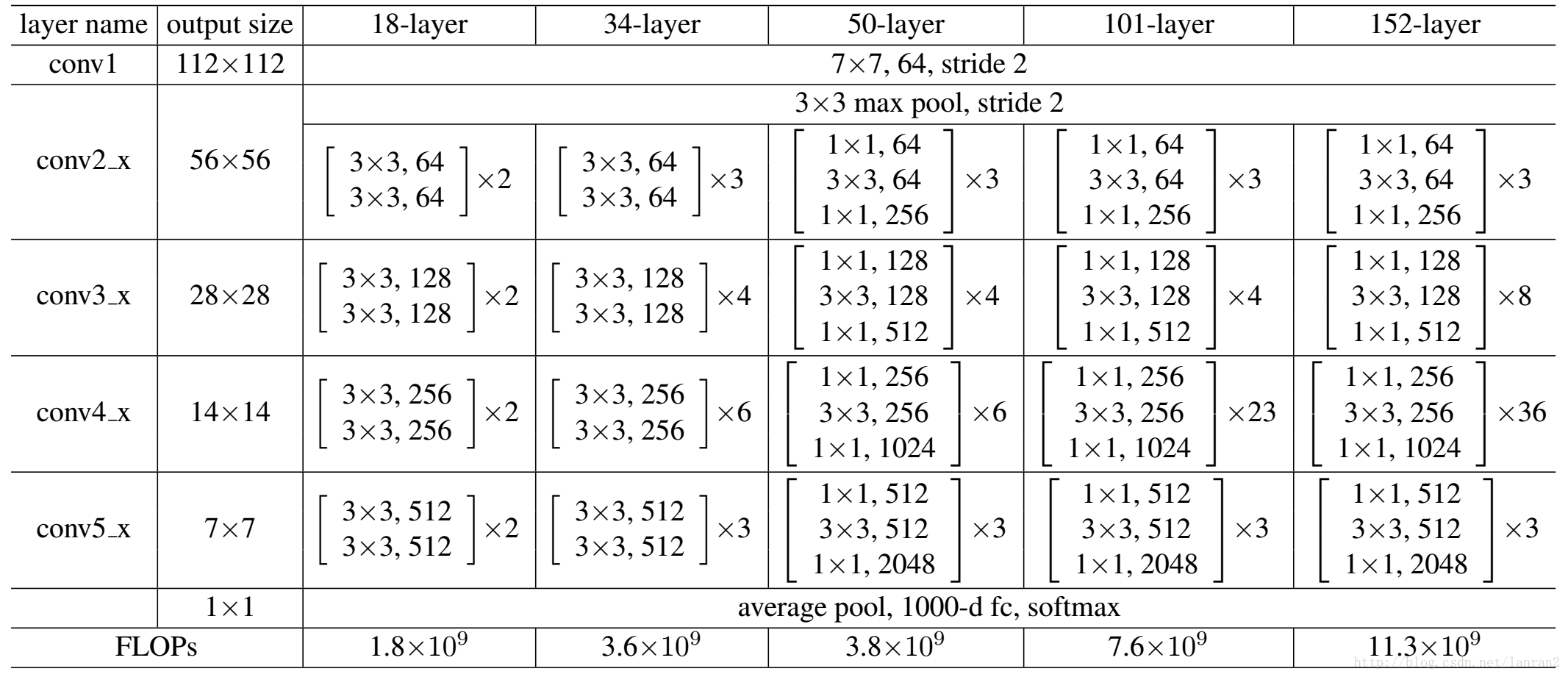

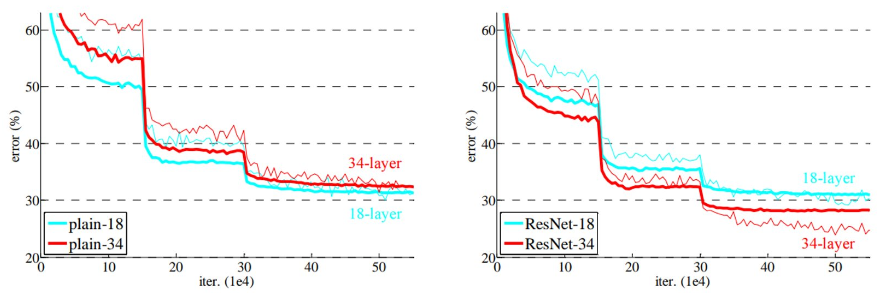

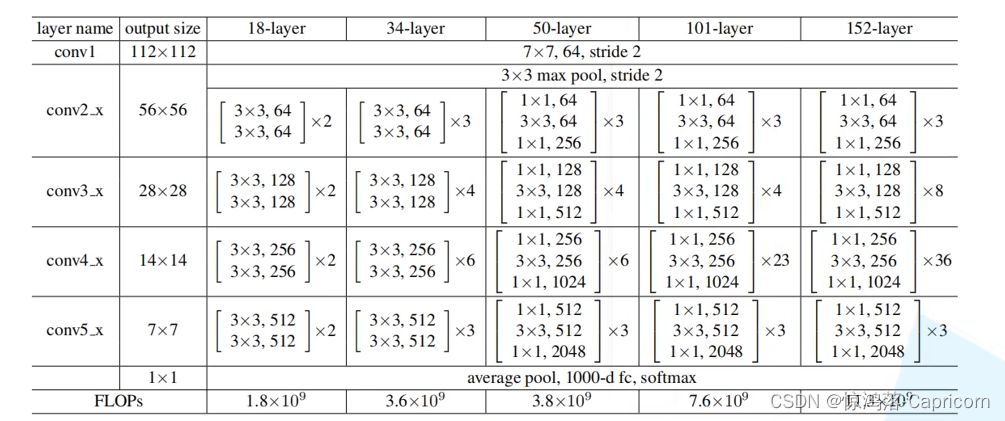

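The "18" in ResNet18 counts the layers on the main path that carry weights: the 17 convolutional layers plus 1 fully connected layer. Pooling layers and BN layers are not counted.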
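The counting rule above can be checked with a little arithmetic. The following is a minimal sketch, not code from any library: the helper name `count_weighted_layers` is made up for illustration, and the per-stage block counts for resnet34 and resnet50 are taken from the ResNet paper rather than from this post.

```python
# Blocks per stage for a few variants. Only resnet18's [2, 2, 2, 2] appears in
# this post; the other lists are assumed from the ResNet paper.
CONFIGS = {
    "resnet18": ("basic", [2, 2, 2, 2]),
    "resnet34": ("basic", [3, 4, 6, 3]),
    "resnet50": ("bottleneck", [3, 4, 6, 3]),
}

# A BasicBlock has two convolutions on its main path, a Bottleneck has three.
CONVS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def count_weighted_layers(block_type, blocks_per_stage):
    """Count conv + fc layers on the main path.

    Pooling, BN, and the 1x1 convolutions on shortcut branches are excluded.
    """
    stem_conv = 1  # the first 7x7 convolution
    fc = 1         # the final fully connected layer
    block_convs = CONVS_PER_BLOCK[block_type] * sum(blocks_per_stage)
    return stem_conv + block_convs + fc


for name, (block_type, blocks) in CONFIGS.items():
    print(name, count_weighted_layers(block_type, blocks))
```

For resnet18 this gives 1 + 2 × 8 + 1 = 18, which is where the name comes from.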

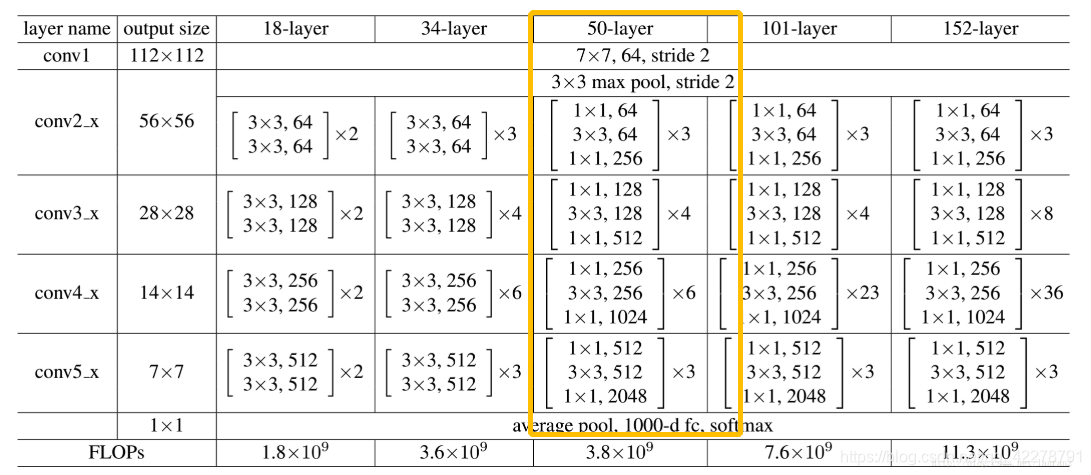

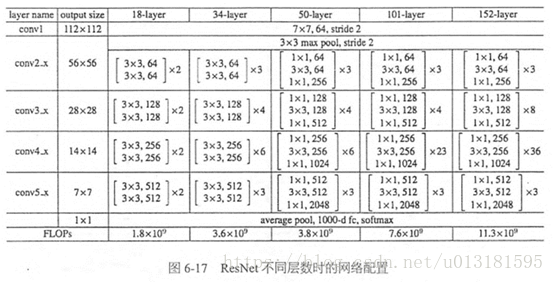

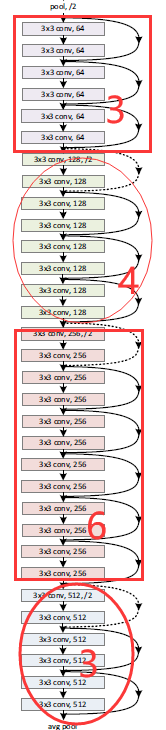

Architecture diagram from the ResNet paper

Reference: "ResNet详细解读" (a detailed walkthrough of ResNet)

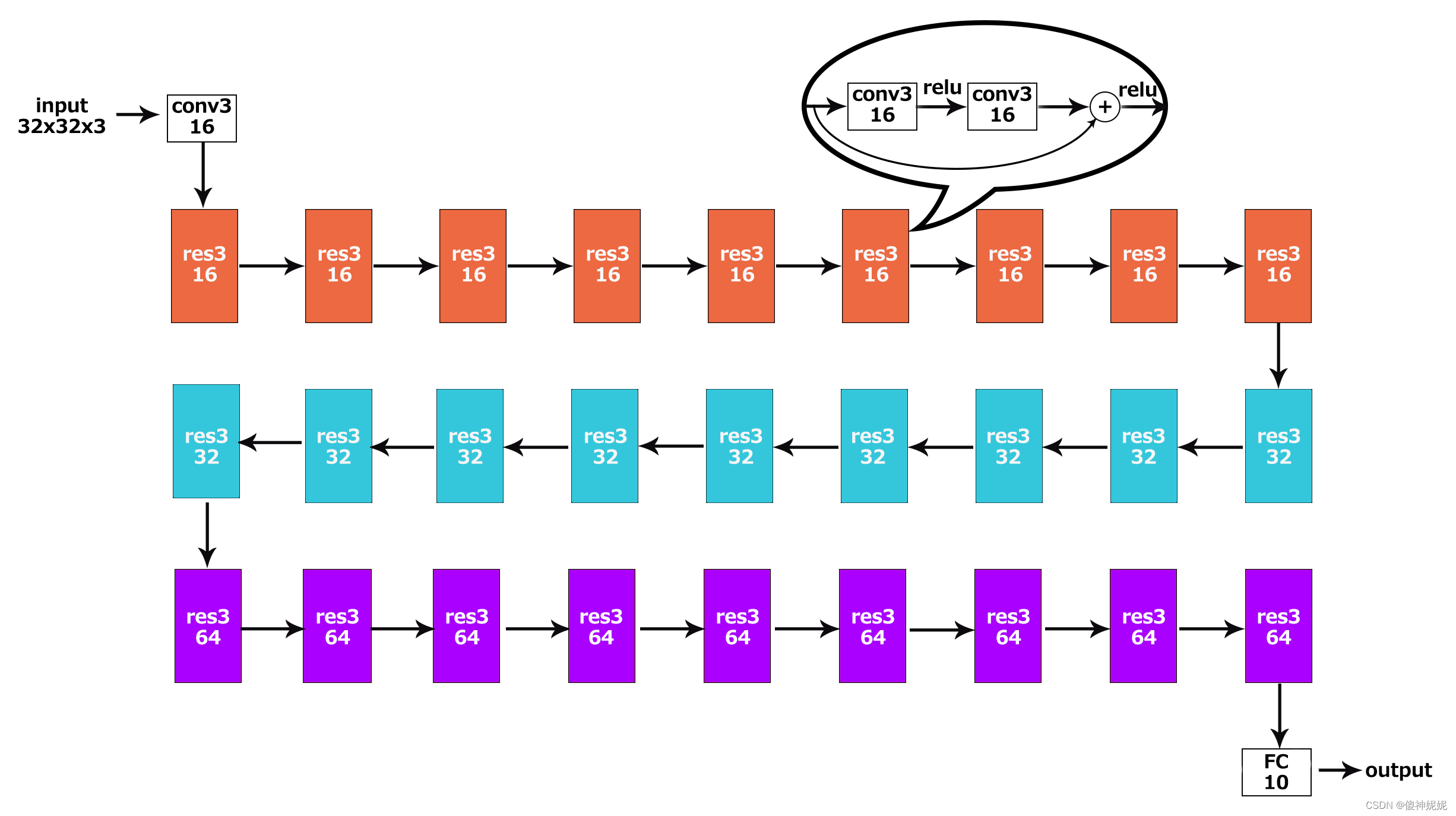

Structure walkthrough:


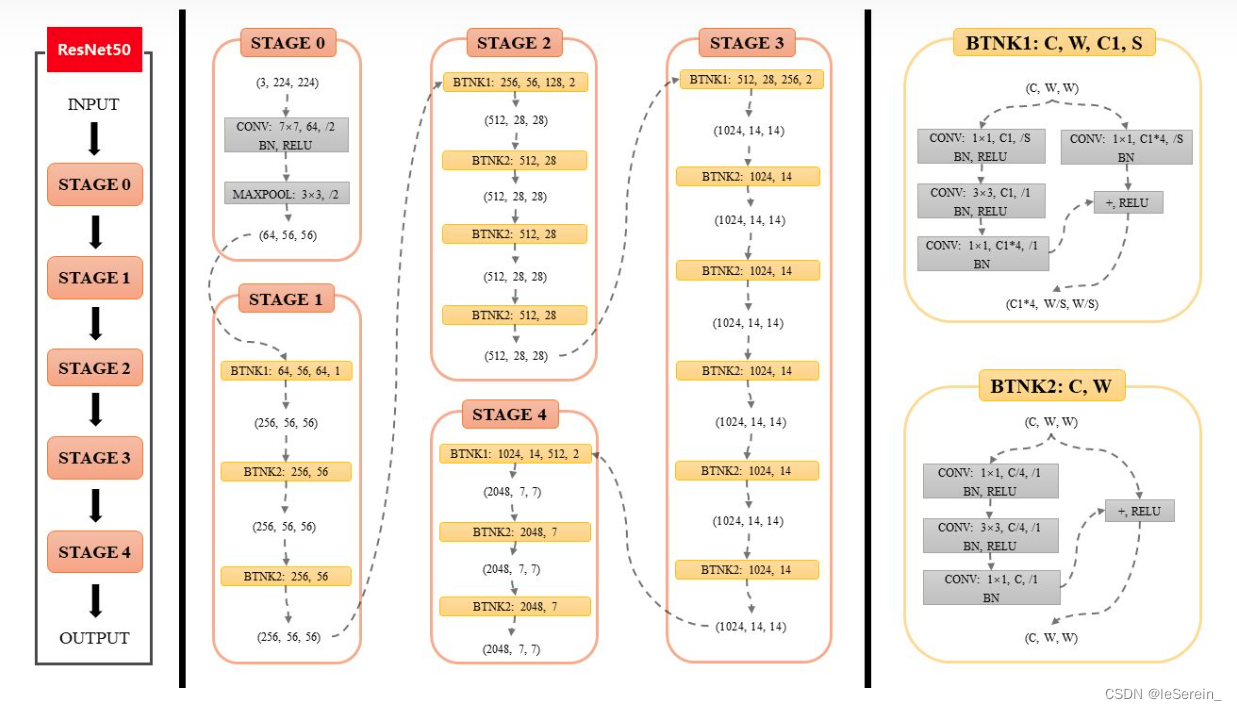

The first layer is a convolution with a 7×7 kernel, stride 2, and padding 3. It is followed by BN, ReLU, and maxpool. Together these form the first convolution module, conv1.
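To see what conv1 does to the spatial size, the standard convolution output-size formula can be applied by hand. This is a rough sketch under two assumptions that are not stated in the text: the input is 224×224, and the maxpool uses a 3×3 window with stride 2 and padding 1 (the settings used in torchvision's ResNet). The helper `conv_out_size` is a hypothetical name.

```python
def conv_out_size(size, kernel, stride, padding):
    """Output side length of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


input_size = 224  # assumed ImageNet-style input

# 7x7 convolution, stride 2, padding 3 (from the text above).
after_conv1 = conv_out_size(input_size, kernel=7, stride=2, padding=3)

# 3x3 maxpool, stride 2, padding 1 (assumed, not given in the text).
after_pool = conv_out_size(after_conv1, kernel=3, stride=2, padding=1)

print(after_conv1, after_pool)  # 112 56
```

Under these assumptions the feature map entering the first stage is 56×56.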


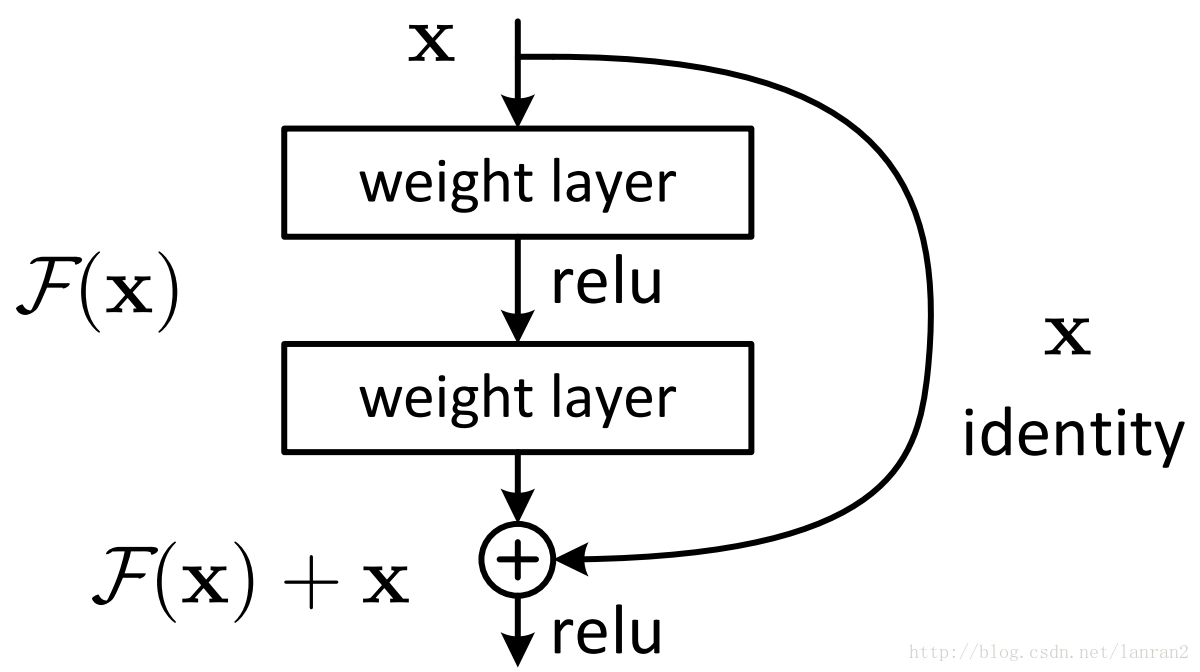

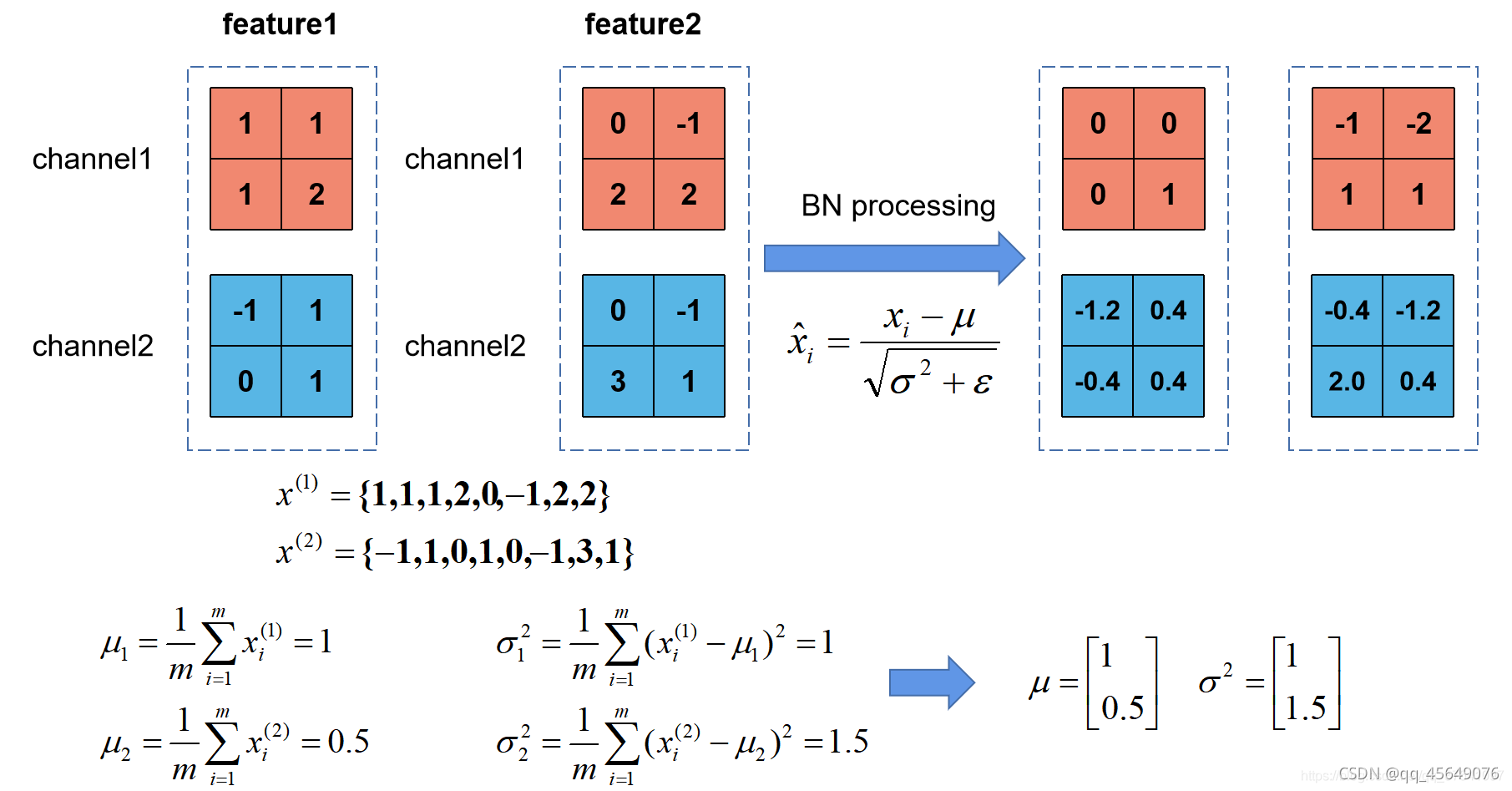

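Next come four stages. In the code, each stage is built by make_layer(). A stage contains several modules, each called a building block; for resnet18 the per-stage block counts are [2, 2, 2, 2], which gives 8 building blocks in total. There are two kinds of block, BasicBlock and Bottleneck: resnet18 and resnet34 use BasicBlock, while resnet50 and deeper use Bottleneck. Both BasicBlock and Bottleneck use a shortcut connection: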
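The bookkeeping that make_layer() has to do can be sketched without any framework. The function below is a hypothetical illustration, not the actual make_layer(): it assumes the usual widths of 64, 128, 256, 512 for the four stages, stride 2 in the first block of stages 2 to 4, and a projection shortcut whenever the stride or the channel count changes.

```python
def plan_stages(blocks_per_stage, expansion):
    """Return one (in_channels, out_channels, stride, needs_downsample) per block.

    expansion is 1 for BasicBlock and 4 for Bottleneck.
    """
    inplanes = 64  # channels coming out of conv1
    plan = []
    for stage_idx, num_blocks in enumerate(blocks_per_stage):
        planes = 64 * 2 ** stage_idx              # 64, 128, 256, 512 (assumed)
        first_stride = 1 if stage_idx == 0 else 2
        for block_idx in range(num_blocks):
            # Only the first block of a stage may change resolution.
            stride = first_stride if block_idx == 0 else 1
            out_channels = planes * expansion
            # The shortcut needs a projection when shapes would not match.
            needs_downsample = stride != 1 or inplanes != out_channels
            plan.append((inplanes, out_channels, stride, needs_downsample))
            inplanes = out_channels
    return plan


resnet18_plan = plan_stages([2, 2, 2, 2], expansion=1)
for block in resnet18_plan:
    print(block)
```

For resnet18 this lists 8 blocks, of which the first block of stages 2, 3, and 4 needs a downsample branch.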


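In BasicBlock, the main path consists of two 3x3 convolutions, each followed by BN. The later `out += residual` line adds the input `x` onto the output (note that `residual = x` is set at the start of `forward`).

```python
import torch.nn as nn


def conv3x3(in_planes, out_planes, stride=1):
    """3x3 convolution with padding 1, the helper that BasicBlock relies on."""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=1, bias=False)


class BasicBlock(nn.Module):
    expansion = 1  # output channels = planes * expansion

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = nn.BatchNorm2d(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = nn.BatchNorm2d(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x  # keep the input for the shortcut connection

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        # Project the input when its shape differs from the main path's output.
        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual  # shortcut: add the (possibly projected) input
        out = self.relu(out)

        return out
```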
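The `out += residual` addition only works when both tensors have the same shape, which is why the block carries an optional `downsample`. The sketch below traces shapes by hand for one BasicBlock. It assumes the projection shortcut is a 1x1 convolution with the same stride as the block (as in torchvision); the helper names are hypothetical.

```python
def conv_out_size(size, kernel, stride, padding):
    """Output side length of a convolution (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def basic_block_shapes(in_shape, planes, stride):
    """Return (main_path, identity, projected) shapes as (C, H, W) tuples."""
    channels, height, width = in_shape
    # conv1 is 3x3 with the block's stride; conv2 is 3x3 with stride 1 and
    # padding 1, so it leaves the spatial size unchanged.
    main = (planes,
            conv_out_size(height, 3, stride, 1),
            conv_out_size(width, 3, stride, 1))
    identity = in_shape  # what `residual = x` holds without downsample
    # Assumed projection shortcut: 1x1 convolution, same stride, no padding.
    projected = (planes,
                 conv_out_size(height, 1, stride, 0),
                 conv_out_size(width, 1, stride, 0))
    return main, identity, projected


# First block of the second stage: channels go 64 -> 128, resolution halves.
main, identity, projected = basic_block_shapes((64, 56, 56), planes=128, stride=2)
print(main, identity, projected)
```

Here the main path produces (128, 28, 28) while the untouched input is (64, 56, 56), so the addition needs the projected shortcut. With `planes=64` and `stride=1` the two shapes already agree and `downsample` can stay `None`.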

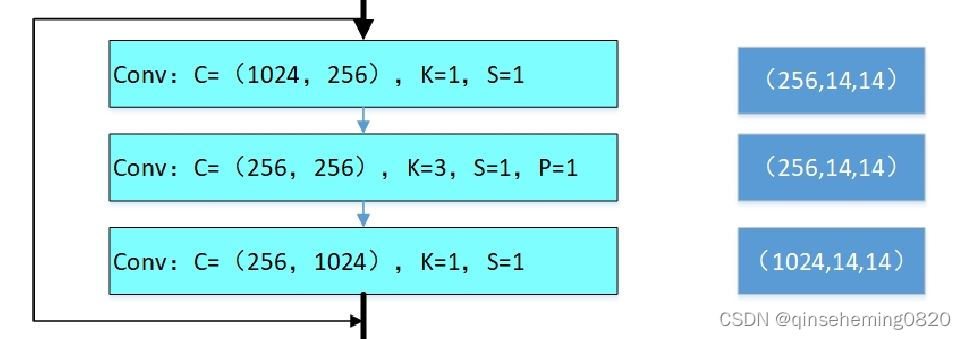

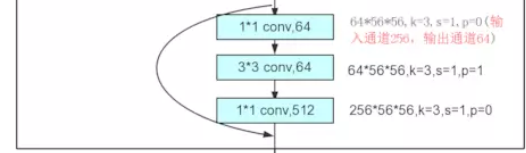

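The Bottleneck block uses 1x1, 3x3, and 1x1 convolutions, which reduces the number of parameters compared with stacking 3x3 convolutions at full width. The first 1x1 convolution reduces the channel count to `planes`, the 3x3 convolution keeps it unchanged, and the last 1x1 convolution expands it to 4 × `planes`. In the first block of stages 2 to 4 the input has 2 × `planes` channels, so the first 1x1 convolution halves the channel count and the block as a whole doubles it. In the remaining blocks of a stage the input already has 4 × `planes` channels, so the block outputs as many channels as it receives.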

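```python
import torch.nn as nn


class Bottleneck(nn.Module):
    expansion = 4  # output channels = planes * expansion

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        # 1x1 convolution: reduce channels to `planes`
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        # 3x3 convolution: keep channels, optionally reduce resolution
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                               padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        # 1x1 convolution: expand channels to planes * 4
        self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(planes * 4)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x  # keep the input for the shortcut connection

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        # Project the input when its shape differs from the main path's output.
        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual  # shortcut: add the (possibly projected) input
        out = self.relu(out)

        return out
```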
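The parameter saving of the Bottleneck design can be estimated by counting convolution weights. This is a back-of-the-envelope sketch with hypothetical helper names. It ignores BN parameters and assumes `bias=False`, and it compares a Bottleneck on 256 channels against two 3x3 convolutions that stay at 256 channels.

```python
def conv_params(in_channels, out_channels, kernel):
    """Weight count of a bias-free 2D convolution with a square kernel."""
    return in_channels * out_channels * kernel * kernel


def bottleneck_params(inplanes, planes, expansion=4):
    """Weights in the 1x1 -> 3x3 -> 1x1 main path of a Bottleneck block."""
    return (conv_params(inplanes, planes, 1)
            + conv_params(planes, planes, 3)
            + conv_params(planes, planes * expansion, 1))


def two_3x3_params(channels):
    """Weights in two stacked 3x3 convolutions at a fixed width."""
    return 2 * conv_params(channels, channels, 3)


bottleneck = bottleneck_params(inplanes=256, planes=64)
plain = two_3x3_params(256)
print(bottleneck, plain)  # 69632 1179648
```

With these numbers the Bottleneck main path uses roughly 17 times fewer weights than the two full-width 3x3 convolutions.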


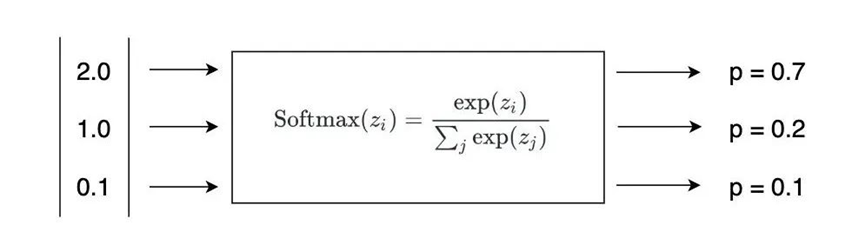

Finally there is an avgpool and a fully connected layer that maps the features to 1000 dimensions (because ImageNet has 1000 classes).
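The size of this final stage can be worked out by hand. The sketch below assumes a 224×224 input, a 3×3 stride-2 maxpool with padding 1, stride 2 in the first 3x3 convolution of stages 2 to 4, and an average pool that collapses the last feature map to 1×1. None of these settings is spelled out in the text, and the helper names are hypothetical.

```python
def conv_out_size(size, kernel, stride, padding):
    """Output side length of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def spatial_trace(input_size=224):
    """Side length after conv1, maxpool, and each of the four stages."""
    sizes = [conv_out_size(input_size, 7, 2, 3)]      # conv1
    sizes.append(conv_out_size(sizes[-1], 3, 2, 1))   # maxpool (assumed settings)
    sizes.append(sizes[-1])                           # stage 1 keeps the size
    for _ in range(3):                                # stages 2-4 halve it
        sizes.append(conv_out_size(sizes[-1], 3, 2, 1))
    return sizes


def fc_params(in_features, num_classes=1000):
    """Weights plus biases of the final fully connected layer."""
    return in_features * num_classes + num_classes


print(spatial_trace())   # [112, 56, 56, 28, 14, 7]
print(fc_params(512))    # resnet18: 512 features after avgpool
print(fc_params(2048))   # resnet50: 512 * 4 features after avgpool
```

Under these assumptions the avgpool sees a 7×7 map, and the fully connected layer has 513,000 parameters for resnet18 and 2,049,000 for resnet50.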

Architecture diagrams and more details:

See https://www.jianshu.com/p/085f4c8256f1

https://blog.csdn.net/weixin_40548136/article/details/88820996

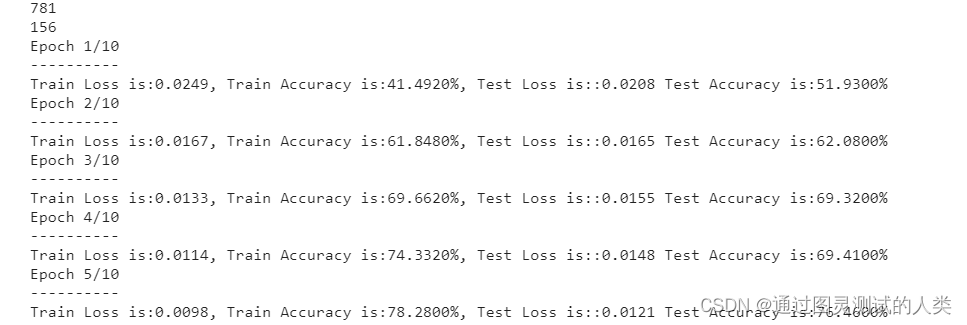

resnet18

resnet50