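Many recent object detection networks use part of ResNet-50 as their backbone, so I went back to the original paper and looked at the network structure. I'm keeping these notes here as a reference to come back to when needed.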

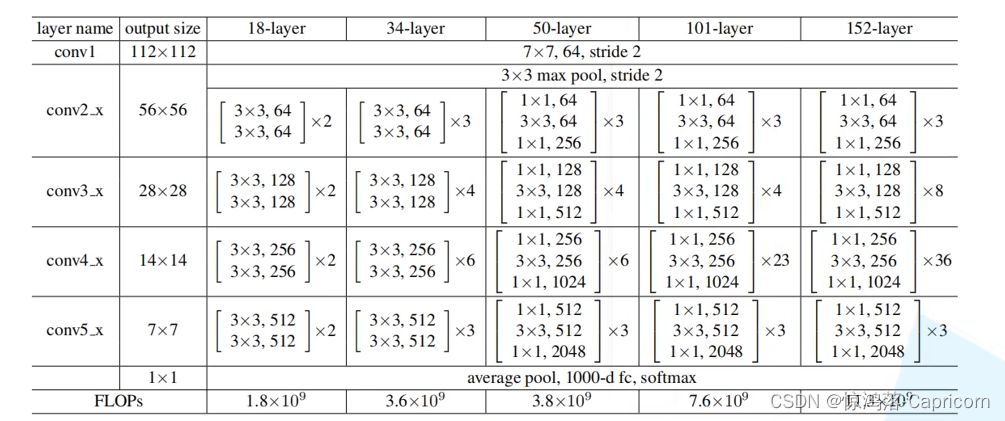

Overall structure

layer0

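First comes layer0, which is identical in every ResNet variant. As shown in the figure, it consists of a 7x7 convolution with stride 2, followed by BN and ReLU, and then a 3x3 max-pooling layer with stride 2.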
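Both operations shrink the feature map according to the usual output-size formula, floor((H + 2p - k) / s) + 1. The sketch below traces a 224x224 input through layer0. It assumes padding 3 for the 7x7 conv and padding 1 for the pool, which are the values used in the code later in this post. The helper names are illustrative only.

```python
def out_size(size, kernel, stride, padding):
    """Output height/width of a conv or pooling layer (floor rounding, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def layer0_shape(size=224):
    """Spatial size after the 7x7/2 conv and after the 3x3/2 max pool of layer0."""
    after_conv = out_size(size, kernel=7, stride=2, padding=3)
    after_pool = out_size(after_conv, kernel=3, stride=2, padding=1)
    return after_conv, after_pool


print(layer0_shape(224))  # (112, 56)
```

So layer0 reduces a 224x224 image to a 56x56 map with 64 channels. layer1 then works on that resolution.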

layer1

Take ResNet-18 and ResNet-50 as examples.

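The BasicBlock of ResNet-18 is shown in the figure; in ResNet-18, layer1 consists of 2 such BasicBlocks.

The block used in ResNet-18 is called a BasicBlock.

The block used in ResNet-50 is called a Bottleneck.

The identity (shortcut) branch and the output of the convolutions are added element-wise, so the two must have exactly the same shape!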

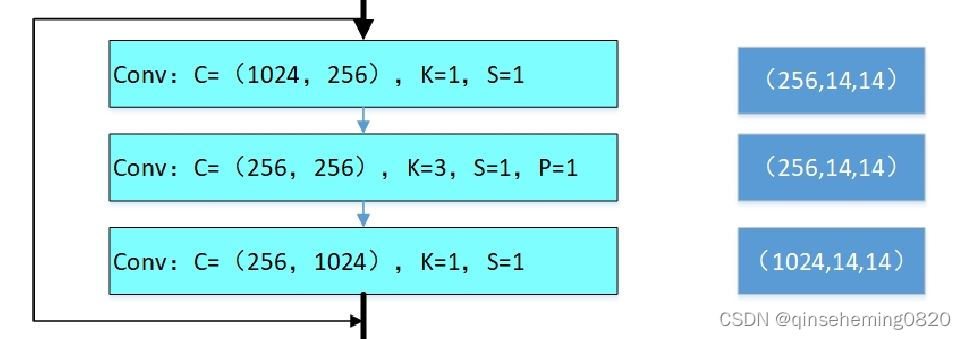
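The addition can be written in the notation of the original paper. F denotes the convolution branch; for a BasicBlock this is 3x3 conv, BN, ReLU, 3x3 conv, BN. σ is the ReLU applied after the sum, and W_s is a 1x1 projection that is used only when the shapes of x and F(x) differ. The placement of BN inside F follows the usual implementation and is an assumption on top of the paper's equations.

```latex
% shapes of x and F(x) match: plain identity shortcut
\mathbf{y} = \sigma\big(\mathcal{F}(\mathbf{x}) + \mathbf{x}\big)

% shapes differ (stride 2 or more channels): 1x1 projection shortcut
\mathbf{y} = \sigma\big(\mathcal{F}(\mathbf{x}) + W_s\,\mathbf{x}\big)
```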

ResNet-50 uses the Bottleneck instead; its layer1 consists of 3 Bottlenecks.

The first Bottleneck takes 64 input channels (the output of layer0); the 2nd and 3rd Bottlenecks take 256 input channels.
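The channel flow of a Bottleneck can be listed mechanically. The sketch assumes the common convention that the two middle convs use a quarter of the output channels. It also assumes a projection shortcut is needed only when the channel count or the spatial size changes. `bottleneck_plan` is a hypothetical helper, not a library function.

```python
def bottleneck_plan(inchannels, outchannels, stride=1):
    """Return the (in, out, kernel, stride) of the three Bottleneck convs and
    whether the shortcut needs a 1x1 projection (assumed standard rule)."""
    mid = outchannels // 4  # middle convs use a quarter of the output channels
    convs = [
        (inchannels, mid, 1, 1),   # 1x1: reduce channels
        (mid, mid, 3, stride),     # 3x3: carries the stride
        (mid, outchannels, 1, 1),  # 1x1: expand channels
    ]
    needs_projection = stride != 1 or inchannels != outchannels
    return convs, needs_projection


# layer1 of ResNet-50: block 1 takes 64 channels, blocks 2 and 3 take 256
print(bottleneck_plan(64, 256))
# ([(64, 64, 1, 1), (64, 64, 3, 1), (64, 256, 1, 1)], True)
print(bottleneck_plan(256, 256))
# ([(256, 64, 1, 1), (64, 64, 3, 1), (64, 256, 1, 1)], False)
```

The first block of layer1 already needs a projection shortcut even without downsampling, because it goes from 64 to 256 channels.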

layer2, layer3 and layer4

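layer2 and the following layers (layer3, layer4) have essentially the same structure as layer1. The only difference is in the first block of each layer. In ResNet-18 that block's first conv layer uses stride=2, and in ResNet-50 its 3x3 conv layer uses stride=2. Either way, the height and width of the feature map are halved. Apart from the channel counts, all other blocks are the same as in layer1. (Putting the stride on the 3x3 conv follows the torchvision implementation; the original implementation applies it in the first 1x1 conv.)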
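Combining the block counts and channel widths from the code below with the stride rule above gives the output shape of every layer. The sketch assumes a 224x224 input and that only the 3x3 conv of each layer's first block changes the spatial size. `RESNET50_LAYERS` and `stage_shapes` are illustrative names.

```python
def out_size(size, kernel, stride, padding):
    """Output height/width of a conv or pooling layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


# (number of Bottlenecks, output channels, stride of the first block) per layer
RESNET50_LAYERS = [(3, 256, 1), (4, 512, 2), (6, 1024, 2), (3, 2048, 2)]


def stage_shapes(size=224):
    size = out_size(size, 7, 2, 3)  # layer0: 7x7/2 conv
    size = out_size(size, 3, 2, 1)  # layer0: 3x3/2 max pool
    shapes = []
    for _blocks, channels, stride in RESNET50_LAYERS:
        # only the 3x3 conv (padding 1) of the first block can downsample
        size = out_size(size, 3, stride, 1)
        shapes.append((channels, size, size))
    return shapes


print(stage_shapes(224))
# [(256, 56, 56), (512, 28, 28), (1024, 14, 14), (2048, 7, 7)]
```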

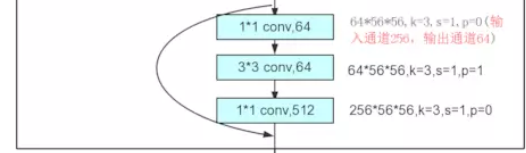

For example, the first Bottleneck of layer2 in ResNet-50:

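The main branch downsamples (stride 2) and increases the channels from 256 to 512, so the input can no longer be added directly. To keep the shapes on both sides of the residual addition equal, the identity branch is downsampled by a 1x1 convolution with stride 2 (followed by BN), which also projects 256 channels to 512.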
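The sketch below checks why a 1x1 stride-2 conv lines up with the 3x3 stride-2 conv of the main branch. It compares the shape of the main branch, a plain identity and the projection for the first Bottleneck of layer2. It assumes padding 1 for the 3x3 conv and padding 0 for the 1x1 conv. `branch_shapes` is a hypothetical helper that returns (channels, height) pairs.

```python
def out_size(size, kernel, stride, padding):
    """Output height/width of a conv layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def branch_shapes(size, inchannels, outchannels, stride):
    """(channels, height) of the main branch, a plain identity and a 1x1 projection."""
    main = (outchannels, out_size(size, 3, stride, 1))        # 3x3 conv, padding 1
    plain_identity = (inchannels, size)                        # input passed through unchanged
    projection = (outchannels, out_size(size, 1, stride, 0))   # 1x1 conv, padding 0
    return main, plain_identity, projection


# first Bottleneck of layer2: 56x56x256 in
print(branch_shapes(56, 256, 512, 2))
# ((512, 28), (256, 56), (512, 28))
```

The plain identity cannot be added to the main branch, but the projection can. With these paddings, both downsampling convs produce (size - 1) // 2 + 1 for any input size.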

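The 2nd, 3rd and 4th Bottlenecks have the same structure as those in layer1, with 512 input and output channels and 128 channels in the two middle convolutions.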

Once each block is mapped onto the overall structure, ResNet-50 is not hard to understand.

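ResNet-34 is built like ResNet-18 (from BasicBlocks), and ResNet-101 and ResNet-152 are built like ResNet-50 (from Bottlenecks). Only the number of blocks in each layer differs.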
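The block counts per layer from the paper's architecture table make the naming concrete. The number in each name counts the weighted layers: the convs inside the blocks, plus the 7x7 conv of layer0, plus the final fc. By this convention the 1x1 shortcut projections are not counted. `CONFIGS` and `depth` are illustrative names.

```python
# (block type, number of blocks in layer1..layer4) for the standard variants
CONFIGS = {
    "resnet18": ("basic", [2, 2, 2, 2]),
    "resnet34": ("basic", [3, 4, 6, 3]),
    "resnet50": ("bottleneck", [3, 4, 6, 3]),
    "resnet101": ("bottleneck", [3, 4, 23, 3]),
    "resnet152": ("bottleneck", [3, 8, 36, 3]),
}

# BasicBlock = two 3x3 convs, Bottleneck = 1x1 + 3x3 + 1x1 convs
CONVS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def depth(name):
    block, counts = CONFIGS[name]
    # convs in all blocks + layer0's 7x7 conv + the fc layer
    return CONVS_PER_BLOCK[block] * sum(counts) + 2


print({name: depth(name) for name in CONFIGS})
# {'resnet18': 18, 'resnet34': 34, 'resnet50': 50, 'resnet101': 101, 'resnet152': 152}
```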

Code implementation

```python
import torch
import torch.nn as nn


# The Bottleneck is used many times, so it is wrapped in a class.
# It is used by every layer (layer1 to layer4) of ResNet-50.
class Bottleneck(nn.Module):
    def __init__(self, inchannels, outchannels, stride=1, identity=None):
        """
        :param inchannels: number of input channels
        :param outchannels: number of output channels
        :param stride: stride of the second (3x3) convolution
        :param identity: shortcut from input to output; if the shapes match the
                         input is added directly, otherwise a 1x1 conv is needed
        """
        super(Bottleneck, self).__init__()
        # channels of the first and second convolutions
        self.mid_channels = outchannels // 4
        # three convolutions; no bias because each one is followed by BN
        self.conv1 = nn.Conv2d(inchannels, self.mid_channels, kernel_size=1, stride=1, bias=False)
        self.bn1 = nn.BatchNorm2d(self.mid_channels)
        self.conv2 = nn.Conv2d(self.mid_channels, self.mid_channels, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(self.mid_channels)
        self.conv3 = nn.Conv2d(self.mid_channels, outchannels, kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(outchannels)
        # inplace=True overwrites the input tensor to save memory
        self.relu = nn.ReLU(inplace=True)
        self.stride = stride
        if identity is not None:
            self.identity = identity
        elif stride != 1 or inchannels != outchannels:
            # projection shortcut: 1x1 conv + BN (no ReLU); its stride matches the
            # 3x3 conv so both branches end up with the same shape
            self.identity = nn.Sequential(
                nn.Conv2d(inchannels, outchannels, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(outchannels),
            )
        else:
            # shapes already match: the input is added unchanged
            self.identity = None

    def forward(self, x):
        # identity (shortcut) branch
        residual = x
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.relu(self.bn2(self.conv2(out)))
        # no ReLU before the addition
        out = self.bn3(self.conv3(out))
        if self.identity is not None:
            residual = self.identity(residual)
        out += residual
        # ReLU after the element-wise addition
        out = self.relu(out)
        return out


class resnet_50(nn.Module):
    def __init__(self, num_classes=1000):
        super(resnet_50, self).__init__()
        # a conv followed by BN usually does not need a bias
        self.layer_0_conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.layer_0_bn1 = nn.BatchNorm2d(64)
        self.layer_0_relu = nn.ReLU(inplace=True)
        self.layer_0_pool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer_1 = self.makelayer(3, 64, 256, 1)
        self.layer_2 = self.makelayer(4, 256, 512, 2)
        self.layer_3 = self.makelayer(6, 512, 1024, 2)
        self.layer_4 = self.makelayer(3, 1024, 2048, 2)
        # global average pooling: 2048x7x7 -> 2048x1x1
        self.a_pool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(2048, num_classes)

    def forward(self, x):
        out = self.layer_0_relu(self.layer_0_bn1(self.layer_0_conv1(x)))
        out = self.layer_0_pool(out)
        out = self.layer_1(out)
        out = self.layer_2(out)
        out = self.layer_3(out)
        out = self.layer_4(out)
        out = self.a_pool(out)
        out = torch.flatten(out, 1)
        out = self.fc(out)
        return out

    # build one layer
    def makelayer(self, number, inchannels, last_channels, stride=1):
        """
        :param number: number of Bottlenecks in the layer
        :param inchannels: input channels of the layer
        :param last_channels: output channels of the last conv in each Bottleneck
        :param stride: stride of the 3x3 conv in the first Bottleneck
                       (1 for layer1, 2 for layer2 to layer4)
        :return: nn.Sequential of Bottlenecks
        """
        layer = []
        # the first Bottleneck may downsample and change channels, so build it separately
        layer.append(Bottleneck(inchannels, last_channels, stride=stride))
        for _ in range(number - 1):
            layer.append(Bottleneck(last_channels, last_channels, stride=1))
        # *layer unpacks the list into separate arguments
        return nn.Sequential(*layer)
```
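One way to sanity-check the architecture without running a model is to count the learnable parameters by hand. The sketch assumes the standard layout:

- bias-free convs, each followed by a BN with a learnable weight and bias (running statistics are buffers, not parameters)
- a 1x1 projection shortcut only in the first block of each layer
- global average pooling and a 2048 -> 1000 fc

`conv_bn`, `bottleneck_params` and `resnet50_params` are hypothetical helpers.

```python
def conv_bn(cin, cout, k):
    """Parameters of a bias-free kxk conv followed by BatchNorm (weight + bias)."""
    return cin * cout * k * k + 2 * cout


def bottleneck_params(cin, cout, projection):
    mid = cout // 4
    total = conv_bn(cin, mid, 1) + conv_bn(mid, mid, 3) + conv_bn(mid, cout, 1)
    if projection:
        total += conv_bn(cin, cout, 1)  # 1x1 projection shortcut + BN
    return total


def resnet50_params(num_classes=1000):
    total = conv_bn(3, 64, 7)  # layer0: 7x7 conv + BN
    cin = 64
    for blocks, cout in [(3, 256), (4, 512), (6, 1024), (3, 2048)]:
        total += bottleneck_params(cin, cout, projection=True)  # first block
        total += (blocks - 1) * bottleneck_params(cout, cout, projection=False)
        cin = cout
    total += 2048 * num_classes + num_classes  # fc weight + bias
    return total


print(resnet50_params())  # 25557032
```

This gives about 25.6M parameters, the figure usually quoted for ResNet-50. For a PyTorch model with this layout, `sum(p.numel() for p in model.parameters())` should report the same number.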