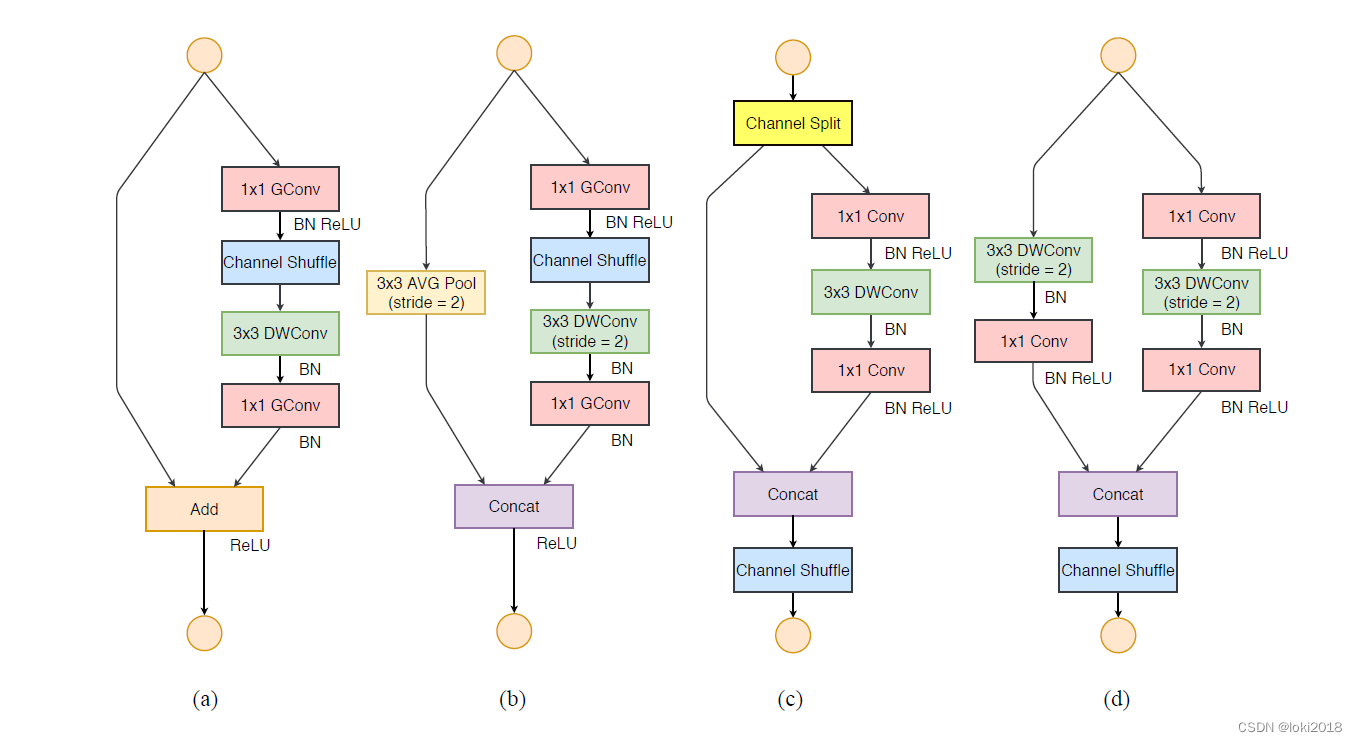

本文使用的是轻量级模型shufflenet,使用keras框架进行训练。

参考链接(模型详解):https://blog.csdn.net/zjn295771349/article/details/89704086

代码如下:

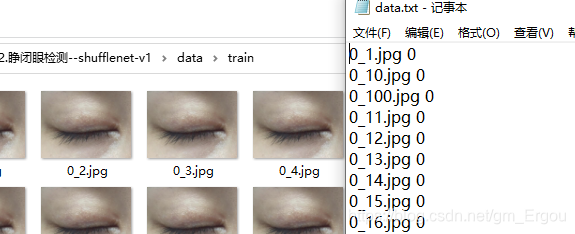

1.data_process.py(数据处理:这里随便选了两张照片,重复造数据,生成数据集)

import numpy as np

import random

import cv2

import os

import shutil# path="data/train/"

# for file in os.listdir(path):

# file_name=path+file

# print(file_name)

# for i in range(100):

# new_file_name=path+file.split('.')[0]+"_"+str(i+1)+'.jpg'

# shutil.copy(file_name,new_file_name)f1=open("data/train/data.txt",'w+')

path="data/train/"

for file in os.listdir(path):file_name=path+fileprint(file_name)if file.endswith('jpg'):f1.write(file+' '+file.split('.')[0].split('_')[0]+'\n')

2.train.py

import keras

from keras import backend as K

from keras.preprocessing.image import ImageDataGenerator

from keras.applications.imagenet_utils import preprocess_input

from keras.utils import plot_model

from shufflenet import ShuffleNet

from keras.preprocessing.image import load_img

from keras.preprocessing import image

from keras.callbacks import CSVLogger, ModelCheckpoint, ReduceLROnPlateau, LearningRateScheduler

import numpy as np

import cv2

import time

import os

import utils

import tensorflow as tfos.environ["CUDA_VISIBLE_DEVICES"] = "1"

config = tf.ConfigProto()

config.gpu_options.allow_growth=True #不全部占满显存, 按需分配start=time.time()#加载数据一

# train_path='../data/eye_data2/train/'

# test_path='../data/eye_data2/test/'

train_path='data/train/'

test_path='data/test/'

enhance_label=1 #数据增强train_data,train_label=utils.load_dataset1(train_path,enhance_label)

test_data,test_label=utils.load_dataset1(test_path,enhance_label)

time=time.time()-start

print("加载数据耗时:",time)log_dir='model2/'

#接着上一次的echo继续训练

inital_epoch = 0# #append:True:如果文件存在则追加(对继续培训很有用);False:覆盖现有文件。 separator:元素分隔符

csv_logger = CSVLogger(log_dir+'log.log', append=(inital_epoch is not 0))

# checkpoint = ModelCheckpoint(filepath='log3/m8.hdf5', verbose=0, save_best_only=True, monitor='val_acc', mode='max')

# #动态设置学习率,按照epoch的次数自动调整学习率

# learn_rates = [0.05, 0.01, 0.005, 0.001, 0.0005]

# lr_scheduler = LearningRateScheduler(lambda epoch: learn_rates[epoch // 30]) #每30个epoch改变一次学习率checkpoint = ModelCheckpoint(log_dir + 'ep{epoch:03d}-loss{loss:.3f}-val_loss{val_loss:.3f}.h5',monitor='val_acc', save_weights_only=False, save_best_only=True, period=5)#调用shufflenet网络架构

model = ShuffleNet(groups=3, pooling='avg')model.compile(optimizer=keras.optimizers.Adam(lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=None, decay=0.0),metrics=['accuracy'], #评价指标loss='categorical_crossentropy') #计算损失---分类交叉熵函数, binary_crossentropy(二分类)# 训练方法二

models = model.fit(train_data,train_label,batch_size=64,epochs=5,verbose=1,shuffle=True,callbacks=[csv_logger, checkpoint],initial_epoch=0, #从指定的epoch开始训练,在这之前的训练时仍有用。validation_split=0.1 #0~1之间,用来指定训练集的一定比例数据作为验证集# validation_data=(test_data, test_label) #指定的验证集,此参数将覆盖validation_spilt。

)#保存权重model.save_weights(),保存模型model.save()

model.save(log_dir+'m2.h5') #保存最后一次迭代的模型

model.save_weights(log_dir+'m1.h5')3.shufflenet.py(网络结构)

from keras import backend as K

from keras_applications.imagenet_utils import _obtain_input_shape

from keras.models import Model

from keras.engine.topology import get_source_inputs

from keras.layers import Activation, Add, Concatenate, GlobalAveragePooling2D,GlobalMaxPooling2D, Input, Dense

from keras.layers import Conv2D, MaxPooling2D, AveragePooling2D, BatchNormalization, Lambda

from keras.layers import DepthwiseConv2D

import numpy as npdef ShuffleNet(include_top=True, input_tensor=None, scale_factor=1.0, pooling='max',input_shape=(224,224,3), groups=1, load_model=None, num_shuffle_units=[3, 7, 3],bottleneck_ratio=0.25, classes=2):if K.backend() != 'tensorflow':raise RuntimeError('Only TensorFlow backend is currently supported, ''as other backends do not support ')name = "ShuffleNet_%.2gX_g%d_br_%.2g_%s" % (scale_factor, groups, bottleneck_ratio, "".join([str(x) for x in num_shuffle_units]))input_shape = _obtain_input_shape(input_shape,default_size=224,min_size=28,require_flatten=include_top,data_format=K.image_data_format())out_dim_stage_two = {1: 144, 2: 200, 3: 240, 4: 272, 8: 384} #old# out_dim_stage_two = {1: 48, 2: 80, 3: 120, 4: 160, 8: 240} #---test2# out_dim_stage_two = {1: 48, 2: 66, 3: 84, 4: 100, 8: 120} #---test3# out_dim_stage_two = {1: 24, 2: 36, 3: 48, 4: 60, 8: 72} #---test4,训练不成功if groups not in out_dim_stage_two:raise ValueError("Invalid number of groups.")if pooling not in ['max','avg']:raise ValueError("Invalid value for pooling.")if not (float(scale_factor) * 4).is_integer():raise ValueError("Invalid value for scale_factor. Should be x over 4.")exp = np.insert(np.arange(0, len(num_shuffle_units), dtype=np.float32), 0, 0)out_channels_in_stage = 2 ** expout_channels_in_stage *= out_dim_stage_two[groups] # calculate output channels for each stageout_channels_in_stage[0] = 24 # first stage has always 24 output channelsout_channels_in_stage *= scale_factorout_channels_in_stage = out_channels_in_stage.astype(int)if input_tensor is None:img_input = Input(shape=input_shape)else:if not K.is_keras_tensor(input_tensor):img_input = Input(tensor=input_tensor, shape=input_shape)else:img_input = input_tensor# create shufflenet architecturex = Conv2D(filters=out_channels_in_stage[0], kernel_size=(3, 3), padding='same',use_bias=False, strides=(2, 2), activation="relu", name="conv1")(img_input)x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same', name="maxpool1")(x)# create stages containing shufflenet units beginning at stage 2for stage in range(0, len(num_shuffle_units)):repeat = num_shuffle_units[stage]x = _block(x, out_channels_in_stage, repeat=repeat,bottleneck_ratio=bottleneck_ratio,groups=groups, stage=stage + 2)if pooling == 'avg':x = GlobalAveragePooling2D(name="global_pool")(x)elif pooling == 'max':x = GlobalMaxPooling2D(name="global_pool")(x)if include_top:x = Dense(units=classes, name="fc")(x)x = Activation('softmax', name='softmax')(x)if input_tensor is not None:inputs = get_source_inputs(input_tensor)else:inputs = img_inputmodel = Model(inputs=inputs, outputs=x, name=name)if load_model is not None:model.load_weights('', by_name=True)return modeldef _block(x, channel_map, bottleneck_ratio, repeat=1, groups=1, stage=1):x = _shuffle_unit(x, in_channels=channel_map[stage - 2],out_channels=channel_map[stage - 1], strides=2,groups=groups, bottleneck_ratio=bottleneck_ratio,stage=stage, block=1)for i in range(1, repeat + 1):x = _shuffle_unit(x, in_channels=channel_map[stage - 1],out_channels=channel_map[stage - 1], strides=1,groups=groups, bottleneck_ratio=bottleneck_ratio,stage=stage, block=(i + 1))return xdef _shuffle_unit(inputs, in_channels, out_channels, groups, bottleneck_ratio, strides=2, stage=1, block=1):if K.image_data_format() == 'channels_last':bn_axis = -1else:bn_axis = 1prefix = 'stage%d/block%d' % (stage, block)#if strides >= 2:#out_channels -= in_channels# default: 1/4 of the output channel of a ShuffleNet Unitbottleneck_channels = int(out_channels * bottleneck_ratio)groups = (1 if stage == 2 and block == 1 else groups)x = _group_conv(inputs, in_channels, out_channels=bottleneck_channels,groups=(1 if stage == 2 and block == 1 else groups),name='%s/1x1_gconv_1' % prefix)x = BatchNormalization(axis=bn_axis, name='%s/bn_gconv_1' % prefix)(x)x = Activation('relu', name='%s/relu_gconv_1' % prefix)(x)x = Lambda(channel_shuffle, arguments={'groups': groups}, name='%s/channel_shuffle' % prefix)(x)x = DepthwiseConv2D(kernel_size=(3, 3), padding="same", use_bias=False,strides=strides, name='%s/1x1_dwconv_1' % prefix)(x)x = BatchNormalization(axis=bn_axis, name='%s/bn_dwconv_1' % prefix)(x)x = _group_conv(x, bottleneck_channels, out_channels=out_channels if strides == 1 else out_channels - in_channels,groups=groups, name='%s/1x1_gconv_2' % prefix)x = BatchNormalization(axis=bn_axis, name='%s/bn_gconv_2' % prefix)(x)if strides < 2:ret = Add(name='%s/add' % prefix)([x, inputs])else:avg = AveragePooling2D(pool_size=3, strides=2, padding='same', name='%s/avg_pool' % prefix)(inputs)ret = Concatenate(bn_axis, name='%s/concat' % prefix)([x, avg])ret = Activation('relu', name='%s/relu_out' % prefix)(ret)return retdef _group_conv(x, in_channels, out_channels, groups, kernel=1, stride=1, name=''):if groups == 1:return Conv2D(filters=out_channels, kernel_size=kernel, padding='same',use_bias=False, strides=stride, name=name)(x)# number of intput channels per groupig = in_channels // groupsgroup_list = []# print(out_channels,groups)assert out_channels % groups == 0for i in range(groups):offset = i * iggroup = Lambda(lambda z: z[:, :, :, offset: offset + ig], name='%s/g%d_slice' % (name, i))(x)group_list.append(Conv2D(int(0.5 + out_channels / groups), kernel_size=kernel, strides=stride,use_bias=False, padding='same', name='%s_/g%d' % (name, i))(group))return Concatenate(name='%s/concat' % name)(group_list)def channel_shuffle(x, groups):height, width, in_channels = x.shape.as_list()[1:]channels_per_group = in_channels // groupsx = K.reshape(x, [-1, height, width, groups, channels_per_group])x = K.permute_dimensions(x, (0, 1, 2, 4, 3)) # transposex = K.reshape(x, [-1, height, width, in_channels])return x

4.utils.py

from keras.applications.imagenet_utils import preprocess_input

from keras.preprocessing.image import load_img

from keras.preprocessing import image

import numpy as np

import random

import cv2

import osdef one_hot(data, num_classes):return np.squeeze(np.eye(num_classes)[data.reshape(-1)])# 限制学习率下标

def get_num(a):if a>4:a=4return a#预测结果返回0、1

def get_result(pre):if pre[0]>pre[1]:return 0else:return 1def preprocess(x):x = np.expand_dims(x, axis=0)x = preprocess_input(x)x /= 255.0x -= 0.5x *= 2.0return xdef random_enhance(path,file): #随机颜色img = cv2.imread(path+file)img = cv2.resize(img, (224,224), interpolation=cv2.INTER_CUBIC)# 1.图片随机裁剪img = cv2.copyMakeBorder(img,8,8,8,8,cv2.BORDER_CONSTANT,value=(0,0,0)) #扩大填充黑色upper_x = random.randint(0,16)dowm_x =upper_x+224upper_y = random.randint(0,16)dowm_y = upper_y+224image = img[upper_x:dowm_x,upper_y:dowm_y]num1=random.randint(0,1)# 2.随机翻转if (num1==0):image=cv2.flip(image, 1) #1:水平翻转 0:垂直翻转 -1:水平垂直翻转img_hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)w=image.shape[0]h=image.shape[1]num2=random.randint(0,5)# 1.hsv——色调if (num2==0):set_h = np.random.uniform(-3,8)for i in range(w):for j in range(h):hsv_h=img_hsv[i,j][0]hsv_h+=set_hif hsv_h>255:hsv_h=255if hsv_h<0:hsv_h=0img_hsv[i,j][0]=hsv_himage = cv2.cvtColor(img_hsv, cv2.COLOR_HSV2BGR)# 2.hsv——饱和度if (num2==1):set_s = np.random.uniform(-30,30)for i in range(w):for j in range(h):hsv_s=img_hsv[i,j][1]hsv_s+=set_sif hsv_s>255:hsv_s=255if hsv_s<0:hsv_s=0img_hsv[i,j][1]=hsv_simage = cv2.cvtColor(img_hsv, cv2.COLOR_HSV2BGR)# 3.hsv——亮度if (num2==2):set_v = np.random.uniform(-30,30)for i in range(w):for j in range(h):v=img_hsv[i,j][2]v+=set_vif v>255:v=255if v<0:v=0img_hsv[i,j][2]=vimage = cv2.cvtColor(img_hsv, cv2.COLOR_HSV2BGR)return image#自己写的图片加载

def load_dataset1(path,enhance_label):dataset = []labels = []n=0f1=open(path+'data.txt','r')for line in f1.readlines():n=n+1print(line,'---',n)file_name=line.strip().split(' ')[0]label=int(line.strip().split(' ')[-1])# print(file_name,label)if enhance_label==0:# 1.原数据加载pic=cv2.imread(path+file_name)pic=cv2.resize(pic,(224,224), interpolation=cv2.INTER_CUBIC)dataset.append(pic)labels.append(label)if enhance_label==1:# 2.数据随机增强后加载pic=random_enhance(path,file_name)dataset.append(pic)labels.append(label)dataset=np.array(dataset)labels=np.array(labels)labels=one_hot(labels, 2)return dataset, labels#generator

def load_dataset2(path,enhance_label):while 1:dataset = []labels = []batch_size=64f1=open(path+'label-shuffle.txt','r') lines=f1.readlines()number=np.random.randint(0,len(lines),size=batch_size)for i in range(batch_size):num=number[i]file_name=lines[num].strip().split(' ')[0]label=int(lines[num].strip().split(' ')[-1])# print(file_name,label)if enhance_label==0:# 1.原数据加载pic=cv2.imread(path+file_name)pic=cv2.resize(pic,(224,224), interpolation=cv2.INTER_CUBIC)dataset.append(pic)labels.append(label)if enhance_label==1:# 2.数据随机增强后加载pic=random_enhance(path,file_name)dataset.append(pic)labels.append(label)dataset=np.array(dataset)labels=np.array(labels)labels=one_hot(labels, 2)yield dataset, labels#按标签批量预测

def get_acc(model, path):n=0total=0f1 = open(path + 'data.txt', 'r')for line in f1.readlines():total += 1file_name=line.strip().split(' ')[0]label=int(line.strip().split(' ')[-1])# print(file_name,label)# # keras加载图片# img = load_img(path + file_name, target_size=(224, 224))# # img = image.img_to_array(img) / 255.0# img = np.expand_dims(img, axis=0)# opencv加载图片img=cv2.imread(path + file_name)img=cv2.resize(img,(224,224), interpolation=cv2.INTER_CUBIC)# img = image.img_to_array(img) / 255.0img = np.expand_dims(img, axis=0)img=np.array(img)predictions = model.predict(img)result = get_result(predictions)print("pre_value:", result,predictions,'---'+str(total))if result==label:n += 1acc=n/totalprint("acc:",acc)return acc# # 加载数据

# test_path='data2/test/'

# train_data_label=load_dataset2(test_path,1)5.test.py

from shufflenet import ShuffleNet

from keras.preprocessing.image import load_img

from keras.preprocessing import image

from keras.applications.imagenet_utils import preprocess_input

from keras.models import load_model

import numpy as np

import utils

import keras

import os

import tensorflow as tfmodel = ShuffleNet(groups=3, pooling='avg')

model.compile(optimizer=keras.optimizers.Adam(lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=None, decay=0.0),metrics=['accuracy'],loss='categorical_crossentropy')model.load_weights('model/m1.h5')# model = load_model('model2/log2-4/m3.hdf5')# 批量预测

path='E:/eye_dataset/test/eye/'

path2='data/test/'

acc=utils.get_acc(model,path2)

print("pre_acc:",acc)# test_path='data/test/'

# test_data,test_label=utils.load_dataset1(test_path,0)

# print(test_data.shape,test_label.shape)

# # 验证以及预测

# loss,acc=model.evaluate(test_data,test_label,verbose=1)

# print("验证集的损失为:",loss," 精度为:",acc)

6.shufflenet2.py(可以在train中把网络改成v2的,训练v2的模型)

from keras import backend as K

from keras_applications.imagenet_utils import _obtain_input_shape

from keras.models import Model

from keras.engine.topology import get_source_inputs

from keras.layers import Activation, Add, Concatenate, GlobalAveragePooling2D,GlobalMaxPooling2D, Input, Dense

from keras.layers import Conv2D, MaxPooling2D, AveragePooling2D, BatchNormalization, Lambda

from keras.layers import DepthwiseConv2D

import numpy as npdef ShuffleNet(include_top=True, input_tensor=None, scale_factor=1.0, pooling='max',input_shape=(224,224,3), groups=1, load_model=None, num_shuffle_units=[3, 7, 3],bottleneck_ratio=0.25, classes=2):if K.backend() != 'tensorflow':raise RuntimeError('Only TensorFlow backend is currently supported, ''as other backends do not support ')name = "ShuffleNet_%.2gX_g%d_br_%.2g_%s" % (scale_factor, groups, bottleneck_ratio, "".join([str(x) for x in num_shuffle_units]))input_shape = _obtain_input_shape(input_shape,default_size=224,min_size=28,require_flatten=include_top,data_format=K.image_data_format())out_dim_stage_two = {1: 144, 2: 200, 3: 240, 4: 272, 8: 384} #old# out_dim_stage_two = {1: 48, 2: 80, 3: 120, 4: 160, 8: 240} #---test2# out_dim_stage_two = {1: 48, 2: 64, 3: 81, 4: 100, 8: 120} #---test3# out_dim_stage_two = {1: 24, 2: 36, 3: 48, 4: 60, 8: 72} #---test4if groups not in out_dim_stage_two:raise ValueError("Invalid number of groups.")if pooling not in ['max','avg']:raise ValueError("Invalid value for pooling.")if not (float(scale_factor) * 4).is_integer():raise ValueError("Invalid value for scale_factor. Should be x over 4.")exp = np.insert(np.arange(0, len(num_shuffle_units), dtype=np.float32), 0, 0)out_channels_in_stage = 2 ** expout_channels_in_stage *= out_dim_stage_two[groups] # calculate output channels for each stageout_channels_in_stage[0] = 24 # first stage has always 24 output channelsout_channels_in_stage *= scale_factorout_channels_in_stage = out_channels_in_stage.astype(int)if input_tensor is None:img_input = Input(shape=input_shape)else:if not K.is_keras_tensor(input_tensor):img_input = Input(tensor=input_tensor, shape=input_shape)else:img_input = input_tensor# create shufflenet architecturex = Conv2D(filters=out_channels_in_stage[0], kernel_size=(3, 3), padding='same',use_bias=False, strides=(2, 2), activation="relu", name="conv1")(img_input)x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same', name="maxpool1")(x)# create stages containing shufflenet units beginning at stage 2for stage in range(0, len(num_shuffle_units)):repeat = num_shuffle_units[stage]x = _block(x, out_channels_in_stage, repeat=repeat,bottleneck_ratio=bottleneck_ratio,groups=groups, stage=stage + 2)if pooling == 'avg':x = GlobalAveragePooling2D(name="global_pool")(x)elif pooling == 'max':x = GlobalMaxPooling2D(name="global_pool")(x)if include_top:x = Dense(units=classes, name="fc")(x)x = Activation('softmax', name='softmax')(x)if input_tensor is not None:inputs = get_source_inputs(input_tensor)else:inputs = img_inputmodel = Model(inputs=inputs, outputs=x, name=name)if load_model is not None:model.load_weights('', by_name=True)return modeldef _block(x, channel_map, bottleneck_ratio, repeat=1, groups=1, stage=1):x = _shuffle_unit(x, in_channels=channel_map[stage - 2],out_channels=channel_map[stage - 1], strides=2,groups=groups, bottleneck_ratio=bottleneck_ratio,stage=stage, block=1)for i in range(1, repeat + 1):x = _shuffle_unit(x, in_channels=channel_map[stage - 1],out_channels=channel_map[stage - 1], strides=1,groups=groups, bottleneck_ratio=bottleneck_ratio,stage=stage, block=(i + 1))return xdef _shuffle_unit(inputs, in_channels, out_channels, groups, bottleneck_ratio, strides=2, stage=1, block=1):if K.image_data_format() == 'channels_last':bn_axis = -1else:bn_axis = 1prefix = 'stage%d/block%d' % (stage, block)#if strides >= 2:#out_channels -= in_channels# default: 1/4 of the output channel of a ShuffleNet Unitbottleneck_channels = int(out_channels * bottleneck_ratio)groups = (1 if stage == 2 and block == 1 else groups)x = _group_conv(inputs, in_channels, out_channels=bottleneck_channels,groups=(1 if stage == 2 and block == 1 else groups),name='%s/1x1_gconv_1' % prefix)x = BatchNormalization(axis=bn_axis, name='%s/bn_gconv_1' % prefix)(x)x = Activation('relu', name='%s/relu_gconv_1' % prefix)(x)x = Lambda(channel_shuffle, arguments={'groups': groups}, name='%s/channel_shuffle' % prefix)(x)x = DepthwiseConv2D(kernel_size=(3, 3), padding="same", use_bias=False,strides=strides, name='%s/1x1_dwconv_1' % prefix)(x)x = BatchNormalization(axis=bn_axis, name='%s/bn_dwconv_1' % prefix)(x)x = _group_conv(x, bottleneck_channels, out_channels=out_channels if strides == 1 else out_channels - in_channels,groups=groups, name='%s/1x1_gconv_2' % prefix)x = BatchNormalization(axis=bn_axis, name='%s/bn_gconv_2' % prefix)(x)if strides < 2:ret = Add(name='%s/add' % prefix)([x, inputs])else:avg = AveragePooling2D(pool_size=3, strides=2, padding='same', name='%s/avg_pool' % prefix)(inputs)ret = Concatenate(bn_axis, name='%s/concat' % prefix)([x, avg])ret = Activation('relu', name='%s/relu_out' % prefix)(ret)return retdef _group_conv(x, in_channels, out_channels, groups, kernel=1, stride=1, name=''):if groups == 1:return Conv2D(filters=out_channels, kernel_size=kernel, padding='same',use_bias=False, strides=stride, name=name)(x)# number of intput channels per groupig = in_channels // groupsgroup_list = []assert out_channels % groups == 0for i in range(groups):offset = i * iggroup = Lambda(lambda z: z[:, :, :, offset: offset + ig], name='%s/g%d_slice' % (name, i))(x)group_list.append(Conv2D(int(0.5 + out_channels / groups), kernel_size=kernel, strides=stride,use_bias=False, padding='same', name='%s_/g%d' % (name, i))(group))return Concatenate(name='%s/concat' % name)(group_list)def channel_shuffle(x, groups):height, width, in_channels = x.shape.as_list()[1:]channels_per_group = in_channels // groupsx = K.reshape(x, [-1, height, width, groups, channels_per_group])x = K.permute_dimensions(x, (0, 1, 2, 4, 3)) # transposex = K.reshape(x, [-1, height, width, in_channels])return x

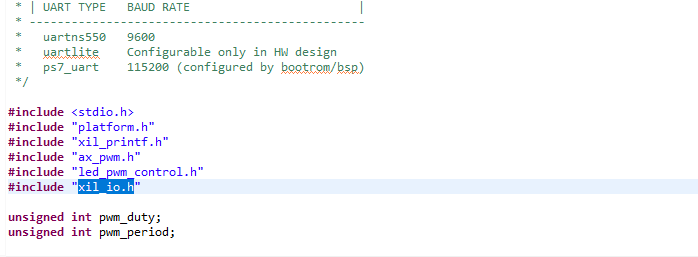

7.h5_2_pb.py(模型转换,keras的h5模型转成pb模型)

#*-coding:utf-8-*"""

将keras的.h5的模型文件,转换成TensorFlow的pb文件

"""

# ==========================================================

import keras

from keras.models import load_model

import tensorflow as tf

import os

from keras import backend

from keras.applications.mobilenetv2 import MobileNetV2

from keras.layers import Input

from keras.preprocessing import image

from keras.applications.mobilenetv2 import preprocess_input, decode_predictions

from keras.applications.inception_resnet_v2 import InceptionResNetV2

from shufflenet import ShuffleNet

from tensorflow.python.framework import graph_util, graph_iodef h5_to_pb(h5_model, output_dir, model_name, out_prefix="output_", log_tensorboard=True):if os.path.exists(output_dir) == False:os.mkdir(output_dir)out_nodes = []for i in range(len(h5_model.outputs)):out_nodes.append(out_prefix + str(i + 1))tf.identity(h5_model.output[i], out_prefix + str(i + 1))sess = backend.get_session()# 写入pb模型文件init_graph = sess.graph.as_graph_def()main_graph = graph_util.convert_variables_to_constants(sess, init_graph, out_nodes)graph_io.write_graph(main_graph, output_dir, name=model_name, as_text=False)# # 输出日志文件# if log_tensorboard:# from tensorflow.python.tools import import_pb_to_tensorboard# import_pb_to_tensorboard.import_to_tensorboard(os.path.join(output_dir, model_name), output_dir)if __name__ == '__main__':# .h模型文件路径参数input_path = 'model/'weight_file = 'm2.h5'weight_file_path = os.path.join(input_path, weight_file)output_dir = input_pathoutput_graph_name = 'test1.pb'# # 加载方法1:疑似load_model有问题,权重未加载进来# h5_model = load_model(weight_file_path)# 加载方法2h5_model = ShuffleNet(groups=3, pooling='avg')h5_model.load_weights(weight_file_path)h5_model.summary()h5_to_pb(h5_model, output_dir=output_dir, model_name=output_graph_name)print('Transform success!')8.test_pb.py(测试pb模型结果)

import tensorflow as tf

import numpy as np

import cv2

import os

from keras.preprocessing.image import load_img

from utils import get_resultpb_path = 'model/test1.pb'

with tf.gfile.FastGFile(pb_path,'rb') as model_file:graph_def = tf.GraphDef()graph_def.ParseFromString(model_file.read())tf.import_graph_def(graph_def, name='')# print(graph_def)with tf.Session() as sess:tf.global_variables_initializer()input_1 = sess.graph.get_tensor_by_name("input_1:0")# pred = sess.graph.get_tensor_by_name("softmax/Softmax:0")pred = sess.graph.get_tensor_by_name("output_1:0")# # 查看网络结构# ops = sess.graph.get_operations()# for op in ops:# print(op.name, op.outputs)n=0total=0path='E:/eye_dataset/test/eye/'path2='data/test/'f1 = open(path2 + 'data.txt', 'r')for line in f1.readlines():total += 1file_name=line.strip().split(' ')[0] #文件名label=int(line.strip().split(' ')[-1]) #标签# opencv加载图片img=cv2.imread(path2 + file_name)img=cv2.resize(img,(224,224), interpolation=cv2.INTER_CUBIC)img = np.expand_dims(img, axis=0)img=np.array(img)pre = sess.run(pred, feed_dict={input_1: img})result = get_result(pre)print("pre_value:", result,pre,'---'+str(total))if result==label:n += 1acc=n/totalprint("acc:",acc)