Sparsity and L1 Regularization

Learning Objectives:

- Calculate the size of a model

- Apply L1 regularization to increase sparsity and thereby reduce model size

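One way to reduce complexity is to use a regularization function that drives weights to exactly zero. For a linear model such as linear regression, a weight of zero is equivalent to not using the corresponding feature at all. Besides helping to avoid overfitting, the resulting model is also more efficient.

L1 regularization is a good way to increase sparsity.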
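To make that claim concrete: one common way to apply an L1 penalty is the soft-thresholding (proximal) step below, which subtracts a fixed amount from every weight's magnitude and clamps anything that would cross zero to exactly zero. The analogous L2 step only rescales the weights, so it never produces exact zeros. This is a minimal numpy sketch; `l1_prox` and `l2_prox` are illustrative names that are not part of the exercise code, and TensorFlow's FtrlOptimizer applies its L1 penalty in its own way rather than through this exact function.

```python
import numpy as np


def l1_prox(w, threshold):
    """Soft-thresholding: shrink each |w_i| by `threshold`, clamping at zero."""
    return np.sign(w) * np.maximum(np.abs(w) - threshold, 0.0)


def l2_prox(w, strength):
    """Proximal step of (strength / 2) * ||w||^2: a uniform rescaling."""
    return w / (1.0 + strength)


w = np.array([0.05, -0.3, 1.2, -0.02, 0.6])
print(np.count_nonzero(l1_prox(w, 0.1)))  # → 3
print(np.count_nonzero(l2_prox(w, 0.1)))  # → 5
```

The two small weights (0.05 and -0.02) fall below the threshold and become exactly zero under L1, while L2 merely shrinks all five.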

Imports, same as before

```python
# This exercise uses the TensorFlow 1.x API (tf.logging, tf.contrib, Estimators).
import math

from IPython import display
from matplotlib import cm
from matplotlib import gridspec
from matplotlib import pyplot as plt
import numpy as np
import pandas as pd
from sklearn import metrics
import tensorflow as tf
from tensorflow.python.data import Dataset

tf.logging.set_verbosity(tf.logging.ERROR)
pd.options.display.max_rows = 10
pd.options.display.float_format = '{:.1f}'.format

california_housing_dataframe = pd.read_csv(
    "https://storage.googleapis.com/mledu-datasets/california_housing_train.csv",
    sep=",")

# Shuffle the rows so that the head/tail training/validation split is random.
california_housing_dataframe = california_housing_dataframe.reindex(
    np.random.permutation(california_housing_dataframe.index))
```

Preprocess features, same as before

```python
def preprocess_features(california_housing_dataframe):
    """Prepares input features from California housing data set.

    Args:
      california_housing_dataframe: A Pandas DataFrame expected to contain data
        from the California housing data set.
    Returns:
      A DataFrame that contains the features to be used for the model, including
      synthetic features.
    """
    selected_features = california_housing_dataframe[
        ["latitude",
         "longitude",
         "housing_median_age",
         "total_rooms",
         "total_bedrooms",
         "population",
         "households",
         "median_income"]]
    processed_features = selected_features.copy()
    # Create a synthetic feature.
    processed_features["rooms_per_person"] = (
        california_housing_dataframe["total_rooms"] /
        california_housing_dataframe["population"])
    return processed_features


def preprocess_targets(california_housing_dataframe):
    """Prepares target features (i.e., labels) from California housing data set.

    Args:
      california_housing_dataframe: A Pandas DataFrame expected to contain data
        from the California housing data set.
    Returns:
      A DataFrame that contains the target feature.
    """
    output_targets = pd.DataFrame()
    # Create a boolean categorical feature representing whether the
    # median_house_value is above a set threshold.
    output_targets["median_house_value_is_high"] = (
        california_housing_dataframe["median_house_value"] > 265000).astype(float)
    return output_targets
```

Training and validation sets, same as before

```python
# Choose the first 12000 (out of 17000) examples for training.
training_examples = preprocess_features(california_housing_dataframe.head(12000))
training_targets = preprocess_targets(california_housing_dataframe.head(12000))

# Choose the last 5000 (out of 17000) examples for validation.
validation_examples = preprocess_features(california_housing_dataframe.tail(5000))
validation_targets = preprocess_targets(california_housing_dataframe.tail(5000))

# Double-check that we've done the right thing.
print("Training examples summary:")
display.display(training_examples.describe())
print("Validation examples summary:")
display.display(validation_examples.describe())

print("Training targets summary:")
display.display(training_targets.describe())
print("Validation targets summary:")
display.display(validation_targets.describe())
```

Input function, same as before

```python
def my_input_fn(features, targets, batch_size=1, shuffle=True, num_epochs=None):
    """Feeds batches of (features, labels) to the model.

    Args:
      features: pandas DataFrame of features
      targets: pandas DataFrame of targets
      batch_size: Size of batches to be passed to the model
      shuffle: True or False. Whether to shuffle the data.
      num_epochs: Number of epochs for which data should be repeated. None = repeat indefinitely
    Returns:
      Tuple of (features, labels) for next data batch
    """
    # Convert pandas data into a dict of np arrays.
    features = {key: np.array(value) for key, value in dict(features).items()}

    # Construct a dataset, and configure batching/repeating.
    ds = Dataset.from_tensor_slices((features, targets))  # warning: 2GB limit
    ds = ds.batch(batch_size).repeat(num_epochs)

    # Shuffle the data, if specified.
    if shuffle:
        ds = ds.shuffle(10000)

    # Return the next batch of data.
    features, labels = ds.make_one_shot_iterator().get_next()
    return features, labels
```

Bucketing function

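```python
# get_quantile_based_buckets returns num_buckets boundaries placed at evenly
# spaced quantiles of feature_values; num_buckets boundaries split the feature
# into num_buckets + 1 buckets.
def get_quantile_based_buckets(feature_values, num_buckets):
    quantiles = feature_values.quantile(
        [(i + 1.) / (num_buckets + 1.) for i in range(num_buckets)])
    return [quantiles[q] for q in quantiles.keys()]
```

Bucketize the example features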
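A quick way to see what `get_quantile_based_buckets` produces, and how a bucketized column then maps raw values to bucket indices, is the sketch below. It uses a toy pandas Series instead of the housing data, and `np.digitize` as a stand-in for `tf.feature_column.bucketized_column`; the assumption is that both place a value `x` into bucket `i` when `boundaries[i-1] <= x < boundaries[i]`. The `.tolist()` return is equivalent to the list comprehension in the function above.

```python
import numpy as np
import pandas as pd


def get_quantile_based_buckets(feature_values, num_buckets):
    # Boundaries at quantiles 1/(n+1), 2/(n+1), ..., n/(n+1).
    quantiles = feature_values.quantile(
        [(i + 1.0) / (num_buckets + 1.0) for i in range(num_buckets)])
    return quantiles.tolist()


values = pd.Series(range(1, 10), dtype=float)     # 1.0, 2.0, ..., 9.0
boundaries = get_quantile_based_buckets(values, 3)
print(boundaries)                                 # → [3.0, 5.0, 7.0]

# Bucket index for each raw value: (-inf, 3), [3, 5), [5, 7), [7, +inf).
bucket_ids = np.digitize([1.0, 3.0, 4.0, 5.0, 9.0], boundaries)
print(bucket_ids.tolist())                        # → [0, 1, 1, 2, 3]
```

Three boundaries give four buckets, so a call such as `get_quantile_based_buckets(training_examples["households"], 10)` yields eleven buckets for `households`.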

```python
def construct_feature_columns():
    """Construct the TensorFlow Feature Columns.

    Returns:
      A set of feature columns
    """
    bucketized_households = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("households"),
        boundaries=get_quantile_based_buckets(training_examples["households"], 10))
    bucketized_longitude = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("longitude"),
        boundaries=get_quantile_based_buckets(training_examples["longitude"], 50))
    bucketized_latitude = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("latitude"),
        boundaries=get_quantile_based_buckets(training_examples["latitude"], 50))
    bucketized_housing_median_age = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("housing_median_age"),
        boundaries=get_quantile_based_buckets(training_examples["housing_median_age"], 10))
    bucketized_total_rooms = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("total_rooms"),
        boundaries=get_quantile_based_buckets(training_examples["total_rooms"], 10))
    bucketized_total_bedrooms = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("total_bedrooms"),
        boundaries=get_quantile_based_buckets(training_examples["total_bedrooms"], 10))
    bucketized_population = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("population"),
        boundaries=get_quantile_based_buckets(training_examples["population"], 10))
    bucketized_median_income = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("median_income"),
        boundaries=get_quantile_based_buckets(training_examples["median_income"], 10))
    bucketized_rooms_per_person = tf.feature_column.bucketized_column(
        tf.feature_column.numeric_column("rooms_per_person"),
        boundaries=get_quantile_based_buckets(training_examples["rooms_per_person"], 10))

    # Add a feature cross of longitude and latitude.
    # hash_bucket_size sets the number of hash buckets for the crossed feature.
    # The more combinations the cross produces, the larger hash_bucket_size
    # should be, to reduce hash collisions.
    long_x_lat = tf.feature_column.crossed_column(
        set([bucketized_longitude, bucketized_latitude]), hash_bucket_size=1000)

    feature_columns = set([
        long_x_lat,
        bucketized_longitude,
        bucketized_latitude,
        bucketized_housing_median_age,
        bucketized_total_rooms,
        bucketized_total_bedrooms,
        bucketized_population,
        bucketized_households,
        bucketized_median_income,
        bucketized_rooms_per_person])

    return feature_columns
```

Computing model size

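To compute the model size, we simply count the number of non-zero parameters. We provide a helper function for this below. It relies on fairly deep knowledge of the Estimator API; don't worry if you don't understand how it works.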
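The counting rule itself does not depend on TensorFlow. The sketch below applies the same name filter to a plain dict that maps variable names to numpy arrays. The variable names and values are hypothetical examples, chosen only to resemble Estimator variable names, and `model_size_from_dict` is an illustrative helper, not part of the Estimator API.

```python
import numpy as np

# Hypothetical variables, loosely resembling what
# estimator.get_variable_names() / estimator.get_variable_value() return.
variables = {
    "global_step": np.array(300),
    "linear/linear_model/bias_weights": np.array([-1.2]),
    "linear/linear_model/households_bucketized/weights": np.array([[0.0], [0.4], [0.0]]),
    "linear/linear_model/households_bucketized/weights/Ftrl": np.array([[0.1], [0.2], [0.3]]),
    "linear/linear_model/latitude_bucketized/weights": np.array([[0.0], [-0.7], [0.2]]),
}

EXCLUDED_SUBSTRINGS = ["global_step", "centered_bias_weight", "bias_weight", "Ftrl"]


def model_size_from_dict(variables):
    """Count non-zero entries across all variables whose names are not excluded."""
    size = 0
    for name, value in variables.items():
        if not any(s in name for s in EXCLUDED_SUBSTRINGS):
            size += np.count_nonzero(value)
    return size


print(model_size_from_dict(variables))  # → 3
```

Only the two `.../weights` arrays are counted (one plus two non-zero entries); the step counter, the bias, and the optimizer's `Ftrl` slot are skipped.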

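```python
def model_size(estimator):
    # Get the names of all variables in the trained estimator.
    variables = estimator.get_variable_names()
    size = 0
    for variable in variables:
        # Skip variables that are not feature weights:
        #   'global_step'            : the training-step counter
        #   'centered_bias_weight',
        #   'bias_weight'            : bias (intercept) terms
        #   'Ftrl'                   : FtrlOptimizer's auxiliary slot variables
        #                              (accumulators), not model parameters
        if not any(x in variable for x in ['global_step',
                                           'centered_bias_weight',
                                           'bias_weight',
                                           'Ftrl']):
            # Add the number of non-zero entries in this weight variable.
            size += np.count_nonzero(estimator.get_variable_value(variable))
    return size
```

Reducing model size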

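Your team needs to build a highly accurate logistic regression model for the SmartRing, a ring so smart it can sense the demographics of a city block (median_income, avg_rooms, households, etc.) and tell you whether that block is expensive to live in.

Since the SmartRing is small, the engineering team has determined that it can only handle a model with no more than 600 parameters. Meanwhile, the product management team has determined that the model cannot launch unless its log loss on the held-out test set is below 0.35.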
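The two release criteria can be written down directly. Below, `binary_log_loss` spells out the usual binary log loss, -(1/N) * sum(y*log(p) + (1-y)*log(1-p)), which should agree with `sklearn.metrics.log_loss` on binary labels up to how probabilities are clipped. `meets_smartring_constraints` is a hypothetical helper name that encodes the 600-parameter and 0.35 limits; the labels and probabilities are made-up numbers for a worked example.

```python
import math


def binary_log_loss(labels, probs, eps=1e-15):
    """Mean of -[y*log(p) + (1-y)*log(1-p)], with p clipped away from 0 and 1."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


def meets_smartring_constraints(model_size, log_loss, max_params=600, max_log_loss=0.35):
    """Hypothetical helper: True only if both release criteria hold."""
    return model_size <= max_params and log_loss < max_log_loss


loss = binary_log_loss([1, 0, 1, 0], [0.9, 0.2, 0.7, 0.4])
print(round(loss, 3))                          # → 0.299
print(meets_smartring_constraints(550, loss))  # → True
print(meets_smartring_constraints(650, loss))  # → False
```

Here (-ln 0.9 - ln 0.8 - ln 0.7 - ln 0.6) / 4 ≈ 0.299, which is under 0.35, so only the parameter count decides the outcome.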

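Can you use your secret weapon, L1 regularization, to tune the model so that it satisfies both the size and the accuracy constraints?

Task 1: Find a good regularization coefficient.

Find an L1 regularization strength that satisfies both constraints: the model has no more than 600 parameters, and the log loss on the validation set is below 0.35.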
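The search is essentially a sweep: train once per candidate strength, record the model size and the log loss, and keep a value that satisfies both limits. The sketch below illustrates that trade-off on synthetic data, with plain numpy proximal gradient descent on L1-regularized logistic regression standing in for the TensorFlow `LinearClassifier`. The data, learning rate, step count, and candidate strengths are all assumptions, and for brevity it measures log loss on the training data rather than on a validation set, so its numbers say nothing about the housing task.

```python
import numpy as np


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def train_l1_logistic(X, y, l1, lr=0.5, steps=300):
    """Proximal gradient descent on mean log loss + l1 * ||w||_1 (no bias term)."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        grad = X.T @ (sigmoid(X @ w) - y) / len(y)  # gradient of the mean log loss
        w = w - lr * grad
        # Soft-thresholding: the L1 proximal step that produces exact zeros.
        w = np.sign(w) * np.maximum(np.abs(w) - lr * l1, 0.0)
    return w


def log_loss(X, y, w, eps=1e-15):
    p = np.clip(sigmoid(X @ w), eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


# Synthetic data (assumption): 20 features, only the first 3 matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 20))
true_w = np.zeros(20)
true_w[:3] = [2.0, -1.5, 1.0]
y = (rng.random(500) < sigmoid(X @ true_w)).astype(float)

results = []
for l1 in [0.0, 0.01, 0.05, 0.1, 10.0]:
    w = train_l1_logistic(X, y, l1)
    size = int(np.count_nonzero(w))
    loss = log_loss(X, y, w)
    results.append((l1, size, loss))
    print(f"l1={l1:<5} nonzero weights={size:2d} log loss={loss:.3f}")
```

Typically the non-zero count falls as the strength grows while the loss eventually rises; at a strength of 10.0 every weight is zero and the loss is ln 2 ≈ 0.693, which is why the strength cannot simply be made as large as possible.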

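The code below will help you get started. There are many ways to apply regularization to a model. In this exercise we chose to apply it with FtrlOptimizer, which is designed to give better results with L1 regularization than standard gradient descent.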
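For intuition about why FTRL pairs well with L1, here is a sketch of the per-coordinate FTRL-Proximal update described by McMahan et al. (2013, "Ad Click Prediction: a View from the Trenches") for logistic loss. The key line is in `weights()`: any coordinate whose accumulated value `|z|` does not exceed `l1` is set to exactly zero, so sparsity falls out of the closed-form solution. This is an illustrative numpy reimplementation with assumed hyperparameters (`alpha`, `beta`) and toy data; TensorFlow's `FtrlOptimizer` follows the same idea but differs in details such as its `learning_rate_power` and `initial_accumulator_value` parameters, so do not read it as that optimizer's source.

```python
import numpy as np


class FtrlProximal:
    """Per-coordinate FTRL-Proximal for logistic loss (after McMahan et al., 2013)."""

    def __init__(self, dim, alpha=0.5, beta=1.0, l1=0.0, l2=0.0):
        self.alpha, self.beta, self.l1, self.l2 = alpha, beta, l1, l2
        self.z = np.zeros(dim)  # accumulated (adjusted) gradients
        self.n = np.zeros(dim)  # accumulated squared gradients

    def weights(self):
        # Closed-form weights: any coordinate with |z| <= l1 is exactly zero.
        w = np.zeros_like(self.z)
        active = np.abs(self.z) > self.l1
        z = self.z[active]
        w[active] = -(z - np.sign(z) * self.l1) / (
            (self.beta + np.sqrt(self.n[active])) / self.alpha + self.l2)
        return w

    def update(self, x, y):
        w = self.weights()
        p = 1.0 / (1.0 + np.exp(-(x @ w)))  # predicted probability
        g = (p - y) * x                      # gradient of log loss w.r.t. w
        sigma = (np.sqrt(self.n + g * g) - np.sqrt(self.n)) / self.alpha
        self.z += g - sigma * w
        self.n += g * g


# Toy data (assumption): only feature 0 determines the label.
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(2000, 3))
y = (X[:, 0] > 0).astype(float)


def train_ftrl(l1):
    model = FtrlProximal(dim=3, l1=l1)
    for x_t, y_t in zip(X, y):
        model.update(x_t, y_t)
    return model.weights()


print("l1=0.0:", train_ftrl(0.0))
print("l1=1e4:", train_ftrl(1e4))
```

With `l1=1e4` the accumulated gradients on this data can never exceed the threshold, so every weight stays exactly zero; with `l1=0.0` the informative weight `w[0]` ends up positive.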

Again, the model is trained on the entire data set, so expect it to run more slowly than usual.

Linear classification model

```python
def train_linear_classifier_model(
        learning_rate,
        regularization_strength,
        steps,
        batch_size,
        feature_columns,
        training_examples,
        training_targets,
        validation_examples,
        validation_targets):
    """Trains a linear classification model.

    In addition to training, this function also prints training progress information,
    as well as a plot of the training and validation loss over time.

    Args:
      learning_rate: A `float`, the learning rate.
      regularization_strength: A `float` that indicates the strength of the L1
        regularization. A value of `0.0` means no regularization.
      steps: A non-zero `int`, the total number of training steps. A training step
        consists of a forward and backward pass using a single batch.
      batch_size: A non-zero `int`, the batch size.
      feature_columns: A `set` specifying the input feature columns to use.
      training_examples: A `DataFrame` containing one or more columns from
        `california_housing_dataframe` to use as input features for training.
      training_targets: A `DataFrame` containing exactly one column from
        `california_housing_dataframe` to use as target for training.
      validation_examples: A `DataFrame` containing one or more columns from
        `california_housing_dataframe` to use as input features for validation.
      validation_targets: A `DataFrame` containing exactly one column from
        `california_housing_dataframe` to use as target for validation.
    Returns:
      A `LinearClassifier` object trained on the training data.
    """
    periods = 7
    # Integer division: Estimator.train expects an integer number of steps.
    steps_per_period = steps // periods

    # Create a linear classifier object.
    # FtrlOptimizer is designed to give better results than standard gradient
    # descent when used with L1 regularization.
    my_optimizer = tf.train.FtrlOptimizer(
        learning_rate=learning_rate,
        l1_regularization_strength=regularization_strength)
    my_optimizer = tf.contrib.estimator.clip_gradients_by_norm(my_optimizer, 5.0)
    linear_classifier = tf.estimator.LinearClassifier(
        feature_columns=feature_columns,
        optimizer=my_optimizer)

    # Create input functions.
    training_input_fn = lambda: my_input_fn(
        training_examples,
        training_targets["median_house_value_is_high"],
        batch_size=batch_size)
    predict_training_input_fn = lambda: my_input_fn(
        training_examples,
        training_targets["median_house_value_is_high"],
        num_epochs=1,
        shuffle=False)
    predict_validation_input_fn = lambda: my_input_fn(
        validation_examples,
        validation_targets["median_house_value_is_high"],
        num_epochs=1,
        shuffle=False)

    # Train the model, but do so inside a loop so that we can periodically assess
    # loss metrics.
    print("Training model...")
    print("LogLoss (on validation data):")
    training_log_losses = []
    validation_log_losses = []
    for period in range(periods):
        # Train the model, starting from the prior state.
        linear_classifier.train(
            input_fn=training_input_fn,
            steps=steps_per_period)
        # Take a break and compute predictions.
        training_probabilities = linear_classifier.predict(input_fn=predict_training_input_fn)
        training_probabilities = np.array([item['probabilities'] for item in training_probabilities])

        validation_probabilities = linear_classifier.predict(input_fn=predict_validation_input_fn)
        validation_probabilities = np.array([item['probabilities'] for item in validation_probabilities])

        # Compute training and validation loss.
        training_log_loss = metrics.log_loss(training_targets, training_probabilities)
        validation_log_loss = metrics.log_loss(validation_targets, validation_probabilities)
        # Occasionally print the current loss.
        print("  period %02d : %0.2f" % (period, validation_log_loss))
        # Add the loss metrics from this period to our list.
        training_log_losses.append(training_log_loss)
        validation_log_losses.append(validation_log_loss)
    print("Model training finished.")

    # Output a graph of loss metrics over periods.
    plt.ylabel("LogLoss")
    plt.xlabel("Periods")
    plt.title("LogLoss vs. Periods")
    plt.tight_layout()
    plt.plot(training_log_losses, label="training")
    plt.plot(validation_log_losses, label="validation")
    plt.legend()

    return linear_classifier
```

Default L1 regularization strength: 0.0

```python
linear_classifier = train_linear_classifier_model(
    learning_rate=0.1,
    # TWEAK THE REGULARIZATION VALUE BELOW
    # Default L1 regularization strength: 0.0
    regularization_strength=0.0,
    steps=300,
    batch_size=100,
    feature_columns=construct_feature_columns(),
    training_examples=training_examples,
    training_targets=training_targets,
    validation_examples=validation_examples,
    validation_targets=validation_targets)
print("Model size:", model_size(linear_classifier))
```

L1 regularization strength changed to 0.1

L1 regularization strength changed to 0.5

L1 regularization strength changed to 1.0

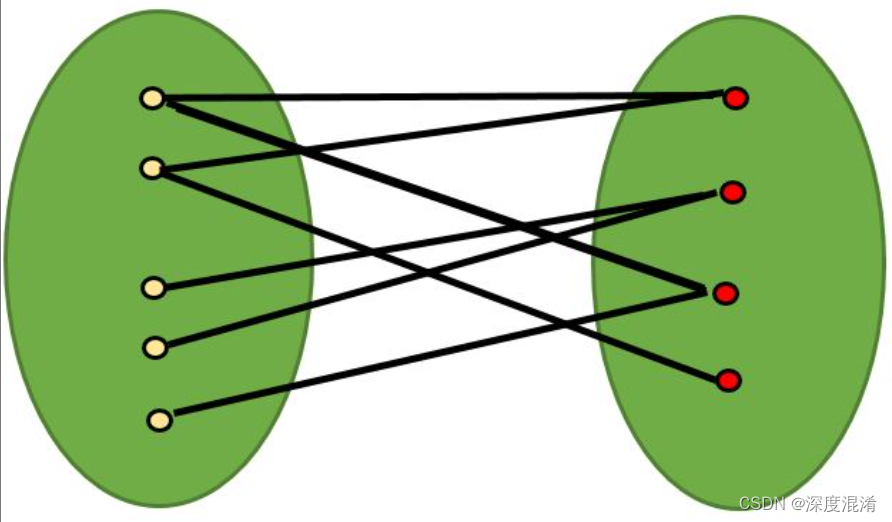
