Scraping Web Page Images with Python

Scraping data generally takes three steps:

- Fetch the web page

- Parse the fetched data

- Save the data

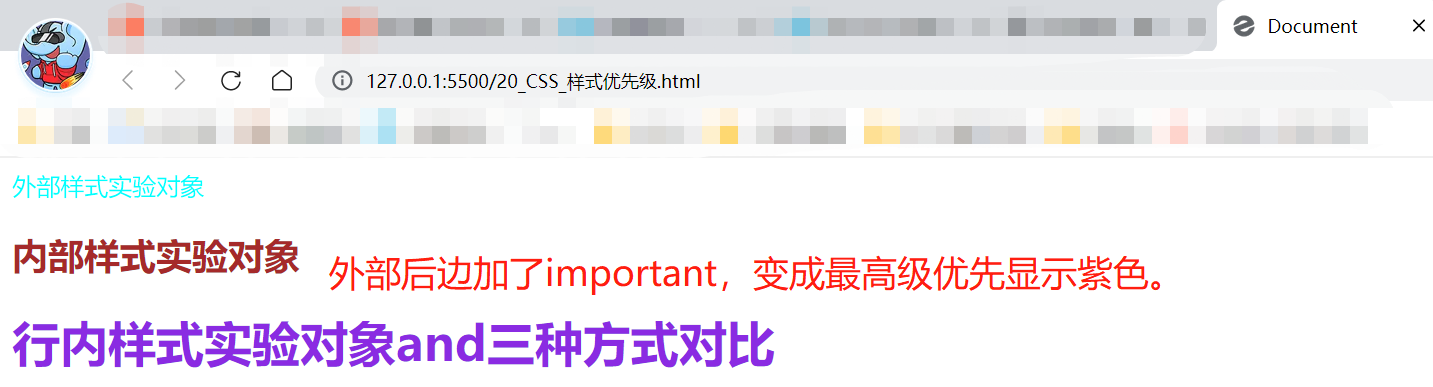

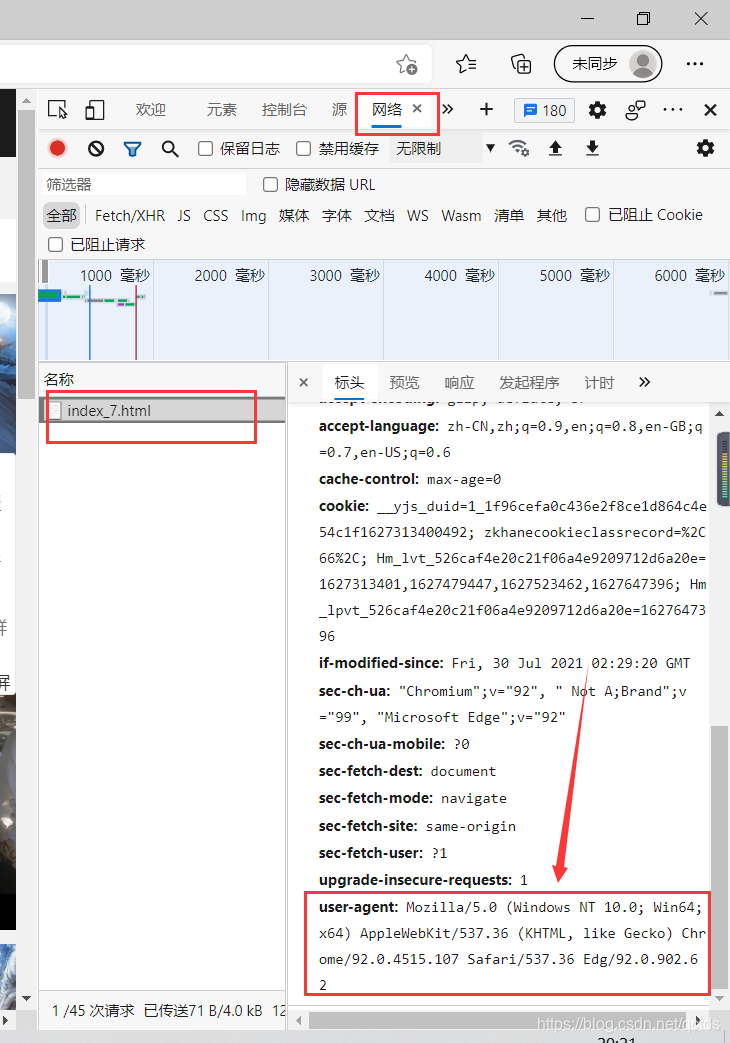

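Open the page you want to scrape and find your browser's User-Agent (it appears in the request headers in the browser's developer tools).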
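As a minimal sketch of where the User-Agent ends up: `urllib` identifies itself as `Python-urllib/x.y` unless the header is overridden, and some sites may reject that default. The snippet below only builds a request object and inspects it; nothing is sent. The URL and the shortened agent string are placeholders, not values from this post.

```python
import urllib.request

# Placeholder values for illustration only.
url = "https://example.com/index_2.html"
user_agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Build the request with a custom header; no network traffic happens here.
req = urllib.request.Request(url, headers={"User-Agent": user_agent})

# urllib stores header names via str.capitalize(), hence "User-agent".
print(req.get_header("User-agent"))
print(req.full_url)
```

Copy the full User-Agent string from your own browser in place of the placeholder.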

Implementation

- First, import the required packages

    from bs4 import BeautifulSoup  # parse HTML and extract data
    import re  # regular expressions for text matching
    import urllib.request, urllib.error  # build the URL request and fetch the page
    import xlwt  # Excel operations
    import sqlite3  # SQLite database operations
    import os
    import requests

- Define the functions

- Load the content of the page to scrape

    def askURL(url):
        # Send a browser User-Agent with the request
        head = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36 Edg/92.0.902.55"}
        res = urllib.request.Request(url, headers=head)
        html = ''
        try:
            response = urllib.request.urlopen(res)
            # Decode as GBK, the encoding this code assumes for the target page
            html = response.read().decode("gbk")
            # print(html)
        except urllib.error.URLError as e:
            if hasattr(e, "code"):
                print(e.code)
            if hasattr(e, "reason"):
                print(e.reason)
        return html
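One detail worth noting about the decoding step: a strict `decode("gbk")` raises `UnicodeDecodeError` on a byte sequence that is not valid GBK, and that exception is not a `URLError`, so the `except` clause above would not catch it. A minimal sketch of a more tolerant decode follows; `decode_page` is a hypothetical helper, not part of the original script.

```python
def decode_page(raw: bytes, encoding: str = "gbk") -> str:
    """Decode page bytes, substituting a placeholder for undecodable bytes."""
    return raw.decode(encoding, errors="replace")


# Valid GBK bytes decode normally.
sample = "图片".encode("gbk")
print(decode_page(sample))

# A stray invalid byte no longer aborts the whole page.
print(decode_page(sample + b"\xff"))
```

Whether silently replacing bad bytes is acceptable depends on the page; for image names it is usually harmless, but that is a judgment call.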

- Provide the URL

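    def main():
        baseurl = "fill in the URL of the page to scrape"
        # 1. Fetch and parse the data
        datalist = getData(baseurl)
        # Create a folder named img under the Pyproject directory on drive D
        path2 = r'D://Pyproject'
        os.makedirs(os.path.join(path2, "img"), exist_ok=True)
        # Image save path; the empty last component keeps a trailing separator
        savepath = os.path.join(path2, "img", "")
        # 3. Save the data
        savaData(datalist, savepath)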
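A folder-creation step like the one in `main()` has to cope with reruns: plain `os.mkdir` raises `FileExistsError` if the folder already exists, while `os.makedirs(..., exist_ok=True)` does not. The sketch below shows this in a temporary directory, used here as a stand-in for `D://Pyproject` so that it runs on any machine.

```python
import os
import tempfile

# Temporary directory as a stand-in for D://Pyproject.
base = tempfile.mkdtemp()
img_dir = os.path.join(base, "img")

os.makedirs(img_dir, exist_ok=True)
os.makedirs(img_dir, exist_ok=True)  # second call does nothing and does not raise

# Joining with an empty last component yields a path ending in a separator.
savepath = os.path.join(img_dir, "")
print(os.path.isdir(img_dir), savepath.endswith(os.sep))
```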

- Scrape the page data:

    # Image link
    findImg = re.compile(r'<img.*src="(.*?)"/>')
    # Image name
    findName = re.compile(r'<img alt="(.*?)".*/>')

    # Scrape the page data
    def getData(baseurl):
        datalist = []
        for i in range(2, 10):  # build the URL of each list page (pages 2 to 9)
            url = baseurl + str(i) + '.html'
            html = askURL(url)  # page source
            # print(html)  # check that the source was fetched
            # 2. Parse the data
            soup = BeautifulSoup(html, "html.parser")
            for item in soup.find_all('img'):  # every <img> tag on the page
                # print(item)
                item = str(item)
                name = re.findall(findName, item)  # image name from the alt attribute
                img = re.findall(findImg, item)  # image link from the src attribute
                if not name or not img:  # skip tags that match neither pattern
                    continue
                datalist.append([name[0], img[0]])
        return datalist
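To make the two patterns concrete, here is a small sketch that applies them to hand-written tags. The tags are made up for illustration, written in the `<img alt="..." src="..."/>` form that `str()` gives for a parsed tag; real pages may order attributes differently, in which case `findName` will not match.

```python
import re

findImg = re.compile(r'<img.*src="(.*?)"/>')
findName = re.compile(r'<img alt="(.*?)".*/>')

# Made-up sample tags, not taken from a real page.
tag = '<img alt="sample picture" src="/uploads/sample.jpg"/>'
no_alt = '<img src="/static/logo.png"/>'

print(findName.findall(tag))     # list with the alt text
print(findImg.findall(tag))      # list with the src path
print(findName.findall(no_alt))  # empty list: alt is not the first attribute
```

Because `re.findall` returns a list, indexing `[0]` on a tag that does not match raises `IndexError`, so checking for an empty list first is the safer choice.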

- Save the images to the specified location:

    def savaData(datalist, savepath):
        print("save...")
        # Iterate over what was actually scraped instead of a fixed count of 160
        for i, (name, img) in enumerate(datalist):
            print("Item %d" % (i + 1))
            r = requests.get('https://pic.netbian.com/' + str(img), stream=True)
            # Write the image to disk in chunks
            with open(os.path.join(savepath, str(name) + '.jpg'), 'wb') as fd:
                for chunk in r.iter_content(chunk_size=8192):
                    fd.write(chunk)
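Two small details in the save step can be handled more defensively. Concatenating the site root with a `src` that already starts with `/` produces a double slash, and an image name taken from `alt` text may contain characters that Windows does not allow in file names. The sketch below uses `urllib.parse.urljoin` and a regular expression for these; `image_url` and `safe_filename` are hypothetical helpers, and the sample paths and names are invented.

```python
import re
from urllib.parse import urljoin

BASE = "https://pic.netbian.com/"


def image_url(src: str) -> str:
    """Resolve a src path against the site root without doubling slashes."""
    return urljoin(BASE, src)


def safe_filename(name: str) -> str:
    """Replace characters that Windows forbids in file names, then add .jpg."""
    return re.sub(r'[\\/:*?"<>|]', "_", name).strip() + ".jpg"


print(image_url("/uploads/sample.jpg"))
print(image_url("uploads/sample.jpg"))
print(safe_filename("sea: sunset?"))
```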

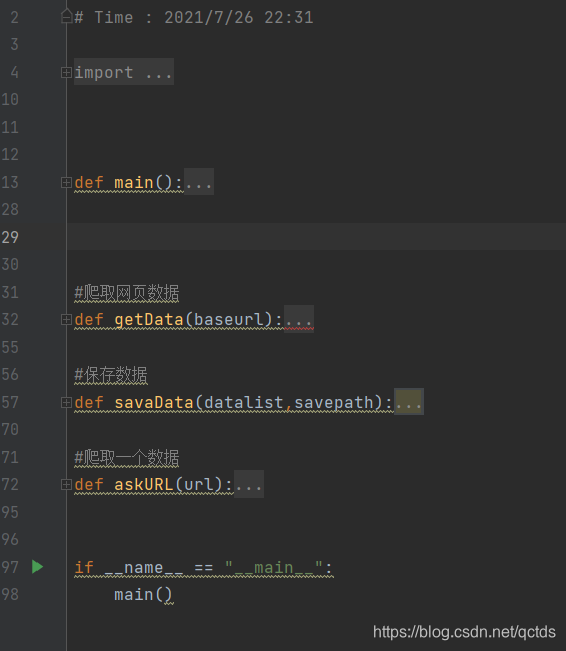

The complete code:

    # -*- coding: utf-8 -*-
    # Time : 2021/7/26 22:31
    from bs4 import BeautifulSoup
    import re
    import urllib.request, urllib.error
    import os
    import requests


    def main():
        baseurl = "URL of the page you want to scrape"
        # 1. Fetch and parse the data
        datalist = getData(baseurl)
        # Create a folder named img under the Pyproject directory on drive D
        path2 = r'D://Pyproject'
        os.makedirs(os.path.join(path2, "img"), exist_ok=True)
        # The empty last component keeps a trailing separator on the path
        savepath = os.path.join(path2, "img", "")
        # 3. Save the data
        savaData(datalist, savepath)
        # askURL("https://pic.netbian.com/4kmeinv/index_")


    # Image link
    findImg = re.compile(r'<img.*src="(.*?)"/>')
    # Image name
    findName = re.compile(r'<img alt="(.*?)".*/>')


    # Scrape the page data
    def getData(baseurl):
        datalist = []
        for i in range(2, 10):
            url = baseurl + str(i) + '.html'
            html = askURL(url)
            # print(html)
            # 2. Parse the data
            soup = BeautifulSoup(html, "html.parser")
            for item in soup.find_all('img'):
                # print(item)
                item = str(item)
                name = re.findall(findName, item)  # image name
                img = re.findall(findImg, item)  # image link
                if not name or not img:  # skip tags that match neither pattern
                    continue
                datalist.append([name[0], img[0]])
        return datalist


    # Save the data
    def savaData(datalist, savepath):
        print("save...")
        for i, (name, img) in enumerate(datalist):
            print("Item %d" % (i + 1))
            r = requests.get('https://pic.netbian.com/' + str(img), stream=True)
            with open(os.path.join(savepath, str(name) + '.jpg'), 'wb') as fd:
                for chunk in r.iter_content(chunk_size=8192):
                    fd.write(chunk)


    # Fetch a single page
    def askURL(url):
        head = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36 Edg/92.0.902.55"}
        res = urllib.request.Request(url, headers=head)
        html = ''
        try:
            response = urllib.request.urlopen(res)
            html = response.read().decode("gbk")
            # print(html)
        except urllib.error.URLError as e:
            if hasattr(e, "code"):
                print(e.code)
            if hasattr(e, "reason"):
                print(e.reason)
        return html


    if __name__ == "__main__":
        main()