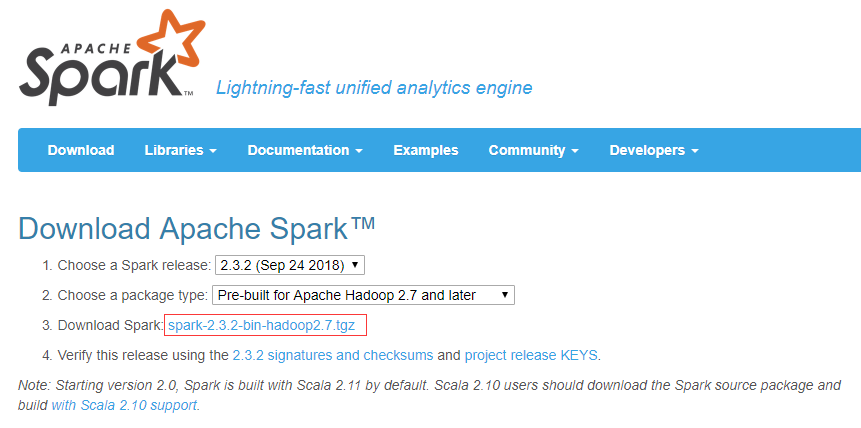

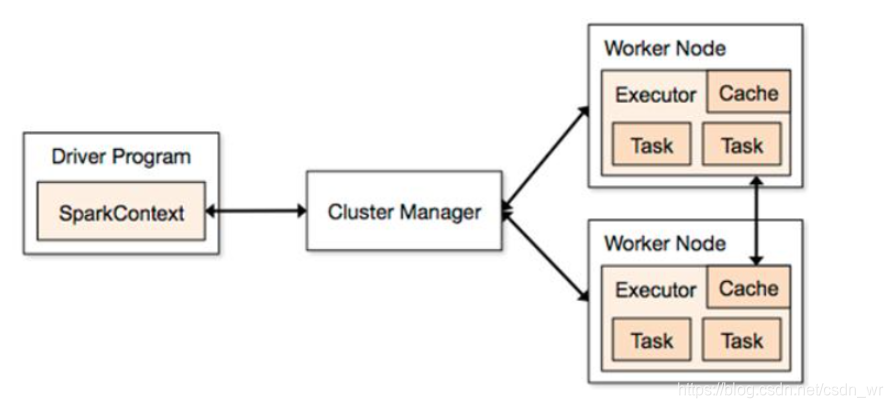

IDEA下使用maven配置Spark开发环境

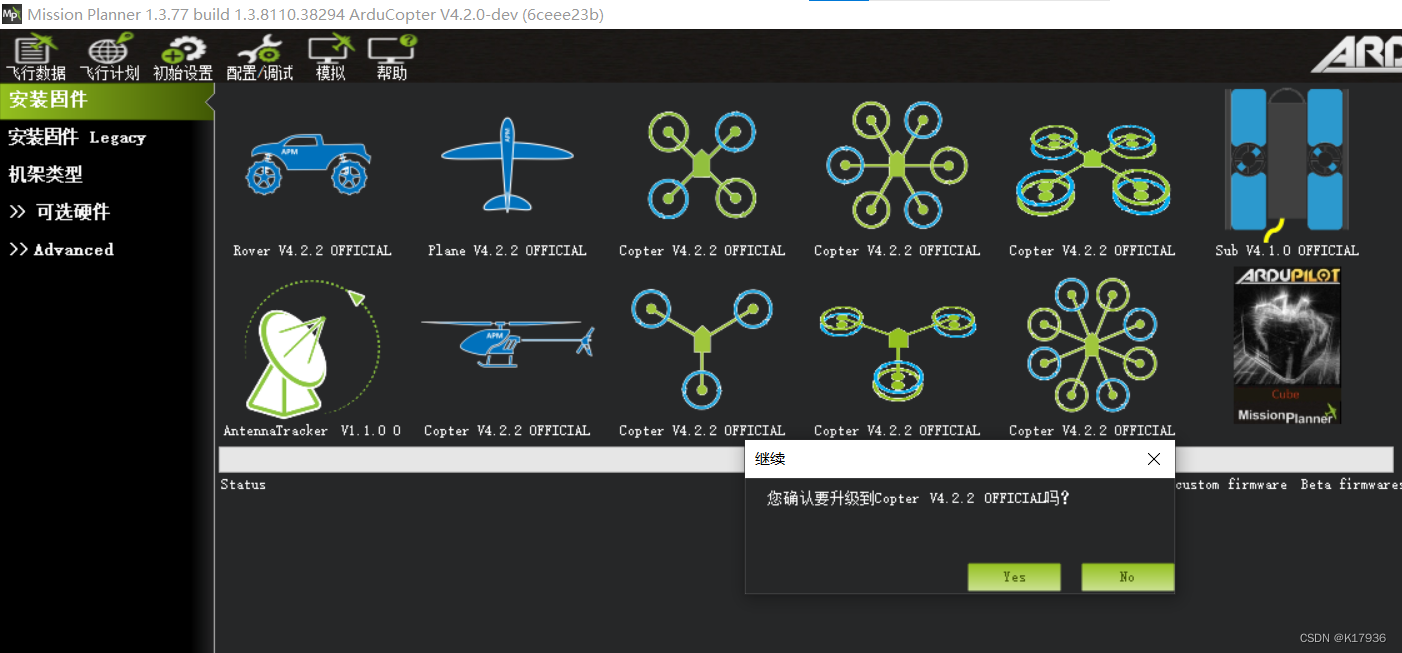

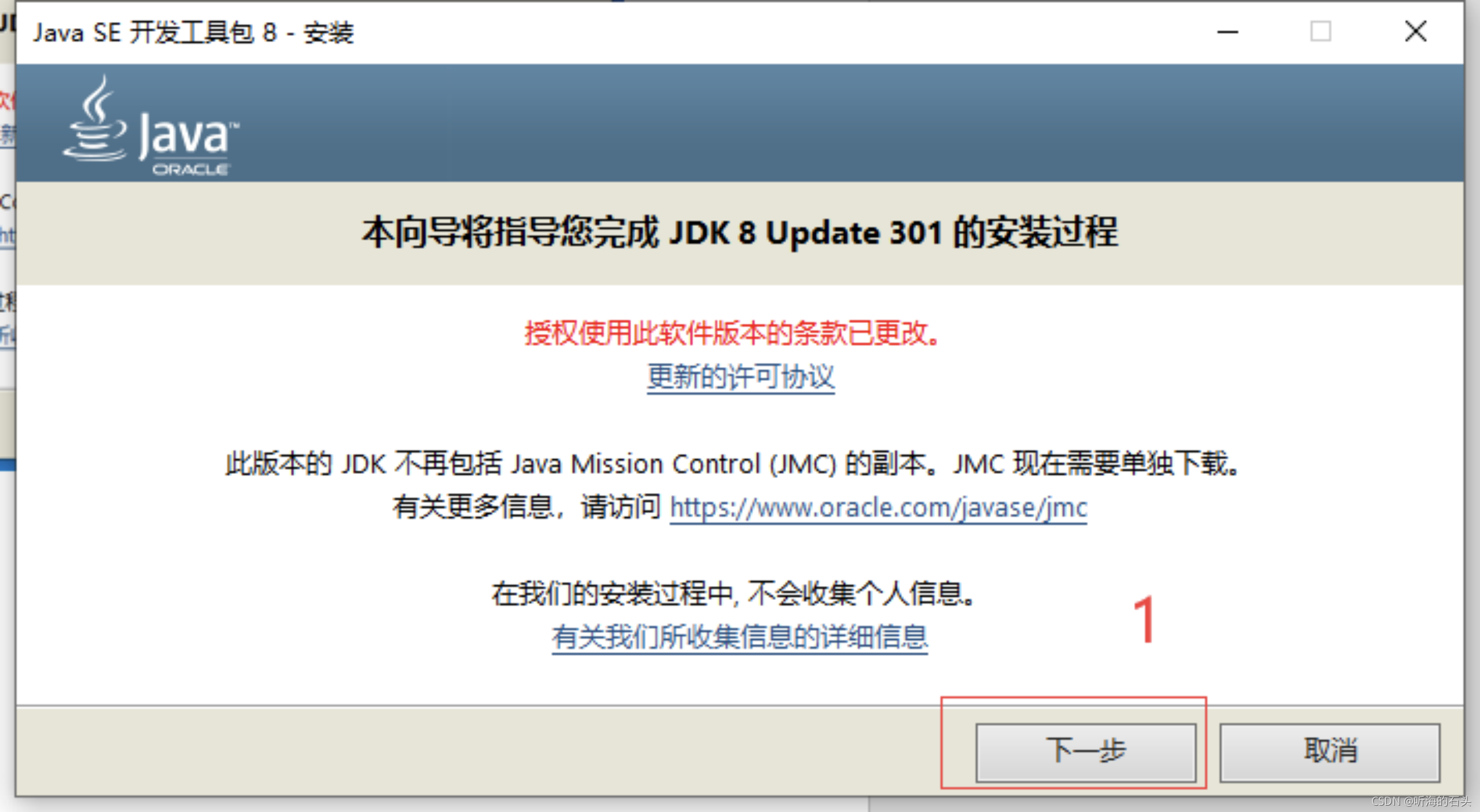

- 1、安装Java

- 2、配置环境变量

- 3、配置Hadoop环境

- 4、安装Scala插件

- 5、配置maven

- 4、Spark编程

- Spark测试

使用到的软件安装包: https://pan.baidu.com/s/1fOKsPYBmOUhHupT50_6yqQ 提取码: d473

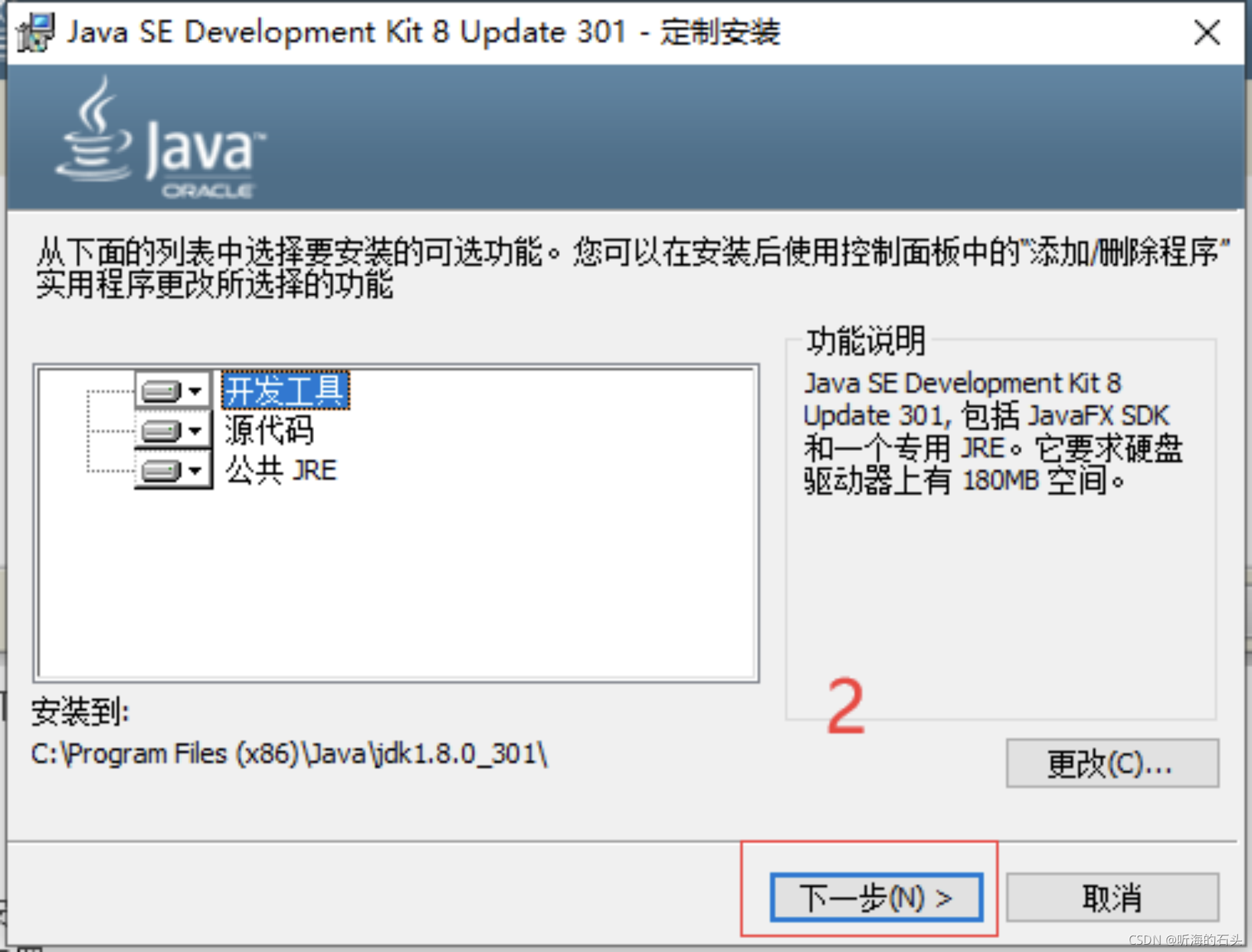

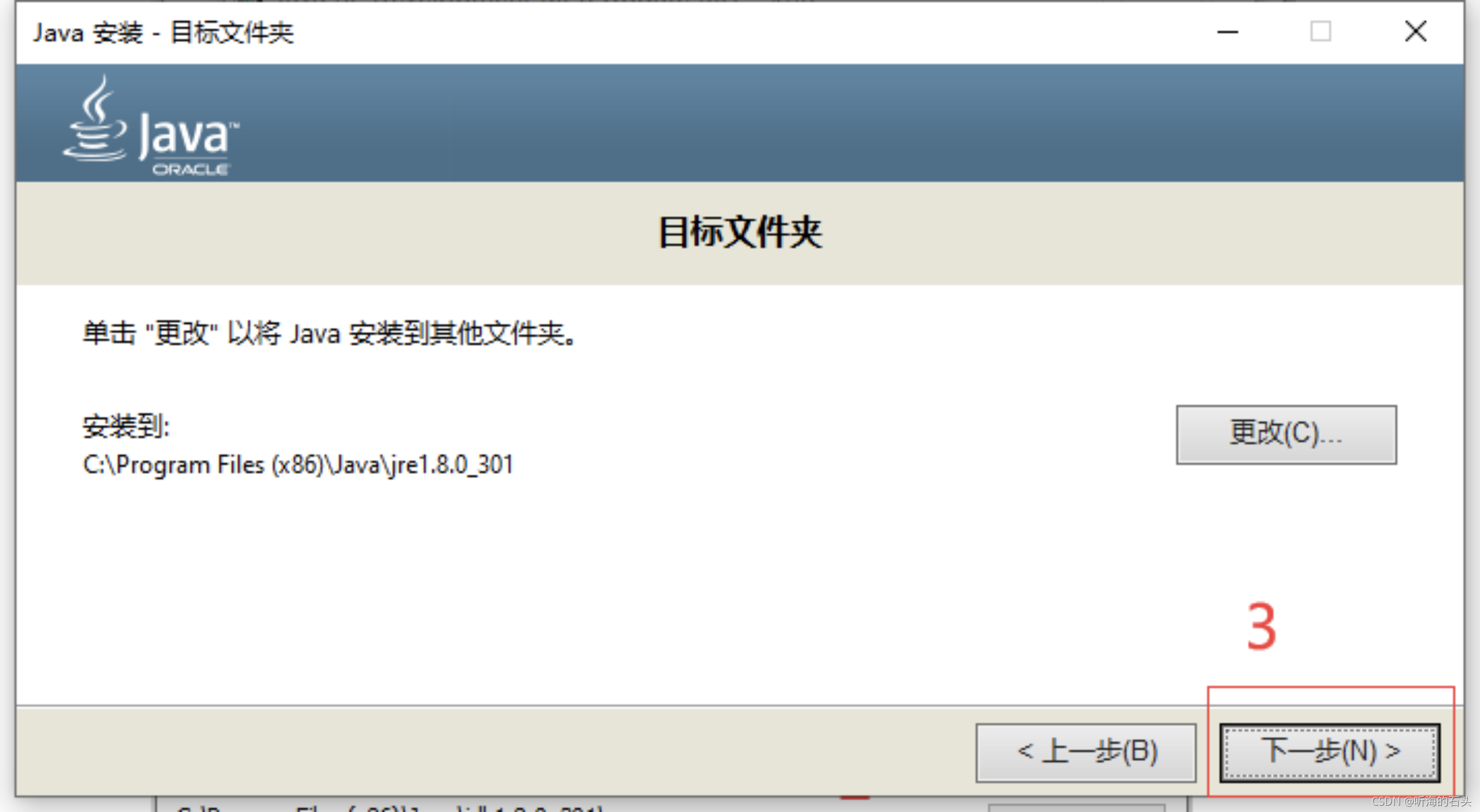

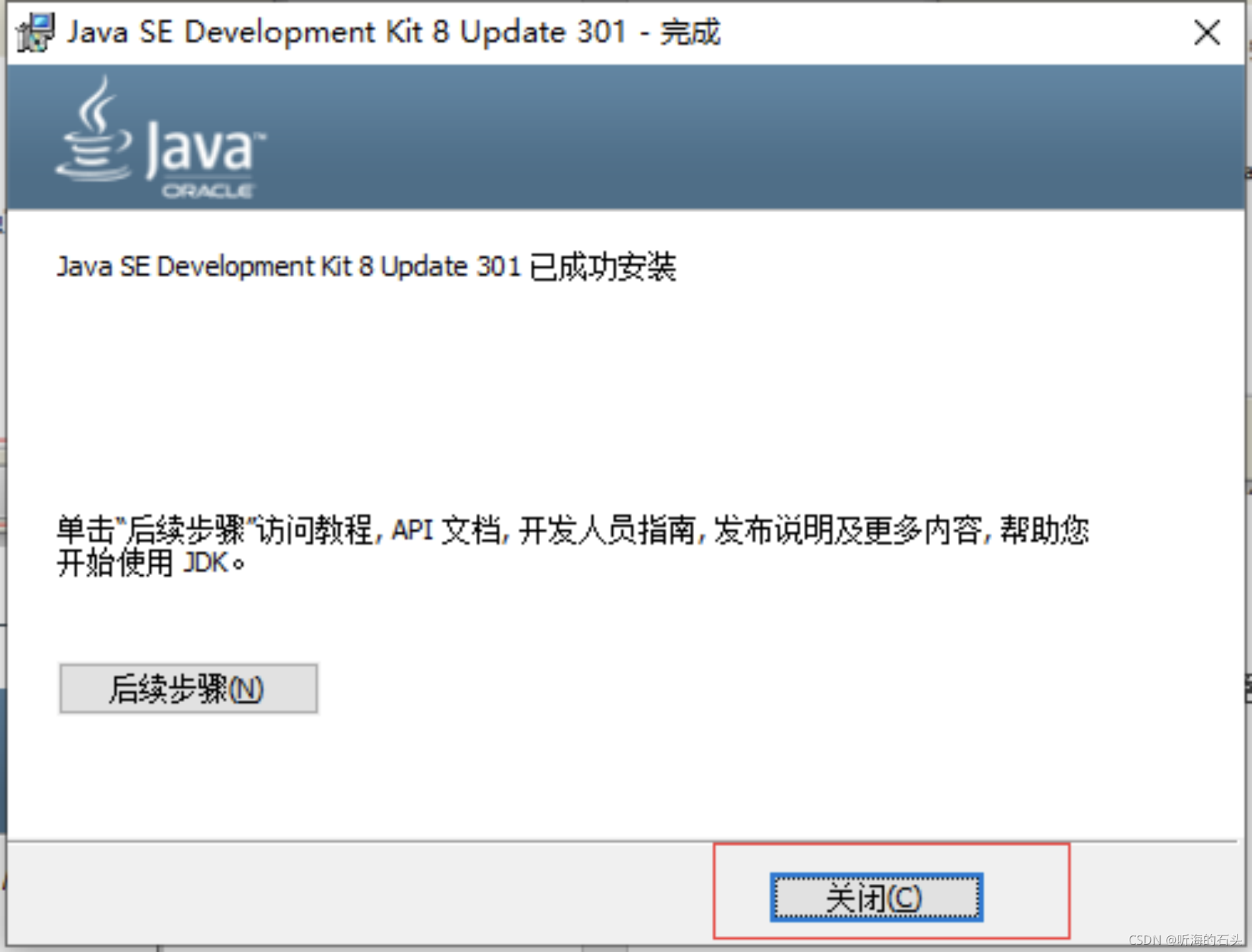

1、安装Java

点击下一步,

点击下一步:

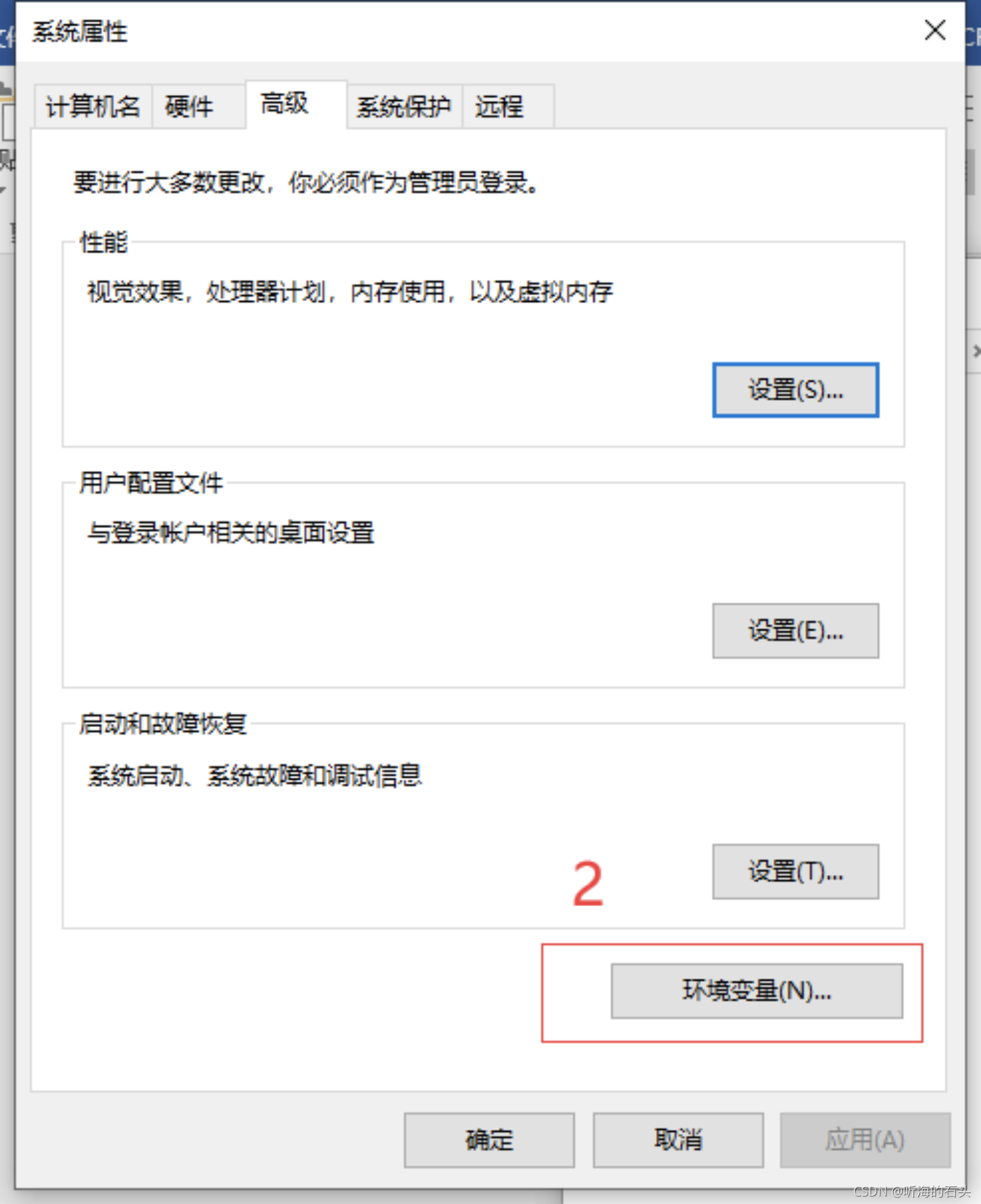

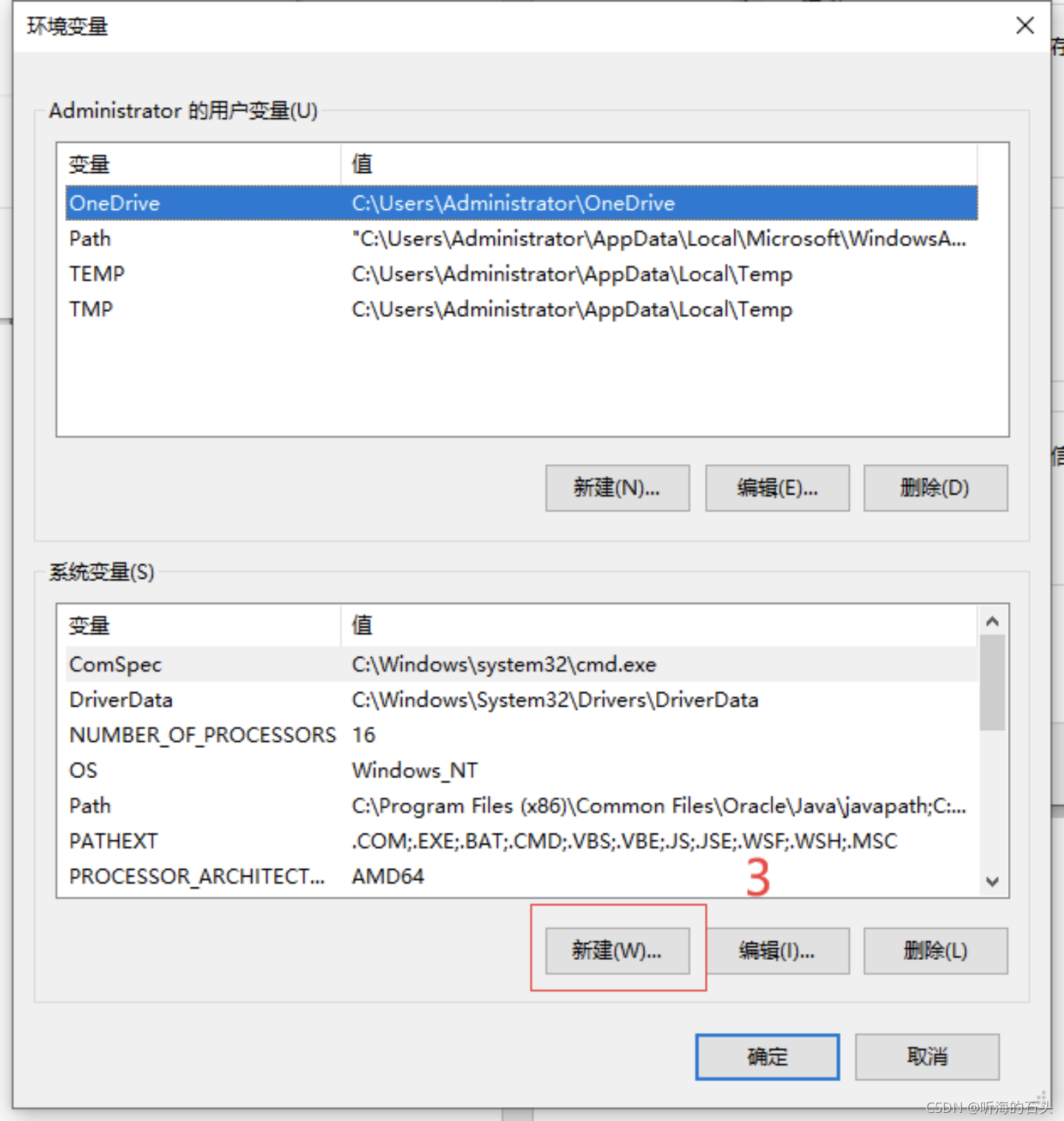

2、配置环境变量

环境变量设置:右键->我的电脑,选择属性

点击高级系统设置

点击环境变量:

点击新建:

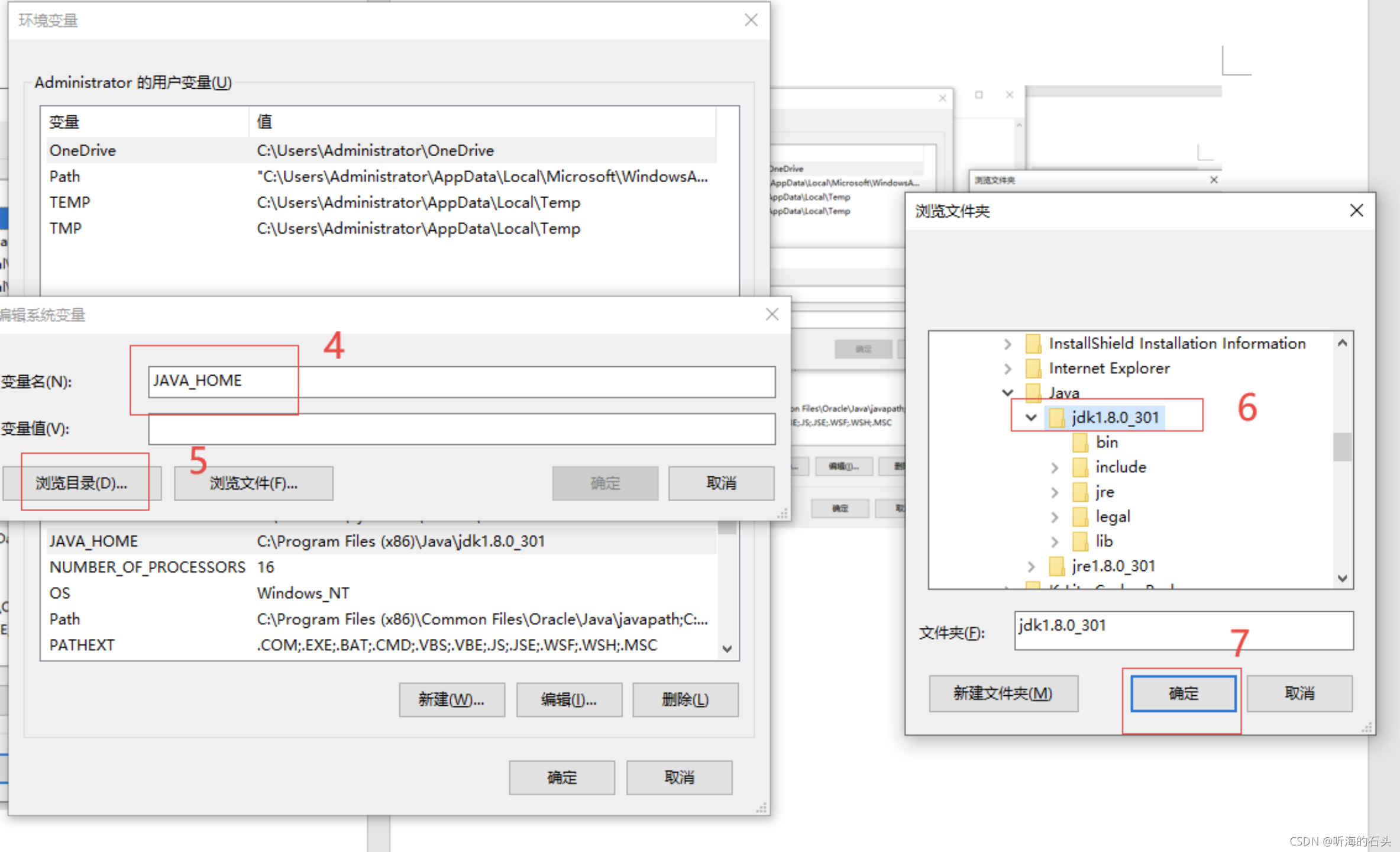

变量名输入:JAVA_HOME

然后点击浏览目录,选择C盘下的C:\Program Files (x86)\Java\jdk1.8.0_301文件夹,点击打开即可

3、配置Hadoop环境

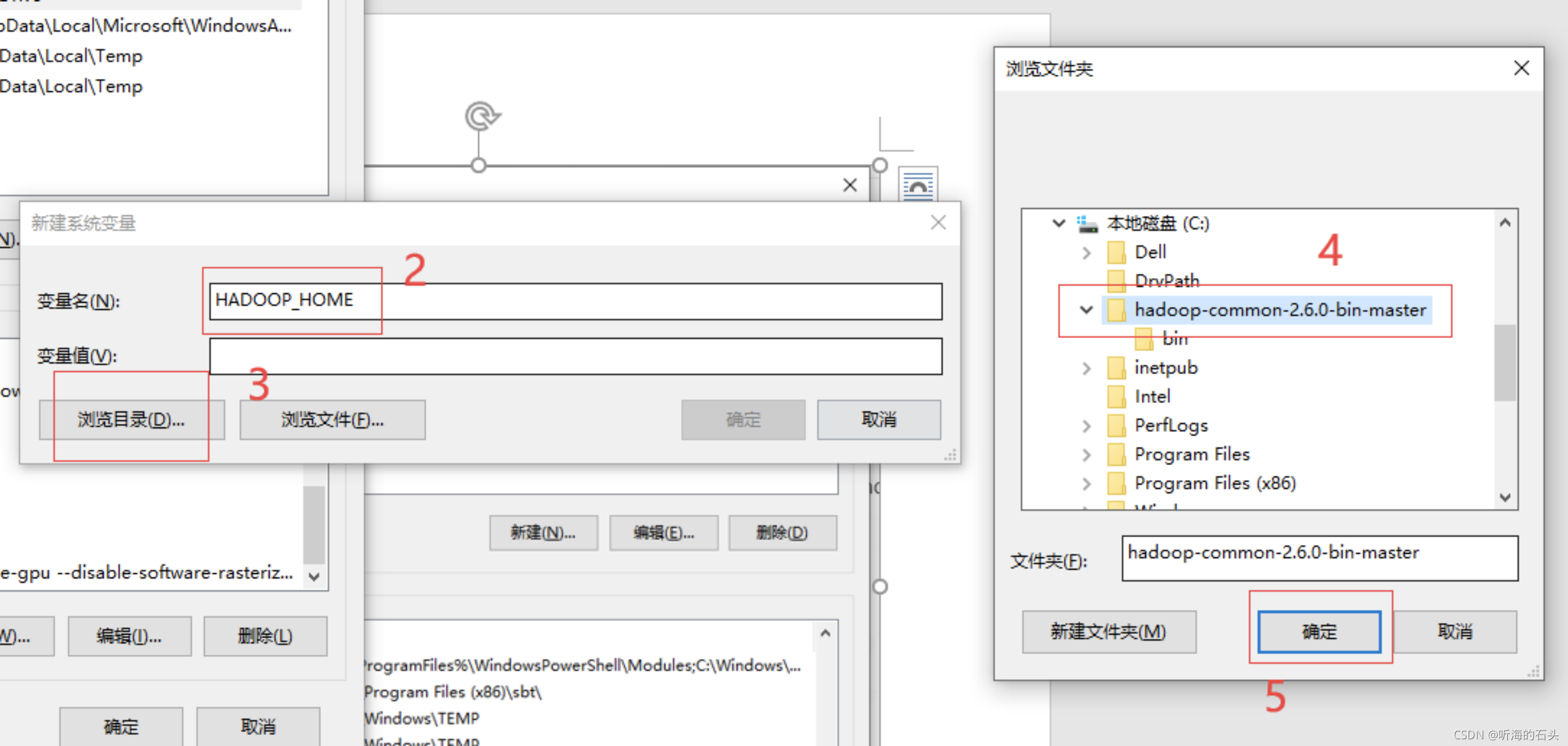

1、将hadoop-common-2.6.0-bin-master 拷贝到C盘目录下

2、将C:\ hadoop-common-2.6.0-bin-master\bin下的hadoop.dll文件和winutils.exe文件拷贝到C:\Windows\System32下,若有重复,不需要复制替换。

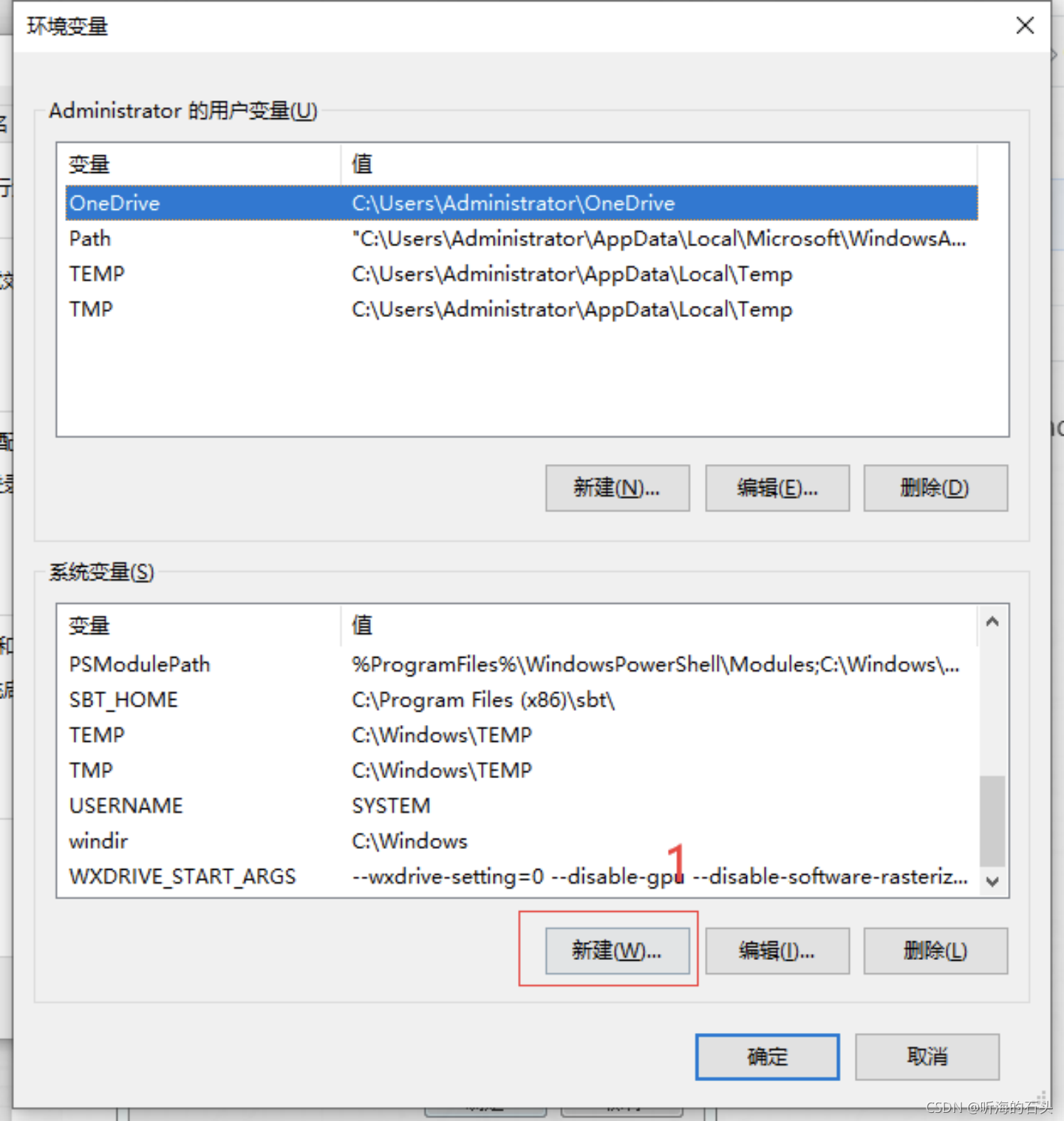

3、配置环境变量:

和配置Java的环境变量流程一样,配置Hadoop环境

重启电脑

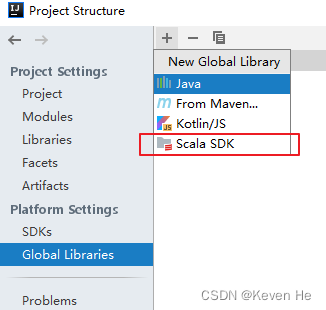

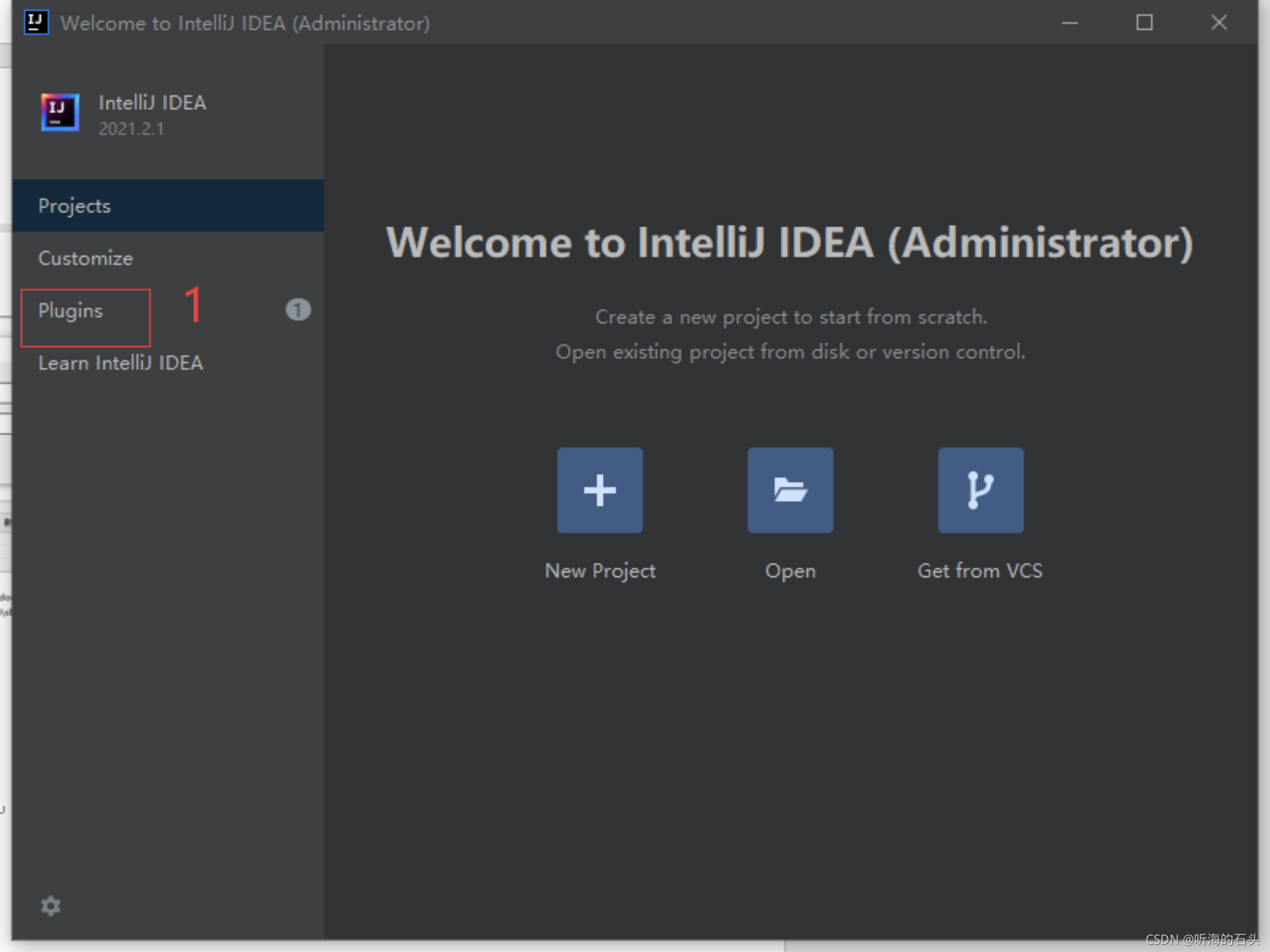

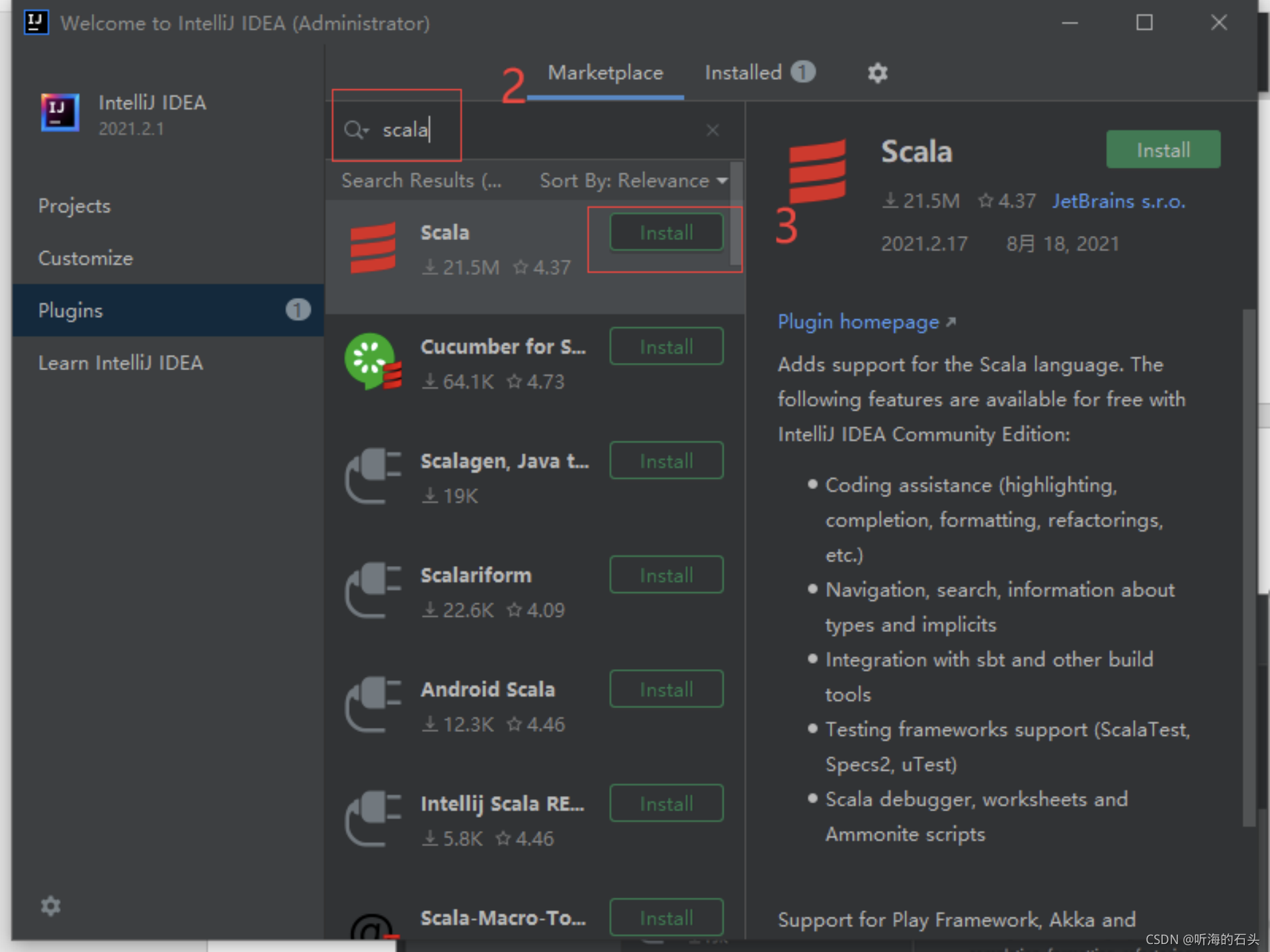

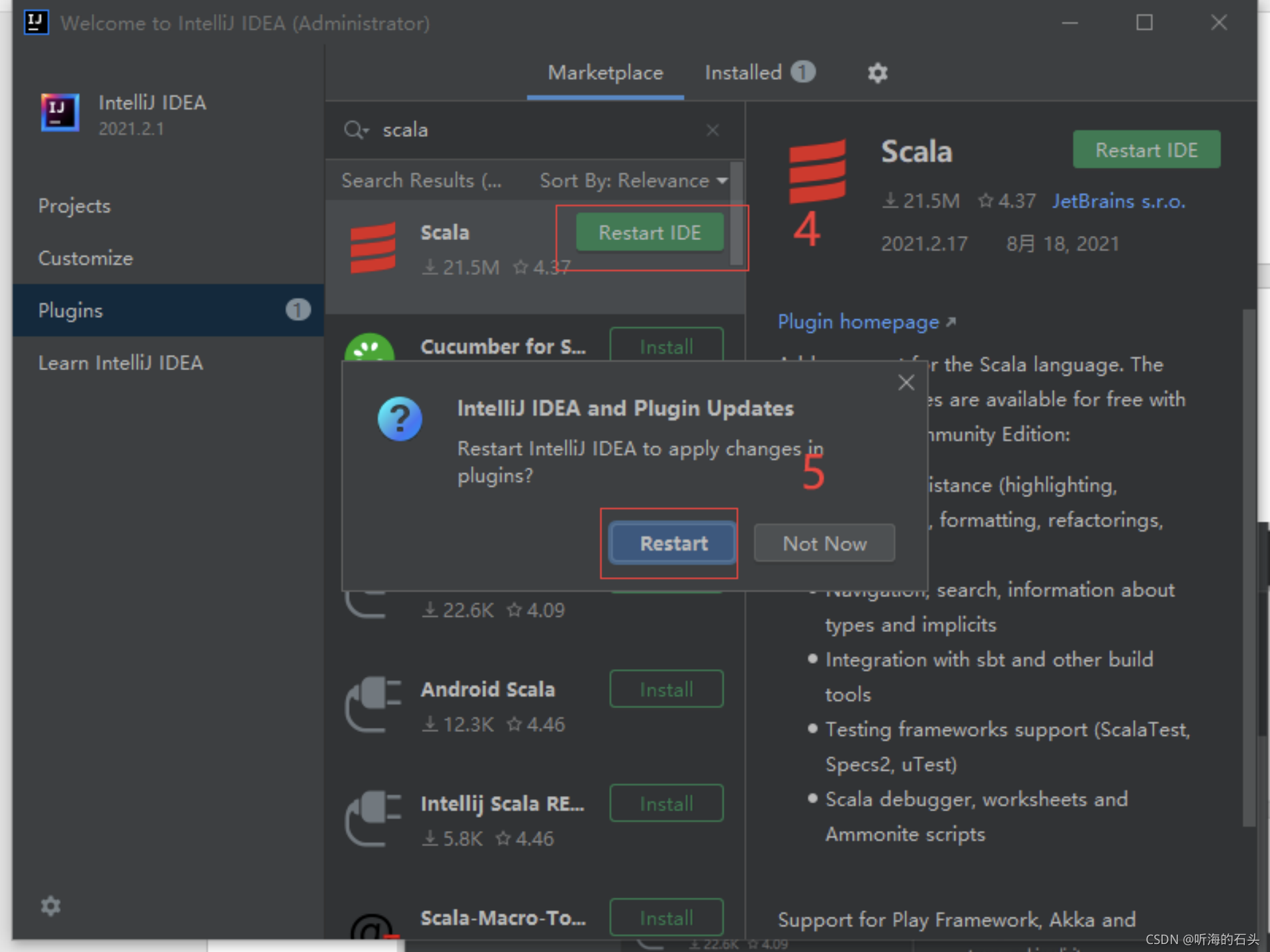

4、安装Scala插件

选择Plugis

输入scala,点击install

重启IDEA

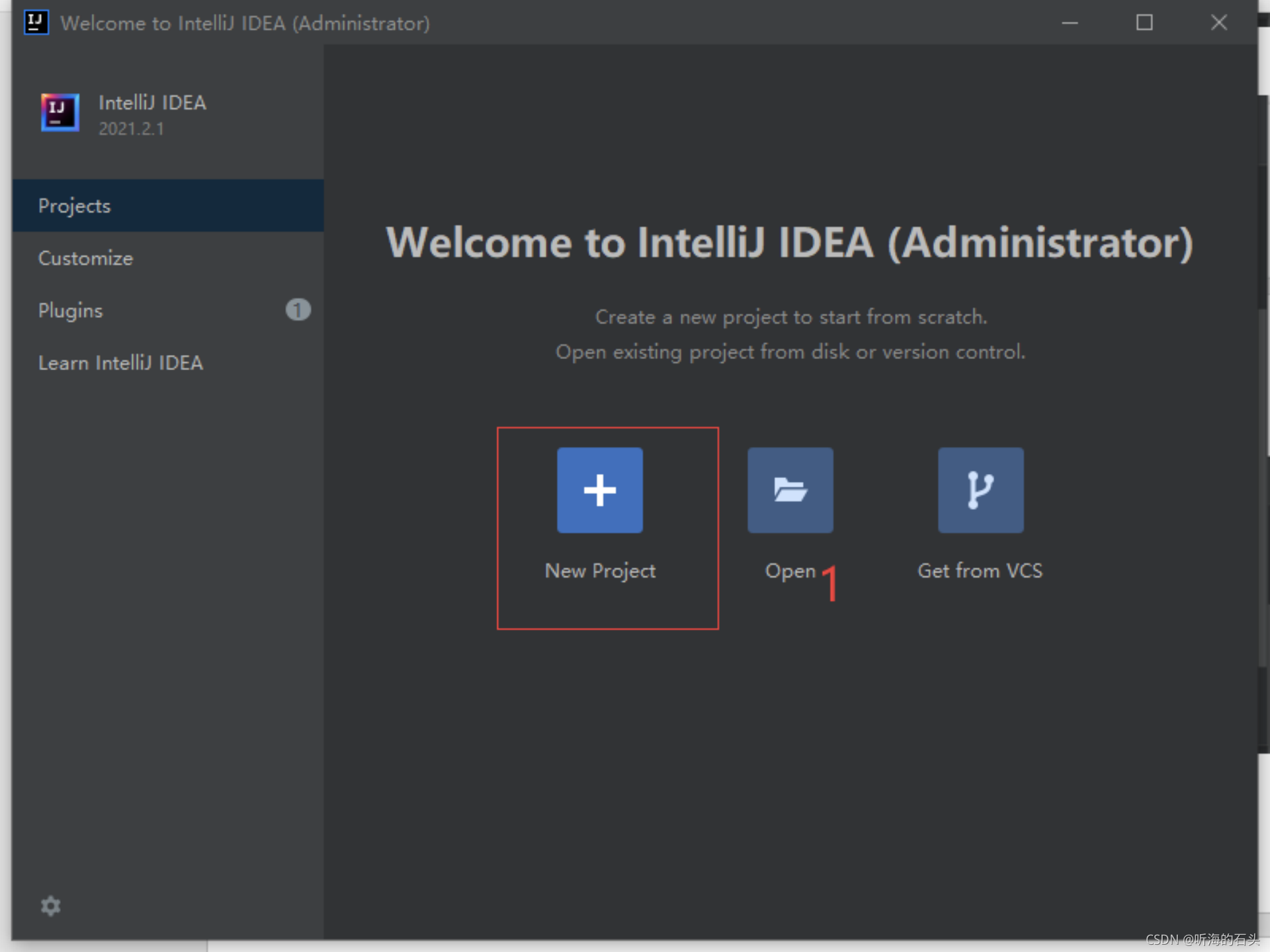

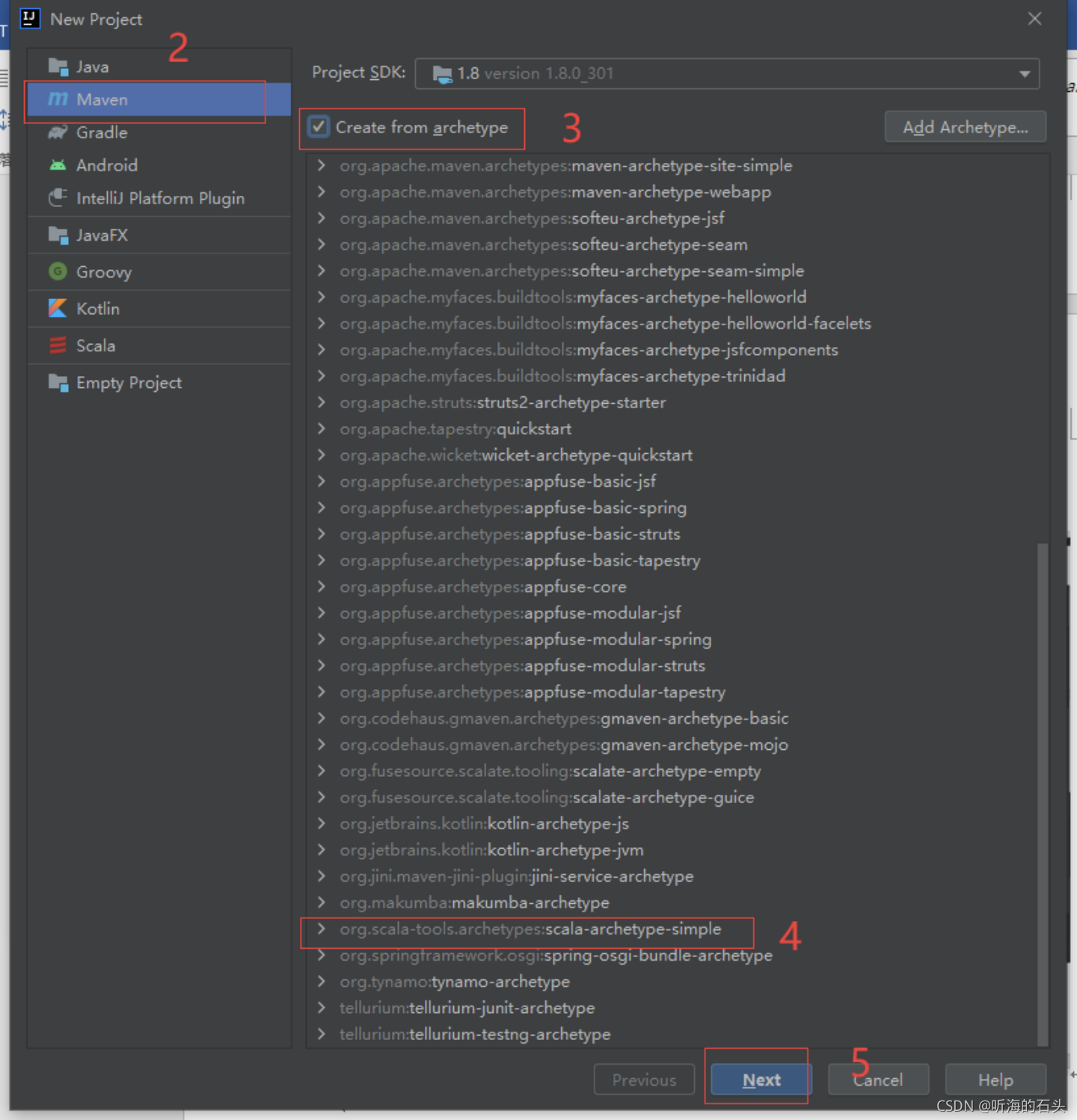

5、配置maven

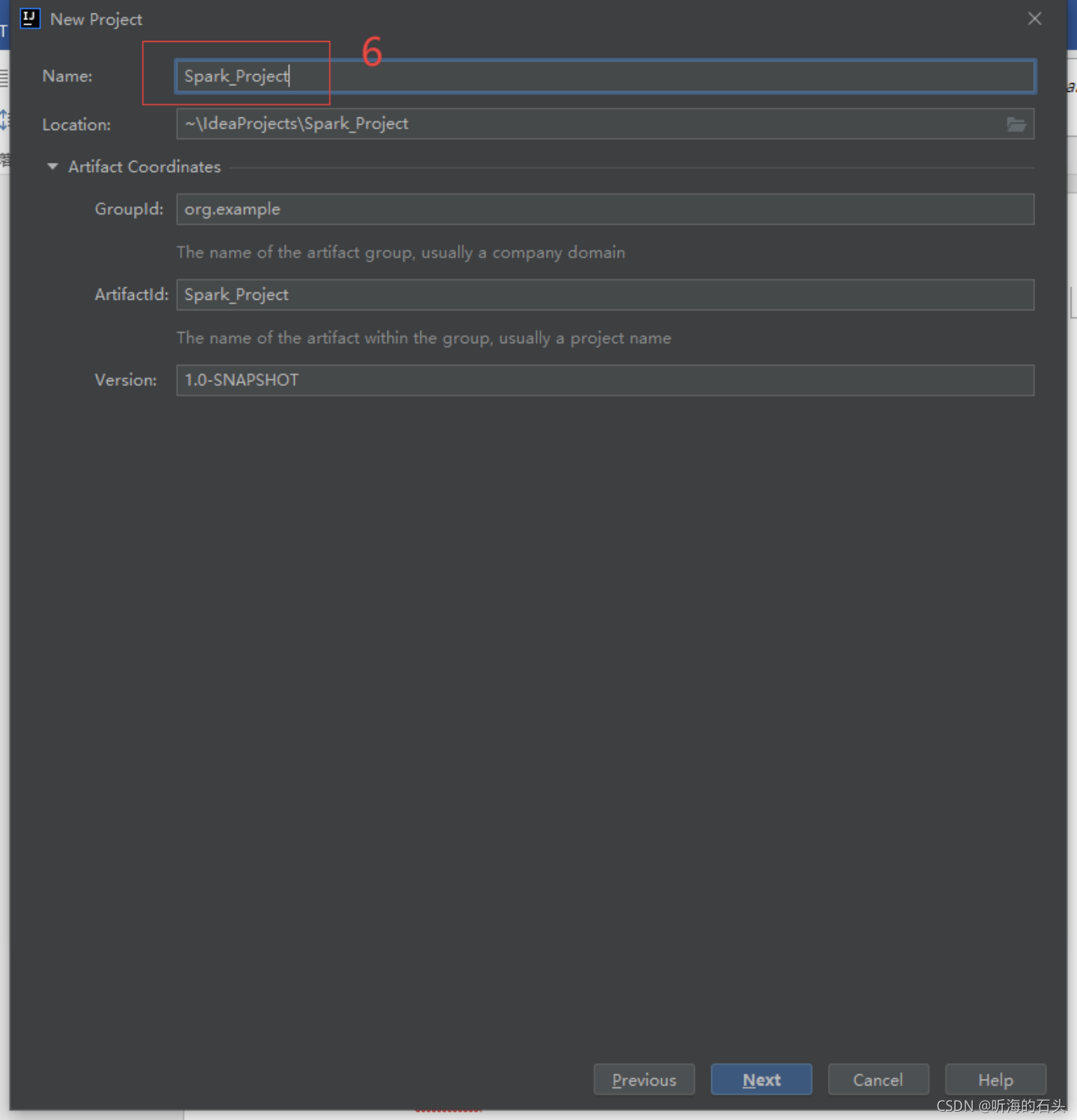

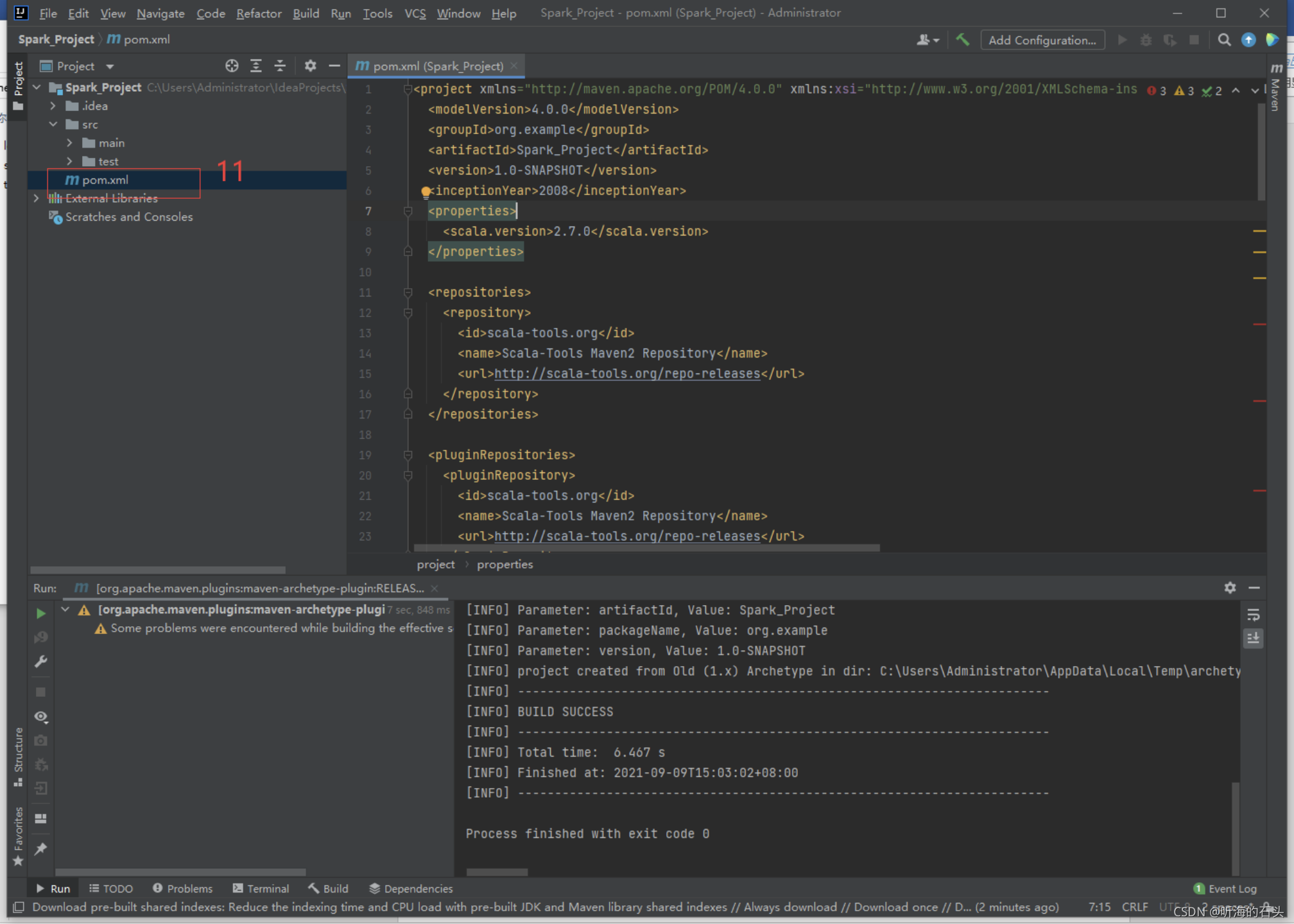

输入Spark_Project

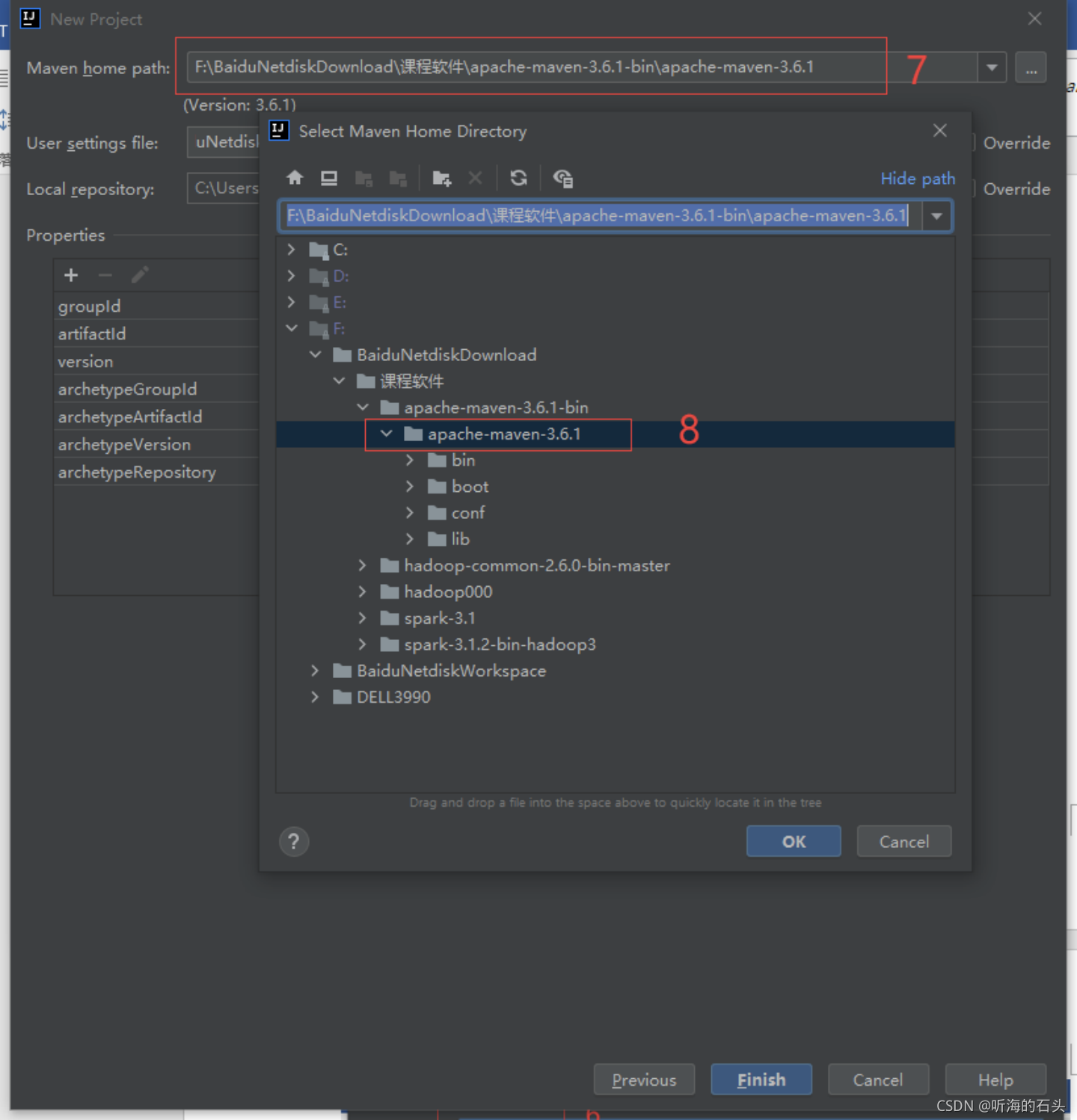

选择提供的maven安装包

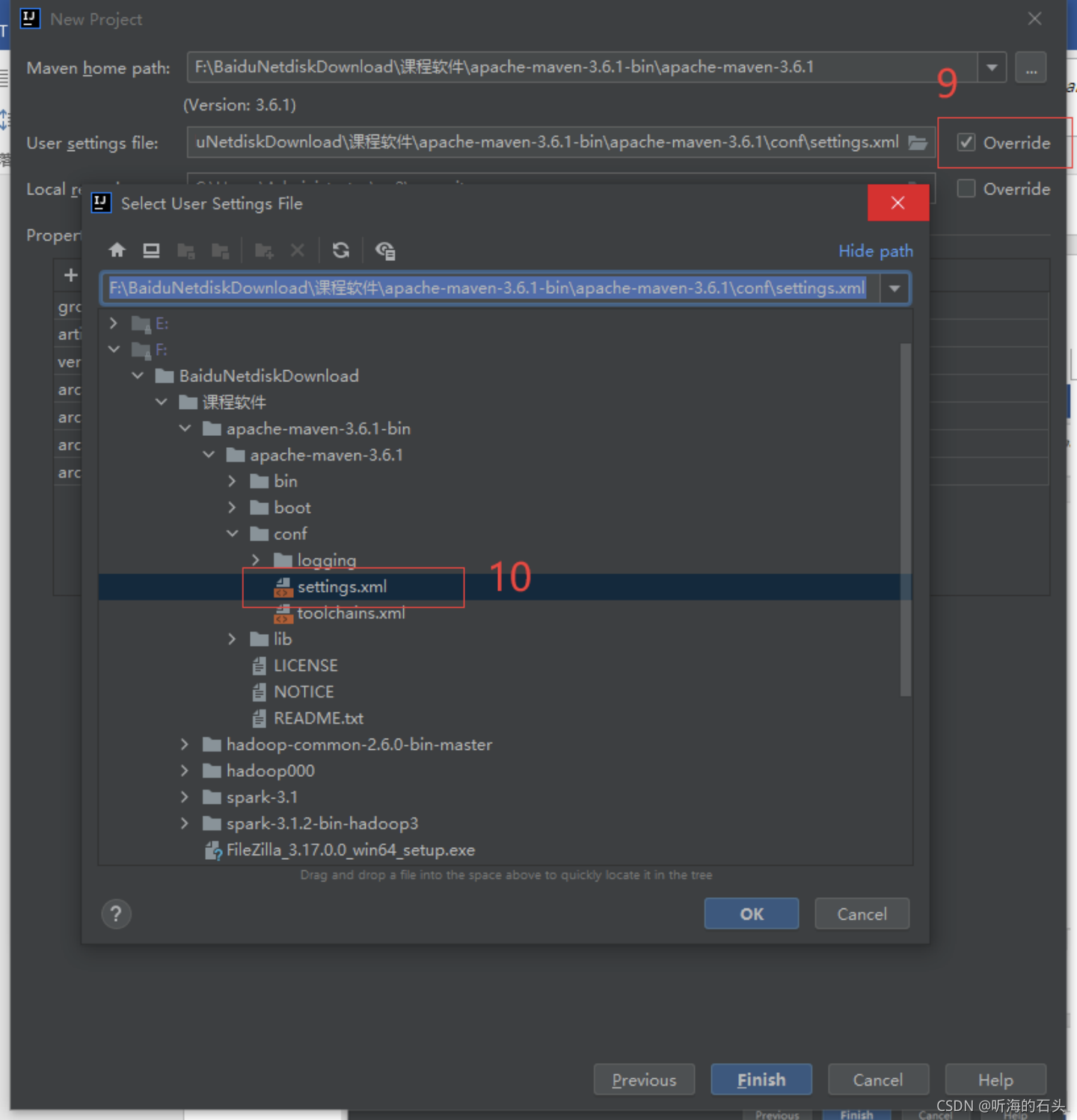

选择apache-maven-3.6.1-bin\apache-maven-3.6.1\conf下的setting文件,并将Override打钩

点击pom文件,将提供的pom内容全部复制修改

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd"><modelVersion>4.0.0</modelVersion><groupId>org.example</groupId><artifactId>untitled2</artifactId><version>1.0-SNAPSHOT</version><inceptionYear>2008</inceptionYear><properties><scala.version>2.11.8</scala.version></properties><repositories><repository><id>scala-tools.org</id><name>Scala-Tools Maven2 Repository</name><url>http://scala-tools.org/repo-releases</url></repository></repositories><pluginRepositories><pluginRepository><id>scala-tools.org</id><name>Scala-Tools Maven2 Repository</name><url>http://scala-tools.org/repo-releases</url></pluginRepository></pluginRepositories><dependencies><dependency><groupId>org.scala-lang</groupId><artifactId>scala-library</artifactId><version>${scala.version}</version></dependency><dependency><groupId>junit</groupId><artifactId>junit</artifactId><version>3.8.1</version><scope>test</scope></dependency><dependency><groupId>org.specs</groupId><artifactId>specs</artifactId><version>1.2.5</version><scope>test</scope></dependency><!-- <dependency>--><!-- <groupId>org.apache.spark</groupId>--><!-- <artifactId>spark-core_2.11</artifactId>--><!-- <version>2.1.1</version>--><!-- <scope>provided</scope>--><!-- </dependency>--><dependency><groupId>org.apache.spark</groupId><artifactId>spark-sql_2.11</artifactId><version>2.1.1</version><scope>provided</scope></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-streaming_2.11</artifactId><version>2.1.1</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-streaming-kafka-0-10_2.11</artifactId><version>2.1.1</version></dependency></dependencies><build><sourceDirectory>src/main/scala</sourceDirectory><testSourceDirectory>src/test/scala</testSourceDirectory><plugins><plugin><groupId>org.scala-tools</groupId><artifactId>maven-scala-plugin</artifactId><executions><execution><goals><goal>compile</goal><goal>testCompile</goal></goals></execution></executions><configuration><scalaVersion>${scala.version}</scalaVersion><args><arg>-target:jvm-1.5</arg></args></configuration></plugin><plugin><groupId>org.apache.maven.plugins</groupId><artifactId>maven-eclipse-plugin</artifactId><configuration><downloadSources>true</downloadSources><buildcommands><buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand></buildcommands><additionalProjectnatures><projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature></additionalProjectnatures><classpathContainers><classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer><classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer></classpathContainers></configuration></plugin></plugins></build><reporting><plugins><plugin><groupId>org.scala-tools</groupId><artifactId>maven-scala-plugin</artifactId><configuration><scalaVersion>${scala.version}</scalaVersion></configuration></plugin></plugins></reporting>

</project>

至此,环境配置完成,开始Spark编程。

4、Spark编程

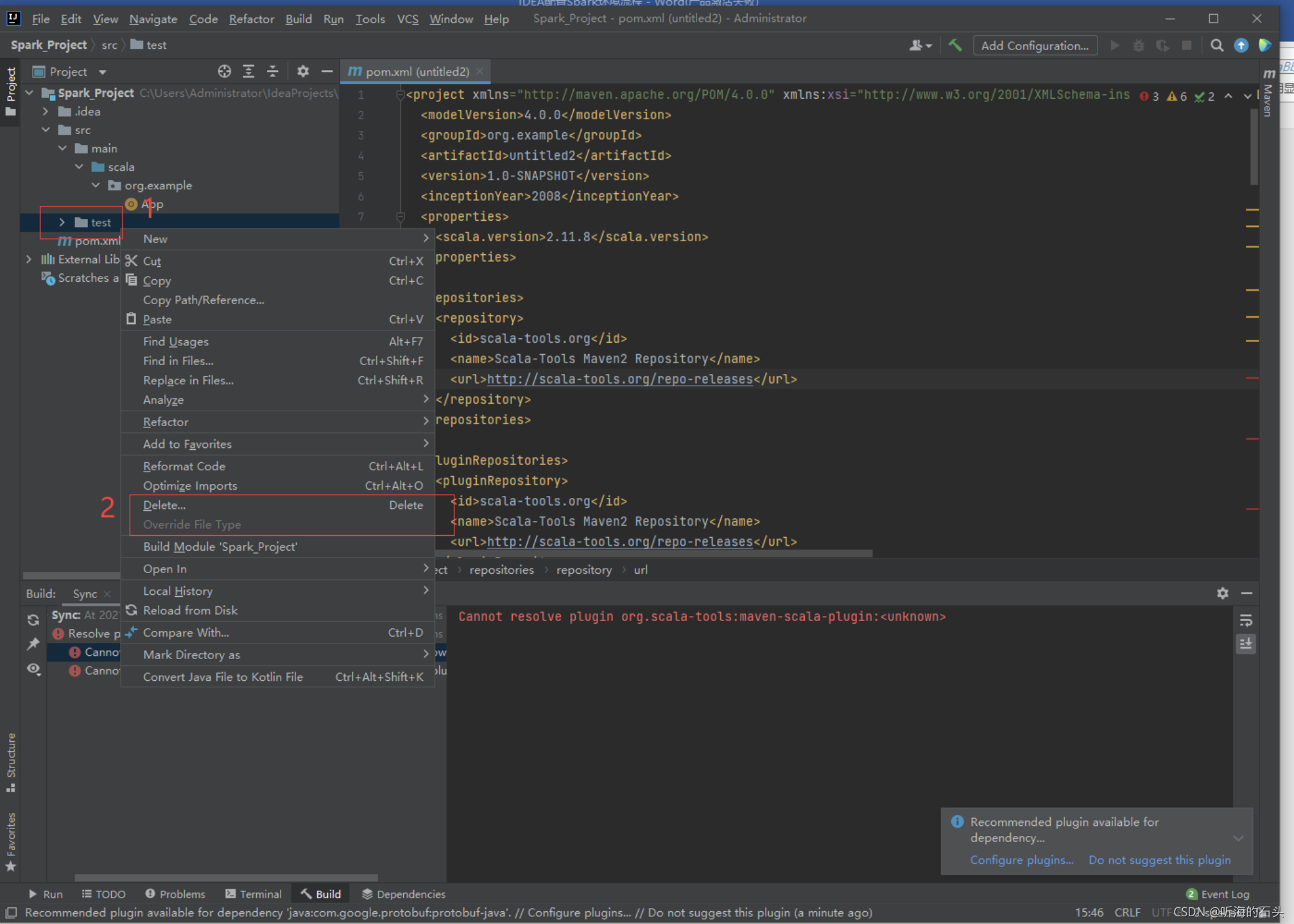

1、删除test文件夹

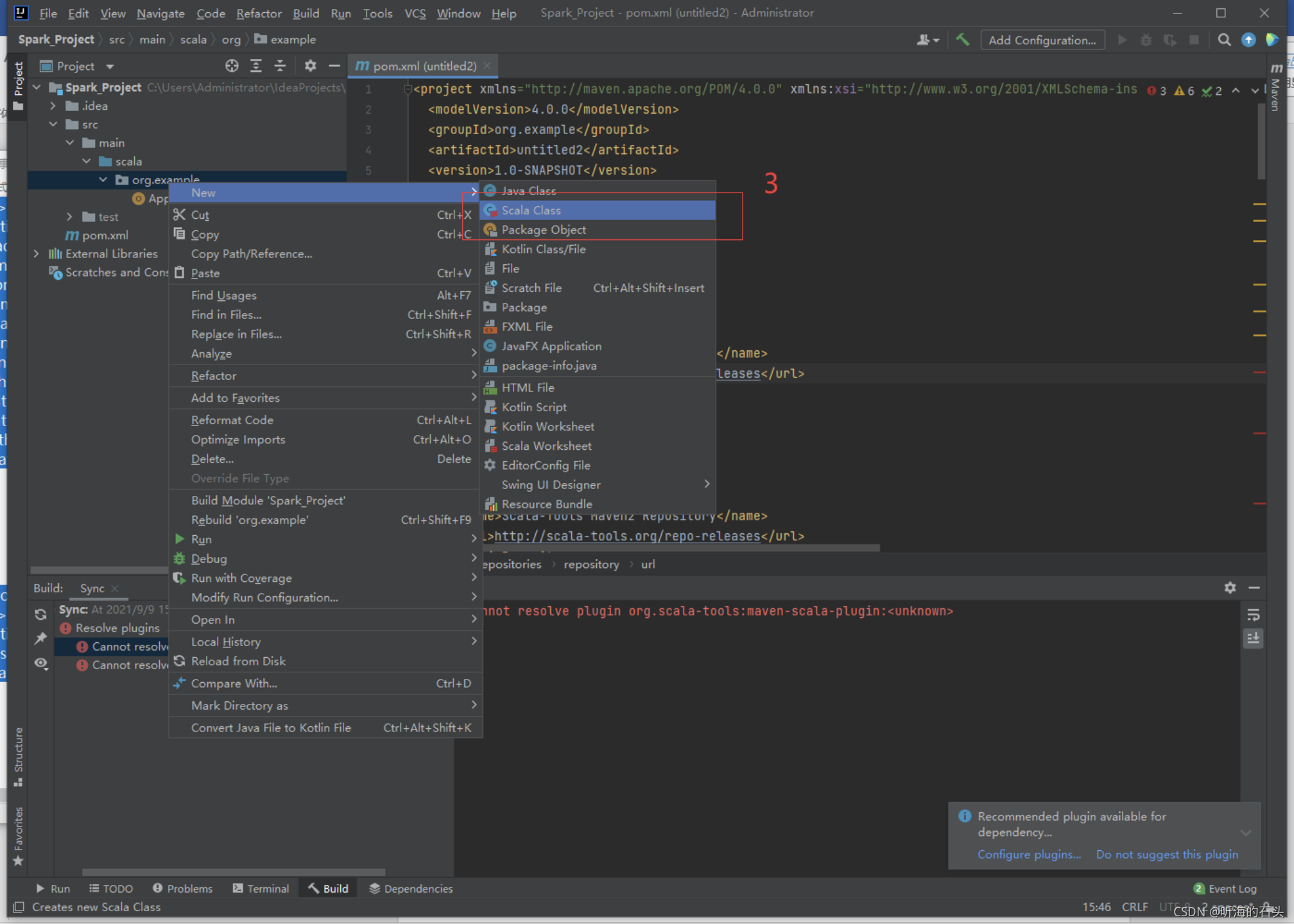

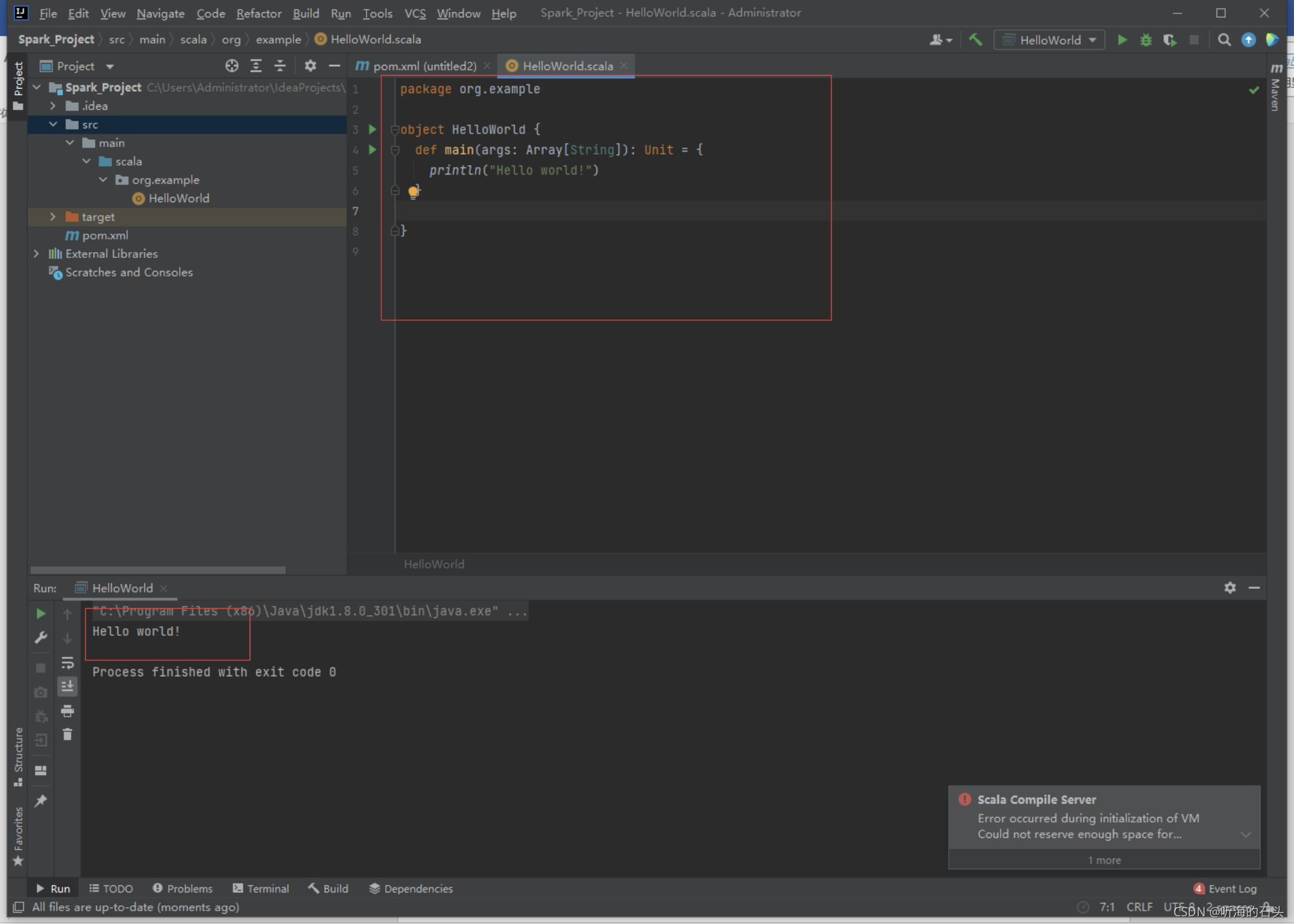

创建Scala文件

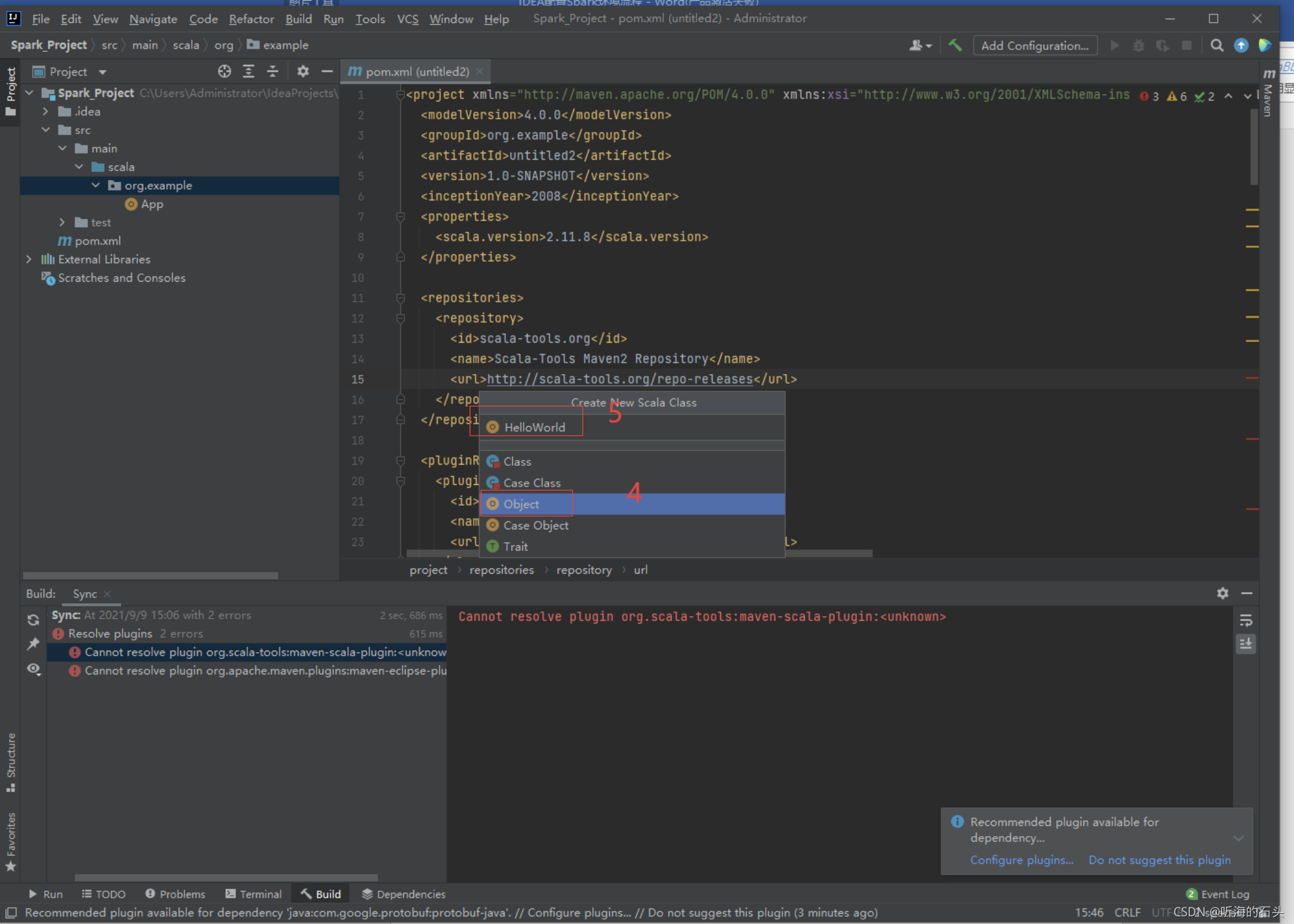

选择Object选项,输入HelloWorld类

Scala测试完成!

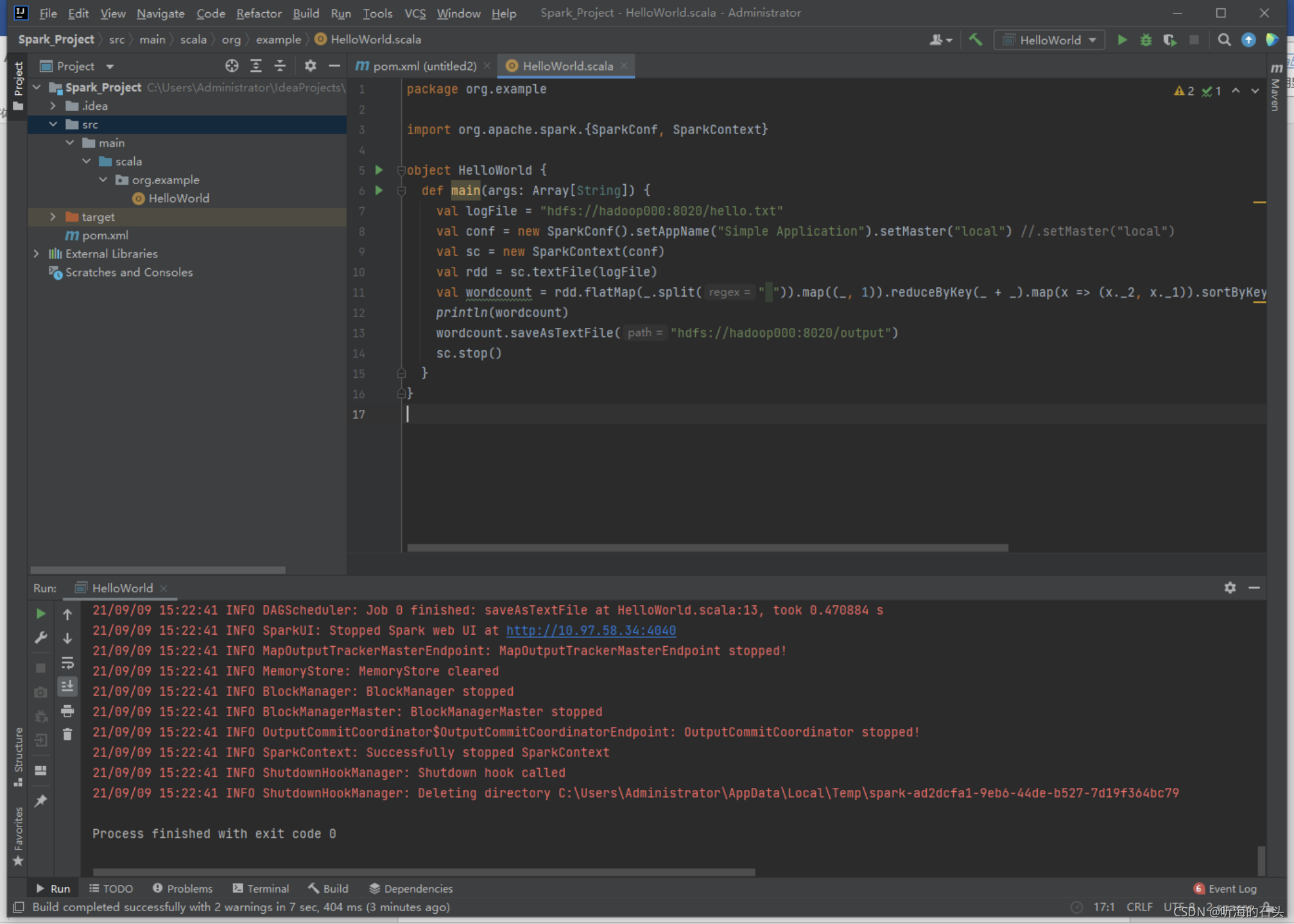

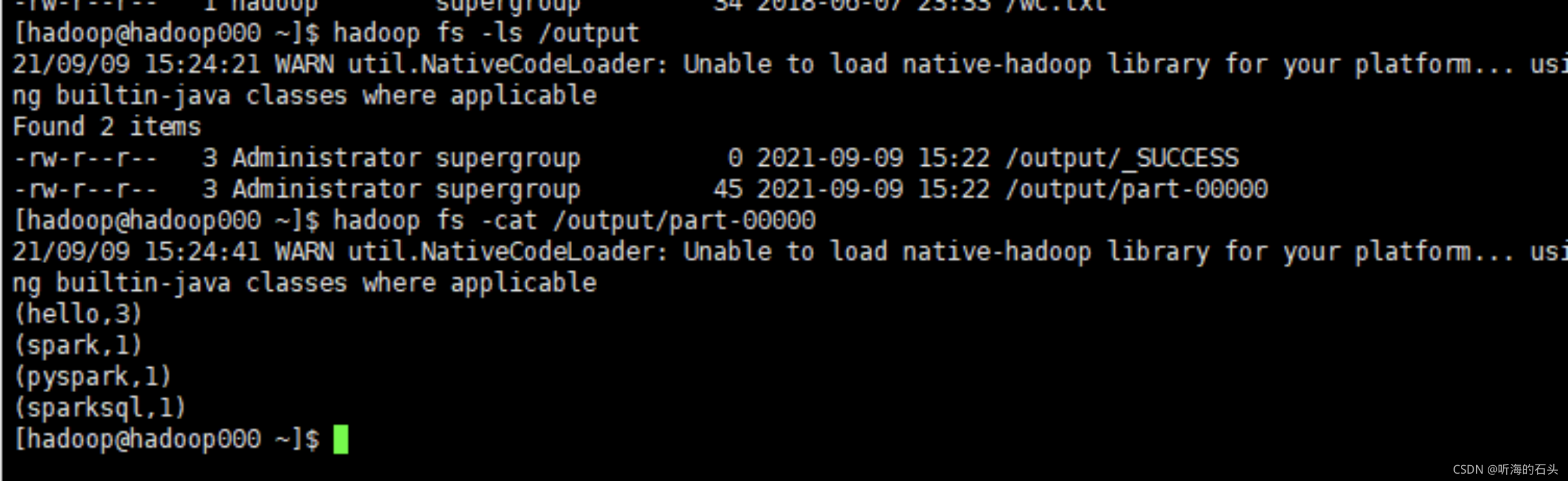

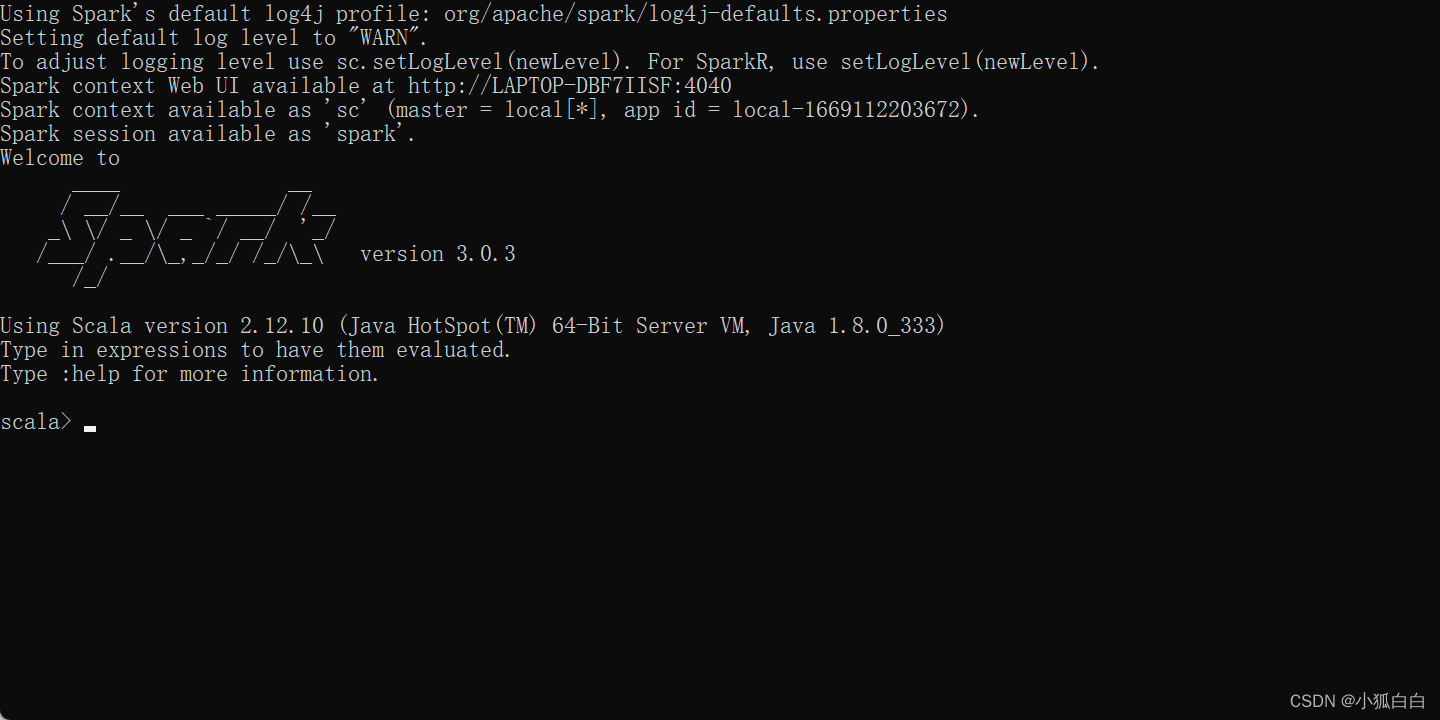

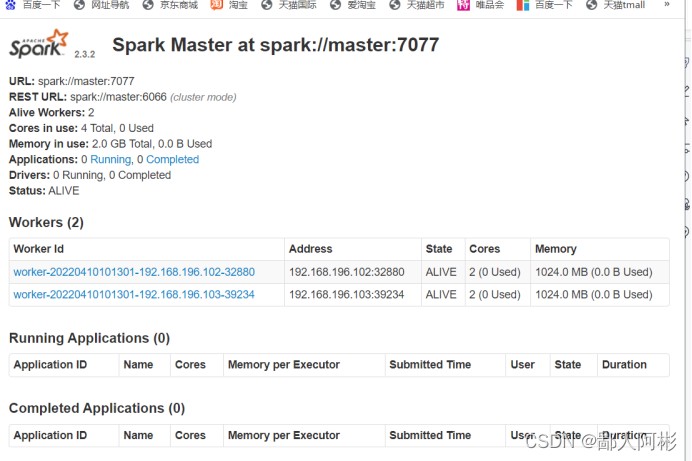

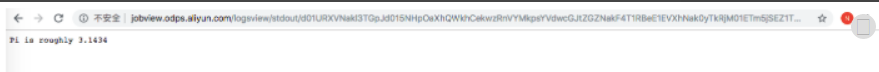

Spark测试

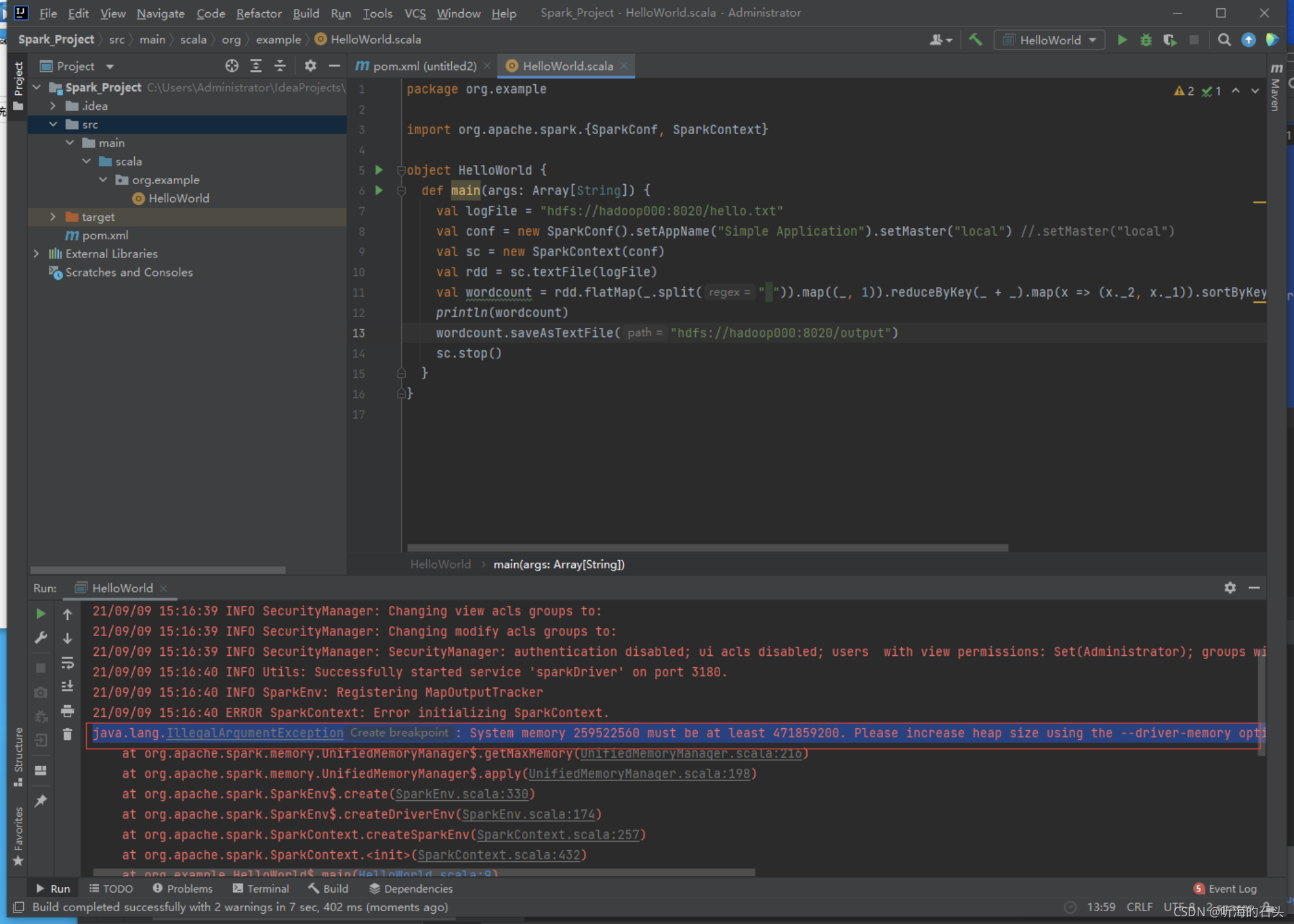

Spark测试代码:

ackage org.exampleimport org.apache.spark.{SparkConf, SparkContext}object HelloWorld {def main(args: Array[String]) {val logFile = "hdfs://hadoop000:8020/hello.txt"val conf = new SparkConf().setAppName("Simple Application").setMaster("local") //.setMaster("local")val sc = new SparkContext(conf)val rdd = sc.textFile(logFile)val wordcount = rdd.flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _).map(x => (x._2, x._1)).sortByKey(false).map(x => (x._2, x._1))println(wordcount)wordcount.saveAsTextFile("hdfs://hadoop000:8020/output")sc.stop()}

}

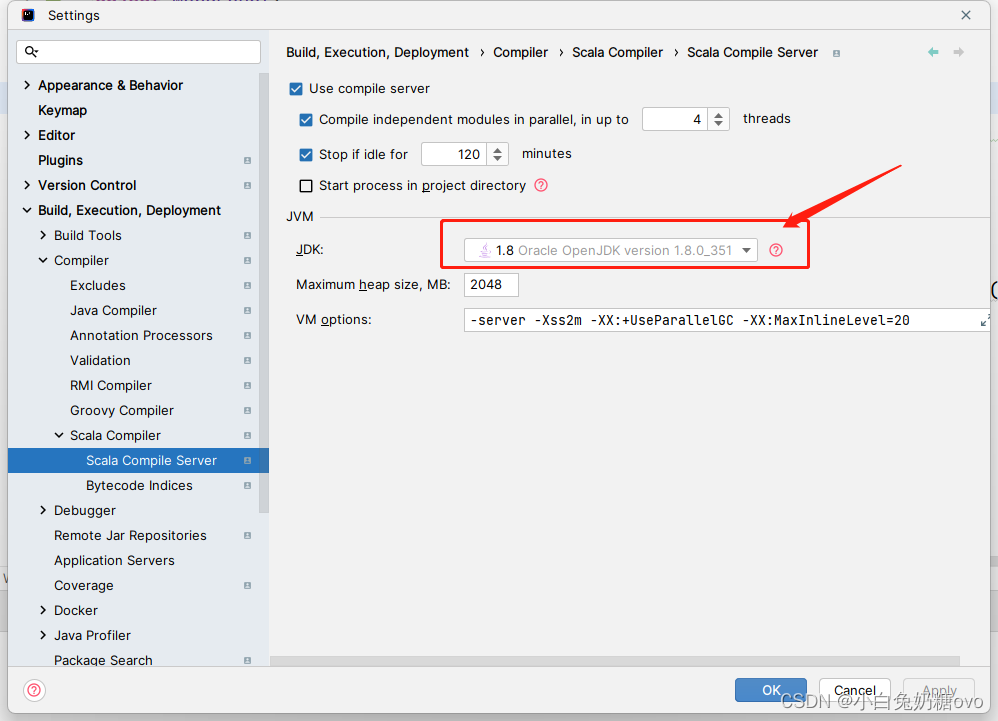

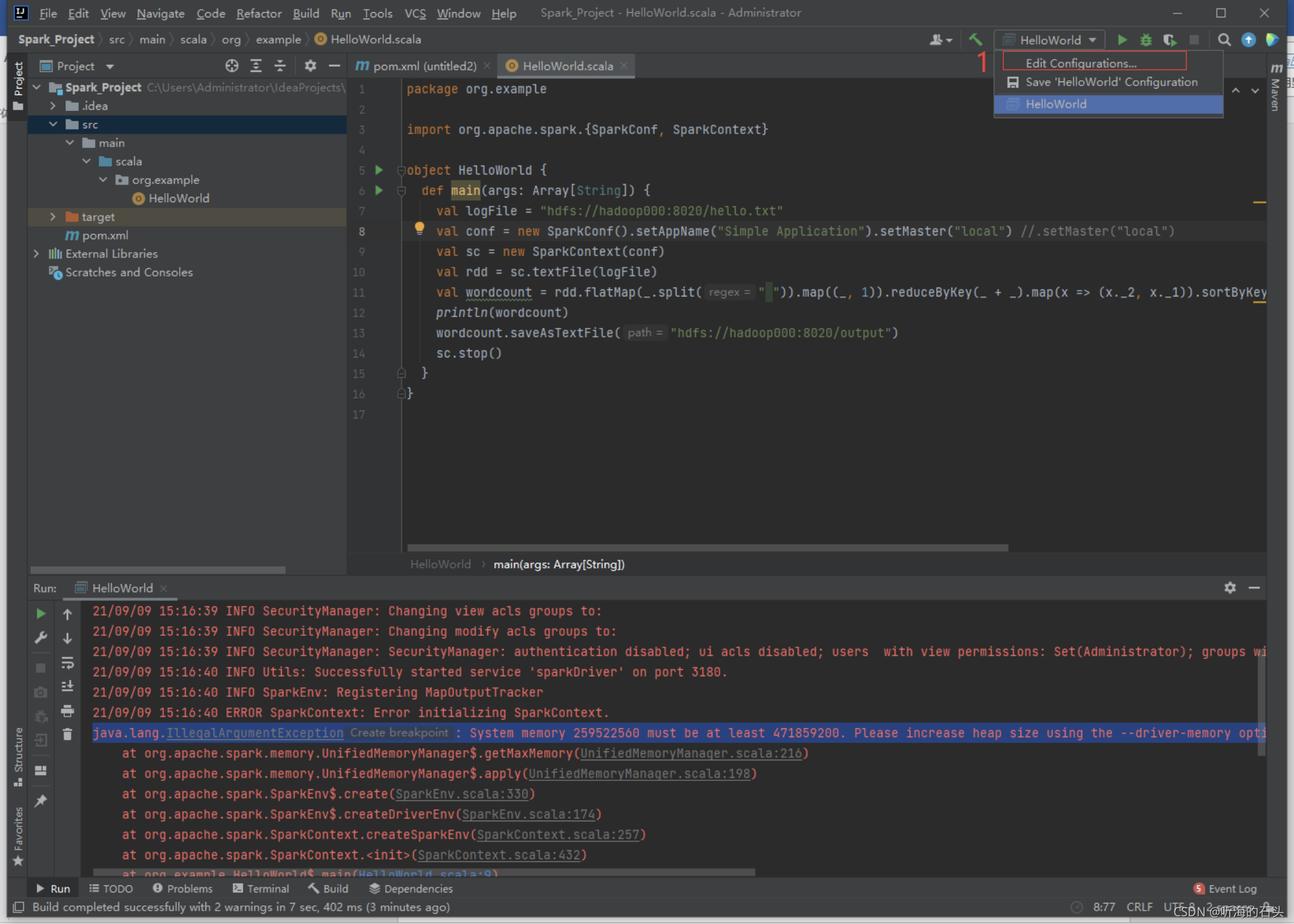

发现报错,原因是虚拟内存未配置,

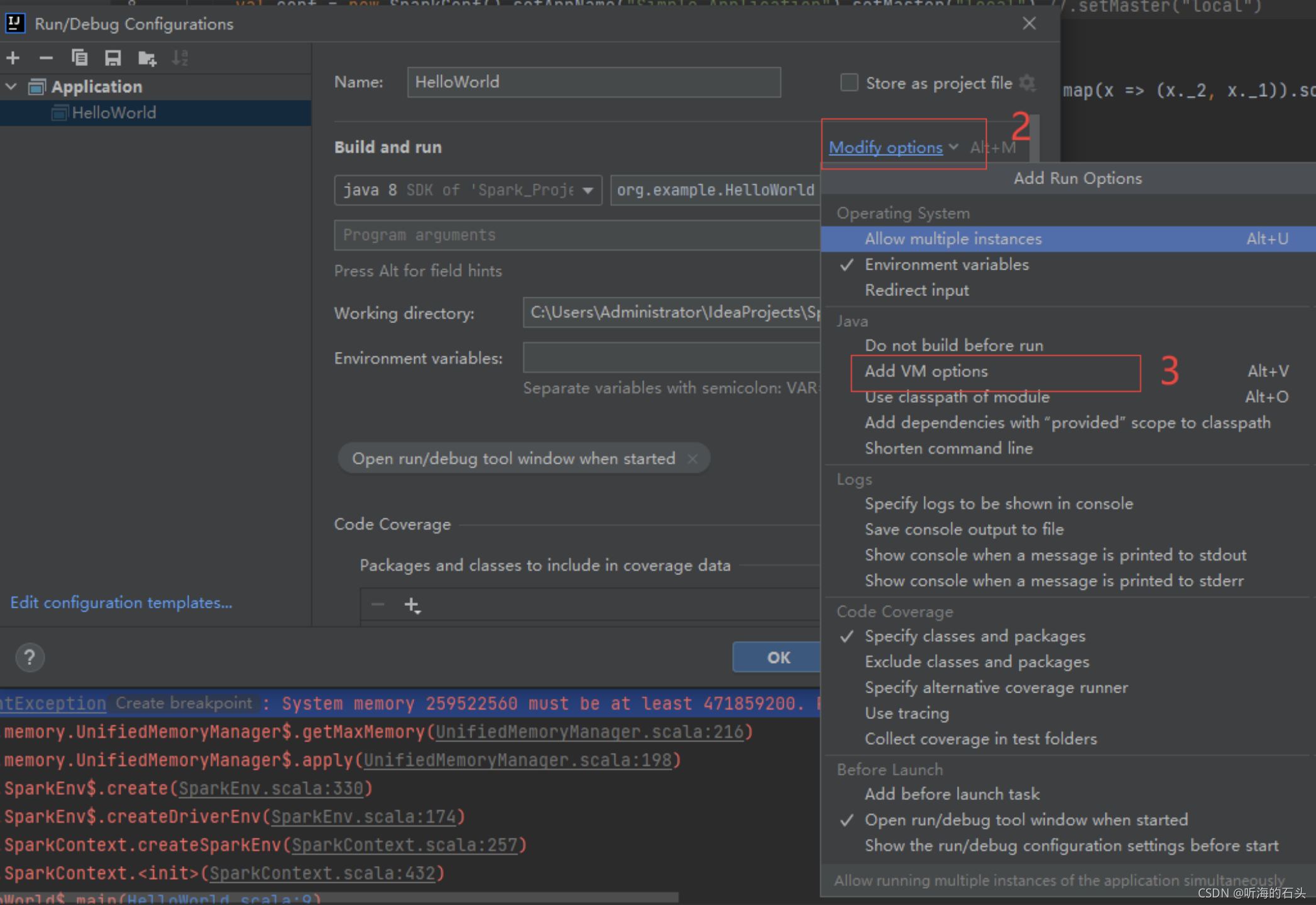

输入:-Xms1024m

运行成功!