~~~~~~~~~~~~~~~~~~~~Serial single sample predict~~~~~~~~~~~~~~~~~~~~~~~~~

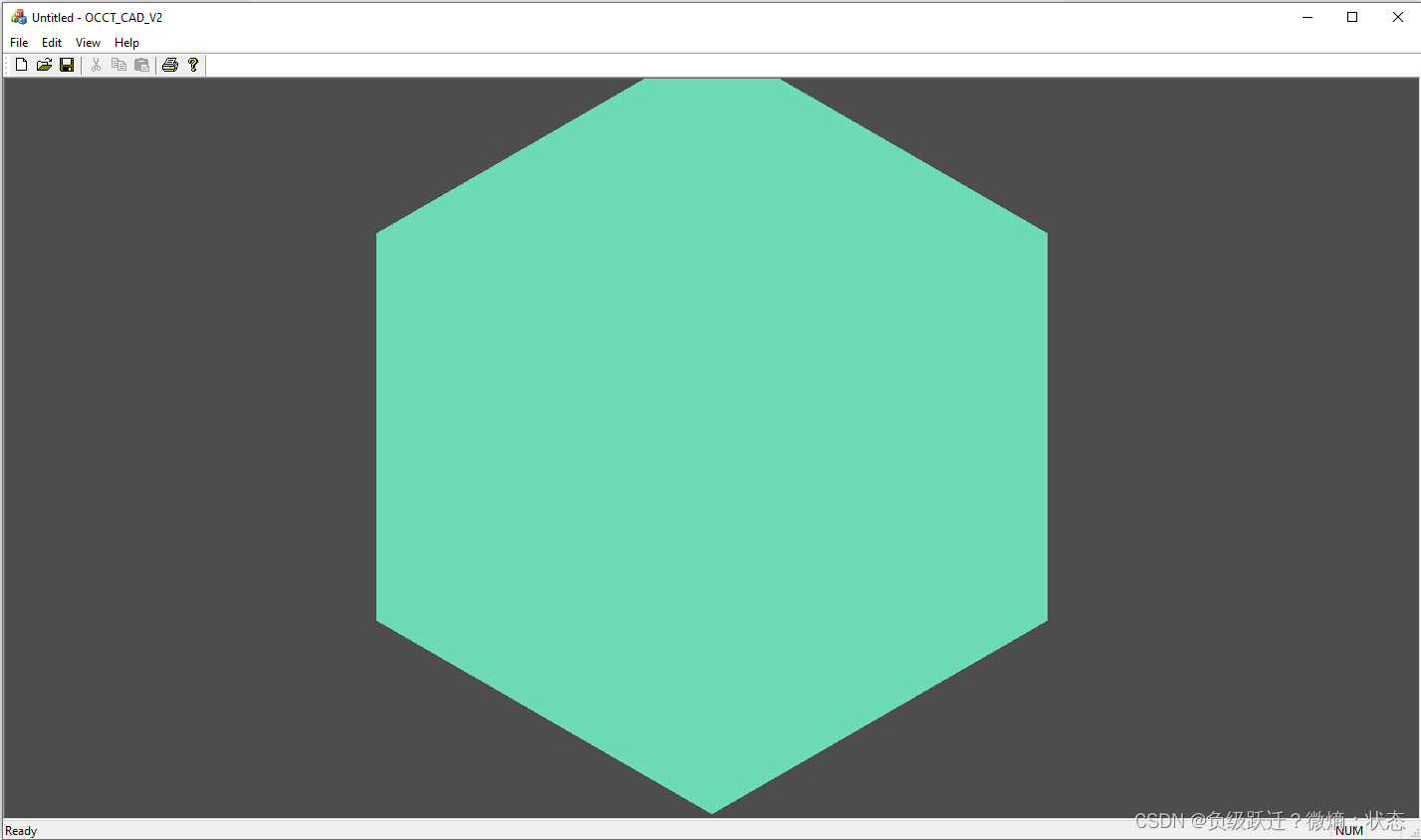

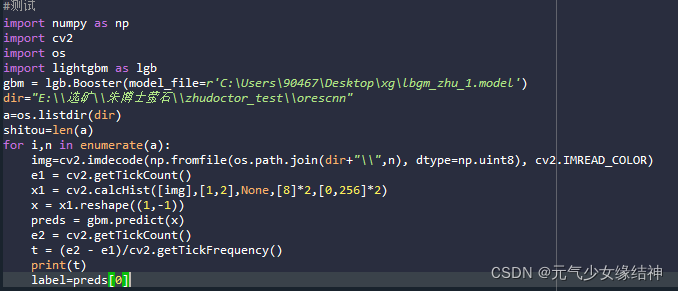

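Tested under Python: it works without any problems.

Under C++, however, there were two problems:

1. The probabilities did not match the Python ones;

2. Every input produced the same result.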

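My first suspicion was the version, so I decided to try upgrading from 2.1.1 to 3.3.1. The 2.x and 3.x releases differ considerably, and compiling 3.3.1 produced the following errors:

```
LightGBM-3.3.1/include/LightGBM/utils/common.h:36:59: fatal error: ../../../external_libs/fmt/include/fmt/format.h: No such file or directory
LightGBM-3.3.1/include/LightGBM/utils/common.h:38:82: fatal error: ../../../external_libs/fast_double_parser/include/fast_double_parser.h: No such file or directory
LightGBM-3.3.1/src/treelearner/linear_tree_learner.cpp:7:23: fatal error: Eigen/Dense: No such file or directory
```

Solution: download the corresponding libraries from LightGBM/external_libs at master · microsoft/LightGBM · GitHub (and Eigen from https://gitlab.com/libeigen/eigen/-/releases/3.4.0), unpack them, and put each one into its matching folder under LightGBM-3.3.1/external_libs/. (These folders are git submodules, so cloning the repository with `git clone --recursive` fetches them as well.) After that the build went through smoothly and produced the shared library. Package the include and external_libs folders together with lib_lightgbm.so and the library is ready to use.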

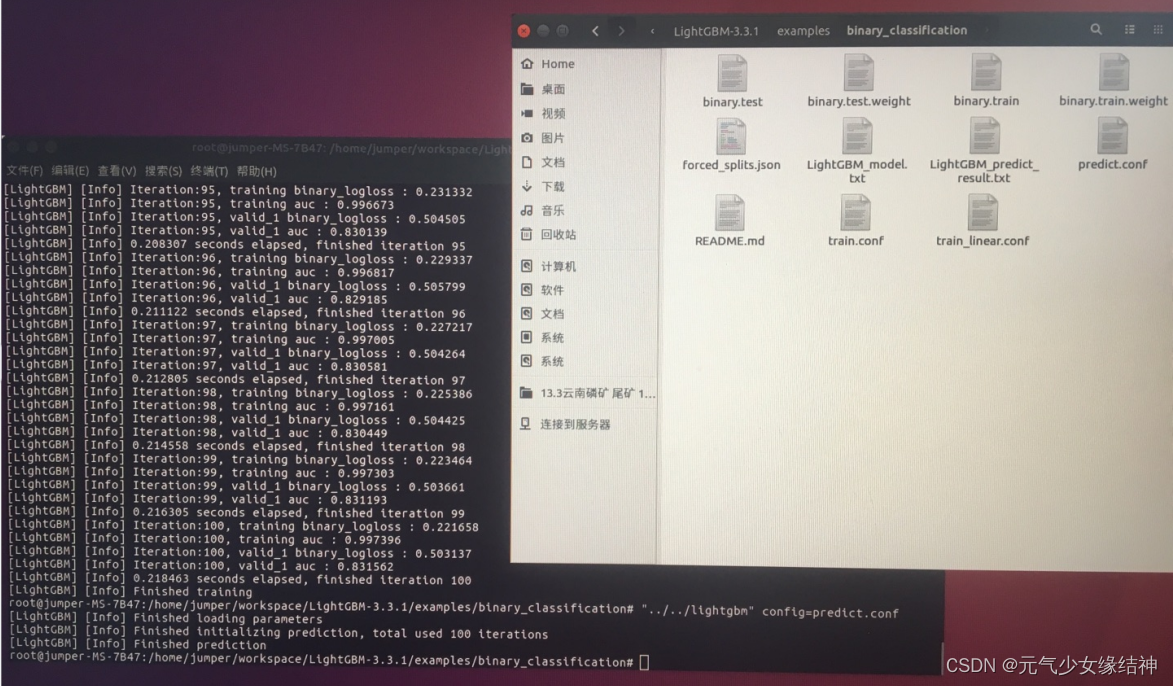

The official example also passed:

However, when I used it in my own program I got:

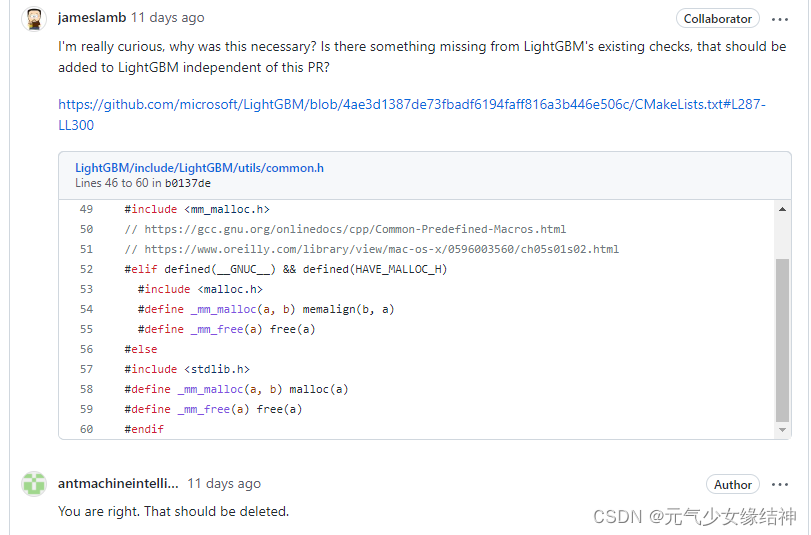

```
lightGBM/include/LightGBM/utils/common.h:57:26: error: 'void* malloc(size_t)' was declared 'extern' and later 'static' [-fpermissive]
/usr/local/lib/gcc/x86_64-pc-linux-gnu/9.1.0/include/mm_malloc.h:41:7: error: '__alignment' was not declared in this scope
   41 |   if (__alignment == 1)
      |       ^~~~~~~~~~~
/usr/local/lib/gcc/x86_64-pc-linux-gnu/9.1.0/include/mm_malloc.h:43:7: error: '__alignment' was not declared in this scope
   43 |   if (__alignment == 2 || (sizeof (void *) == 8 && __alignment == 4))
      |       ^~~~~~~~~~~
/usr/local/lib/gcc/x86_64-pc-linux-gnu/9.1.0/include/mm_malloc.h:45:31: error: '__alignment' was not declared in this scope
LightGBM/utils/common.h:58:21: error: 'void free(void*)' was declared 'extern' and later 'static' [-fpermissive]
```

These errors probably come from a conflict with something in my system setup. Searching turned up https://github.com/microsoft/LightGBM/pull/5111

Compilation error for cpp tests on macOS with gcc and `thread` sanitizer · Issue #4331 · microsoft/LightGBM · GitHub

So other people have run into the same problem:

LightGBM/utils/common.h:57:26: error: 'void* malloc(size_t)' was declared 'extern' and later 'static' [-fpermissive]

I wrote up my fix as a document and uploaded it here: https://download.csdn.net/download/wd1603926823/85201370

int main()

{char srcimg[400]={0};int numiterations = 1;BoosterHandle handle;int set = LGBM_BoosterCreateFromModelfile("/home/jumper/xrt/reference/model/lgbmmodel/lbgm_zhu_1.model", &numiterations, &handle);if(set==0){std::cout << "load model successfully ! "<< std::endl;}int channels[]={1,2};int histsize[]={8,8};float ghistrange[]={0,256};float rhistrange[]={0,256};const float *histsranges[]={ghistrange,rhistrange};vector<Mat> imgsvec;for(int index=0;index<=20;index++){sprintf(srcimg,"/home/jumper/xrt/reference/imgs/zhudoctor/orescnn/%d.png",index);Mat img=imread(srcimg);if(img.empty())continue;imgsvec.push_back(img);}double timeStart = (double)getTickCount();int size=imgsvec.size();for(int id=0;id!=size;id++){Mat img=imgsvec[id];MatND hist;cv::calcHist(&img,1,channels,Mat(),hist,2,histsize,histsranges);std::vector<double> out(1, 0);double *out_result = static_cast<double *>(out.data());int64_t out_len;int res = LGBM_BoosterPredictForMat(handle,hist.data,C_API_DTYPE_FLOAT32,1,64,1,C_API_PREDICT_NORMAL,0,-1,"None",&out_len,out_result);std::cout <<"image id:"<<id<<" ---LGBM row predict result is: " << out[0] <<std::endl;}double nTime = ((double)getTickCount() - timeStart) / getTickFrequency()*1000;cout<<nTime<<" ms!"<<endl;return 0;

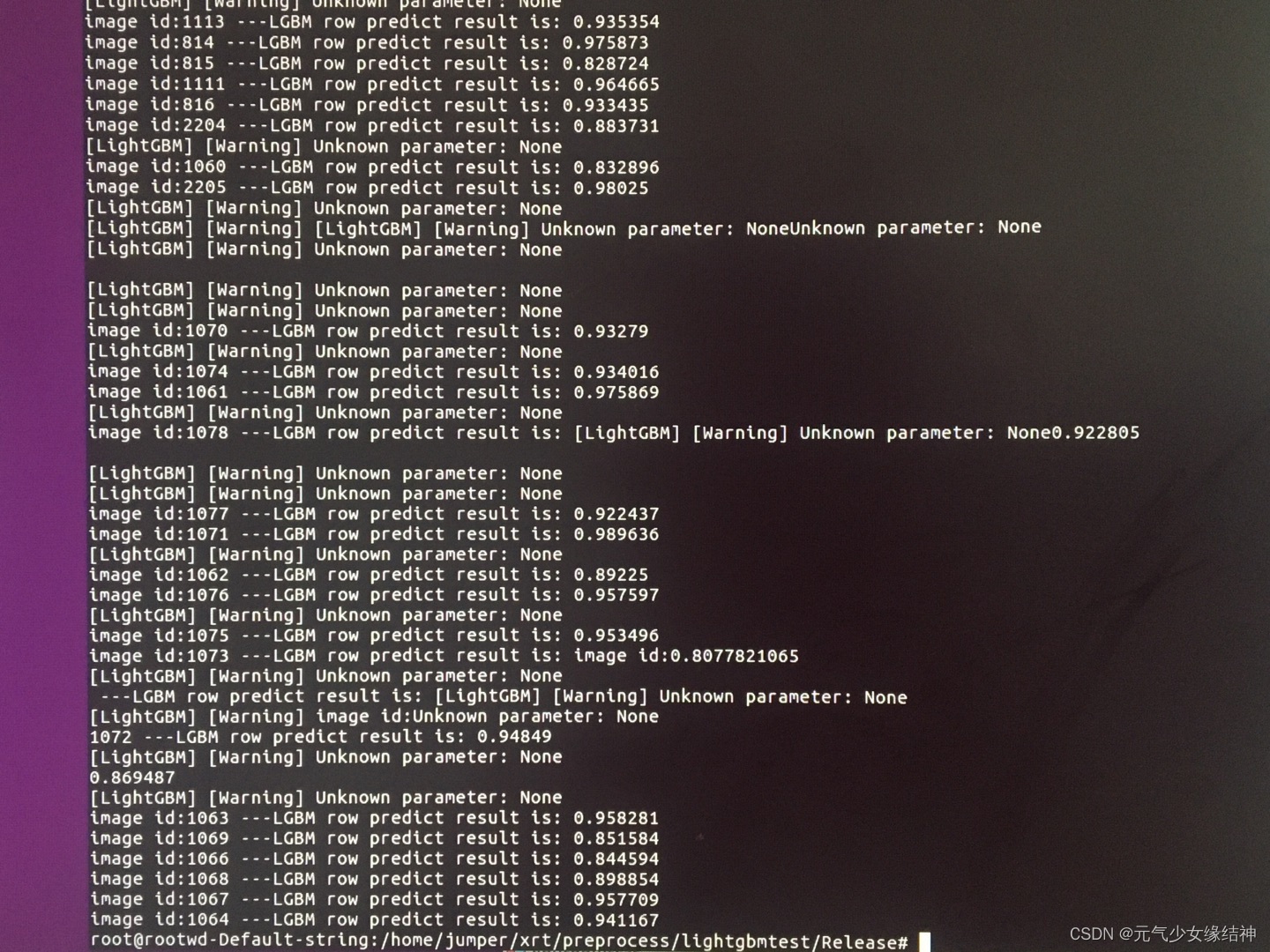

}结果已正确!!!如下所示:

load model successfully !

[LightGBM] [Warning] Unknown parameter: None

image id:0 ---LGBM row predict result is: 0.964677

[LightGBM] [Warning] Unknown parameter: None

image id:1 ---LGBM row predict result is: 0.877513

[LightGBM] [Warning] Unknown parameter: None

image id:2 ---LGBM row predict result is: 0.973227

[LightGBM] [Warning] Unknown parameter: None

image id:3 ---LGBM row predict result is: 0.895759

[LightGBM] [Warning] Unknown parameter: None

image id:4 ---LGBM row predict result is: 0.945096

[LightGBM] [Warning] Unknown parameter: None

image id:5 ---LGBM row predict result is: 0.792787

[LightGBM] [Warning] Unknown parameter: None

image id:6 ---LGBM row predict result is: 0.902854

[LightGBM] [Warning] Unknown parameter: None

image id:7 ---LGBM row predict result is: 0.965496

[LightGBM] [Warning] Unknown parameter: None

image id:8 ---LGBM row predict result is: 0.92893

[LightGBM] [Warning] Unknown parameter: None

image id:9 ---LGBM row predict result is: 0.110013

[LightGBM] [Warning] Unknown parameter: None

image id:10 ---LGBM row predict result is: 0.903951

The C++ and Python results now match, identical to the fifth or sixth decimal place.

~~~~~~~~~~~~~~~~~~~~~~~~Divider~~~~Parallel-library single sample predict~~~~~~~~~~~~~~~~~~

Parallel predict with a parallel library on top of the LightGBM C++ API:

load model successfully !

[LightGBM] [Warning] [LightGBM] [Warning] Unknown parameter: None

[LightGBM] [Warning] [LightGBM] [Warning] Unknown parameter: None

Unknown parameter: None

[LightGBM] [Warning] [LightGBM] [Warning] [LightGBM] [Warning] Unknown parameter: None

Unknown parameter: None

Unknown parameter: None

Unknown parameter: None

[LightGBM] [Warning] Unknown parameter: None

image id:0 ---LGBM row predict result is: 0.964677

[LightGBM] [Warning] Unknown parameter: None

image id:1 ---LGBM row predict result is: 0.877513

[LightGBM] [Warning] Unknown parameter: None

image id:2 ---LGBM row predict result is: 0.973227

[LightGBM] [Warning] Unknown parameter: None

image id:3 ---LGBM row predict result is: 0.895759

[LightGBM] [Warning] Unknown parameter: None

image id:4 ---LGBM row predict result is: 0.945096

[LightGBM] [Warning] Unknown parameter: None

image id:5 ---LGBM row predict result is: 0.792787

[LightGBM] [Warning] Unknown parameter: None

image id:11 ---LGBM row predict result is: 0.0852482

image id:14 ---LGBM row predict result is: 0.92168

image id:7 ---LGBM row predict result is: 0.965496

[LightGBM] [Warning] Unknown parameter: None

[LightGBM] [Warning] Unknown parameter: None

image id:image id:12 ---LGBM row predict result is: 10 ---LGBM row predict result is: 0.948007

image id:6 ---LGBM row predict result is: [LightGBM] [Warning] Unknown parameter: None

[LightGBM] [Warning] Unknown parameter: None

0.902854

image id:13 ---LGBM row predict result is: 0.957286

[LightGBM] [Warning] Unknown parameter: None

[LightGBM] [Warning] Unknown parameter: None

0.903951

[LightGBM] [Warning] Unknown parameter: None

image id:8 ---LGBM row predict result is: 0.92893

image id:16 ---LGBM row predict result is: 0.934247

image id:19 ---LGBM row predict result is: 0.933926

image id:20 ---LGBM row predict result is: 0.937277

image id:18 ---LGBM row predict result is: 0.858938

image id:9 ---LGBM row predict result is: 0.110013

image id:17 ---LGBM row predict result is: 0.83457

image id:15 ---LGBM row predict result is: 0.927055

The parallel results match the serial ones.

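I ran the serial and parallel single-sample predict tests many times, and the timings jumped around a lot:

Stability test before fixing the timing spikes, 1560 images per round. Serial test:

serial performance:

load model successfully !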

0---serial stable test:71.4656ms ~~ 71.4656ms!!!

1---serial stable test:71.4656ms ~~ 150.341ms!!!

2---serial stable test:71.4656ms ~~ 192.314ms!!!

3---serial stable test:71.4656ms ~~ 192.314ms!!!

4---serial stable test:71.4656ms ~~ 192.314ms!!!

5---serial stable test:71.4656ms ~~ 192.314ms!!!

6---serial stable test:71.4656ms ~~ 192.314ms!!!

7---serial stable test:71.4656ms ~~ 192.314ms!!!

8---serial stable test:71.4656ms ~~ 192.314ms!!!

9---serial stable test:71.4656ms ~~ 192.314ms!!!

10---serial stable test:71.4656ms ~~ 192.314ms!!!

11---serial stable test:71.4656ms ~~ 192.314ms!!!

12---serial stable test:71.4656ms ~~ 192.314ms!!!

13---serial stable test:71.4656ms ~~ 192.314ms!!!

14---serial stable test:71.4656ms ~~ 192.314ms!!!

15---serial stable test:71.4656ms ~~ 192.314ms!!!

16---serial stable test:71.4656ms ~~ 192.314ms!!!

17---serial stable test:71.4656ms ~~ 192.314ms!!!

18---serial stable test:71.4656ms ~~ 192.314ms!!!

19---serial stable test:71.4656ms ~~ 192.314ms!!!

20---serial stable test:71.4656ms ~~ 192.314ms!!!

21---serial stable test:71.4656ms ~~ 196.893ms!!!

22---serial stable test:71.4656ms ~~ 196.893ms!!!

23---serial stable test:71.4656ms ~~ 196.893ms!!!

24---serial stable test:71.4656ms ~~ 196.893ms!!!

25---serial stable test:71.4656ms ~~ 196.893ms!!!

26---serial stable test:71.4656ms ~~ 196.893ms!!!

27---serial stable test:71.4656ms ~~ 196.893ms!!!

28---serial stable test:71.4656ms ~~ 196.893ms!!!

29---serial stable test:71.4656ms ~~ 196.893ms!!!

30---serial stable test:71.4656ms ~~ 196.893ms!!!

31---serial stable test:71.4656ms ~~ 196.893ms!!!

32---serial stable test:71.4656ms ~~ 196.893ms!!!

33---serial stable test:71.4656ms ~~ 196.893ms!!!

34---serial stable test:71.4656ms ~~ 196.893ms!!!

35---serial stable test:71.4656ms ~~ 347.883ms!!!

36---serial stable test:71.4656ms ~~ 347.883ms!!!

37---serial stable test:71.4656ms ~~ 347.883ms!!!

38---serial stable test:71.4656ms ~~ 347.883ms!!!

39---serial stable test:71.4656ms ~~ 347.883ms!!!

40---serial stable test:71.4656ms ~~ 347.883ms!!!

41---serial stable test:71.4656ms ~~ 347.883ms!!!

42---serial stable test:71.4656ms ~~ 347.883ms!!!

43---serial stable test:71.4656ms ~~ 347.883ms!!!

44---serial stable test:71.4656ms ~~ 347.883ms!!!

45---serial stable test:71.4656ms ~~ 347.883ms!!!

46---serial stable test:71.4656ms ~~ 347.883ms!!!

47---serial stable test:71.4656ms ~~ 347.883ms!!!

48---serial stable test:71.4656ms ~~ 347.883ms!!!

49---serial stable test:71.4656ms ~~ 347.883ms!!!

50---serial stable test:71.4656ms ~~ 347.883ms!!!

51---serial stable test:71.4656ms ~~ 347.883ms!!!

52---serial stable test:71.4656ms ~~ 347.883ms!!!

53---serial stable test:71.4656ms ~~ 347.883ms!!!

54---serial stable test:71.4656ms ~~ 400.697ms!!!

55---serial stable test:71.4656ms ~~ 400.697ms!!!

56---serial stable test:71.4656ms ~~ 400.697ms!!!

57---serial stable test:71.4656ms ~~ 400.697ms!!!

58---serial stable test:71.4656ms ~~ 400.697ms!!!

59---serial stable test:71.4656ms ~~ 400.697ms!!!

60---serial stable test:71.4656ms ~~ 400.697ms!!!

61---serial stable test:71.4656ms ~~ 400.697ms!!!

62---serial stable test:71.4656ms ~~ 400.697ms!!!

63---serial stable test:71.4656ms ~~ 400.697ms!!!

64---serial stable test:71.4656ms ~~ 400.697ms!!!

65---serial stable test:71.4656ms ~~ 400.697ms!!!

66---serial stable test:71.4656ms ~~ 400.697ms!!!

67---serial stable test:71.4656ms ~~ 400.697ms!!!

68---serial stable test:71.4656ms ~~ 400.697ms!!!

69---serial stable test:71.4656ms ~~ 400.697ms!!!

70---serial stable test:71.4656ms ~~ 400.697ms!!!

71---serial stable test:71.4656ms ~~ 400.697ms!!!

72---serial stable test:71.4656ms ~~ 400.697ms!!!

73---serial stable test:71.4656ms ~~ 400.697ms!!!

74---serial stable test:71.4656ms ~~ 400.697ms!!!

75---serial stable test:71.4656ms ~~ 400.697ms!!!

76---serial stable test:71.4656ms ~~ 400.697ms!!!

77---serial stable test:71.4656ms ~~ 400.697ms!!!

78---serial stable test:71.4656ms ~~ 400.697ms!!!

79---serial stable test:71.4656ms ~~ 400.697ms!!!

80---serial stable test:71.4656ms ~~ 400.697ms!!!

81---serial stable test:71.4656ms ~~ 400.697ms!!!

82---serial stable test:71.4656ms ~~ 400.697ms!!!

83---serial stable test:71.4656ms ~~ 400.697ms!!!

84---serial stable test:71.4656ms ~~ 400.697ms!!!

85---serial stable test:71.4656ms ~~ 400.697ms!!!

86---serial stable test:71.4656ms ~~ 400.697ms!!!

87---serial stable test:71.4656ms ~~ 400.697ms!!!

88---serial stable test:71.4656ms ~~ 400.697ms!!!

89---serial stable test:71.4656ms ~~ 400.697ms!!!

90---serial stable test:71.4656ms ~~ 400.697ms!!!

91---serial stable test:71.4656ms ~~ 400.697ms!!!

92---serial stable test:71.4656ms ~~ 400.697ms!!!

93---serial stable test:71.4656ms ~~ 400.697ms!!!

94---serial stable test:71.4656ms ~~ 400.697ms!!!

95---serial stable test:71.4656ms ~~ 400.697ms!!!

96---serial stable test:71.4656ms ~~ 400.697ms!!!

97---serial stable test:71.4656ms ~~ 400.697ms!!!

98---serial stable test:71.4656ms ~~ 400.697ms!!!

99---serial stable test:71.4656ms ~~ 400.697ms!!!

For 1560 images the time ranged from 71.5 ms to 400.7 ms, which is very unstable. Parallel test:

parallel performance:

load model successfully !

0---parallel stable test:51.0798ms ~~ 51.0798ms!!!

1---parallel stable test:51.0798ms ~~ 55.0909ms!!!

2---parallel stable test:51.0798ms ~~ 59.2024ms!!!

3---parallel stable test:51.0798ms ~~ 65.9625ms!!!

4---parallel stable test:51.0798ms ~~ 69.393ms!!!

5---parallel stable test:51.0798ms ~~ 69.393ms!!!

6---parallel stable test:51.0798ms ~~ 69.393ms!!!

7---parallel stable test:51.0798ms ~~ 69.393ms!!!

8---parallel stable test:51.0798ms ~~ 69.393ms!!!

9---parallel stable test:51.0798ms ~~ 69.393ms!!!

10---parallel stable test:51.0798ms ~~ 69.393ms!!!

11---parallel stable test:51.0798ms ~~ 69.393ms!!!

12---parallel stable test:51.0798ms ~~ 69.393ms!!!

13---parallel stable test:51.0798ms ~~ 69.393ms!!!

14---parallel stable test:51.0798ms ~~ 69.393ms!!!

15---parallel stable test:51.0798ms ~~ 69.393ms!!!

16---parallel stable test:51.0798ms ~~ 69.393ms!!!

17---parallel stable test:51.0798ms ~~ 69.393ms!!!

18---parallel stable test:51.0798ms ~~ 69.393ms!!!

19---parallel stable test:51.0798ms ~~ 69.393ms!!!

20---parallel stable test:51.0798ms ~~ 69.393ms!!!

21---parallel stable test:51.0798ms ~~ 69.9926ms!!!

22---parallel stable test:51.0798ms ~~ 69.9926ms!!!

23---parallel stable test:51.0798ms ~~ 69.9926ms!!!

24---parallel stable test:51.0798ms ~~ 69.9926ms!!!

25---parallel stable test:51.0798ms ~~ 69.9926ms!!!

26---parallel stable test:51.0798ms ~~ 69.9926ms!!!

27---parallel stable test:51.0798ms ~~ 69.9926ms!!!

28---parallel stable test:51.0798ms ~~ 69.9926ms!!!

29---parallel stable test:51.0798ms ~~ 70.647ms!!!

30---parallel stable test:51.0798ms ~~ 70.647ms!!!

31---parallel stable test:51.0798ms ~~ 70.647ms!!!

32---parallel stable test:51.0798ms ~~ 70.647ms!!!

33---parallel stable test:51.0798ms ~~ 70.647ms!!!

34---parallel stable test:51.0798ms ~~ 70.647ms!!!

35---parallel stable test:51.0798ms ~~ 70.647ms!!!

36---parallel stable test:51.0798ms ~~ 70.647ms!!!

37---parallel stable test:51.0798ms ~~ 70.647ms!!!

38---parallel stable test:51.0798ms ~~ 70.647ms!!!

39---parallel stable test:51.0798ms ~~ 70.647ms!!!

40---parallel stable test:51.0798ms ~~ 70.647ms!!!

41---parallel stable test:51.0798ms ~~ 70.647ms!!!

42---parallel stable test:51.0798ms ~~ 70.647ms!!!

43---parallel stable test:51.0798ms ~~ 70.647ms!!!

44---parallel stable test:51.0798ms ~~ 70.647ms!!!

45---parallel stable test:51.0798ms ~~ 70.647ms!!!

46---parallel stable test:51.0798ms ~~ 70.647ms!!!

47---parallel stable test:51.0798ms ~~ 70.647ms!!!

48---parallel stable test:51.0798ms ~~ 70.647ms!!!

49---parallel stable test:51.0798ms ~~ 70.647ms!!!

50---parallel stable test:51.0798ms ~~ 70.647ms!!!

51---parallel stable test:51.0798ms ~~ 70.647ms!!!

52---parallel stable test:51.0798ms ~~ 78.3649ms!!!

53---parallel stable test:51.0798ms ~~ 78.3649ms!!!

54---parallel stable test:51.0798ms ~~ 78.3649ms!!!

55---parallel stable test:51.0798ms ~~ 78.3649ms!!!

56---parallel stable test:51.0798ms ~~ 78.3649ms!!!

57---parallel stable test:50.712ms ~~ 78.3649ms!!!

58---parallel stable test:50.712ms ~~ 78.3649ms!!!

59---parallel stable test:50.712ms ~~ 78.3649ms!!!

60---parallel stable test:50.712ms ~~ 78.3649ms!!!

61---parallel stable test:50.712ms ~~ 78.3649ms!!!

62---parallel stable test:50.712ms ~~ 78.3649ms!!!

63---parallel stable test:50.712ms ~~ 78.3649ms!!!

64---parallel stable test:50.712ms ~~ 78.3649ms!!!

65---parallel stable test:50.712ms ~~ 78.3649ms!!!

66---parallel stable test:50.712ms ~~ 78.3649ms!!!

67---parallel stable test:50.712ms ~~ 78.3649ms!!!

68---parallel stable test:50.712ms ~~ 78.3649ms!!!

69---parallel stable test:50.712ms ~~ 78.3649ms!!!

70---parallel stable test:50.712ms ~~ 78.3649ms!!!

71---parallel stable test:50.712ms ~~ 78.3649ms!!!

72---parallel stable test:50.712ms ~~ 78.3649ms!!!

73---parallel stable test:50.712ms ~~ 78.3649ms!!!

74---parallel stable test:50.712ms ~~ 78.3649ms!!!

75---parallel stable test:50.712ms ~~ 78.3649ms!!!

76---parallel stable test:50.712ms ~~ 78.3649ms!!!

77---parallel stable test:50.712ms ~~ 78.3649ms!!!

78---parallel stable test:50.712ms ~~ 78.3649ms!!!

79---parallel stable test:50.712ms ~~ 78.3649ms!!!

80---parallel stable test:50.712ms ~~ 78.3649ms!!!

81---parallel stable test:50.712ms ~~ 78.3649ms!!!

82---parallel stable test:50.712ms ~~ 78.3649ms!!!

83---parallel stable test:50.712ms ~~ 78.3649ms!!!

84---parallel stable test:50.712ms ~~ 78.3649ms!!!

85---parallel stable test:50.712ms ~~ 78.3649ms!!!

86---parallel stable test:50.712ms ~~ 78.3649ms!!!

87---parallel stable test:50.712ms ~~ 78.3649ms!!!

88---parallel stable test:50.712ms ~~ 78.3649ms!!!

89---parallel stable test:50.712ms ~~ 78.3649ms!!!

90---parallel stable test:50.712ms ~~ 78.3649ms!!!

91---parallel stable test:50.712ms ~~ 78.3649ms!!!

92---parallel stable test:50.712ms ~~ 78.3649ms!!!

93---parallel stable test:50.712ms ~~ 78.3649ms!!!

94---parallel stable test:50.712ms ~~ 78.3649ms!!!

95---parallel stable test:50.712ms ~~ 78.3649ms!!!

96---parallel stable test:50.712ms ~~ 78.3649ms!!!

97---parallel stable test:50.712ms ~~ 78.3649ms!!!

98---parallel stable test:50.712ms ~~ 78.3649ms!!!

99---parallel stable test:50.712ms ~~ 78.3649ms!!!

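For 1560 images the time ranged from 50.7 ms to 78.4 ms, still clearly unstable. So both serial and parallel runs suffered large timing spikes. I eventually found the cause, and once it was fixed the timings became very stable: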

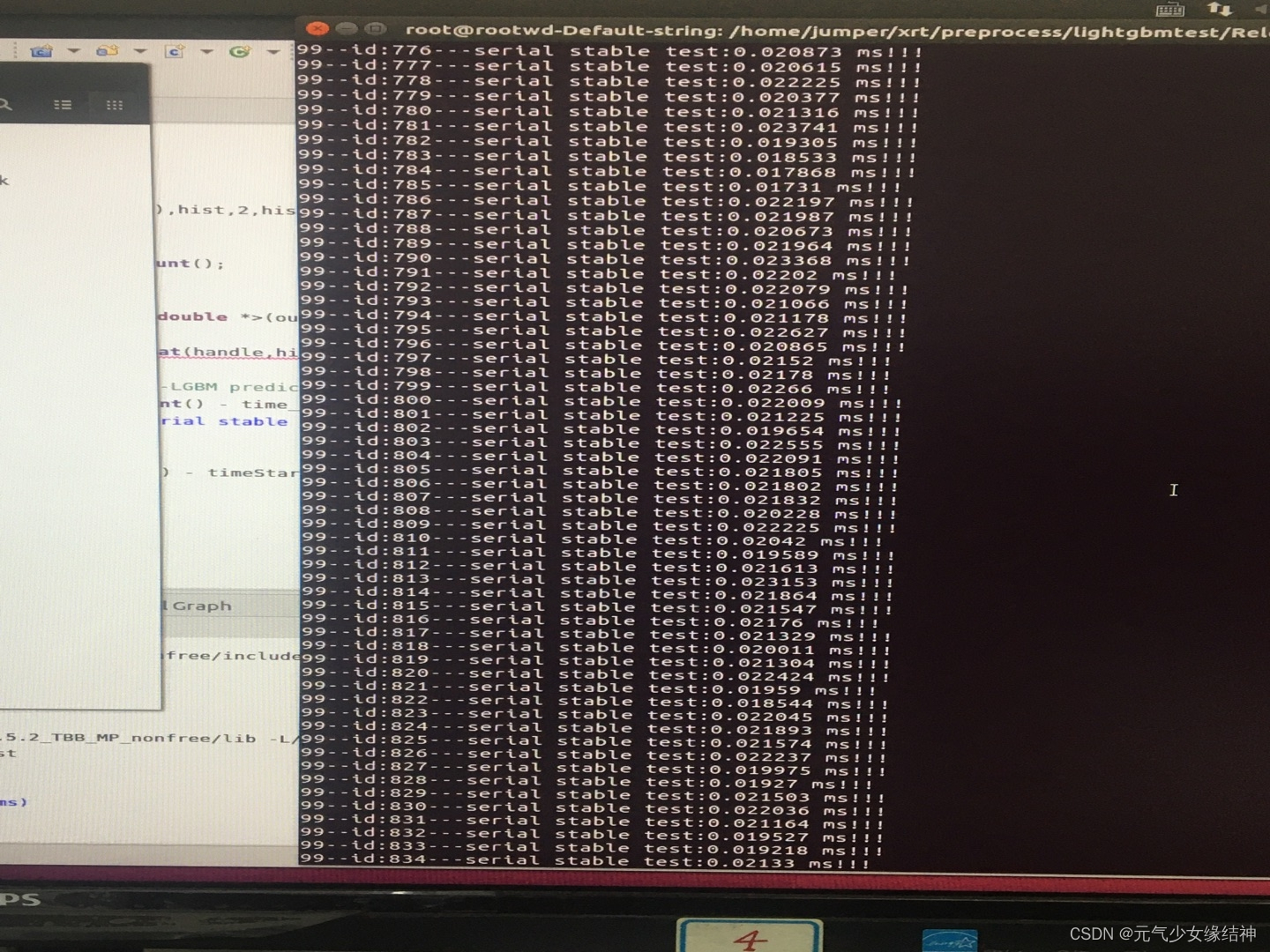

Stability test after fixing the timing spikes, 1560 images per round. Serial test:

serial performance:

load model successfully !

0---serial stable test:41.7413ms ~~ 41.7413ms!!!

1---serial stable test:41.7413ms ~~ 44.2572ms!!!

2---serial stable test:41.7413ms ~~ 44.2572ms!!!

3---serial stable test:41.7413ms ~~ 44.2572ms!!!

4---serial stable test:41.7413ms ~~ 44.2572ms!!!

5---serial stable test:41.7413ms ~~ 44.2572ms!!!

6---serial stable test:41.7413ms ~~ 44.2572ms!!!

7---serial stable test:41.7413ms ~~ 44.2572ms!!!

8---serial stable test:41.7413ms ~~ 44.2572ms!!!

9---serial stable test:41.7413ms ~~ 44.2572ms!!!

10---serial stable test:41.7413ms ~~ 44.2572ms!!!

11---serial stable test:41.7413ms ~~ 44.2572ms!!!

12---serial stable test:41.7413ms ~~ 44.2572ms!!!

13---serial stable test:41.7413ms ~~ 44.2572ms!!!

14---serial stable test:41.7413ms ~~ 44.2572ms!!!

15---serial stable test:41.7413ms ~~ 44.2572ms!!!

16---serial stable test:41.7413ms ~~ 44.2572ms!!!

17---serial stable test:41.7413ms ~~ 44.2572ms!!!

18---serial stable test:41.7277ms ~~ 44.2572ms!!!

19---serial stable test:41.7277ms ~~ 44.2572ms!!!

20---serial stable test:41.7277ms ~~ 44.2572ms!!!

21---serial stable test:41.7277ms ~~ 44.2572ms!!!

22---serial stable test:41.7277ms ~~ 44.2572ms!!!

23---serial stable test:41.7277ms ~~ 44.2572ms!!!

24---serial stable test:41.7277ms ~~ 44.2572ms!!!

25---serial stable test:41.7277ms ~~ 44.2572ms!!!

26---serial stable test:41.7277ms ~~ 44.2572ms!!!

27---serial stable test:41.7277ms ~~ 44.2572ms!!!

28---serial stable test:41.7277ms ~~ 44.2572ms!!!

29---serial stable test:41.7277ms ~~ 44.2572ms!!!

30---serial stable test:41.7277ms ~~ 44.2572ms!!!

31---serial stable test:41.7277ms ~~ 44.2572ms!!!

32---serial stable test:41.7277ms ~~ 44.2572ms!!!

33---serial stable test:41.7277ms ~~ 44.2572ms!!!

34---serial stable test:41.7277ms ~~ 44.2572ms!!!

35---serial stable test:41.7277ms ~~ 44.2572ms!!!

36---serial stable test:41.7277ms ~~ 44.2572ms!!!

37---serial stable test:41.7277ms ~~ 44.2572ms!!!

38---serial stable test:41.7277ms ~~ 44.2572ms!!!

39---serial stable test:41.7277ms ~~ 44.2572ms!!!

40---serial stable test:41.7277ms ~~ 44.2572ms!!!

41---serial stable test:41.7277ms ~~ 44.2572ms!!!

42---serial stable test:41.7277ms ~~ 44.2572ms!!!

43---serial stable test:41.7277ms ~~ 44.2572ms!!!

44---serial stable test:41.7277ms ~~ 44.2572ms!!!

45---serial stable test:41.7277ms ~~ 44.2572ms!!!

46---serial stable test:41.7277ms ~~ 44.2572ms!!!

47---serial stable test:41.7277ms ~~ 44.2572ms!!!

48---serial stable test:41.7277ms ~~ 44.2572ms!!!

49---serial stable test:41.7277ms ~~ 44.2572ms!!!

50---serial stable test:41.7277ms ~~ 44.2572ms!!!

51---serial stable test:41.7277ms ~~ 44.2572ms!!!

52---serial stable test:41.7277ms ~~ 44.2572ms!!!

53---serial stable test:41.7277ms ~~ 44.2572ms!!!

54---serial stable test:41.7277ms ~~ 44.2572ms!!!

55---serial stable test:41.7277ms ~~ 44.2572ms!!!

56---serial stable test:41.7277ms ~~ 44.2572ms!!!

57---serial stable test:41.7277ms ~~ 44.2572ms!!!

58---serial stable test:41.7277ms ~~ 44.2572ms!!!

59---serial stable test:41.7277ms ~~ 44.2572ms!!!

60---serial stable test:41.7277ms ~~ 44.2572ms!!!

61---serial stable test:41.7277ms ~~ 44.2572ms!!!

62---serial stable test:41.7277ms ~~ 44.2572ms!!!

63---serial stable test:41.7277ms ~~ 44.2572ms!!!

64---serial stable test:41.7277ms ~~ 44.2572ms!!!

65---serial stable test:41.7277ms ~~ 44.2572ms!!!

66---serial stable test:41.7277ms ~~ 44.2572ms!!!

67---serial stable test:41.7277ms ~~ 44.2572ms!!!

68---serial stable test:41.7277ms ~~ 44.2572ms!!!

69---serial stable test:41.7277ms ~~ 44.2572ms!!!

70---serial stable test:41.7277ms ~~ 44.2572ms!!!

71---serial stable test:41.7277ms ~~ 44.2572ms!!!

72---serial stable test:41.7277ms ~~ 44.2572ms!!!

73---serial stable test:41.7277ms ~~ 44.2572ms!!!

74---serial stable test:41.7277ms ~~ 44.2572ms!!!

75---serial stable test:41.7277ms ~~ 44.2572ms!!!

76---serial stable test:41.7277ms ~~ 44.2572ms!!!

77---serial stable test:41.7277ms ~~ 44.2572ms!!!

78---serial stable test:41.7277ms ~~ 44.2572ms!!!

79---serial stable test:41.7277ms ~~ 44.2572ms!!!

80---serial stable test:41.7277ms ~~ 46.7984ms!!!

81---serial stable test:41.7277ms ~~ 46.7984ms!!!

82---serial stable test:41.7277ms ~~ 46.7984ms!!!

83---serial stable test:41.7277ms ~~ 46.7984ms!!!

84---serial stable test:41.7277ms ~~ 46.7984ms!!!

85---serial stable test:41.7277ms ~~ 46.7984ms!!!

86---serial stable test:41.7277ms ~~ 46.7984ms!!!

87---serial stable test:41.7277ms ~~ 46.7984ms!!!

88---serial stable test:41.7277ms ~~ 46.7984ms!!!

89---serial stable test:41.7277ms ~~ 46.7984ms!!!

90---serial stable test:41.7277ms ~~ 46.7984ms!!!

91---serial stable test:41.7277ms ~~ 46.7984ms!!!

92---serial stable test:41.7277ms ~~ 46.7984ms!!!

93---serial stable test:41.7277ms ~~ 46.7984ms!!!

94---serial stable test:41.7277ms ~~ 46.7984ms!!!

95---serial stable test:41.7277ms ~~ 46.7984ms!!!

96---serial stable test:41.7277ms ~~ 46.7984ms!!!

97---serial stable test:41.7277ms ~~ 46.7984ms!!!

98---serial stable test:41.7277ms ~~ 46.7984ms!!!

99---serial stable test:41.7277ms ~~ 46.7984ms!!!

Compared with the run before the fix, 1560 images now take only 41.7 ms to 46.8 ms, which is very stable. Parallel test:

parallel performance:

load model successfully !

0---parallel stable test:9.63155ms ~~ 9.63155ms!!!

1---parallel stable test:8.76745ms ~~ 9.63155ms!!!

2---parallel stable test:8.76745ms ~~ 9.63155ms!!!

3---parallel stable test:8.76745ms ~~ 9.63155ms!!!

4---parallel stable test:8.76745ms ~~ 9.63155ms!!!

5---parallel stable test:8.76745ms ~~ 9.63155ms!!!

6---parallel stable test:8.76745ms ~~ 9.63155ms!!!

7---parallel stable test:8.76745ms ~~ 9.63155ms!!!

8---parallel stable test:8.76745ms ~~ 10.7076ms!!!

9---parallel stable test:8.76745ms ~~ 12.2607ms!!!

10---parallel stable test:8.76745ms ~~ 12.2607ms!!!

11---parallel stable test:8.76745ms ~~ 12.2607ms!!!

12---parallel stable test:8.76745ms ~~ 12.926ms!!!

13---parallel stable test:8.76745ms ~~ 12.926ms!!!

14---parallel stable test:8.76745ms ~~ 12.926ms!!!

15---parallel stable test:8.76745ms ~~ 14.8526ms!!!

16---parallel stable test:8.76745ms ~~ 14.8526ms!!!

17---parallel stable test:8.76745ms ~~ 14.8526ms!!!

18---parallel stable test:8.76745ms ~~ 14.8526ms!!!

19---parallel stable test:8.76745ms ~~ 14.8526ms!!!

20---parallel stable test:8.76745ms ~~ 14.8526ms!!!

21---parallel stable test:8.76745ms ~~ 14.8526ms!!!

22---parallel stable test:8.76745ms ~~ 14.8526ms!!!

23---parallel stable test:8.76745ms ~~ 14.8526ms!!!

24---parallel stable test:8.76745ms ~~ 14.8526ms!!!

25---parallel stable test:8.76745ms ~~ 14.8526ms!!!

26---parallel stable test:8.76745ms ~~ 14.8526ms!!!

27---parallel stable test:8.76745ms ~~ 14.8526ms!!!

28---parallel stable test:8.76745ms ~~ 14.8526ms!!!

29---parallel stable test:8.76745ms ~~ 14.8526ms!!!

30---parallel stable test:8.76745ms ~~ 14.8526ms!!!

31---parallel stable test:8.76745ms ~~ 14.8526ms!!!

32---parallel stable test:8.76745ms ~~ 14.8526ms!!!

33---parallel stable test:8.76745ms ~~ 14.8526ms!!!

34---parallel stable test:8.76745ms ~~ 14.8526ms!!!

35---parallel stable test:8.76745ms ~~ 14.8526ms!!!

36---parallel stable test:8.76745ms ~~ 14.8526ms!!!

37---parallel stable test:8.76745ms ~~ 14.8526ms!!!

38---parallel stable test:8.76745ms ~~ 14.8526ms!!!

39---parallel stable test:8.76745ms ~~ 14.8526ms!!!

40---parallel stable test:8.76745ms ~~ 14.8526ms!!!

41---parallel stable test:8.76745ms ~~ 14.8526ms!!!

42---parallel stable test:8.76745ms ~~ 14.8526ms!!!

43---parallel stable test:8.76745ms ~~ 14.8526ms!!!

44---parallel stable test:8.76745ms ~~ 14.8526ms!!!

45---parallel stable test:8.76745ms ~~ 14.8526ms!!!

46---parallel stable test:8.76745ms ~~ 14.8526ms!!!

47---parallel stable test:8.76745ms ~~ 14.8526ms!!!

48---parallel stable test:8.76745ms ~~ 14.8526ms!!!

49---parallel stable test:8.76745ms ~~ 14.8526ms!!!

50---parallel stable test:8.76745ms ~~ 14.8526ms!!!

51---parallel stable test:8.76745ms ~~ 14.8526ms!!!

52---parallel stable test:8.76745ms ~~ 14.8526ms!!!

53---parallel stable test:8.76745ms ~~ 14.8526ms!!!

54---parallel stable test:8.76745ms ~~ 14.8526ms!!!

55---parallel stable test:8.76745ms ~~ 14.8526ms!!!

56---parallel stable test:8.76745ms ~~ 14.8526ms!!!

57---parallel stable test:8.76745ms ~~ 14.8526ms!!!

58---parallel stable test:8.76745ms ~~ 14.8526ms!!!

59---parallel stable test:8.76745ms ~~ 14.8526ms!!!

60---parallel stable test:8.76745ms ~~ 14.8526ms!!!

61---parallel stable test:8.76745ms ~~ 14.8526ms!!!

62---parallel stable test:8.76745ms ~~ 14.8526ms!!!

63---parallel stable test:8.76745ms ~~ 14.8526ms!!!

64---parallel stable test:8.76745ms ~~ 14.8526ms!!!

65---parallel stable test:8.76745ms ~~ 14.8526ms!!!

66---parallel stable test:8.76745ms ~~ 14.8526ms!!!

67---parallel stable test:8.76745ms ~~ 14.8526ms!!!

68---parallel stable test:8.76745ms ~~ 14.8526ms!!!

69---parallel stable test:8.76745ms ~~ 14.8526ms!!!

70---parallel stable test:8.76745ms ~~ 14.8526ms!!!

71---parallel stable test:8.76745ms ~~ 14.8526ms!!!

72---parallel stable test:8.76745ms ~~ 14.8526ms!!!

73---parallel stable test:8.5113ms ~~ 14.8526ms!!!

74---parallel stable test:8.5113ms ~~ 14.8526ms!!!

75---parallel stable test:8.5113ms ~~ 14.8526ms!!!

76---parallel stable test:8.5113ms ~~ 14.8526ms!!!

77---parallel stable test:8.5113ms ~~ 14.8526ms!!!

78---parallel stable test:8.5113ms ~~ 14.8526ms!!!

79---parallel stable test:8.5113ms ~~ 14.8526ms!!!

80---parallel stable test:8.5113ms ~~ 14.8526ms!!!

81---parallel stable test:8.5113ms ~~ 14.8526ms!!!

82---parallel stable test:8.5113ms ~~ 14.8526ms!!!

83---parallel stable test:8.5113ms ~~ 14.8526ms!!!

84---parallel stable test:8.5113ms ~~ 14.8526ms!!!

85---parallel stable test:8.5113ms ~~ 14.8526ms!!!

86---parallel stable test:8.5113ms ~~ 14.8526ms!!!

87---parallel stable test:8.5113ms ~~ 14.8526ms!!!

88---parallel stable test:8.5113ms ~~ 14.8526ms!!!

89---parallel stable test:8.5113ms ~~ 14.8526ms!!!

90---parallel stable test:8.5113ms ~~ 14.8526ms!!!

91---parallel stable test:8.5113ms ~~ 14.8526ms!!!

92---parallel stable test:8.5113ms ~~ 14.8526ms!!!

93---parallel stable test:8.5113ms ~~ 14.8526ms!!!

94---parallel stable test:8.5113ms ~~ 14.8526ms!!!

95---parallel stable test:8.5113ms ~~ 14.8526ms!!!

96---parallel stable test:8.5113ms ~~ 14.8526ms!!!

97---parallel stable test:8.47865ms ~~ 14.8526ms!!!

98---parallel stable test:8.47865ms ~~ 14.8526ms!!!

99---parallel stable test:8.47865ms ~~ 14.8526ms!!!

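Compared with the run before the fix, 1560 images now take between 8.5 ms and 14.9 ms, which is also stable. Same code, same 1560 images, same model: the timing spikes clearly have to be eliminated, and once they are, this approach is usable in industrial applications.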

The way to get rid of the `[LightGBM] [Warning] Unknown parameter: None` warning is also in the download link above:

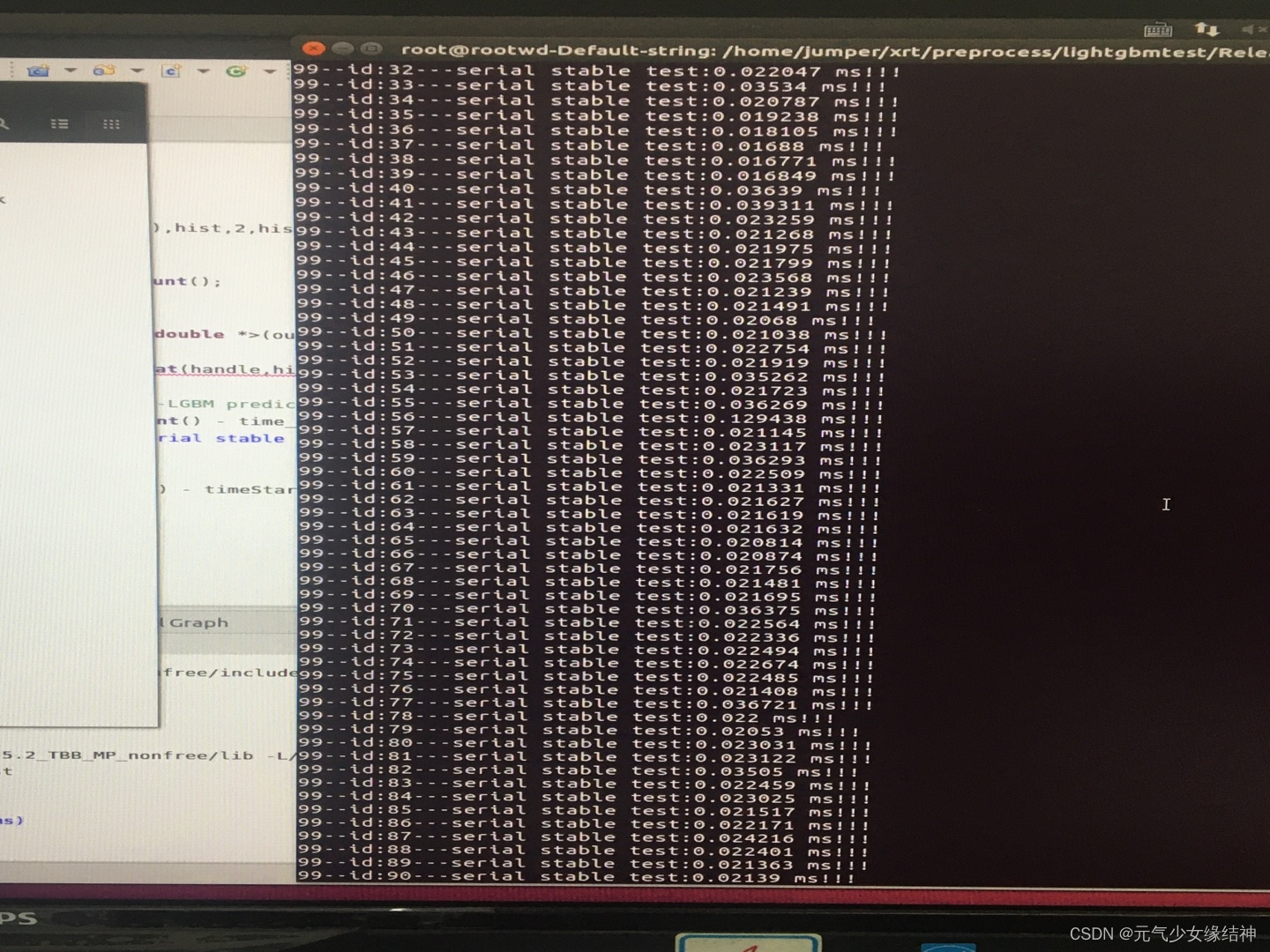

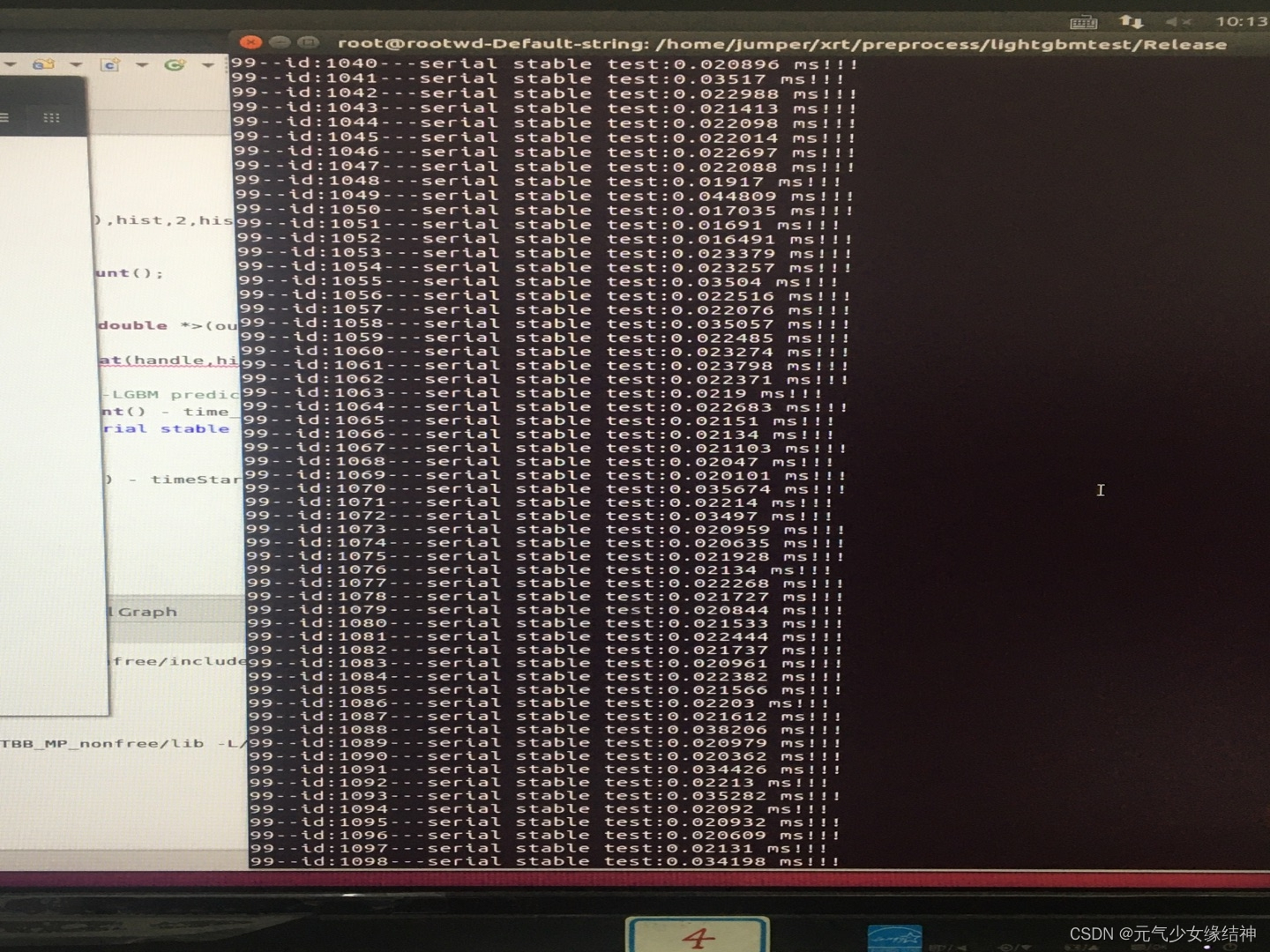

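After the timing-spike fix, the time for a single image is also extremely stable, always around 0.0x ms. The test code is in the same download link.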
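As a rough cross-check of the 0.0x ms figure, dividing the serial round totals above by the 1560 images gives the average time per image. These are averages derived from the totals, not the per-image timings in the screenshots:

$$
\frac{41.7277\ \text{ms}}{1560} \approx 0.0267\ \text{ms}, \qquad \frac{46.7984\ \text{ms}}{1560} \approx 0.0300\ \text{ms}
$$

for the best and worst serial rounds after the fix, versus

$$
\frac{71.4656\ \text{ms}}{1560} \approx 0.0458\ \text{ms}, \qquad \frac{400.697\ \text{ms}}{1560} \approx 0.2569\ \text{ms}
$$

before it.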

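~~~~~~~~~~~~~~~~~~~~~~~~~Divider~~~~~~batch predict~~~~~~~~~~~~~~~~~~~~~~~~~~~

I also came across a post about a multithreading problem: Lightgbm多线程卡死问题定位 | 逸思杂陈 (locating a LightGBM multithreading hang).

I don't know whether LightGBM really has this problem.

I also found https://github.com/microsoft/LightGBM/issues/1534: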

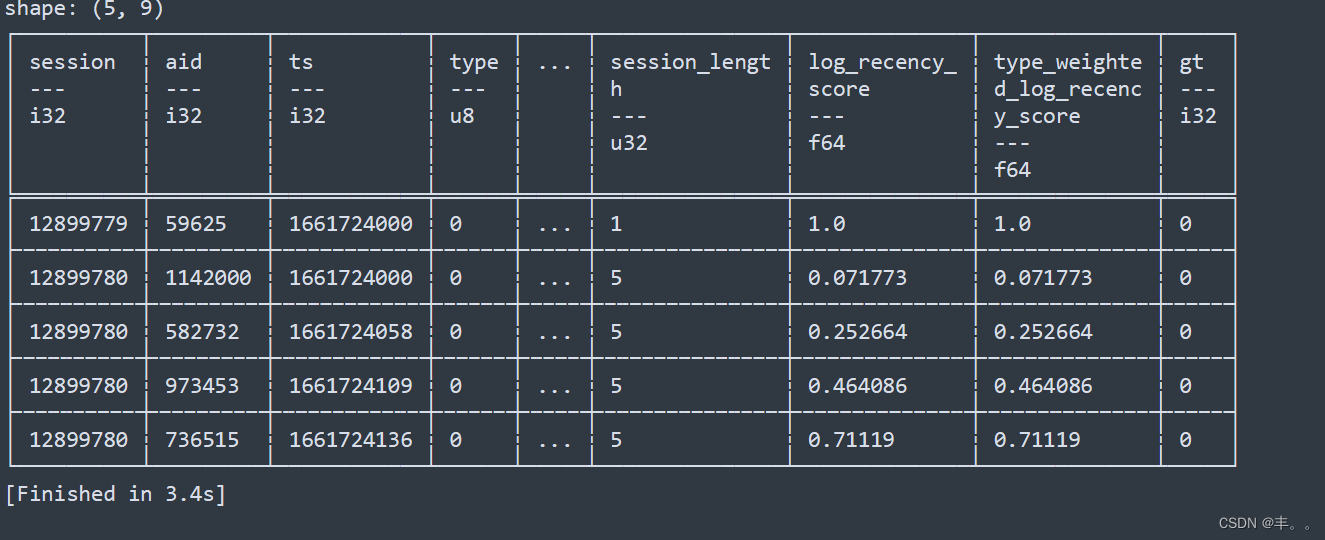

Does this mean that when predict receives a single feature row it uses only one CPU core, while with multiple rows (batch predict) it uses several cores? So I tried predicting several samples at once:

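```cpp
#include <LightGBM/c_api.h>
#include <opencv2/opencv.hpp>
#include <cstdint>
#include <cstdio>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

int main()
{
    char srcimg[400] = {0};
    int numiterations = 1;
    BoosterHandle handle;
    int set = LGBM_BoosterCreateFromModelfile("/home/jumper/imgs/lgbmmodel/lbgm_zhu_1.model", &numiterations, &handle);
    if (set == 0) {
        std::cout << "load model successfully ! " << std::endl;
    } else {
        std::cerr << LGBM_GetLastError() << std::endl;
        return 1;
    }

    int channels[] = {1, 2};
    int histsize[] = {8, 8};
    float ghistrange[] = {0, 256};
    float rhistrange[] = {0, 256};
    const float* histsranges[] = {ghistrange, rhistrange};

    const int samplesnum = 11;  // 2228;
    const int featuredim = 64;
    Mat paradatas = Mat::zeros(samplesnum, featuredim, CV_32FC1);  // one row-major row per sample
    for (int index = 0; index != samplesnum; index++) {
        sprintf(srcimg, "/home/jumper/imgs/cnntmp/doctorzhu_fluorite/orescnn/%d.png", index);
        Mat img = imread(srcimg);
        if (img.empty()) continue;  // missing image: row stays all zeros
        Mat hist;
        cv::calcHist(&img, 1, channels, Mat(), hist, 2, histsize, histsranges);
        for (int tmpi = 0; tmpi != 64; tmpi++) {
            // NOTE: hist is CV_32F but hist.data is a uchar*, so hist.data[tmpi] is the tmpi-th
            // *byte* of the histogram (bytes of floats 0..15), not the tmpi-th float value.
            // hist.ptr<float>()[tmpi] would copy the actual bin count instead.
            paradatas.ptr<float>(index)[tmpi] = hist.data[tmpi];
            cout << paradatas.ptr<float>(index)[tmpi] << " ";
        }
        cout << endl;
    }
    std::cout << "created data..." << std::endl;

    std::vector<double> out(samplesnum, 0);
    int64_t out_len = 0;
    int res = LGBM_BoosterPredictForMat(handle, paradatas.data, C_API_DTYPE_FLOAT32,
                                        samplesnum, featuredim, 1, C_API_PREDICT_NORMAL,
                                        0, -1, "None", &out_len, out.data());
    if (res != 0) {
        std::cerr << LGBM_GetLastError() << std::endl;
        return 1;
    }
    for (int id = 0; id != out_len; id++) {
        cout << "sample id: " << id << " score:" << out[id] << endl;
    }
    LGBM_BoosterFree(handle);
    return 0;
}
```

Output: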

load model successfully !

0 0 8 67 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 128 21 68 0 0 26 67 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 64 64 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 215 67 0 0 174 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 128 63 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 128 166 68 0 0 161 67 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 224 64 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 128 236 67 0 0 48 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 124 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 203 67 0 0 64 65 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 8 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 241 67 0 0 36 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 128 222 67 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 192 13 68 0 0 218 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 192 41 68 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 32 153 68 0 0 198 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 186 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 192 37 68 0 0 60 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 162 66 0 0 248 65 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

created data...

[LightGBM] [Warning] Unknown parameter: None

sample id: 0 score:0.847249

sample id: 1 score:0.820929

sample id: 2 score:0.772399

sample id: 3 score:0.772399

sample id: 4 score:0.820929

sample id: 5 score:0.820929

sample id: 6 score:0.845796

sample id: 7 score:0.772399

sample id: 8 score:0.844833

sample id: 9 score:0.400716

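sample id: 10 score:0.820929

Why do these probabilities differ from the single-sample predict results? I printed the features and the printed values are clearly identical, yet the results differ. The batch results are wrong: why would batch predict be less accurate than single-sample predict? The single-sample predict output is: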

load model successfully !

0 0 8 67 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 128 21 68 0 0 26 67 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:0 ---LGBM row predict result is: 0.964677

0 0 64 64 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 215 67 0 0 174 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:1 ---LGBM row predict result is: 0.877513

0 0 128 63 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 128 166 68 0 0 161 67 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:2 ---LGBM row predict result is: 0.973227

0 0 224 64 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 128 236 67 0 0 48 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:3 ---LGBM row predict result is: 0.895759

0 0 124 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 203 67 0 0 64 65 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:4 ---LGBM row predict result is: 0.945096

0 0 8 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 241 67 0 0 36 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:5 ---LGBM row predict result is: 0.792787

0 128 222 67 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 192 13 68 0 0 218 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:6 ---LGBM row predict result is: 0.902854

0 192 41 68 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 32 153 68 0 0 198 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:7 ---LGBM row predict result is: 0.965496

0 0 186 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 192 37 68 0 0 60 66 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:8 ---LGBM row predict result is: 0.92893

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:9 ---LGBM row predict result is: 0.110013

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 162 66 0 0 248 65 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[LightGBM] [Warning] Unknown parameter: None

image id:10 ---LGBM row predict result is: 0.903951

These are the correct probabilities. As you can see, single sample and batch sample predict give different probabilities. Why?
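The feature rows printed in both logs look like the raw bytes of the float32 histogram rather than the float values themselves: `0 0 128 63` is the little-endian IEEE-754 encoding of 1.0f (0x3F800000), and `0 0 8 67` is 136.0f. If the rows were printed by walking `hist.data` (a `uchar*` in OpenCV) for 64 steps, each row shows only the first 16 of the 64 float bins of the 8x8 histogram, so matching dumps do not by themselves prove that the full feature vectors match. A minimal sketch for decoding such a dump back into floats, assuming little-endian byte order; `DecodeFloat32Dump` is a hypothetical helper, not part of OpenCV or LightGBM:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: turn a printed byte dump back into float32 values,
// assuming the bytes were printed in little-endian order (x86/x64).
std::vector<float> DecodeFloat32Dump(const std::vector<int>& bytes) {
    assert(bytes.size() % 4 == 0);  // every float occupies exactly 4 bytes
    std::vector<float> values;
    values.reserve(bytes.size() / 4);
    for (std::size_t i = 0; i < bytes.size(); i += 4) {
        // Assemble the 32-bit pattern: byte 0 is the least significant byte.
        std::uint32_t bits = 0;
        for (int k = 0; k < 4; ++k) {
            bits |= static_cast<std::uint32_t>(bytes[i + k] & 0xFF) << (8 * k);
        }
        float f;
        std::memcpy(&f, &bits, sizeof f);  // reinterpret the bits without UB
        values.push_back(f);
    }
    return values;
}
```

For example, the non-zero groups of the image id:0 row (`0 0 8 67`, `0 128 21 68`, `0 0 26 67`) decode to 136, 598 and 154. Printing all 64 float values directly (for example through `hist.ptr<float>()` on the continuous histogram Mat) would make the single/batch comparison conclusive.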

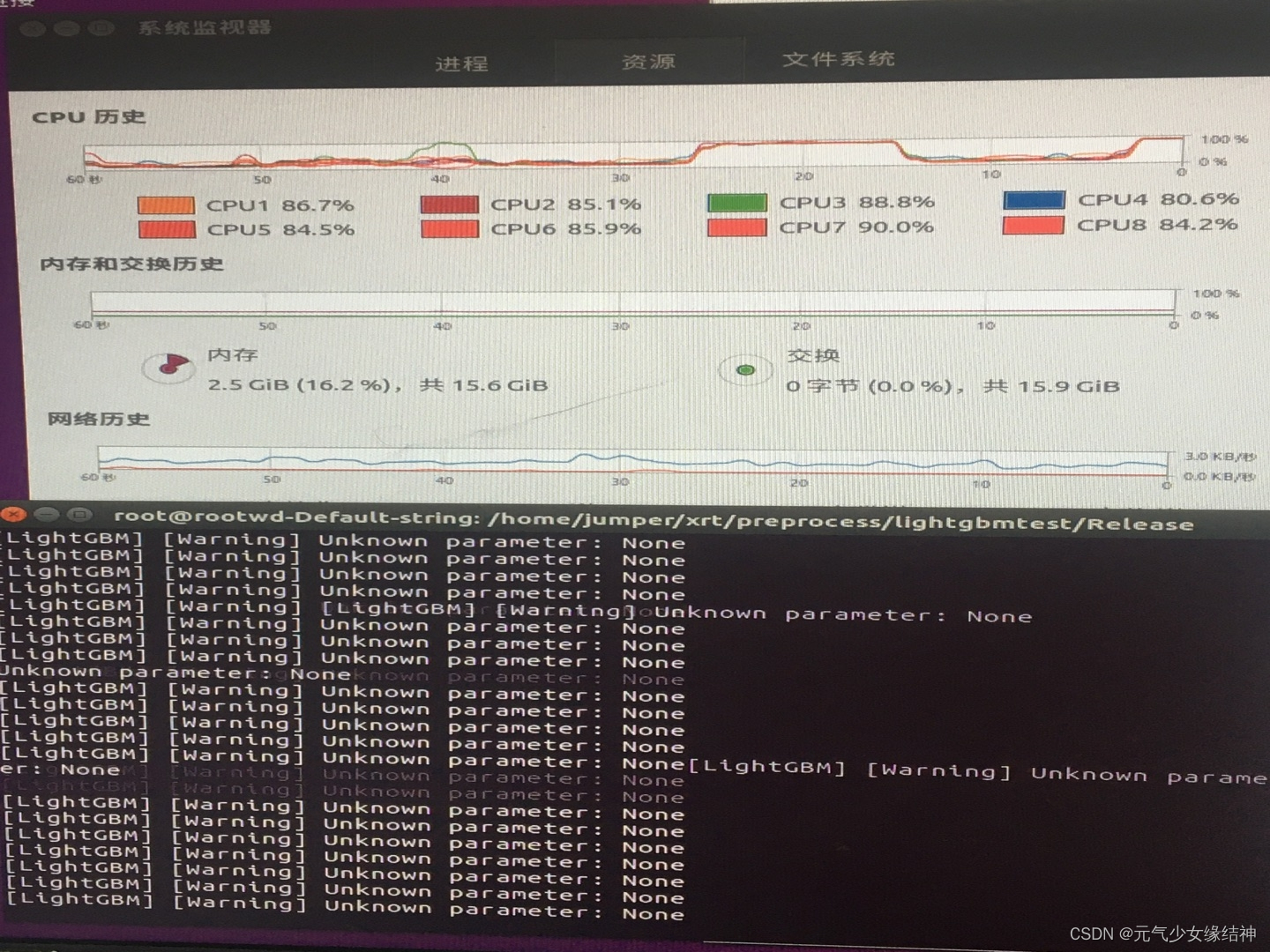

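Setting the score discrepancy aside, I let it run continuously to see what happens, and found that every core really does hit nearly 100%!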

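This shows that batch predict is parallelized automatically once the batch is large enough. The earlier single sample predict-serial and single sample predict-parallel runs were also automatically parallel, with all cores busy, because the predict function uses OpenMP (omp) internally.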
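Related to the threading and to the `[LightGBM] [Warning] Unknown parameter: None` lines in the logs: the `parameter` argument of the predict calls is read as space-separated `key=value` pairs, so passing the string "None" most likely makes LightGBM treat `None` as a parameter name it does not know. Passing an empty string "" should avoid the warning. The same argument can carry `num_threads` (LightGBM's thread-count parameter, where 0 means the OpenMP default), which is one way to check how much of the CPU load comes from LightGBM's own parallelism. A minimal sketch; `MakePredictParams` is a hypothetical helper, not part of LightGBM:

```cpp
#include <cassert>
#include <string>

// Hypothetical helper (not part of LightGBM): build the `parameter` string
// passed to LGBM_BoosterPredictForMat / LGBM_BoosterPredictForMatSingleRow.
// LightGBM expects space-separated "key=value" pairs; an empty string means
// "no extra parameters" and does not produce an "Unknown parameter" warning.
std::string MakePredictParams(int num_threads) {
    assert(num_threads >= 0);
    if (num_threads == 0) {
        return "";  // keep the default: OpenMP chooses the thread count
    }
    return "num_threads=" + std::to_string(num_threads);
}
```

For example, passing `MakePredictParams(1).c_str()` instead of "None" should run prediction on a single thread, which makes it easy to compare timings and scores against the default.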

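As for the lower accuracy of batch predict, I don't know whether LightGBM's internal C++ parallelism causes it, and I haven't found any useful material so far. If you find out, please let me know. For now, let's play it safe and use single predict as in my linked document, since its results match Python.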
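One hedged observation: LightGBM scores each row of a batch independently, so the thread count should not change an individual row's score, and the buffer layout is worth ruling out first. In the batch log, samples 1, 4, 5 and 10 all get 0.820929 (and 2, 3, 7 all get 0.772399) even though their single-sample scores differ, which is what you would expect if rows were read from the wrong memory. The batch code isn't shown here, so this is only a sketch under assumptions: each sample is a 64-bin CV_32F histogram as in the single-sample code, and `PackRowMajor` is a hypothetical helper that copies the samples into one contiguous row-major float buffer matching the `nrow`, `ncol = 64` and `is_row_major = 1` arguments of `LGBM_BoosterPredictForMat`. For `C_API_PREDICT_NORMAL` on a binary model, the output buffer must likewise hold `nrow` doubles.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: copy per-sample feature vectors (e.g. the 64 floats of
// each 8x8 CV_32F histogram) into one contiguous row-major buffer.
// Row r occupies elements [r * ncol, (r + 1) * ncol).
// With OpenCV, a continuous CV_32F histogram can be turned into a row with
// std::vector<float>(hist.begin<float>(), hist.end<float>()).
std::vector<float> PackRowMajor(const std::vector<std::vector<float>>& samples,
                                std::size_t ncol) {
    std::vector<float> buffer;
    buffer.reserve(samples.size() * ncol);
    for (const std::vector<float>& row : samples) {
        assert(row.size() == ncol);  // every row must have exactly ncol floats
        buffer.insert(buffer.end(), row.begin(), row.end());
    }
    return buffer;
}

// Intended use with the C API (not compiled here, needs LightGBM):
//   std::vector<float> data = PackRowMajor(samples, 64);
//   std::vector<double> out(samples.size());  // one score per row
//   int64_t out_len = 0;
//   LGBM_BoosterPredictForMat(handle, data.data(), C_API_DTYPE_FLOAT32,
//                             static_cast<int32_t>(samples.size()), 64,
//                             /*is_row_major=*/1, C_API_PREDICT_NORMAL,
//                             /*start_iteration=*/0, /*num_iteration=*/-1,
//                             "", &out_len, out.data());
//   // out_len should equal samples.size(); out[r] is the score of row r.
```

If the batch scores from such a buffer match the single-sample scores row by row, the discrepancy came from the input layout rather than from LightGBM's parallelism.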

single sample predict-serial:

single sample predict-parallel:

At this point, all the problems with using LightGBM from C++ on Ubuntu are solved. I have used it in a project for a while without any issues.

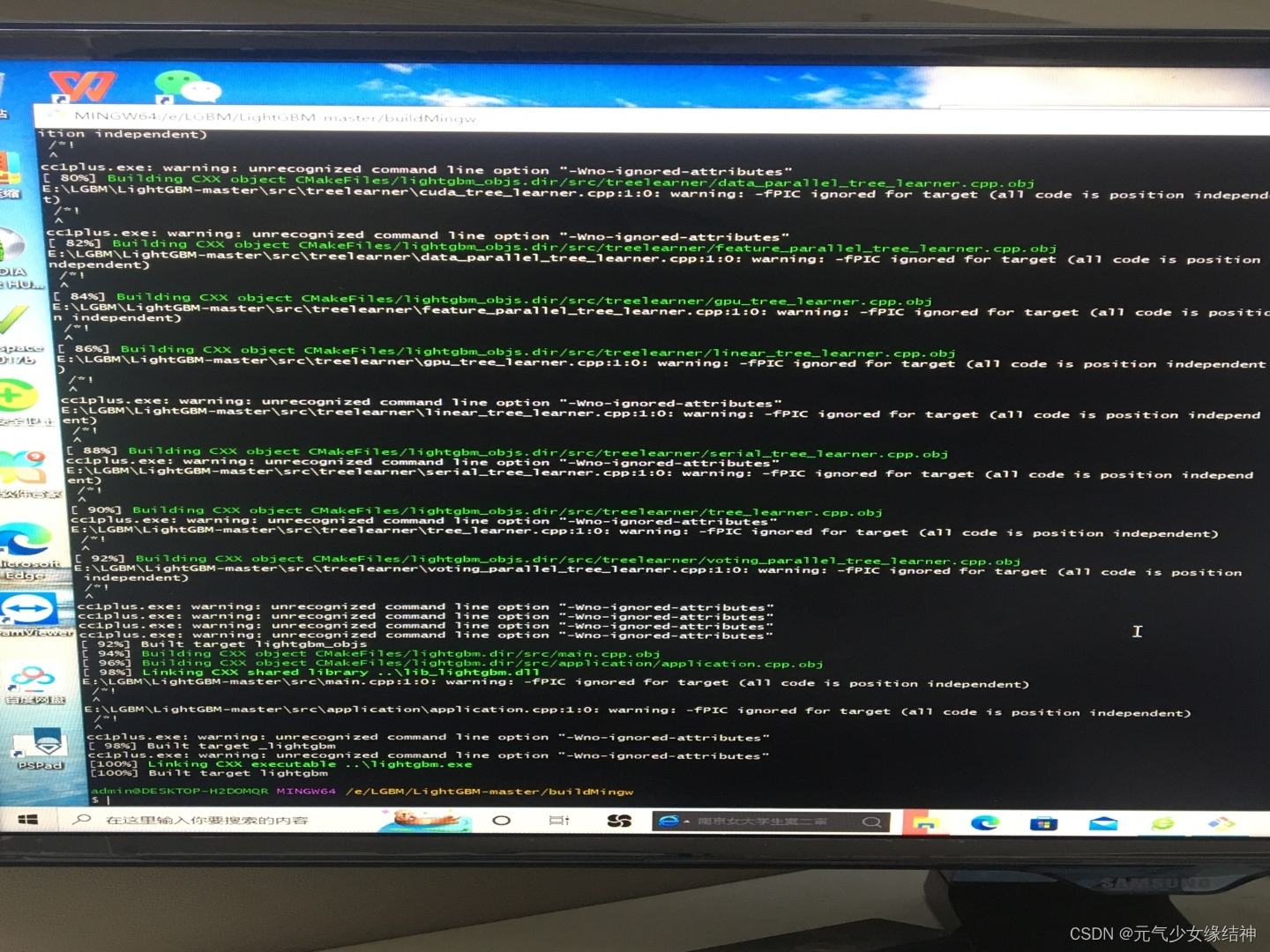

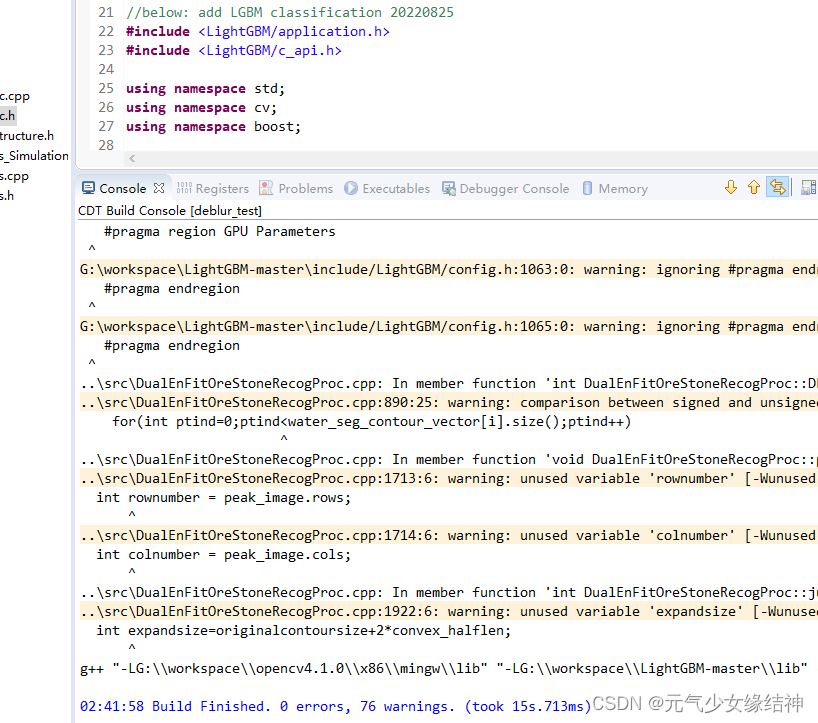

~~~~~~~~~~~~~~~~~~~~~~lightgbm c++ on windows~~~~~~~~~~~~~~

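Today I also installed it on another Windows 10 machine, following the steps in the Installation Guide of the LightGBM 3.3.2.99 documentation. Remember to add git to the environment variables (PATH) as well: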

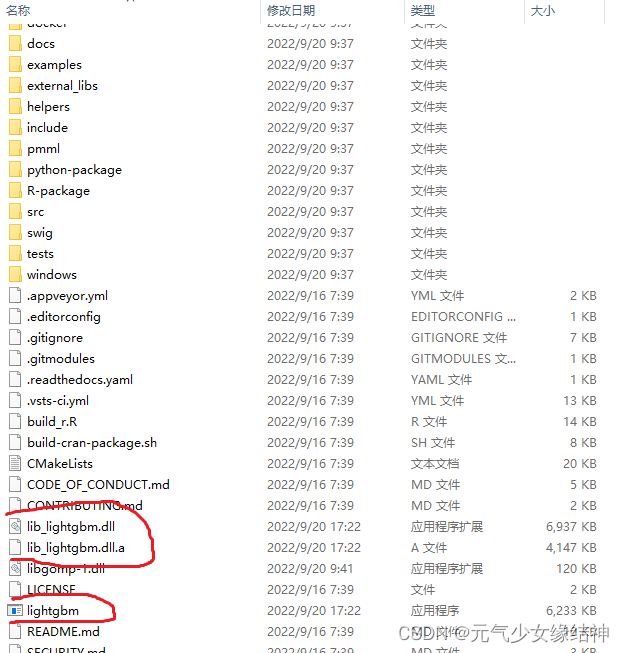

You can see the generated library and the lightgbm executable. Remember to add this path to the environment variables as well:

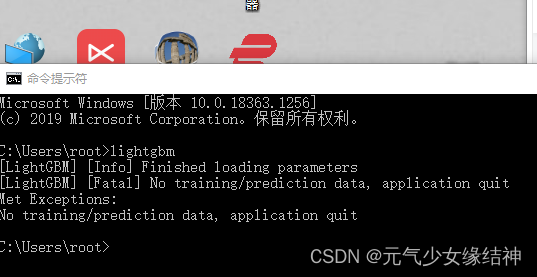

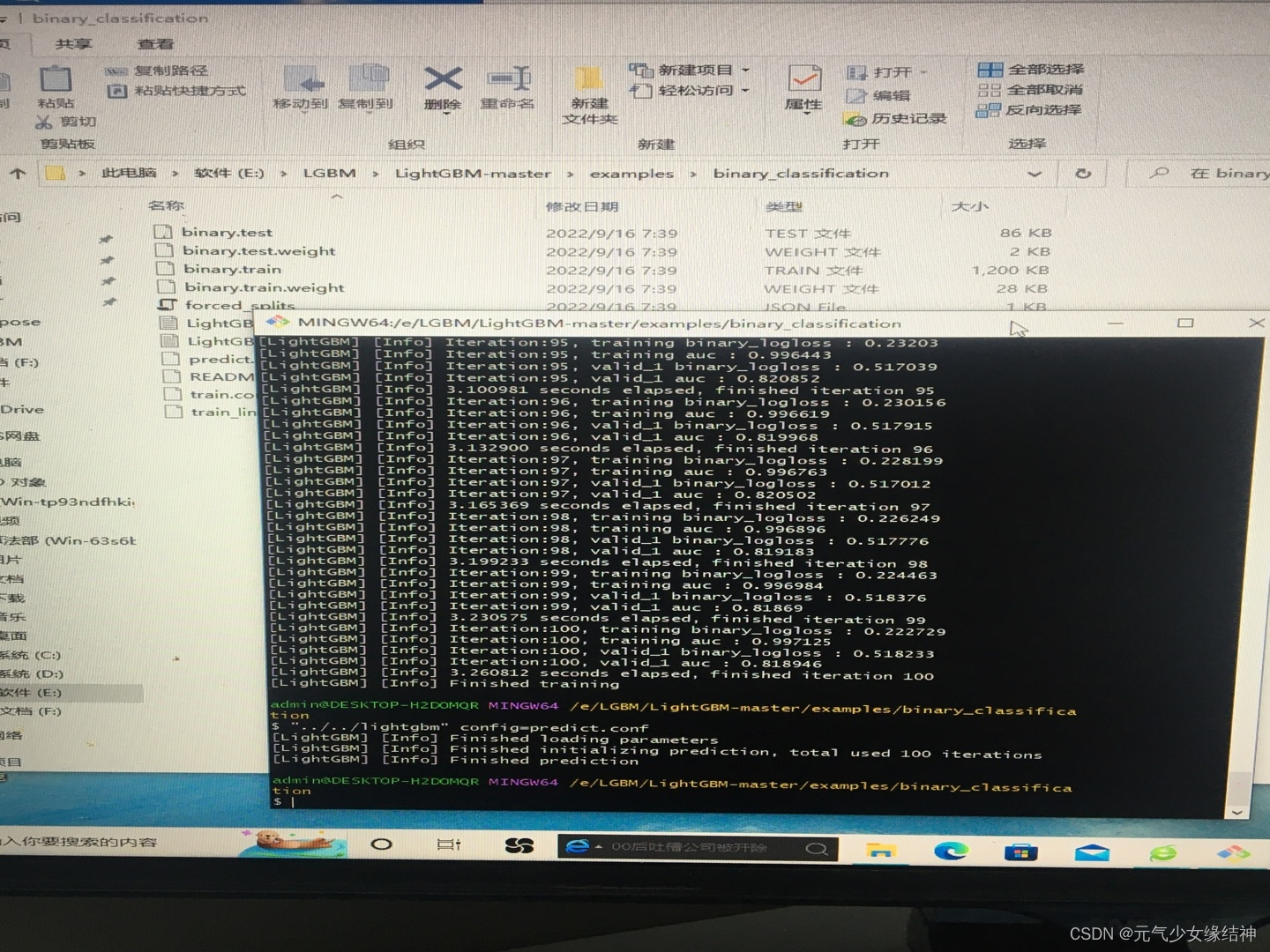

Then I ran the example code following the steps in the Readme and got the correct result:

But when I tested with lib_lightgbm.dll, the following errors appeared:

G:\workspace\lightGBM\include/LightGBM/utils/../../../external_libs/fmt/include/fmt/format-inl.h: In function 'void fmt::v8::detail::print(FILE*, fmt::v8::string_view)':

G:\workspace\lightGBM\include/LightGBM/utils/../../../external_libs/fmt/include/fmt/format-inl.h:2603:22: error: '_fileno' was not declared in this scope

`auto fd = _fileno(f);`

In file included from G:\workspace\lightGBM\include/LightGBM/config.h:16:0, from G:\workspace\lightGBM\include/LightGBM/application.h:8, from ..\src\DualEnFitOreStoneRecogProc.h:22, from ..\src\XRT_PC_Stream_Mathprocess.h:11, from ..\src\XRT_PC_Stream_Mathprocess.cpp:25:

G:\workspace\lightGBM\include/LightGBM/utils/common.h: In member function 'T LightGBM::Common::__StringToTHelper<T, true>::operator()(const string&) const':

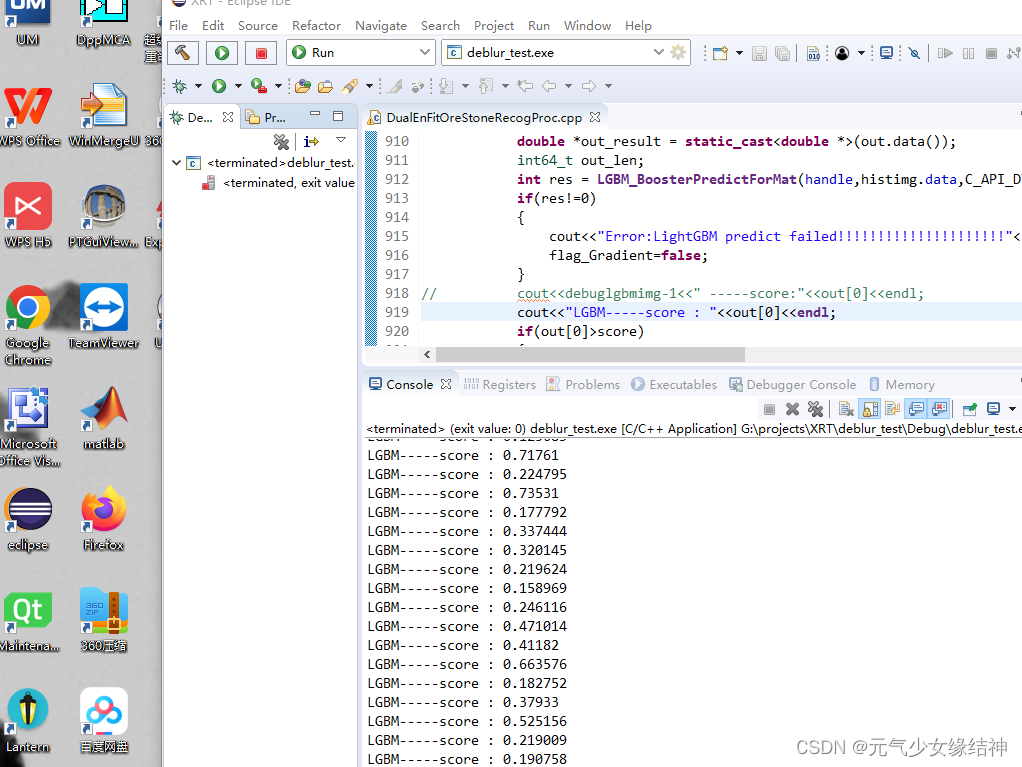

G:\workspace\lightGBM\include/LightGBM/utils/common.h:426:27: error: 'stod' is not a member of 'std'

`return static_cast<T>(std::stod(str));`

The fix is also in my linked document. With it, LightGBM now compiles without problems under C++ on Windows:

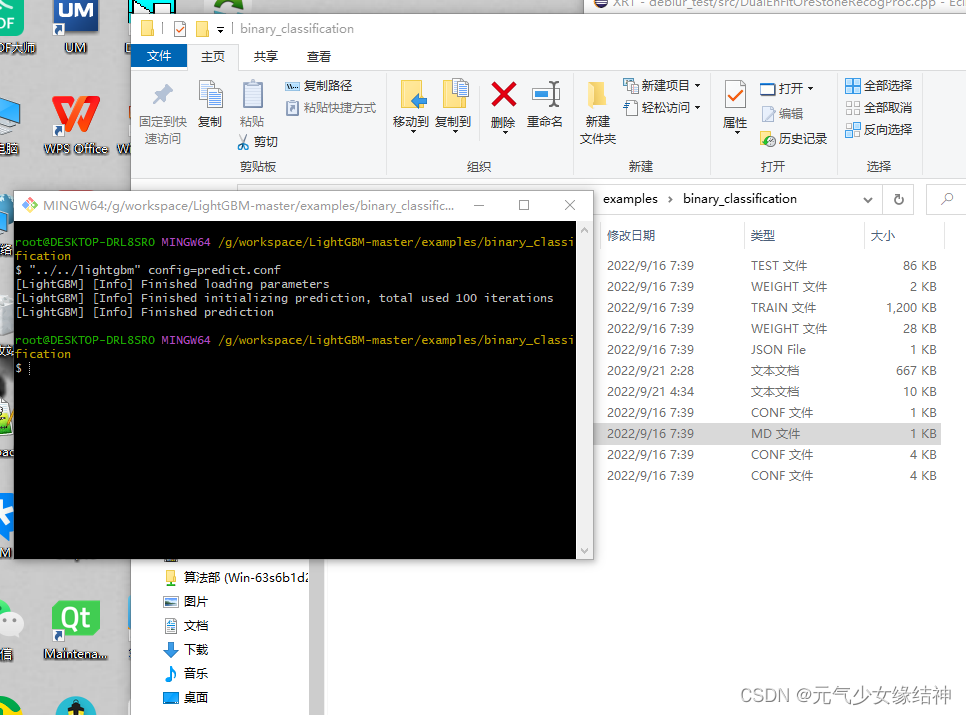

Running it works too, as shown below:

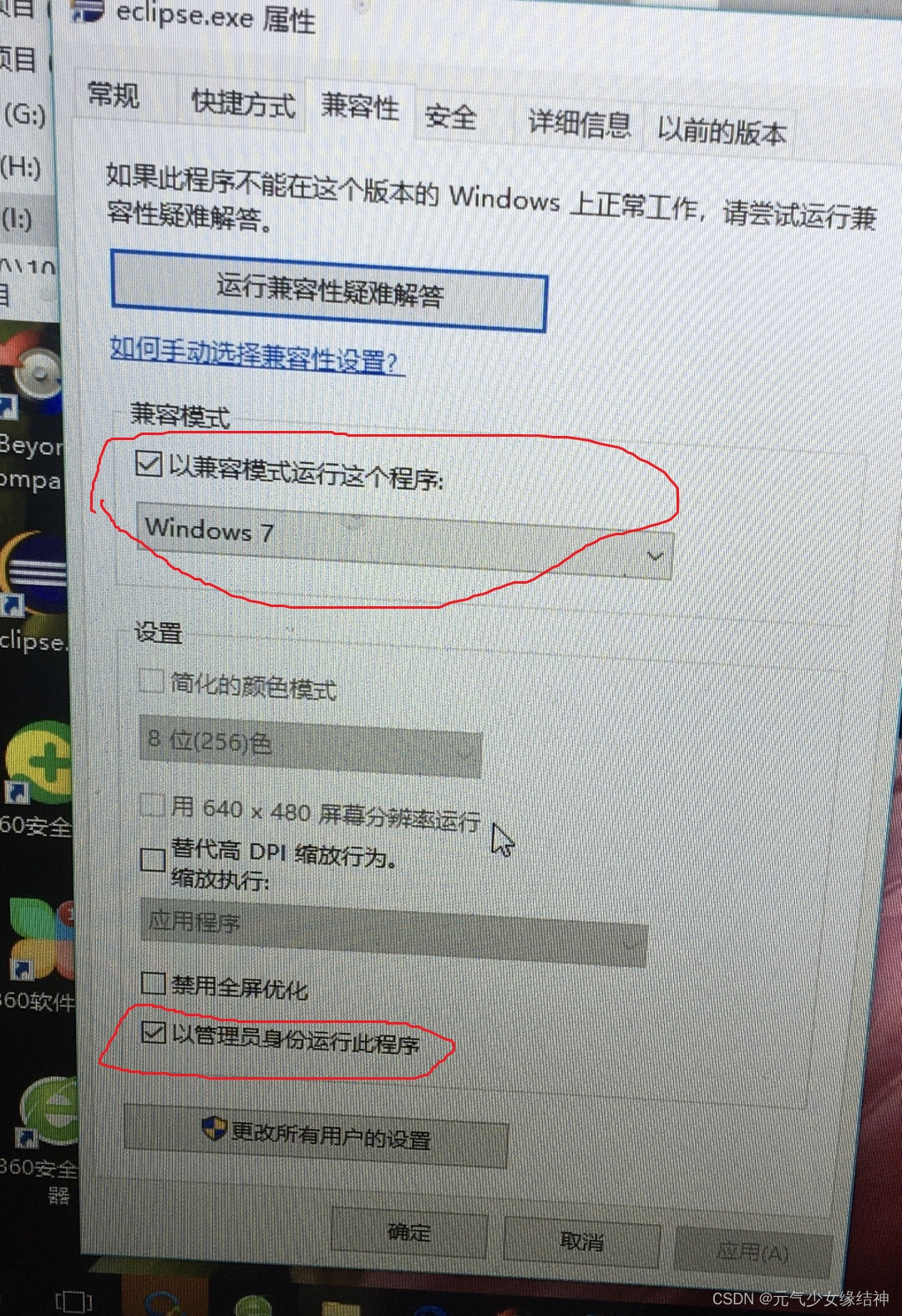
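The author's own fix for the two Windows compile errors above is in the linked document and is not reproduced here. As general background (an assumption about typical MinGW setups, not a diagnosis of this machine): `_fileno` is a Microsoft extension that MinGW's `<stdio.h>` commonly hides when `__STRICT_ANSI__` is defined, which `-std=c++11` does and `-std=gnu++11` does not, and `'stod' is not a member of 'std'` is a known symptom of some older MinGW GCC builds. A small probe compiled with the same compiler and flags as the project can show which case applies; both functions are hypothetical helpers:

```cpp
#include <cassert>
#include <string>

// Hypothetical probe (not part of LightGBM or fmt). If std::stod is missing
// from this toolchain, this file fails to compile with the same
// "'stod' is not a member of 'std'" error, but in a tiny file instead of
// deep inside LightGBM/utils/common.h.
double ParseWithStod(const std::string& text) {
    assert(!text.empty());  // std::stod throws std::invalid_argument on ""
    return std::stod(text);
}

// Returns true when the compiler runs in strict ISO mode (e.g. -std=c++11
// rather than -std=gnu++11); MinGW headers may then hide extensions such
// as _fileno.
bool IsStrictAnsi() {
#ifdef __STRICT_ANSI__
    return true;
#else
    return false;
#endif
}
```

If `IsStrictAnsi()` returns true under the project's flags, the `_fileno` error is likely related to strict mode; if the probe does not compile at all, the toolchain itself lacks `std::stod`.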

If you move the program to another computer and run into the following problem:

eclipse exit value -1073741701

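The application was unable to start correctly (0xc000007b). Click OK to close the application.

In that case, first close the software, then set its properties as shown below (this only needs to be done once). That solves it (thanks to an expert for the tip):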

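At this point, the LightGBM C++ dynamic library has been compiled on both Windows and Ubuntu, the official examples pass, and my own project has been tested. Everything works normally.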
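A note on the migration error described above: the Eclipse exit value -1073741701 and the dialog's 0xc000007b are the same number. Windows exit codes are unsigned 32-bit values, and -1073741701 is 0xC000007B read as a signed int. 0xC000007B is the NTSTATUS code STATUS_INVALID_IMAGE_FORMAT, which is commonly reported when a program loads a DLL built for a different architecture (for example a 32-bit executable picking up a 64-bit lib_lightgbm.dll, or the reverse); that is a general observation, not confirmed for this case. A minimal sketch of the conversion, with hypothetical helper names:

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Reinterpret a signed exit value (as printed by Eclipse) as the unsigned
// 32-bit code that Windows reports, e.g. -1073741701 -> 0xC000007B.
std::uint32_t ToUnsignedExitCode(std::int32_t signed_code) {
    // Signed-to-unsigned conversion is well-defined: it wraps modulo 2^32.
    return static_cast<std::uint32_t>(signed_code);
}

// Format a 32-bit code the way the Windows dialog prints it, e.g. "0xc000007b".
std::string ToHex(std::uint32_t code) {
    char buf[11];  // "0x" + 8 hex digits + terminating '\0'
    int written = std::snprintf(buf, sizeof buf, "0x%08x",
                                static_cast<unsigned>(code));
    assert(written == 10);
    (void)written;  // avoid an unused-variable warning when NDEBUG is set
    return std::string(buf);
}
```

So whenever Eclipse reports -1073741701, it is worth checking that every DLL on the path (lib_lightgbm.dll and the compiler runtime DLLs) matches the executable's 32/64-bit architecture.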
