IK分词器是ES的一个插件,主要用于把一段中文或者英文的划分成一个个的关键字,我们在搜索时候会把自己的信息进行分词,会把数据库中或者索引库中的数据进行分词,然后进行一个匹配操作,默认的中文分词器是将每个字看成一个词,比如"我爱技术"会被分为"我","爱","技","术",这显然不符合要求,所以我们需要安装中文分词器IK来解决这个问题;

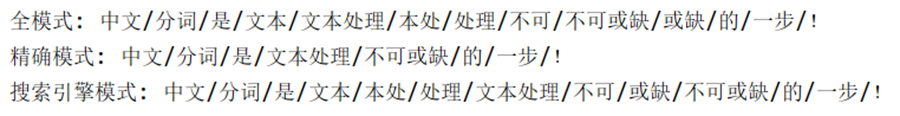

IK提供了两个分词算法:ik_smart和ik_max_word

ik_smart为最少切分,添加了歧义识别功能,推荐;

ik_max_word为最细切分,能切的都会被切掉;

示例:对“买一台笔记本” 进行分词

ik_smart分词结果:

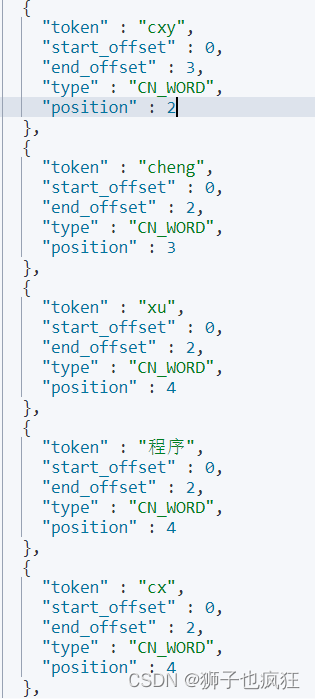

ik_max_word分词结果:

{"tokens" : [{"token" : "买一","start_offset" : 0,"end_offset" : 2,"type" : "CN_WORD","position" : 0},{"token" : "一台","start_offset" : 1,"end_offset" : 3,"type" : "CN_WORD","position" : 1},{"token" : "一","start_offset" : 1,"end_offset" : 2,"type" : "TYPE_CNUM","position" : 2},{"token" : "台笔","start_offset" : 2,"end_offset" : 4,"type" : "CN_WORD","position" : 3},{"token" : "台","start_offset" : 2,"end_offset" : 3,"type" : "COUNT","position" : 4},{"token" : "笔记本","start_offset" : 3,"end_offset" : 6,"type" : "CN_WORD","position" : 5},{"token" : "笔记","start_offset" : 3,"end_offset" : 5,"type" : "CN_WORD","position" : 6},{"token" : "本","start_offset" : 5,"end_offset" : 6,"type" : "CN_CHAR","position" : 7}]

}

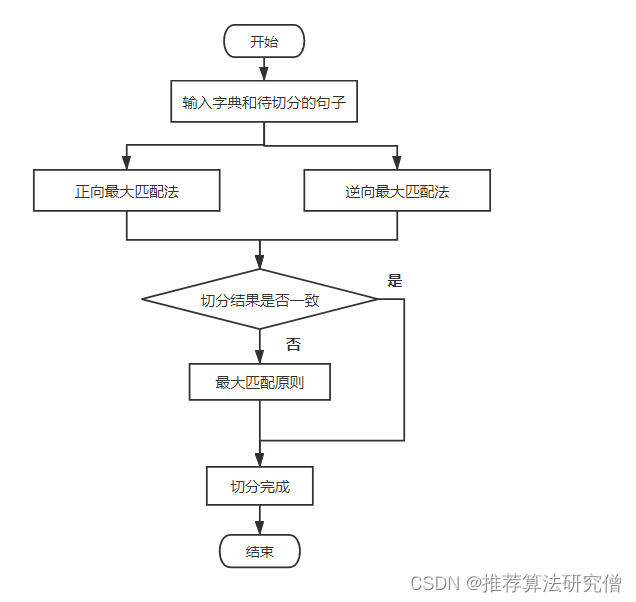

添加自定义词语:

在许多情况下会有一些专业数据,例如:

"于敏为祖国奉献一生", ik_smart分词后的结果是:

"于","敏", "为","祖国","奉献", "一生";而于敏是人名,被拆分开来了,需要将其作为一个词语添加到词典中;

在IK目录下有config文件夹,用于存储词典;

创建一个文件: mydict.dic , 在里面添加"于敏"

然后将文件名写入到IKAnalyzer.cfg.xml文件中:

保存后重启ES和Kibana

注意:文件的数主和属组权限保持一致,不然会无法识别;

启动ES的过程中可以看到加载了自己定义的字典;

[INFO ][o.w.a.d.Dictionary ] [A03-R28-I33-232-JCFB742.JD.LOCAL] [Dict Loading] /export/servers/elasticSearch/elasticsearch-7.15.1/plugins/ik/config/mydict.dic再次运行可以看到“于敏”被作为一个词分出来了