chenyuntc/simple-faster-r-cnn的代码详细讲解

- data

- data/voc_dataset.py

- data/util.py

- data/dataset.py

- mics

- convet_caffe_pretrain

- train_fast.py

- utils

- array_tool.py

- _config.py

- eval_tool.py

- vis_tool.py

- model

- utils

- nms

- build_.py

- _nms_gpu_post_py.py

- non_maximum_suppression.py

- _nms_gpu_post.pyd

- bbox_tools.py

- creator_tool.py

- roi_cupy.py

- faster_rcnn.py

- faster_rcnn.py

- region_proposal_network.py

- roi_module.py

- trainer_.py

- train_.py

这里是faster-r-cnn论文原文+翻译: csdn翻译

这里是陈云实现的faster-r-cnn代码链接: github

这里是陈云在知乎的讲解和一些问题问答: 知乎

整体的模型框架和作用作者已经介绍的很详细了,我就不再从整体的大框架讲解,但是遇到代码部分的细分我会再解说一次,水平有限,如有错误,还请指正。

data

data/voc_dataset.py

// An highlighted block

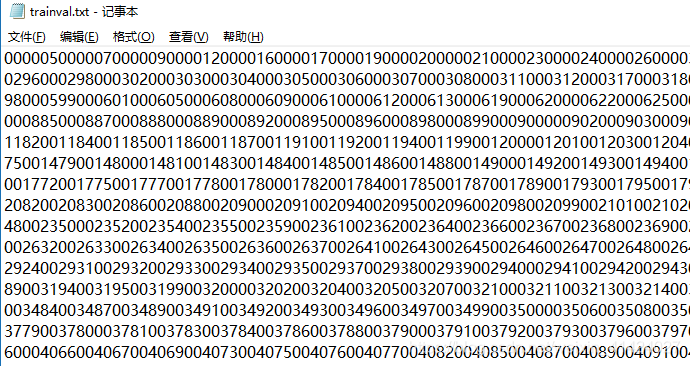

import os

import xml.etree.ElementTree as ET //ET类是专门用来解析标注xml文件的,语法简单,十分易用

import numpy as np

from .util import read_imageclass VOCBboxDataset: //读取voc数据集 实现魔术方法__getitem__(以便pytorch的DataLoader读取数据集) 返回其中一张的 图片img:numpy矩阵标签label: 0-19 标注框box:([ymin,xmin,ymax,xmax]) 是否难以标注difficult: 0 or 1一张图片可以有多个box(R,4)和label(R,)和difficult(R,) 那么将返回多维numpy数组形式如果要训练自己的数据集 那么请修改这里的魔术方法读取自己的数据集def __init__(self, data_dir, split='trainval', use_difficult=False, return_difficult=False,): //参数datadir由utils.config的voc_data_dir而来,是数据集存放的地址 use_difficult:表示是否启用难识别 图片标注的时候,比如我们要识别自行车,图片中有一堆自行车,我们没办法一个一个的标记出来,这时会用到difficult 一般来说训练不启用difficult 测试会用到return_difficult:是否返回difficultid_list_file = os.path.join(data_dir, 'ImageSets/Main/{0}.txt'.format(split)) //id_list_file =data_dir/ImageSets/Main/trainval.txt' 这个txt文本中 每行是图片数据的名字(不带后缀如.jpg后缀) self.ids = [id_.strip() for id_ in open(id_list_file)] //类表解析:读取上面的txt 按行读取后装入一个列表self.data_dir = data_dirself.use_difficult = use_difficultself.return_difficult = return_difficultself.label_names = VOC_BBOX_LABEL_NAMES //在最下面,是voc数据集所有物体name的tupledef __len__(self):return len(self.ids) //数据集的数量 就是ids列表的长度def get_example(self, i): //魔术方法:从数据集列表ids中 选取一个进行xml解析id_ = self.ids[i] //列表中选取一个数据anno = ET.parse(os.path.join(self.data_dir, 'Annotations', id_ + '.xml')) //找到名字对应的xml 用ET进行解析bbox = list()label = list()difficult = list()for obj in anno.findall('object'): //找到所有标注的object if not self.use_difficult and int(obj.find('difficult').text) == 1:continue //当没有启用difficult 但是我们找到了标注的difficult物体(标注值为1) 跳过这个object.text方法是获取xml标签里的内容 比如obj.find('difficult')=<difficult>1<difficult/>obj.find('difficult').text = 1 (string类型数据 要转成int)difficult.append(int(obj.find('difficult').text)) bndbox_anno = obj.find('bndbox') //找到标注框boundboxbbox.append([int(bndbox_anno.find(tag).text) - 1for tag in ('ymin', 'xmin', 'ymax', 'xmax')]) //列表解析:[ymin,xmin,ymax,xmax] 减一是为了让像素的索引从0开始name = obj.find('name').text.lower().strip() //name标注的对应VOC_BBOX_LABEL_NAMES中的一个label.append(VOC_BBOX_LABEL_NAMES.index(name)) //label就是VOC_BBOX_LABEL_NAME中name的索引 范围0-19bbox = np.stack(bbox).astype(np.float32) //将box从list转成np.float32类型label = np.stack(label).astype(np.int32) //label是 np.int32类型difficult = np.array(difficult, dtype=np.bool).astype(np.uint8) //由于pytorch不支持np.bool 我们要将difficult 转成np.bool后再转成unint8img_file = os.path.join(self.data_dir, 'JPEGImages', id_ + '.jpg') //获得id_对应图片的完整路径img = read_image(img_file, color=True) //调用data/util 中的read_image方法 读取图片数据return img, bbox, label, difficult __getitem__ = get_example //魔术方法 上面写的get_exampleVOC_BBOX_LABEL_NAMES = ('aeroplane','bicycle','bird','boat','bottle','bus','car','cat','chair','cow','diningtable','dog','horse','motorbike','person','pottedplant','sheep','sofa','train','tvmonitor')trainval.txt 记事本打开是这样的 其实暗藏/n换行符 是000005/n 000007/n 000009/n…

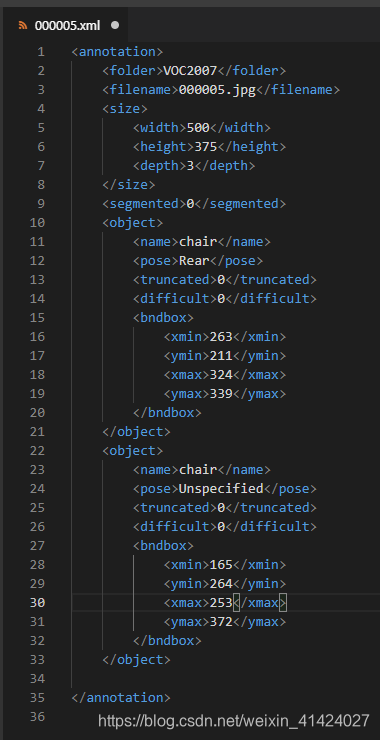

标注xml大概是这样的(与原xml有删减) object是物体 name是物体名字 bndbox是标注框 difficult 0或1(难识别)

data/util.py

import numpy as np

from PIL import Image

import randomdef read_image(path, dtype=np.float32, color=True): //根据path读取图片 转化成np矩阵返回f = Image.open(path) //PIL的Image方法 读取img文件try:if color: //默认都是彩色图三通道 img = f.convert('RGB') else:img = f.convert('P') //灰度矩阵img = np.asarray(img, dtype=dtype) //转换为np.float32矩阵finally:if hasattr(f, 'close'): //关闭文件f.close() if img.ndim == 2: //灰度图像 # reshape (H, W) -> (1, H, W)return img[np.newaxis]else:# transpose (H, W, C) -> (C, H, W)return img.transpose((2, 0, 1)) //Image读取的是H W C格式 转成 C H W 以便pytorch利用def resize_bbox(bbox, in_size, out_size): //bbox:一张图片中 R个标注box(ymin,xmin,ymax,xmax) shape:(R,4)insize:(height,width) outsize:你想要的(height,width) 当我们resize图片后 也应当resize_box 此函数单独使用没有意义bbox = bbox.copy()y_scale = float(out_size[0]) / in_size[0] //垂直y缩放倍数x_scale = float(out_size[1]) / in_size[1] //水平x缩放倍数bbox[:, 0] = y_scale * bbox[:, 0] //new yminbbox[:, 2] = y_scale * bbox[:, 2] //new xminbbox[:, 1] = x_scale * bbox[:, 1] //new ymaxbbox[:, 3] = x_scale * bbox[:, 3] //new xmaxreturn bboxdef flip_bbox(bbox, size, y_flip=False, x_flip=False): //数据增强部分 如果翻转了图片 那么bbox标注框也应该翻转此函数就是bbox的翻转 单独使用没有意义H, W = size //bbox:一张图片中 R个标注的box(ymin,xmin,ymax,xmax) shape:(R,4) 输入图片的size:(height,width) bbox = bbox.copy() if y_flip: //垂直翻转y_max = H - bbox[:, 0] //H-yminy_min = H - bbox[:, 2] //H-ymaxbbox[:, 0] = y_min //ymin = H-ymin 垂直翻转bbox[:, 2] = y_max //y_max = H-ymax 垂直翻转if x_flip: //水平翻转 同理x_max = W - bbox[:, 1]x_min = W - bbox[:, 3]bbox[:, 1] = x_minbbox[:, 3] = x_maxreturn bbox //翻转后的boxdef crop_bbox(bbox, y_slice=None, x_slice=None,allow_outside_center=True, return_param=False):pass //方法对应crop_img 由于我们训练的时候使用的是resize image 这个函数没有用def _slice_to_bounds(slice_):pass //上面crop_bbox用到的函数 此处略过def translate_bbox(bbox, y_offset=0, x_offset=0):pass//如果图片用了padding后者cropping 那么对应bbox也要变换 由于我们训练的时候使用的是resize image 这个函数没有用def random_flip(img, y_random=False, x_random=False, //数据增强 实现图片翻转 img:图片矩阵 y_random:是否使用垂直随机翻return_param=False, copy=False): 转,return_param:是否返回翻转状态 一个dict很好懂 copy:是否返回img的副本 y_flip, x_flip = False, False //默认不进行垂直翻转 和水平翻转if y_random: //如果使用随机垂直翻转y_flip = random.choice([True, False]) //随机选取T or F 即随机选取翻不翻转if x_random:x_flip = random.choice([True, False])if y_flip:img = img[:, ::-1, :] //图片翻转 C H W H翻转if x_flip:img = img[:, :, ::-1]if copy:img = img.copy()if return_param: //因为我们这里只翻转了图片 保留dict参数是为了翻转box时使用 如果img水平翻转了 那么x_flip=True 我们记录这个参数 以后也应当水平翻转这张图片的所有box R个boxreturn img, {'y_flip': y_flip, 'x_flip': x_flip}else:return imgdata/dataset.py

为了不让你对numpy的copy()感到困惑

请参考这篇文章: csdn numpy.copy()

from __future__ import absolute_import

from __future__ import division

import torch as t

from data.voc_dataset import VOCBboxDataset

from skimage import transform as sktsf

from torchvision import transforms as tvtsf

from data import util

import numpy as np

from utils.config import optdef inverse_normalize(img): //逆标准化图片 以便显示(可视化)的时候使用if opt.caffe_pretrain: //如果模型是caffe预训练的img = img + (np.array([122.7717, 115.9465, 102.9801]).reshape(3, 1, 1)) //img加均值 0-255caffe没有标准差 reshape是为了numpy的广播机制return img[::-1, :, :] //caffe是 [BGR,H,W] 需要转化成[RGB,H,W]显示return (img * 0.225 + 0.45).clip(min=0, max=1) * 255 //pytorch预训练的img 范围在0-1 加均值乘以标准差后 需要扩大到0-255 clip限定是为了防止超界 下界为0 上界为1 如果超过1就是1 如果小于0就是0 def pytorch_normalze(img): //pytoch图片标准化 为了让均值为0 标准差为1以便训练 F~(0,1)normalize = tvtsf.Normalize(mean=[0.485, 0.456, 0.406], //pytorch用法 将图片减均值除以标准差std=[0.229, 0.224, 0.225])img = normalize(t.from_numpy(img)) //因为Normalize方法只接受tensor对象 将img转化为tensor传入return img.numpy() //将标准化后的img 从tensor转化为numpydef caffe_normalize(img): //caffe图片标准化 caffe只有均值 没有标准差 F~(0,Σ) 如果你用过caffe发现caffe只有一个mean.binaryprotoimg = img[[2, 1, 0], :, :] // RGB to BGR 因为如果使用caffe_pretrain,那么整个模型参数都是基于caffe训练的,是bgr的我们需要把图片变为bgr的进行训练,训练之后再inverse_nomalize还原成rgb通道显示img = img * 255 //0-1 to 0-255mean = np.array([122.7717, 115.9465, 102.9801]).reshape(3, 1, 1) img = (img - mean).astype(np.float32, copy=True) //img减均值 返回一个np.float32 img矩阵的副本(-125-125 BGR)return imgdef preprocess(img, min_size=600, max_size=1000): //输入原始img矩阵 返回取值0-1的,经过resize的,标准化后的img numpy矩阵输入img min_size就是输出图片的短边长最长600(不是要求最小为600) mix_size输出图片长边最长1000 就是要求输出图片一边为600或者一边为1000C, H, W = img.shape scale1 = min_size / min(H, W) //600/短边scale2 = max_size / max(H, W) //1000/长边scale = min(scale1, scale2) //比较两个scale 看哪个才是主要影响缩放因子 如果是scale1更小 那么将图片缩放到600*Z 时 Z不会超过1000 如果scale2是 那么缩放到 Z*1000时 Z不会超过600通俗一点:长边不能超过1000 短边不能超过600 且 至少有一边是600或1000img = img / 255. //转化为0-1img = sktsf.resize(img, (C, H * scale, W * scale), mode='reflect') //根据scale resize imgsktsf.resize方法来自skimage的transformif opt.caffe_pretrain: //标准化过程normalize = caffe_normalizeelse:normalize = pytorch_normalzereturn normalize(img)//一张图片可能有R个box和label shape:box(R,4) label:(R,) 以后不再提示

class Transform(object): // 接受魔术方法get_example传来的一张图片的原始 img box label 返回resize和normalize后的img 对应处理后的box 以及label(没有处理)def __init__(self, min_size=600, max_size=1000):self.min_size = min_sizeself.max_size = max_sizedef __call__(self, in_data): img, bbox, label = in_data _, H, W = img.shapeimg = preprocess(img, self.min_size, self.max_size) //resize img 并进行标准化 输出范围0-1 numpy矩阵_, o_H, o_W = img.shapescale = o_H / Hbbox = util.resize_bbox(bbox, (H, W), (o_H, o_W)) //根据resize_img的scale 对box进行同等scale # horizontally flipimg, params = util.random_flip( //数据增强 随机水平翻转img, x_random=True, return_param=True)bbox = util.flip_bbox( //根据img水平翻转的情况 对box也进行翻转bbox, (o_H, o_W), x_flip=params['x_flip'])return img, bbox, label, scaleclass Dataset: //读取训练数据最大的类 如果你读过pytorch源码 你会发现其实并不用继承dataset类 因为那个类是空 的 只实现了两个pass空方法 getitem和len两个魔术方法 所以我们只要实现这两个方法就不用继承就可以传入DataLoaderdef __init__(self, opt): //opt是传来的参数 来自utils.config 包含了voc_data的路径self.opt = opt self.db = VOCBboxDataset(opt.voc_data_dir)self.tsf = Transform(opt.min_size, opt.max_size)def __getitem__(self, idx): //实现getitem魔术方法ori_img, bbox, label, difficult = self.db.get_example(idx)img, bbox, label, scale = self.tsf((ori_img, bbox, label))return img.copy(), bbox.copy(), label.copy(), scaledef __len__(self): //实现__len__魔术方法 return len(self.db)class TestDataset: //读取测试数据 split不同从而读取的是test.txt 启用use_difficult 不对图片 box等进行resize等处理def __init__(self, opt, split='test', use_difficult=True):self.opt = optself.db = VOCBboxDataset(opt.voc_data_dir, split=split, use_difficult=use_difficult)def __getitem__(self, idx):ori_img, bbox, label, difficult = self.db.get_example(idx)img = preprocess(ori_img)return img, ori_img.shape[1:], bbox, label, difficult //这里多返回了difficult 和原图ori_img.shape:(h,w) 去掉了cdef __len__(self):return len(self.db)mics

convet_caffe_pretrain

# code from ruotian luo

# https://github.com/ruotianluo/pytorch-faster-rcnn

import torch

from torch.utils.model_zoo import load_url

from torchvision import models//作者也说了下载预训练的caffe_pretrain model最后训练效果mAP会高一点,所以我们需要提前运行这个python类

下载预训练的caffe模型参数 如果不提前下载 训练时会自动用pytorch自带的vgg16模型参数 效果稍微差一点sd = load_url("https://s3-us-west-2.amazonaws.com/jcjohns-models/vgg16-00b39a1b.pth") //下载并加载模型

sd['classifier.0.weight'] = sd['classifier.1.weight'] //将分类器第1层权值给第0层

sd['classifier.0.bias'] = sd['classifier.1.bias'] //偏置同理

del sd['classifier.1.weight'] //删除第一层偏置和权重

del sd['classifier.1.bias']sd['classifier.3.weight'] = sd['classifier.4.weight']

sd['classifier.3.bias'] = sd['classifier.4.bias']

del sd['classifier.4.weight']

del sd['classifier.4.bias']import os

# speicify the path to save

if not os.path.exists('checkpoints'): //如果没有misc/checkpoints这个路径 创建路径保存模型参数os.makedirs('checkpoints')

torch.save(sd, "checkpoints/vgg16_caffe.pth")

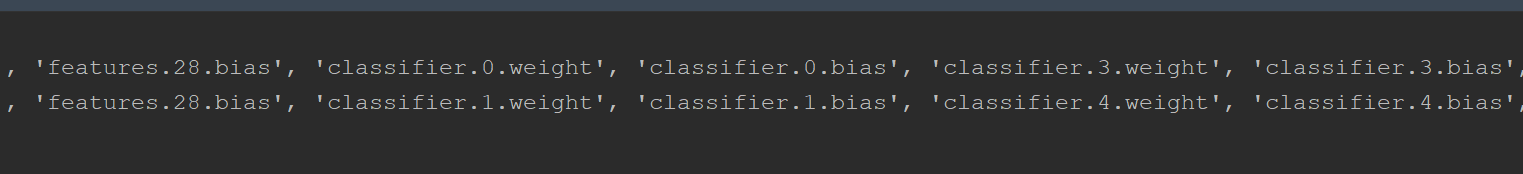

代码很容易,但是为什么要这样做 我们打印一下网络结构就知道了

上面是pytorch自带的vgg结构 下面是下载的sd网络 前面features是一样的 后面可能由于sd作者原因还是其他原因(我也不知道)导致参数名字与pytorch的vgg16参数名字不一样 所以我们这里需要将参数改名字(因为我们要加载pytorch自带的网络结构 所以参数名称必须与其一致)

train_fast.py

更快的训练模型,由于我们要讲解的 train. py 与这个大同小异,这个训练的轮数更少,改变lr,训练完后统一进行测试而不是训练一轮就测试一次等技法 使得训练更快 我也没有仔细读这个类!

utils

array_tool.py

import torch as t

import numpy as npdef tonumpy(data): //将数据转化为Numpyif isinstance(data, np.ndarray): //如果是np类型return data //直接返回if isinstance(data, t.Tensor): //是pytorch的tensorreturn data.detach().cpu().numpy() //将变量从图中分离(使得数据独立,以后你再如何操作都不会对图,对模型产生影响),如果是gpu类型变成cpu的(cpu类型调用cpu方法没有影响),再转化为numpy数组def totensor(data, cuda=True): //将数据转化为cuda或者tensor类型 cuda=True表示转化为cuda类型if isinstance(data, np.ndarray): //如果是numpy类型tensor = t.from_numpy(data) //调用pytorch常用的from_numpy 变成tensorif isinstance(data, t.Tensor): //如果是tensortensor = data.detach() //隔离变量if cuda: //需要转化为cuda变量tensor = tensor.cuda()return tensordef scalar(data): //取出数据的值if isinstance(data, np.ndarray): //如果是numpy类型(必须为1个数据 几维都行) 取出这个数据的值 return data.reshape(1)[0] if isinstance(data, t.Tensor): //如果是tensor类型 调用pytorch常用的item方法 取出tensor的值return data.item()

_config.py

from pprint import pprint

//参数作者注释很清楚 对模型了解的话都可以看懂

class Config:# datavoc_data_dir = '/home/cy/.chainer/dataset/pfnet/chainercv/voc/VOCdevkit/VOC2007/'min_size = 600 # image resizemax_size = 1000 # image resizenum_workers = 8 //工作线程数test_num_workers = 8# sigma for l1_smooth_lossrpn_sigma = 3.roi_sigma = 1.# param for optimizer# 0.0005 in origin paper but 0.0001 in tf-faster-rcnnweight_decay = 0.0005lr_decay = 0.1 # 1e-3 -> 1e-4lr = 1e-3# visualizationenv = 'faster-rcnn' # visdom envport = 8097 //visdom 端口plot_every = 40 # vis every N iter# presetdata = 'voc'pretrained_model = 'vgg16'# trainingepoch = 14use_adam = False # Use Adam optimizeruse_chainer = False # try match everything as chaineruse_drop = False # use dropout in RoIHead# debugdebug_file = '/tmp/debugf'test_num = 10000# modelload_path = Nonecaffe_pretrain = False # use caffe pretrained model instead of torchvision //建议使用caffe_pretrain_path = 'checkpoints/vgg16_caffe.pth'def _parse(self, kwargs): //解析并设置用户设定的参数state_dict = self._state_dict() //读取Config类的所有参数dict{para_name:para_value}for k, v in kwargs.items(): //遍历用户传来的dictif k not in state_dict: //遇到未知参数raise ValueError('UnKnown Option: "--%s"' % k)setattr(self, k, v) //设置参数print('======user config========')pprint(self._state_dict()) //打印参数 pprint函数是为了让显示结果更加优美print('==========end============')def _state_dict(self): //读取Config类的所有参数dict{para_name:para_value}return {k: getattr(self, k) for k, _ in Config.__dict__.items() if not k.startswith('_')}//字典解析,Config.__dict__.items() 取出类中所有的函数、全局变量以及一些内置的属性前面我们设定的都是全局变量(键值对:比如min_size = 600),没有函数,而系统内置属性都是_打头的,所以我们要not k.startswith('_') 返回结果dict{para_name0:para_value0,para_name1:para_value1,....}opt = Config() //创建config对象eval_tool.py

from __future__ import divisionfrom collections import defaultdict

import itertools

import numpy as np

import sixfrom model.utils.bbox_tools import bbox_ioudef eval_detection_voc(pred_bboxes, pred_labels, pred_scores, gt_bboxes, gt_labels,gt_difficults=None,iou_thresh=0.5, use_07_metric=False)://根据PASCAL VOC的evaluation code 计算平均精度test_num张图片(图片数据来自测试数据testdata)的预测框,标签,分数,和真实的框,标签和分数。所有参数都是list len(list)=opt.test_num(default=10000)pred_boxes:[(A,4),(B,4),(C,4)....共test_num个] 输入源gt_数据 经过train.predict函数预测出的结果框pred_labels[(A,),(B,),(C,)...共test_num个] pred_scores同pred_labels A,B,C,D是由nms决定的个数,即预测的框个数,不确定。gt_bboxes:[(a,4),(b,4)....共test_num个] a b...是每张图片标注真实框的个数gt_labels与gt_difficults同理use_07_metric (bool): 是否使用PASCAL VOC 2007 evaluation metric计算平均精度prec, rec = calc_detection_voc_prec_rec( //函数算出每个label类的准确率和召回率pred_bboxes, pred_labels, pred_scores,gt_bboxes, gt_labels, gt_difficults,iou_thresh=iou_thresh)ap = calc_detection_voc_ap(prec, rec, use_07_metric=use_07_metric) //根据prec和rec 计算ap和mapreturn {'ap': ap, 'map': np.nanmean(ap)}def calc_detection_voc_prec_rec(pred_bboxes, pred_labels, pred_scores, gt_bboxes, gt_labels,gt_difficults=None,iou_thresh=0.5)pred_bboxes = iter(pred_bboxes) //生成迭代器pred_labels = iter(pred_labels)pred_scores = iter(pred_scores)gt_bboxes = iter(gt_bboxes)gt_labels = iter(gt_labels)if gt_difficults is None: gt_difficults = itertools.repeat(None) //itertools.repeat生成一个重复的迭代器 None是每次迭代获得的数据else:gt_difficults = iter(gt_difficults)n_pos = defaultdict(int) //defaultdict当key不存在时 dict[key]=default(int)=0 default(list)=[] default(dict)={}score = defaultdict(list)match = defaultdict(list)for pred_bbox, pred_label, pred_score, gt_bbox, gt_label, gt_difficult in \six.moves.zip( //遍历每一张图片的box label scorepred_bboxes, pred_labels, pred_scores,gt_bboxes, gt_labels, gt_difficults):if gt_difficult is None:gt_difficult = np.zeros(gt_bbox.shape[0], dtype=bool) //全部设置为非difficult//遍历一张图片中 所有出现的labelfor l in np.unique(np.concatenate((pred_label, gt_label)).astype(int)): //拼接后返回无重复的从小到大排序的一维numpy 如[2,3,4,5,6] 并遍历这个一维数组,即遍历这张图片出现过的标签数字(gt_label+pred_label)pred_mask_l = pred_label == l //广播pred_mask_l=[eg. T,F,T,T,F,F,F,T..] 所有预测label中等于L的为T 否则Fpred_bbox_l = pred_bbox[pred_mask_l] //选出label=L的所有pre_boxpred_score_l = pred_score[pred_mask_l] //label=L 对应所有pre_score# sort by scoreorder = pred_score_l.argsort()[::-1] //获得score降序排序索引pred_bbox_l = pred_bbox_l[order] //按照分数从高到低 对box进行排序pred_score_l = pred_score_l[order] //对score进行排序gt_mask_l = gt_label == l //广播gt_mask_l =[eg. T,F,T,T,F,F,F,T..] 所有真实label中等于L的为T 否则Fgt_bbox_l = gt_bbox[gt_mask_l] //选出label=L的所有boxgt_difficult_l = gt_difficult[gt_mask_l] //选出label=L的所有difficultn_pos[l] += np.logical_not(gt_difficult_l).sum() //对T,F取反求和 即统计difficult=0的个数score[l].extend(pred_score_l) //score={l:predscore_1,....} extend是针对list的方法if len(pred_bbox_l) == 0: //没有预测的label=L的box 即真实label有L,我们全没有预测到 continue //跳过这张图片 此时没有对match字典操作 之前score[l].extend操作也为空 保持了match和score形状一致if len(gt_bbox_l) == 0: //没有真实的label=L的box 即预测label有L,真实中没有 我们都预测错了match[l].extend((0,) * pred_bbox_l.shape[0]) //match{L:[0,0,0,.. n_pred_box个0]} continue //预测错label就是0 已不需要后续操作 跳过此图片# VOC evaluation follows integer typed bounding boxes.//我不太懂这么做的目的,作者给的注释是follows integer typed bounding boxes但是只改变了ymax,xmax的值,重要的是这样做并不能转化为整数 pred_bbox和gt_bbox只参与了IOU计算且后面没有参与其他计算 有待解答。pred_bbox_l = pred_bbox_l.copy()pred_bbox_l[:, 2:] += 1 //ymax,xmax +=1gt_bbox_l = gt_bbox_l.copy()gt_bbox_l[:, 2:] += 1 //ymax,xmax +=1iou = bbox_iou(pred_bbox_l, gt_bbox_l) //计算两个box的IOUgt_index = iou.argmax(axis=1) //有len(pred_bbox_l)个索引 第i个索引值n表示 gt_box[n]与pred_box[i] IOU最大# set -1 if there is no matching ground truthgt_index[iou.max(axis=1) < iou_thresh] = -1 //将gt_box与pred_box iou<thresh的索引值置为-1即针对每个pred_bbox,与每个gt_bbox IOU的最大值 如果最大值小于阀值则置为-1即我们预测的这个box效果并不理想 后续会将index=-1的 matchlabel=0del iouselec = np.zeros(gt_bbox_l.shape[0], dtype=bool)for gt_idx in gt_index: //遍历gt_index索引值if gt_idx >= 0: //即IOU满足条件if gt_difficult_l[gt_idx]: //对应的gt_difficult =1 即困难标识match[l].append(-1) //match[l]追加一个-1else: //不是困难标识if not selec[gt_idx]: //没有被选过 select[gt_idx]=0时match[l].append(1) //match[l]追加一个1else: //对应的gt_box已经被选择过一次 即已经和前面某pred_box IOU最大match[l].append(0) //match[l]追加一个0selec[gt_idx] = True //select[gt_idx]=1 置为1,表示已经被选过一次else: //不满足IOU>thresh 效果并不理想match[l].append(0) //match[l]追加一个0//我们注意到 上面为每个pred_box都打了label 0,1,-1 len(match[l])=len(score[l])=len(pred_bbox_l)for iter_ in ( //上面的 six.moves.zip遍历会在某一iter遍历到头后停止,由于pred_bboxes等是全局iter对象,我们此时继续调用next取下一数据,如果有任一数据不为None,那么说明他们的len是不相等的 有悖常理,数据错误pred_bboxes, pred_labels, pred_scores,gt_bboxes, gt_labels, gt_difficults):if next(iter_, None) is not None: //next(iter,None) 表示调用next 如果已经遍历到头 不抛出异常而是返回Noneraise ValueError('Length of input iterables need to be same.')//注:对PR曲线不了解的可以去看一看,跟ROC曲线是有区别的n_fg_class = max(n_pos.keys()) + 1 //有n_fg_class个类prec = [None] * n_fg_class //list[None,.....len(n_fg_class)]rec = [None] * n_fg_classfor l in n_pos.keys(): //遍历所有Labelscore_l = np.array(score[l]) //list to np.arraymatch_l = np.array(match[l], dtype=np.int8)order = score_l.argsort()[::-1] match_l = match_l[order] //对应match按照 score由大到小排序tp = np.cumsum(match_l == 1) //统计累计 match_1=1的个数fp = np.cumsum(match_l == 0) //统计累计 match_1=0的个数//tp eg. [1 2 3 3 4 5 5 6 7 8 8 9 10 11........]//fp eg. [0 0 0 0 0 0 0 1 1 1 1 1 1 2 ......]# 如果 fp + tp = 0, 那么计算出的prec[l] = nanprec[l] = tp / (fp + tp) //计算准确率if n_pos[l] > 0: //如果n_pos[l] = 0,那么rec[l] =Nonerec[l] = tp / n_pos[l] //计算召回率return prec, recdef calc_detection_voc_ap(prec, rec, use_07_metric=False):n_fg_class = len(prec)ap = np.empty(n_fg_class)for l in six.moves.range(n_fg_class): //遍历每个labelif prec[l] is None or rec[l] is None: //如果为None 则ap置为np.nanap[l] = np.nancontinueif use_07_metric: //如果按07分数矩阵计算ap# 11 point metricap[l] = 0for t in np.arange(0., 1.1, 0.1): //t=0 0.1 0.2 ....1.0if np.sum(rec[l] >= t) == 0: //这个标签的召回率没有>=t的p = 0else:p = np.max(np.nan_to_num(prec[l])[rec[l] >= t]) //p=(rec>=t时 对应index:prec中的最大值)P=(X>t时,X对应的Y:Y的最大值) np.nan_to_num 是为了让None=0 以便计算ap[l] += p / 11 else:mpre = np.concatenate(([0], np.nan_to_num(prec[l]), [0])) //头尾填0mrec = np.concatenate(([0], rec[l], [1])) //头填0 尾填1mpre = np.maximum.accumulate(mpre[::-1])[::-1] //我们知道 我们是按score由高到低排序的 而且我们给box打了label0,1,-1 score高时1的概率会大,所以pre是累计降序的而rec是累积升序的,那么此时将pre倒序再maxuim.ac获得累积最大值,再倒序后 从小到大排序的累积最大值i = np.where(mrec[1:] != mrec[:-1])[0] //差位比较,看哪里改变了recall的值 记录Index (x轴)# and sum (\Delta recall) * precap[l] = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]) //差值*mpre_max值 (x轴差值*y_max)return apvis_tool.py

matplotlib只是一个中间过程 我们利用matplotlib 画一张图片,画所有的bbox和label and score (所有的都在一张图里)最后并不显示,而是将figure转化为numpy数组 CHW 范围0-1 以供visdom显示 visdom是一个可视化的工具库 支持numpy和pytorch

请参考这篇文章: 在PyTorch中使用Visdom可视化工具ptorch.com 和 visdom github: Visdom GitHub

import timeimport numpy as np

import matplotlib

import torch as t

import visdom

matplotlib.use('Agg')

from matplotlib import pyplot as plot

# from data.voc_dataset import VOC_BBOX_LABEL_NAMES

//voc标注物体的名字,可以从之前导入,我不知道为什么作者这里又写了一份简写的,可能方便显示吧

VOC_BBOX_LABEL_NAMES = ('fly','bike','bird','boat','pin','bus','c','cat','chair','cow','table','dog','horse','moto','p','plant','shep','sofa','train','tv',

)def vis_image(img, ax=None): //画图像: img是经过逆标准化0-255的RGB图像,ax是传来的matplotlib.axes.Axis对象 告诉我们画在哪里if ax is None: //如果没有这个对象 创建一个(1,1,1)的fig = plot.figure()ax = fig.add_subplot(1, 1, 1)# CHW -> HWCimg = img.transpose((1, 2, 0))ax.imshow(img.astype(np.uint8)) //matplot支持0-1的图形显示也支持0-255的图形显示 转成uint8是为了告诉它我们的图形是0-255的return ax //返回axis对象后续使用,vis_box会继续调用,在此图片基础上画框等def vis_bbox(img, bbox, label=None, score=None, ax=None):label_names = list(VOC_BBOX_LABEL_NAMES) + ['bg'] //20个物体对象+后景['bg']if label is not None and not len(bbox) == len(label): //框个数不等于标签个数raise ValueError('The length of label must be same as that of bbox')if score is not None and not len(bbox) == len(score): //框个数不等于分数个数raise ValueError('The length of score must be same as that of bbox')ax = vis_image(img, ax=ax) //显示图像if len(bbox) == 0: //没有框return axfor i, bb in enumerate(bbox): //遍历一张图所有框xy = (bb[1], bb[0]) //左上角height = bb[2] - bb[0] //高width = bb[3] - bb[1] //宽ax.add_patch(plot.Rectangle( //画矩形 红色 2粗xy, width, height, fill=False, edgecolor='red', linewidth=2))caption = list()if label is not None and label_names is not None:lb = label[i] //此框对应的labelif not (-1 <= lb < len(label_names)): //超界raise ValueError('No corresponding name is given')caption.append(label_names[lb]) //物体名字加入caption listif score is not None:sc = score[i] //找到此框对应分数caption.append('{:.2f}'.format(sc)) //加入caption listif len(caption) > 0:ax.text(bb[1], bb[0], //将物体名字 分数 画在框左上角': '.join(caption),style='italic',bbox={'facecolor': 'white', 'alpha': 0.5, 'pad': 0})return ax //返回axis对象后续被fig4vis调用,将图像转化为numpy数组def fig2data(fig): //将matplotlib的figure图像 转化为RGBA形式的Numpy返回fig.canvas.draw() //画图,渲染w, h = fig.canvas.get_width_height() //得到figure的w,hbuf = np.fromstring(fig.canvas.tostring_argb(), dtype=np.uint8) //得到numpy形式的 argb图像buf.shape = (w, h, 4) //(w,h,argb)buf = np.roll(buf, 3, axis=2) //(w,h,rgba) 在第二轴水平向右滚动3个距离return buf.reshape(h, w, 4) //(h,w,rgba)def fig4vis(fig): //将matplotlib的figure图像 转化为pytorch常用的3通道RGB,CHW 0-1图像ax = fig.get_figure() img_data = fig2data(ax).astype(np.int32)plot.close()return img_data[:, :, :3].transpose((2, 0, 1)) / 255. //(H,W,RGBA)->(H,W,RGB)->(RGB,H,W)即CHW /255->0-1 def visdom_bbox(*args, **kwargs): //我们要使用的函数 包含上面所有函数调用 传入img box label score 画成一张图像 以numpy形式返回fig = vis_bbox(*args, **kwargs)data = fig4vis(fig)return dataclass Visualizer(object): //Visdom可视化部分def __init__(self, env='default', **kwargs):self.vis = visdom.Visdom(env=env, use_incoming_socket=False, **kwargs) //visdom对象self._vis_kw = kwargs //参数self.index = {}self.log_text = ''def reinit(self, env='default', **kwargs)://可能由于用户要改变kwargs初始化参数 而重新初始化visdom对象self.vis = visdom.Visdom(env=env, **kwargs)return selfdef plot_many(self, d): //plot//参数 d: dict (name,value) i.e. ('loss',0.11)for k, v in d.items():if v is not None:self.plot(k, v) //调用下面的plot函数 画折线图(各种损失折线图,训练map折线图)def img_many(self, d): //参数 d: dict (name,value) i.e. ('loss',0.11)for k, v in d.items():self.img(k, v) //调用下面的img函数 画图def plot(self, name, y, **kwargs): //画一条直线 title=namex = self.index.get(name, 0)self.vis.line(Y=np.array([y]), X=np.array([x]),win=name,opts=dict(title=name),update=None if x == 0 else 'append',**kwargs)self.index[name] = x + 1def img(self, name, img_, **kwargs): //画图片 title=nameself.vis.images(t.Tensor(img_).cpu().numpy(),win=name,opts=dict(title=name),**kwargs)def log(self, info, win='log_text'): //打印log日志 时间+infoself.log_text += ('[{time}] {info} <br>'.format(time=time.strftime('%m%d_%H%M%S'), \info=info))self.vis.text(self.log_text, win)def __getattr__(self, name): //按name获得一个属性return getattr(self.vis, name)def state_dict(self): //返回现有参数 dictreturn {'index': self.index,'vis_kw': self._vis_kw,'log_text': self.log_text,'env': self.vis.env}def load_state_dict(self, d): //参数 d: dict (name,value) i.e. ('loss',0.11) 初始化参数self.vis = visdom.Visdom(env=d.get('env', self.vis.env), **(self.d.get('vis_kw')))self.log_text = d.get('log_text', '')self.index = d.get('index', dict())return selfmodel

utils

nms

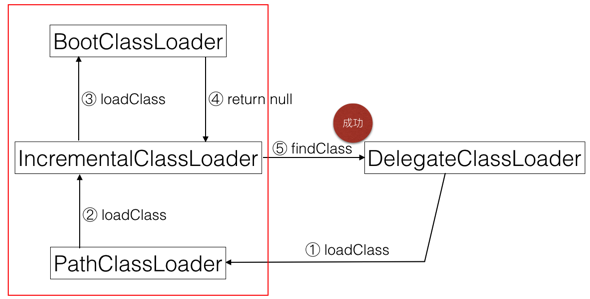

我们知道python 本来就是用c写的,最近刚好看过源码(一切皆对象)由于其本身特性和高级语言等原因,运行速度是一直是一个问题。虽然pytorch是用C加速和CUDA运算,但是NMS这一块需要我们自己写,用python速度很慢,这里作者结合了Cupy cuda编程 和Cython结合,写出了nms. Cupy是numpy的一个加速cuda计算库,可以将Numpy放到gpu计算,Cython则是将python代码编译成C语言,C编译器将.c文件编译链接成pyd拓展模块,这样对象的类型大小在编译时就可以确定,会加速很多。官网是这样说的

A .pyx file is compiled by Cython to a .c file, containing the code of a Python extension module.

The .c file is compiled by a C compiler to a .so file (or .pyd on Windows) which can be import-ed directly into a Python session.

win上生成pyd linux生成.so 动态链接库 可以被python直接导入(实际上好多库的函数都是这么干的…python实在太慢了)

我当时训练完模型,有人要求我用cpu进行预测,所以当时我把gpu部分去掉了 你调试的时候也可以换成cpu代码进行调试

nms cpu python代码: csdn:nms.cpu

build_.py

from distutils.core import setup

from distutils.extension import Extension

from Cython.Distutils import build_ext

//构建动态链接库的代码,Cython标准写法,详见Cython,这里就是将我们写的_nms_gpu_post.pyx变成pyd

或.so文件的过程 所以我们要先运行这个类,运行方法作者也介绍的很清楚了,最终生成pyd or .so链接库 .c是中间文件ext_modules = [Extension("_nms_gpu_post", ["_nms_gpu_post.pyx"])]

setup(name="Hello pyx",cmdclass={'build_ext': build_ext},ext_modules=ext_modules

)

_nms_gpu_post_py.py

如果环境不允许或其他原因 我们没有使用Cython加速 那么将加载这个python原生函数(慢很多) 代码与_nms_gpu_post.pyx 大同小异 这里不做讲解

non_maximum_suppression.py

非极大值抑制主函数 注:cupy与numpy函数库无太大差异,np.函数 在cp上也都有,用法也一样 只不过一个在gpu计算 一个在本地计算

from __future__ import division

import numpy as np

import cupy as cp

import torch as t

try:from ._nms_gpu_post import _nms_gpu_post //尝试加载pyd

except: //加载失败 告诉使用者,使用pyd会更快和生成pyd的方法import warningswarnings.warn('''the python code for non_maximum_suppression is about 2x slowIt is strongly recommended to build cython code: `cd model/utils/nms/; python3 build.py build_ext --inplace''')from ._nms_gpu_post_py import _nms_gpu_post //加载python原生函数//cupy函数 这里直接使用cuda编程 kernel_name是函数名字,方便调用。code是原生c-cuda-code需要自己编写

函数作用就是让我们自己编写的c cuda code变成一个函数 供我们调用。

@cp.util.memoize(for_each_device=True)

def _load_kernel(kernel_name, code, options=()):cp.cuda.runtime.free(0)assert isinstance(options, tuple)kernel_code = cp.cuda.compile_with_cache(code, options=options)return kernel_code.get_function(kernel_name)def non_maximum_suppression(bbox, thresh, score=None,limit=None)://根据传入的box 和score 规定的thresh 计算返回nms处理后选择的box索引(按照分数从高到低排序)return _non_maximum_suppression_gpu(bbox, thresh, score, limit)def _non_maximum_suppression_gpu(bbox, thresh, score=None, limit=None):if len(bbox) == 0: //没有候选框 直接返回0return cp.zeros((0,), dtype=np.int32)n_bbox = bbox.shape[0] //传入box的个数if score is not None: //传入了score分数order = score.argsort()[::-1].astype(np.int32) //获得按分数降序排列的索引else: //没有传入order = cp.arange(n_bbox, dtype=np.int32) // 索引等于 0-(n_box-1)sorted_bbox = bbox[order, :] //将传入框 按分数从高到低排序selec, n_selec = _call_nms_kernel( //调用nms_kernel函数 返回选中的索引(索引是依据sorted_boxed的,也可以说是依据order的)和 选中框的个数sorted_bbox, thresh)selec = selec[:n_selec] selec = order[selec] //根据order取 框真正的索引if limit is not None: //限制返回框个数 参数自己定selec = selec[:limit]return cp.asnumpy(selec) //转化为numpy返回 返回的是选择框的索引shape:(N,)def _call_nms_kernel(bbox, thresh):n_bbox = bbox.shape[0] //框的个数threads_per_block = 64 //一个block有多少threadcol_blocks = np.ceil(n_bbox / threads_per_block).astype(np.int32) //cuda常用的对齐block操作 保证线程数最小限度全覆盖数据blocks = (col_blocks, col_blocks, 1) //看到这里我有点慌了 因为对齐一个blocks按理说是(n_blocks,1,1) 说明后面要全排列了threads = (threads_per_block, 1, 1) mask_dev = cp.zeros((n_bbox * col_blocks,), dtype=np.uint64) //开辟64*n_box*sizeof(np.uint64)的连续内存 置为0 用于存放结果bbox = cp.ascontiguousarray(bbox, dtype=np.float32) //将bbox从numpy转成cupycuda计算 放到连续的内存中以便cuda运算 很重要kern = _load_kernel('nms_kernel', _nms_gpu_code) //加载自己写的c-cuda核函数kern(blocks, threads, args=(cp.int32(n_bbox), cp.float32(thresh), //调用核函数bbox, mask_dev))mask_host = mask_dev.get() //将计算结果从gpu取到本地selection, n_selec = _nms_gpu_post( //调用我们Cython导入的nms函数进行计算mask_host, n_bbox, threads_per_block, col_blocks)return selection, n_selec_nms_gpu_code = '''

#define DIVUP(m,n) ((m) / (n) + ((m) % (n) > 0)) //进位除法 保证全线程覆盖数据

int const threadsPerBlock = sizeof(unsigned long long) * 8;__device__ //将从gpu本地函数进行调用

inline float devIoU(float const *const bbox_a, float const *const bbox_b) { //计算两个box的IOU float top = max(bbox_a[0], bbox_b[0]); //重合部分上界float bottom = min(bbox_a[2], bbox_b[2]); //重合部分下界float left = max(bbox_a[1], bbox_b[1]); //重合部分左界float right = min(bbox_a[3], bbox_b[3]); //重合部分右界float height = max(bottom - top, 0.f); //重合部分高float width = max(right - left, 0.f); //重合部分宽float area_i = height * width; //重合部分面积float area_a = (bbox_a[2] - bbox_a[0]) * (bbox_a[3] - bbox_a[1]); //box_a 框面积float area_b = (bbox_b[2] - bbox_b[0]) * (bbox_b[3] - bbox_b[1]); //box_b 框面积return area_i / (area_a + area_b - area_i); //IOU

}extern "C"

__global__ //将从cpu调用 我们调用入口点

void nms_kernel(const int n_bbox, const float thresh,const float *dev_bbox,unsigned long long *dev_mask) {下面我要举例子了因为干讲怕理解不了,我们假设n_bbox=2000那么blocks=(32,32,1) threads=(64,1,1) 我们想象blocks就是32*32的格子 每个格子有64个线程在工作 每个格子互不干扰那么传入2000个框时,我们将拥有32*32*64=65536个线程在同时计算 blocks和threads可以让我们寻找到这个线程 就是下面的blockIdx.y这个是cuda自行给我们标识的 我们可以直接调用获得当前线程 blockIdx=0-31 blockidy=0-31 threadIdx.x=0-63 有了这个你就知道当前线程是哪个了const int row_start = blockIdx.y; const int col_start = blockIdx.x;const int row_size =min(n_bbox - row_start * threadsPerBlock, threadsPerBlock); //保证不越界 限制线程const int col_size =min(n_bbox - col_start * threadsPerBlock, threadsPerBlock); //保证不越界 限制线程__shared__ float block_bbox[threadsPerBlock * 4]; //一个block的thread共享一片内存 不同block互不影响if (threadIdx.x < col_size) { //threadIdx.x < col_size是保证不越界,这里的目的是将box数据 复制到block_bboxblock_bbox[threadIdx.x * 4 + 0] =dev_bbox[(threadsPerBlock * col_start + threadIdx.x) * 4 + 0];block_bbox[threadIdx.x * 4 + 1] =dev_bbox[(threadsPerBlock * col_start + threadIdx.x) * 4 + 1];block_bbox[threadIdx.x * 4 + 2] =dev_bbox[(threadsPerBlock * col_start + threadIdx.x) * 4 + 2];block_bbox[threadIdx.x * 4 + 3] =dev_bbox[(threadsPerBlock * col_start + threadIdx.x) * 4 + 3];}__syncthreads(); //等待同步 所有线程必须都干完了才能开始下面的工作if (threadIdx.x < row_size) { //保证不越界const int cur_box_idx = threadsPerBlock * row_start + threadIdx.x; //框idxconst float *cur_box = dev_bbox + cur_box_idx * 4; //生成框游标索引int i = 0;unsigned long long t = 0;int start = 0;if (row_start == col_start) { //跳过相同数据 自己和自己比IOUstart = threadIdx.x + 1;}for (i = start; i < col_size; i++) {if (devIoU(cur_box, block_bbox + i * 4) >= thresh) { //分别计算IOU 如果大于阈值 那么将t的i位置为1t |= 1ULL << i;}}const int col_blocks = DIVUP(n_bbox, threadsPerBlock);dev_mask[cur_box_idx * col_blocks + col_start] = t; 储存t值}

}

'''

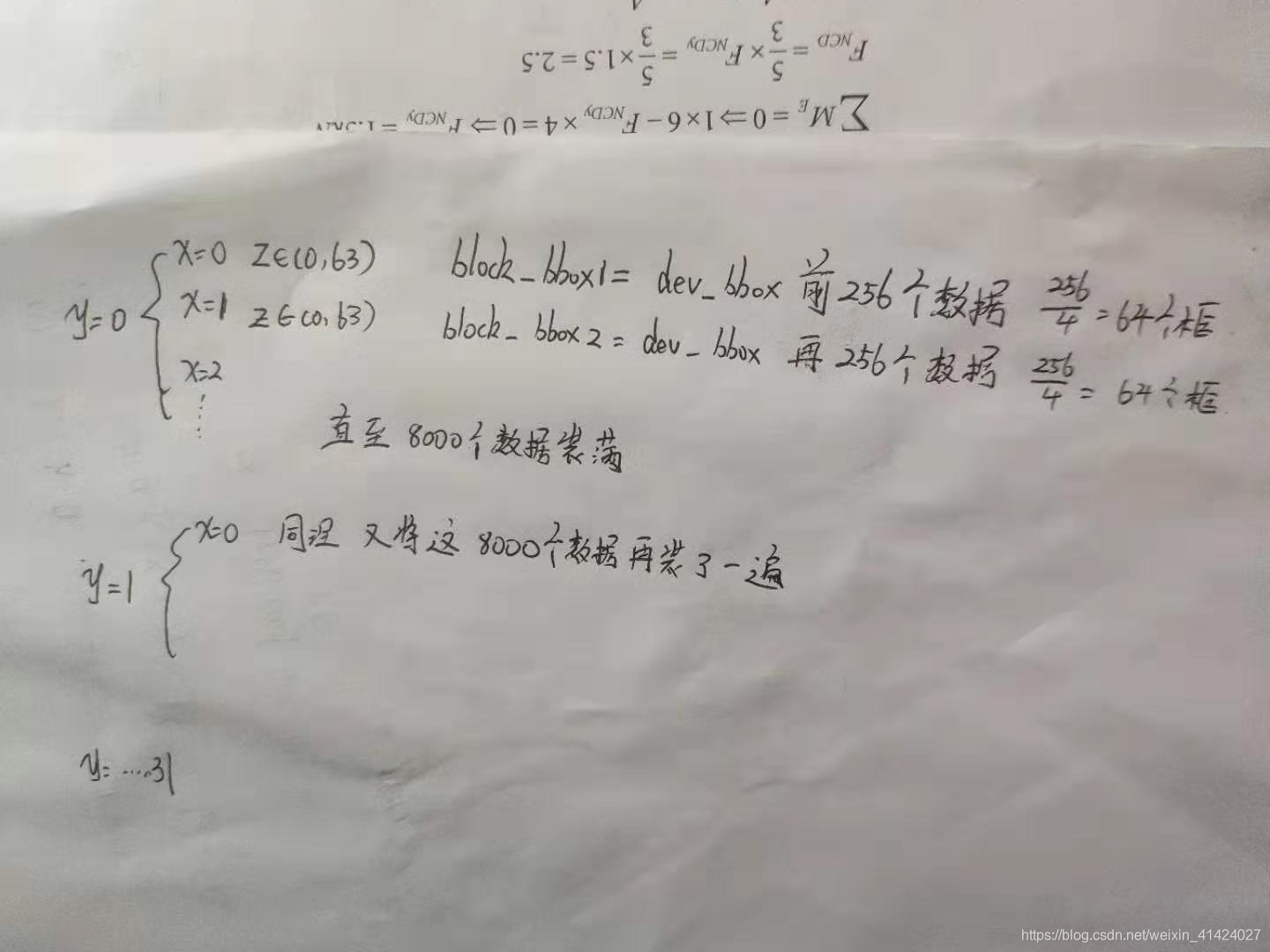

我们以n_bbox=2000,继续画图详细讲解一下,cuda代码在干什么吧:

if (threadIdx.x < col_size) { block_bbox[threadIdx.x * 4 + 0] =dev_bbox[(threadsPerBlock * col_start + threadIdx.x) * 4 + 0];block_bbox[threadIdx.x * 4 + 1] =dev_bbox[(threadsPerBlock * col_start + threadIdx.x) * 4 + 1];block_bbox[threadIdx.x * 4 + 2] =dev_bbox[(threadsPerBlock * col_start + threadIdx.x) * 4 + 2];block_bbox[threadIdx.x * 4 + 3] =dev_bbox[(threadsPerBlock * col_start + threadIdx.x) * 4 + 3];}

首先这里 threadIdx.x < col_size 是保证不越界,dev_bbox是我们传入的2000个bbox,dev_bbox的索引自然是0-1999, threadsPerBlock(64) * col_start(0-31) + threadIdx.x(0-63)= 0-2047 因为我们要保证线程只能多不能少于数据 所以多的那48个线程访问dev_bbox 就会越界,这就是前面row_size和限制 if(threadIdx.x < col_size)的作用

且注意:这是C语言 我们传入的只是dev_bbox数组的首地址,他会按float类型的指针进行寻址,bbox是(2000,4(ymax,xmax,ymin,xmin的))所以实际上按照dev_bbox的长度是2000*4=8000 寻址对应也要乘以4

由于每个block共享一片内存block_bbox[256],所以上面的代码干了一件这样的事(设blockIdx.y=y,blockIdx.x=x,threadIdx.x=z)

y=0,x=0时,将我们传入的2000个框 前64个框装入block_bbox

y=0,x=1时,将我们传入的2000个框 再64个框装入block_bbox

直至x=31 把2000个框装完

注意block_bbox是互不干扰的 生存在自己的显存空间里。

y=1,2…31时又将2000个框装了一遍…

相当于对源数据2000个框 复制了32次 想到当时对mask_dev开辟了32*2000的意义了吗 想到我当初说全排列的意义了吗

他是想要2000个框内 每两个不相同的框计算一次IOU 再巧妙的储存下来。

接着下面代码

if (threadIdx.x < row_size) { //保证不越界const int cur_box_idx = threadsPerBlock * row_start + threadIdx.x; //框idxconst float *cur_box = dev_bbox + cur_box_idx * 4; //生成框游标索引int i = 0;unsigned long long t = 0;int start = 0;if (row_start == col_start) { //跳过相同数据 自己和自己比IOUstart = threadIdx.x + 1;}for (i = start; i < col_size; i++) {if (devIoU(cur_box, block_bbox + i * 4) >= thresh) { //分别计算IOU 如果大于阈值 那么将t的i位置为1t |= 1ULL << i;}}const int col_blocks = DIVUP(n_bbox, threadsPerBlock);dev_mask[cur_box_idx * col_blocks + col_start] = t; 储存t值}

我们将x,y,z带入 直接看devIoU部分

if (y == x) { //跳过相同数据 自己和自己比IOUstart = z + 1;}for (i = start; i < col_size; i++) {if (devIoU(dev_bbox[4(y*64+z)], dev_bbox[4(x*64+z)+4i]) >= thresh) { //分别计算IOU 如果大于阈值 那么将t的i位置为1t |= 1ULL << i;}

现在就很清楚了如果x=y时 start=0时 我们比的就是同一个框自己 所以x=y时 start要等一z+1 向后错一个框

当y=0 x=0时 比的是第0个框和1-63个框的IOU 按1-63位 IOU大于阀值的话,t的1-63位会对应置为1 储存起来

同理y=0,x=1…x=1 y=0…每俩个框计算了一次IOU 当然由于不能重复比 t储存的数据数也会发生变化

只要32*2000个位置就可以储存所有结果 这个结果由于我们是 dev_mask[cur_box_idx * col_blocks + col_start] = t 储存的。

所以后面解析的时候可以清楚的知道哪两个框IOU大于thresh 是易于还原的。

_nms_gpu_post.pyd

上面我们得到了每2个框的IOU值 并巧妙的储存了起来,这里就是选择框的过程了,由于我们巧妙的储存了t,所以这里也会巧妙的把t读取来,完成NMS操作。这里的代码纯属技法问题,如果你熟悉cuda寻址,熟悉一些算法,这里很容易还原算法。这个类的作用就是NMS的作用:选出score最大的框,这个框肯定框柱了一个物体,如果其他与这个IOU大于阀值,那么认为其他框也是框的这个物体,但是score置信度还不行,果断扔掉。再找一个score次高的,循环做下去…就是nms了

cimport numpy as np

from libc.stdint cimport uint64_t

import numpy as np//根据我们的mask_dev 选出候选框 Cython写法 与python没什么不同 声明一些变量类型而已 Cpython会自动帮我们依据半python代码(pyd)将此pyd编译成.c c编译器会再次编译.c文件生成我们要的动态链接库def _nms_gpu_post(np.ndarray[np.uint64_t, ndim=1] mask,int n_bbox,int threads_per_block,int col_blocks):cdef:int i, j, nblock, indexuint64_t inblockint n_selection = 0uint64_t one_ull = 1np.ndarray[np.int32_t, ndim=1] selectionnp.ndarray[np.uint64_t, ndim=1] remvselection = np.zeros((n_bbox,), dtype=np.int32)remv = np.zeros((col_blocks,), dtype=np.uint64)for i in range(n_bbox): //遍历2000个框nblock = i // threads_per_block //nblock 0-31inblock = i % threads_per_block //inblock 0-63if not (remv[nblock] & one_ull << inblock): //如果IOU不小于阀值(标注的是一个新的物体)selection[n_selection] = i //记录这个box的indexn_selection += 1 //选取框数目+1index = i * col_blocks //index寻址for j in range(nblock, col_blocks): //将对应选中框的所有IOU对应结果存入remv[j] |= mask[index + j]return selection, n_selection由于我们传入的box都是按score排序好的,所以该算法可以实现。

首先我们会进入if not语句中,记录第一个score最大的框,下面for j的循环就是找出所有与第一个框的IOU记录

赋值给remv 下面就是依次判断第二个框第三个框是否与第一个框IOU大于阀值 移一位就是一个框的IOU记录

如果大于阀值则继续下一个for i循环 小于阀值认定是不同的框,由于其分数最高 所以记录在selection中 再找出与此框的所有IOU记录继续遍历…

bbox_tools.py

import numpy as np

import numpy as xpimport six

from six import __init__def bbox2loc(src_bbox, dst_bbox)://给定两个框 返回源框到目标框变换的loc 这原论文中说的很详细height = src_bbox[:, 2] - src_bbox[:, 0] //源框高width = src_bbox[:, 3] - src_bbox[:, 1] //源框宽ctr_y = src_bbox[:, 0] + 0.5 * height //矩中心y坐标ctr_x = src_bbox[:, 1] + 0.5 * width //矩中心x坐标base_height = dst_bbox[:, 2] - dst_bbox[:, 0]base_width = dst_bbox[:, 3] - dst_bbox[:, 1]base_ctr_y = dst_bbox[:, 0] + 0.5 * base_heightbase_ctr_x = dst_bbox[:, 1] + 0.5 * base_widtheps = xp.finfo(height.dtype).eps //防止数据错误height = xp.maximum(height, eps)width = xp.maximum(width, eps)dy = (base_ctr_y - ctr_y) / height //按论文计算dy dx dh dwdx = (base_ctr_x - ctr_x) / width dh = xp.log(base_height / height) dw = xp.log(base_width / width)loc = xp.vstack((dy, dx, dh, dw)).transpose()return locdef loc2bbox(src_bbox, loc)://给定源框和loc 反向计算目标框 还原方法 与bbox2loc对应if src_bbox.shape[0] == 0:return xp.zeros((0, 4), dtype=loc.dtype)src_bbox = src_bbox.astype(src_bbox.dtype, copy=False)src_height = src_bbox[:, 2] - src_bbox[:, 0]src_width = src_bbox[:, 3] - src_bbox[:, 1]src_ctr_y = src_bbox[:, 0] + 0.5 * src_heightsrc_ctr_x = src_bbox[:, 1] + 0.5 * src_widthdy = loc[:, 0::4]dx = loc[:, 1::4]dh = loc[:, 2::4]dw = loc[:, 3::4]ctr_y = dy * src_height[:, xp.newaxis] + src_ctr_y[:, xp.newaxis]ctr_x = dx * src_width[:, xp.newaxis] + src_ctr_x[:, xp.newaxis]h = xp.exp(dh) * src_height[:, xp.newaxis]w = xp.exp(dw) * src_width[:, xp.newaxis]dst_bbox = xp.zeros(loc.shape, dtype=loc.dtype)dst_bbox[:, 0::4] = ctr_y - 0.5 * hdst_bbox[:, 1::4] = ctr_x - 0.5 * wdst_bbox[:, 2::4] = ctr_y + 0.5 * hdst_bbox[:, 3::4] = ctr_x + 0.5 * wreturn dst_bboxdef bbox_iou(bbox_a, bbox_b)://计算两个框的IOU值 我们在cuda计算时已经见过了 但是这个方法的bbox_a与bbox_b可以是多维数组的(X,4)(Y,4)则返回的结果也是多维的结果(X,Y)//防止数据错误if bbox_a.shape[1] != 4 or bbox_b.shape[1] != 4:raise IndexErrortl = xp.maximum(bbox_a[:, None, :2], bbox_b[:, :2])br = xp.minimum(bbox_a[:, None, 2:], bbox_b[:, 2:])area_i = xp.prod(br - tl, axis=2) * (tl < br).all(axis=2)area_a = xp.prod(bbox_a[:, 2:] - bbox_a[:, :2], axis=1)area_b = xp.prod(bbox_b[:, 2:] - bbox_b[:, :2], axis=1)return area_i / (area_a[:, None] + area_b - area_i)def __test():passif __name__ == '__main__':__test()def generate_anchor_base(base_size=16, ratios=[0.5, 1, 2],anchor_scales=[8, 16, 32])://生成Anocher 论文中介绍的很清楚了,要在feature map每个点生成9种不同size和长宽比的box这里只是生成最基本的Anchorbase 还没有在feature map上滑动py = base_size / 2. #y中点px = base_size / 2. #x中点anchor_base = np.zeros((len(ratios) * len(anchor_scales), 4), //shape=(3*3,4)dtype=np.float32)for i in six.moves.range(len(ratios)): //9个box(ymax,xmax,ymin,xmin) 共36个参数for j in six.moves.range(len(anchor_scales)):h = base_size * anchor_scales[j] * np.sqrt(ratios[i])w = base_size * anchor_scales[j] * np.sqrt(1. / ratios[i])index = i * len(anchor_scales) + j anchor_base[index, 0] = py - h / 2.anchor_base[index, 1] = px - w / 2.anchor_base[index, 2] = py + h / 2.anchor_base[index, 3] = px + w / 2.return anchor_basecreator_tool.py

import numpy as np

import cupy as cpfrom model.utils.bbox_tools import bbox2loc, bbox_iou, loc2bbox

from model.utils.nms import non_maximum_suppression//从ProposalCreator生成的Rois中 这里是选取正负样本共n_sample个(这里是128)为了后续放入RoiPooling后

卷积全连接进行(20+1)类的分类损失计算FC21和21x4的box位置损失计算FC84 我们规定与真实gt_box框 IOU>0.5为正样本

0.5<IOU<0.1为负样本class ProposalTargetCreator(object):def __init__(self,n_sample=128,pos_ratio=0.25, pos_iou_thresh=0.5,neg_iou_thresh_hi=0.5, neg_iou_thresh_lo=0.0):self.n_sample = n_sample //要选取的样本数self.pos_ratio = pos_ratio //正样本率self.pos_iou_thresh = pos_iou_thresh //正样本iou阀值self.neg_iou_thresh_hi = neg_iou_thresh_hi //负样本iou最高阀值self.neg_iou_thresh_lo = neg_iou_thresh_lo # NOTE:default 0.1 in py-faster-rcnn 负样本iou最低阀值def __call__(self, roi, bbox, label, //输入 N个ROI (N,4) 真实标注的bbox(R,4),真实的label(R,)loc_normalize_mean=(0., 0., 0., 0.), //loc均值loc_normalize_std=(0.1, 0.1, 0.2, 0.2)): //loc标准差n_bbox, _ = bbox.shape //bbox个数roi = np.concatenate((roi, bbox), axis=0) //(N,4)to (N+R,4) R是真实框个数 N是ROI个数pos_roi_per_image = np.round(self.n_sample * self.pos_ratio) //正样本数 四舍五入iou = bbox_iou(roi, bbox) //计算IOUgt_assignment = iou.argmax(axis=1) //最大值索引,gt_assighment[i]=j 表示第i个roi 与第j个bbox的IOU最大max_iou = iou.max(axis=1) //对应上面索引的最大值gt_roi_label = label[gt_assignment] + 1 // 所有roi对应IOU最大的真实gt_box的label 一一对应[0, n_fg_class - 1] to [1, n_fg_class] 0是背景剩下n_fg_class个前景pos_index = np.where(max_iou >= self.pos_iou_thresh)[0] //选择IOU大于阀值的索引pos_roi_per_this_image = int(min(pos_roi_per_image, pos_index.size)) //如果得到索引个数大于设定 则变为设定个数if pos_index.size > 0:pos_index = np.random.choice( //随机选取pos_roi_per_this_image个 pos_index, size=pos_roi_per_this_image, replace=False)neg_index = np.where((max_iou < self.neg_iou_thresh_hi) & //IOU小于设定的索引 为负样本(max_iou >= self.neg_iou_thresh_lo))[0]neg_roi_per_this_image = self.n_sample - pos_roi_per_this_image //计算负样本个数neg_roi_per_this_image = int(min(neg_roi_per_this_image, neg_index.size))if neg_index.size > 0:neg_index = np.random.choice( //随机选择设定负样本个数的负样本neg_index, size=neg_roi_per_this_image, replace=False)keep_index = np.append(pos_index, neg_index) //所有选取的样本indexgt_roi_label = gt_roi_label[keep_index] //对应的labelgt_roi_label[pos_roi_per_this_image:] = 0 # negative labels --> 0sample_roi = roi[keep_index] //根据keep_index选出对应roi框# Compute offsets and scales to match sampled RoIs to the GTs.gt_roi_loc = bbox2loc(sample_roi, bbox[gt_assignment[keep_index]]) //计算我们取样的roi和真实bbox 的loc 便于后面Loss的计算gt_roi_loc = ((gt_roi_loc - np.array(loc_normalize_mean, np.float32) //标准化) / np.array(loc_normalize_std, np.float32))return sample_roi, gt_roi_loc, gt_roi_label//从20000多个Anchor中 选择正负样本128共256个进行 对anchor进行9*2(前,后景)的分类任务 9*4(ymax,xmax,ymin,xmin)个位置回归任务 规定IOU最高为正 IOU>0.7为正样本,IOU<0.3为负样本 只计算前景损失

class AnchorTargetCreator(object):def __init__(self,n_sample=256,pos_iou_thresh=0.7, neg_iou_thresh=0.3,pos_ratio=0.5):self.n_sample = n_sampleself.pos_iou_thresh = pos_iou_threshself.neg_iou_thresh = neg_iou_threshself.pos_ratio = pos_ratiodef __call__(self, bbox, anchor, img_size):img_H, img_W = img_size //图片高 宽n_anchor = len(anchor) //anchor个数inside_index = _get_inside_index(anchor, img_H, img_W) //将超出图片边界的anchor过滤 返回在界内的anchor索引anchor = anchor[inside_index] //选择界内的anchorargmax_ious, label = self._create_label( //根据真实bbox 给anchor创建标签label 并返回标签对应的[(iou最大值的gt_box)的index]inside_index, anchor, bbox)loc = bbox2loc(anchor, bbox[argmax_ious]) //计算anchor和自己对应IOU最大的gt_bbox的损失label = _unmap(label, n_anchor, inside_index, fill=-1) //将label数据 置于原来总anchor的位置,并将其余没选出的位置置为-1loc = _unmap(loc, n_anchor, inside_index, fill=0) //将loc数据 置于原来总anchor的位置,并将其余没选出的位置置为0return loc, labeldef _create_label(self, inside_index, anchor, bbox):# label: 1 is positive, 0 is negative, -1 is dont carelabel = np.empty((len(inside_index),), dtype=np.int32) //len(边界内的anochor)label.fill(-1)argmax_ious, max_ious, gt_argmax_ious = \ //见函数self._calc_ious(anchor, bbox, inside_index)# assign negative labels first so that positive labels can clobber themlabel[max_ious < self.neg_iou_thresh] = 0 //将IOU小于阀值的置为1label[gt_argmax_ious] = 1 //将于gt_box IOU最大的anchor label置为1label[max_ious >= self.pos_iou_thresh] = 1 //IOU大于阀值的置为1n_pos = int(self.pos_ratio * self.n_sample) //我们要的正样本数pos_index = np.where(label == 1)[0] //实际正样本数if len(pos_index) > n_pos: //实际>我们要的disable_index = np.random.choice( //从中随机选择不要的样本pos_index, size=(len(pos_index) - n_pos), replace=False)label[disable_index] = -1 //没选中的置为-1 dont caren_neg = self.n_sample - np.sum(label == 1) //我们要的负样本数neg_index = np.where(label == 0)[0] 同上if len(neg_index) > n_neg:disable_index = np.random.choice(neg_index, size=(len(neg_index) - n_neg), replace=False)label[disable_index] = -1return argmax_ious, label def _calc_ious(self, anchor, bbox, inside_index):ious = bbox_iou(anchor, bbox) //计算IOU argmax_ious = ious.argmax(axis=1) //argmax_ious[i]=j 表示第i个anchor与第j个bbox IOU值最大max_ious = ious[np.arange(len(inside_index)), argmax_ious] //max_ious[i]=j 表示第i个anchor与对应gt_box IOU的最大值是 jgt_argmax_ious = ious.argmax(axis=0) //gt_argmax_ious[i]=j 表示第i个bbox与第j个anchor IOU值最大gt_max_ious = ious[gt_argmax_ious, np.arange(ious.shape[1])] //max_ious[i]=j 表示第i个gt_bbox与对应anchor IOU的最大值是 jgt_argmax_ious = np.where(ious == gt_max_ious)[0] //是一个索引gt_argmax_ious[a,b,c,d,e] 表示第a个anchor与对应box IOU值最大,其中a<=b<=c<=d<=e 即表示我们将要选出的框的索引 表示a这个anchor和某个bbox IOU最大这已经够了,我们要选他,并不关心他与哪个框最大。所以为什么不取 gt_argmax_ious = ious.argmax(axis=0) 的结果呢,因为他没有把等值算上,比如第二个box与 第20个anchor和第300个anchor IOU最大都是0.9 前面的运算只会选择第20个anchor而不会选择300 return argmax_ious, max_ious, gt_argmax_iousdef _unmap(data, count, index, fill=0):if len(data.shape) == 1: //1维arrayret = np.empty((count,), dtype=data.dtype) ret.fill(fill) //填满fillret[index] = data //索引处换为数据else: //维度对齐ret = np.empty((count,) + data.shape[1:], dtype=data.dtype) //根据data的shape创建 np.arrayret.fill(fill) //填满fillret[index, :] = data //索引处换为数据return retdef _get_inside_index(anchor, H, W)://返回anchor在图片内(不超出边界)的anchor索引index_inside = np.where((anchor[:, 0] >= 0) &(anchor[:, 1] >= 0) &(anchor[:, 2] <= H) &(anchor[:, 3] <= W))[0]return index_inside//计算所有anchor是前景的概率,选择概率大的12000(训练)/6000(测试)个 修正位置参数,获得ROIS 再经过NMS后

选择2000/300 个为真正的ROIS 输出(2000/300,R)的ROISclass ProposalCreator:def __init__(self,parent_model,nms_thresh=0.7,n_train_pre_nms=12000,n_train_post_nms=2000,n_test_pre_nms=6000,n_test_post_nms=300,min_size=16):self.parent_model = parent_modelself.nms_thresh = nms_threshself.n_train_pre_nms = n_train_pre_nmsself.n_train_post_nms = n_train_post_nmsself.n_test_pre_nms = n_test_pre_nmsself.n_test_post_nms = n_test_post_nmsself.min_size = min_sizedef __call__(self, loc, score, //传入,预测的loc补偿,score分数,featuremap的所有anchor anchor, img_size, scale=1.):if self.parent_model.training:n_pre_nms = self.n_train_pre_nmsn_post_nms = self.n_train_post_nmselse:n_pre_nms = self.n_test_pre_nmsn_post_nms = self.n_test_post_nms//anchor调整位置后获得roiroi = loc2bbox(anchor, loc)//限制调整后得到的roi候选框在图片内(防止越界)roi[:, slice(0, 4, 2)] = np.clip( // 0<ymax,ymin<H 小于0部分变为0 大于H部分变为Hroi[:, slice(0, 4, 2)], 0, img_size[0])roi[:, slice(1, 4, 2)] = np.clip( // 0<xmax,xmin<W 小于0部分变为0 大于W部分变为Wroi[:, slice(1, 4, 2)], 0, img_size[1])min_size = self.min_size * scale //threshold 框最小阀值,去除比较小的rois框hs = roi[:, 2] - roi[:, 0] ws = roi[:, 3] - roi[:, 1]keep = np.where((hs >= min_size) & (ws >= min_size))[0]roi = roi[keep, :]score = score[keep]order = score.ravel().argsort()[::-1] //分数由高到低排序if n_pre_nms > 0: //进入nms前 我们想保留的ROIS个数order = order[:n_pre_nms]roi = roi[order, :]keep = non_maximum_suppression( //NMScp.ascontiguousarray(cp.asarray(roi)),thresh=self.nms_thresh)if n_post_nms > 0: //我们想保留的nms后ROIS的个数keep = keep[:n_post_nms]roi = roi[keep]return roiROI_POOLING2D的函数,作者用cuda写了一遍,嫌弃chainer和pytorch自带的pooling2d函数运行的慢…

这里只解释向前过程forward。backward代码因为作者想证明自己写的代码正确,将自己forward后的结果,backward后的梯度与chainer的进行比较 都相同则说明自己写的没问题…

roi_cupy.py

kernel_forward = '''extern "C"__global__ void roi_forward(const float* const bottom_data,const float* const bottom_rois,float* top_data, int* argmax_data,const double spatial_scale,const int channels,const int height, const int width, const int pooled_height, const int pooled_width,const int NN){

//第一个参数bottom_data feature map首地址 我们卷积之后得到512*H/16*W/16的 featuremap第二个参数bottom_rois 是rois的首地址(N,5) N是rois的个数128 (第一列表示featuremap_batch中的索引,即该rois对应哪个featuremap,由于作者只实现了batch_size=1 所以这个没有用,其余四列表示其余的左上角和右下角坐标)//第三个参数 第四个参数 两个都是我们开辟的(128,512(通道),7(outh),7(outw)) 大小的用来储存结果的地址// spatial_scale:原图和feature map的比例 这里是1/16 ,const int channels:512,const int height: feature map H, const int width:feature map W, const int pooled_height: pooling后H 论文是7, const int pooled_width pooling后W 论文是7, const int NN:值数为128*512*7*7 防止寻址越界int idx = blockIdx.x * blockDim.x + threadIdx.x; //cuda一维最常用寻址 能包含(128,512,7,7)大小的所有线程idif(idx>=NN) //超出rois数据范围 返回return;//由于我们要pooling成7x7的,每一次遍历就是找出7*7=49中 1*1的区域内最大值进行最大池化操作 所以每49个线程 进行1个roi的1个通道的池化 每7*7*512个线程pooling一个roi 共128*512*7*7个线程 记录这些池化值const int pw = idx % pooled_width; const int ph = (idx / pooled_width) % pooled_height;const int c = (idx / pooled_width / pooled_height) % channels; //当前通道int num = idx / pooled_width / pooled_height / channels; //当前rois个数const int roi_batch_ind = bottom_rois[num * 5 + 0]; //图像标签const int roi_start_w = round(bottom_rois[num * 5 + 1] * spatial_scale); //xminconst int roi_start_h = round(bottom_rois[num * 5 + 2] * spatial_scale); //yminconst int roi_end_w = round(bottom_rois[num * 5 + 3] * spatial_scale); //xmaxconst int roi_end_h = round(bottom_rois[num * 5 + 4] * spatial_scale); //ymax// Force malformed ROIs to be 1x1const int roi_width = max(roi_end_w - roi_start_w + 1, 1); //witdh 至少为1const int roi_height = max(roi_end_h - roi_start_h + 1, 1); //height 至少为1const float bin_size_h = static_cast<float>(roi_height) // float: height/7/ static_cast<float>(pooled_height);const float bin_size_w = static_cast<float>(roi_width) // float: width/7/ static_cast<float>(pooled_width);int hstart = static_cast<int>(floor(static_cast<float>(ph) // height/7 * ph 向下取整* bin_size_h));int wstart = static_cast<int>(floor(static_cast<float>(pw) // width/7 * pw 向下取整* bin_size_w));int hend = static_cast<int>(ceil(static_cast<float>(ph + 1) //width/7 *(ph+1) 向下取整* bin_size_h));int wend = static_cast<int>(ceil(static_cast<float>(pw + 1) // width/7 * (pw+1) 向下取整* bin_size_w));// Add roi offsets and clip to input boundaries 保证不越界 hstart = min(max(hstart + roi_start_h, 0), height); hend = min(max(hend + roi_start_h, 0), height);wstart = min(max(wstart + roi_start_w, 0), width);wend = min(max(wend + roi_start_w, 0), width);bool is_empty = (hend <= hstart) || (wend <= wstart); //没有结果// 空roipooling记位0float maxval = is_empty ? 0 : -1E+37;// If nothing is pooled, argmax=-1 causes nothing to be backprop'dint maxidx = -1;const int data_offset = (roi_batch_ind * channels + c) * height * width; //roi对应的feature map for (int h = hstart; h < hend; ++h) { //遍历7x7的其中一个1x1区域找出最大值 进行最大池化操作for (int w = wstart; w < wend; ++w) {int bottom_index = h * width + w;if (bottom_data[data_offset + bottom_index] > maxval) {maxval = bottom_data[data_offset + bottom_index];maxidx = bottom_index;}}}top_data[idx]=maxval; //记录最大值和argmax_data[idx]=maxidx; //此最大值在feature map中的index}

faster_rcnn.py

from __future__ import absolute_import

from __future__ import division

import torch as t

import numpy as np

import cupy as cp

from utils import array_tool as at

from model.utils.bbox_tools import loc2bbox

from model.utils.nms import non_maximum_suppressionfrom torch import nn

from data.dataset import preprocess

from torch.nn import functional as F

from utils.config import optdef nograd(f): //取消所有tensor的梯度 预测时加快程序运行def new_f(*args,**kwargs):with t.no_grad():return f(*args,**kwargs)return new_fclass FasterRCNN(nn.Module):def __init__(self, extractor, rpn, head,loc_normalize_mean = (0., 0., 0., 0.),loc_normalize_std = (0.1, 0.1, 0.2, 0.2)):super(FasterRCNN, self).__init__()self.extractor = extractorself.rpn = rpnself.head = head# mean and stdself.loc_normalize_mean = loc_normalize_meanself.loc_normalize_std = loc_normalize_stdself.use_preset('evaluate')@property //装饰器 get方法def n_class(self):# Total number of classes including the background.return self.head.n_classdef forward(self, x, scale=1.): //向前过程img_size = x.shape[2:] //h,wh = self.extractor(x) //feature maprpn_locs, rpn_scores, rois, roi_indices, anchor = \ //送入rpn self.rpn(h, img_size, scale)roi_cls_locs, roi_scores = self.head( //送入 roi_headh, rois, roi_indices)return roi_cls_locs, roi_scores, rois, roi_indicesdef use_preset(self, preset)://在预测可视化的时候会对score阀值要求高一点 显示效果更好if preset == 'visualize':self.nms_thresh = 0.3self.score_thresh = 0.7//在进行模型评估的时候会将score阀值置的很低elif preset == 'evaluate':self.nms_thresh = 0.3self.score_thresh = 0.05else:raise ValueError('preset must be visualize or evaluate')def _suppress(self, raw_cls_bbox, raw_prob): //由predict调用 预测时对每类候选框进行阀值和Nms操作bbox = list()label = list()score = list()// 从1开始 0是背景 for l in range(1, self.n_class): //遍历20个前景cls_bbox_l = raw_cls_bbox.reshape((-1, self.n_class, 4))[:, l, :] //取出第L类的boxprob_l = raw_prob[:, l] //取出第L类的probmask = prob_l > self.score_thresh //大于阀值cls_bbox_l = cls_bbox_l[mask] //保留大于阀值的boxprob_l = prob_l[mask] //保留大于阀值的probkeep = non_maximum_suppression( //nmscp.array(cls_bbox_l), self.nms_thresh, prob_l)keep = cp.asnumpy(keep) //经过nms后保留的indexbbox.append(cls_bbox_l[keep]) //加入预测框# The labels are in [0, self.n_class - 2].label.append((l - 1) * np.ones((len(keep),))) //加入labelscore.append(prob_l[keep]) //加入probbbox = np.concatenate(bbox, axis=0).astype(np.float32)label = np.concatenate(label, axis=0).astype(np.int32)score = np.concatenate(score, axis=0).astype(np.float32)return bbox, label, score//预测函数,预测图片的框和分数,标签并返回 取消变量梯度,加快计算@nograd //装饰器 取消梯度def predict(self, imgs,sizes=None,visualize=False):self.eval() //取消BN和dropoutif visualize:self.use_preset('visualize')prepared_imgs = list()sizes = list()for img in imgs:size = img.shape[1:]img = preprocess(at.tonumpy(img)) //预处理prepared_imgs.append(img)sizes.append(size)else:prepared_imgs = imgs bboxes = list()labels = list()scores = list()for img, size in zip(prepared_imgs, sizes): //遍历我们要预测的每张图片img = at.totensor(img[None]).float() #1 C H Wscale = img.shape[3] / size[1] roi_cls_loc, roi_scores, rois, _ = self(img, scale=scale) //进入forward 向前计算roi_score = roi_scores.dataroi_cls_loc = roi_cls_loc.dataroi = at.totensor(rois) / scale //对应resize前真实图片的roimean = t.Tensor(self.loc_normalize_mean).cuda(). \ //均值repeat n_classrepeat(self.n_class)[None]std = t.Tensor(self.loc_normalize_std).cuda(). \ //标准差repeat n_classrepeat(self.n_class)[None]roi_cls_loc = (roi_cls_loc * std + mean) //还原roi_cls_loc shape:(R,84)roi_cls_loc = roi_cls_loc.view(-1, self.n_class, 4) //shape(R,21,4)roi = roi.view(-1, 1, 4).expand_as(roi_cls_loc) //roi(R,4) to (R,21,4)cls_bbox = loc2bbox(at.tonumpy(roi).reshape((-1, 4)), //修正roi //shape(21R,4)at.tonumpy(roi_cls_loc).reshape((-1, 4)))cls_bbox = at.totensor(cls_bbox) cls_bbox = cls_bbox.view(-1, self.n_class * 4) //shape(R,84)# 防止越界 box 超出真实imgcls_bbox[:, 0::2] = (cls_bbox[:, 0::2]).clamp(min=0, max=size[0]) cls_bbox[:, 1::2] = (cls_bbox[:, 1::2]).clamp(min=0, max=size[1])prob = at.tonumpy(F.softmax(at.totensor(roi_score), dim=1)) //softmax scoreraw_cls_bbox = at.tonumpy(cls_bbox)raw_prob = at.tonumpy(prob)bbox, label, score = self._suppress(raw_cls_bbox, raw_prob) //对box按n_class进行阀值保留和Nms操作 得到真正预测后的预测框bboxes.append(bbox)labels.append(label)scores.append(score)self.use_preset('evaluate') self.train() //启用BN和dropoutreturn bboxes, labels, scoresdef get_optimizer(self)://返回optimizerlr = opt.lrparams = []for key, value in dict(self.named_parameters()).items():if value.requires_grad: //需要梯度if 'bias' in key: //有偏置的参数lr*2params += [{'params': [value], 'lr': lr * 2, 'weight_decay': 0}]else: //学习速率衰减params += [{'params': [value], 'lr': lr, 'weight_decay': opt.weight_decay}]if opt.use_adam: //Adam优化self.optimizer = t.optim.Adam(params)else: //SGDself.optimizer = t.optim.SGD(params, momentum=0.9)return self.optimizerdef scale_lr(self, decay=0.1): //学习速率衰减for param_group in self.optimizer.param_groups:param_group['lr'] *= decayreturn self.optimizer

faster_rcnn.py

faster_rcnn_vgg16.py

from __future__ import absolute_import

import torch as t

from torch import nn

from torchvision.models import vgg16

from model.region_proposal_network import RegionProposalNetwork

from model.faster_rcnn import FasterRCNN

from model.roi_module import RoIPooling2D

from utils import array_tool as at

from utils.config import optdef decom_vgg16():if opt.caffe_pretrain: //加载caffepretrain参数model = vgg16(pretrained=False)if not opt.load_path:model.load_state_dict(t.load(opt.caffe_pretrain_path))else:model = vgg16(not opt.load_path) //加载pytorch pretrain参数features = list(model.features)[:30] //除去最后最大池化classifier = model.classifier //Linear ReLu Dropoutclassifier = list(classifier)del classifier[6] //删除最后一个全连接层if not opt.use_drop: //不使用dropout 删除两个dropout层del classifier[5]del classifier[2]classifier = nn.Sequential(*classifier) //新的classifier# 冻结前四个卷积层的参数 加快训练速度 不需要求梯度不用BP过程for layer in features[:10]: for p in layer.parameters():p.requires_grad = Falsereturn nn.Sequential(*features), classifierclass FasterRCNNVGG16(FasterRCNN):def __init__(self,n_fg_class=20,ratios=[0.5, 1, 2],anchor_scales=[8, 16, 32]):extractor, classifier = decom_vgg16()rpn = RegionProposalNetwork(512, 512,ratios=ratios,anchor_scales=anchor_scales,feat_stride=self.feat_stride,)head = VGG16RoIHead(n_class=n_fg_class + 1,roi_size=7,spatial_scale=(1. / self.feat_stride),classifier=classifier)super(FasterRCNNVGG16, self).__init__(extractor,rpn,head,)class VGG16RoIHead(nn.Module):def __init__(self, n_class, roi_size, spatial_scale,classifier):# n_class includes the backgroundsuper(VGG16RoIHead, self).__init__()self.classifier = classifierself.cls_loc = nn.Linear(4096, n_class * 4)self.score = nn.Linear(4096, n_class)normal_init(self.cls_loc, 0, 0.001)normal_init(self.score, 0, 0.01)self.n_class = n_classself.roi_size = roi_sizeself.spatial_scale = spatial_scaleself.roi = RoIPooling2D(self.roi_size, self.roi_size, self.spatial_scale)def forward(self, x, rois, roi_indices):roi_indices = at.totensor(roi_indices).float() //shape(128,)rois = at.totensor(rois).float() //shape(128,4)indices_and_rois = t.cat([roi_indices[:, None], rois], dim=1) //shape(128,5)xy_indices_and_rois = indices_and_rois[:, [0, 2, 1, 4, 3]] /index ymin xmin ymax xmax TO index xmin ymin xmax ymaxindices_and_rois = xy_indices_and_rois.contiguous() //返回连续内存的数据 以便放入cudapool = self.roi(x, indices_and_rois) //roipooling return:(128,512,7,7)pool = pool.view(pool.size(0), -1) //(128,25088) 正好与VGG16的全连接层连接fc7 = self.classifier(pool) roi_cls_locs = self.cls_loc(fc7) roi_scores = self.score(fc7) return roi_cls_locs, roi_scoresdef normal_init(m, mean, stddev, truncated=False): //正态分布初始化参数if truncated: //截断高斯m.weight.data.normal_().fmod_(2).mul_(stddev).add_(mean) # not a perfect approximationelse: //普通高斯m.weight.data.normal_(mean, stddev)m.bias.data.zero_()region_proposal_network.py

import numpy as np

from torch.nn import functional as F

import torch as t

from torch import nnfrom model.utils.bbox_tools import generate_anchor_base

from model.utils.creator_tool import ProposalCreatorclass RegionProposalNetwork(nn.Module):def __init__(self, in_channels=512, mid_channels=512, ratios=[0.5, 1, 2],anchor_scales=[8, 16, 32], feat_stride=16,proposal_creator_params=dict(),):super(RegionProposalNetwork, self).__init__()self.anchor_base = generate_anchor_base( anchor_scales=anchor_scales, ratios=ratios)self.feat_stride = feat_strideself.proposal_layer = ProposalCreator(self, **proposal_creator_params)n_anchor = self.anchor_base.shape[0]self.conv1 = nn.Conv2d(in_channels, mid_channels, 3, 1, 1)self.score = nn.Conv2d(mid_channels, n_anchor * 2, 1, 1, 0)self.loc = nn.Conv2d(mid_channels, n_anchor * 4, 1, 1, 0)normal_init(self.conv1, 0, 0.01)normal_init(self.score, 0, 0.01)normal_init(self.loc, 0, 0.01)def forward(self, x, img_size, scale=1.):n, _, hh, ww = x.shape //feature mapanchor = _enumerate_shifted_anchor( //利用base_anchor 在featuremap上滑动 生成所有的9*hh*ww的Anchornp.array(self.anchor_base),self.feat_stride, hh, ww)n_anchor = anchor.shape[0] // (hh * ww) //1个pix有多少个anchor=9h = F.relu(self.conv1(x)) //第一个卷积层+relurpn_locs = self.loc(h) //四个位置的cls shape(1, A:36,H,W) HW:feature_map A:4*9(1个像素9anchor)rpn_locs = rpn_locs.permute(0, 2, 3, 1).contiguous().view(n, -1, 4) //(1, A:36,H,W,) to (1,H,W,A) to (1,HW9,4)rpn_scores = self.score(h) // 卷积结果(1,2*9,H,W)rpn_scores = rpn_scores.permute(0, 2, 3, 1).contiguous() //(1,H,W,2*9)rpn_softmax_scores = F.softmax(rpn_scores.view(n, hh, ww, n_anchor, 2), dim=4) //(1,H,W,9,2)按二分类进行softmaxrpn_fg_scores = rpn_softmax_scores[:, :, :, :, 1].contiguous() //前景分数rpn_fg_scores = rpn_fg_scores.view(n, -1)rpn_scores = rpn_scores.view(n, -1, 2) //rpn分数rois = list()roi_indices = list()for i in range(n): //batchsize=n=1roi = self.proposal_layer( //调用Proposal Creator 得到roirpn_locs[i].cpu().data.numpy(),rpn_fg_scores[i].cpu().data.numpy(),anchor, img_size,scale=scale)batch_index = i * np.ones((len(roi),), dtype=np.int32) //这里是标注roi对于batch的索引 batch=1 所以没有作用rois.append(roi)roi_indices.append(batch_index)rois = np.concatenate(rois, axis=0)roi_indices = np.concatenate(roi_indices, axis=0)return rpn_locs, rpn_scores, rois, roi_indices, anchordef _enumerate_shifted_anchor(anchor_base, feat_stride, height, width):import numpy as xpshift_y = xp.arange(0, height * feat_stride, feat_stride) //因为down sample了16倍 在featuremap移动一个相当于原图移动16shift_x = xp.arange(0, width * feat_stride, feat_stride) //同理shift_x, shift_y = xp.meshgrid(shift_x, shift_y) //生成网格点坐标矩阵 如果你用matlab那么这函数应该挺熟悉,就是生成shift_x与shift_y组成的矩形中所有的点(含边缘) 点间隔feat_stride=16 结果的shift_x y按位置组成一个坐标 shift = xp.stack((shift_y.ravel(), shift_x.ravel(), //将y,x平铺后组合,生成shape(K,4) = (K,(y,x)(y,x))的shift shift_y.ravel(), shift_x.ravel()), axis=1)A = anchor_base.shape[0] //1个点对应A(9)个anchorK = shift.shape[0] //共K个点anchor = anchor_base.reshape((1, A, 4)) + \ //anchor=(1,A,4) + (K,1,4) 根据广播将进行K和A的全排列 生成(K,A,4)所有锚shift.reshape((1, K, 4)).transpose((1, 0, 2))anchor = anchor.reshape((K * A, 4)).astype(np.float32)return anchordef _enumerate_shifted_anchor_torch(anchor_base, feat_stride, height, width)://pytorch版本def normal_init(m, mean, stddev, truncated=False): //正态初始化参数if truncated:m.weight.data.normal_().fmod_(2).mul_(stddev).add_(mean) else:m.weight.data.normal_(mean, stddev)m.bias.data.zero_()roi_module.py

from collections import namedtuple

from string import Template

import cupy, torch

import cupy as cp

import torch as t

from torch.autograd import Function

from model.utils.roi_cupy import kernel_backward, kernel_forwardStream = namedtuple('Stream', ['ptr']) @cupy.util.memoize(for_each_device=True) //nms中见过,加载code

def load_kernel(kernel_name, code, **kwargs):cp.cuda.runtime.free(0)code = Template(code).substitute(**kwargs)kernel_code = cupy.cuda.compile_with_cache(code)return kernel_code.get_function(kernel_name)CUDA_NUM_THREADS = 1024def GET_BLOCKS(N, K=CUDA_NUM_THREADS): //全覆盖计算 向上取整除return (N + K - 1) // Kclass RoI(Function):def __init__(self, outh, outw, spatial_scale):self.forward_fn = load_kernel('roi_forward', kernel_forward)self.backward_fn = load_kernel('roi_backward', kernel_backward)self.outh, self.outw, self.spatial_scale = outh, outw, spatial_scaledef forward(self, x, rois): //向前传播过程x = x.contiguous() //连续内存rois = rois.contiguous() //连续内存self.in_size = B, C, H, W = x.size() //featuremapself.N = N = rois.size(0)output = t.zeros(N, C, self.outh, self.outw).cuda() //储存roipooling结果self.argmax_data = t.zeros(N, C, self.outh, self.outw).int().cuda() //储存maxdata在featuremap中的索引结果self.rois = roisargs = [x.data_ptr(), rois.data_ptr(),output.data_ptr(),self.argmax_data.data_ptr(),self.spatial_scale, C, H, W,self.outh, self.outw,output.numel()]stream = Stream(ptr=torch.cuda.current_stream().cuda_stream)self.forward_fn(args=args,block=(CUDA_NUM_THREADS, 1, 1),grid=(GET_BLOCKS(output.numel()), 1, 1),stream=stream)return outputdef backward(self, grad_output): //反向传播过程 为了验证cuda code正确性grad_output = grad_output.contiguous()B, C, H, W = self.in_sizegrad_input = t.zeros(self.in_size).cuda()stream = Stream(ptr=torch.cuda.current_stream().cuda_stream)args = [grad_output.data_ptr(),self.argmax_data.data_ptr(),self.rois.data_ptr(),grad_input.data_ptr(),self.N, self.spatial_scale, C, H, W, self.outh, self.outw,grad_input.numel()]self.backward_fn(args=args,block=(CUDA_NUM_THREADS, 1, 1),grid=(GET_BLOCKS(grad_input.numel()), 1, 1),stream=stream)return grad_input, Noneclass RoIPooling2D(t.nn.Module):def __init__(self, outh, outw, spatial_scale):super(RoIPooling2D, self).__init__()self.RoI = RoI(outh, outw, spatial_scale)def forward(self, x, rois):return self.RoI(x, rois)def test_roi_module(): //测试写的roiB, N, C, H, W, PH, PW = 2, 8, 4, 32, 32, 7, 7 //生成测试数据bottom_data = t.randn(B, C, H, W).cuda()bottom_rois = t.randn(N, 5)bottom_rois[:int(N / 2), 0] = 0bottom_rois[int(N / 2):, 0] = 1bottom_rois[:, 1:] = (t.rand(N, 4) * 100).float()bottom_rois = bottom_rois.cuda()spatial_scale = 1. / 16outh, outw = PH, PW# pytorch versionmodule = RoIPooling2D(outh, outw, spatial_scale) x = bottom_data.requires_grad_() rois = bottom_rois.detach()output = module(x, rois)output.sum().backward() def t2c(variable): //tensor to cupynpa = variable.data.cpu().numpy()return cp.array(npa)def test_eq(variable, array, info): //判断结果是否相等cc = cp.asnumpy(array)neq = (cc != variable.data.cpu().numpy())assert neq.sum() == 0, 'test failed: %s' % info# chainer version,if you're going to run this# pip install chainer import chainer.functions as Ffrom chainer import Variablex_cn = Variable(t2c(x)) //pytorch to cupy to chainero_cn = F.roi_pooling_2d(x_cn, t2c(rois), outh, outw, spatial_scale) //chainer roi_poolingtest_eq(output, o_cn.array, 'forward')F.sum(o_cn).backward() test_eq(x.grad, x_cn.grad, 'backward')print('test pass') //测试成功trainer_.py

from __future__ import absolute_import

import os

from collections import namedtuple

import time

from torch.nn import functional as F

from model.utils.creator_tool import AnchorTargetCreator, ProposalTargetCreatorfrom torch import nn

import torch as t

from utils import array_tool as at

from utils.vis_tool import Visualizerfrom utils.config import opt

from torchnet.meter import ConfusionMeter, AverageValueMeterLossTuple = namedtuple('LossTuple', //看名字很好理解,作用就是储存这几个loss['rpn_loc_loss','rpn_cls_loss','roi_loc_loss','roi_cls_loss','total_loss'])class FasterRCNNTrainer(nn.Module):def __init__(self, faster_rcnn):super(FasterRCNNTrainer, self).__init__()self.faster_rcnn = faster_rcnnself.rpn_sigma = opt.rpn_sigmaself.roi_sigma = opt.roi_sigmaself.anchor_target_creator = AnchorTargetCreator()self.proposal_target_creator = ProposalTargetCreator()self.loc_normalize_mean = faster_rcnn.loc_normalize_meanself.loc_normalize_std = faster_rcnn.loc_normalize_stdself.optimizer = self.faster_rcnn.get_optimizer()self.rpn_cm = ConfusionMeter(2) //前后景误差矩阵 小彩蛋:这个方法的源码...classification拼错了self.roi_cm = ConfusionMeter(21) //21分类误差矩阵self.meters = {k: AverageValueMeter() for k in LossTuple._fields} //字典解析,平均loss AverageValueMeter会自动求均值def forward(self, imgs, bboxes, labels, scale)://训练过程中的向前过程,是最大的forward类 读这个函数可以了解faster-r-cnn的过程n = bboxes.shape[0]if n != 1:raise ValueError('Currently only batch size 1 is supported.')_, _, H, W = imgs.shapeimg_size = (H, W)features = self.faster_rcnn.extractor(imgs) //生成featuremaprpn_locs, rpn_scores, rois, roi_indices, anchor = \ self.faster_rcnn.rpn(features, img_size, scale)# Since batch size is one, convert variables to singular formbbox = bboxes[0]label = labels[0]rpn_score = rpn_scores[0]rpn_loc = rpn_locs[0]roi = rois# Sample RoIs and forward# it's fine to break the computation graph of rois, # consider them as constant inputsample_roi, gt_roi_loc, gt_roi_label = self.proposal_target_creator(roi,at.tonumpy(bbox),at.tonumpy(label),self.loc_normalize_mean,self.loc_normalize_std)# NOTE it's all zero because now it only support for batch=1 nowsample_roi_index = t.zeros(len(sample_roi))roi_cls_loc, roi_score = self.faster_rcnn.head(features,sample_roi,sample_roi_index)# ------------------ RPN losses -------------------#gt_rpn_loc, gt_rpn_label = self.anchor_target_creator(at.tonumpy(bbox),anchor,img_size)gt_rpn_label = at.totensor(gt_rpn_label).long()gt_rpn_loc = at.totensor(gt_rpn_loc)rpn_loc_loss = _fast_rcnn_loc_loss( //rpn loc损失rpn_loc,gt_rpn_loc,gt_rpn_label.data,self.rpn_sigma)# NOTE: default value of ignore_index is -100 ...rpn_cls_loss = F.cross_entropy(rpn_score, gt_rpn_label.cuda(), ignore_index=-1) //交叉熵损失_gt_rpn_label = gt_rpn_label[gt_rpn_label > -1]_rpn_score = at.tonumpy(rpn_score)[at.tonumpy(gt_rpn_label) > -1]self.rpn_cm.add(at.totensor(_rpn_score, False), _gt_rpn_label.data.long())# ------------------ ROI losses (fast rcnn loss) -------------------#n_sample = roi_cls_loc.shape[0]roi_cls_loc = roi_cls_loc.view(n_sample, -1, 4)roi_loc = roi_cls_loc[t.arange(0, n_sample).long().cuda(), \at.totensor(gt_roi_label).long()]gt_roi_label = at.totensor(gt_roi_label).long()gt_roi_loc = at.totensor(gt_roi_loc)roi_loc_loss = _fast_rcnn_loc_loss( //roi loc损失roi_loc.contiguous(),gt_roi_loc,gt_roi_label.data,self.roi_sigma)roi_cls_loss = nn.CrossEntropyLoss()(roi_score, gt_roi_label.cuda()) //交叉熵损失self.roi_cm.add(at.totensor(roi_score, False), gt_roi_label.data.long())losses = [rpn_loc_loss, rpn_cls_loss, roi_loc_loss, roi_cls_loss]losses = losses + [sum(losses)]return LossTuple(*losses)def train_step(self, imgs, bboxes, labels, scale): //整个模型的一个step 从0梯度到向前过程,误差反向传播更新参数的过程self.optimizer.zero_grad() losses = self.forward(imgs, bboxes, labels, scale)losses.total_loss.backward()self.optimizer.step()self.update_meters(losses)return lossesdef save(self, save_optimizer=False, save_path=None, **kwargs): //保存模型参数和configsave_dict = dict()save_dict['model'] = self.faster_rcnn.state_dict()save_dict['config'] = opt._state_dict()save_dict['other_info'] = kwargsif save_optimizer: //保存优化器参数save_dict['optimizer'] = self.optimizer.state_dict()if save_path is None: //未指定保存地址timestr = time.strftime('%m%d%H%M') //保存在checkpoints/时间戳命名save_path = 'checkpoints/fasterrcnn_%s' % timestrfor k_, v_ in kwargs.items():save_path += '_%s' % v_save_dir = os.path.dirname(save_path)if not os.path.exists(save_dir):os.makedirs(save_dir)t.save(save_dict, save_path)def load(self, path, load_optimizer=True, parse_opt=False, ): //加载模型参数state_dict = t.load(path)if 'model' in state_dict:self.faster_rcnn.load_state_dict(state_dict['model'])else: # legacy way, for backward compatibilityself.faster_rcnn.load_state_dict(state_dict)return selfif parse_opt:opt._parse(state_dict['config'])if 'optimizer' in state_dict and load_optimizer:self.optimizer.load_state_dict(state_dict['optimizer'])return selfdef update_meters(self, losses): //更新average lossloss_d = {k: at.scalar(v) for k, v in losses._asdict().items()}for key, meter in self.meters.items():meter.add(loss_d[key])def reset_meters(self): //重置meters for key, meter in self.meters.items():meter.reset()self.roi_cm.reset()self.rpn_cm.reset()def get_meter_data(self): //返回meter中的值return {k: v.value()[0] for k, v in self.meters.items()}def _smooth_l1_loss(x, t, in_weight, sigma): //按照论文写得losssigma2 = sigma ** 2diff = in_weight * (x - t)abs_diff = diff.abs()flag = (abs_diff.data < (1. / sigma2)).float()y = (flag * (sigma2 / 2.) * (diff ** 2) +(1 - flag) * (abs_diff - 0.5 / sigma2))return y.sum()def _fast_rcnn_loc_loss(pred_loc, gt_loc, gt_label, sigma): //按照论文写得lossin_weight = t.zeros(gt_loc.shape).cuda()# Localization loss is calculated only for positive rois.# NOTE: unlike origin implementation, # we don't need inside_weight and outside_weight, they can calculate by gt_labelin_weight[(gt_label > 0).view(-1, 1).expand_as(in_weight).cuda()] = 1loc_loss = _smooth_l1_loss(pred_loc, gt_loc, in_weight.detach(), sigma)# Normalize by total number of negtive and positive rois.loc_loss /= ((gt_label >= 0).sum().float()) # ignore gt_label==-1 for rpn_lossreturn loc_losstrain_.py

训练类 这里是程序入口

from __future__ import absolute_import

# though cupy is not used but without this line, it raise errors...

import cupy as cp

import osimport ipdb

import matplotlib

from tqdm import tqdmfrom utils.config import opt

from data.dataset import Dataset, TestDataset, inverse_normalize

from model import FasterRCNNVGG16

from torch.utils import data as data_

from trainer import FasterRCNNTrainer

from utils import array_tool as at

from utils.vis_tool import visdom_bbox

from utils.eval_tool import eval_detection_voc

import resource

rlimit = resource.getrlimit(resource.RLIMIT_NOFILE)

resource.setrlimit(resource.RLIMIT_NOFILE, (20480, rlimit[1]))

//resource is a Unix specific package which is why it worked for Linux, but raised an error when trying to use it in Windows.matplotlib.use('agg')def eval(dataloader, faster_rcnn, test_num=10000): //从testdata中 选择10000个进行 ap map计算pred_bboxes, pred_labels, pred_scores = list(), list(), list()gt_bboxes, gt_labels, gt_difficults = list(), list(), list()for ii, (imgs, sizes, gt_bboxes_, gt_labels_, gt_difficults_) in tqdm(enumerate(dataloader)):sizes = [sizes[0][0].item(), sizes[1][0].item()]pred_bboxes_, pred_labels_, pred_scores_ = faster_rcnn.predict(imgs, [sizes])gt_bboxes += list(gt_bboxes_.numpy())gt_labels += list(gt_labels_.numpy())gt_difficults += list(gt_difficults_.numpy())pred_bboxes += pred_bboxes_pred_labels += pred_labels_pred_scores += pred_scores_if ii == test_num: breakresult = eval_detection_voc(pred_bboxes, pred_labels, pred_scores,gt_bboxes, gt_labels, gt_difficults,use_07_metric=True)return resultdef train(**kwargs):opt._parse(kwargs)dataset = Dataset(opt)print('load data')dataloader = data_.DataLoader(dataset, \batch_size=1, \shuffle=True, \# pin_memory=True,num_workers=opt.num_workers)testset = TestDataset(opt)test_dataloader = data_.DataLoader(testset,batch_size=1,num_workers=opt.test_num_workers,shuffle=False, \pin_memory=True)faster_rcnn = FasterRCNNVGG16()print('model construct completed')trainer = FasterRCNNTrainer(faster_rcnn).cuda()if opt.load_path: //加载模型继续训练 或predicttrainer.load(opt.load_path)print('load pretrained model from %s' % opt.load_path)trainer.vis.text(dataset.db.label_names, win='labels')best_map = 0lr_ = opt.lr for epoch in range(opt.epoch):trainer.reset_meters() //每个epoch将meters重置for ii, (img, bbox_, label_, scale) in tqdm(enumerate(dataloader)):scale = at.scalar(scale)img, bbox, label = img.cuda().float(), bbox_.cuda(), label_.cuda()trainer.train_step(img, bbox, label, scale)if (ii + 1) % opt.plot_every == 0: //训练每多少个img 打印一次结果if os.path.exists(opt.debug_file):ipdb.set_trace()# plot losstrainer.vis.plot_many(trainer.get_meter_data()) //plot所有av_loss# plot groud truth bboxesori_img_ = inverse_normalize(at.tonumpy(img[0]))gt_img = visdom_bbox(ori_img_,at.tonumpy(bbox_[0]),at.tonumpy(label_[0]))trainer.vis.img('gt_img', gt_img)# plot predicti bboxes_bboxes, _labels, _scores = trainer.faster_rcnn.predict([ori_img_], visualize=True)pred_img = visdom_bbox(ori_img_,at.tonumpy(_bboxes[0]),at.tonumpy(_labels[0]).reshape(-1),at.tonumpy(_scores[0]))trainer.vis.img('pred_img', pred_img)# rpn confusion matrix(meter)trainer.vis.text(str(trainer.rpn_cm.value().tolist()), win='rpn_cm')# roi confusion matrixtrainer.vis.img('roi_cm', at.totensor(trainer.roi_cm.conf, False).float())eval_result = eval(test_dataloader, faster_rcnn, test_num=opt.test_num)trainer.vis.plot('test_map', eval_result['map'])lr_ = trainer.faster_rcnn.optimizer.param_groups[0]['lr']log_info = 'lr:{}, map:{},loss:{}'.format(str(lr_),str(eval_result['map']),str(trainer.get_meter_data()))trainer.vis.log(log_info)if eval_result['map'] > best_map: //记录best_mapbest_map = eval_result['map']best_path = trainer.save(best_map=best_map)if epoch == 9: //加载best_map继续训练trainer.load(best_path)trainer.faster_rcnn.scale_lr(opt.lr_decay)lr_ = lr_ * opt.lr_decayif epoch == 13: breakif __name__ == '__main__':import firefire.Fire() //入口 方便运行调试各个函数有些地方解释的不合理或者不正确请自行判断,结合原作者的注释学习效率更佳。

整个模型的过程就是:batch=1

读取数据gt_boxl label img difficult只在eval的时候会用于计算ap和map img 要经过resize和标准化 flip增强 对应box也要做同等操作. img被送入卷积生成feature map down sampling了16倍。然后我们将feature resize后的imgshape resize scale送入rpn 按照论文在feature map上进行滑动生成anchor 将featuremap送入卷积网络 得到每个anchor的loc(4) 和score(前后景) 根据score 我们选取前景概率大的6000个anchor 将这6000个anchor的位置用loc修正 送入Nms后 选择300个成为ROIS

然后将300个ROIS 与GT_BOX 进行IOU计算 如果某个roi和(对应IOU最大的gt_box)的IOU大于某个阀值 我们将其设定为正样本 他的label也就置为gt_box的label+1 如果小于某阀值 认定为背景 label=0。共选取正负样本共128个 计算这128个roi和对应gt_box(IOU最大)的loc(只计算正样本) 这时我们得到了128个roi roi的label和 128个roi的loc

然后我们将得到的128个roi和feature送入ROIhead 根据featuremap(512) 将roi roi_pooling到7x7 得到7x7x128x512的结果 平铺送入vgg16的全连接层 (巧妙利用权值共享) 得到这张图片 每个类的cls修正 和属于每个类的score

另外我们根据anchor和gt_bbox 的IOU 随机选择>0.7的设置为正样本 <0.3的为负样本 共256个anchor 计算256个anchor和对应gt_box(IOU最大)的 loc (只计算正样本) 返回256个anchor在所有anchor中的位置 和对应loc label

按论文公式进行loss计算 反向传播更新参数

predict预测的时候选出300个ROIS时,将ROIS全部送入ROIhead 得到这300个rois的每个类的cls修正和每个类的score

利用300个rois 和每个类cls修正 进行广播 得到300个每个类的修正后的rois 利用score和rois 进行每个类的nms操作 选出候选框 即我们预测的候选框 score经过softmax后就是这个框的prob 接着就是可视化的过程了