Original post: tf hub bigGan cat-to-dog


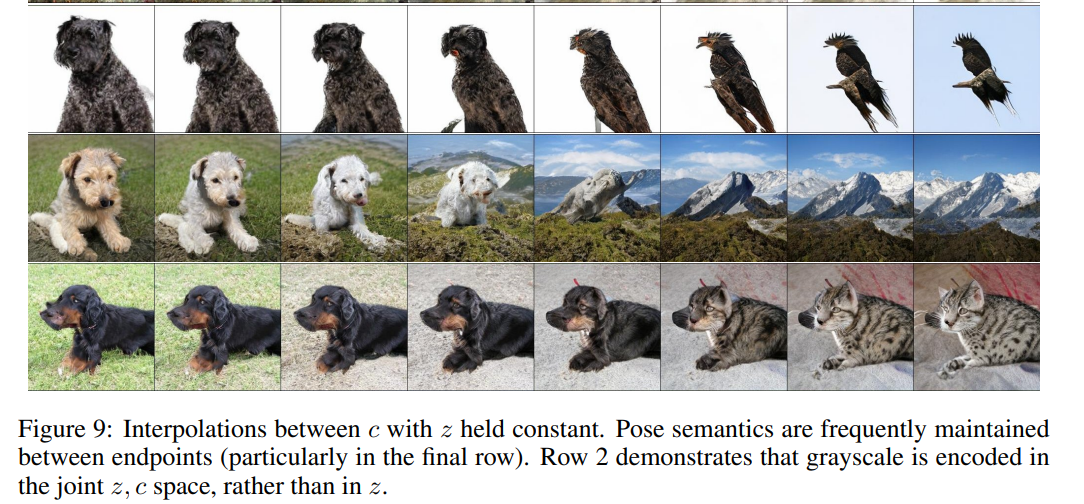

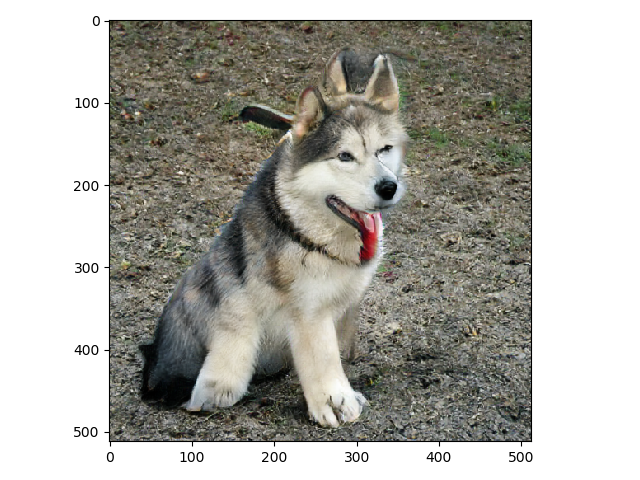

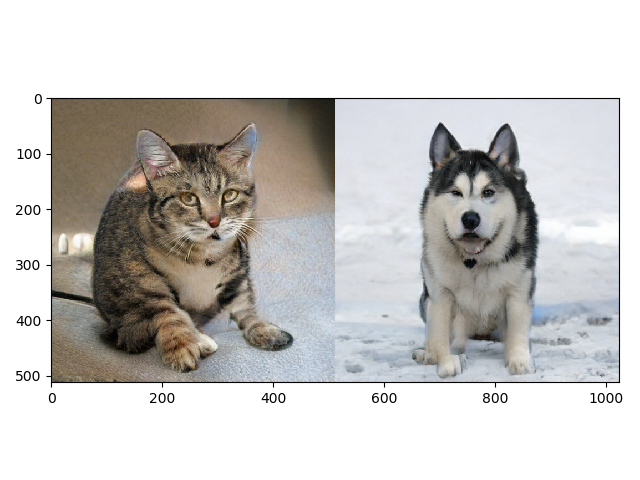

BigGAN generates an image of a chosen class from an input label and a noise vector, similar to InfoGAN.
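Here is a minimal NumPy sketch of what the module is fed, assuming the shapes used by the scripts in this post: a one-hot ImageNet label `y` of length 1000, a 128-dimensional noise vector `z`, and a scalar `truncation`. The helper name `make_biggan_inputs` is hypothetical. It only builds the arrays and does not call TF Hub.

```python
import numpy as np

NUM_CLASSES = 1000  # ImageNet classes, as in this post's scripts
Z_DIM = 128  # noise dimension used with biggan-512 in this post


def make_biggan_inputs(class_ids, truncation=0.5, seed=0):
    """Hypothetical helper: build the y/z/truncation dict for a batch."""
    rng = np.random.default_rng(seed)
    class_ids = np.asarray(class_ids)
    n = len(class_ids)
    # One-hot labels: row k has a single 1.0 at column class_ids[k]
    y = np.zeros((n, NUM_CLASSES), dtype=np.float32)
    y[np.arange(n), class_ids] = 1.0
    # Scaled Gaussian noise, mirroring `truncation * np.random.normal(...)`
    z = (truncation * rng.standard_normal((n, Z_DIM))).astype(np.float32)
    return dict(y=y, z=z, truncation=truncation)


inputs = make_biggan_inputs([281, 248])  # 281: cat, 248: dog
print(inputs["y"].shape, inputs["z"].shape)  # (2, 1000) (2, 128)
print(inputs["y"].argmax(axis=1))  # [281 248]
```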

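At each step the input moves a small distance toward the target, and the intermediate images are recorded to show how the output changes along the way.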
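This stepping is plain linear interpolation. With `step` increments, frame `i` uses `start + i * (end - start) / step` for both the label and the noise, which is how `in_y_val` and `in_z_val` are computed in the script. The following minimal NumPy sketch uses a hypothetical function name, `lerp_frames`. It shows that the label stops being one-hot partway through: halfway, it puts weight 0.5 on the cat class and 0.5 on the dog class.

```python
import numpy as np


def lerp_frames(start, end, step):
    """Return step + 1 points from start to end in equal increments."""
    delta = (end - start) / step
    return [start + i * delta for i in range(step + 1)]


start_y = np.zeros(1000)
start_y[281] = 1.0  # cat
end_y = np.zeros(1000)
end_y[248] = 1.0  # dog

frames = lerp_frames(start_y, end_y, step=200)
mid = frames[100]
print(len(frames))  # 201
print(mid[281], mid[248])  # 0.5 0.5
```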

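```python
import tensorflow as tf  # TF 1.x API (tf.Session, tf.placeholder, hub.Module)
import numpy as np
import tensorflow_hub as hub
import matplotlib.pyplot as plt
import skvideo

# skvideo must know where ffmpeg lives; set this BEFORE importing skvideo.io
FFmpeg_path = r"C:\Users\Ace\Downloads\ffmpeg-20190227-85051fe-win64-static\bin"
skvideo.setFFmpegPath(FFmpeg_path)
import skvideo.io

out_path = './cat2dog.mp4'

# start_index = 949  # strawberry
# aim_index = 953  # pineapple
start_index = 281  # cat (ImageNet class 281: tabby cat)
aim_index = 248  # dog (ImageNet class 248: Eskimo dog)

# One-hot label vectors of shape [1, 1000]
start_y = tf.keras.utils.to_categorical([start_index], 1000)
end_y = tf.keras.utils.to_categorical([aim_index], 1000)
```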

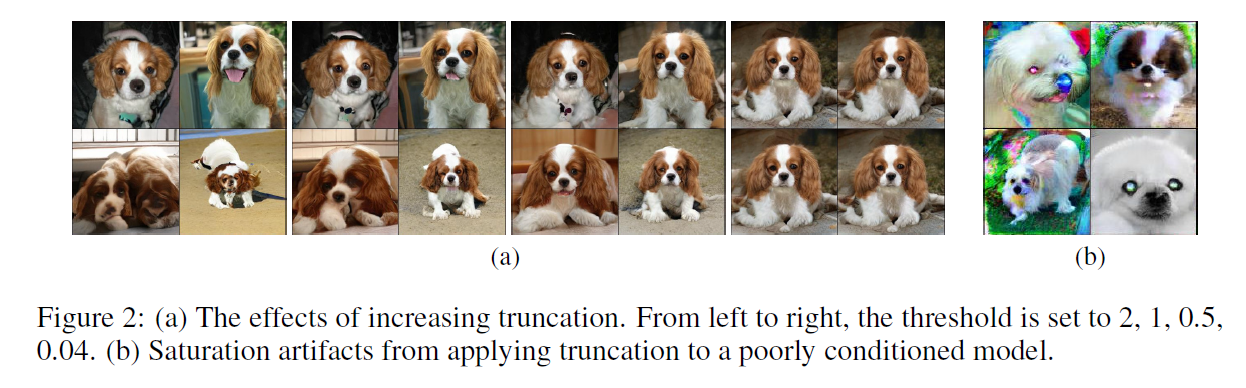

```python
truncation = 0.5  # scalar truncation value in [0.02, 1.0]
start_z = truncation * np.random.normal(size=[1, 128])  # noise sample
end_z = truncation * np.random.normal(size=[1, 128])  # noise sample

writer = skvideo.io.FFmpegWriter(out_path)
with tf.Session() as sess:
    # Alternative: sample z with TF's truncated normal instead of np.random.normal
    # start_z = sess.run(truncation * tf.random.truncated_normal([1, 128]))
    # end_z = sess.run(truncation * tf.random.truncated_normal([1, 128]))
    step = 200
    # Per-step increments for the label and the noise
    dy = (end_y - start_y) / step
    dz = (end_z - start_z) / step

    module = hub.Module('https://tfhub.dev/deepmind/biggan-512/2')
    in_y = tf.placeholder(tf.float32, [None, 1000])
    in_z = tf.placeholder(tf.float32, [None, 128])
    samples = module(dict(y=in_y, z=in_z, truncation=truncation))
    sess.run(tf.global_variables_initializer())

    for i in range(step + 1):
        # Move one small step from (start_y, start_z) toward (end_y, end_z)
        in_y_val = start_y + i * dy
        in_z_val = start_z + i * dz
        out_image = sess.run(samples, {in_y: in_y_val, in_z: in_z_val})
        # print(np.max(out_image), np.min(out_image))
        # Generator output is in [-1, 1]; rescale to uint8 [0, 255]
        out_image = (out_image + 1) * 127.5
        out_image = np.clip(out_image, 0, 255).astype(np.uint8)
        out_image = np.concatenate(out_image, axis=1)  # [1, H, W, 3] -> [H, W, 3]
        plt.imshow(out_image)
        plt.show()  # blocks until the window is closed; remove to render unattended
        writer.writeFrame(out_image)

writer.close()
```
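The last few lines of the loop turn the generator output into a video frame. BigGAN returns floats in [-1, 1] with shape [N, 512, 512, 3]. The expression `(x + 1) * 127.5` maps that range to [0, 255], and `np.clip` guards against slight overshoot. `np.concatenate(..., axis=1)` then places the N images side by side. The sketch below repeats those steps on a small random batch so you can check the shapes without downloading the module. The helper name `to_uint8_strip` and the 4×4 image size are illustrative assumptions.

```python
import numpy as np


def to_uint8_strip(batch):
    """Map a [N, H, W, 3] float batch in [-1, 1] to one uint8 [H, N*W, 3] image."""
    img = (batch + 1) * 127.5  # [-1, 1] -> [0, 255]
    img = np.clip(img, 0, 255).astype(np.uint8)
    # Iterating over the batch axis and joining along axis 1 (width) puts images side by side
    return np.concatenate(img, axis=1)


rng = np.random.default_rng(0)
fake = rng.uniform(-1.05, 1.05, size=(2, 4, 4, 3))  # slight overshoot on purpose
strip = to_uint8_strip(fake)
print(strip.shape, strip.dtype)  # (4, 8, 3) uint8
```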

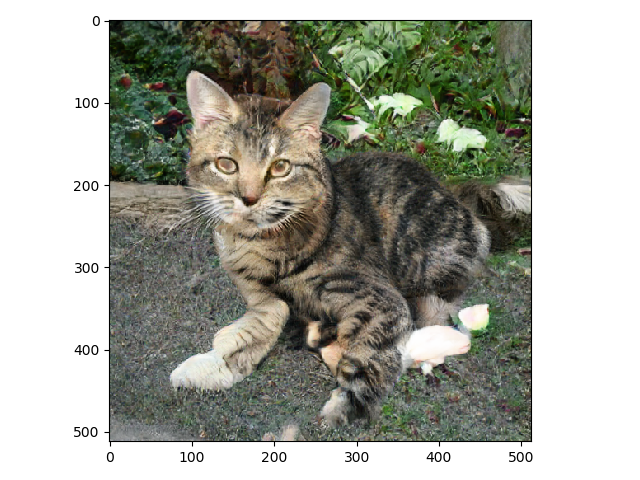

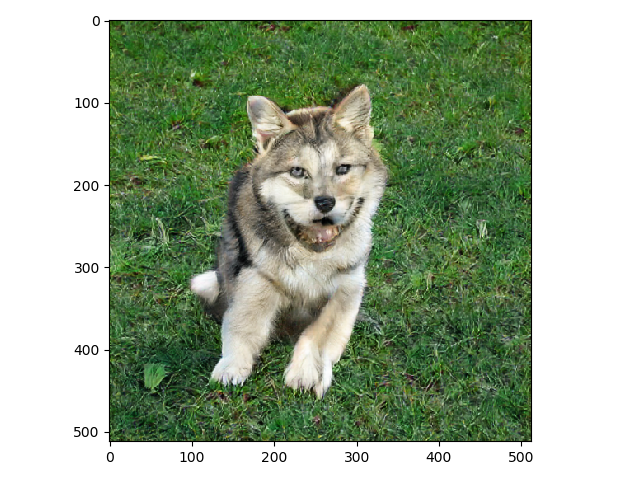

The official example, modified to generate a cat and a dog:

```python
import tensorflow as tf  # TF 1.x API
import tensorflow_hub as hub
import numpy as np
import matplotlib.pyplot as plt

# Load BigGAN 512 module.
module = hub.Module('https://tfhub.dev/deepmind/biggan-512/2')

# Sample random noise (z) and ImageNet label (y) inputs.
batch_size = 2
truncation = 0.5  # scalar truncation value in [0.02, 1.0]
z = truncation * tf.random.truncated_normal([batch_size, 128])  # noise sample
# y_index = tf.random.uniform([batch_size], maxval=1000, dtype=tf.int32)
y_index = tf.constant([281, 248], dtype=tf.int32)  # 281: cat, 248: dog
y = tf.one_hot(y_index, 1000)  # one-hot ImageNet label

# Call BigGAN on a dict of the inputs to generate a batch of images with shape
# [batch_size, 512, 512, 3] (here [2, 512, 512, 3]) and range [-1, 1].
samples = module(dict(y=y, z=z, truncation=truncation))
print(samples)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for i in range(10):
        # z is re-sampled on every run, so each iteration gives a new cat/dog pair
        out_image = sess.run(samples)
        print(np.max(out_image), np.min(out_image))
        out_image = (out_image + 1) * 127.5
        out_image = np.clip(out_image, 0, 255).astype(np.uint8)
        out_image = np.concatenate(out_image, axis=1)  # place the 2 images side by side
        plt.imshow(out_image)
        plt.show()
```
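The two scripts sample noise in different ways. The official example uses `tf.random.truncated_normal`, which (per the TensorFlow documentation) drops and re-draws values more than two standard deviations from the mean. The interpolation script uses untruncated `np.random.normal`. To make the NumPy version match, you could use a rejection-resampling sketch like the one below. `truncated_normal_np` is a hypothetical helper. The 2.0 cutoff mirrors `tf.random.truncated_normal` and is not a value taken from the BigGAN module.

```python
import numpy as np


def truncated_normal_np(shape, cutoff=2.0, seed=None):
    """Standard normal samples, re-drawing any value with |x| > cutoff."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(shape)
    bad = np.abs(z) > cutoff
    while bad.any():
        # Re-sample only the out-of-range entries until all lie within [-cutoff, cutoff]
        z[bad] = rng.standard_normal(bad.sum())
        bad = np.abs(z) > cutoff
    return z


truncation = 0.5
start_z = truncation * truncated_normal_np([1, 128], seed=0)
end_z = truncation * truncated_normal_np([1, 128], seed=1)
print(start_z.shape, np.abs(start_z).max() <= truncation * 2.0)  # (1, 128) True
```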