qiuqiu Ultralight-SimplePose: https://github.com/dog-qiuqiu/Ultralight-SimplePose

💻 OS

Linux raspberrypi 5.10.60-v8+ #1448 SMP PREEMPT Sat Aug 21 10:48:18 BST 2021 aarch64 GNU/Linux

⚡️ Install protobuf

```bash
sudo apt-get install autoconf automake libtool
git clone https://github.com/protocolbuffers/protobuf.git
cd protobuf
./autogen.sh
./configure --prefix=/usr/local/protobuf
# run make install only if make check passes without errors
make -j4 && make check && sudo make install
protoc --version
```

😲 Q&A

- Running `./autogen.sh` must produce no warnings; otherwise the later build will fail as well.

- configure: WARNING: no configuration information is in third_party/googletest

```bash
git submodule update --init --recursive
```

- FAIL: protobuf-test (arm64)

The Pi runs out of memory. Enlarge the swap space:

```bash
# 1. stop the OS from using the current swap file
sudo dphys-swapfile swapoff
# 2. edit the config and change CONF_SWAPSIZE=100 to CONF_SWAPSIZE=1024
sudo nano /etc/dphys-swapfile
# 3. delete the old swap file and recreate it with the new size
sudo dphys-swapfile setup
# 4. start the OS swap system again
sudo dphys-swapfile swapon
# 5. reboot
sudo reboot
```
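Before rerunning `make check`, it can help to confirm how much RAM plus swap the Pi actually has. Below is a minimal C++ sketch using the Linux `sysinfo(2)` call; the helper names are hypothetical, and any threshold you pass to `enough_memory_for_build()` is your own assumption, not a documented protobuf requirement.

```cpp
#include <sys/sysinfo.h>
#include <cassert>

// Hypothetical helper: total RAM + swap in MiB as reported by sysinfo(2),
// or -1 if the call fails.
long long total_ram_plus_swap_mib() {
    struct sysinfo info {};
    if (sysinfo(&info) != 0) {
        return -1;
    }
    // totalram and totalswap are counted in units of mem_unit bytes.
    const unsigned long long unit = info.mem_unit;
    const unsigned long long bytes =
        (static_cast<unsigned long long>(info.totalram) + info.totalswap) * unit;
    return static_cast<long long>(bytes / (1024ULL * 1024ULL));
}

// Hypothetical helper: true when RAM + swap reaches min_mib.
// The threshold is chosen by the caller (an assumption, not a protobuf value).
bool enough_memory_for_build(long long min_mib) {
    assert(min_mib > 0);
    return total_ram_plus_swap_mib() >= min_mib;
}
```

For example, `enough_memory_for_build(2048)` returning false would suggest raising `CONF_SWAPSIZE` before building; 2048 MiB is only an illustrative value.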

🌟 Install MNN

```bash
git clone https://github.com/alibaba/MNN
cd MNN
./schema/generate.sh
mkdir build && cd build
cmake .. -DMNN_BUILD_CONVERTER=true -DMNN_BUILD_QUANTOOLS=on && make -j
mkdir install && make install DESTDIR=install
```

🌟 Install NCNN

```bash
# check for updates (64-bit OS is still under development!)
sudo apt-get update
sudo apt-get upgrade
# install dependencies
sudo apt-get install cmake wget
sudo apt-get install libprotobuf-dev protobuf-compiler
# download ncnn
git clone --depth=1 https://github.com/Tencent/ncnn.git
# install ncnn
cd ncnn
mkdir build
cd build
# build 64-bit ncnn
cmake -D NCNN_DISABLE_RTTI=OFF \
      -D CMAKE_TOOLCHAIN_FILE=../toolchains/aarch64-linux-gnu.toolchain.cmake ..
make -j4
make install
# copy output to dirs
sudo mkdir /usr/local/lib/ncnn
sudo cp -r install/include/ncnn /home/pi/J/lib/ncnn/
sudo cp -r install/lib/libncnn.a /home/pi/J/lib/ncnn/libncnn.a
# once you've placed the output in your /usr/local directory,
# you may delete the ncnn directory if you have no tools or examples compiled
cd ~
sudo rm -rf ncnn
sudo /sbin/ldconfig
```

🌕 Implementation

- NCNN

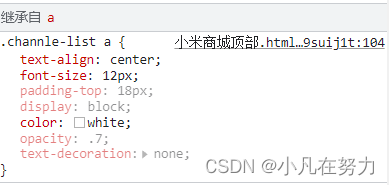

- CMakeLists.txt

```cmake
cmake_minimum_required( VERSION 3.1 )
project(SimplePose)

# cv
find_package( OpenCV REQUIRED )
include_directories(${OpenCV_INCLUDE_DIRS})

# ncnn
set(NCNN_DIR /usr/local/)
include_directories(${NCNN_DIR}/include/ncnn/)
link_directories(${NCNN_DIR}/lib/)

# mnn
#set(MNN_DIR /usr/local/)
#include_directories("${MNN_DIR}/include")
#link_directories("${MNN_DIR}/lib")

find_package(OpenMP)
ADD_EXECUTABLE(SimplePose ncnnpose.cpp)
TARGET_LINK_LIBRARIES(SimplePose ${OpenCV_LIBS} libncnn.a OpenMP::OpenMP_CXX)
```

- make

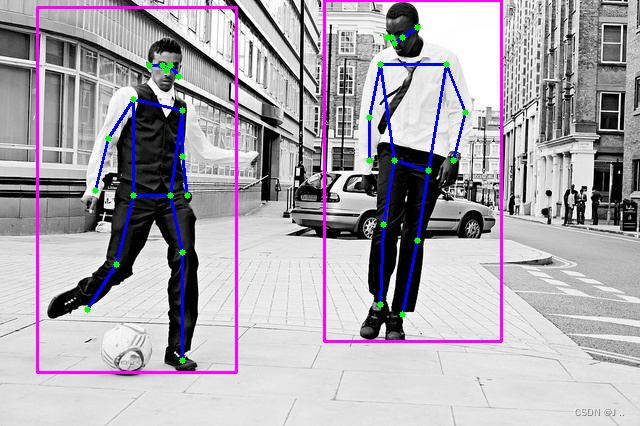

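```bash
git clone https://github.com/dog-qiuqiu/Ultralight-SimplePose.git
# run the following from the directory containing CMakeLists.txt and ncnnpose.cpp
mkdir build && cd build
cmake .. && make
```

- Test results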

people Time: 26.95 pose Time: 151.21
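Both numbers are wall-clock milliseconds from `ncnn::get_current_time()`. In the `test_img()` listed in the MNN part of this section, "people Time" spans image reading plus detector model loading, and "pose Time" spans the whole `demo()` call (detection, pose estimation and drawing). To time one stage on its own, such as only `ex.extract()` or only `runpose()`, a small `std::chrono` helper is a portable alternative. This is a sketch; `time_ms` and `average_time_ms` are hypothetical names, not part of ncnn or MNN.

```cpp
#include <cassert>
#include <chrono>
#include <utility>

// Hypothetical helper: runs fn() once and returns the elapsed wall-clock time
// in milliseconds (steady_clock, so it never goes backwards).
template <typename Fn>
double time_ms(Fn&& fn) {
    const auto start = std::chrono::steady_clock::now();
    std::forward<Fn>(fn)();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(end - start).count();
}

// Hypothetical helper: average over several runs, since a single measurement
// on a Raspberry Pi is noisy and the first run may include warm-up costs.
template <typename Fn>
double average_time_ms(Fn&& fn, int runs) {
    assert(runs > 0);
    double total = 0.0;
    for (int i = 0; i < runs; ++i) {
        total += time_ms(fn);
    }
    return total / runs;
}
```

For example, `average_time_ms([&] { runpose(roi, posenet, 192, 256, keypoints, x1, y1); }, 10)` would report the pose stage alone.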

- MNN

- Model conversion

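Convert the model to MNN:

```bash
./MNNConvert -f ONNX --modelFile deploy.onnx --MNNModel deploy.mnn --bizCode biz
```

```
Usage:
  MNNConvert [OPTION...]

  -h, --help                   Convert Other Model Format To MNN Model
  -v, --version                show the current converter version
  -f, --framework arg          type of model to convert, ex: [TF,CAFFE,ONNX,TFLITE,MNN]
      --modelFile arg          file name of the model to convert, ex: *.pb,*caffemodel
      --prototxt arg           caffe model structure description file, ex: *.prototxt
      --MNNModel arg           file name for saving the converted MNN model, ex: *.mnn
      --fp16                   save the float32 parameters of conv/matmul/LSTM as float16;
                               the model shrinks by half with essentially no accuracy loss
      --benchmarkModel         do not save the parameters of conv/matmul/BN and similar
                               layers; for benchmark testing only
      --bizCode arg            MNN model flag, ex: MNN
      --debug                  use debug mode to show more conversion information
      --forTraining            keep training-related operators such as BN/Dropout,
                               default: false
      --weightQuantBits arg    arg=2~8; quantizes only the float32 weights of
                               conv/matmul/LSTM to reduce model size. Weights are decoded
                               back to float32 after loading, so inference speed matches
                               the float32 model. At 8 bits accuracy is essentially
                               lossless and the model is 4x smaller.
                               default: 0, i.e. no weight quantization
      --compressionParamsFile arg
                               model compression info file generated by the MNN model
                               compression toolbox
      --saveStaticModel        fix the input shapes and save a static model,
                               default: false
      --inputConfigFile arg    config file needed to save a static model,
                               ex: ~/config.txt
```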
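The size claims in the help text (`--fp16` halves the model, `--weightQuantBits 8` makes it about 4x smaller) follow directly from bits per weight. A back-of-the-envelope C++ sketch; `weight_bytes` is a hypothetical helper, and real `.mnn` files also carry the graph description and quantization scale factors, so actual sizes will differ somewhat.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: weight storage estimate = parameter count x bits per
// weight, rounded up to whole bytes. Ignores graph data and any scale factors.
std::uint64_t weight_bytes(std::uint64_t param_count, unsigned bits) {
    assert(bits >= 2 && bits <= 32);
    return (param_count * bits + 7) / 8;
}
```

For 1,000,000 weights this gives 4,000,000 bytes at 32 bits, 2,000,000 at 16 bits and 1,000,000 at 8 bits, which is the halving and 4x reduction described above.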

- mnnpose.cpp (the runpose() function rewritten to use MNN)

```cpp
#include "benchmark.h"
#include "cpu.h"
#include "datareader.h"
#include "net.h"
#include "gpu.h"

#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>

#include <stdio.h>
#include <cstring>   // memcpy
#include <memory>    // std::shared_ptr, std::unique_ptr
#include <vector>
#include <algorithm>
#include <iostream>

#include "MNN/Tensor.hpp"
#include <MNN/Interpreter.hpp>

// MNN pose interpreter and session, created in test_img()
MNN::Session* session_ = nullptr;
std::shared_ptr<MNN::Interpreter> poseNet_ = nullptr;

struct KeyPoint
{
    cv::Point2f p;
    float prob;
};

static void draw_pose(const cv::Mat& image, const std::vector<KeyPoint>& keypoints)
{
    // draw bones
    static const int joint_pairs[16][2] = {
        {0, 1}, {1, 3}, {0, 2}, {2, 4}, {5, 6}, {5, 7}, {7, 9}, {6, 8},
        {8, 10}, {5, 11}, {6, 12}, {11, 12}, {11, 13}, {12, 14}, {13, 15}, {14, 16}
    };
    for (int i = 0; i < 16; i++)
    {
        const KeyPoint& p1 = keypoints[joint_pairs[i][0]];
        const KeyPoint& p2 = keypoints[joint_pairs[i][1]];
        if (p1.prob < 0.2f || p2.prob < 0.2f)
            continue;
        cv::line(image, p1.p, p2.p, cv::Scalar(255, 0, 0), 2);
    }
    // draw joints
    for (size_t i = 0; i < keypoints.size(); i++)
    {
        const KeyPoint& keypoint = keypoints[i];
        //fprintf(stderr, "%.2f %.2f = %.5f\n", keypoint.p.x, keypoint.p.y, keypoint.prob);
        if (keypoint.prob < 0.2f)
            continue;
        cv::circle(image, keypoint.p, 3, cv::Scalar(0, 255, 0), -1);
    }
}

// Pose estimation for one person ROI with MNN.
// posenet is unused; it is kept so the signature matches the ncnn version.
int runpose(cv::Mat& roi, ncnn::Net& posenet, int pose_size_width, int pose_size_height,
            std::vector<KeyPoint>& keypoints, float x1, float y1)
{
    int w = roi.cols;
    int h = roi.rows;

    // BGR -> RGB, resize to exactly the network input size, scale to [0, 1]
    cv::Mat img_color;
    cv::cvtColor(roi, img_color, cv::COLOR_BGR2RGB);
    cv::Mat small;
    cv::resize(img_color, small, cv::Size(pose_size_width, pose_size_height), 0, 0, cv::INTER_LINEAR);
    small.convertTo(small, CV_32FC3, 1. / 255);

    // Copy the HWC float image into an NHWC (TENSORFLOW layout) host tensor;
    // copyFromHostTensor converts it to the session's internal layout.
    auto input = poseNet_->getSessionInput(session_, NULL);
    std::vector<int> dim{1, pose_size_height, pose_size_width, 3};
    std::unique_ptr<MNN::Tensor> nhwc_Tensor(MNN::Tensor::create<float>(dim, NULL, MNN::Tensor::TENSORFLOW));
    auto nhwc_data = nhwc_Tensor->host<float>();
    auto nhwc_size = nhwc_Tensor->size();  // size in bytes
    ::memcpy(nhwc_data, small.data, nhwc_size);
    input->copyFromHostTensor(nhwc_Tensor.get());

    poseNet_->runSession(session_);

    // Copy the heatmaps back to host memory; indexing below assumes C x H x W (NCHW)
    auto output = poseNet_->getSessionOutput(session_, "hybridsequential0_conv7_fwd");
    MNN::Tensor output_host(output, output->getDimensionType());
    output->copyToHostTensor(&output_host);
    auto output_ptr = output_host.host<float>();

    // One keypoint per channel: arg-max of the heatmap, mapped back to image coordinates
    keypoints.clear();
    for (int p = 0; p < output_host.channel(); p++)
    {
        float max_prob = 0.f;
        int max_x = 0;
        int max_y = 0;
        for (int y = 0; y < output_host.height(); y++)
        {
            for (int x = 0; x < output_host.width(); x++)
            {
                float prob = output_ptr[p * output_host.width() * output_host.height() + y * output_host.width() + x];
                if (prob > max_prob)
                {
                    max_prob = prob;
                    max_x = x;
                    max_y = y;
                }
            }
        }
        KeyPoint keypoint;
        keypoint.p = cv::Point2f(max_x * w / (float)output_host.width() + x1,
                                 max_y * h / (float)output_host.height() + y1);
        keypoint.prob = max_prob;
        keypoints.push_back(keypoint);
    }
    return 0;
}

int demo(cv::Mat& image, ncnn::Net& detectornet, int detector_size_width, int detector_size_height,
         ncnn::Net& posenet, int pose_size_width, int pose_size_height)
{
    cv::Mat bgr = image.clone();
    int img_w = bgr.cols;
    int img_h = bgr.rows;
    ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB,
                                                 bgr.cols, bgr.rows, detector_size_width, detector_size_height);
    // data preprocessing: scale pixels to [0, 1]
    const float mean_vals[3] = {0.f, 0.f, 0.f};
    const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};
    in.substract_mean_normalize(mean_vals, norm_vals);

    ncnn::Extractor ex = detectornet.create_extractor();
    ex.set_num_threads(4);
    ex.input("data", in);
    ncnn::Mat out;
    ex.extract("output", out);

    for (int i = 0; i < out.h; i++)
    {
        printf("==================================\n");
        float x1, y1, x2, y2, score, label;
        float pw, ph, cx, cy;
        const float* values = out.row(i);

        x1 = values[2] * img_w;
        y1 = values[3] * img_h;
        x2 = values[4] * img_w;
        y2 = values[5] * img_h;

        // enlarge the box around its center: 1.4x width, 1.2x height
        pw = x2 - x1;
        ph = y2 - y1;
        cx = x1 + 0.5 * pw;
        cy = y1 + 0.5 * ph;
        x1 = cx - 0.7 * pw;
        y1 = cy - 0.6 * ph;
        x2 = cx + 0.7 * pw;
        y2 = cy + 0.6 * ph;

        score = values[1];
        label = values[0];

        // clamp coordinates to the image bounds
        if (x1 < 0) x1 = 0;
        if (y1 < 0) y1 = 0;
        if (x2 < 0) x2 = 0;
        if (y2 < 0) y2 = 0;
        if (x1 > img_w) x1 = img_w;
        if (y1 > img_h) y1 = img_h;
        if (x2 > img_w) x2 = img_w;
        if (y2 > img_h) y2 = img_h;

        cv::Mat roi;
        roi = bgr(cv::Rect(x1, y1, x2 - x1, y2 - y1)).clone();
        std::vector<KeyPoint> keypoints;
        runpose(roi, posenet, pose_size_width, pose_size_height, keypoints, x1, y1);
        draw_pose(image, keypoints);
        cv::rectangle(image, cv::Point(x1, y1), cv::Point(x2, y2), cv::Scalar(255, 0, 255), 2, 8, 0);
    }
    return 0;
}

// image test
int test_img()
{
    double start = ncnn::get_current_time();
    cv::Mat img;
    img = cv::imread("../test.jpg");

    // define the person detector (ncnn)
    ncnn::Net detectornet;
    detectornet.load_param("../ncnnmodel/person_detector.param");
    detectornet.load_model("../ncnnmodel/person_detector.bin");
    int detector_size_width = 320;
    int detector_size_height = 320;
    double time1 = ncnn::get_current_time() - start;
    printf("people Time:%7.2f \n", time1);

    // define the human pose keypoint predictor (MNN); posenet is an unused ncnn placeholder
    ncnn::Net posenet;
    poseNet_ = std::shared_ptr<MNN::Interpreter>(MNN::Interpreter::createFromFile("../ncnnmodel/pose.mnn"));
    MNN::ScheduleConfig config;
    config.numThread = 4;
    config.type = MNN_FORWARD_CPU;
    MNN::BackendConfig backendConfig;
    backendConfig.precision = MNN::BackendConfig::Precision_Low;
    config.backendConfig = &backendConfig;
    session_ = poseNet_->createSession(config);
    int pose_size_width = 192;
    int pose_size_height = 256;

    double s = ncnn::get_current_time();
    demo(img, detectornet, detector_size_width, detector_size_height, posenet, pose_size_width, pose_size_height);
    double t = ncnn::get_current_time() - s;
    printf("====> pose Time:%7.2f \n", t);
    cv::imwrite("output.jpg", img);
    //cv::waitKey(0);
    return 0;
}

int main()
{
    test_img();
    return 0;
}
```
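The keypoint extraction at the end of `runpose()` is plain arg-max heatmap decoding: for each output channel (`draw_pose()` indexes keypoints 0 to 16, so 17 channels are expected), take the highest cell and scale it back into the ROI, then offset by the ROI's top-left corner. The sketch below isolates that step without OpenCV or MNN so it can be checked on its own; `Keypoint2D` and `decode_heatmaps` are hypothetical names, and the NCHW layout is the same assumption the original loop makes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical plain-data keypoint (the listing above uses cv::Point2f).
struct Keypoint2D {
    float x;
    float y;
    float prob;
};

// Arg-max decoding as in runpose(). `heatmaps` is assumed to be one N=1,
// C x H x W (NCHW) output: channel c at (x, y) is heatmaps[c*H*W + y*W + x].
// Peaks are mapped from heatmap cells to image pixels using the ROI size
// (roi_w, roi_h) and the ROI's top-left corner (roi_x, roi_y).
std::vector<Keypoint2D> decode_heatmaps(const std::vector<float>& heatmaps,
                                        int channels, int height, int width,
                                        float roi_w, float roi_h,
                                        float roi_x, float roi_y) {
    assert(channels > 0 && height > 0 && width > 0);
    const std::size_t plane_size = static_cast<std::size_t>(height) * width;
    assert(heatmaps.size() == plane_size * channels);

    std::vector<Keypoint2D> keypoints;
    keypoints.reserve(channels);
    for (int c = 0; c < channels; ++c) {
        const float* plane = heatmaps.data() + plane_size * c;
        float max_prob = 0.f;  // same starting value as runpose()
        int max_x = 0;
        int max_y = 0;
        for (int y = 0; y < height; ++y) {
            for (int x = 0; x < width; ++x) {
                const float prob = plane[y * width + x];
                if (prob > max_prob) {
                    max_prob = prob;
                    max_x = x;
                    max_y = y;
                }
            }
        }
        keypoints.push_back({max_x * roi_w / width + roi_x,
                             max_y * roi_h / height + roi_y,
                             max_prob});
    }
    return keypoints;
}
```

In `runpose()` the arguments would come from `output_host` (`channel()`, `height()`, `width()`), `roi.cols`, `roi.rows`, `x1` and `y1`. Because `max_prob` starts at 0, a channel with no positive response yields the ROI corner with probability 0, which `draw_pose()` then skips through its 0.2 threshold.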

- CMakeLists.txt

```cmake
cmake_minimum_required( VERSION 3.1 )
project(SimplePose)

# cv
find_package( OpenCV REQUIRED )
include_directories(${OpenCV_INCLUDE_DIRS})

# ncnn
set(NCNN_DIR /usr/local/)
include_directories(${NCNN_DIR}/include/ncnn/)
link_directories(${NCNN_DIR}/lib/)

# mnn
set(MNN_DIR /usr/local/)
include_directories("${MNN_DIR}/include")
link_directories("${MNN_DIR}/lib")

find_package(OpenMP)

# source file with the MNN-based runpose()
ADD_EXECUTABLE(SimplePose mnnpose.cpp)
TARGET_LINK_LIBRARIES(SimplePose ${OpenCV_LIBS} MNN libncnn.a OpenMP::OpenMP_CXX)
```

- Test results

people Time: 26.95 pose Time: 150.09
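Besides the pose network, the other piece of `demo()` worth checking in isolation is how each detector box is prepared before cropping: it is enlarged around its center (half-extents of 0.7 × width and 0.6 × height, i.e. 1.4x wider and 1.2x taller) and then clamped to the image. A sketch of just that step; `Box`, `clamp_to` and `expand_and_clamp` are hypothetical names, not from the repository.

```cpp
#include <algorithm>
#include <cassert>

struct Box {
    float x1, y1, x2, y2;
};

// Clamps v into [lo, hi] (written out so it also builds before C++17's std::clamp).
static float clamp_to(float v, float lo, float hi) {
    return std::min(std::max(v, lo), hi);
}

// Enlarges a detector box around its center by the same 0.7 / 0.6 factors as
// demo() and clamps it to [0, img_w] x [0, img_h] so the ROI crop stays in bounds.
Box expand_and_clamp(Box b, int img_w, int img_h) {
    assert(img_w > 0 && img_h > 0);
    const float pw = b.x2 - b.x1;
    const float ph = b.y2 - b.y1;
    const float cx = b.x1 + 0.5f * pw;
    const float cy = b.y1 + 0.5f * ph;
    const float w = static_cast<float>(img_w);
    const float h = static_cast<float>(img_h);
    Box out;
    out.x1 = clamp_to(cx - 0.7f * pw, 0.f, w);
    out.y1 = clamp_to(cy - 0.6f * ph, 0.f, h);
    out.x2 = clamp_to(cx + 0.7f * pw, 0.f, w);
    out.y2 = clamp_to(cy + 0.6f * ph, 0.f, h);
    return out;
}
```

Like the original loop, this does not guard against a box that clamps to zero width or height, which would hand `cv::Rect` an empty ROI.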


🔚 References

- https://github.com/dog-qiuqiu/Ultralight-SimplePose

- https://blog.csdn.net/weixin_39266208/article/details/122131303

- https://blog.csdn.net/sxf1061700625/article/details/121630741