转载来自:https://blog.csdn.net/zlfing/article/details/78070951

1.常用指令:打印文件块的位置信息

hdfs fsck /user/hadoop/wkz -files -blocks -locations

生产实例:hdfs fsck *文件路径* -list-corruptfileblocks

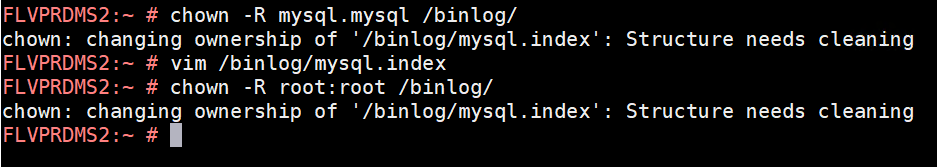

线上环境降副本后,出现报错:

Caused by: org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-346529924-10.162.0.3-1457576908834:blk_4999059987_3926823388

file=/user/hive/warehouse/....直接检查这个副本发现是healthy,但是到块所在的机器ip去查看,发现该机器有块硬盘坏了.

最终确定是改硬盘坏引起的问题,具体为啥知道块在这个硬盘上,是因为hdfs dfs -get这个文件报错

也可以在50070页面具体找到那个主机的那块盘坏了,例如:

其他指令:

在HDFS中,提供了fsck命令,用于检查HDFS上文件和目录的健康状态、获取文件的block信息和位置信息等。

fsck命令必须由HDFS超级用户来执行,普通用户无权限。

- [hadoop@dev ~]$ hdfs fsck

- Usage: DFSck [-list-corruptfileblocks | [-move | -delete | -openforwrite] [-files [-blocks [-locations | -racks]]]]

- start checking from this path

- -move move corrupted files to /lost+found

- -delete delete corrupted files

- -files print out files being checked

- -openforwrite print out files opened for write

- -includeSnapshots include snapshot data if the given path indicates a snapshottable directory or there are snapshottable directories under it

- -list-corruptfileblocks print out list of missing blocks and files they belong to

- -blocks print out block report

- -locations print out locations for every block

- -racks print out network topology for data-node locations

下面介绍每一个选项的含义及用法。

查看文件中损坏的块(-list-corruptfileblocks)

- [hadoop@dev ~]$ hdfs fsck /hivedata/warehouse/liuxiaowen.db/lxw_product_names/ -list-corruptfileblocks

- The filesystem under path '/hivedata/warehouse/liuxiaowen.db/lxw_product_names/' has 0 CORRUPT files

将损坏的文件移动至/lost+found目录(-move)

- [hadoop@dev ~]$ hdfs fsck /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00168 -move

- FSCK started by hadoop (auth:SIMPLE) from /172.16.212.17 for path /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00168 at Thu Aug 13 09:36:35 CST 2015

- .Status: HEALTHY

- Total size: 13497058 B

- Total dirs: 0

- Total files: 1

- Total symlinks: 0

- Total blocks (validated): 1 (avg. block size 13497058 B)

- Minimally replicated blocks: 1 (100.0 %)

- Over-replicated blocks: 0 (0.0 %)

- Under-replicated blocks: 0 (0.0 %)

- Mis-replicated blocks: 0 (0.0 %)

- Default replication factor: 2

- Average block replication: 2.0

- Corrupt blocks: 0

- Missing replicas: 0 (0.0 %)

- Number of data-nodes: 15

- Number of racks: 1

- FSCK ended at Thu Aug 13 09:36:35 CST 2015 in 1 milliseconds

- The filesystem under path '/hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00168' is HEALTHY

删除损坏的文件(-delete)

- [hadoop@dev ~]$ hdfs fsck /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00168 -delete

- FSCK started by hadoop (auth:SIMPLE) from /172.16.212.17 for path /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00168 at Thu Aug 13 09:37:58 CST 2015

- .Status: HEALTHY

- Total size: 13497058 B

- Total dirs: 0

- Total files: 1

- Total symlinks: 0

- Total blocks (validated): 1 (avg. block size 13497058 B)

- Minimally replicated blocks: 1 (100.0 %)

- Over-replicated blocks: 0 (0.0 %)

- Under-replicated blocks: 0 (0.0 %)

- Mis-replicated blocks: 0 (0.0 %)

- Default replication factor: 2

- Average block replication: 2.0

- Corrupt blocks: 0

- Missing replicas: 0 (0.0 %)

- Number of data-nodes: 15

- Number of racks: 1

- FSCK ended at Thu Aug 13 09:37:58 CST 2015 in 1 milliseconds

- The filesystem under path '/hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00168' is HEALTHY

检查并列出所有文件状态(-files)

- [hadoop@dev ~]$ hdfs fsck /hivedata/warehouse/liuxiaowen.db/lxw_product_names/ -files

- FSCK started by hadoop (auth:SIMPLE) from /172.16.212.17 for path /hivedata/warehouse/liuxiaowen.db/lxw_product_names/ at Thu Aug 13 09:39:38 CST 2015

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/ dir

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/_SUCCESS 0 bytes, 0 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00000 13583807 bytes, 1 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00001 13577427 bytes, 1 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00002 13588601 bytes, 1 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00003 13479213 bytes, 1 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00004 13497012 bytes, 1 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00005 13557451 bytes, 1 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00006 13580267 bytes, 1 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00007 13486035 bytes, 1 block(s): OK

- /hivedata/warehouse/liuxiaowen.db/lxw_product_names/part-00008 13481498 bytes, 1 block(s): OK

- ...

检查并打印正在被打开执行写操作的文件(-openforwrite)

- [hadoop@dev ~]$ hdfs fsck /hivedata/warehouse/liuxiaowen.db/lxw_product_names/ -openforwrite

- FSCK started by hadoop (auth:SIMPLE) from /172.16.212.17 for path /hivedata/warehouse/liuxiaowen.db/lxw_product_names/ at Thu Aug 13 09:41:28 CST 2015

- ....................................................................................................

- ....................................................................................................

- .Status: HEALTHY

- Total size: 2704782548 B

- Total dirs: 1

- Total files: 201

- Total symlinks: 0

- Total blocks (validated): 200 (avg. block size 13523912 B)

- Minimally replicated blocks: 200 (100.0 %)

- Over-replicated blocks: 0 (0.0 %)

- Under-replicated blocks: 0 (0.0 %)

- Mis-replicated blocks: 0 (0.0 %)

- Default replication factor: 2

- Average block replication: 2.0

- Corrupt blocks: 0

- Missing replicas: 0 (0.0 %)

- Number of data-nodes: 15

- Number of racks: 1

- FSCK ended at Thu Aug 13 09:41:28 CST 2015 in 10 milliseconds

- The filesystem under path '/hivedata/warehouse/liuxiaowen.db/lxw_product_names/' is HEALTHY

打印文件的Block报告(-blocks)

需要和-files一起使用。

- [hadoop@dev ~]$ hdfs fsck /logs/site/2015-08-08/lxw1234.log -files -blocks

- FSCK started by hadoop (auth:SIMPLE) from /172.16.212.17 for path /logs/site/2015-08-08/lxw1234.log at Thu Aug 13 09:45:59 CST 2015

- /logs/site/2015-08-08/lxw1234.log 7408754725 bytes, 56 block(s): OK

- 0. BP-1034052771-172.16.212.130-1405595752491:blk_1075892982_2152381 len=134217728 repl=2

- 1. BP-1034052771-172.16.212.130-1405595752491:blk_1075892983_2152382 len=134217728 repl=2

- 2. BP-1034052771-172.16.212.130-1405595752491:blk_1075892984_2152383 len=134217728 repl=2

- 3. BP-1034052771-172.16.212.130-1405595752491:blk_1075892985_2152384 len=134217728 repl=2

- 4. BP-1034052771-172.16.212.130-1405595752491:blk_1075892997_2152396 len=134217728 repl=2

- 5. BP-1034052771-172.16.212.130-1405595752491:blk_1075892998_2152397 len=134217728 repl=2

- 6. BP-1034052771-172.16.212.130-1405595752491:blk_1075892999_2152398 len=134217728 repl=2

- 7. BP-1034052771-172.16.212.130-1405595752491:blk_1075893000_2152399 len=134217728 repl=2

- 8. BP-1034052771-172.16.212.130-1405595752491:blk_1075893001_2152400 len=134217728 repl=2

- 9. BP-1034052771-172.16.212.130-1405595752491:blk_1075893002_2152401 len=134217728 repl=2

- 10. BP-1034052771-172.16.212.130-1405595752491:blk_1075893003_2152402 len=134217728 repl=2

- 11. BP-1034052771-172.16.212.130-1405595752491:blk_1075893004_2152403 len=134217728 repl=2

- 12. BP-1034052771-172.16.212.130-1405595752491:blk_1075893005_2152404 len=134217728 repl=2

- 13. BP-1034052771-172.16.212.130-1405595752491:blk_1075893006_2152405 len=134217728 repl=2

- 14. BP-1034052771-172.16.212.130-1405595752491:blk_1075893007_2152406 len=134217728 repl=2

- ...

其中,/logs/site/2015-08-08/lxw1234.log 7408754725 bytes, 56 block(s): 表示文件的总大小和block数;

0. BP-1034052771-172.16.212.130-1405595752491:blk_1075892982_2152381 len=134217728 repl=2

1. BP-1034052771-172.16.212.130-1405595752491:blk_1075892983_2152382 len=134217728 repl=2

2. BP-1034052771-172.16.212.130-1405595752491:blk_1075892984_2152383 len=134217728 repl=2

前面的0. 1. 2.代表该文件的block索引,56的文件块,就从0-55;

BP-1034052771-172.16.212.130-1405595752491:blk_1075892982_2152381表示block id;

len=134217728 表示该文件块大小;

repl=2 表示该文件块副本数;

打印文件块的位置信息(-locations)

需要和-files -blocks一起使用。

- [hadoop@dev ~]$ hdfs fsck /logs/site/2015-08-08/lxw1234.log -files -blocks -locations

- FSCK started by hadoop (auth:SIMPLE) from /172.16.212.17 for path /logs/site/2015-08-08/lxw1234.log at Thu Aug 13 09:45:59 CST 2015

- /logs/site/2015-08-08/lxw1234.log 7408754725 bytes, 56 block(s): OK

- 0. BP-1034052771-172.16.212.130-1405595752491:blk_1075892982_2152381 len=134217728 repl=2 [172.16.212.139:50010, 172.16.212.135:50010]

- 1. BP-1034052771-172.16.212.130-1405595752491:blk_1075892983_2152382 len=134217728 repl=2 [172.16.212.140:50010, 172.16.212.133:50010]

- 2. BP-1034052771-172.16.212.130-1405595752491:blk_1075892984_2152383 len=134217728 repl=2 [172.16.212.136:50010, 172.16.212.141:50010]

- 3. BP-1034052771-172.16.212.130-1405595752491:blk_1075892985_2152384 len=134217728 repl=2 [172.16.212.133:50010, 172.16.212.135:50010]

- 4. BP-1034052771-172.16.212.130-1405595752491:blk_1075892997_2152396 len=134217728 repl=2 [172.16.212.142:50010, 172.16.212.139:50010]

- 5. BP-1034052771-172.16.212.130-1405595752491:blk_1075892998_2152397 len=134217728 repl=2 [172.16.212.133:50010, 172.16.212.139:50010]

- 6. BP-1034052771-172.16.212.130-1405595752491:blk_1075892999_2152398 len=134217728 repl=2 [172.16.212.141:50010, 172.16.212.135:50010]

- 7. BP-1034052771-172.16.212.130-1405595752491:blk_1075893000_2152399 len=134217728 repl=2 [172.16.212.144:50010, 172.16.212.142:50010]

- 8. BP-1034052771-172.16.212.130-1405595752491:blk_1075893001_2152400 len=134217728 repl=2 [172.16.212.133:50010, 172.16.212.138:50010]

- 9. BP-1034052771-172.16.212.130-1405595752491:blk_1075893002_2152401 len=134217728 repl=2 [172.16.212.140:50010, 172.16.212.134:50010]

- ...

和打印出的文件块信息相比,多了一个文件块的位置信息:[172.16.212.139:50010, 172.16.212.135:50010]

打印文件块位置所在的机架信息(-racks)

- [hadoop@dev ~]$ hdfs fsck /logs/site/2015-08-08/lxw1234.log -files -blocks -locations -racks

- FSCK started by hadoop (auth:SIMPLE) from /172.16.212.17 for path /logs/site/2015-08-08/lxw1234.log at Thu Aug 13 09:45:59 CST 2015

- /logs/site/2015-08-08/lxw1234.log 7408754725 bytes, 56 block(s): OK

- 0. BP-1034052771-172.16.212.130-1405595752491:blk_1075892982_2152381 len=134217728 repl=2 [/default-rack/172.16.212.139:50010, /default-rack/172.16.212.135:50010]

- 1. BP-1034052771-172.16.212.130-1405595752491:blk_1075892983_2152382 len=134217728 repl=2 [/default-rack/172.16.212.140:50010, /default-rack/172.16.212.133:50010]

- 2. BP-1034052771-172.16.212.130-1405595752491:blk_1075892984_2152383 len=134217728 repl=2 [/default-rack/172.16.212.136:50010, /default-rack/172.16.212.141:50010]

- 3. BP-1034052771-172.16.212.130-1405595752491:blk_1075892985_2152384 len=134217728 repl=2 [/default-rack/172.16.212.133:50010, /default-rack/172.16.212.135:50010]

- 4. BP-1034052771-172.16.212.130-1405595752491:blk_1075892997_2152396 len=134217728 repl=2 [/default-rack/172.16.212.142:50010, /default-rack/172.16.212.139:50010]

- 5. BP-1034052771-172.16.212.130-1405595752491:blk_1075892998_2152397 len=134217728 repl=2 [/default-rack/172.16.212.133:50010, /default-rack/172.16.212.139:50010]

- ...

和前面打印出的信息相比,多了机架信息:[/default-rack/172.16.212.139:50010, /default-rack/172.16.212.135:50010]