Table of Contents

- 1. Introduction
- 2. LRU Organization
  - 2.1 LRU Lists
  - 2.2 LRU Cache
  - 2.3 LRU Move Operations
    - 2.3.1 Adding a page to the LRU
    - 2.3.2 Other LRU move operations
- 3. LRU Reclaim
  - 3.1 LRU Update
  - 3.2 Swappiness
  - 3.3 Reverse Mapping
  - 3.4 Code Implementation
    - 3.4.1 struct scan_control
    - 3.4.2 shrink_node()
    - 3.4.3 shrink_list()
    - 3.4.4 shrink_active_list()
    - 3.4.5 shrink_inactive_list()
    - 3.4.6 try_to_unmap()
    - 3.4.7 rmap_walk()
- 4. Other Reclaim Methods
  - 4.1 Shrinker
    - 4.1.1 register_shrinker()
    - 4.1.2 do_shrink_slab()
  - 4.2 Memory Compaction (Compact)
  - 4.3 Memory Merging (KSM)
  - 4.4 OOM Killer
- References
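1. Introduction

The buddy allocator's allocation and free algorithms are fairly simple and clear. On allocation, it first looks in the free list of the requested order; if that list is empty, it moves on to the free lists of larger orders. On free, the block is first returned to the free list of its order, then merged with its buddy whenever possible, so that free memory coalesces into the largest blocks it can.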
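The merge step relies on a simple address trick: a block and its buddy of the same order differ only in bit `order` of the page frame number. The following is a minimal sketch of that arithmetic, not the kernel's code; the helper names `find_buddy_pfn` and `merged_pfn` are hypothetical and chosen only for illustration.

```c
/* PFN of the buddy of the block that starts at pfn and spans 2^order pages. */
unsigned long find_buddy_pfn(unsigned long pfn, unsigned int order)
{
    /* Flipping bit 'order' selects the other half of the parent block. */
    return pfn ^ (1UL << order);
}

/* Start PFN of the order+1 block obtained by merging a block with its buddy. */
unsigned long merged_pfn(unsigned long pfn, unsigned int order)
{
    /* Clearing bit 'order' yields the lower of the two start addresses. */
    return pfn & ~(1UL << order);
}
```

For example, the order-2 block at PFN 12 has its buddy at PFN 8, and merging the two produces an order-3 block that starts at PFN 8.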

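Once page faults (PageFault) and reclaim (Reclaim) enter the picture, however, memory management becomes far more complex. Linux goes to great lengths to serve more processes and services with the same amount of memory:

- PageFault: for a newly created process, kernel memory has to be allocated immediately, but user memory strictly follows a lazy (deferred) allocation policy. Private data is handled with COW (Copy On Write): a new physical page is allocated through a PageFault only when the data is modified. A new file mapping likewise only allocates a VMA; physical pages are allocated through a PageFault only when the mapping is actually accessed.
- Reclaim: when memory runs short, Linux tries to reclaim some of it. File-mapped memory (FileMap) has a backing copy in the file, so the page can be dropped and loaded from the file again through a PageFault on the next access. Memory without a file mapping (AnonMap) can be swapped out to the swap area and loaded from swap again through a PageFault on the next access.

This article analyzes the page fault (PageFault) and reclaim (Reclaim) processes described above in detail.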

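2. LRU Organization

User-space memory (FileMap + AnonMap) is the main source of reclaimable memory. Reclaim can cause thrashing: a page that was just reclaimed is accessed again immediately and has to be reallocated. To keep this to a minimum, Linux uses LRU (Least Recently Used) lists so that the pages that have gone longest without being accessed are reclaimed first.

2.1 LRU Lists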

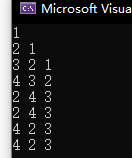

There are five LRU lists:

enum lru_list {
    LRU_INACTIVE_ANON = LRU_BASE,                        // inactive anonymous
    LRU_ACTIVE_ANON = LRU_BASE + LRU_ACTIVE,             // active anonymous
    LRU_INACTIVE_FILE = LRU_BASE + LRU_FILE,             // inactive file
    LRU_ACTIVE_FILE = LRU_BASE + LRU_FILE + LRU_ACTIVE,  // active file
    LRU_UNEVICTABLE,                                     // unevictable memory
    NR_LRU_LISTS
};

在 4.8 版本以前是每个 zone 拥有独立的 lru 链表,在 4.8 版本以后改成了每个 node 一个 lru 链表:

typedef struct pglist_data {struct lruvec lruvec; ...

}struct lruvec {struct list_head lists[NR_LRU_LISTS]; // lru 链表struct zone_reclaim_stat reclaim_stat;/* Evictions & activations on the inactive file list /atomic_long_t inactive_age;/ Refaults at the time of last reclaim cycle */unsigned long refaults;

#ifdef CONFIG_MEMCGstruct pglist_data *pgdat;

#endif

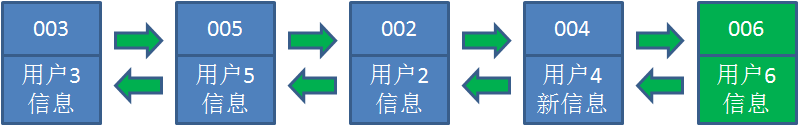

};2.2 LRU Cache

To reduce lock contention when multiple CPUs operate on the LRU lists, the kernel uses per-CPU LRU caches. Each cache holds up to 14 pages, and the following caches are defined:

static DEFINE_PER_CPU(struct pagevec, lru_add_pvec); // adds new pages that are not yet on any LRU list to the LRU lists
static DEFINE_PER_CPU(struct pagevec, lru_rotate_pvecs); // moves pages on an inactive LRU list to the tail of that list
static DEFINE_PER_CPU(struct pagevec, lru_deactivate_file_pvecs); // moves pages from an active LRU list to the inactive LRU list
static DEFINE_PER_CPU(struct pagevec, lru_lazyfree_pvecs);
#ifdef CONFIG_SMP
static DEFINE_PER_CPU(struct pagevec, activate_page_pvecs); // moves pages from an inactive LRU list to the active LRU list
#endif

/* 14 pointers + two long's align the pagevec structure to a power of two */
#define PAGEVEC_SIZE 14

struct pagevec {
    unsigned long nr;
    bool percpu_pvec_drained;
    struct page *pages[PAGEVEC_SIZE];
};

2.3 LRU Move Operations

A page can be added to an LRU list and, depending on conditions, moved between the active and inactive lists.
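All of these moves go through the per-CPU caches first, so the LRU lock is taken once per batch instead of once per page. The sketch below models that batching in plain user-space C. It is an illustration under stated assumptions, not kernel code: `struct page` is reduced to a single hypothetical flag, `lru_lock_acquisitions` is a made-up counter that stands in for taking the LRU lock, and `lru_cache_add_sketch()` only mirrors the "queue, then drain when full" shape of the real add path.

```c
#define PAGEVEC_SIZE 14

struct page {
    int on_lru;                      /* hypothetical stand-in for PG_lru */
};

struct pagevec {
    unsigned long nr;
    struct page *pages[PAGEVEC_SIZE];
};

/* Counts how often the (simulated) LRU lock would have been taken. */
unsigned long lru_lock_acquisitions;

/* Queue one page; returns the number of free slots left (0 means full). */
unsigned int pagevec_add(struct pagevec *pvec, struct page *page)
{
    pvec->pages[pvec->nr++] = page;
    return (unsigned int)(PAGEVEC_SIZE - pvec->nr);
}

/* Move the whole batch to the LRU under a single simulated lock round trip. */
void pagevec_drain(struct pagevec *pvec)
{
    unsigned long i;

    lru_lock_acquisitions++;
    for (i = 0; i < pvec->nr; i++)
        pvec->pages[i]->on_lru = 1;
    pvec->nr = 0;
}

/* Queue the page and drain only when the cache has just become full. */
void lru_cache_add_sketch(struct pagevec *pvec, struct page *page)
{
    if (!pagevec_add(pvec, page))
        pagevec_drain(pvec);
}
```

With this model, adding 28 pages costs two lock acquisitions rather than 28. The price is that a page sitting in a per-CPU cache is not yet visible on the LRU list until the cache is drained.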

2.3.1 Adding a page to the LRU

The most important LRU operation is adding a newly allocated page to the LRU. This work is normally done by do_page_fault().

The typical scenarios in which do_page_fault() allocates a page and adds the new page to the LRU are listed below (↑ means "same as the cell above"):

| Scenario | Entry function | LRU call chain | Condition | LRU type | LRU list | page flags |
|---|---|---|---|---|---|---|
| First fault on anonymous memory | do_anonymous_page() | lru_cache_add_active_or_unevictable() → lru_cache_add() → __lru_cache_add() → __pagevec_lru_add() → pagevec_lru_move_fn() → __pagevec_lru_add_fn() | !(vma->vm_flags & VM_LOCKED) | anonymous active LRU | pglist_data->lruvec.lists[LRU_ACTIVE_ANON] | PG_swapbacked + PG_active + PG_lru |
| ↑ | ↑ | lru_cache_add_active_or_unevictable() → add_page_to_unevictable_list() | (vma->vm_flags & VM_LOCKED) | unevictable LRU | pglist_data->lruvec.lists[LRU_UNEVICTABLE] | PG_swapbacked + PG_unevictable + PG_lru |
| Fault on anonymous memory that was swapped out | do_swap_page() | lru_cache_add_active_or_unevictable() | ↑ | ↑ | ↑ | ↑ |
| Write fault on a private file mapping | do_cow_fault() | finish_fault() → alloc_set_pte() → lru_cache_add_active_or_unevictable() | !(vma->vm_flags & VM_LOCKED) | anonymous active LRU | pglist_data->lruvec.lists[LRU_ACTIVE_ANON] | PG_swapbacked + PG_active + PG_lru |
| ↑ | ↑ | finish_fault() → alloc_set_pte() → lru_cache_add_active_or_unevictable() | (vma->vm_flags & VM_LOCKED) | unevictable LRU | pglist_data->lruvec.lists[LRU_UNEVICTABLE] | PG_swapbacked + PG_unevictable + PG_lru |
| Write fault on private memory | do_wp_page() | wp_page_copy() → lru_cache_add_active_or_unevictable() | ↑ | ↑ | ↑ | ↑ |
| Read fault on file memory | do_read_fault() | pagecache_get_page() → add_to_page_cache_lru() → lru_cache_add() | - | file LRU | pglist_data->lruvec.lists[LRU_INACTIVE_FILE / LRU_ACTIVE_FILE] | PG_active + PG_lru |
| Write fault on a shared file mapping | do_shared_fault() | - | ↑ | ↑ | ↑ | ↑ |

The core code is analyzed below:

static void __lru_cache_add(struct page *page)
{
    struct pagevec *pvec = &get_cpu_var(lru_add_pvec);

    get_page(page);
    /* (1) First add the page to the LRU cache */
    if (!pagevec_add(pvec, page) || PageCompound(page))
        /* (2) If the LRU cache is full, move its pages onto their respective LRU lists */
        __pagevec_lru_add(pvec);
    put_cpu_var(lru_add_pvec);
}

↓

void __pagevec_lru_add(struct pagevec *pvec)
{
    /* (2.1) Walk the pages in the LRU cache and add each one to the LRU list selected by its flags */
    pagevec_lru_move_fn(pvec, __pagevec_lru_add_fn, NULL);
}

↓

static void __pagevec_lru_add_fn(struct page *page, struct lruvec *lruvec,
                 void *arg)
{
    int file = page_is_file_cache(page);
    int active = PageActive(page);
    /* (2.1.1) Use the page flags to work out which LRU list the page belongs on */
    enum lru_list lru = page_lru(page);

    VM_BUG_ON_PAGE(PageLRU(page), page);

    /* (2.1.2) A page that joins an LRU list gets the PG_lru flag */
    SetPageLRU(page);
    /* (2.1.3) Add the page to the LRU list */
    add_page_to_lru_list(page, lruvec, lru);
    update_page_reclaim_stat(lruvec, file, active);
    trace_mm_lru_insertion(page, lru);
}

↓

static __always_inline enum lru_list page_lru(struct page *page)
{
    enum lru_list lru;

    /* (2.1.1.1) PG_unevictable is set: lru = LRU_UNEVICTABLE */
    if (PageUnevictable(page))
        lru = LRU_UNEVICTABLE;
    /*
     * (2.1.1.2) Otherwise the flags select the list:
     *   PG_swapbacked              : lru = LRU_INACTIVE_ANON
     *   PG_swapbacked + PG_active  : lru = LRU_ACTIVE_ANON
     *   neither flag               : lru = LRU_INACTIVE_FILE
     *   PG_active                  : lru = LRU_ACTIVE_FILE
     */
    else {
        lru = page_lru_base_type(page);
        if (PageActive(page))
            lru += LRU_ACTIVE;
    }
    return lru;
}

static inline enum lru_list page_lru_base_type(struct page *page)
{
    if (page_is_file_cache(page))
        return LRU_INACTIVE_FILE;
    return LRU_INACTIVE_ANON;
}

static inline int page_is_file_cache(struct page *page)
{
    return !PageSwapBacked(page);
}
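The flag-to-list mapping in page_lru() is plain index arithmetic on the enum from section 2.1. The sketch below reproduces it in user-space C so that the four evictable combinations can be checked directly. The `struct page_flags_sketch` type and the function name `page_lru_sketch` are hypothetical; the values 0, 1 and 2 for LRU_BASE, LRU_ACTIVE and LRU_FILE are the ones used by the kernel headers of this era.

```c
#include <stdbool.h>

#define LRU_BASE   0
#define LRU_ACTIVE 1
#define LRU_FILE   2

enum lru_list {
    LRU_INACTIVE_ANON = LRU_BASE,
    LRU_ACTIVE_ANON   = LRU_BASE + LRU_ACTIVE,
    LRU_INACTIVE_FILE = LRU_BASE + LRU_FILE,
    LRU_ACTIVE_FILE   = LRU_BASE + LRU_FILE + LRU_ACTIVE,
    LRU_UNEVICTABLE,
    NR_LRU_LISTS
};

/* Hypothetical reduction of struct page to the three flags that matter here. */
struct page_flags_sketch {
    bool swapbacked;    /* PG_swapbacked */
    bool active;        /* PG_active */
    bool unevictable;   /* PG_unevictable */
};

enum lru_list page_lru_sketch(const struct page_flags_sketch *f)
{
    int lru;

    if (f->unevictable)
        return LRU_UNEVICTABLE;

    /* Pages without PG_swapbacked are treated as file pages. */
    lru = f->swapbacked ? LRU_INACTIVE_ANON : LRU_INACTIVE_FILE;
    if (f->active)
        lru += LRU_ACTIVE;
    return (enum lru_list)lru;
}
```

Because the active list of each type sits exactly LRU_ACTIVE slots after its inactive list, code such as `lru - LRU_ACTIVE` can switch from an active list to the matching inactive one without a lookup table.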

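2.3.2 Other LRU move operations

| Action | Function |
|---|---|
| Move a page on an inactive list to the tail of that list | rotate_reclaimable_page() → pagevec_move_tail() → pagevec_move_tail_fn() |
| Move a page from an active LRU list to the inactive LRU list | deactivate_page() → lru_deactivate_file_fn() |
| Move a page from an inactive LRU list to the active LRU list | activate_page() → __activate_page() |

3. LRU Reclaim

The overall flow of reclaiming memory through the LRU is shown below: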

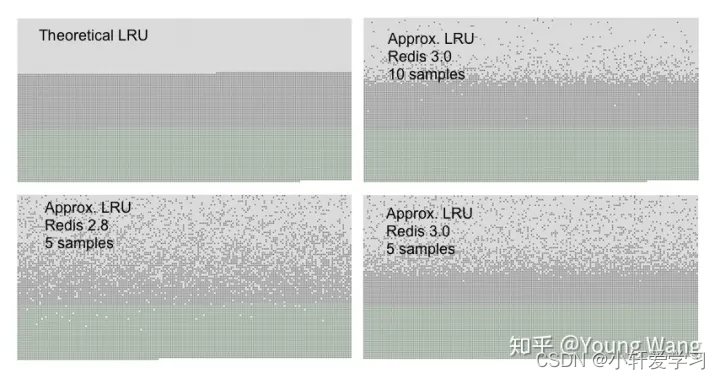

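3.1 LRU Update

To limit the performance impact, the kernel splits the pages on the LRU into active and inactive and reclaims memory from the inactive lists. A page that was accessed recently is active; a page that was not is inactive.

The kernel does not use a timer for this. Instead, it checks whether the Accessed bit in the PTE was set between two scans to decide whether the page has been accessed. The Accessed bit is cleared at the end of each scan: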

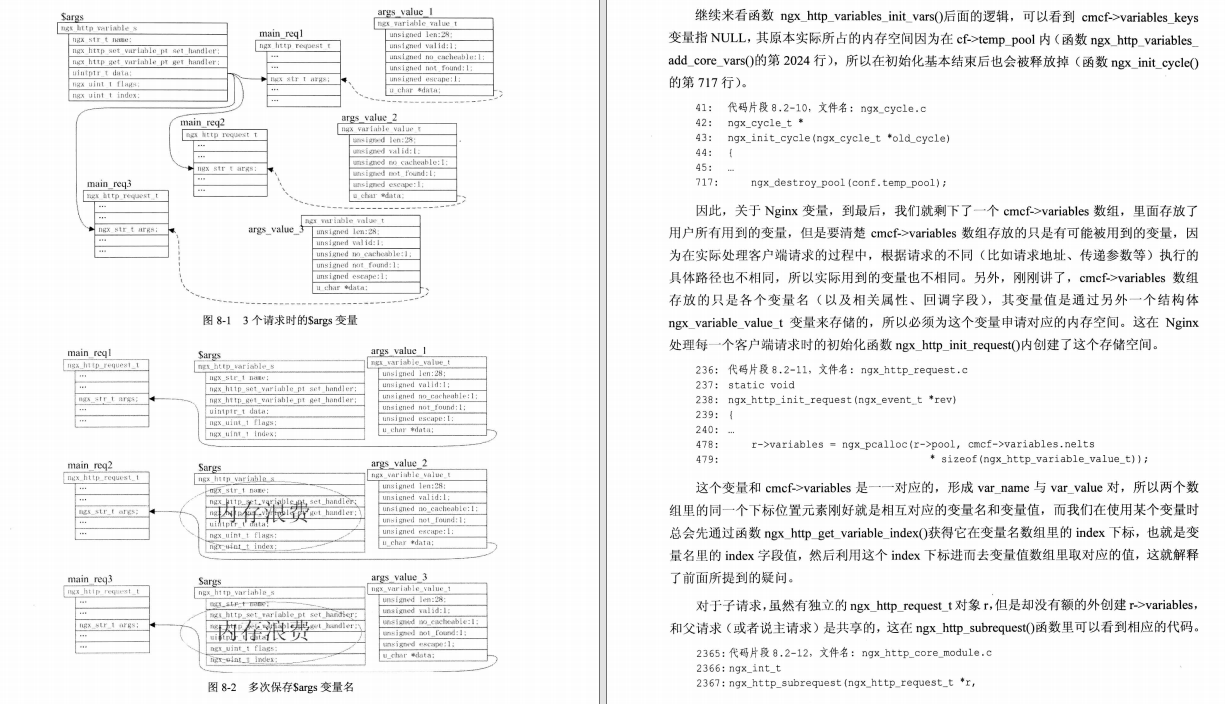

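A page may be mapped by several VMAs. The kernel uses reverse mapping to find all of them and counts how many of the VMAs have a PTE whose Accessed bit is set. This counting is done by page_referenced().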
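The "test and clear on every scan" idea can be sketched without any kernel machinery. In the sketch below, each mapping of the page is modeled as a hypothetical `struct pte_sketch` with a single `accessed` field, and the array of PTEs stands in for what the reverse-mapping walk would find. The function names are illustrative only.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model of one PTE that maps the page. */
struct pte_sketch {
    bool accessed;      /* set by hardware when the mapping is used */
};

/* Report whether the mapping was used since the last scan, then reset it. */
bool test_and_clear_young_sketch(struct pte_sketch *pte)
{
    bool was_accessed = pte->accessed;

    pte->accessed = false;
    return was_accessed;
}

/* Count how many mappings of the page were used since the previous scan. */
int page_referenced_sketch(struct pte_sketch *ptes, size_t nr_ptes)
{
    int referenced = 0;
    size_t i;

    for (i = 0; i < nr_ptes; i++)
        if (test_and_clear_young_sketch(&ptes[i]))
            referenced++;
    return referenced;
}
```

Because every scan clears the bits it reads, a non-zero result always means "accessed since the previous scan", which is what lets the kernel do without a timer.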

- 1. Handling in shrink_active_list(), the scan function for the active list:

shrink_node() → shrink_node_memcg() → shrink_list() → shrink_active_list():

shrink_active_list()
{
    ...
    /*
     * (1) If the Accessed bit is set in the PTE of any VMA that maps the page,
     *     and the page is file-backed executable code, the page is put back
     *     at the head of the active list.
     */
    if (page_referenced(page, 0, sc->target_mem_cgroup,
                &vm_flags)) {
        nr_rotated += hpage_nr_pages(page);
        /*
         * Identify referenced, file-backed active pages and
         * give them one more trip around the active list. So
         * that executable code get better chances to stay in
         * memory under moderate memory pressure. Anon pages
         * are not likely to be evicted by use-once streaming
         * IO, plus JVM can create lots of anon VM_EXEC pages,
         * so we ignore them here.
         */
        if ((vm_flags & VM_EXEC) && page_is_file_cache(page)) {
            list_add(&page->lru, &l_active);
            continue;
        }
    }

    /* (2) Every other page is moved from the active list to the inactive list */
    ClearPageActive(page);    /* we are de-activating */
    list_add(&page->lru, &l_inactive);
    ...
}

- 2. Handling in shrink_inactive_list(), the scan function for the inactive list:

shrink_node() → shrink_node_memcg() → shrink_list() → shrink_inactive_list() → shrink_page_list() → page_check_references():

static enum page_references page_check_references(struct page *page,
                          struct scan_control *sc)
{
    int referenced_ptes, referenced_page;
    unsigned long vm_flags;

    /* (1) Count the VMAs mapping the page whose PTE has the Accessed bit set */
    referenced_ptes = page_referenced(page, 1, sc->target_mem_cgroup,
                      &vm_flags);
    /* (2) Test and clear the PG_referenced flag in struct page */
    referenced_page = TestClearPageReferenced(page);

    /*
     * Mlock lost the isolation race with us. Let try_to_unmap()
     * move the page to the unevictable list.
     */
    if (vm_flags & VM_LOCKED)
        return PAGEREF_RECLAIM;

    /* (3) Accessed > 0 */
    if (referenced_ptes) {
        /* (3.1) Case 1: anonymous memory + Accessed: move the page from the inactive list back to the active list */
        if (PageSwapBacked(page))
            return PAGEREF_ACTIVATE;
        /*
         * All mapped pages start out with page table
         * references from the instantiating fault, so we need
         * to look twice if a mapped file page is used more
         * than once.
         *
         * Mark it and spare it for another trip around the
         * inactive list. Another page table reference will
         * lead to its activation.
         *
         * Note: the mark is set for activated pages as well
         * so that recently deactivated but used pages are
         * quickly recovered.
         */
        /* (3.2) File memory + Accessed: set the PG_referenced flag */
        SetPageReferenced(page);

        /*
         * (3.3) Case 2: file memory + (Accessed > 1 or PG_referenced already set):
         *       move the page from the inactive list back to the active list.
         *       With PG_referenced already set, Accessed was found set on two
         *       scans in a row: the first scan hit case 4, set PG_referenced
         *       and kept the page on the inactive list; the second scan lands
         *       here in case 2.
         */
        if (referenced_page || referenced_ptes > 1)
            return PAGEREF_ACTIVATE;

        /*
         * Activate file-backed executable pages after first usage.
         */
        /* (3.4) Case 3: file memory + Accessed + executable code: move the page from the inactive list back to the active list */
        if (vm_flags & VM_EXEC)
            return PAGEREF_ACTIVATE;

        /* (3.5) Case 4: file memory + Accessed, anything else: keep the page on the inactive list and do not reclaim it */
        return PAGEREF_KEEP;
    }

    /* (4) Accessed == 0: the page can be reclaimed */
    /* Reclaim if clean, defer dirty pages to writeback */
    if (referenced_page && !PageSwapBacked(page))
        return PAGEREF_RECLAIM_CLEAN;

    return PAGEREF_RECLAIM;
}
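The decision logic above is easier to follow when it is separated from the rmap walk. The sketch below restates it as a small user-space function over a hypothetical `struct page_state_sketch`; the number of referenced PTEs is passed in as a parameter instead of being computed by page_referenced(). It is meant as a reading aid for the four cases, not as a replacement for the kernel function.

```c
#include <stdbool.h>

enum page_references {
    PAGEREF_RECLAIM,
    PAGEREF_RECLAIM_CLEAN,
    PAGEREF_KEEP,
    PAGEREF_ACTIVATE,
};

/* Hypothetical reduction of the page and VMA state used by the decision. */
struct page_state_sketch {
    bool swapbacked;      /* PG_swapbacked: anonymous memory */
    bool pg_referenced;   /* PG_referenced: seen referenced on an earlier scan */
    bool vm_exec;         /* some mapping VMA has VM_EXEC */
    bool vm_locked;       /* some mapping VMA has VM_LOCKED */
};

enum page_references
check_references_sketch(struct page_state_sketch *p, int referenced_ptes)
{
    /* Test and clear PG_referenced, as TestClearPageReferenced() does. */
    bool referenced_page = p->pg_referenced;

    p->pg_referenced = false;

    if (p->vm_locked)
        return PAGEREF_RECLAIM;

    if (referenced_ptes) {
        if (p->swapbacked)
            return PAGEREF_ACTIVATE;        /* case 1 */

        p->pg_referenced = true;            /* remember this reference */

        if (referenced_page || referenced_ptes > 1)
            return PAGEREF_ACTIVATE;        /* case 2 */
        if (p->vm_exec)
            return PAGEREF_ACTIVATE;        /* case 3 */
        return PAGEREF_KEEP;                /* case 4 */
    }

    if (referenced_page && !p->swapbacked)
        return PAGEREF_RECLAIM_CLEAN;
    return PAGEREF_RECLAIM;
}
```

A file page that is referenced through a single PTE therefore needs two consecutive scans with the Accessed bit set before it is promoted: the first scan returns PAGEREF_KEEP and sets PG_referenced, the second returns PAGEREF_ACTIVATE.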

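3.2 Swappiness

Whether reclaim prefers anonymous memory or file memory is controlled by the /proc/sys/vm/swappiness parameter.

swappiness ranges from 0 to 100 and usually defaults to 60 (an empirical value). The higher the value, the more reclaim favors anonymous pages. At 100, anonymous pages and the page cache have the same priority.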

shrink_node() → shrink_node_memcg() → get_scan_count():
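The listing for get_scan_count() is not reproduced here. As a rough guide to how swappiness enters the calculation, kernels of this generation derive two weights from it: the anonymous weight is swappiness itself and the file weight is 200 minus swappiness. The sketch below shows only that weighting. It is a deliberate simplification: the real function also scales both weights by the recent scanned/rotated statistics of each list and has several special cases, all of which are ignored here. The function names are hypothetical.

```c
/* Weight given to the anonymous LRU lists. */
int anon_prio_sketch(int swappiness)
{
    return swappiness;
}

/* Weight given to the file LRU lists. */
int file_prio_sketch(int swappiness)
{
    return 200 - swappiness;
}

/*
 * Share of scan pressure (in percent) that goes to anonymous memory,
 * ASSUMING both list types have identical recent scanned/rotated ratios.
 */
int anon_share_percent_sketch(int swappiness)
{
    int anon = anon_prio_sketch(swappiness);
    int file = file_prio_sketch(swappiness);

    return anon * 100 / (anon + file);
}
```

Under this simplification the default of 60 sends about 30% of the pressure to anonymous memory, 100 splits it evenly (which is why 100 means "same priority"), and 0 gives anonymous memory no weight at all.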

3.3 Reverse Mapping

Through reverse mapping, a page can find every VMA that maps it. This is a central piece of the design and is covered in a separate article: "Rmap memory reverse mapping mechanism".

3.4 Code Implementation

The core of LRU reclaim is implemented by shrink_node(). Whichever kind of reclaim is started from get_page_from_freelist() or __alloc_pages_slowpath(), it ends up calling shrink_node().

3.4.1 struct scan_control

Although every path calls shrink_node(), the arguments differ and so does the reclaim behavior. struct scan_control, sc for short, defines the reclaim parameters.

struct scan_control {
    /* How many pages shrink_list() should reclaim */
    unsigned long nr_to_reclaim;

    /* This context's GFP mask: the allocation flags of the request that triggered reclaim */
    gfp_t gfp_mask;

    /* Allocation order of the request that triggered reclaim */
    int order;

    /*
     * Nodemask of nodes allowed by the caller. If NULL, all nodes
     * are scanned.
     */
    nodemask_t *nodemask;

    /*
     * The memory cgroup that hit its limit and as a result is the
     * primary target of this reclaim invocation.
     */
    struct mem_cgroup *target_mem_cgroup;

    /*
     * Scan (total_size >> priority) pages at once.
     * The smaller the priority value, the more pages are scanned per pass;
     * the larger the value, the fewer. The default priority is 12.
     */
    int priority;

    /* The highest zone to isolate pages for reclaim from */
    enum zone_type reclaim_idx;

    /* Writepage batching in laptop mode; RECLAIM_WRITE */
    /* Whether writeback is allowed (related to __GFP_IO and __GFP_FS in the allocation flags) */
    unsigned int may_writepage:1;

    /* Can mapped pages be reclaimed? */
    /* Whether unmapping is allowed, i.e. clearing every page table entry that maps the page */
    unsigned int may_unmap:1;

    /* Can pages be swapped as part of reclaim? */
    /* If not, the anonymous LRU lists are not scanned during reclaim */
    unsigned int may_swap:1;

    /*
     * Cgroups are not reclaimed below their configured memory.low,
     * unless we threaten to OOM. If any cgroups are skipped due to
     * memory.low and nothing was reclaimed, go back for memory.low.
     */
    unsigned int memcg_low_reclaim:1;
    unsigned int memcg_low_skipped:1;

    unsigned int hibernation_mode:1;

    /* One of the zones is ready for compaction */
    /* Set after scanning; reclaim uses it to decide whether memory compaction should run */
    unsigned int compaction_ready:1;

    /* Incremented by the number of inactive pages that were scanned */
    unsigned long nr_scanned;

    /* Number of pages freed so far during a call to shrink_zones() */
    unsigned long nr_reclaimed;
};
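The effect of the priority field is easiest to see with numbers. The sketch below computes the scan window `total_size >> priority` for one LRU list. `scan_window_sketch` is a hypothetical helper name; DEF_PRIORITY is 12, as stated in the comment on the field.

```c
#define DEF_PRIORITY 12

/* Number of pages of an LRU list examined in one pass at a given priority. */
unsigned long scan_window_sketch(unsigned long lru_size, int priority)
{
    return lru_size >> priority;
}
```

With 4 KiB pages, a list of 2^20 pages (4 GiB) is scanned 256 pages at a time at the default priority, while priority 0 covers the whole list. A list shorter than 4096 pages yields a window of zero at the default priority, which is one reason the reclaim loop lowers the priority value step by step when a pass does not free enough memory.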

3.4.2 shrink_node()

shrink_node() → shrink_node_memcg():

static void shrink_node_memcg(struct pglist_data *pgdat, struct mem_cgroup *memcg,
                  struct scan_control *sc, unsigned long lru_pages)
{
    /* (1) Get the lruvec of the current node */
    struct lruvec *lruvec = mem_cgroup_lruvec(pgdat, memcg);
    unsigned long nr[NR_LRU_LISTS];
    unsigned long targets[NR_LRU_LISTS];
    unsigned long nr_to_scan;
    enum lru_list lru;
    unsigned long nr_reclaimed = 0;
    unsigned long nr_to_reclaim = sc->nr_to_reclaim;
    struct blk_plug plug;
    bool scan_adjusted;

    /* (2) From the sc parameters and swappiness, compute how many pages to scan on each type of LRU list and store the result in nr[] */
    get_scan_count(lruvec, memcg, sc, nr, lru_pages);

    /* Record the original scan target for proportional adjustments later */
    memcpy(targets, nr, sizeof(nr));

    /*
     * Global reclaiming within direct reclaim at DEF_PRIORITY is a normal
     * event that can occur when there is little memory pressure e.g.
     * multiple streaming readers/writers. Hence, we do not abort scanning
     * when the requested number of pages are reclaimed when scanning at
     * DEF_PRIORITY on the assumption that the fact we are direct
     * reclaiming implies that kswapd is not keeping up and it is best to
     * do a batch of work at once. For memcg reclaim one check is made to
     * abort proportional reclaim if either the file or anon lru has already
     * dropped to zero at the first pass.
     */
    scan_adjusted = (global_reclaim(sc) && !current_is_kswapd() &&
             sc->priority == DEF_PRIORITY);

    blk_start_plug(&plug);
    /* (3) Keep scanning while there is scan budget left */
    while (nr[LRU_INACTIVE_ANON] || nr[LRU_ACTIVE_FILE] ||
                    nr[LRU_INACTIVE_FILE]) {
        unsigned long nr_anon, nr_file, percentage;
        unsigned long nr_scanned;

        /*
         * (4) Scan each evictable LRU list in turn:
         *     LRU_INACTIVE_ANON
         *     LRU_ACTIVE_ANON
         *     LRU_INACTIVE_FILE
         *     LRU_ACTIVE_FILE
         */
        for_each_evictable_lru(lru) {
            if (nr[lru]) {
                nr_to_scan = min(nr[lru], SWAP_CLUSTER_MAX);
                nr[lru] -= nr_to_scan;

                /* (4.1) Core step: scan the active and inactive lists */
                nr_reclaimed += shrink_list(lru, nr_to_scan,
                                lruvec, sc);
            }
        }

        cond_resched();

        if (nr_reclaimed < nr_to_reclaim || scan_adjusted)
            continue;

        /*
         * For kswapd and memcg, reclaim at least the number of pages
         * requested. Ensure that the anon and file LRUs are scanned
         * proportionally what was requested by get_scan_count(). We
         * stop reclaiming one LRU and reduce the amount scanning
         * proportional to the original scan target.
         */
        nr_file = nr[LRU_INACTIVE_FILE] + nr[LRU_ACTIVE_FILE];
        nr_anon = nr[LRU_INACTIVE_ANON] + nr[LRU_ACTIVE_ANON];

        /*
         * It's just vindictive to attack the larger once the smaller
         * has gone to zero. And given the way we stop scanning the
         * smaller below, this makes sure that we only make one nudge
         * towards proportionality once we've got nr_to_reclaim.
         */
        if (!nr_file || !nr_anon)
            break;

        if (nr_file > nr_anon) {
            unsigned long scan_target = targets[LRU_INACTIVE_ANON] +
                        targets[LRU_ACTIVE_ANON] + 1;
            lru = LRU_BASE;
            percentage = nr_anon * 100 / scan_target;
        } else {
            unsigned long scan_target = targets[LRU_INACTIVE_FILE] +
                        targets[LRU_ACTIVE_FILE] + 1;
            lru = LRU_FILE;
            percentage = nr_file * 100 / scan_target;
        }

        /* Stop scanning the smaller of the LRU */
        nr[lru] = 0;
        nr[lru + LRU_ACTIVE] = 0;

        /*
         * Recalculate the other LRU scan count based on its original
         * scan target and the percentage scanning already complete
         */
        lru = (lru == LRU_FILE) ? LRU_BASE : LRU_FILE;
        nr_scanned = targets[lru] - nr[lru];
        nr[lru] = targets[lru] * (100 - percentage) / 100;
        nr[lru] -= min(nr[lru], nr_scanned);

        lru += LRU_ACTIVE;
        nr_scanned = targets[lru] - nr[lru];
        nr[lru] = targets[lru] * (100 - percentage) / 100;
        nr[lru] -= min(nr[lru], nr_scanned);

        scan_adjusted = true;
    }
    blk_finish_plug(&plug);
    sc->nr_reclaimed += nr_reclaimed;

    /*
     * Even if we did not try to evict anon pages at all, we want to
     * rebalance the anon lru active/inactive ratio.
     */
    if (inactive_list_is_low(lruvec, false, sc, true))
        shrink_active_list(SWAP_CLUSTER_MAX, lruvec,
                   sc, LRU_ACTIVE_ANON);
}
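The proportional adjustment at the end of the loop is the least obvious part of this function, so it helps to run it on concrete numbers. The sketch below lifts just that arithmetic into a standalone function over plain arrays. The enum names and `rebalance_scan_sketch` are hypothetical; the calculation itself follows the listing above.

```c
enum {
    INACTIVE_ANON,
    ACTIVE_ANON,
    INACTIVE_FILE,
    ACTIVE_FILE,
    NR_EVICTABLE
};

static unsigned long min_ul(unsigned long a, unsigned long b)
{
    return a < b ? a : b;
}

/*
 * nr[]      : pages still left to scan on each list
 * targets[] : the original scan targets recorded before the loop started
 */
void rebalance_scan_sketch(unsigned long nr[NR_EVICTABLE],
                           const unsigned long targets[NR_EVICTABLE])
{
    unsigned long nr_file = nr[INACTIVE_FILE] + nr[ACTIVE_FILE];
    unsigned long nr_anon = nr[INACTIVE_ANON] + nr[ACTIVE_ANON];
    unsigned long scan_target, percentage, nr_scanned;
    int smaller, other, i;

    if (!nr_file || !nr_anon)
        return;

    if (nr_file > nr_anon) {
        scan_target = targets[INACTIVE_ANON] + targets[ACTIVE_ANON] + 1;
        smaller = INACTIVE_ANON;
        other = INACTIVE_FILE;
        percentage = nr_anon * 100 / scan_target;
    } else {
        scan_target = targets[INACTIVE_FILE] + targets[ACTIVE_FILE] + 1;
        smaller = INACTIVE_FILE;
        other = INACTIVE_ANON;
        percentage = nr_file * 100 / scan_target;
    }

    /* Stop scanning the type with less work remaining. */
    nr[smaller] = 0;
    nr[smaller + 1] = 0;

    /* Cut the other type down to the same completed fraction of its target. */
    for (i = other; i <= other + 1; i++) {
        nr_scanned = targets[i] - nr[i];
        nr[i] = targets[i] * (100 - percentage) / 100;
        nr[i] -= min_ul(nr[i], nr_scanned);
    }
}
```

Take targets of 100 pages for each anonymous list and 400 for each file list, with 32 pages already scanned on every list. Anonymous memory has 67% of its target left, so it is stopped, and each file list is limited to 33% of its target (132 pages); 32 of those are already done, which leaves 100 pages per file list.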

3.4.3 shrink_list()

static unsigned long shrink_list(enum lru_list lru, unsigned long nr_to_scan,
                 struct lruvec *lruvec, struct scan_control *sc)
{
    /*
     * (4.1.1) Scan an active list.
     *         The active list is scanned only when the inactive list has become too small.
     *         Scanning the active list reclaims nothing; it only moves pages
     *         that were not accessed to the inactive list.
     */
    if (is_active_lru(lru)) {
        if (inactive_list_is_low(lruvec, is_file_lru(lru), sc, true))
            shrink_active_list(nr_to_scan, lruvec, sc, lru);
        return 0;
    }

    /* (4.1.2) Scan an inactive list. This is where pages are actually reclaimed */
    return shrink_inactive_list(nr_to_scan, lruvec, sc, lru);
}

↓

/*
 * The inactive anon list should be small enough that the VM never has
 * to do too much work.
 *
 * The inactive file list should be small enough to leave most memory
 * to the established workingset on the scan-resistant active list,
 * but large enough to avoid thrashing the aggregate readahead window.
 *
 * Both inactive lists should also be large enough that each inactive
 * page has a chance to be referenced again before it is reclaimed.
 *
 * If that fails and refaulting is observed, the inactive list grows.
 *
 * The inactive_ratio is the target ratio of ACTIVE to INACTIVE pages
 * on this LRU, maintained by the pageout code. An inactive_ratio
 * of 3 means 3:1 or 25% of the pages are kept on the inactive list.
 *
 * total     target    max
 * memory    ratio     inactive
 * -------------------------------------
 *   10MB       1         5MB
 *  100MB       1        50MB
 *    1GB       3       250MB
 *   10GB      10       0.9GB
 *  100GB      31         3GB
 *    1TB     101        10GB
 *   10TB     320        32GB
 */
static bool inactive_list_is_low(struct lruvec *lruvec, bool file,
                 struct scan_control *sc, bool trace)
{
    enum lru_list active_lru = file * LRU_FILE + LRU_ACTIVE;
    struct pglist_data *pgdat = lruvec_pgdat(lruvec);
    enum lru_list inactive_lru = file * LRU_FILE;
    unsigned long inactive, active;
    unsigned long inactive_ratio;
    unsigned long refaults;
    unsigned long gb;

    /*
     * If we don't have swap space, anonymous page deactivation
     * is pointless.
     */
    if (!file && !total_swap_pages)
        return false;

    inactive = lruvec_lru_size(lruvec, inactive_lru, sc->reclaim_idx);
    active = lruvec_lru_size(lruvec, active_lru, sc->reclaim_idx);

    /*
     * When refaults are being observed, it means a new workingset
     * is being established. Disable active list protection to get
     * rid of the stale workingset quickly.
     */
    /* Compute the inactive ratio */
    refaults = lruvec_page_state(lruvec, WORKINGSET_ACTIVATE);
    if (file && lruvec->refaults != refaults) {
        inactive_ratio = 0;
    } else {
        gb = (inactive + active) >> (30 - PAGE_SHIFT);
        if (gb)
            inactive_ratio = int_sqrt(10 * gb);
        else
            inactive_ratio = 1;
    }

    if (trace)
        trace_mm_vmscan_inactive_list_is_low(pgdat->node_id, sc->reclaim_idx,
            lruvec_lru_size(lruvec, inactive_lru, MAX_NR_ZONES), inactive,
            lruvec_lru_size(lruvec, active_lru, MAX_NR_ZONES), active,
            inactive_ratio, file);

    return inactive * inactive_ratio < active;
}
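The ratio column of the table in the comment follows directly from `int_sqrt(10 * gb)`. The sketch below recomputes it in user space. `isqrt_sketch` is a deliberately naive integer square root written for this example (the kernel has its own int_sqrt()), and the refault shortcut that forces the ratio to 0 is left out.

```c
#include <stdbool.h>

/* Naive integer square root: largest r such that r * r <= x. */
unsigned long isqrt_sketch(unsigned long x)
{
    unsigned long r = 0;

    while ((r + 1) * (r + 1) <= x)
        r++;
    return r;
}

/* Target active:inactive ratio for an LRU type holding 'gb' gigabytes. */
unsigned long inactive_ratio_sketch(unsigned long gb)
{
    return gb ? isqrt_sketch(10 * gb) : 1;
}

/* True when the inactive list is too small relative to the active list. */
bool inactive_is_low_sketch(unsigned long inactive, unsigned long active,
                            unsigned long ratio)
{
    return inactive * ratio < active;
}
```

For 1 GB this gives a ratio of 3, for 10 GB it gives 10, and for 1 TB (1024 GB) it gives 101, matching the table. With a ratio of 3, an LRU holding 100 inactive and 400 active pages counts as low (300 < 400), so the active list gets scanned to refill the inactive one.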

3.4.4 shrink_active_list()

This function scans an active LRU list in order to produce more inactive pages.

static void shrink_active_list(unsigned long nr_to_scan,
                   struct lruvec *lruvec,
                   struct scan_control *sc,
                   enum lru_list lru)
{
    unsigned long nr_taken;
    unsigned long nr_scanned;
    unsigned long vm_flags;
    LIST_HEAD(l_hold);    /* The pages which were snipped off */
    LIST_HEAD(l_active);
    LIST_HEAD(l_inactive);
    struct page *page;
    struct zone_reclaim_stat *reclaim_stat = &lruvec->reclaim_stat;
    unsigned nr_deactivate, nr_activate;
    unsigned nr_rotated = 0;
    isolate_mode_t isolate_mode = 0;
    int file = is_file_lru(lru);
    struct pglist_data *pgdat = lruvec_pgdat(lruvec);

    lru_add_drain();

    if (!sc->may_unmap)
        isolate_mode |= ISOLATE_UNMAPPED;

    spin_lock_irq(&pgdat->lru_lock);

    /* (4.1.1.1) Take nr_to_scan pages from the tail of the target list and park them on the l_hold list */
    nr_taken = isolate_lru_pages(nr_to_scan, lruvec, &l_hold,
                     &nr_scanned, sc, isolate_mode, lru);

    __mod_node_page_state(pgdat, NR_ISOLATED_ANON + file, nr_taken);
    reclaim_stat->recent_scanned[file] += nr_taken;

    __count_vm_events(PGREFILL, nr_scanned);
    count_memcg_events(lruvec_memcg(lruvec), PGREFILL, nr_scanned);

    spin_unlock_irq(&pgdat->lru_lock);

    /* (4.1.1.2) Process the pages on l_hold one by one */
    while (!list_empty(&l_hold)) {
        cond_resched();
        page = lru_to_page(&l_hold);
        list_del(&page->lru);

        /* (4.1.1.2.1) If the page is not evictable, put it back on an LRU list */
        if (unlikely(!page_evictable(page))) {
            putback_lru_page(page);
            continue;
        }

        /* (4.1.1.2.2) If buffer_heads are over the limit, try to release the buffer_heads attached to the page */
        if (unlikely(buffer_heads_over_limit)) {
            if (page_has_private(page) && trylock_page(page)) {
                if (page_has_private(page))
                    try_to_release_page(page, 0);
                unlock_page(page);
            }
        }

        /*
         * (4.1.1.2.3) Walk every VMA found through reverse mapping and check
         *             whether the Accessed bit is set in its PTE. If it is set
         *             for any VMA, the page has been accessed, i.e. it is referenced.
         */
        if (page_referenced(page, 0, sc->target_mem_cgroup,
                    &vm_flags)) {
            nr_rotated += hpage_nr_pages(page);
            /*
             * Identify referenced, file-backed active pages and
             * give them one more trip around the active list. So
             * that executable code get better chances to stay in
             * memory under moderate memory pressure. Anon pages
             * are not likely to be evicted by use-once streaming
             * IO, plus JVM can create lots of anon VM_EXEC pages,
             * so we ignore them here.
             */
            /* File-backed executable code that was accessed gets one more chance: it is not moved to the inactive list and is queued to go back on the active list */
            if ((vm_flags & VM_EXEC) && page_is_file_cache(page)) {
                list_add(&page->lru, &l_active);
                continue;
            }
        }

        /* (4.1.1.2.4) For every other page, clear PG_active and queue it for the inactive list */
        ClearPageActive(page);    /* we are de-activating */
        list_add(&page->lru, &l_inactive);
    }

    /*
     * Move pages back to the lru list.
     */
    spin_lock_irq(&pgdat->lru_lock);
    /*
     * Count referenced pages from currently used mappings as rotated,
     * even though only some of them are actually re-activated. This
     * helps balance scan pressure between file and anonymous pages in
     * get_scan_count.
     */
    reclaim_stat->recent_rotated[file] += nr_rotated;

    /* (4.1.1.3) Put the pages on the temporary l_active list back on the original active list */
    nr_activate = move_active_pages_to_lru(lruvec, &l_active, &l_hold, lru);
    /* (4.1.1.4) Move the pages on the temporary l_inactive list onto the real inactive list */
    nr_deactivate = move_active_pages_to_lru(lruvec, &l_inactive, &l_hold, lru - LRU_ACTIVE);
    __mod_node_page_state(pgdat, NR_ISOLATED_ANON + file, -nr_taken);
    spin_unlock_irq(&pgdat->lru_lock);

    mem_cgroup_uncharge_list(&l_hold);
    /* (4.1.1.5) Free the leftover pages on l_hold that nobody holds any more */
    free_unref_page_list(&l_hold);
    trace_mm_vmscan_lru_shrink_active(pgdat->node_id, nr_taken, nr_activate,
            nr_deactivate, nr_rotated, sc->priority, file);
}

3.4.5 shrink_inactive_list()

This function scans an inactive LRU list and tries to reclaim its pages.

static noinline_for_stack unsigned long
shrink_inactive_list(unsigned long nr_to_scan, struct lruvec *lruvec,
             struct scan_control *sc, enum lru_list lru)
{
    LIST_HEAD(page_list);
    unsigned long nr_scanned;
    unsigned long nr_reclaimed = 0;
    unsigned long nr_taken;
    struct reclaim_stat stat = {};
    isolate_mode_t isolate_mode = 0;
    int file = is_file_lru(lru);
    struct pglist_data *pgdat = lruvec_pgdat(lruvec);
    struct zone_reclaim_stat *reclaim_stat = &lruvec->reclaim_stat;
    bool stalled = false;

    while (unlikely(too_many_isolated(pgdat, file, sc))) {
        if (stalled)
            return 0;

        /* wait a bit for the reclaimer. */
        msleep(100);
        stalled = true;

        /* We are about to die and free our memory. Return now. */
        if (fatal_signal_pending(current))
            return SWAP_CLUSTER_MAX;
    }

    lru_add_drain();

    if (!sc->may_unmap)
        isolate_mode |= ISOLATE_UNMAPPED;

    spin_lock_irq(&pgdat->lru_lock);

    /* (4.1.2.1) Try to take nr_to_scan pages off the inactive list and put them on the temporary list page_list */
    nr_taken = isolate_lru_pages(nr_to_scan, lruvec, &page_list,
                     &nr_scanned, sc, isolate_mode, lru);

    __mod_node_page_state(pgdat, NR_ISOLATED_ANON + file, nr_taken);
    reclaim_stat->recent_scanned[file] += nr_taken;

    if (current_is_kswapd()) {
        if (global_reclaim(sc))
            __count_vm_events(PGSCAN_KSWAPD, nr_scanned);
        count_memcg_events(lruvec_memcg(lruvec), PGSCAN_KSWAPD,
                   nr_scanned);
    } else {
        if (global_reclaim(sc))
            __count_vm_events(PGSCAN_DIRECT, nr_scanned);
        count_memcg_events(lruvec_memcg(lruvec), PGSCAN_DIRECT,
                   nr_scanned);
    }
    spin_unlock_irq(&pgdat->lru_lock);

    if (nr_taken == 0)
        return 0;

    /* (4.1.2.2) Walk the temporary list page_list and do the actual reclaim */
    nr_reclaimed = shrink_page_list(&page_list, pgdat, sc, 0,
                &stat, false);

    spin_lock_irq(&pgdat->lru_lock);

    if (current_is_kswapd()) {
        if (global_reclaim(sc))
            __count_vm_events(PGSTEAL_KSWAPD, nr_reclaimed);
        count_memcg_events(lruvec_memcg(lruvec), PGSTEAL_KSWAPD,
                   nr_reclaimed);
    } else {
        if (global_reclaim(sc))
            __count_vm_events(PGSTEAL_DIRECT, nr_reclaimed);
        count_memcg_events(lruvec_memcg(lruvec), PGSTEAL_DIRECT,
                   nr_reclaimed);
    }

    /* (4.1.2.3) On return, page_list holds the pages that could not be reclaimed and must go back on the inactive/active lists */
    putback_inactive_pages(lruvec, &page_list);

    __mod_node_page_state(pgdat, NR_ISOLATED_ANON + file, -nr_taken);

    spin_unlock_irq(&pgdat->lru_lock);

    mem_cgroup_uncharge_list(&page_list);
    /* (4.1.2.4) Free the pages that are still left on page_list */
    free_unref_page_list(&page_list);

    /*
     * If reclaim is isolating dirty pages under writeback, it implies
     * that the long-lived page allocation rate is exceeding the page
     * laundering rate. Either the global limits are not being effective
     * at throttling processes due to the page distribution throughout
     * zones or there is heavy usage of a slow backing device. The
     * only option is to throttle from reclaim context which is not ideal
     * as there is no guarantee the dirtying process is throttled in the
     * same way balance_dirty_pages() manages.
     *
     * Once a zone is flagged ZONE_WRITEBACK, kswapd will count the number
     * of pages under pages flagged for immediate reclaim and stall if any
     * are encountered in the nr_immediate check below.
     */
    if (stat.nr_writeback && stat.nr_writeback == nr_taken)
        set_bit(PGDAT_WRITEBACK, &pgdat->flags);

    /*
     * If dirty pages are scanned that are not queued for IO, it
     * implies that flushers are not doing their job. This can
     * happen when memory pressure pushes dirty pages to the end of
     * the LRU before the dirty limits are breached and the dirty
     * data has expired. It can also happen when the proportion of
     * dirty pages grows not through writes but through memory
     * pressure reclaiming all the clean cache. And in some cases,
     * the flushers simply cannot keep up with the allocation
     * rate. Nudge the flusher threads in case they are asleep.
     */
    if (stat.nr_unqueued_dirty == nr_taken)
        wakeup_flusher_threads(WB_REASON_VMSCAN);

    /*
     * Legacy memcg will stall in page writeback so avoid forcibly
     * stalling here.
     */
    if (sane_reclaim(sc)) {
        /*
         * Tag a zone as congested if all the dirty pages scanned were
         * backed by a congested BDI and wait_iff_congested will stall.
         */
        if (stat.nr_dirty && stat.nr_dirty == stat.nr_congested)
            set_bit(PGDAT_CONGESTED, &pgdat->flags);

        /* Allow kswapd to start writing pages during reclaim. */
        if (stat.nr_unqueued_dirty == nr_taken)
            set_bit(PGDAT_DIRTY, &pgdat->flags);

        /*
         * If kswapd scans pages marked for immediate
         * reclaim and under writeback (nr_immediate), it implies
         * that pages are cycling through the LRU faster than
         * they are written so also forcibly stall.
         */
        if (stat.nr_immediate && current_may_throttle())
            congestion_wait(BLK_RW_ASYNC, HZ/10);
    }

    /*
     * Stall direct reclaim for IO completions if underlying BDIs or zone
     * is congested. Allow kswapd to continue until it starts encountering
     * unqueued dirty pages or cycling through the LRU too quickly.
     */
    if (!sc->hibernation_mode && !current_is_kswapd() &&
        current_may_throttle())
        wait_iff_congested(pgdat, BLK_RW_ASYNC, HZ/10);

    trace_mm_vmscan_lru_shrink_inactive(pgdat->node_id,
            nr_scanned, nr_reclaimed,
            stat.nr_dirty, stat.nr_writeback,
            stat.nr_congested, stat.nr_immediate,
            stat.nr_activate, stat.nr_ref_keep,
            stat.nr_unmap_fail,
            sc->priority, file);
    return nr_reclaimed;
}

↓

static unsigned long shrink_page_list(struct list_head *page_list,

struct pglist_data *pgdat,

struct scan_control *sc,

enum ttu_flags ttu_flags,

struct reclaim_stat *stat,

bool force_reclaim)

{

LIST_HEAD(ret_pages);

LIST_HEAD(free_pages);

int pgactivate = 0;

unsigned nr_unqueued_dirty = 0;

unsigned nr_dirty = 0;

unsigned nr_congested = 0;

unsigned nr_reclaimed = 0;

unsigned nr_writeback = 0;

unsigned nr_immediate = 0;

unsigned nr_ref_keep = 0;

unsigned nr_unmap_fail = 0;

cond_resched();/* (4.1.2.2.1) 扫描临时链表 page_list,尝试逐个回收其中的 page */

while (!list_empty(page_list)) {struct address_space *mapping;struct page *page;int may_enter_fs;enum page_references references = PAGEREF_RECLAIM_CLEAN;bool dirty, writeback;cond_resched();page = lru_to_page(page_list);list_del(&page->lru);if (!trylock_page(page))goto keep;VM_BUG_ON_PAGE(PageActive(page), page);sc->nr_scanned++;if (unlikely(!page_evictable(page)))goto activate_locked;if (!sc->may_unmap && page_mapped(page))goto keep_locked;/* Double the slab pressure for mapped and swapcache pages */if ((page_mapped(page) || PageSwapCache(page)) &&!(PageAnon(page) && !PageSwapBacked(page)))sc->nr_scanned++;may_enter_fs = (sc->gfp_mask & __GFP_FS) ||(PageSwapCache(page) && (sc->gfp_mask & __GFP_IO));/** The number of dirty pages determines if a zone is marked* reclaim_congested which affects wait_iff_congested. kswapd* will stall and start writing pages if the tail of the LRU* is all dirty unqueued pages.*/page_check_dirty_writeback(page, &dirty, &writeback);if (dirty || writeback)nr_dirty++;if (dirty && !writeback)nr_unqueued_dirty++;/** Treat this page as congested if the underlying BDI is or if* pages are cycling through the LRU so quickly that the* pages marked for immediate reclaim are making it to the* end of the LRU a second time.*/mapping = page_mapping(page);if (((dirty || writeback) && mapping &&inode_write_congested(mapping->host)) ||(writeback && PageReclaim(page)))nr_congested++;/** If a page at the tail of the LRU is under writeback, there* are three cases to consider.** 1) If reclaim is encountering an excessive number of pages* under writeback and this page is both under writeback and* PageReclaim then it indicates that pages are being queued* for IO but are being recycled through the LRU before the* IO can complete. 
Waiting on the page itself risks an* indefinite stall if it is impossible to writeback the* page due to IO error or disconnected storage so instead* note that the LRU is being scanned too quickly and the* caller can stall after page list has been processed.** 2) Global or new memcg reclaim encounters a page that is* not marked for immediate reclaim, or the caller does not* have __GFP_FS (or __GFP_IO if it's simply going to swap,* not to fs). In this case mark the page for immediate* reclaim and continue scanning.** Require may_enter_fs because we would wait on fs, which* may not have submitted IO yet. And the loop driver might* enter reclaim, and deadlock if it waits on a page for* which it is needed to do the write (loop masks off* __GFP_IO|__GFP_FS for this reason); but more thought* would probably show more reasons.** 3) Legacy memcg encounters a page that is already marked* PageReclaim. memcg does not have any dirty pages* throttling so we could easily OOM just because too many* pages are in writeback and there is nothing else to* reclaim. Wait for the writeback to complete.** In cases 1) and 2) we activate the pages to get them out of* the way while we continue scanning for clean pages on the* inactive list and refilling from the active list. 
		 * The observation here is that waiting for disk writes is more
		 * expensive than potentially causing reloads down the line.
		 * Since they're marked for immediate reclaim, they won't put
		 * memory pressure on the cache working set any longer than it
		 * takes to write them to disk.
		 */
		/* (4.1.2.2.2) Pages under writeback: put them back on the active list (cases 1 and 2), or wait for the writeback to finish and retry (case 3) */
		if (PageWriteback(page)) {
			/* Case 1 above */
			if (current_is_kswapd() &&
			    PageReclaim(page) &&
			    test_bit(PGDAT_WRITEBACK, &pgdat->flags)) {
				nr_immediate++;
				goto activate_locked;

			/* Case 2 above */
			} else if (sane_reclaim(sc) ||
			    !PageReclaim(page) || !may_enter_fs) {
				/*
				 * This is slightly racy - end_page_writeback()
				 * might have just cleared PageReclaim, then
				 * setting PageReclaim here end up interpreted
				 * as PageReadahead - but that does not matter
				 * enough to care. What we do want is for this
				 * page to have PageReclaim set next time memcg
				 * reclaim reaches the tests above, so it will
				 * then wait_on_page_writeback() to avoid OOM;
				 * and it's also appropriate in global reclaim.
				 */
				SetPageReclaim(page);
				nr_writeback++;
				goto activate_locked;

			/* Case 3 above */
			} else {
				unlock_page(page);
				wait_on_page_writeback(page);
				/* then go back and try same page again */
				list_add_tail(&page->lru, page_list);
				continue;
			}
		}

		if (!force_reclaim)
			references = page_check_references(page, sc);

		/* (4.1.2.2.3) Read the Accessed bit and the PG_referenced flag to decide whether the page is reclaimed or put back on the inactive/active list */
		switch (references) {
		case PAGEREF_ACTIVATE:
			goto activate_locked;
		case PAGEREF_KEEP:
			nr_ref_keep++;
			goto keep_locked;
		case PAGEREF_RECLAIM:
		case PAGEREF_RECLAIM_CLEAN:
			; /* try to reclaim the page below */
		}

		/*
		 * Anonymous process memory has backing store?
		 * Try to allocate it some swap space here.
		 * Lazyfree page could be freed directly
		 */
		/* (4.1.2.2.4) Swap-backed anonymous pages: add the page to swap and update its mapping accordingly */
		if (PageAnon(page) && PageSwapBacked(page)) {
			if (!PageSwapCache(page)) {
				if (!(sc->gfp_mask & __GFP_IO))
					goto keep_locked;
				if (PageTransHuge(page)) {
					/* cannot split THP, skip it */
					if (!can_split_huge_page(page, NULL))
						goto activate_locked;
					/*
					 * Split pages without a PMD map right
					 * away. Chances are some or all of the
					 * tail pages can be freed without IO.
					 */
					if (!compound_mapcount(page) &&
					    split_huge_page_to_list(page,
								    page_list))
						goto activate_locked;
				}
				if (!add_to_swap(page)) {
					if (!PageTransHuge(page))
						goto activate_locked;
					/* Fallback to swap normal pages */
					if (split_huge_page_to_list(page,
								    page_list))
						goto activate_locked;
#ifdef CONFIG_TRANSPARENT_HUGEPAGE
					count_vm_event(THP_SWPOUT_FALLBACK);
#endif
					if (!add_to_swap(page))
						goto activate_locked;
				}

				may_enter_fs = 1;

				/* Adding to swap updated mapping */
				mapping = page_mapping(page);
			}
		} else if (unlikely(PageTransHuge(page))) {
			/* Split file THP */
			if (split_huge_page_to_list(page, page_list))
				goto keep_locked;
		}

		/*
		 * The page is mapped into the page tables of one or more
		 * processes. Try to unmap it here.
		 */
		/* (4.1.2.2.5) Mapped anonymous/file pages: walk the VMAs found through the reverse mapping and remove the page from every page table */
		if (page_mapped(page)) {
			enum ttu_flags flags = ttu_flags | TTU_BATCH_FLUSH;

			if (unlikely(PageTransHuge(page)))
				flags |= TTU_SPLIT_HUGE_PMD;
			if (!try_to_unmap(page, flags)) {
				nr_unmap_fail++;
				goto activate_locked;
			}
		}

		/* (4.1.2.2.6) Dirty pages: dirty file pages are normally marked PG_reclaim and put back on the active list; otherwise the page is written out with pageout() */
		if (PageDirty(page)) {
			/*
			 * Only kswapd can writeback filesystem pages
			 * to avoid risk of stack overflow. But avoid
			 * injecting inefficient single-page IO into
			 * flusher writeback as much as possible: only
			 * write pages when we've encountered many
			 * dirty pages, and when we've already scanned
			 * the rest of the LRU for clean pages and see
			 * the same dirty pages again (PageReclaim).
			 */
			if (page_is_file_cache(page) &&
			    (!current_is_kswapd() || !PageReclaim(page) ||
			     !test_bit(PGDAT_DIRTY, &pgdat->flags))) {
				/*
				 * Immediately reclaim when written back.
				 * Similar in principal to deactivate_page()
				 * except we already have the page isolated
				 * and know it's dirty
				 */
				inc_node_page_state(page, NR_VMSCAN_IMMEDIATE);
				SetPageReclaim(page);

				goto activate_locked;
			}

			if (references == PAGEREF_RECLAIM_CLEAN)
				goto keep_locked;
			if (!may_enter_fs)
				goto keep_locked;
			if (!sc->may_writepage)
				goto keep_locked;

			/*
			 * Page is dirty. Flush the TLB if a writable entry
			 * potentially exists to avoid CPU writes after IO
			 * starts and then write it out here.
			 */
			try_to_unmap_flush_dirty();
			switch (pageout(page, mapping, sc)) {
			case PAGE_KEEP:
				goto keep_locked;
			case PAGE_ACTIVATE:
				goto activate_locked;
			case PAGE_SUCCESS:
				if (PageWriteback(page))
					goto keep;
				if (PageDirty(page))
					goto keep;

				/*
				 * A synchronous write - probably a ramdisk. Go
				 * ahead and try to reclaim the page.
				 */
				if (!trylock_page(page))
					goto keep;
				if (PageDirty(page) || PageWriteback(page))
					goto keep_locked;
				mapping = page_mapping(page);
				/* fall through */
			case PAGE_CLEAN:
				; /* try to free the page below */
			}
		}

		/*
		 * If the page has buffers, try to free the buffer mappings
		 * associated with this page. If we succeed we try to free
		 * the page as well.
		 *
		 * We do this even if the page is PageDirty().
		 * try_to_release_page() does not perform I/O, but it is
		 * possible for a page to have PageDirty set, but it is actually
		 * clean (all its buffers are clean). This happens if the
		 * buffers were written out directly, with submit_bh(). ext3
		 * will do this, as well as the blockdev mapping.
		 * try_to_release_page() will discover that cleanness and will
		 * drop the buffers and mark the page clean - it can be freed.
		 *
		 * Rarely, pages can have buffers and no ->mapping. These are
		 * the pages which were not successfully invalidated in
		 * truncate_complete_page(). We try to drop those buffers here
		 * and if that worked, and the page is no longer mapped into
		 * process address space (page_count == 1) it can be freed.
		 * Otherwise, leave the page on the LRU so it is swappable.
		 */
		/* (4.1.2.2.7) Pages with buffer heads: release the buffers first; a page left without a mapping is freed directly */
		if (page_has_private(page)) {
			if (!try_to_release_page(page, sc->gfp_mask))
				goto activate_locked;
			if (!mapping && page_count(page) == 1) {
				unlock_page(page);
				if (put_page_testzero(page))
					goto free_it;
				else {
					/*
					 * rare race with speculative reference.
					 * the speculative reference will free
					 * this page shortly, so we may
					 * increment nr_reclaimed here (and
					 * leave it off the LRU).
					 */
					nr_reclaimed++;
					continue;
				}
			}
		}

		/* (4.1.2.2.8) Anonymous pages that are not swap-backed (lazyfree): free them directly if they are clean and unreferenced, otherwise keep them on the inactive list; all other pages are removed from their mapping */
		if (PageAnon(page) && !PageSwapBacked(page)) {
			/* follow __remove_mapping for reference */
			if (!page_ref_freeze(page, 1))
				goto keep_locked;
			if (PageDirty(page)) {
				page_ref_unfreeze(page, 1);
				goto keep_locked;
			}

			count_vm_event(PGLAZYFREED);
			count_memcg_page_event(page, PGLAZYFREED);
		} else if (!mapping || !__remove_mapping(mapping, page, true))
			goto keep_locked;
		/*
		 * At this point, we have no other references and there is
		 * no way to pick any more up (removed from LRU, removed
		 * from pagecache). Can use non-atomic bitops now (and
		 * we obviously don't have to worry about waking up a process
		 * waiting on the page lock, because there are no references.
		 */
		__ClearPageLocked(page);

free_it:
		nr_reclaimed++;

		/*
		 * Is there need to periodically free_page_list? It would
		 * appear not as the counts should be low
		 */
		if (unlikely(PageTransHuge(page))) {
			mem_cgroup_uncharge(page);
			(*get_compound_page_dtor(page))(page);
		} else
			list_add(&page->lru, &free_pages);
		continue;

		/* (4.1.2.2.9) Move the page from the inactive list to the active list: set the PG_active flag */
activate_locked:
		/* Not a candidate for swapping, so reclaim swap space. */
		if (PageSwapCache(page) && (mem_cgroup_swap_full(page) ||
						PageMlocked(page)))
			try_to_free_swap(page);
		VM_BUG_ON_PAGE(PageActive(page), page);
		if (!PageMlocked(page)) {
			SetPageActive(page);
			pgactivate++;
			count_memcg_page_event(page, PGACTIVATE);
		}
		/* (4.1.2.2.10) Do not reclaim the page; keep it on the inactive list */
keep_locked:
		unlock_page(page);
keep:
		list_add(&page->lru, &ret_pages);
		VM_BUG_ON_PAGE(PageLRU(page) || PageUnevictable(page), page);
	}

	mem_cgroup_uncharge_list(&free_pages);
	try_to_unmap_flush();
	free_unref_page_list(&free_pages);

	list_splice(&ret_pages, page_list);
	count_vm_events(PGACTIVATE, pgactivate);

	if (stat) {
		stat->nr_dirty = nr_dirty;
		stat->nr_congested = nr_congested;
		stat->nr_unqueued_dirty = nr_unqueued_dirty;
		stat->nr_writeback = nr_writeback;
		stat->nr_immediate = nr_immediate;
		stat->nr_activate = pgactivate;
		stat->nr_ref_keep = nr_ref_keep;
		stat->nr_unmap_fail = nr_unmap_fail;
	}
	return nr_reclaimed;
}
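The three writeback cases handled in shrink_page_list() can be summarized as a small decision function. The following is only a user-space sketch: struct wb_state, enum wb_action and classify_writeback_page() are hypothetical names invented for illustration, and each boolean stands in for the kernel predicate named in its comment.

```c
#include <stdbool.h>

/* Hypothetical snapshot of the state that the PageWriteback() branch consults. */
struct wb_state {
    bool is_kswapd;       /* stands in for current_is_kswapd() */
    bool page_reclaim;    /* stands in for PageReclaim(page) */
    bool node_writeback;  /* stands in for PGDAT_WRITEBACK set in pgdat->flags */
    bool sane_reclaim;    /* stands in for sane_reclaim(sc) */
    bool may_enter_fs;    /* same meaning as the local variable in the kernel */
};

enum wb_action {
    WB_ACTIVATE_IMMEDIATE,  /* case 1: nr_immediate++, goto activate_locked */
    WB_MARK_AND_ACTIVATE,   /* case 2: SetPageReclaim(), goto activate_locked */
    WB_WAIT_AND_RETRY       /* case 3: wait_on_page_writeback(), retry the page */
};

/* Mirrors the order of the three tests in the kernel code. */
static enum wb_action classify_writeback_page(const struct wb_state *s)
{
    if (s->is_kswapd && s->page_reclaim && s->node_writeback)
        return WB_ACTIVATE_IMMEDIATE;
    if (s->sane_reclaim || !s->page_reclaim || !s->may_enter_fs)
        return WB_MARK_AND_ACTIVATE;
    return WB_WAIT_AND_RETRY;
}
```

Only the last case blocks the reclaimer. It is reached when none of the conditions of cases 1 and 2 hold, that is, legacy memcg reclaim meeting a page that is already marked PageReclaim while may_enter_fs is set.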

3.4.6 try_to_unmap()

Walk the VMAs found through the reverse mapping and remove every page-table (MMU) mapping of the page, so that the page can then be freed:

bool try_to_unmap(struct page *page, enum ttu_flags flags)
{
	struct rmap_walk_control rwc = {
		.rmap_one = try_to_unmap_one,
		.arg = (void *)flags,
		.done = page_mapcount_is_zero,
		.anon_lock = page_lock_anon_vma_read,
	};

	/*
	 * During exec, a temporary VMA is setup and later moved.
	 * The VMA is moved under the anon_vma lock but not the
	 * page tables leading to a race where migration cannot
	 * find the migration ptes. Rather than increasing the
	 * locking requirements of exec(), migration skips
	 * temporary VMAs until after exec() completes.
	 */
	if ((flags & (TTU_MIGRATION|TTU_SPLIT_FREEZE))
	    && !PageKsm(page) && PageAnon(page))
		rwc.invalid_vma = invalid_migration_vma;

	/* (1) Walk every VMA associated with the page through its reverse mapping */
	if (flags & TTU_RMAP_LOCKED)
		rmap_walk_locked(page, &rwc);
	else
		rmap_walk(page, &rwc);

	return !page_mapcount(page) ? true : false;
}

↓

try_to_unmap_one()
{
	/* Remove the MMU mapping of the page in one VMA and drop that mapping's reference to the page */
}
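The callback structure used by try_to_unmap() can be illustrated with a toy user-space model. This is a sketch under simplifying assumptions, not the kernel implementation: all toy_* names are hypothetical, the reverse mapping is reduced to a plain array of VMAs, and "unmapping" only clears a flag and decrements a counter.

```c
#include <stdbool.h>
#include <stddef.h>

struct toy_page { int mapcount; };   /* number of VMAs still mapping the page */
struct toy_vma  { bool mapped; };    /* does this VMA map the page? */

/* Reduced counterpart of struct rmap_walk_control. */
struct toy_walk_control {
    bool (*rmap_one)(struct toy_page *page, struct toy_vma *vma, void *arg);
    bool (*done)(struct toy_page *page);
    void *arg;
};

/* Plays the role of try_to_unmap_one(): unmap the page from one VMA. */
static bool toy_unmap_one(struct toy_page *page, struct toy_vma *vma, void *arg)
{
    (void)arg;
    if (vma->mapped) {
        vma->mapped = false;
        page->mapcount--;
    }
    return true;  /* true means: keep walking */
}

/* Plays the role of page_mapcount_is_zero(). */
static bool toy_mapcount_is_zero(struct toy_page *page)
{
    return page->mapcount == 0;
}

/* Visit the VMAs one by one; stop on rmap_one failure or when done() is true. */
static size_t toy_rmap_walk(struct toy_page *page, struct toy_vma *vmas,
                            size_t n, const struct toy_walk_control *rwc)
{
    size_t visited = 0;

    for (size_t i = 0; i < n; i++) {
        visited++;
        if (!rwc->rmap_one(page, &vmas[i], rwc->arg))
            break;
        if (rwc->done && rwc->done(page))
            break;
    }
    return visited;
}

/* Same shape as try_to_unmap(): success means no mapping is left. */
static bool toy_try_to_unmap(struct toy_page *page, struct toy_vma *vmas, size_t n)
{
    struct toy_walk_control rwc = {
        .rmap_one = toy_unmap_one,
        .done = toy_mapcount_is_zero,
        .arg = NULL,
    };

    toy_rmap_walk(page, vmas, n, &rwc);
    return page->mapcount == 0;
}
```

The done callback lets the walk stop as soon as the last mapping is gone, so VMAs later in the list are not visited unnecessarily.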

3.4.7 rmap_walk()

How the reverse mapping is walked:

void rmap_walk(struct page *page, struct rmap_walk_control *rwc)
{
	if (unlikely(PageKsm(page)))
		/* (1) Reverse-mapping walk for KSM pages */
		rmap_walk_ksm(page, rwc);
	else if (PageAnon(page))
		/* (2) Reverse-mapping walk for anonymous pages */
		rmap_walk_anon(page, rwc, false);
	else
		/* (3) Reverse-mapping walk for file pages */
		rmap_walk_file(page, rwc, false);
}

|→

static void rmap_walk_anon(struct page *page, struct rmap_walk_control *rwc,
		bool locked)
{
	struct anon_vma *anon_vma;
	pgoff_t pgoff_start, pgoff_end;
	struct anon_vma_chain *avc;

	/* (2.1) Find the anon_vma structure that belongs to the page */
	if (locked) {
		anon_vma = page_anon_vma(page);
		/* anon_vma disappear under us? */
		VM_BUG_ON_PAGE(!anon_vma, page);
	} else {
		anon_vma = rmap_walk_anon_lock(page, rwc);
	}
	if (!anon_vma)
		return;

	/* (2.2) Compute the page offset (pgoff) of the page within the vma */
	pgoff_start = page_to_pgoff(page);
	pgoff_end = pgoff_start + hpage_nr_pages(page) - 1;
	/* (2.3) Visit every vma in the anon_vma tree that covers this range */
	anon_vma_interval_tree_foreach(avc, &anon_vma->rb_root,
			pgoff_start, pgoff_end) {
		struct vm_area_struct *vma = avc->vma;
		unsigned long address = vma_address(page, vma);

		cond_resched();

		if (rwc->invalid_vma && rwc->invalid_vma(vma, rwc->arg))
			continue;

		if (!rwc->rmap_one(page, vma, address, rwc->arg))
			break;
		if (rwc->done && rwc->done(page))
			break;
	}

	if (!locked)
		anon_vma_unlock_read(anon_vma);
}

|→

static void rmap_walk_file(struct page *page, struct rmap_walk_control *rwc,
		bool locked)
{
	/* (3.1) Find the address_space mapping structure that belongs to the page */
	struct address_space *mapping = page_mapping(page);
	pgoff_t pgoff_start, pgoff_end;
	struct vm_area_struct *vma;

	/*
	 * The page lock not only makes sure that page->mapping cannot
	 * suddenly be NULLified by truncation, it makes sure that the
	 * structure at mapping cannot be freed and reused yet,
	 * so we can safely take mapping->i_mmap_rwsem.
	 */
	VM_BUG_ON_PAGE(!PageLocked(page), page);

	if (!mapping)
		return;

	/* (3.2) Compute the page offset (pgoff) of the page within the file */
	pgoff_start = page_to_pgoff(page);
	pgoff_end = pgoff_start + hpage_nr_pages(page) - 1;
	if (!locked)
		i_mmap_lock_read(mapping);
	/* (3.3) Visit every vma in the mapping tree that covers this range */
	vma_interval_tree_foreach(vma, &mapping->i_mmap,
			pgoff_start, pgoff_end) {
		unsigned long address = vma_address(page, vma);

		cond_resched();

		if (rwc->invalid_vma && rwc->invalid_vma(vma, rwc->arg))
			continue;

		if (!rwc->rmap_one(page, vma, address, rwc->arg))
			goto done;
		if (rwc->done && rwc->done(page))
			goto done;
	}

done:
	if (!locked)
		i_mmap_unlock_read(mapping);
}
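Both walks rely on the same arithmetic: a VMA is relevant when its page-offset range covers the page's pgoff, and the virtual address of the page inside that VMA follows from the offset difference. A minimal sketch of that calculation, assuming 4 KiB pages; struct toy_area, toy_area_covers() and toy_area_address() are hypothetical stand-ins for vm_area_struct, the interval-tree lookup and vma_address().

```c
#include <stdbool.h>

#define TOY_PAGE_SHIFT 12  /* assumption: 4 KiB pages */

/* Hypothetical reduced VMA: only the fields the calculation needs. */
struct toy_area {
    unsigned long vm_start;  /* first virtual address of the area */
    unsigned long vm_end;    /* first virtual address after the area */
    unsigned long vm_pgoff;  /* offset of vm_start in the backing object, in pages */
};

/* Does the area map the page at offset pgoff of the backing object? */
static bool toy_area_covers(const struct toy_area *vma, unsigned long pgoff)
{
    unsigned long npages = (vma->vm_end - vma->vm_start) >> TOY_PAGE_SHIFT;

    return pgoff >= vma->vm_pgoff && pgoff < vma->vm_pgoff + npages;
}

/* Virtual address at which the page at offset pgoff appears in the area. */
static unsigned long toy_area_address(const struct toy_area *vma, unsigned long pgoff)
{
    return vma->vm_start + ((pgoff - vma->vm_pgoff) << TOY_PAGE_SHIFT);
}
```

For an area spanning 0x400000 to 0x404000 with vm_pgoff 10, the page at pgoff 12 is covered and appears at 0x402000. The interval tree exists so that the kernel does not have to test every VMA of the mapping one by one as this sketch would.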

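4. Other Reclaim Methods

Besides reclaiming memory from the LRU lists, the system has several other ways to reclaim memory.

4.1 Shrinker

Reclaim is not limited to user-space anonymous and file memory. Kernel modules can register their own reclaimable resources as a shrinker, and the kernel asks these shrinkers to release memory when memory is tight. A well-known example is the lmk driver on Android.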

4.1.1 register_shrinker()

The shrinker registration function:

int register_shrinker(struct shrinker *shrinker)
{
	int err = prealloc_shrinker(shrinker);

	if (err)
		return err;
	register_shrinker_prepared(shrinker);
	return 0;
}

↓

void register_shrinker_prepared(struct shrinker *shrinker)
{
	down_write(&shrinker_rwsem);
	/* (1) Add the new shrinker to the global list shrinker_list */
	list_add_tail(&shrinker->list, &shrinker_list);
	up_write(&shrinker_rwsem);
}
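The registration pattern can be modelled in user space as follows. This is a simplified sketch, not kernel code: the toy_* names are hypothetical, the global list is a singly linked list without locking, and the two callbacks only loosely correspond to the count_objects and scan_objects callbacks of struct shrinker.

```c
#include <stddef.h>

/* Hypothetical reduced shrinker: a count callback and a scan callback. */
struct toy_shrinker {
    unsigned long (*count_objects)(struct toy_shrinker *s);
    unsigned long (*scan_objects)(struct toy_shrinker *s, unsigned long nr_to_scan);
    struct toy_shrinker *next;
    void *private_data;
};

static struct toy_shrinker *toy_shrinker_list;  /* head of the global list */

/* Append to the tail of the global list, like list_add_tail(). */
static void toy_register_shrinker(struct toy_shrinker *s)
{
    struct toy_shrinker **pp = &toy_shrinker_list;

    while (*pp)
        pp = &(*pp)->next;
    s->next = NULL;
    *pp = s;
}

/* Ask every registered shrinker to free up to nr_to_scan objects. */
static unsigned long toy_shrink_all(unsigned long nr_to_scan)
{
    unsigned long freed = 0;

    for (struct toy_shrinker *s = toy_shrinker_list; s; s = s->next) {
        if (s->count_objects(s) == 0)
            continue;  /* nothing freeable in this cache */
        freed += s->scan_objects(s, nr_to_scan);
    }
    return freed;
}

/* Example cache whose objects can all be dropped on request. */
struct toy_cache { unsigned long nr_cached; };

static unsigned long toy_cache_count(struct toy_shrinker *s)
{
    return ((struct toy_cache *)s->private_data)->nr_cached;
}

static unsigned long toy_cache_scan(struct toy_shrinker *s, unsigned long nr_to_scan)
{
    struct toy_cache *c = s->private_data;
    unsigned long n = nr_to_scan < c->nr_cached ? nr_to_scan : c->nr_cached;

    c->nr_cached -= n;
    return n;  /* number of objects actually freed */
}
```

A subsystem fills in the two callbacks for its own cache and registers once; from then on the reclaim path decides when and how hard to call them.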

4.1.2 do_shrink_slab()

During memory reclaim, the reclaim callbacks of the registered shrinkers are invoked:

shrink_node() → shrink_slab():

static unsigned long shrink_slab(gfp_t gfp_mask, int nid,
				 struct mem_cgroup *memcg,
				 unsigned long nr_scanned,
				 unsigned long nr_eligible)
{
	struct shrinker *shrinker;
	unsigned long freed = 0;

	if (memcg && (!memcg_kmem_enabled() || !mem_cgroup_online(memcg)))
		return 0;

	if (nr_scanned == 0)
		nr_scanned = SWAP_CLUSTER_MAX;

	if (!down_read_trylock(&shrinker_rwsem)) {
		/*
		 * If we would return 0, our callers would understand that we
		 * have nothing else to shrink and give up trying. By returning
		 * 1 we keep it going and assume we'll be able to shrink next
		 * time.
		 */
		freed = 1;
		goto out;
	}

	/* (1) Walk the shrinkers on the global list shrinker_list and try each one in turn */
	list_for_each_entry(shrinker, &shrinker_list, list) {
		struct shrink_control sc = {
			.gfp_mask = gfp_mask,
			.nid = nid,
			.memcg = memcg,
		};

		/*
		 * If kernel memory accounting is disabled, we ignore
		 * SHRINKER_MEMCG_AWARE flag and call all shrinkers
		 * passing NULL for memcg.
		 */
		if (memcg_kmem_enabled() &&
		    !!memcg != !!(shrinker->flags & SHRINKER_MEMCG_AWARE))
			continue;

		if (!(shrinker->flags & SHRINKER_NUMA_AWARE))
			sc.nid = 0;

		/* (2) Invoke the shrinker's reclaim */
		freed += do_shrink_slab(&sc, shrinker, nr_scanned, nr_eligible);
		/*
		 * Bail out if someone want to register a new shrinker to
		 * prevent the registration from being stalled for long periods
		 * by parallel ongoing shrinking.
		 */
		if (rwsem_is_contended(&shrinker_rwsem)) {
			freed = freed ? : 1;
			break;
		}
	}

	up_read(&shrinker_rwsem);
out:
	cond_resched();
	return freed;
}

↓

static unsigned long do_shrink_slab(struct shrink_control *shrinkctl,
				    struct shrinker *shrinker,
				    unsigned long nr_scanned,
				    unsigned long nr_eligible)
{
	unsigned long freed = 0;
	unsigned long long delta;
	long total_scan;
	long freeable;
	long nr;
	long new_nr;
	int nid = shrinkctl->nid;
	long batch_size = shrinker->batch ? shrinker->batch
					  : SHRINK_BATCH;
	long scanned = 0, next_deferred;

	freeable = shrinker->count_objects(shrinker, shrinkctl);
	if (freeable == 0)
		return 0;

	/*
	 * copy the current shrinker scan count into a local variable
	 * and zero it so that other concurrent shrinker invocations
	 * don't also do this scanning work.
	 */
	nr = atomic_long_xchg(&shrinker->nr_deferred[nid], 0);

	total_scan = nr;
	delta = (4 * nr_scanned) / shrinker->seeks;
	delta *= freeable;
	do_div(delta, nr_eligible + 1);
	total_scan += delta;
	if (total_scan < 0) {
		pr_err("shrink_slab: %pF negative objects to delete nr=%ld\n",
		       shrinker->scan_objects, total_scan);
		total_scan = freeable;
		next_deferred = nr;
	} else
		next_deferred = total_scan;

	/*
	 * We need to avoid excessive windup on filesystem shrinkers
	 * due to large numbers of GFP_NOFS allocations causing the
	 * shrinkers to return -1 all the time. This results in a large
	 * nr being built up so when a shrink that can do some work
	 * comes along it empties the entire cache due to nr >>>
	 * freeable. This is bad for sustaining a working set in
	 * memory.
	 *
	 * Hence only allow the shrinker to scan the entire cache when
	 * a large delta change is calculated directly.
	 */
	if (delta < freeable / 4)
		total_scan = min(total_scan, freeable / 2);

	/*
	 * Avoid risking looping forever due to too large nr value:
	 * never try to free more than twice the estimate number of
	 * freeable entries.
	 */
	if (total_scan > freeable * 2)
		total_scan = freeable * 2;

	trace_mm_shrink_slab_start(shrinker, shrinkctl, nr,
				   nr_scanned, nr_eligible,
				   freeable, delta, total_scan);

	/*
	 * Normally, we should not scan less than batch_size objects in one
	 * pass to avoid too frequent shrinker calls, but if the slab has less
	 * than batch_size objects in total and we are really tight on memory,
	 * we will try to reclaim all available objects, otherwise we can end
	 * up failing allocations although there are plenty of reclaimable
	 * objects spread over several slabs with usage less than the
	 * batch_size.
	 *
	 * We detect the "tight on memory" situations by looking at the total
	 * number of objects we want to scan (total_scan). If it is greater
	 * than the total number of objects on slab (freeable), we must be
	 * scanning at high prio and therefore should try to reclaim as much as
	 * possible.
	 */
	while (total_scan >= batch_size ||
	       total_scan >= freeable) {
		unsigned long ret;
		unsigned long nr_to_scan = min(batch_size, total_scan);

		shrinkctl->nr_to_scan = nr_to_scan;
		shrinkctl->nr_scanned = nr_to_scan;
		ret = shrinker->scan_objects(shrinker, shrinkctl);
		if (ret == SHRINK_STOP)
			break;
		freed += ret;

		count_vm_events(SLABS_SCANNED, shrinkctl->nr_scanned);
		total_scan -= shrinkctl->nr_scanned;
		scanned += shrinkctl->nr_scanned;

		cond_resched();
	}

	if (next_deferred >= scanned)
		next_deferred -= scanned;
	else
		next_deferred = 0;
	/*
	 * move the unused scan count back into the shrinker in a
	 * manner that handles concurrent updates. If we exhausted the
	 * scan, there is no need to do an update.
	 */
	if (next_deferred > 0)
		new_nr = atomic_long_add_return(next_deferred,
						&shrinker->nr_deferred[nid]);
	else
		new_nr = atomic_long_read(&shrinker->nr_deferred[nid]);

	trace_mm_shrink_slab_end(shrinker, nid, freed, nr, new_nr, total_scan);
	return freed;
}
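The amount of work that do_shrink_slab() asks of a shrinker comes from a short piece of arithmetic plus two clamps. The function below replays that calculation in user space so it can be tried with concrete numbers. toy_total_scan() is a hypothetical helper written for this article: it omits the error message, the tracing and the batching loop, and it assumes freeable > 0 and seeks > 0.

```c
/*
 * Replays the total_scan calculation of do_shrink_slab().
 *   deferred    : work carried over from earlier calls (nr_deferred)
 *   nr_scanned  : LRU pages scanned in this reclaim pass
 *   nr_eligible : LRU pages that were eligible for scanning
 *   freeable    : result of the shrinker's count_objects()
 *   seeks       : the shrinker's seeks value
 */
static long toy_total_scan(long deferred, unsigned long nr_scanned,
                           unsigned long nr_eligible, long freeable, int seeks)
{
    unsigned long long delta = (4ULL * nr_scanned) / (unsigned long long)seeks;
    long total_scan;

    /* Scale the slab scan by the fraction of the LRU that was scanned. */
    delta *= (unsigned long long)freeable;
    delta /= nr_eligible + 1;
    total_scan = deferred + (long)delta;

    if (total_scan < 0)
        total_scan = freeable;

    /* A small delta must not let deferred work empty the whole cache. */
    if (delta < (unsigned long long)(freeable / 4) && total_scan > freeable / 2)
        total_scan = freeable / 2;

    /* Never try to scan more than twice the number of freeable objects. */
    if (total_scan > freeable * 2)
        total_scan = freeable * 2;

    return total_scan;
}
```

With nr_scanned = 32, nr_eligible = 1023, freeable = 1000 and seeks = 2, delta is 64 * 1000 / 1024 = 62, so an idle shrinker is asked to scan 62 objects. With 5000 deferred objects and the same inputs the first clamp limits the result to 500, which is the windup protection described in the kernel comment.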

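4.2 Memory Compaction (Compact)

Compaction tries to consolidate scattered memory fragments into one large block of contiguous memory. The details are not covered here.

See: Linux中的Memory Compaction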

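4.3 Memory Merging (KSM)

Shared memory is a common concept in modern operating systems. For example, when a process starts it initially shares all of its memory with its parent. When either the child or the parent needs to modify the shared memory, Linux allocates new memory and copies the content of the original region into it. This process is called copy on write.

KSM (Kernel Samepage Merging) is a Linux feature that works in the opposite direction: instead of splitting shared pages, it merges pages that have identical content. Once KSM is enabled, it scans the memory of multiple running processes and compares it. If any regions or pages are identical, KSM merges them into a single page, which is then marked copy on write. If a VM later modifies that memory, Linux allocates new memory for that VM. KSM is particularly useful with KVM.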
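From user space, a process offers a region to KSM with madvise() and the MADV_MERGEABLE advice. The sketch below is a minimal illustration under stated assumptions: alloc_mergeable() is a hypothetical helper name, the call only succeeds on kernels built with KSM support, and actual merging additionally requires the KSM daemon to be switched on through /sys/kernel/mm/ksm/run.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE  /* for MAP_ANONYMOUS and madvise() on glibc */
#endif
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>

/*
 * Map an anonymous region, fill it with identical bytes and offer it to KSM.
 * Returns the mapping, or NULL if mmap() failed.
 * *advised is set to 1 if the kernel accepted MADV_MERGEABLE, 0 otherwise.
 */
static void *alloc_mergeable(size_t len, int *advised)
{
    void *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);

    *advised = 0;
    if (p == MAP_FAILED)
        return NULL;

    /* Pages with identical content are the candidates for merging. */
    memset(p, 0x5a, len);

#ifdef MADV_MERGEABLE
    if (madvise(p, len, MADV_MERGEABLE) == 0)
        *advised = 1;
#endif
    return p;
}
```

Writing to a merged page afterwards triggers the copy-on-write path described above, so the caller needs no special handling.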

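See: 玩转KVM: 聊聊KSM内存合并

4.4 OOM Killer

The Linux OOM (Out Of Memory) killer frees memory by killing processes once the system is extremely short of memory.

Stock Linux starts killing processes very late, because the system can hardly tell which processes may be killed and which may not. Android, by contrast, starts LMK very early to kill processes and free memory, because Android can tell foreground processes from background ones, and most background processes can be killed.

See: Linux内核OOM killer机制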

References:

1. linux内存源码分析 - 内存回收(lru链表)

2. linux内存源码分析 - 内存回收(匿名页反向映射)

3. linux内存源码分析 - 内存碎片整理(实现流程)

4. linux内存源码分析 - 内存碎片整理(同步关系)

5. page reclaim 参数

6. kernel-4.9内存回收核心流程

7. Linux内存管理 (21)OOM

8. linux内核page结构体的PG_referenced和PG_active标志

9. Linux中的内存回收[一]

10. Page 页帧管理详解

11. Linux中的Memory Compaction

12. 玩转KVM: 聊聊KSM内存合并

13. Linux内核OOM killer机制

This article comes from 博客园 (cnblogs), author: pwl999. When reposting, please credit the original link: Linux mem 2.5 Buddy 内存回收机制 - pwl999 - 博客园

posted @ 2021-04-22 19:45 pwl999


Copyright © 2022 pwl999
