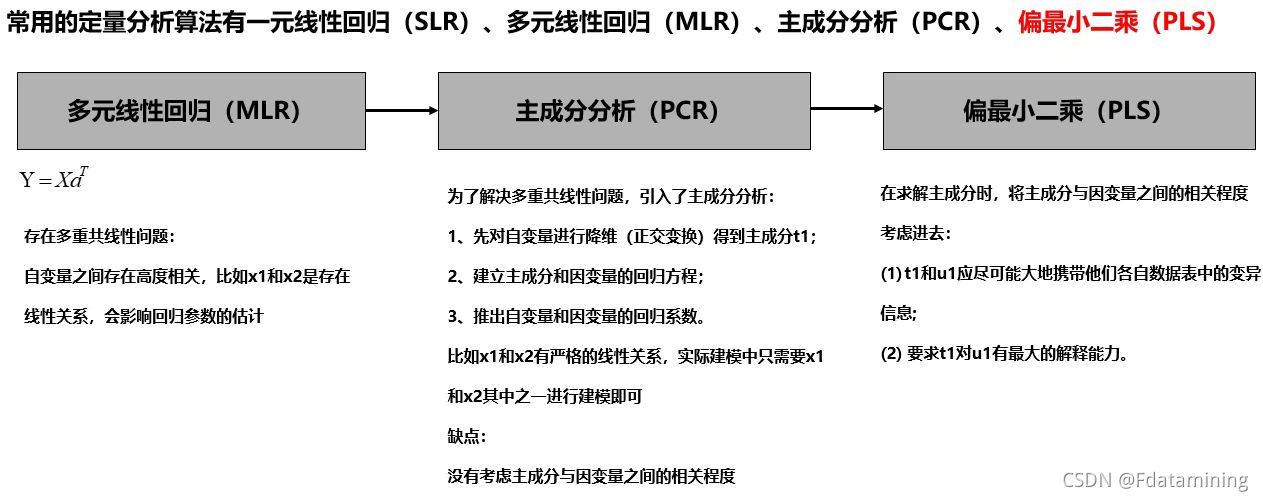

算法简介:

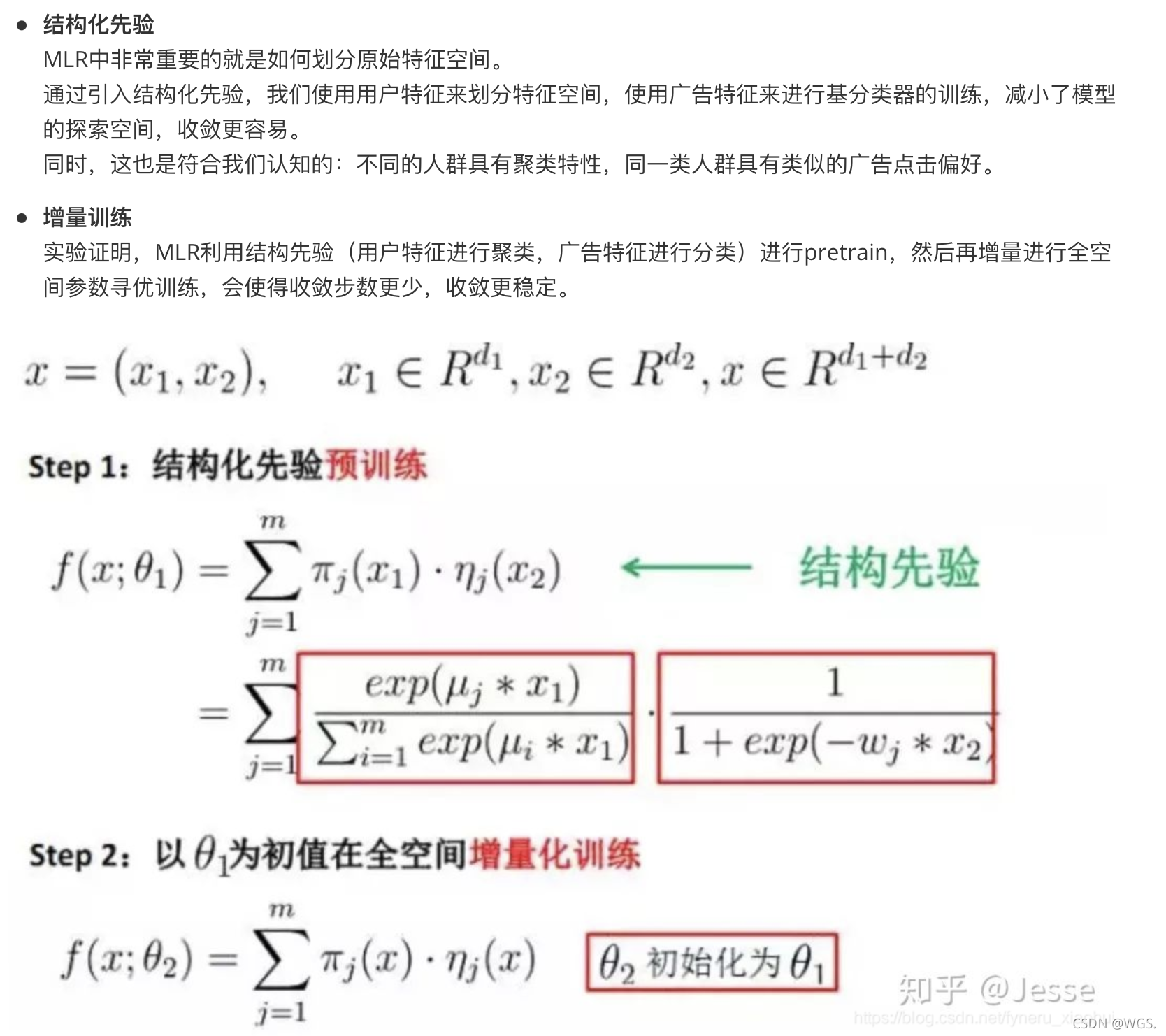

该算法是阿里的盖坤大神力作:Learning Piece-wise Linear Models from Large Scale Data for Ad Click Prediction,介绍了阿里广告的一个主要ctr预估模型Large Scale Piece-wise Linear Model (LS-PLM),在2012年就开始使用,据说早期叫做Mixture of LR(MLR)。

代码地址在: https://github.com/CastellanZhang/alphaPLM

算法介绍:http://castellanzhang.github.io/2017/06/01/mlr_plm/

以上git和博客对mlr 做出详细的介绍,本文主要介绍在学习和实践中的一些领悟,能力一般,水平有限,望路过大神多多指教.

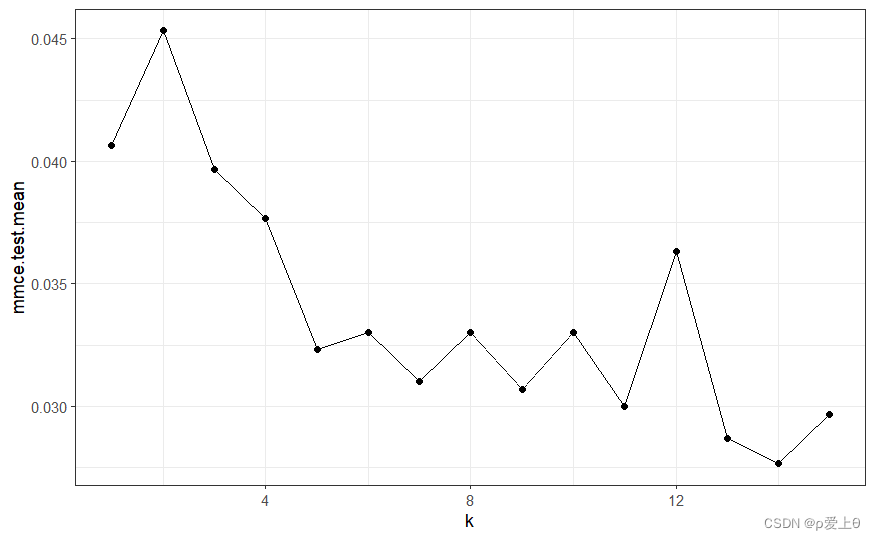

在使用中发现,使用该算法生成模型,会产生大量的训练参数,造成实际生产中资源使用不足的问题,以个人使用的数据训练,每天训练集的特征数据大约8kw(已经经过特征筛选过滤),训练piece_num=12(训练参数,影响参数数量),模型40-50G,(这对于我等小集群,完全扛不住),怎么办呢???

就在此时,一小胖从旁走过,手拿鸡翅,汉堡,如此之人不瘦怎行!!!感叹至此,令我不禁怀想,如此之模型,不瘦身让我如何部署?想到此处,模型瘦身之念顿起。那如何瘦身嗫?

业内大佬有云“模型瘦身,首看普瑞狄特(predict),在看权零”。此话不虚,见mlr 之 predict 如下:

在#ifndef FTRL_MODEL_H_

#define FTRL_MODEL_H_#include <unordered_map>

#include <string>

#include <vector>

#include <mutex>

#include <iostream>

#include <cmath>

#include "../Utils/utils.h"using namespace std;//每一个特征维度的模型单元

class ftrl_model_unit

{

public:vector<double> u;vector<double> u_n;vector<double> u_z;vector<double> w;vector<double> w_n;vector<double> w_z;mutex mtx;

public:ftrl_model_unit(int piece_num, double u_mean, double u_stdev, double w_mean, double w_stdev){u.resize(piece_num);u_n.resize(piece_num);u_z.resize(piece_num);for(int f = 0; f < piece_num; ++f){u[f] = utils::gaussian(u_mean, u_stdev);u_n[f] = 0.0;u_z[f] = 0.0;}w.resize(piece_num);w_n.resize(piece_num);w_z.resize(piece_num);for(int f = 0; f < piece_num; ++f){w[f] = utils::gaussian(w_mean, w_stdev);w_n[f] = 0.0;w_z[f] = 0.0;}}ftrl_model_unit(int piece_num, const vector<string>& modelLineSeg){u.resize(piece_num);u_n.resize(piece_num);u_z.resize(piece_num);w.resize(piece_num);w_n.resize(piece_num);w_z.resize(piece_num);for(int f = 0; f < piece_num; ++f){u[f] = stod(modelLineSeg[1 + f]);w[f] = stod(modelLineSeg[piece_num + 1 + f]);u_n[f] = stod(modelLineSeg[2 * piece_num + 1 + f]);w_n[f] = stod(modelLineSeg[3 * piece_num + 1 + f]);u_z[f] = stod(modelLineSeg[4 * piece_num + 1 + f]);w_z[f] = stod(modelLineSeg[5 * piece_num + 1 + f]);}}void reinit_u(double u_mean, double u_stdev){int size = u.size();for(int f = 0; f < size; ++f){u[f] = utils::gaussian(u_mean, u_stdev);}}void reinit_w(double w_mean, double w_stdev){int size = w.size();for(int f = 0; f < size; ++f){w[f] = utils::gaussian(w_mean, w_stdev);}}friend inline ostream& operator <<(ostream& os, const ftrl_model_unit& mu){if(mu.u.size() > 0){os << mu.u[0];}for(int f = 1; f < mu.u.size(); ++f){os << " " << mu.u[f];}for(int f = 0; f < mu.w.size(); ++f){os << " " << mu.w[f];}for(int f = 0; f < mu.u_n.size(); ++f){os << " " << mu.u_n[f];}for(int f = 0; f < mu.w_n.size(); ++f){os << " " << mu.w_n[f];}for(int f = 0; f < mu.u_z.size(); ++f){os << " " << mu.u_z[f];}for(int f = 0; f < mu.w_z.size(); ++f){os << " " << mu.w_z[f];}return os;}

};class ftrl_model

{

public:ftrl_model_unit* muBias;unordered_map<string, ftrl_model_unit*> muMap;int piece_num;double u_stdev;double u_mean;double w_stdev;double w_mean;public:ftrl_model(double _piece_num);ftrl_model(double _piece_num, double _u_mean, double _u_stdev, double _w_mean, double _w_stdev);ftrl_model_unit* getOrInitModelUnit(string index);ftrl_model_unit* getOrInitModelUnitBias();double get_uTx(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, vector<ftrl_model_unit*>& theta, int f);double get_wTx(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, vector<ftrl_model_unit*>& theta, int f);double get_uTx(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, unordered_map<string, ftrl_model_unit*>& theta, int f);double get_wTx(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, unordered_map<string, ftrl_model_unit*>& theta, int f);double getScore(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, unordered_map<string, ftrl_model_unit*>& theta);void outputModel(ofstream& out);bool loadModel(ifstream& in);void debugPrintModel();private:double get_uif(unordered_map<string, ftrl_model_unit*>& theta, const string& index, int f);double get_wif(unordered_map<string, ftrl_model_unit*>& theta, const string& index, int f);

private:mutex mtx;mutex mtx_bias;

};ftrl_model::ftrl_model(double _piece_num)

{piece_num = _piece_num;u_mean = 0.0;u_stdev = 0.0;w_mean = 0.0;w_stdev = 0.0;muBias = NULL;

}ftrl_model::ftrl_model(double _piece_num, double _u_mean, double _u_stdev, double _w_mean, double _w_stdev)

{piece_num = _piece_num;u_mean = _u_mean;u_stdev = _u_stdev;w_mean = _w_mean;w_stdev = _w_stdev;muBias = NULL;

}ftrl_model_unit* ftrl_model::getOrInitModelUnit(string index)

{unordered_map<string, ftrl_model_unit*>::iterator iter = muMap.find(index);if(iter == muMap.end()){mtx.lock();ftrl_model_unit* pMU = new ftrl_model_unit(piece_num, u_mean, u_stdev, w_mean, w_stdev);muMap.insert(make_pair(index, pMU));mtx.unlock();return pMU;}else{return iter->second;}

}ftrl_model_unit* ftrl_model::getOrInitModelUnitBias()

{if(NULL == muBias){mtx_bias.lock();muBias = new ftrl_model_unit(piece_num, 0, 0, 0, 0);mtx_bias.unlock();}return muBias;

}double ftrl_model::get_uTx(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, vector<ftrl_model_unit*>& theta, int f)

{double result = 0;result += muBias.u[f];for(int i = 0; i < x.size(); ++i){result += theta[i]->u[f] * x[i].second;}return result;

}double ftrl_model::get_wTx(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, vector<ftrl_model_unit*>& theta, int f)

{double result = 0;result += muBias.w[f];for(int i = 0; i < x.size(); ++i){result += theta[i]->w[f] * x[i].second;}return result;

}double ftrl_model::get_uTx(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, unordered_map<string, ftrl_model_unit*>& theta, int f)

{double result = 0;result += muBias.u[f];for(int i = 0; i < x.size(); ++i){result += get_uif(theta, x[i].first, f) * x[i].second;}return result;

}double ftrl_model::get_wTx(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, unordered_map<string, ftrl_model_unit*>& theta, int f)

{double result = 0;result += muBias.w[f];for(int i = 0; i < x.size(); ++i){result += get_wif(theta, x[i].first, f) * x[i].second;}return result;

}**// 计算得分**

double ftrl_model::getScore(const vector<pair<string, double> >& x, ftrl_model_unit& muBias, unordered_map<string, ftrl_model_unit*>& theta)

{double result = 0;vector<double> uTx(piece_num);double max_uTx = numeric_limits<double>::lowest();for(int f = 0; f < piece_num; ++f){uTx[f] = get_uTx(x, muBias, theta, f);if(uTx[f] > max_uTx) max_uTx = uTx[f];}double numerator = 0.0;double denominator = 0.0;for(int f = 0; f < piece_num; ++f){uTx[f] -= max_uTx;uTx[f] = exp(uTx[f]);double wTx = get_wTx(x, muBias, theta, f);double s_wx = utils::sigmoid(wTx);numerator += uTx[f] * s_wx;denominator += uTx[f];}return numerator / denominator;

}double ftrl_model::get_uif(unordered_map<string, ftrl_model_unit*>& theta, const string& index, int f)

{unordered_map<string, ftrl_model_unit*>::iterator iter = theta.find(index);if(iter == theta.end()){return 0.0;}else{return iter->second->u[f];}

}double ftrl_model::get_wif(unordered_map<string, ftrl_model_unit*>& theta, const string& index, int f)

{unordered_map<string, ftrl_model_unit*>::iterator iter = theta.find(index);if(iter == theta.end()){return 0.0;}else{return iter->second->w[f];}

}void ftrl_model::outputModel(ofstream& out)

{out << "bias " << *muBias << endl;for(unordered_map<string, ftrl_model_unit*>::iterator iter = muMap.begin(); iter != muMap.end(); ++iter){out << iter->first << " " << *(iter->second) << endl;}

}void ftrl_model::debugPrintModel()

{cout << "bias " << *muBias << endl;for(unordered_map<string, ftrl_model_unit*>::iterator iter = muMap.begin(); iter != muMap.end(); ++iter){cout << iter->first << " " << *(iter->second) << endl;}

}bool ftrl_model::loadModel(ifstream& in)

{string line;if(!getline(in, line)){return false;}vector<string> strVec;utils::splitString(line, ' ', &strVec);if(strVec.size() != 6 * piece_num + 1){return false;}muBias = new ftrl_model_unit(piece_num, strVec);while(getline(in, line)){strVec.clear();utils::splitString(line, ' ', &strVec);if(strVec.size() != 6 * piece_num + 1){return false;}string& index = strVec[0];ftrl_model_unit* pMU = new ftrl_model_unit(piece_num, strVec);muMap[index] = pMU;}return true;

}

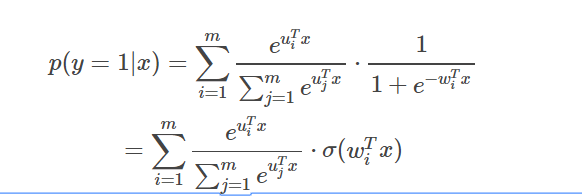

#endif /*FTRL_MODEL_H_*/仔细阅读getScore发现predict 很简单,就是实现了一下mlr 的公式:

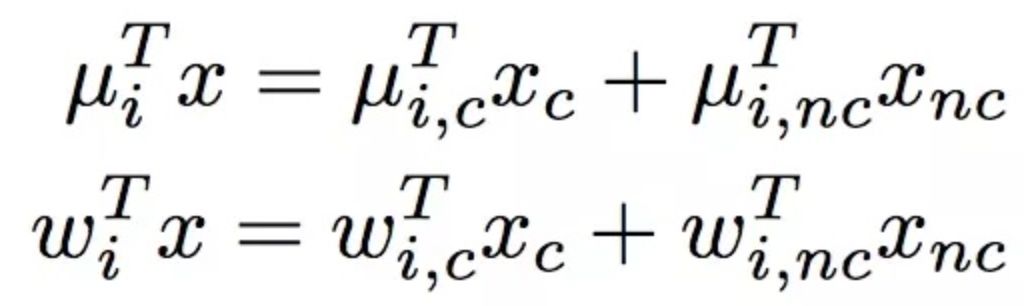

从公式可以看出,数据predict 时仅使用uf, wf 权重,实例截图如下:

根据以上分析,得出过滤条件uf, wf 均不为零是特征为有效特征。因此模型瘦身实现如下:

# -*- coding: utf-8 -*-

# load model and predict mlr

from __future__ import unicode_literals

#!/usr/bin/python

import sys

piece_num = int(sys.argv[3])

lite_model = open(sys.argv[2], 'w')

line_count = 0

for line in open(sys.argv[1], 'r'):if not line:breakelse:if piece_num == 1:if line.split('\t')[2] != '0':lite_model.write(line)else :line_sp = line.split()filter = Truefeature = line_sp[0]for i in xrange(piece_num):if line_sp[1+i] != '0' or line_sp[1+piece_num+i] != '0':lite_model.write(feature+"\t"+str(i)+"\t"+line_sp[1+i]+"\t"+line_sp[1+piece_num+i]+"\n")line_count+=1if line_count % 1000000 == 0:print "finishede line count:%d"%line_count# break

lite_model.close()

测试预测文件(即实现加载lite model,的predict):

# -*- coding: utf-8 -*-

# load model and predict mlr

from __future__ import unicode_literals

#!/usr/bin/python

import sys

reload(sys)

sys.setdefaultencoding('utf-8')

import numpy as np

import math

print len(sys.argv)

if len(sys.argv)< 3:print "please input full model file name and save result file name at least!!!"exit(0)piece_num = 12

if len(sys.argv) == 4:piece_num = int(sys.argv[3])

result = open(sys.argv[2], 'w')

line_count = 0

model = {}

for line in open(sys.argv[1], 'r'):if not line:breakelse:line_sp = line.split()feature = line_sp[0]index = int(line_sp[1])u_v = float(line_sp[2])w_v = float(line_sp[3])if feature in model:model[feature][index] = [u_v, w_v]else:model[feature] = {}model[feature][index] = [u_v, w_v]line_count+=1if line_count % 1000000 == 0:print "finished line count:%d"%line_count# breakdef sigmode(x):return 1 / (1 + np.exp(-x))def get_uw_T_x(features, model):u_T_x = np.zeros(piece_num)w_T_x = np.zeros(piece_num)features.append("bias")for feature in features:u_zeros = np.zeros(piece_num)w_zeros = np.zeros(piece_num)if feature in model:for v in model[feature]:u_zeros[v] = model[feature][v][0]w_zeros[v] = model[feature][v][1]# print u_zerosu_T_x += u_zerosw_T_x += w_zerosprint u_T_xprint w_T_xu_T_x = u_T_x - np.max(u_T_x)return np.exp(u_T_x), sigmode(w_T_x)def predict(features, model):features_cal = [feature.split(":")[0] for feature in features]uTx, wTx = get_uw_T_x(features_cal, model)dim = 0.0re = 0.0for x, y in zip(uTx, wTx):re += x*ydim += xreturn re/dimfor line in sys.stdin:if not line:breakelse:# print modelline_sp = line.split("\t")line_news = "\t".join(line_sp[1:-1])label = line_sp[0]feature_line = line_sp[-1]# print feature_linefeatures = feature_line.split()# print features# breakscore = predict(features, model)print label+"\t"+str(score)+"\t"+line_newsresult.write(label+"\t"+str(score)+"\t"+line_news)break

满篇code实在难受,如若不想关心如何实现,直接使用,请关注git:

https://github.com/liguoyu1/alphaPLM/tree/master/src/scripts