Contents

- ORL Character Recognition

- 0 Abstract

- 1 Introduction

- 2 Related Work

- 2.1 Character recognition

- 2.2 Text detection

- 3 Connectionist Text Proposal Network

- 3.1 Anchor

- 3.2 Bi-Directional LSTM

- 3.3 RPN layer

- 3.4 Text line constructor

- 3.5 Loss function

- 3.6 Total system

- 3.7 Algorithm

- 4 Experiment

- 4.1 Dataset

- 4.2 Training model

- 4.3 Algorithm analysis

- 5 Conclusion and future work

ORL Character Recognition

0 Abstract

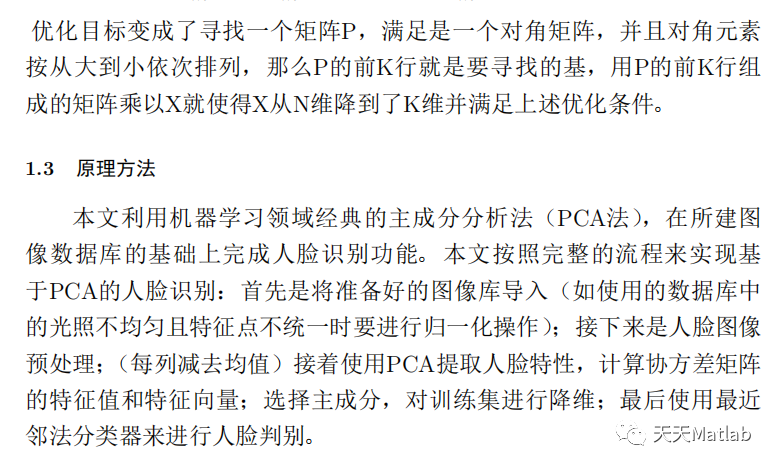

This paper uses the Connectionist Text Proposal Network (CTPN) for character recognition in natural images. CTPN detects a text line as a sequence of fine-scale text proposals in convolutional feature maps. It consists of anchors, a bi-directional LSTM, an RPN layer, and a text line constructor. CTPN jointly predicts the location of each proposal and whether it is text or non-text, which gives accurate localization. It also learns from the rich context information of the image, which lets it detect ambiguous text. In the experiment, we trained the method on a dataset of 2800 images. After 1200 iterations, the model detects text well.

Keywords: CTPN, character recognition, text detection

1 Introduction

Text detection is a research hotspot in character recognition for complex scenes. Its goal is to predict bounding boxes around text. A detection is considered correct when the overlap rate between the predicted bounding box and the ground truth is greater than 0.5. This overlap rate is usually measured as intersection over union (IoU).
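The correctness criterion above can be sketched as follows. This is a minimal illustration that assumes axis-aligned boxes given as hypothetical `(x1, y1, x2, y2)` tuples and assumes the overlap rate is IoU. It is not taken from the authors' code.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_correct_detection(pred, gt, threshold=0.5):
    """A detection counts as correct when its IoU with the ground truth exceeds the threshold."""
    return iou(pred, gt) > threshold


print(is_correct_detection((0, 0, 10, 10), (2, 0, 10, 10)))  # True (IoU = 0.8)
```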

We adopt CTPN to detect text. CTPN uses a vertical anchor regression mechanism to detect small-scale text candidate boxes. It decomposes a text bounding box into small boxes of the same width and regresses each small box in the $y$ direction. It then concatenates the small boxes into one large bounding box. The bi-directional LSTM learns sequence features. It is equivalent to two LSTMs connected in opposite directions and performs better than a single-direction LSTM. The RPN layer has three outputs: 2k vertical coordinates, 2k scores, and k side-refinement values. These give, respectively, the vertical location of each anchor, the text (foreground) and non-text (background) scores, and the side-refinement ratio. The text line constructor links the anchors into one whole, producing a single large, non-overlapping bounding box.

In the experiment, we first rescale the pre-normalized images and crop them to 800×600. We then generate the training and validation datasets, and finally train and validate the model. The test results show that CTPN performs well.

2 Related Work

2.1 Character recognition

Character recognition converts different types of documents into editable and searchable data. The convolutional neural network (CNN) is a deep learning architecture with a strong ability to learn. Therefore, many researchers have studied character recognition with deep neural networks.

Vaidya et al. proposed offline handwritten character recognition using deep neural networks. The method uses OpenCV for image processing and trains the network with TensorFlow in Python. Although it recognizes offline handwriting, it cannot recognize cursive handwritten text [1].

Narayan et al. presented a CNN-based method that detects and recognizes handwritten text images with higher accuracy. It is applied to English handwritten characters. However, the difficulty of contour-based techniques limits recognition in natural scenes and of handwriting [2].

Khan et al. presented a deep CNN model based on SE-ResNeXt that uses squeeze-and-excitation (SE) blocks to improve performance. This reduces the number of hyper-parameters and the model complexity, while increasing the ability to learn very complex features [3].

Khandokar et al. used a CNN to advance handwritten character recognition (HCR) by learning discriminative features from raw data. The CNN recognizes characters by considering their shapes and contrasting their features [4].

Balaha et al. presented a deep learning system based on CNNs with two architectures, HMB1 and HMB2. HMB1 has a more complex architecture and more parameters than HMB2, and the authors concluded that more parameters lead to higher accuracy [5].

Narang et al. used a deep network to recognize ancient characters, an important application direction for character recognition and pattern recognition [6].

2.2 Text detection

Text detection plays a vital role in recognition. Liao et al. proposed a Differentiable Binarization module, which improves text detection accuracy with a simple pipeline [7].

Shivakumara et al. presented a Laplacian-based multi-oriented text detection method for video. It handles text of arbitrary orientation using the Fourier-Laplacian transform and k-means clustering. It covers both graphics text and scene text in horizontal and non-horizontal orientations [8].

Zhang et al. proposed a coarse-to-fine method for localizing text lines. It predicts a salient map of text regions with an FCN. It then evaluates text line hypotheses using another FCN classifier that predicts the centroid of each character [9].

Zhu et al. work in the Fourier domain and propose Fourier Contour Embedding (FCE), which represents arbitrarily shaped text contours as compact signatures. Experiments show that the method is accurate and robust [10].

3 Connectionist Text Proposal Network

3.1 Anchor

The method uses vertical anchors, which predict the vertical position of the text rather than its horizontal extent. In the horizontal direction, the method only detects narrow, fixed-width text fragments, and it predicts the height of each fragment. Finally, all fragments are linked together, as shown in Fig 1.

We fix the anchor width to 16 pixels and use a group of ten anchor heights for that width. CTPN uses VGG16 to extract features, so the width and height of the conv5 feature map are always $\frac{1}{16}$ of those of the input image. The FC layer output keeps the same width and height. Therefore, the anchors cover every position of the original image without overlapping, and the group of heights handles texts of different heights.
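The anchor layout described above can be sketched like this. It is a minimal sketch: the ten heights in `ANCHOR_HEIGHTS` are an assumption borrowed from common open-source CTPN code, since the text only says that ten heights share one width. The 37×50 map size is also an assumption, because the exact conv5 size depends on pooling padding.

```python
from typing import List, Tuple

STRIDE = 16         # conv5 of VGG16 is 1/16 of the input size
ANCHOR_WIDTH = 16   # fixed anchor width in pixels
# Assumption: illustrative heights; the text only states there are ten of them.
ANCHOR_HEIGHTS = [11, 16, 23, 33, 48, 68, 97, 139, 198, 283]


def generate_anchors(feat_h: int, feat_w: int) -> List[Tuple[float, float, float, float]]:
    """Return (x1, y1, x2, y2) anchors, len(ANCHOR_HEIGHTS) per conv5 location."""
    anchors = []
    for row in range(feat_h):
        for col in range(feat_w):
            # centre of the 16x16 image patch covered by this feature point
            cx = col * STRIDE + STRIDE / 2
            cy = row * STRIDE + STRIDE / 2
            for h in ANCHOR_HEIGHTS:
                anchors.append((cx - ANCHOR_WIDTH / 2, cy - h / 2,
                                cx + ANCHOR_WIDTH / 2, cy + h / 2))
    return anchors


# Assumed 37 x 50 feature map for an 800x600 input (600 // 16, 800 // 16).
anchors = generate_anchors(600 // STRIDE, 800 // STRIDE)
print(len(anchors))  # 18500
```

Because the anchor width equals the stride, anchors in neighbouring columns touch but do not overlap. This is what lets them tile the image horizontally.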

For each anchor, a softmax classifier determines whether the anchor contains text, and anchors are selected by their positive (text) softmax score. Bounding box regression then corrects the center $y$ coordinate and the height of each anchor:

$$v_c = \frac{c_y - c^a_y}{h^a}, \quad v_h = \log\left(\frac{h}{h^a}\right), \quad v^*_c = \frac{c^*_y - c^a_y}{h^a}, \quad v^*_h = \log\left(\frac{h^*}{h^a}\right)$$

Here $c_y$ is the predicted center $y$ coordinate and $h$ is the predicted height. $c^a_y$ is the center $y$ coordinate of the anchor and $h^a$ is its height. $c^*_y$ is the center $y$ coordinate of the ground truth and $h^*$ is its height. $v_c$ and $v_h$ are the relative coordinates of the regression prediction with respect to the anchor. $v^*_c$ and $v^*_h$ are the relative coordinates of the ground truth with respect to the anchor.

Fig 1. Anchor settings in the horizontal and vertical directions.
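The two vertical-coordinate equations can be checked numerically with a small encode/decode pair. This is a sketch: the function names are hypothetical, and decoding simply inverts the encoding formulas above.

```python
import math


def encode_vertical(c_y, h, c_y_a, h_a):
    """Relative coordinates (v_c, v_h) of a box with respect to its anchor."""
    v_c = (c_y - c_y_a) / h_a
    v_h = math.log(h / h_a)
    return v_c, v_h


def decode_vertical(v_c, v_h, c_y_a, h_a):
    """Invert encode_vertical: recover the centre y and height from (v_c, v_h)."""
    c_y = v_c * h_a + c_y_a
    h = math.exp(v_h) * h_a
    return c_y, h


# Ground truth centred 10 px below a 20 px anchor and twice as tall.
v_c, v_h = encode_vertical(110.0, 40.0, c_y_a=100.0, h_a=20.0)
print(v_c, round(v_h, 4))  # 0.5 0.6931
```

Dividing by $h^a$ and taking the log of the height ratio makes the targets scale invariant. A tall anchor and a short anchor with the same relative error therefore produce the same target.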

3.2 Bi-Directional LSTM

A bi-directional LSTM combines a left-to-right pass and a right-to-left pass over the sequence, so each prediction uses both past and future context. At each time step, the output $y$ is computed from the forward state $A$ and the backward state $A'$. The input sequence is processed one element per time step in both the forward and the backward direction, as shown in Fig 2.

Fig 2. Structure of a bi-directional LSTM [11].
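The forward and backward passes above can be illustrated with a small NumPy sketch. The weights are random stand-ins, not trained parameters. The gate layout and the 128 units per direction are assumptions chosen so that the concatenated output has the 256 channels mentioned in Section 3.6.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_pass(xs, W, U, b, hidden):
    """Run a one-direction LSTM over xs (T x D) and return its hidden states (T x hidden)."""
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    states = []
    for x in xs:
        z = W @ x + U @ h + b                  # pre-activations of the four gates
        i, f, o, g = np.split(z, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        states.append(h)
    return np.stack(states)


def bilstm(xs, fwd_params, bwd_params, hidden):
    """Concatenate forward states A and backward states A' at every time step."""
    a_fwd = lstm_pass(xs, *fwd_params, hidden)
    a_bwd = lstm_pass(xs[::-1], *bwd_params, hidden)[::-1]  # re-align with time order
    return np.concatenate([a_fwd, a_bwd], axis=1)


def random_params(rng, d, hidden):
    """Hypothetical random weights standing in for trained ones."""
    return (rng.normal(scale=0.1, size=(4 * hidden, d)),
            rng.normal(scale=0.1, size=(4 * hidden, hidden)),
            np.zeros(4 * hidden))


rng = np.random.default_rng(0)
T, D, HIDDEN = 5, 8, 128                       # 128 units per direction -> 256 outputs
fwd, bwd = random_params(rng, D, HIDDEN), random_params(rng, D, HIDDEN)
xs = rng.normal(size=(T, D))
y = bilstm(xs, fwd, bwd, HIDDEN)
print(y.shape)  # (5, 256)
```

Changing the last input leaves the forward half of every earlier output unchanged. It does, however, change the backward half even at the first step, because the backward half carries future context.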

3.3 RPN layer

The RPN of CTPN is similar to that of Faster R-CNN and has three branches.

- The first branch outputs 2k vertical coordinates: the vertical location $(v_c, v_h)$ of each anchor.
- The second branch outputs 2k scores: the text (foreground) and non-text (background) scores of each anchor, computed with softmax.
- The third branch outputs k side-refinement values: the side-refinement ratio $o$.

The side refinement corrects the text boxes because their horizontal localization may be inaccurate, as shown in Fig 3.

$$o = \frac{x_{side} - c^a_x}{w^a}, \quad o^* = \frac{x^*_{side} - c^a_x}{w^a}$$

$o^*$ is the ground-truth offset. $x_{side}$ is the predicted left or right edge of the text box, and $x^*_{side}$ is the ground-truth edge. $c^a_x$ is the horizontal center coordinate of the anchor, and $w^a$ is the fixed width of the anchor (16 pixels).

Fig 3. The RPN layer precisely refines (stretches) the text boxes.
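The side-refinement formula can be sketched the same way as the vertical regression. The function names are hypothetical. The 16-pixel width $w^a$ is taken from the text, and the inverse simply solves the formula for $x_{side}$.

```python
ANCHOR_WIDTH = 16  # w^a, fixed anchor width in pixels


def side_offset(x_side, c_x_a, w_a=ANCHOR_WIDTH):
    """o = (x_side - c_x^a) / w^a."""
    return (x_side - c_x_a) / w_a


def refine_side(o, c_x_a, w_a=ANCHOR_WIDTH):
    """Invert side_offset: recover the refined left or right edge of the text line."""
    return o * w_a + c_x_a


# An anchor centred at x = 8 whose true text line starts at x = 3.
print(side_offset(3, 8))  # -0.3125
```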

3.4 Text line constructor

The method first sorts the anchors by their horizontal coordinate. For each anchor $box_i$, it finds the paired anchor $box_j$ to obtain $pair(x_i, x_j)$. Finally, it constructs a connection graph from these pairs and obtains the text detection boxes, as shown in Fig 4.

Fig 4. The text line constructor.

The text line constructor searches in two directions:

1. It searches forward, along the positive horizontal direction, for candidate anchors whose vertical overlap with $box_i$ satisfies $overlap_v > 0.7$. Among these candidates, it selects the anchor $box_j$ with the highest softmax score.
2. It searches backward from $box_j$, along the negative horizontal direction, for candidate anchors with $overlap_v > 0.7$. Among these candidates, it selects the anchor $box_k$ with the highest softmax score $score_k$.

It then compares $score_i$ with $score_k$. If $score_i \ge score_k$, the link is the longest one and $graph(i, j) = True$ is set. If $score_i < score_k$, the link is not the longest one.
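The forward/backward search and the graph construction can be sketched in plain Python. Several details here are assumptions, not statements from the text:

- The 50-pixel horizontal search range comes from common open-source CTPN code.
- $overlap_v$ is taken as the shared $y$-range divided by the smaller height.
- Each chain is merged into the union of its anchors.
- Isolated anchors are dropped.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

MAX_GAP = 50         # assumption: horizontal search range used by common CTPN code
MIN_V_OVERLAP = 0.7  # overlap_v threshold from the text


def vertical_overlap(a: Box, b: Box) -> float:
    """Shared y-range divided by the smaller box height."""
    inter = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    return inter / min(a[3] - a[1], b[3] - b[1])


def neighbours(boxes: List[Box], i: int, direction: int) -> List[int]:
    """Anchors in the nearest column within MAX_GAP on one side of box i
    (+1 forward, -1 backward) whose overlap_v with box i exceeds the threshold."""
    found = []
    for k, b in enumerate(boxes):
        dx = (b[0] - boxes[i][0]) * direction
        if 0 < dx <= MAX_GAP and vertical_overlap(boxes[i], b) > MIN_V_OVERLAP:
            found.append((dx, k))
    if not found:
        return []
    nearest = min(dx for dx, _ in found)
    return [k for dx, k in found if dx == nearest]


def build_graph(boxes: List[Box], scores: List[float]) -> Dict[int, int]:
    """graph[i] = j encodes graph(i, j) = True."""
    graph = {}
    for i in range(len(boxes)):
        succ = neighbours(boxes, i, +1)            # forward search from box_i
        if not succ:
            continue
        j = max(succ, key=lambda m: scores[m])     # box_j: highest softmax score
        prec = neighbours(boxes, j, -1)            # backward search from box_j (non-empty)
        k = max(prec, key=lambda m: scores[m])     # box_k: highest softmax score
        if scores[i] >= scores[k]:                 # longest link
            graph[i] = j
    return graph


def text_lines(boxes: List[Box], scores: List[float]) -> List[Box]:
    """Follow each chain from its leftmost anchor and merge it into one box."""
    graph = build_graph(boxes, scores)
    has_incoming = set(graph.values())
    lines = []
    for start in sorted(graph):
        if start in has_incoming:
            continue                               # not the start of a chain
        chain = [start]
        while chain[-1] in graph:
            chain.append(graph[chain[-1]])
        members = [boxes[m] for m in chain]
        lines.append((min(b[0] for b in members), min(b[1] for b in members),
                      max(b[2] for b in members), max(b[3] for b in members)))
    return lines


boxes = [(0, 10, 16, 40), (16, 10, 32, 40), (32, 12, 48, 42), (300, 10, 316, 40)]
scores = [0.9, 0.8, 0.95, 0.9]
print(text_lines(boxes, scores))  # [(0, 10, 48, 42)]
```

The backward check is what prevents a weaker anchor from starting a link into a column that a stronger anchor already claims. Only the highest-scoring anchor in a column keeps its edge.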

3.5 Loss function

The network is trained end to end and predicts three outputs simultaneously. The loss function is:

$$L(s_i, v_j, o_k) = \frac{1}{N_s}\sum_i L^{cl}_s(s_i, s^*_i) + \frac{\lambda_1}{N_v}\sum_j L^{re}_v(v_j, v^*_j) + \frac{\lambda_2}{N_o}\sum_k L^{re}_o(o_k, o^*_k)$$

The symbols are defined as follows:

- $i$ is the index of an anchor in a minibatch. $s_i$ is the predicted text score of anchor $i$, and $s^*_i$ is its ground truth.
- $j$ is the index of an anchor in the set of valid anchors for $y$-coordinate regression, that is, anchors with $s^*_j = 1$. $v_j$ and $v^*_j$ are the predicted and ground-truth $y$-coordinates associated with the $j$-th anchor.
- $k$ is the index of a side-anchor. $o_k$ and $o^*_k$ are the predicted and ground-truth offsets.
- $L^{cl}_s$ is the classification loss, and $L^{re}_v$ and $L^{re}_o$ are the regression losses.
- $\lambda_1$ and $\lambda_2$ are loss weights, and $N_s$, $N_v$, and $N_o$ are normalization parameters.
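The three-term loss can be sketched on plain Python lists. This sketch makes several assumptions:

- A two-way softmax cross-entropy is used for $L^{cl}_s$ and smooth L1 for both regression terms, following the original CTPN paper [12].
- Each $N$ is the number of anchors in its term.
- The defaults $\lambda_1 = 1.0$ and $\lambda_2 = 2.0$ are the values reported in [12].

All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def smooth_l1(x: float) -> float:
    """Smooth L1: quadratic near zero, linear beyond |x| = 1."""
    return 0.5 * x * x if abs(x) < 1 else abs(x) - 0.5


def softmax_ce(logits: Sequence[float], label: int) -> float:
    """Cross-entropy of a 2-way softmax over [non-text, text] logits; label is s* in {0, 1}."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[label]


def ctpn_loss(cls_logits: List[Sequence[float]], cls_labels: List[int],
              v_pred: List[Tuple[float, float]], v_gt: List[Tuple[float, float]],
              o_pred: List[float], o_gt: List[float],
              lambda1: float = 1.0, lambda2: float = 2.0) -> float:
    """L = 1/N_s sum L_cl + lambda1/N_v sum L_v + lambda2/N_o sum L_o."""
    n_s, n_v, n_o = len(cls_logits), max(len(v_pred), 1), max(len(o_pred), 1)
    l_cls = sum(softmax_ce(l, y) for l, y in zip(cls_logits, cls_labels)) / n_s
    l_v = sum(smooth_l1(p - g)
              for pv, gv in zip(v_pred, v_gt) for p, g in zip(pv, gv)) / n_v
    l_o = sum(smooth_l1(p - g) for p, g in zip(o_pred, o_gt)) / n_o
    return l_cls + lambda1 * l_v + lambda2 * l_o


# One text anchor with uninformative logits and perfect regression: loss = ln 2.
print(round(ctpn_loss([[0.0, 0.0]], [1], [(0.5, 0.1)], [(0.5, 0.1)], [0.2], [0.2]), 4))  # 0.6931
```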

3.6 Total system

CTPN first uses a VGG16 backbone to extract spatial features. It takes the output of the VGG16 conv5 layer, of shape $N \times H \times W \times C$ (batch size × height × width × channels). Each feature point in this map corresponds to a 16-pixel stride in the original image, as shown in Fig 5.

Next, a 3×3 sliding window is applied to the feature map to extract spatial features, implemented with im2col. This gives a new feature map of shape $N \times H \times W \times 9C$, in which each point merges the information of its surrounding 3×3 neighborhood.
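A 3×3 im2col step can be sketched in NumPy as below. Zero padding at the borders is an assumption. Each output point stores its nine neighbours' channel vectors side by side, which is why the channel count becomes 9C.

```python
import numpy as np


def im2col_3x3(feat: np.ndarray) -> np.ndarray:
    """N x H x W x C -> N x H x W x 9C; each point holds its zero-padded 3x3 neighbourhood."""
    n, h, w, c = feat.shape
    padded = np.pad(feat, ((0, 0), (1, 1), (1, 1), (0, 0)))  # zero padding on H and W
    # Shift the padded map by every (dy, dx) in the 3x3 window and stack along channels.
    patches = [padded[:, dy:dy + h, dx:dx + w, :] for dy in range(3) for dx in range(3)]
    return np.concatenate(patches, axis=-1)


conv5 = np.random.default_rng(0).normal(size=(1, 4, 5, 512))  # small stand-in for conv5
print(im2col_3x3(conv5).shape)  # (1, 4, 5, 4608)
```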

The feature map is then reshaped to $(NH) \times W \times 9C$, so that each row becomes a sequence of length $W$. A bi-directional LSTM learns the sequence features of each row. The bi-directional LSTM outputs $(NH) \times W \times 256$, which is reshaped back to $N \times 256 \times H \times W$.
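The reshapes around the BLSTM can be sketched as follows. In this sketch, a hypothetical per-step random linear map from 9C to 256 channels stands in for the BLSTM itself, because only the tensor layout is being illustrated.

```python
import numpy as np


def rows_to_sequences(feat: np.ndarray) -> np.ndarray:
    """N x H x W x C -> (N*H) x W x C: every feature-map row becomes one sequence of length W."""
    n, h, w, c = feat.shape
    return feat.reshape(n * h, w, c)


def sequences_to_nchw(seq_out: np.ndarray, n: int, h: int) -> np.ndarray:
    """(N*H) x W x D -> N x D x H x W."""
    _, w, d = seq_out.shape
    return seq_out.reshape(n, h, w, d).transpose(0, 3, 1, 2)


rng = np.random.default_rng(0)
feat = rng.normal(size=(2, 4, 6, 18))          # N=2, H=4, W=6, 9C=18
seqs = rows_to_sequences(feat)                 # (8, 6, 18)
blstm_out = seqs @ rng.normal(size=(18, 256))  # hypothetical stand-in for the BLSTM
out = sequences_to_nchw(blstm_out, n=2, h=4)
print(out.shape)  # (2, 256, 4, 6)
```

Treating each row as a separate batch element means the LSTM only mixes information along the horizontal direction. This matches CTPN's focus on horizontal text lines.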

The output of the BLSTM passes through a convolution layer to get $N \times H \times W \times 512$. The result then passes through an RPN-like network with three branches:

- The first branch is the 2k vertical coordinates ($N \times H \times W \times 2k$), where k is the number of anchors at each feature-map point. For each anchor, the 2k values hold the prediction $v = [v_c, v_h]$.
- The second branch is the 2k scores ($N \times H \times W \times 2k$): a foreground/background score $s = [text, non\text{-}text]$ for each anchor.
- The third branch is the k side-refinement values ($N \times H \times W \times k$), which predict the side-refinement offset $o$ of each anchor.

Fig 5. CTPN overall framework and predicted result [12]: (a) the overall CTPN framework; (b) a predicted result.
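The three output branches can be sketched as per-point linear maps, which are equivalent to 1×1 convolutions. The random weights are hypothetical stand-ins for trained layers, and k = 10 is assumed from the ten anchor heights in Section 3.1. The sketch only shows the output shapes and the per-anchor softmax over [text, non-text].

```python
import numpy as np

K = 10  # anchors per feature-map point (assumed: ten anchor heights)


def rpn_heads(feat, w_v, w_s, w_o):
    """feat: N x H x W x 512 -> (vertical coords, text/non-text probabilities, side offsets)."""
    n, h, w, _ = feat.shape
    v = (feat @ w_v).reshape(n, h, w, K, 2)         # 2k channels: [v_c, v_h] per anchor
    logits = (feat @ w_s).reshape(n, h, w, K, 2)    # 2k channels: [text, non-text] per anchor
    logits = logits - logits.max(axis=-1, keepdims=True)
    scores = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)  # softmax per anchor
    o = feat @ w_o                                  # k channels: side-refinement offset o
    return v, scores, o


rng = np.random.default_rng(0)
feat = rng.normal(size=(1, 4, 6, 512))
v, s, o = rpn_heads(feat,
                    rng.normal(scale=0.01, size=(512, 2 * K)),
                    rng.normal(scale=0.01, size=(512, 2 * K)),
                    rng.normal(scale=0.01, size=(512, K)))
print(v.shape, s.shape, o.shape)  # (1, 4, 6, 10, 2) (1, 4, 6, 10, 2) (1, 4, 6, 10)
```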

3.7 Algorithm

To normalize the pre-normalized background images

data_base_normalize.py

Normalize the width and height.

The pre-normalized images are first rescaled if they are not 800x600, and then 800x600 regions are cropped from the rescaled images.

The 800x600 images are stored in a newly created directory, ./images_base.
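The rescale-then-crop rule can be sketched as a size computation. The sketch assumes two things that the text does not state: the rescale preserves aspect ratio until both sides reach 800x600, and the crop is centred. It is not the contents of data_base_normalize.py, and the function name is hypothetical.

```python
from typing import Tuple

TARGET_W, TARGET_H = 800, 600


def rescale_and_crop(width: int, height: int) -> Tuple[Tuple[int, int], Tuple[int, int, int, int]]:
    """Return the rescaled size and a centred (left, top, right, bottom) 800x600 crop box.

    Assumption: aspect ratio is preserved and the smaller relative side is scaled to the target.
    """
    if TARGET_W * height >= TARGET_H * width:
        # width is the tighter side: scale it to exactly 800 (ceiling division for the height)
        new_w, new_h = TARGET_W, -(-height * TARGET_W // width)
    else:
        new_w, new_h = -(-width * TARGET_H // height), TARGET_H
    left = (new_w - TARGET_W) // 2
    top = (new_h - TARGET_H) // 2
    return (new_w, new_h), (left, top, left + TARGET_W, top + TARGET_H)


print(rescale_and_crop(2000, 1200))  # ((1000, 600), (100, 0, 900, 600))
```

Integer arithmetic keeps the sizes exact. With Pillow, the results could be passed to `Image.resize((new_w, new_h))` and then `Image.crop(box)`.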

To generate validation data and training data

data_generator.py

Validation data and training data are generated.

They are stored in the newly created directories ./data_valid and ./data_train, respectively.

To train and validate

script_detect.py

The model is trained and validated.

The validation results are stored in ./data_valid/results.

The checkpoint (ckpt) files are stored in a newly created directory, ./model_detect.

4 Experiment

4.1 Dataset

We trained the model on a dataset of 2800 images. The dataset contains the images and the text annotations of the images. In addition, we used 42 images and their annotations for validation. The text appears at different locations in each image, and each character has its own coordinates. In image B, the coordinates of the characters are listed on the left. We resize the images to 800×600, as shown in Fig 6.

Fig 6. An example from the training dataset. On the left (A) is a training image; on the right (B) is the training text corresponding to image A.

4.2 Training model

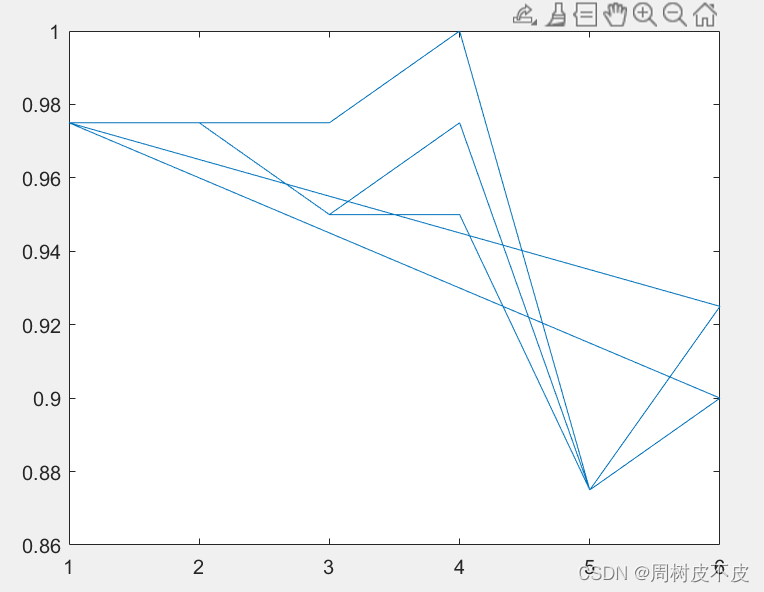

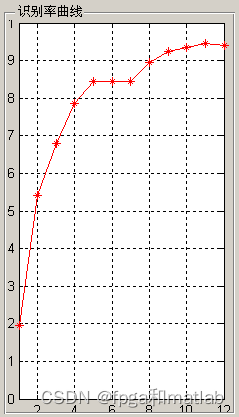

We set the learning rate to 0.001 and trained the model for 1200 iterations. The model begins to converge after about 200 iterations, as shown in Fig 7.

Fig 7. Training with a learning rate of 0.001 for 1200 iterations.

An example of predicted results.

The experiment shows that the method detects the characters well. However, some bounding boxes overlap the characters, some do not cover the complete character, and some are placed incorrectly.

We attribute the many bounding boxes over non-character areas to the text line constructor and the RPN layer, as well as to the limited number of training iterations.

4.3 Algorithm analysis

The algorithm consists of three steps: normalizing the pre-normalized background images, generating the validation and training data, and training and validating the model. The function of each module is clear, but the code is redundant and takes a long time to run.

5 Conclusion and future work

This paper adopts CTPN to recognize characters. CTPN is an efficient text detector that is trainable end to end. The experiment shows that CTPN accurately locates the upper, lower, left, and right boundaries of the detected boxes. However, CTPN only detects text in the horizontal direction, and text breaks off word by word in the vertical direction. In future work, we can improve the algorithm in the vertical direction and increase the number of training iterations.

References

[1] Vaidya, Rohan, et al. “Handwritten character recognition using deep-learning.” 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT). IEEE, 2018.

[2] Narayan, Adith, and Raja Muthalagu. “Image Character Recognition using Convolutional Neural Networks.” 2021 Seventh International Conference on Bio Signals, Images, and Instrumentation (ICBSII). IEEE, 2021.

[3] Khan, Mohammad Meraj, et al. “A squeeze and excitation ResNeXt-based deep learning model for Bangla handwritten compound character recognition.” Journal of King Saud University - Computer and Information Sciences (2021).

[4] Khandokar, I., et al. “Handwritten character recognition using convolutional neural network.” Journal of Physics: Conference Series. Vol. 1918. No. 4. IOP Publishing, 2021.

[5] Balaha, Hossam Magdy, et al. “A new Arabic handwritten character recognition deep learning system (AHCR-DLS).” Neural Computing and Applications 33.11 (2021): 6325-6367.

[6] Narang, Sonika Rani, Munish Kumar, and Manish Kumar Jindal. “DeepNetDevanagari: a deep learning model for Devanagari ancient character recognition.” Multimedia Tools and Applications 80.13 (2021): 20671-20686.

[7] Liao, Minghui, et al. “Real-time scene text detection with differentiable binarization and adaptive scale fusion.” IEEE Transactions on Pattern Analysis and Machine Intelligence (2022).

[8] Shivakumara, Palaiahnakote, Trung Quy Phan, and Chew Lim Tan. “A Laplacian approach to multi-oriented text detection in video.” IEEE Transactions on Pattern Analysis and Machine Intelligence 33.2 (2010): 412-419.

[9] Zhang, Zheng, et al. “Multi-oriented text detection with fully convolutional networks.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016.

[10] Zhu, Yiqin, et al. “Fourier contour embedding for arbitrary-shaped text detection.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021.

[11] Olah, Christopher. “Neural networks, types, and functional programming.” (2015).

[12] Tian, Zhi, et al. “Detecting text in natural image with connectionist text proposal network.” European Conference on Computer Vision. Springer, Cham, 2016.

