目录

简要介绍

文件准备

代码注释

简要介绍

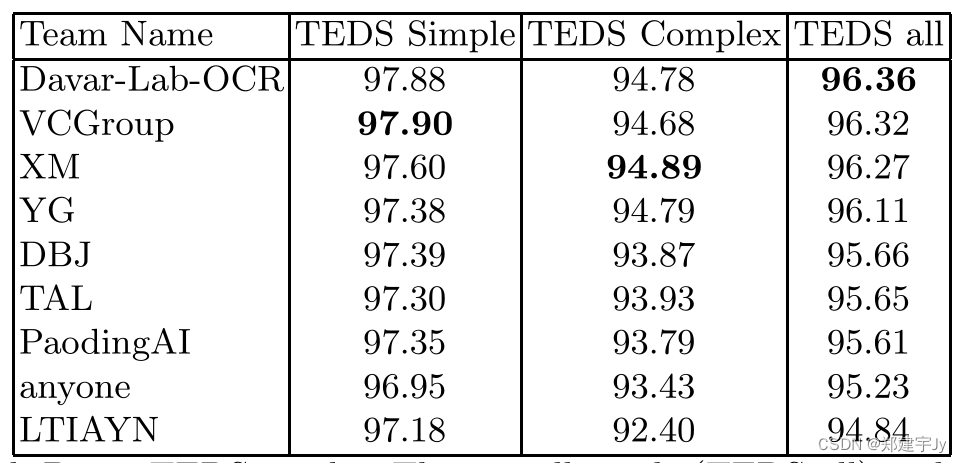

具体的介绍可以看这几篇文章,讲解的很详细了,本文主要参考这三篇文章并对官方给的代码做一些解释

ICDAR2013文本检测算法的衡量方法(一)Evaluation Levels

ICDAR2013文本检测算法的衡量方法(二)Rectangle Matching与DetEval

中文文字检测与识别的评测方法

文本定位分为Challenges 1、2、4三个挑战,其中

- Challenges 1(Born-Digital)的数据来源于电脑制作的,而Challenges 2和Challenges 4(Real Scene)的数据来源于摄像机的拍摄。

- Challenges 2主要是来源于用户有意识的对焦拍摄的(focused text)比如一些翻译的场景,这些场景中文字基本是对焦好的且水平的

- Challenges 4主要来源也是用户拍摄的,但是这些照片的拍摄是比较随意的(incidental text)这样会导致图片里的文字角度、清晰度、大小等情况非常的多。

针对不同的挑战,评价检测算法的方法就不相同:

-

Challenges 1和2使用的是叫做 DetEval的方法,该方法来自2006年C. Wolf的一篇文章《Object Count / Area Graphs for the Evaluation of Object Detection and Segmentation Algorithms》,ICDAR自己实现了一套Deteval方法

-

Challenges 4使用的是简单的通过IoU来判定算法的recall、precision的。

官方提供的三种评测方法下载地址 https://rrc.cvc.uab.es/?ch=2&com=mymethods&task=1,其中第一个ICDAR2013方法是官方根据论文实现的Deteval,与paper作者的实现有细微差别,具体见https://rrc.cvc.uab.es/?ch=2&com=faq。第二个就是Deteval原paper作者的实现,第三个是Iou的评测方法。

文件准备

本文的代码是官方实现的Deteval方法即上面链接中的第一个ICDAR2013。运行命令如下

- python script.py –g=gt.zip –s=submit.zip –o=./ -p={\"IOU_CONSTRAINT\":0.8}

其中gt.zip是标签文件,submit.zip是模型的识别结果,-o是保存result.zip的路径,result.zip中是每张图片的评测结果,-p是评测中用到的一些阈值,在script.py中的default_evaluation_params函数中定义了默认值,需要改动也可以在代码中改。因为代码中是按正则的方式读取txt文件,因此txt的名称和内容格式都需要严格按照代码中设定的格式。最方便的方法还是将需要评测的gt.zip和submit.zip转换成代码中的格式而不是去改代码。其中gt.zip和submit.zip中的txt文件名称如下所示

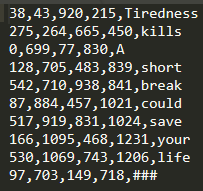

txt内保存水平框的xmin,ymin,xmax,ymax,icdar提供的gt中还有文本的内容

代码注释

评价识别结果考虑了三种情况,一对一、一对多、多对一

当评测一个ground truth文本实例和一个检测结果实例时,recall是它们的Intersect Area除以GT框的面积,precision是Intersection Area除以检测框的面积。

调用评测代码的入口是下面这行代码,其中default_evaluation_params是用到的一些阈值和参数,validate_data是检测传入的gt.zip和submit.zip是否按照要求的格式,evaluate_method则是评价检测结果的函数,代码如下

def evaluate_method(gtFilePath, submFilePath, evaluationParams):"""Method evaluate_method: evaluate method and returns the resultsResults. Dictionary with the following values:- method (required) Global method metrics. Ex: { 'Precision':0.8,'Recall':0.9 }- samples (optional) Per sample metrics. Ex: {'sample1' : { 'Precision':0.8,'Recall':0.9 } , 'sample2' : { 'Precision':0.8,'Recall':0.9 }"""# for module, alias in evaluation_imports().items():# globals()[alias] = importlib.import_module(module)def one_to_one_match(row, col):cont = 0for j in range(len(recallMat[0])): # len(detRects)if recallMat[row, j] >= evaluationParams['AREA_RECALL_CONSTRAINT'] and precisionMat[row, j] >= \evaluationParams['AREA_PRECISION_CONSTRAINT']: # 0.8,0.4cont = cont + 1if cont != 1:return Falsecont = 0for i in range(len(recallMat)):if recallMat[i, col] >= evaluationParams['AREA_RECALL_CONSTRAINT'] and precisionMat[i, col] >= \evaluationParams['AREA_PRECISION_CONSTRAINT']:cont = cont + 1if cont != 1:return Falseif recallMat[row, col] >= evaluationParams['AREA_RECALL_CONSTRAINT'] and precisionMat[row, col] >= \evaluationParams['AREA_PRECISION_CONSTRAINT']:return Truereturn Falsedef one_to_many_match(gtNum):many_sum = 0detRects = []for detNum in range(len(recallMat[0])):if gtRectMat[gtNum] == 0 and detRectMat[detNum] == 0 and detNum not in detDontCareRectsNum:if precisionMat[gtNum, detNum] >= evaluationParams['AREA_PRECISION_CONSTRAINT']: # 0.4many_sum += recallMat[gtNum, detNum]detRects.append(detNum)if many_sum >= evaluationParams['AREA_RECALL_CONSTRAINT']: # 0.8return True, detRectselse:return False, []def many_to_one_match(detNum):many_sum = 0gtRects = []for gtNum in range(len(recallMat)):if gtRectMat[gtNum] == 0 and detRectMat[detNum] == 0 and gtNum not in gtDontCareRectsNum:if recallMat[gtNum, detNum] >= evaluationParams['AREA_RECALL_CONSTRAINT']: # 0.8many_sum += precisionMat[gtNum, detNum]gtRects.append(gtNum)if many_sum >= evaluationParams['AREA_PRECISION_CONSTRAINT']: # 0.4return True, gtRectselse:return False, []def area(a, b):dx = min(a.xmax, b.xmax) - max(a.xmin, b.xmin) + 1dy = min(a.ymax, b.ymax) - max(a.ymin, b.ymin) + 1if (dx >= 0) and (dy >= 0):return dx * dyelse:return 0.def center(r):x = float(r.xmin) + float(r.xmax - r.xmin + 1) / 2.y = float(r.ymin) + float(r.ymax - r.ymin + 1) / 2.return Point(x, y)def point_distance(r1, r2):distx = math.fabs(r1.x - r2.x)disty = math.fabs(r1.y - r2.y)return math.sqrt(distx * distx + disty * disty)def center_distance(r1, r2):return point_distance(center(r1), center(r2))def diag(r):w = (r.xmax - r.xmin + 1)h = (r.ymax - r.ymin + 1)return math.sqrt(h * h + w * w)perSampleMetrics = {}methodRecallSum = 0methodPrecisionSum = 0Rectangle = namedtuple('Rectangle', 'xmin ymin xmax ymax')Point = namedtuple('Point', 'x y')gt = rrc_evaluation_funcs.load_zip_file(gtFilePath, evaluationParams['GT_SAMPLE_NAME_2_ID']) # 'gt_img_([0-9]+).txt'subm = rrc_evaluation_funcs.load_zip_file(submFilePath, evaluationParams['DET_SAMPLE_NAME_2_ID'], True) # 'res_img_([0-9]+).txt'numGt = 0numDet = 0for resFile in gt: # 一张ground truth# 189, b'121,0,177,12,###\r\n90,110,528,193,hellmann\r\n89,197,269,251,parcel\r\n295,204,528,254,systems\r\n'gtFile = rrc_evaluation_funcs.decode_utf8(gt[resFile])# 121,0,177,12,#### 90,110,528,193,hellmann# 89,197,269,251,parcel# 295,204,528,254,systemsrecall = 0precision = 0hmean = 0recallAccum = 0.precisionAccum = 0.gtRects = []detRects = []gtPolPoints = []detPolPoints = []gtDontCareRectsNum = [] # Array of Ground Truth Rectangles' keys marked as don't CaredetDontCareRectsNum = [] # Array of Detected Rectangles' matched with a don't Care GTpairs = []evaluationLog = ""recallMat = np.empty([1, 1])precisionMat = np.empty([1, 1])pointsList, _, transcriptionsList = rrc_evaluation_funcs.get_tl_line_values_from_file_contents(gtFile,evaluationParams['CRLF'],# FalseTrue, True,False)for n in range(len(pointsList)): # 一张ground truth上的一个文本实例points = pointsList[n] # list, [121.0, 0.0, 177.0, 12.0]transcription = transcriptionsList[n]dontCare = transcription == "###"gtRect = Rectangle(*points) # Rectangle(xmin=121.0, ymin=0.0, xmax=177.0, ymax=12.0)gtRects.append(gtRect)gtPolPoints.append(points)if dontCare:gtDontCareRectsNum.append(len(gtRects) - 1) # 一张图中dontcare文本实例的索引evaluationLog += "GT rectangles: " + str(len(gtRects)) + (" (" + str(len(gtDontCareRectsNum)) + " don't care)\n" if len(gtDontCareRectsNum) > 0 else "\n")# GT rectangles: 4 (1 don't care)if resFile in subm: # 对应这张ground truth的识别结果detFile = rrc_evaluation_funcs.decode_utf8(subm[resFile])# 一张图片的检测结果# <class 'str'># 121,0,177,12# 90,110,528,193# 89,197,269,251# 295,204,528,254pointsList, _, _ = rrc_evaluation_funcs.get_tl_line_values_from_file_contents(detFile,evaluationParams['CRLF'],True, False, False)# [[121.0, 0.0, 177.0, 12.0], [90.0, 110.0, 528.0, 193.0], [89.0, 197.0, 269.0, 251.0], [295.0, 204.0, 528.0, 254.0]]for n in range(len(pointsList)): # 这张识别结果中的一个文本实例points = pointsList[n]detRect = Rectangle(*points)detRects.append(detRect)detPolPoints.append(points)if len(gtDontCareRectsNum) > 0:for dontCareRectNum in gtDontCareRectsNum: # 遍历对应gt中的don't care文本实例dontCareRect = gtRects[dontCareRectNum]intersected_area = area(dontCareRect, detRect) # 拿这张图识别结果中的一个文本实例和对应gt中所有标为don't care的计算intersection areardDimensions = ((detRect.xmax - detRect.xmin + 1) * (detRect.ymax - detRect.ymin + 1))if rdDimensions == 0:precision = 0else:precision = intersected_area / rdDimensionsif precision > evaluationParams['AREA_PRECISION_CONSTRAINT']: # 0.4detDontCareRectsNum.append(len(detRects) - 1)# 识别结果中的一个文本实例和gt中标为don't care的一个文本实例匹配上了,记录这个识别的文本实例的索引break# 可能还与其它的don't care实例也匹配,但不关心了,breakevaluationLog += "DET rectangles: " + str(len(detRects)) + (" (" + str(len(detDontCareRectsNum)) + " don't care)\n" if len(detDontCareRectsNum) > 0 else "\n")# DET rectangles: 4 (1 don't care)if len(gtRects) == 0: # 这张图上没有gt box,dont't care也没有recall = 1precision = 0 if len(detRects) > 0 else 1if len(detRects) > 0:# Calculate recall and precision matricesoutputShape = [len(gtRects), len(detRects)] # 一行是1个gt,一列是1个detrecallMat = np.empty(outputShape) # 记录了一张gt中的每个文本实例与对应det中的每个文本实例的recallprecisionMat = np.empty(outputShape) # 记录了一张gt中的每个文本实例与对应det中的每个文本实例的precisiongtRectMat = np.zeros(len(gtRects), np.int8) # 记录每个gt是否匹配,匹配则值为1detRectMat = np.zeros(len(detRects), np.int8) # 记录每个det是否匹配,匹配则值为1# 分别遍历gt和det中的每一个text instancefor gtNum in range(len(gtRects)):for detNum in range(len(detRects)):rG = gtRects[gtNum]rD = detRects[detNum]intersected_area = area(rG, rD)rgDimensions = ((rG.xmax - rG.xmin + 1) * (rG.ymax - rG.ymin + 1))rdDimensions = ((rD.xmax - rD.xmin + 1) * (rD.ymax - rD.ymin + 1))recallMat[gtNum, detNum] = 0 if rgDimensions == 0 else intersected_area / rgDimensions# 召回率: 一个gt和一个det的intersection面积与该gt面积的比例precisionMat[gtNum, detNum] = 0 if rdDimensions == 0 else intersected_area / rdDimensions# 准确率: 一个gt和一个det的intersection面积与该det面积的比例# 在接下来判断一对一、一对多、多对一三种情况时,都忽略don't care的gt和det# Find one-to-one matchesevaluationLog += "Find one-to-one matches\n"for gtNum in range(len(gtRects)):for detNum in range(len(detRects)):if gtRectMat[gtNum] == 0 and detRectMat[detNum] == 0 and gtNum not in gtDontCareRectsNum and detNum not in detDontCareRectsNum:match = one_to_one_match(gtNum, detNum)# 一对一,即1个gt只与1个det满足recall和precision的阈值,同时这个det也只与这个gt满足recall和precision的阈值# recallMat的一行代表一个gt和所有det的recall,一列代表一个det和所有gt的recall,precisionMat也是如此# 当前gt所在的行与当前det所在的列中只有交叉点即该gt与det的recall和precision大于设定的阈值,即1对1匹配if match is True:rG = gtRects[gtNum]rD = detRects[detNum]normDist = center_distance(rG, rD)normDist /= diag(rG) + diag(rD)normDist *= 2.0if normDist < evaluationParams['EV_PARAM_IND_CENTER_DIFF_THR']: # 1gtRectMat[gtNum] = 1 # 当前gt已匹配,后面判断一对多和多对一的情况时,跳过该gtdetRectMat[detNum] = 1 # 当前det已匹配,后面判断一对多和多对一的情况时,跳过该detrecallAccum += evaluationParams['MTYPE_OO_O'] # 1precisionAccum += evaluationParams['MTYPE_OO_O']pairs.append({'gt': gtNum, 'det': detNum, 'type': 'OO'})evaluationLog += "Match GT #" + str(gtNum) + " with Det #" + str(detNum) + "\n"else:evaluationLog += "Match Discarded GT #" + str(gtNum) + " with Det #" + str(detNum) + " normDist: " + str(normDist) + " \n"# Find one-to-many matchesevaluationLog += "Find one-to-many matches\n"for gtNum in range(len(gtRects)):if gtNum not in gtDontCareRectsNum:match, matchesDet = one_to_many_match(gtNum)# 一对多,即1个gt对应多个det,遍历1个gt所在的行,若有det与该gt的precision大于设定阈值,记录该det以及gt与该det的recall# 若所有满足precision的det与gt的recall和大于设定阈值,则该gt与所有记录的det匹配上了if match is True:gtRectMat[gtNum] = 1recallAccum += evaluationParams['MTYPE_OM_O'] # 0.8precisionAccum += evaluationParams['MTYPE_OM_O'] * len(matchesDet)pairs.append({'gt': gtNum, 'det': matchesDet, 'type': 'OM'})for detNum in matchesDet:detRectMat[detNum] = 1evaluationLog += "Match GT #" + str(gtNum) + " with Det #" + str(matchesDet) + "\n"# Find many-to-one matchesevaluationLog += "Find many-to-one matches\n"for detNum in range(len(detRects)):if detNum not in detDontCareRectsNum:match, matchesGt = many_to_one_match(detNum)# 多对一,即多个gt对应1个det,遍历1个det所在的列,若有gt与该det的recall大于设定阈值,记录该gt以及det与该gt的precision# 若所有满足recall的gt与该det的precision和大于设定阈值,则该det与所有记录的gt匹配上了if match is True:detRectMat[detNum] = 1recallAccum += evaluationParams['MTYPE_OM_M'] * len(matchesGt) # 1precisionAccum += evaluationParams['MTYPE_OM_M']pairs.append({'gt': matchesGt, 'det': detNum, 'type': 'MO'})for gtNum in matchesGt:gtRectMat[gtNum] = 1evaluationLog += "Match GT #" + str(matchesGt) + " with Det #" + str(detNum) + "\n"numGtCare = (len(gtRects) - len(gtDontCareRectsNum))if numGtCare == 0:recall = float(1)precision = float(0) if len(detRects) > 0 else float(1)else:recall = float(recallAccum) / numGtCareprecision = float(0) if (len(detRects) - len(detDontCareRectsNum)) == 0 else float(precisionAccum) / (len(detRects) - len(detDontCareRectsNum))hmean = 0 if (precision + recall) == 0 else 2.0 * precision * recall / (precision + recall)evaluationLog += "Recall = " + str(recall) + "\n"evaluationLog += "Precision = " + str(precision) + "\n"methodRecallSum += recallAccummethodPrecisionSum += precisionAccumnumGt += len(gtRects) - len(gtDontCareRectsNum)numDet += len(detRects) - len(detDontCareRectsNum)perSampleMetrics[resFile] = {'precision': precision,'recall': recall,'hmean': hmean,'pairs': pairs,'recallMat': [] if len(detRects) > 100 else recallMat.tolist(),'precisionMat': [] if len(detRects) > 100 else precisionMat.tolist(),'gtPolPoints': gtPolPoints,'detPolPoints': detPolPoints,'gtDontCare': gtDontCareRectsNum,'detDontCare': detDontCareRectsNum,'evaluationParams': evaluationParams,'evaluationLog': evaluationLog}methodRecall = 0 if numGt == 0 else methodRecallSum / numGtmethodPrecision = 0 if numDet == 0 else methodPrecisionSum / numDetmethodHmean = 0 if methodRecall + methodPrecision == 0 else 2 * methodRecall * methodPrecision / (methodRecall + methodPrecision)methodMetrics = {'precision': methodPrecision, 'recall': methodRecall, 'hmean': methodHmean}resDict = {'calculated': True, 'Message': '', 'method': methodMetrics, 'per_sample': perSampleMetrics}return resDict