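Bank Card Recognition

- Preface

- 1. Data Preprocessing

- 1.1 Data Preparation

- 1.2 Data Augmentation

- 2. Training (CRNN)

- 3. What Needs to Be Modified

- 3.1 Data Augmentation

- 3.2 Training

- 4. CRNN Architecture

- 4.1 CNN

- 4.2 BiLSTM

- 4.3 CTC

- 5. Card Number Validation

- 6. BIN Check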

Preface

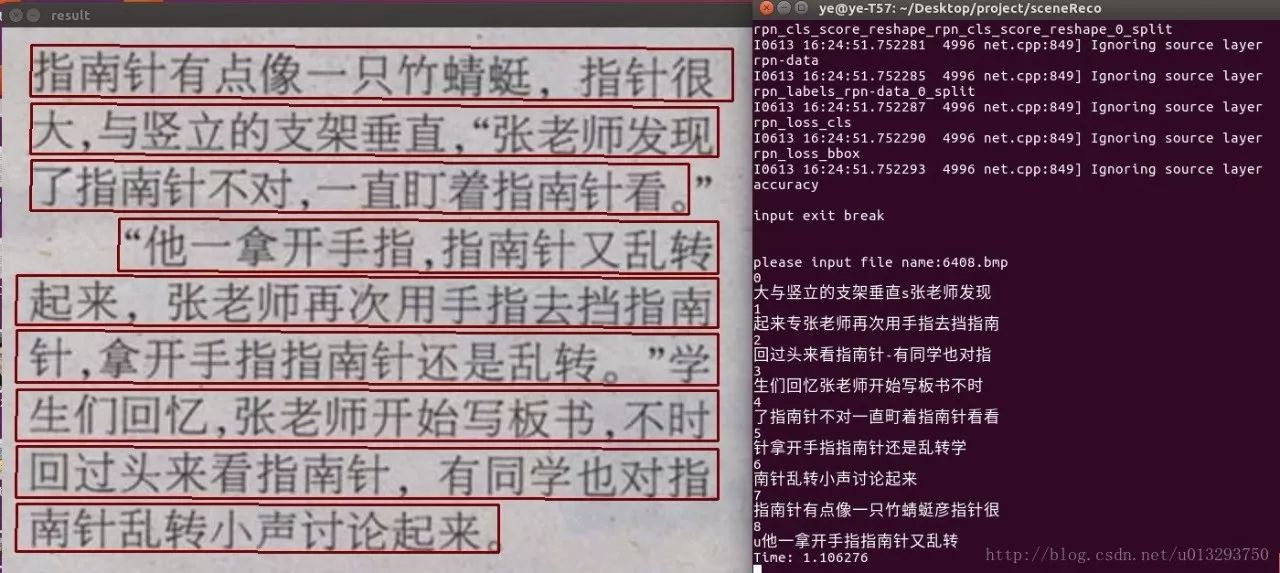

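- Card-number recognition for various kinds of bank cards: CTPN locates the text, CRNN recognizes it, and a web front end provides bank card number recognition.

- The card standard currently used in China is the ID-1 format of ISO/IEC 7810:2003. Cards measure 85.60 mm × 53.98 mm, which gives a height-to-width ratio of about 0.631 (53.98 / 85.60).

- First, the overall approach: since the end goal is to recognize the information on the card face, the task splits into two key stages: text detection and text recognition.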
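The two stages can be wired together roughly as follows. This is a minimal sketch, not the project's code: `detect` stands in for a text detector such as CTPN and `recognize` for the CRNN recognizer (both are hypothetical callables supplied by the caller), and the box format, left-to-right ordering, and digits-only filtering are assumptions about post-processing for a single-line card number.

```python
from typing import Callable, List, Sequence, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # hypothetical box format: (x1, y1, x2, y2) in pixels


def read_card_number(
    image: np.ndarray,
    detect: Callable[[np.ndarray], Sequence[Box]],  # stage 1: text detection (e.g. CTPN)
    recognize: Callable[[np.ndarray], str],         # stage 2: text recognition (e.g. CRNN)
) -> str:
    """Two-stage pipeline sketch: locate text regions, then read each crop."""
    pieces: List[str] = []
    # Sort boxes left to right so the digit groups come out in reading order.
    for x1, y1, x2, y2 in sorted(detect(image), key=lambda b: b[0]):
        crop = image[y1:y2, x1:x2]
        text = recognize(crop)
        # Keep only digits; anything else is treated as recognition noise.
        pieces.append("".join(ch for ch in text if ch.isdigit()))
    return "".join(pieces)
```

Passing the models in as callables keeps the glue logic testable without loading either network.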

1. Data Preprocessing

1.1 Data Preparation

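- Each image in bankcard_pre is 120 × 46 pixels, named in the format 0000a_0.png.

- Each original training image contains 4 characters; later, 4 to 6 of them are randomly concatenated to produce strings about as long as a bank card number.

- The training and validation sets are split 9:1.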
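A 9:1 split can be made reproducible by shuffling with a fixed seed. The sketch below is an illustration only; the function name `split_train_val` and the seed value are hypothetical, not part of the project.

```python
import random
from typing import List, Sequence, Tuple


def split_train_val(items: Sequence[str], val_ratio: float = 0.1,
                    seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle a copy of items with a fixed seed and split off val_ratio for validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG: does not touch the global random state
    n_val = int(len(shuffled) * val_ratio)
    return shuffled[n_val:], shuffled[:n_val]  # (train, val)
```

Using a seeded `random.Random` instance means rerunning the preprocessing produces the same split.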

1.2 Data Augmentation

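- In the implementation below, each source image is first concatenated with randomly chosen others, and the concatenated image is then augmented.

- Augmentations: random scaling, translation, color transformation, blurring, and noise, for an augmentation factor of 96×.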

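- noise: set 500 randomly chosen pixels to white (2 times);

- resize: non-proportional scaling within a range (4 times);

- colormap: random color transformation (1 time);

- blur: Gaussian blur, mean blur, median blur, and bilateral filtering (4 times);

- place_img: shift by 10% to 20% vertically and 1.5% to 2% horizontally (4 times).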
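The counts in parentheses can be tallied to see where the augmentation factor comes from. This back-of-envelope sketch assumes the concatenated image itself also counts as one output; the names are illustrative only.

```python
# Images produced per concatenation, using the counts listed above.
OUTPUTS_PER_CONCAT = {
    "concat": 1,     # the concatenated image itself (assumed to count)
    "noise": 2,
    "resize": 4,
    "colormap": 1,
    "blur": 4,
    "place_img": 4,
}


def images_per_source(n_concat: int) -> int:
    """Augmented images written per original image when concatenation runs n_concat times."""
    return n_concat * sum(OUTPUTS_PER_CONCAT.values())
```

By this count one concatenation yields 16 images, so 96× corresponds to six concatenations per source image; with the five iterations in `main()` in the listing, each source image yields 80.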

Implementation:

```python
import copy
import multiprocessing
import os
import random

import cv2
import numpy as np

PATH = 'train_img/'


def rand(a=0, b=1):
    """Uniform random float in [a, b)."""
    return np.random.rand() * (b - a) + a


def save_copy(new_img, img_name, count, annotation):
    """Write new_img as <first 6 chars of source><count>.png; it inherits the source's labels."""
    new_name = img_name[:6] + str(count) + '.png'
    cv2.imwrite(PATH + new_name, new_img)
    annotation[PATH + new_name] = annotation[PATH + img_name]
    return count + 1


def rand_resize(img, img_name, count, annotation, jitter=.3):
    """Non-proportional scaling: jitter the height/width ratio, then scale by 0.25x to 2x."""
    h, w, _ = img.shape
    new_ar = h / w * rand(1 - jitter, 1 + jitter) / rand(1 - jitter, 1 + jitter)
    scale = rand(.25, 2)
    if new_ar < 1:
        nw = int(scale * w)
        nh = int(nw * new_ar)
    else:
        nh = int(scale * h)
        nw = int(nh / new_ar)
    # dsize is (width, height); interpolation must be a keyword (the 3rd positional arg is dst).
    new_img = cv2.resize(img, (nw, nh), interpolation=cv2.INTER_CUBIC)
    return save_copy(new_img, img_name, count, annotation)


def place_img(img, img_name, count, annotation):
    """Shift the image diagonally in four directions, padding with black."""
    H, W, _ = img.shape
    dh = int(rand(int(H * 0.1), int(H * 0.2)))
    dw = int(rand(int(W * 0.015), int(W * 0.02)))
    shifted = [np.zeros_like(img) for _ in range(4)]
    shifted[0][dh:, dw:] = img[:H - dh, :W - dw]  # down-right
    shifted[1][:H - dh, :W - dw] = img[dh:, dw:]  # up-left
    shifted[2][dh:, :W - dw] = img[:H - dh, dw:]  # down-left
    shifted[3][:H - dh, dw:] = img[dh:, :W - dw]  # up-right
    for dst in shifted:
        count = save_copy(dst, img_name, count, annotation)
    return count


def colormap(img, img_name, count, annotation):
    """Scale the B, G and R channels by random factors in [1, 2), clipped to 255."""
    factors = np.array([rand() + 1, rand() + 1, rand() + 1])
    dst = np.clip(img.astype(np.float64) * factors, 0, 255).astype(np.uint8)
    return save_copy(dst, img_name, count, annotation)


def blur(img, img_name, count, annotation):
    blurred = [
        cv2.GaussianBlur(img, (5, 5), 0),
        cv2.blur(img, (5, 5)),  # mean blur
        cv2.medianBlur(img, 3),
        cv2.bilateralFilter(img, 5, 100, 100),
    ]
    for dst in blurred:
        count = save_copy(dst, img_name, count, annotation)
    return count


def noise(img, img_name, count, annotation):
    """Set 500 randomly chosen pixels to white."""
    H, W, _ = img.shape
    noise_img = img.copy()
    noise_img[np.random.randint(H, size=500), np.random.randint(W, size=500), :] = 255
    return save_copy(noise_img, img_name, count, annotation)


def concat(img, img_name, count, annotation, img_list):
    """Append randomly chosen training images to the right of img, then augment the result."""
    H, W, C = img.shape
    num = int(rand(4, 6))  # int() truncates, so this yields 4 or 5
    imgs = random.sample(img_list, num)
    dst = np.zeros((H, W * (num + 1), C), np.uint8)
    dst[:, :W] = img
    boxes = copy.copy(annotation[PATH + img_name])
    for i, image_name in enumerate(imgs):
        image = cv2.imread(PATH + image_name, cv2.IMREAD_COLOR)
        dst[:, W * (i + 1):W * (i + 2)] = image[:H, :W]
        boxes.extend(annotation[PATH + image_name])
    new_name = img_name[:6] + str(count) + '.png'
    count += 1
    cv2.imwrite(PATH + new_name, dst)
    annotation[PATH + new_name] = boxes
    count = noise(dst, new_name, count, annotation)  # 2
    count = noise(dst, new_name, count, annotation)
    for _ in range(4):
        count = rand_resize(dst, new_name, count, annotation)  # 4
    count = colormap(dst, new_name, count, annotation)  # 1
    count = blur(dst, new_name, count, annotation)  # 4
    count = place_img(dst, new_name, count, annotation)  # 4
    return count


def main(img, img_name, annotation, img_list):
    count = 1
    for _ in range(5):
        count = concat(img, img_name, count, annotation, img_list)
    print(img_name + ' augmentation done')


if __name__ == '__main__':
    img_list = os.listdir(PATH)
    manager = multiprocessing.Manager()
    annotation = manager.dict()
    for img_name in img_list:
        # The first 4 characters of a file name are its labels; '_' marks an empty slot.
        annotation[PATH + img_name] = [c for c in img_name[:4] if c != '_']

    # Multiprocess processing (reported to fail when tested on Windows).
    # img_list is passed explicitly so workers do not rely on a global.
    pool = multiprocessing.Pool(10)
    jobs = []
    for img_name in img_list:
        img = cv2.imread(PATH + img_name, cv2.IMREAD_COLOR)
        jobs.append(pool.apply_async(main, (img, img_name, annotation, img_list)))
    pool.close()
    pool.join()
    for job in jobs:
        job.get()  # re-raise any exception from a worker

    # Single-process alternative:
    # for img_name in img_list:
    #     main(cv2.imread(PATH + img_name, cv2.IMREAD_COLOR), img_name, annotation, img_list)

    # 9:1 train/val split; each line is "<image path> <label> <label> ...".
    names = list(annotation.keys())
    random.shuffle(names)
    n_val = int(0.1 * len(names))
    with open('train.txt', 'w') as train_file, open('val.txt', 'w') as val_file:
        for i, name in enumerate(names):
            out = val_file if i < n_val else train_file
            out.write(name + ''.join(' ' + str(label) for label in annotation[name]) + '\n')
```

2. Training (CRNN)

- Step 1: All training input images are grayscale, with shape (32, 256), i.e. height 32 and width 256.

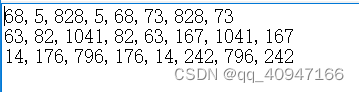

- Step 2: The predicted sequence length is img_w / 4, i.e. one prediction for every 4-pixel-wide column; predictions are finally aligned with the labels by ctc_loss.

- Step 3: Start training with the following commands:

```shell
cd bankcardocr/train
CUDA_VISIBLE_DEVICES=0 python run.py
```

3. What Needs to Be Modified

3.1 Data Augmentation

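- PATH: the directory of the training images

- train_file: the path of the training list file

- val_file: the path of the validation list file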

3.2 Training

- weight_save_path: where the trained weights are saved

- train_txt_path: the path of the training list file

- val_txt_path: the path of the validation list file
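The list files pointed to by train_txt_path and val_txt_path are the ones written by the augmentation script: each line holds an image path followed by space-separated labels. A hypothetical reader for that format might look like this (it assumes paths contain no spaces, which holds for names like `train_img/0000a_1.png`); the training code's actual loader may differ.

```python
from typing import List, Tuple


def read_annotation_file(path: str) -> List[Tuple[str, List[str]]]:
    """Parse lines of the form '<image path> <label> <label> ...', skipping blank lines."""
    samples = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            parts = line.split()
            if parts:
                samples.append((parts[0], parts[1:]))
    return samples
```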

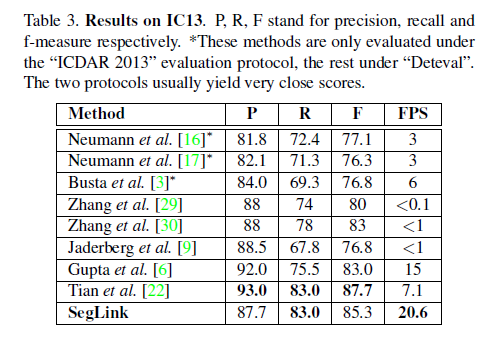

4. CRNN Architecture

For implementation details, see my other blog post on CRNN: https://blog.csdn.net/libo1004/article/details/111595054

4.1 CNN

CNN: a VGG-like CNN extracts features, which are then output as a sequence.

```python
# Map2Sequence part
# conv_output: (1, 64, 512), i.e. (H, W, C)
x = Permute((2, 3, 1), name='permute')(conv_output)  # 64*512*1
rnn_input = TimeDistributed(Flatten(), name='for_flatten_by_time')(x)  # 64*512: 64 time steps
```
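One way to see where the 64 time steps come from: if the backbone halves the height at every pooling stage but halves the width only in the first two, a 32 × 256 input ends up as a 1 × 64 feature map, one column per 4 input pixels. The pooling schedule below is an assumption for illustration, not read from this project's code.

```python
from typing import List, Tuple

# Assumed (pool_h, pool_w) per stage for a VGG-like backbone; not taken from the project.
POOLS: List[Tuple[int, int]] = [(2, 2), (2, 2), (2, 1), (2, 1), (2, 1)]


def feature_map_shape(h: int, w: int, pools: List[Tuple[int, int]] = POOLS) -> Tuple[int, int]:
    """Height and width of the feature map after each pooling stage divides them."""
    for ph, pw in pools:
        h, w = h // ph, w // pw
    return h, w


h, w = feature_map_shape(32, 256)
print(h, w)      # 1 64
print(256 // w)  # 4 input pixels per time step
```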

4.2 BiLSTM

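BiLSTM: the feature sequence is fed into a BiLSTM, which outputs a vector for each time step describing the values that may appear there; a softmax over this output gives the probability of each possible value.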

```python
y = Bidirectional(LSTM(256, kernel_initializer=initializer, return_sequences=True), merge_mode='sum', name='LSTM_1')(rnn_input)  # 64*256: forward and backward outputs summed
y = BatchNormalization(name='BN_8')(y)
y = Bidirectional(LSTM(256, kernel_initializer=initializer, return_sequences=True), name='LSTM_2')(y)  # 64*512: default merge_mode='concat'
y_pred = Dense(num_classes, activation='softmax', name='y_pred')(y)  # 64*num_classes
```
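The shapes in the comments follow from how the two directions are merged. This NumPy sketch uses random arrays as stand-ins for the LSTM outputs and assumes 11 classes (10 digits plus a CTC blank) purely for illustration; it shows `sum` keeping 256 features, `concat` doubling them to 512, and the dense softmax producing one distribution per time step.

```python
import numpy as np

rng = np.random.default_rng(0)
T, UNITS, NUM_CLASSES = 64, 256, 11  # NUM_CLASSES = 10 digits + blank (assumed)

forward = rng.standard_normal((T, UNITS))   # stand-in for the forward LSTM outputs
backward = rng.standard_normal((T, UNITS))  # stand-in for the backward LSTM outputs

merged_sum = forward + backward                               # merge_mode='sum'    -> (64, 256)
merged_concat = np.concatenate([forward, backward], axis=-1)  # merge_mode='concat' -> (64, 512)

# Dense layer + softmax: one probability distribution over the classes per time step.
W = rng.standard_normal((2 * UNITS, NUM_CLASSES)) * 0.01
logits = merged_concat @ W
logits -= logits.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
probs = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)
```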

4.3 CTC

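CTC: acts as the loss function; it computes the probability of the actual target sequence from the per-time-step probabilities, summing over all possible alignments.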
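CTC is the training loss; at inference time the per-frame probabilities still have to be turned into a string. The simplest approach is best-path (greedy) decoding: take the argmax at each frame, merge consecutive repeats, then drop blanks. The sketch assumes a digits-only alphabet with the blank as the last class, the convention used by the Keras backend CTC helpers (`ctc_batch_cost`, `ctc_decode`); the function name is hypothetical.

```python
import numpy as np

ALPHABET = "0123456789"
BLANK = len(ALPHABET)  # assumed: blank is the last class (index 10)


def ctc_greedy_decode(probs: np.ndarray, alphabet: str = ALPHABET, blank: int = BLANK) -> str:
    """Best-path decoding: argmax per frame, merge repeats, then drop blanks."""
    out = []
    prev = None
    for k in probs.argmax(axis=-1).tolist():
        # A character is emitted only when it differs from the previous frame and is not blank;
        # a blank between two equal characters is what lets "11" survive the merge.
        if k != prev and k != blank:
            out.append(alphabet[k])
        prev = k
    return "".join(out)
```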

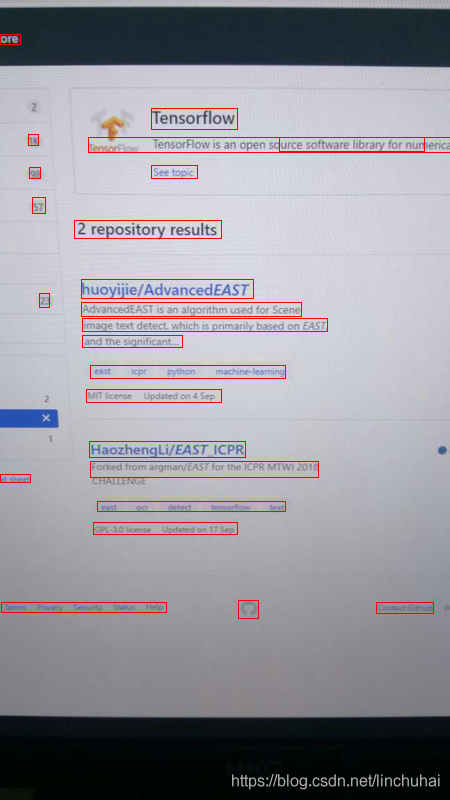
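The img_w / 4 rule from step 2 also bounds which labels CTC can align: each character needs at least one frame, and each pair of identical adjacent characters needs an extra blank frame between them. If a label needs more frames than are available, no valid alignment exists and its CTC probability is zero. A small feasibility check, with hypothetical names:

```python
def min_ctc_frames(label: str) -> int:
    """Fewest frames CTC needs: one per character plus one blank per repeated adjacent pair."""
    repeats = sum(1 for a, b in zip(label, label[1:]) if a == b)
    return len(label) + repeats


def fits(label: str, img_w: int = 256, downsample: int = 4) -> bool:
    """True if label can be aligned to img_w // downsample prediction steps."""
    return min_ctc_frames(label) <= img_w // downsample
```

With 64 steps, even a 19-digit card number made of one repeated digit needs only 37 frames.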

5. Card Number Validation

The Luhn algorithm is used to check whether a bank card number (debit or credit) is valid.

The algorithm works as follows:

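- Number the digits of the card number from right to left: the rightmost digit is position 1, the second from the right is position 2, the third is position 3, and so on.

- Traverse the digits from right to left, apply the rule in the next step to each digit t, and add up the results to get a number s.

- Per-digit rule: at an odd position, return t itself; at an even position, multiply t by 2 to get n; if n is a single digit (less than 10), return n, otherwise return the sum of its tens and units digits.

- If s is divisible by 10, the number is valid; otherwise it is invalid.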

Because the final result is taken modulo 10 to check divisibility by 10, the algorithm is also called the mod 10 algorithm.

Code:

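```python
def luhn_checksum(card_number):
    def digits_of(n):
        return [int(d) for d in str(n)]

    digits = digits_of(card_number)
    odd_digits = digits[-1::-2]   # positions 1, 3, 5, ... counted from the right
    even_digits = digits[-2::-2]  # positions 2, 4, 6, ... counted from the right
    checksum = sum(odd_digits)
    for d in even_digits:
        # Double, then add the digits of the product (e.g. 8 * 2 = 16 -> 1 + 6 = 7).
        checksum += sum(digits_of(d * 2))
    return checksum % 10


def is_luhn_valid(card_number):
    return luhn_checksum(card_number) == 0
```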

6. BIN Check

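Bank card numbers are generally 13 to 19 digits long. In China they are usually 16 or 19 digits, typically 16 for credit cards and 19 for debit cards, and they normally consist of three parts: card BIN + issuer-defined digits + check digit.

The first 6 digits identify the issuing bank or institution and are called the Bank Identification Number, or card BIN for short. If you look at the cards in your wallet, you will find that cards bearing only the UnionPay logo almost always start with 62, although there are exceptions.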
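The check digit is the Luhn digit from section 5: it is chosen so the full number passes the Luhn check. The sketch below computes it for a number without its last digit and combines it with the 13-to-19-digit length rule; the function names are hypothetical, and the length rule alone does not guarantee the number was actually issued.

```python
def luhn_check_digit(partial: str) -> str:
    """Return the digit that makes partial + digit pass the Luhn check."""
    total = 0
    # After the check digit is appended, the rightmost digit of `partial` is at position 2
    # (even), so doubling starts from it.
    for i, ch in enumerate(reversed(partial)):
        d = int(ch)
        if i % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9  # same as adding the two digits of d
        total += d
    return str((10 - total % 10) % 10)


def looks_like_card_number(number: str) -> bool:
    """13 to 19 digits whose last digit is the correct Luhn check digit."""
    return (number.isdigit() and 13 <= len(number) <= 19
            and luhn_check_digit(number[:-1]) == number[-1])
```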

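There is a pitfall here: knowing the BIN rules does not tell you the BINs of domestic banks, and the lists available online are mostly incomplete, so the list can only be extended manually over time.

I have compiled one, also incomplete, containing about 1,300 BINs, and will keep extending it.

All you need to do is slice off the first 6 digits of a valid card number and look them up.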
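A lookup along those lines might look like the sketch below. The table entries are hypothetical placeholders, not real BINs; in practice the table would be loaded from the hand-compiled list. Spaces and other separators are stripped before slicing the first 6 digits.

```python
from typing import Dict, Optional

# Hypothetical placeholder entries; replace with the real BIN list.
BIN_TABLE: Dict[str, str] = {
    "123456": "Example Bank A (debit)",
    "654321": "Example Bank B (credit)",
}


def lookup_bin(card_number: str, table: Dict[str, str] = BIN_TABLE) -> Optional[str]:
    """Return the issuer for the first 6 digits, or None if the BIN is unknown."""
    digits = "".join(ch for ch in card_number if ch.isdigit())
    if len(digits) < 6:
        return None
    return table.get(digits[:6])
```

Returning `None` for unknown BINs lets the caller distinguish "valid but unlisted" from a known issuer, which matters while the list is still incomplete.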
