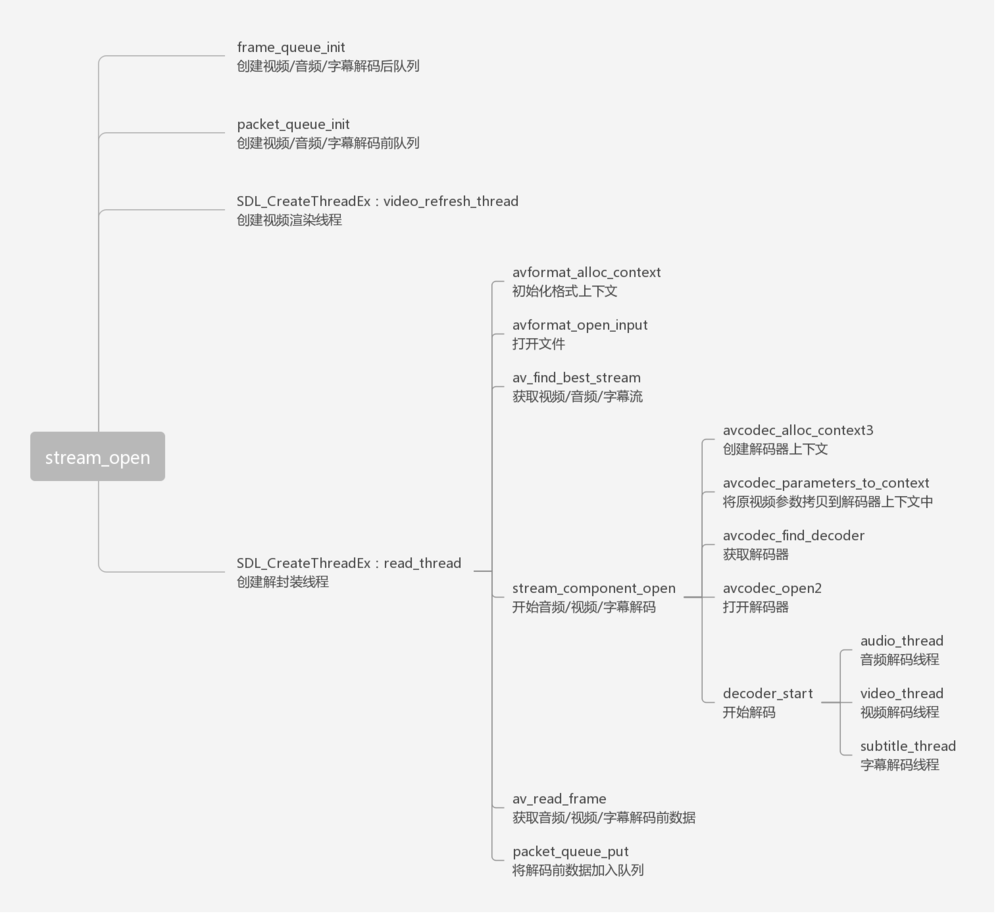

I. The ijkplayer initialization flow

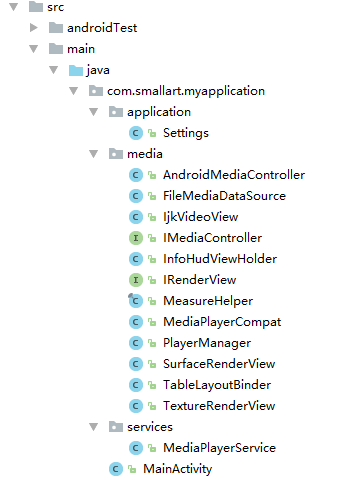

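This article analyzes the ijkplayer source code using the A4ijkplayer project. That project switches ijkplayer to a CMake build so it can be imported into Android Studio, built, and run there, which makes it easy to search the code, jump between functions, single-step debug, and trace call stacks.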

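The main job of initialization is to create the player object, IjkMediaPlayer. It has two constructors, which differ only in whether an IjkLibLoader is passed in. The default constructor passes none and falls back to the built-in loader, which uses System.loadLibrary(libName). Both constructors end up calling initPlayer(), which does four things: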

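- Load the native libraries with loadLibrariesOnce(): loads the shared libraries, registers the JNI functions dynamically, and performs some other initialization.
- Initialize native resources with initNativeOnce(): in the ijkplayer source this method does essentially nothing.
- Create the EventHandler: a Java-layer Handler that receives and processes the message events called back from the native layer.
- Set up native resources with native_setup(): creates the native IjkMediaPlayer instance, creates the message queue and assigns msg_loop, creates the video rendering object SDL_Vout, and creates the platform-specific IJKFF_Pipeline (covering video decoding and audio output).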

The corresponding code in IjkMediaPlayer.java:

    public IjkMediaPlayer() {
        this(sLocalLibLoader);
    }

    public IjkMediaPlayer(IjkLibLoader libLoader) {
        initPlayer(libLoader);
    }

    private void initPlayer(IjkLibLoader libLoader) {
        loadLibrariesOnce(libLoader);
        initNativeOnce();

        Looper looper;
        if ((looper = Looper.myLooper()) != null) {
            mEventHandler = new EventHandler(this, looper);
        } else if ((looper = Looper.getMainLooper()) != null) {
            mEventHandler = new EventHandler(this, looper);
        } else {
            mEventHandler = null;
        }

        native_setup(new WeakReference<IjkMediaPlayer>(this));
    }

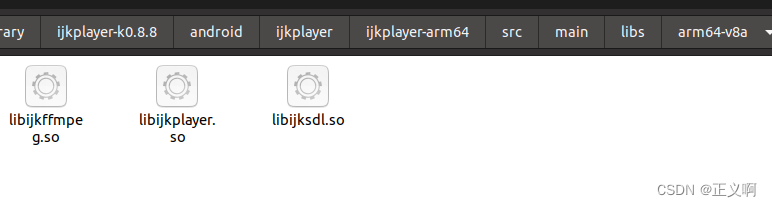

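1. loadLibrariesOnce()

This is a static method whose loading work runs only once during the lifetime of the process; it loads the native shared libraries the player depends on. The ijkplayer source loads three libraries: ijkffmpeg, ijksdl, and ijkplayer. To make debugging and following the ijkplayer source easier, I switched to a CMake build and, in CMakeLists.txt, compiled the ijksdl and ijkplayer sources into a single shared library, a4ijkplayer. The calls are therefore: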

    private static volatile boolean mIsLibLoaded = false;

    public static void loadLibrariesOnce(IjkLibLoader libLoader) {
        synchronized (IjkMediaPlayer.class) {
            if (!mIsLibLoaded) {
                if (libLoader == null)
                    libLoader = sLocalLibLoader;

                libLoader.loadLibrary("ijkffmpeg");
                // A4ijkplayer merges ijksdl and ijkplayer into one library
                libLoader.loadLibrary("a4ijkplayer");
                // libLoader.loadLibrary("ijksdl");
                // libLoader.loadLibrary("ijkplayer");

                mIsLibLoaded = true;
            }
        }
    }
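The Java version gets its once-per-process guarantee from a synchronized block plus a static flag. For comparison, here is a minimal C sketch of the same guarantee using POSIX pthread_once(); load_libraries(), load_libraries_once() and the counter are hypothetical stand-ins for the real loading work, not ijkplayer code.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical stand-in for the real work (loading ijkffmpeg and a4ijkplayer). */
static int g_load_count = 0;

static void load_libraries(void)
{
    g_load_count++;
}

static pthread_once_t g_load_once = PTHREAD_ONCE_INIT;

/* Safe to call from any thread, any number of times: load_libraries()
 * runs exactly once per process, and pthread_once() does not return
 * to any caller until that single run has completed. */
void load_libraries_once(void)
{
    int rc = pthread_once(&g_load_once, load_libraries);
    assert(rc == 0);
    (void)rc;  /* keep rc "used" when NDEBUG disables assert */
}

int load_count(void)
{
    return g_load_count;
}
```

One difference worth noting: if loadLibrary() throws in the Java version, mIsLibLoaded stays false and a later call retries, whereas pthread_once() considers the routine done as soon as it returns.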

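When loadLibrary loads a shared library, the VM calls that library's JNI_OnLoad() if the library defines one; the matching JNI_OnUnload() is called when the library is unloaded.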

1.1 Calling libLoader.loadLibrary("ijksdl")

When libijksdl.so is loaded, JNI_OnLoad() in ijkmedia/ijksdl/android/ijksdl_android_jni.c is called.

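Note: in the A4ijkplayer project, the ijksdl and ijkplayer sources are compiled into the single library a4ijkplayer, so libLoader.loadLibrary("ijksdl") is never called and the JNI_OnLoad() in ijksdl_android_jni.c never runs (and one library cannot define two JNI_OnLoad symbols anyway). To solve this, I renamed it to SDL_JNI_OnLoad() and call the renamed SDL_JNI_OnLoad() from JNI_OnLoad() in ijkplayer_jni.c, so the work done in the SDL library's original JNI_OnLoad() still happens. The change is in this commit.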

    JNIEXPORT jint JNICALL SDL_JNI_OnLoad(JavaVM *vm, void *reserved)
    {
        int retval;
        JNIEnv* env = NULL;

        g_jvm = vm;
        if ((*vm)->GetEnv(vm, (void**) &env, JNI_VERSION_1_4) != JNI_OK) {
            return -1;
        }

        retval = J4A_LoadAll__catchAll(env);
        JNI_CHECK_RET(retval == 0, env, NULL, NULL, -1);

        return JNI_VERSION_1_4;
    }

1.2 Calling libLoader.loadLibrary("a4ijkplayer")

The original ijkplayer code calls libLoader.loadLibrary("ijkplayer"); the A4ijkplayer project instead loads the merged liba4ijkplayer.so, which then triggers JNI_OnLoad() in ijkmedia/ijkplayer/android/ijkplayer_jni.c:

    JNIEXPORT jint JNI_OnLoad(JavaVM *vm, void *reserved)
    {
        JNIEnv* env = NULL;

        g_jvm = vm;
        if ((*vm)->GetEnv(vm, (void**) &env, JNI_VERSION_1_4) != JNI_OK) {
            return -1;
        }
        assert(env != NULL);

        // sdl and ijkplayer are merged into one .so, so SDL's original
        // JNI_OnLoad is never called by the VM; call it explicitly here
        jint result = SDL_JNI_OnLoad(vm, reserved);
        if (result < 0) {
            return result;
        }

        pthread_mutex_init(&g_clazz.mutex, NULL);

        // FindClass returns LocalReference
        IJK_FIND_JAVA_CLASS(env, g_clazz.clazz, JNI_CLASS_IJKPLAYER);
        (*env)->RegisterNatives(env, g_clazz.clazz, g_methods, NELEM(g_methods));

        ijkmp_global_init();
        ijkmp_global_set_inject_callback(inject_callback);

        FFmpegApi_global_init(env);

        return JNI_VERSION_1_4;
    }

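Its main job is to register the JNI functions dynamically, i.e. to map the Java native methods to their C implementations via RegisterNatives, plus some other initialization.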

    static JNINativeMethod g_methods[] = {
        // ...
        { "_prepareAsync",   "()V",                   (void *) IjkMediaPlayer_prepareAsync },
        { "_start",          "()V",                   (void *) IjkMediaPlayer_start },
        // ...
        { "native_init",     "()V",                   (void *) IjkMediaPlayer_native_init },
        { "native_setup",    "(Ljava/lang/Object;)V", (void *) IjkMediaPlayer_native_setup },
        { "native_finalize", "()V",                   (void *) IjkMediaPlayer_native_finalize },
        // ...
    };
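Conceptually, each g_methods entry pairs a Java method name and its JNI type signature with a C function pointer, and RegisterNatives() binds those entries to the class. The C sketch below mimics only that name-plus-signature lookup, without jni.h; NativeMethod, find_native() and the fake_* functions are hypothetical, and the sketch says nothing about how the VM stores registered methods internally.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Same shape as JNI's JNINativeMethod {name, signature, fnPtr},
 * redefined here so the sketch builds without jni.h. */
typedef struct {
    const char *name;
    const char *signature;
    void *fnPtr;
} NativeMethod;

/* Hypothetical stand-ins for IjkMediaPlayer_start / IjkMediaPlayer_native_setup. */
static void fake_start(void) {}
static void fake_native_setup(void) {}

#define NELEM(a) (sizeof(a) / sizeof((a)[0]))

static const NativeMethod g_methods[] = {
    { "_start",       "()V",                   (void *) fake_start },
    { "native_setup", "(Ljava/lang/Object;)V", (void *) fake_native_setup },
};

/* Both the name and the signature must match, which is what lets
 * overloaded Java methods map to different C functions. */
void *find_native(const char *name, const char *signature)
{
    for (size_t i = 0; i < NELEM(g_methods); i++) {
        if (strcmp(g_methods[i].name, name) == 0 &&
            strcmp(g_methods[i].signature, signature) == 0)
            return g_methods[i].fnPtr;
    }
    return NULL;
}
```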

2. initNativeOnce()

This is also a static method; it calls native_init().

    private static volatile boolean mIsNativeInitialized = false;

    private static void initNativeOnce() {
        synchronized (IjkMediaPlayer.class) {
            if (!mIsNativeInitialized) {
                native_init();
                mIsNativeInitialized = true;
            }
        }
    }

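This ends up in IjkMediaPlayer_native_init() in ijkmedia/ijkplayer/android/ijkplayer_jni.c.

In the ijkplayer source this method does nothing beyond emitting a trace log. If you modify the source and need some global initialization, this method is a good place for it.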

    // entry in the static JNINativeMethod g_methods[] = {...} array
    { "native_init", "()V", (void *) IjkMediaPlayer_native_init },

    static void
    IjkMediaPlayer_native_init(JNIEnv *env)
    {
        MPTRACE("%s\n", __func__);
    }

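3. Creating the EventHandler

A Java-layer Handler is created to receive the messages called back from the native layer, such as "prepared", "playback complete", "seek complete", and errors. By default, messages are handled on the thread that called the IjkMediaPlayer constructor; if that thread has no Looper, they are handled on the main thread.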

    Looper looper;
    if ((looper = Looper.myLooper()) != null) {
        mEventHandler = new EventHandler(this, looper);
    } else if ((looper = Looper.getMainLooper()) != null) {
        mEventHandler = new EventHandler(this, looper);
    } else {
        mEventHandler = null;
    }

When the native layer reports an event, it calls back into the Java method postEventFromNative(), which sends a message through the EventHandler to the handling thread so that the native side is not blocked. In IjkMediaPlayer.java:

    @CalledByNative
    private static void postEventFromNative(Object weakThiz, int what,
            int arg1, int arg2, Object obj) {
        // ...
        if (mp.mEventHandler != null) {
            Message m = mp.mEventHandler.obtainMessage(what, arg1, arg2, obj);
            mp.mEventHandler.sendMessage(m);
        }
    }

The event is then processed in EventHandler's handleMessage():

    private static class EventHandler extends Handler {
        // ...
        @Override
        public void handleMessage(Message msg) {
            // ...
            switch (msg.what) {
                case MEDIA_PREPARED:
                    player.notifyOnPrepared();
                    return;
                case MEDIA_PLAYBACK_COMPLETE:
                    player.stayAwake(false);
                    player.notifyOnCompletion();
                    return;
                // ...
            }
        }
    }

Finally, the event is delivered to the listeners registered by the application (in AbstractMediaPlayer.java):

    protected final void notifyOnPrepared() {
        if (mOnPreparedListener != null)
            mOnPreparedListener.onPrepared(this);
    }

    protected final void notifyOnCompletion() {
        if (mOnCompletionListener != null)
            mOnCompletionListener.onCompletion(this);
    }
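Stripped of the Android plumbing, the path handleMessage() → notifyOnXxx() → listener is a switch on the message code plus null-checked callbacks. The C sketch below models only that dispatch step (the thread hop through the Handler is left out); the Player struct, handle_message() and count_prepared() are hypothetical, and the message codes are illustrative values.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative message codes; the sketch only needs them to be distinct. */
enum { MEDIA_PREPARED = 1, MEDIA_PLAYBACK_COMPLETE = 2 };

typedef struct Player Player;

/* Listener slots, playing the role of mOnPreparedListener / mOnCompletionListener. */
struct Player {
    void (*on_prepared)(Player *p);
    void (*on_completion)(Player *p);
    int prepared_calls;
    int completion_calls;
};

/* Like notifyOnPrepared(): call out only if the app registered a listener. */
static void notify_on_prepared(Player *p)
{
    if (p->on_prepared != NULL)
        p->on_prepared(p);
}

static void notify_on_completion(Player *p)
{
    if (p->on_completion != NULL)
        p->on_completion(p);
}

/* Like handleMessage(): route a message code to its notifier.
 * Returns 0 when the code is known, -1 otherwise. */
int handle_message(Player *p, int what)
{
    switch (what) {
    case MEDIA_PREPARED:
        notify_on_prepared(p);
        return 0;
    case MEDIA_PLAYBACK_COMPLETE:
        notify_on_completion(p);
        return 0;
    default:
        return -1;
    }
}

/* Sample listener: just counts how often it was invoked. */
void count_prepared(Player *p)
{
    p->prepared_calls++;
}
```

An unregistered listener is simply skipped, which is why the application only has to set the callbacks it cares about.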

4. native_setup()

This ends up in IjkMediaPlayer_native_setup() in ijkmedia/ijkplayer/android/ijkplayer_jni.c.

    // entry in the static JNINativeMethod g_methods[] = {...} array
    { "native_setup", "(Ljava/lang/Object;)V", (void *) IjkMediaPlayer_native_setup },

    static void
    IjkMediaPlayer_native_setup(JNIEnv *env, jobject thiz, jobject weak_this)
    {
        MPTRACE("%s\n", __func__);
        IjkMediaPlayer *mp = ijkmp_android_create(message_loop);
        JNI_CHECK_GOTO(mp, env, "java/lang/OutOfMemoryError", "mpjni: native_setup: ijkmp_create() failed", LABEL_RETURN);

        jni_set_media_player(env, thiz, mp);
        ijkmp_set_weak_thiz(mp, (*env)->NewGlobalRef(env, weak_this));
        ijkmp_set_inject_opaque(mp, ijkmp_get_weak_thiz(mp));
        ijkmp_set_ijkio_inject_opaque(mp, ijkmp_get_weak_thiz(mp));
        ijkmp_android_set_mediacodec_select_callback(mp, mediacodec_select_callback, ijkmp_get_weak_thiz(mp));

    LABEL_RETURN:
        ijkmp_dec_ref_p(&mp);
    }

4.1 ijkmp_android_create()

You can jump to it in Android Studio via my A4ijkplayer project; it lives in ijkmedia/ijkplayer/android/ijkplayer_android.c.

    IjkMediaPlayer *ijkmp_android_create(int(*msg_loop)(void*))
    {
        IjkMediaPlayer *mp = ijkmp_create(msg_loop);
        if (!mp)
            goto fail;

        mp->ffplayer->vout = SDL_VoutAndroid_CreateForAndroidSurface();
        if (!mp->ffplayer->vout)
            goto fail;

        mp->ffplayer->pipeline = ffpipeline_create_from_android(mp->ffplayer);
        if (!mp->ffplayer->pipeline)
            goto fail;

        ffpipeline_set_vout(mp->ffplayer->pipeline, mp->ffplayer->vout);

        return mp;

    fail:
        ijkmp_dec_ref_p(&mp);
        return NULL;
    }

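As shown above, ijkmp_android_create() does four things:

- ijkmp_create(): creates the IjkMediaPlayer struct instance
- SDL_VoutAndroid_CreateForAndroidSurface(): creates the video rendering object SDL_Vout
- ffpipeline_create_from_android(): creates the platform-specific IJKFF_Pipeline, which covers video decoding and audio output
- ffpipeline_set_vout(): associates the pipeline with the video output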
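All four steps share one error-handling pattern: each creation is checked, and any failure jumps to a single fail: label that releases whatever has been built so far. Below is a minimal C sketch of that pattern with hypothetical types; the fail_step parameter exists only to simulate allocation failures, and plain free() stands in for ijkplayer's reference counting (ijkmp_dec_ref_p()).

```c
#include <assert.h>
#include <stdlib.h>

typedef struct { int dummy; } Vout;
typedef struct { Vout *weak_vout; } Pipeline;   /* borrows the vout, does not own it */
typedef struct {
    Vout *vout;
    Pipeline *pipeline;
} Player;

/* Releases whatever parts exist; calloc() zeroed the members, so free(NULL) is safe. */
static void player_free(Player *mp)
{
    if (!mp)
        return;
    free(mp->pipeline);
    free(mp->vout);
    free(mp);
}

/* fail_step simulates a failure: 0 = none, 1 = player, 2 = vout, 3 = pipeline. */
Player *player_create(int fail_step)
{
    Player *mp = fail_step == 1 ? NULL : calloc(1, sizeof(*mp));
    if (!mp)
        goto fail;

    mp->vout = fail_step == 2 ? NULL : calloc(1, sizeof(*mp->vout));
    if (!mp->vout)
        goto fail;

    mp->pipeline = fail_step == 3 ? NULL : calloc(1, sizeof(*mp->pipeline));
    if (!mp->pipeline)
        goto fail;

    /* like ffpipeline_set_vout(): hand the pipeline a non-owning pointer */
    mp->pipeline->weak_vout = mp->vout;
    return mp;

fail:
    /* single exit path for every failure above */
    player_free(mp);
    return NULL;
}

void player_destroy(Player *mp)
{
    player_free(mp);
}
```

Keeping one cleanup path means a new creation step only needs one extra check and `goto fail`, instead of repeating the teardown at every return.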

4.1.1 ijkmp_create()

This function creates a native-layer IjkMediaPlayer struct instance; the struct is defined in ijkmedia/ijkplayer/ijkplayer_internal.h.

    IjkMediaPlayer *ijkmp_create(int (*msg_loop)(void*))
    {
        IjkMediaPlayer *mp = (IjkMediaPlayer *) mallocz(sizeof(IjkMediaPlayer));
        // .....
        mp->ffplayer = ffp_create();
        // .....
        mp->msg_loop = msg_loop;
        // .....
        return mp;
    }

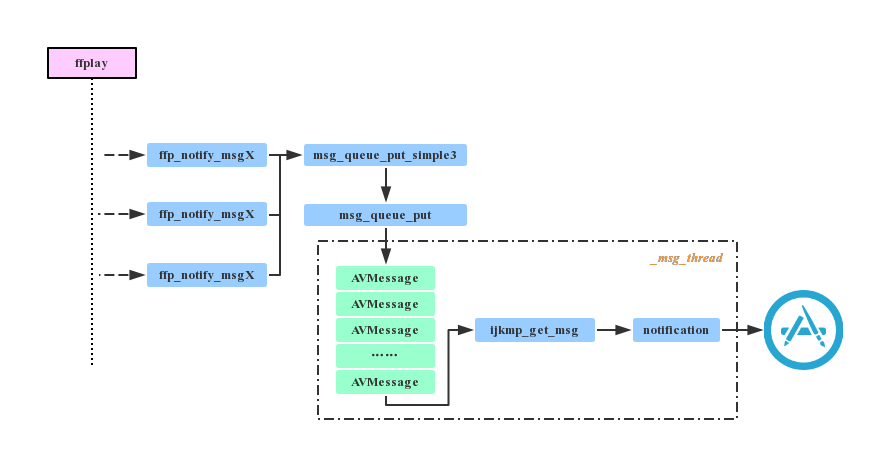

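It creates the FFPlayer via ffp_create() and stores the message handling function msg_loop, which here is message_loop(). As shown below, message_loop() attaches the native thread to the JVM (inside SDL_JNI_SetupThreadEnv) to obtain a JNIEnv, so that native code can call Java methods.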

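Internally it calls message_loop_n(), which repeatedly calls ijkmp_get_msg() to fetch the next event and passes it to the Java layer with post_event().

ijkmp_get_msg() takes a message event from the message queue. Called with its last argument set to 1 (blocking mode), it waits while the queue is empty and returns -1 once the queue has been aborted, which ends the loop.

post_event() calls back through JNI into the static postEventFromNative() method of the Java IjkMediaPlayer class, passing the weak reference, and the event eventually reaches EventHandler's handleMessage().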

    static int message_loop(void *arg)
    {
        // ...
        JNIEnv *env = NULL;
        if (JNI_OK != SDL_JNI_SetupThreadEnv(&env)) {
            return -1;
        }

        IjkMediaPlayer *mp = (IjkMediaPlayer*) arg;
        message_loop_n(env, mp);
        // ...
        return 0;
    }

    static void message_loop_n(JNIEnv *env, IjkMediaPlayer *mp)
    {
        jobject weak_thiz = (jobject) ijkmp_get_weak_thiz(mp);
        while (1) {
            AVMessage msg;
            int retval = ijkmp_get_msg(mp, &msg, 1);  // 1 = block while the queue is empty
            if (retval < 0)
                break;

            switch (msg.what) {
            // ...
            case FFP_MSG_ERROR:
                post_event(env, weak_thiz, MEDIA_ERROR, MEDIA_ERROR_IJK_PLAYER, msg.arg1);
                break;
            case FFP_MSG_PREPARED:
                post_event(env, weak_thiz, MEDIA_PREPARED, 0, 0);
                break;
            // ...
            }
        }
    }

    inline static void post_event(JNIEnv *env, jobject weak_this, int what, int arg1, int arg2)
    {
        J4AC_IjkMediaPlayer__postEventFromNative(env, weak_this, what, arg1, arg2, NULL);
    }
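The message loop is a classic producer/consumer arrangement: player threads post messages, and the msg_loop thread blocks in ijkmp_get_msg() until a message arrives or the queue is aborted. Below is a minimal blocking queue in C with pthreads that reproduces the behavior described above (block while empty, return -1 after abort). BQueue, BMsg and the bq_* functions are hypothetical and do not reproduce ijkplayer's own queue implementation.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

typedef struct BMsg {
    int what, arg1, arg2;
    struct BMsg *next;
} BMsg;

typedef struct {
    BMsg *first, *last;
    int abort_request;
    pthread_mutex_t mutex;
    pthread_cond_t cond;
} BQueue;

void bq_init(BQueue *q)
{
    q->first = q->last = NULL;
    q->abort_request = 0;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

/* Producer side: append a message and wake one waiting consumer. */
int bq_put(BQueue *q, int what, int arg1, int arg2)
{
    BMsg *m = malloc(sizeof(*m));
    if (!m)
        return -1;
    m->what = what; m->arg1 = arg1; m->arg2 = arg2; m->next = NULL;

    pthread_mutex_lock(&q->mutex);
    if (q->last)
        q->last->next = m;
    else
        q->first = m;
    q->last = m;
    pthread_cond_signal(&q->cond);
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* Consumer side: 1 = got a message, 0 = empty (non-blocking only), -1 = aborted. */
int bq_get(BQueue *q, BMsg *out, int block)
{
    int ret;
    pthread_mutex_lock(&q->mutex);
    for (;;) {
        if (q->abort_request) { ret = -1; break; }
        BMsg *m = q->first;
        if (m) {
            q->first = m->next;
            if (!q->first)
                q->last = NULL;
            *out = *m;
            out->next = NULL;
            free(m);
            ret = 1;
            break;
        }
        if (!block) { ret = 0; break; }
        /* Sleep until bq_put() or bq_abort() signals; the loop re-checks state. */
        pthread_cond_wait(&q->cond, &q->mutex);
    }
    pthread_mutex_unlock(&q->mutex);
    return ret;
}

/* Wake every blocked consumer and make later bq_get() calls return -1. */
void bq_abort(BQueue *q)
{
    pthread_mutex_lock(&q->mutex);
    q->abort_request = 1;
    pthread_cond_broadcast(&q->cond);
    pthread_mutex_unlock(&q->mutex);
}

/* Free messages still queued and release the synchronization primitives. */
void bq_destroy(BQueue *q)
{
    BMsg *m = q->first;
    while (m) {
        BMsg *next = m->next;
        free(m);
        m = next;
    }
    q->first = q->last = NULL;
    pthread_mutex_destroy(&q->mutex);
    pthread_cond_destroy(&q->cond);
}
```

A consumer thread would call `bq_get(q, &m, 1)` in a loop and stop on -1, the same way message_loop_n() breaks when ijkmp_get_msg() returns a negative value. Because this sketch checks the abort flag first, messages still queued at abort time are dropped rather than delivered.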

4.1.2 SDL_VoutAndroid_CreateForAndroidSurface()

This function creates SDL_Vout, the video rendering object for the Android platform:

    SDL_Vout *SDL_VoutAndroid_CreateForAndroidSurface()
    {
        return SDL_VoutAndroid_CreateForANativeWindow();
    }

    SDL_Vout *SDL_VoutAndroid_CreateForANativeWindow()
    {
        SDL_Vout *vout = SDL_Vout_CreateInternal(sizeof(SDL_Vout_Opaque));
        if (!vout)
            return NULL;

        SDL_Vout_Opaque *opaque = vout->opaque;
        opaque->native_window = NULL;
        if (ISDL_Array__init(&opaque->overlay_manager, 32))
            goto fail;
        if (ISDL_Array__init(&opaque->overlay_pool, 32))
            goto fail;

        opaque->egl = IJK_EGL_create();
        if (!opaque->egl)
            goto fail;

        vout->opaque_class    = &g_nativewindow_class;
        vout->create_overlay  = func_create_overlay;
        vout->free_l          = func_free_l;
        vout->display_overlay = func_display_overlay;

        return vout;
    fail:
        func_free_l(vout);
        return NULL;
    }

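This only creates the SDL_Vout struct together with its memory and function pointers. The GL- and Surface-related work happens later in the flow, either by calling the function pointers assigned here or by filling in SDL_Vout members. For example, the function above sets opaque->native_window to NULL; native_window is actually assigned when the Java layer calls setSurface to set the display window, which eventually reaches SDL_VoutAndroid_SetNativeWindow_l() to associate the upper-layer Surface with opaque->native_window.

func_create_overlay() is shown below. Here the function pointer is only assigned to vout->create_overlay; the function is actually called at render time, when ff_ffplay.c calls SDL_Vout_CreateOverlay(), which invokes vout->create_overlay and thus reaches func_create_overlay().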

    static SDL_VoutOverlay *func_create_overlay_l(int width, int height, int frame_format, SDL_Vout *vout)
    {
        switch (frame_format) {
        case IJK_AV_PIX_FMT__ANDROID_MEDIACODEC:
            // hardware decoding
            return SDL_VoutAMediaCodec_CreateOverlay(width, height, vout);
        default:
            // software decoding
            return SDL_VoutFFmpeg_CreateOverlay(width, height, frame_format, vout);
        }
    }

    static SDL_VoutOverlay *func_create_overlay(int width, int height, int frame_format, SDL_Vout *vout)
    {
        SDL_LockMutex(vout->mutex);
        SDL_VoutOverlay *overlay = func_create_overlay_l(width, height, frame_format, vout);
        SDL_UnlockMutex(vout->mutex);
        return overlay;
    }

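func_display_overlay() works the same way: here the function pointer is only assigned to vout->display_overlay, and the function is actually called from video_image_display2() in ff_ffplay.c, which calls SDL_VoutDisplayYUVOverlay(), which in turn invokes vout->display_overlay and thus reaches func_display_overlay().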
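Taken together, SDL_Vout behaves like a C-style interface: the constructor only stores function pointers (leaving native_window NULL), generic wrappers call through them later, and, as the func_create_overlay()/func_create_overlay_l() pair suggests, the `_l` suffix marks the variant that expects the caller to hold vout->mutex. A minimal C sketch of that structure; Vout, Overlay, vout_create(), vout_create_overlay() and vout_set_native_window() are hypothetical, and the format codes are illustrative.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

enum { FMT_HW_MEDIACODEC = 1, FMT_SW_YUV420P = 2 };  /* illustrative format codes */
enum { OVERLAY_HW = 1, OVERLAY_SW = 2 };

typedef struct Overlay { int kind, width, height; } Overlay;

typedef struct Vout Vout;
struct Vout {
    pthread_mutex_t mutex;
    void *native_window;  /* NULL until a surface is attached */
    Overlay *(*create_overlay)(int w, int h, int fmt, Vout *vout);
};

/* "_l": the caller must already hold vout->mutex. */
static Overlay *func_create_overlay_l(int w, int h, int fmt, Vout *vout)
{
    (void)vout;
    Overlay *o = malloc(sizeof(*o));
    if (!o)
        return NULL;
    /* hardware frames and software frames get different overlay kinds */
    o->kind = (fmt == FMT_HW_MEDIACODEC) ? OVERLAY_HW : OVERLAY_SW;
    o->width = w;
    o->height = h;
    return o;
}

/* Variant stored in the function pointer: takes the lock, then calls _l. */
static Overlay *func_create_overlay(int w, int h, int fmt, Vout *vout)
{
    pthread_mutex_lock(&vout->mutex);
    Overlay *o = func_create_overlay_l(w, h, fmt, vout);
    pthread_mutex_unlock(&vout->mutex);
    return o;
}

/* Constructor: allocates and wires up function pointers, but calls none of them. */
Vout *vout_create(void)
{
    Vout *vout = calloc(1, sizeof(*vout));
    if (!vout)
        return NULL;
    pthread_mutex_init(&vout->mutex, NULL);
    vout->native_window = NULL;
    vout->create_overlay = func_create_overlay;
    return vout;
}

/* Generic wrapper that dispatches through the stored pointer. */
Overlay *vout_create_overlay(Vout *vout, int w, int h, int fmt)
{
    return vout->create_overlay ? vout->create_overlay(w, h, fmt, vout) : NULL;
}

/* Late binding of the window once a surface becomes available. */
void vout_set_native_window(Vout *vout, void *window)
{
    pthread_mutex_lock(&vout->mutex);
    vout->native_window = window;
    pthread_mutex_unlock(&vout->mutex);
}

void vout_free(Vout *vout)
{
    pthread_mutex_destroy(&vout->mutex);
    free(vout);
}
```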

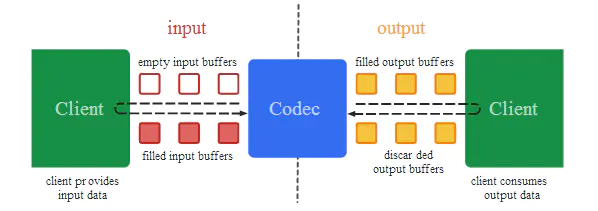

4.1.3 ffpipeline_create_from_android()

This function creates the platform-specific IJKFF_Pipeline, which covers video decoding and audio output. It only sets up the pipeline's function pointers:

    IJKFF_Pipeline *ffpipeline_create_from_android(FFPlayer *ffp)
    {
        ALOGD("ffpipeline_create_from_android()\n");
        IJKFF_Pipeline *pipeline = ffpipeline_alloc(&g_pipeline_class, sizeof(IJKFF_Pipeline_Opaque));
        if (!pipeline)
            return pipeline;

        IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
        opaque->ffp           = ffp;
        opaque->surface_mutex = SDL_CreateMutex();
        opaque->left_volume   = 1.0f;
        opaque->right_volume  = 1.0f;
        if (!opaque->surface_mutex) {
            ALOGE("ffpipeline-android:create SDL_CreateMutex failed\n");
            goto fail;
        }

        pipeline->func_destroy              = func_destroy;
        pipeline->func_open_video_decoder   = func_open_video_decoder;
        pipeline->func_open_audio_output    = func_open_audio_output;
        pipeline->func_init_video_decoder   = func_init_video_decoder;
        pipeline->func_config_video_decoder = func_config_video_decoder;

        return pipeline;
    fail:
        ffpipeline_free_p(&pipeline);
        return NULL;
    }

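func_open_video_decoder: creates the video decoder. It is called later in the flow and, depending on the options, creates either a hardware or a software decoder. In both cases it returns an IJKFF_Pipenode, which can be regarded as the abstraction of a decoder.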

    static IJKFF_Pipenode *func_open_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
        IJKFF_Pipenode        *node   = NULL;

        if (ffp->mediacodec_all_videos || ffp->mediacodec_avc || ffp->mediacodec_hevc || ffp->mediacodec_mpeg2)
            // hardware video decoding
            node = ffpipenode_create_video_decoder_from_android_mediacodec(ffp, pipeline, opaque->weak_vout);
        if (!node) {
            // software video decoding (also the fallback when MediaCodec is not created)
            node = ffpipenode_create_video_decoder_from_ffplay(ffp);
        }

        return node;
    }
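The selection logic is "try hardware first, fall back to software". A C sketch of just that decision, with hypothetical types; the hw_create_fails flag simulates ffpipenode_create_video_decoder_from_android_mediacodec() returning NULL for whatever reason.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef enum { DECODER_NONE, DECODER_MEDIACODEC, DECODER_FFMPEG } DecoderKind;

typedef struct {
    bool mediacodec_all_videos, mediacodec_avc, mediacodec_hevc, mediacodec_mpeg2;
    bool hw_create_fails;   /* test hook: simulate the MediaCodec path returning NULL */
} Options;

static DecoderKind create_mediacodec(const Options *o)
{
    return o->hw_create_fails ? DECODER_NONE : DECODER_MEDIACODEC;
}

static DecoderKind create_ffmpeg(void)
{
    return DECODER_FFMPEG;
}

DecoderKind open_video_decoder(const Options *o)
{
    DecoderKind node = DECODER_NONE;
    /* any MediaCodec option enables the hardware attempt */
    if (o->mediacodec_all_videos || o->mediacodec_avc ||
        o->mediacodec_hevc || o->mediacodec_mpeg2)
        node = create_mediacodec(o);
    /* software decoding covers both "not requested" and "hardware failed" */
    if (node == DECODER_NONE)
        node = create_ffmpeg();
    return node;
}
```

Because the fallback is keyed on the result rather than on the options, a device without a usable MediaCodec decoder still plays the stream, just with software decoding.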

The video decoder is actually opened during the prepareAsync() stage, just before the video decoding thread is started:

    static int stream_component_open(FFPlayer *ffp, int stream_index)
    {
        // ...
        case AVMEDIA_TYPE_VIDEO:
            // initialize the video decoder
            decoder_init(&is->viddec, avctx, &is->videoq, is->continue_read_thread);
            ffp->node_vdec = ffpipeline_open_video_decoder(ffp->pipeline, ffp);
            // start the video decoding thread
            if ((ret = decoder_start(&is->viddec, video_thread, ffp, "ff_video_dec")) < 0)
                goto out;
        // ...
    }

    IJKFF_Pipenode* ffpipeline_open_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        return pipeline->func_open_video_decoder(pipeline, ffp);
    }

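func_open_audio_output: creates the audio output. It is called later in the flow and, depending on the options, creates either an AudioTrack or an OpenSL ES output for audio playback.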

    static SDL_Aout *func_open_audio_output(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        SDL_Aout *aout = NULL;
        if (ffp->opensles) {
            // create an OpenSL ES output
            aout = SDL_AoutAndroid_CreateForOpenSLES();
        } else {
            // create an AudioTrack output
            aout = SDL_AoutAndroid_CreateForAudioTrack();
        }
        if (aout)
            SDL_AoutSetStereoVolume(aout, pipeline->opaque->left_volume, pipeline->opaque->right_volume);
        return aout;
    }

func_init_video_decoder: initializes the video decoder; it only has an effect with hardware decoding.

    static IJKFF_Pipenode *func_init_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
        IJKFF_Pipenode        *node   = NULL;

        if (ffp->mediacodec_all_videos || ffp->mediacodec_avc || ffp->mediacodec_hevc || ffp->mediacodec_mpeg2)
            node = ffpipenode_init_decoder_from_android_mediacodec(ffp, pipeline, opaque->weak_vout);

        return node;
    }

func_config_video_decoder: configures the video decoder; it only has an effect with hardware decoding.

    static int func_config_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
        int                    ret    = 0;

        if (ffp->node_vdec) {
            ret = ffpipenode_config_from_android_mediacodec(ffp, pipeline, opaque->weak_vout, ffp->node_vdec);
        }

        return ret;
    }

4.1.4 ffpipeline_set_vout()

This function associates the pipeline with the video output by storing the vout in the pipeline's opaque->weak_vout:

    void ffpipeline_set_vout(IJKFF_Pipeline* pipeline, SDL_Vout *vout)
    {
        if (!check_ffpipeline(pipeline, __func__))
            return;

        IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
        opaque->weak_vout = vout;
    }

4.2 jni_set_media_player()

Saves the native IjkMediaPlayer object into the Java layer, i.e. stores the native IjkMediaPlayer pointer in the mNativeMediaPlayer field of the Java IjkMediaPlayer, taking a reference on it with ijkmp_inc_ref().

    static IjkMediaPlayer *jni_set_media_player(JNIEnv* env, jobject thiz, IjkMediaPlayer *mp)
    {
        // ...
        IjkMediaPlayer *old = (IjkMediaPlayer*) (intptr_t) J4AC_IjkMediaPlayer__mNativeMediaPlayer__get__catchAll(env, thiz);
        if (mp) {
            ijkmp_inc_ref(mp);
        }
        J4AC_IjkMediaPlayer__mNativeMediaPlayer__set__catchAll(env, thiz, (intptr_t) mp);
        // ...
        return old;
    }

    public final class IjkMediaPlayer extends AbstractMediaPlayer {
        @AccessedByNative
        private long mNativeMediaPlayer;
    }
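Combined with the ijkmp_dec_ref_p(&mp) at LABEL_RETURN in native_setup(), this is a reference-count handoff: the local reference from creation is dropped, and the reference taken here for the Java field keeps the player alive. A C sketch of that bookkeeping, assuming creation starts the count at 1 (the ijkmp_create() excerpt above elides that part); all names are hypothetical, and releasing the field's previous player inside set_media_player() is an assumption of this sketch.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

typedef struct { int ref_count; } Player;

/* Stands in for the Java field `private long mNativeMediaPlayer`. */
typedef struct { int64_t mNativeMediaPlayer; } JavaPlayer;

static int g_freed = 0;   /* lets the usage example observe the final free */

/* Assumption: a freshly created player already holds one reference. */
Player *player_create(void)
{
    Player *mp = calloc(1, sizeof(*mp));
    if (mp)
        mp->ref_count = 1;
    return mp;
}

void player_inc_ref(Player *mp)
{
    mp->ref_count++;
}

/* Like ijkmp_dec_ref_p(): drop one reference, free at zero, clear the caller's pointer. */
void player_dec_ref_p(Player **pmp)
{
    Player *mp = *pmp;
    if (!mp)
        return;
    if (--mp->ref_count == 0) {
        free(mp);
        g_freed++;
    }
    *pmp = NULL;
}

/* Like jni_set_media_player(): store the pointer in a 64-bit field through
 * intptr_t and take a reference on behalf of the Java object. */
void set_media_player(JavaPlayer *thiz, Player *mp)
{
    Player *old = (Player *)(intptr_t)thiz->mNativeMediaPlayer;
    if (mp)
        player_inc_ref(mp);
    thiz->mNativeMediaPlayer = (int64_t)(intptr_t)mp;
    if (old)
        player_dec_ref_p(&old);   /* assumption: the field gives up its old player */
}

int freed_count(void)
{
    return g_freed;
}
```

After the local reference is dropped, only the Java field keeps the player alive, so clearing the field (as a release call would) is what finally frees it.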

4.3 ijkmp_set_weak_thiz()

Stores a JNI global reference to the Java WeakReference<IjkMediaPlayer> (created in initPlayer()) in the native IjkMediaPlayer, as mp->weak_thiz:

    void *ijkmp_set_weak_thiz(IjkMediaPlayer *mp, void *weak_thiz)
    {
        void *prev_weak_thiz = mp->weak_thiz;

        mp->weak_thiz = weak_thiz;

        return prev_weak_thiz;
    }

    private void initPlayer(IjkLibLoader libLoader) {
        // ...
        native_setup(new WeakReference<IjkMediaPlayer>(this));
    }

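4.4 ijkmp_set_inject_opaque()

Stores the weak reference in FFPlayer as inject_opaque, then recreates FFPlayer's application context (app_ctx), registers it as the "ijkapplication" format option, and sets app_func_event as its event callback: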

    void *ijkmp_set_inject_opaque(IjkMediaPlayer *mp, void *opaque)
    {
        void *prev_weak_thiz = ffp_set_inject_opaque(mp->ffplayer, opaque);
        return prev_weak_thiz;
    }

    void *ffp_set_inject_opaque(FFPlayer *ffp, void *opaque)
    {
        if (!ffp)
            return NULL;
        void *prev_weak_thiz = ffp->inject_opaque;
        ffp->inject_opaque = opaque;

        av_application_closep(&ffp->app_ctx);
        av_application_open(&ffp->app_ctx, ffp);
        ffp_set_option_int(ffp, FFP_OPT_CATEGORY_FORMAT, "ijkapplication", (int64_t)(intptr_t)ffp->app_ctx);

        ffp->app_ctx->func_on_app_event = app_func_event;
        return prev_weak_thiz;
    }

4.5 ijkmp_set_ijkio_inject_opaque()

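Stores the weak reference as ijkio_inject_opaque and recreates the IO manager (ijkio_manager_ctx), which manages IO-related operations and events such as cache size: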

    void *ijkmp_set_ijkio_inject_opaque(IjkMediaPlayer *mp, void *opaque)
    {
        void *prev_weak_thiz = ffp_set_ijkio_inject_opaque(mp->ffplayer, opaque);
        return prev_weak_thiz;
    }

    void *ffp_set_ijkio_inject_opaque(FFPlayer *ffp, void *opaque)
    {
        if (!ffp)
            return NULL;
        void *prev_weak_thiz = ffp->ijkio_inject_opaque;
        ffp->ijkio_inject_opaque = opaque;

        ijkio_manager_destroyp(&ffp->ijkio_manager_ctx);
        ijkio_manager_create(&ffp->ijkio_manager_ctx, ffp);
        ijkio_manager_set_callback(ffp->ijkio_manager_ctx, ijkio_app_func_event);
        ffp_set_option_int(ffp, FFP_OPT_CATEGORY_FORMAT, "ijkiomanager", (int64_t)(intptr_t)ffp->ijkio_manager_ctx);

        return prev_weak_thiz;
    }

4.6 ijkmp_android_set_mediacodec_select_callback()

Registers the callback that is invoked when a MediaCodec (hardware) decoder is being selected.

    void ijkmp_android_set_mediacodec_select_callback(IjkMediaPlayer *mp, bool (*callback)(void *opaque, ijkmp_mediacodecinfo_context *mcc), void *opaque)
    {
        if (mp && mp->ffplayer && mp->ffplayer->pipeline) {
            ffpipeline_set_mediacodec_select_callback(mp->ffplayer->pipeline, callback, opaque);
        }
        // ...
    }

    void ffpipeline_set_mediacodec_select_callback(IJKFF_Pipeline* pipeline, bool (*callback)(void *opaque, ijkmp_mediacodecinfo_context *mcc), void *opaque)
    {
        // ...
        pipeline->opaque->mediacodec_select_callback        = callback;
        pipeline->opaque->mediacodec_select_callback_opaque = opaque;
    }
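This setter, like the inject_opaque setters before it, stores a function pointer together with an opaque pointer that is handed back on every call; here the opaque is the Java weak reference, which is how the C callback can reach the Java object. A minimal C sketch of that callback-plus-opaque pattern; CodecInfo, Pipeline, pipeline_select_codec(), pick_preferred() and the codec name "OMX.example.avc" are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *mime_type;
    char codec_name[64];
} CodecInfo;

typedef bool (*SelectCallback)(void *opaque, CodecInfo *mcc);

typedef struct {
    SelectCallback mediacodec_select_callback;
    void *mediacodec_select_callback_opaque;
} Pipeline;

/* Registration: remember both the function and the context it needs. */
void pipeline_set_select_callback(Pipeline *p, SelectCallback cb, void *opaque)
{
    p->mediacodec_select_callback = cb;
    p->mediacodec_select_callback_opaque = opaque;
}

/* Invocation: hand the stored opaque back; false if nothing is registered
 * or the callback declines. */
bool pipeline_select_codec(Pipeline *p, CodecInfo *mcc)
{
    if (!p->mediacodec_select_callback)
        return false;
    return p->mediacodec_select_callback(p->mediacodec_select_callback_opaque, mcc);
}

/* Example callback: the opaque points at the codec name this caller prefers. */
bool pick_preferred(void *opaque, CodecInfo *mcc)
{
    const char *preferred = opaque;
    strncpy(mcc->codec_name, preferred, sizeof(mcc->codec_name) - 1);
    mcc->codec_name[sizeof(mcc->codec_name) - 1] = '\0';
    return true;
}
```

The pipeline never needs to know what the opaque is; only the callback interprets it, which keeps the C layer independent of JNI types.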

GitHub: A4ijkplayer
