1. Initialization

    - (id)initWithContentURLString:(NSString *)aUrlString
                       withOptions:(IJKFFOptions *)options
    {
        if (aUrlString == nil)
            return nil;

        self = [super init];
        if (self) {
            ijkmp_global_init();
            ijkmp_global_set_inject_callback(ijkff_inject_callback);

            [IJKFFMoviePlayerController checkIfFFmpegVersionMatch:NO];

            if (options == nil)
                options = [IJKFFOptions optionsByDefault];

            // IJKFFIOStatRegister(IJKFFIOStatDebugCallback);
            // IJKFFIOStatCompleteRegister(IJKFFIOStatCompleteDebugCallback);

            // init fields
            _scalingMode = IJKMPMovieScalingModeAspectFit;
            _shouldAutoplay = YES;
            memset(&_asyncStat, 0, sizeof(_asyncStat));
            memset(&_cacheStat, 0, sizeof(_cacheStat));

            _monitor = [[IJKFFMonitor alloc] init];

            // init media resource
            _urlString = aUrlString;

            // init player
            _mediaPlayer = ijkmp_ios_create(media_player_msg_loop);
            _msgPool = [[IJKFFMoviePlayerMessagePool alloc] init];
            IJKWeakHolder *weakHolder = [IJKWeakHolder new];
            weakHolder.object = self;

            ijkmp_set_weak_thiz(_mediaPlayer, (__bridge_retained void *) self);
            ijkmp_set_inject_opaque(_mediaPlayer, (__bridge_retained void *) weakHolder);
            ijkmp_set_ijkio_inject_opaque(_mediaPlayer, (__bridge_retained void *) weakHolder);
            ijkmp_set_option_int(_mediaPlayer, IJKMP_OPT_CATEGORY_PLAYER, "start-on-prepared", _shouldAutoplay ? 1 : 0);

            // init video sink
            _glView = [[IJKSDLGLView alloc] initWithFrame:[[UIScreen mainScreen] bounds]];
            _glView.shouldShowHudView = NO;
            _view = _glView;
            [_glView setHudValue:nil forKey:@"scheme"];
            [_glView setHudValue:nil forKey:@"host"];
            [_glView setHudValue:nil forKey:@"path"];
            [_glView setHudValue:nil forKey:@"ip"];
            [_glView setHudValue:nil forKey:@"tcp-info"];
            [_glView setHudValue:nil forKey:@"http"];
            [_glView setHudValue:nil forKey:@"tcp-spd"];
            [_glView setHudValue:nil forKey:@"t-prepared"];
            [_glView setHudValue:nil forKey:@"t-render"];
            [_glView setHudValue:nil forKey:@"t-preroll"];
            [_glView setHudValue:nil forKey:@"t-http-open"];
            [_glView setHudValue:nil forKey:@"t-http-seek"];
            self.shouldShowHudView = options.showHudView;

            ijkmp_ios_set_glview(_mediaPlayer, _glView);
            ijkmp_set_option(_mediaPlayer, IJKMP_OPT_CATEGORY_PLAYER, "overlay-format", "fcc-_es2");
    #ifdef DEBUG
            [IJKFFMoviePlayerController setLogLevel:k_IJK_LOG_DEBUG];
    #else
            [IJKFFMoviePlayerController setLogLevel:k_IJK_LOG_SILENT];
    #endif
            // init audio sink
            [[IJKAudioKit sharedInstance] setupAudioSession];

            [options applyTo:_mediaPlayer];
            _pauseInBackground = NO;

            // init extra
            _keepScreenOnWhilePlaying = YES;
            [self setScreenOn:YES];

            _notificationManager = [[IJKNotificationManager alloc] init];
            [self registerApplicationObservers];
        }
        return self;
    }

2. Playback

prepareToPlay calls into the native (C) layer through ijkmp_prepare_async. After a chain of calls it reaches ijkmp_prepare_async_l in ijkplayer.c:

        ijkmp_change_state_l(mp, MP_STATE_ASYNC_PREPARING);

        msg_queue_start(&mp->ffplayer->msg_queue);

        // released in msg_loop
        ijkmp_inc_ref(mp);
        mp->msg_thread = SDL_CreateThreadEx(&mp->_msg_thread, ijkmp_msg_loop, mp, "ff_msg_loop");
        // msg_thread is detached inside msg_loop
        // TODO: 9 release weak_thiz if pthread_create() failed;

        int retval = ffp_prepare_async_l(mp->ffplayer, mp->data_source);
        if (retval < 0) {
            ijkmp_change_state_l(mp, MP_STATE_ERROR);
            return retval;
        }

        return 0;

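This first switches the player state to MP_STATE_ASYNC_PREPARING and starts the message queue. It then launches a message-loop thread, a dedicated thread that dispatches messages, and finally calls the key function ffp_prepare_async_l. That function deserves a closer look, because it is where preparation for playback actually begins: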
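Pairing a queue with a dedicated dispatch thread is a classic producer/consumer pattern. The minimal sketch below uses POSIX threads and a linked-list FIFO. It is not ijkplayer's msg_queue implementation: msg_queue_t, msg_put, msg_get, msg_loop, loop_ctx and the MSG_* codes are all hypothetical names. Other threads post messages. The loop thread blocks on a condition variable and dispatches each message in order, which is roughly the role ijkmp_msg_loop plays.

```c
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical message codes, for illustration only. */
enum { MSG_QUIT = 0, MSG_PREPARED = 1, MSG_COMPLETED = 2 };

typedef struct msg_node {
    int what;
    struct msg_node *next;
} msg_node;

/* FIFO protected by a mutex; the condition variable wakes the loop thread. */
typedef struct {
    msg_node *head, *tail;
    pthread_mutex_t mutex;
    pthread_cond_t cond;
} msg_queue_t;

/* State handed to the loop thread: the queue plus a record of dispatched messages. */
typedef struct {
    msg_queue_t queue;
    int log[16];
    int log_count;
} loop_ctx;

static void msg_queue_init(msg_queue_t *q) {
    q->head = q->tail = NULL;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

/* Producer side: append a message and wake the loop thread. Returns -1 on OOM. */
static int msg_put(msg_queue_t *q, int what) {
    msg_node *n = malloc(sizeof(*n));
    if (!n)
        return -1;
    n->what = what;
    n->next = NULL;
    pthread_mutex_lock(&q->mutex);
    if (q->tail)
        q->tail->next = n;
    else
        q->head = n;
    q->tail = n;
    pthread_cond_signal(&q->cond);
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* Consumer side: block until a message is available, then pop it. */
static int msg_get(msg_queue_t *q) {
    pthread_mutex_lock(&q->mutex);
    while (!q->head)
        pthread_cond_wait(&q->cond, &q->mutex);
    msg_node *n = q->head;
    q->head = n->next;
    if (!q->head)
        q->tail = NULL;
    pthread_mutex_unlock(&q->mutex);
    int what = n->what;
    free(n);
    return what;
}

/* Free any undelivered messages and the sync primitives. */
static void msg_queue_destroy(msg_queue_t *q) {
    msg_node *n = q->head;
    while (n) {
        msg_node *next = n->next;
        free(n);
        n = next;
    }
    q->head = q->tail = NULL;
    pthread_mutex_destroy(&q->mutex);
    pthread_cond_destroy(&q->cond);
}

/* Body of the dedicated message-loop thread: dispatch until MSG_QUIT. */
static void *msg_loop(void *arg) {
    loop_ctx *ctx = arg;
    for (;;) {
        int what = msg_get(&ctx->queue);
        if (what == MSG_QUIT)
            break;
        /* A real player would forward the event to the app layer here;
           this sketch only records it. */
        if (ctx->log_count < 16)
            ctx->log[ctx->log_count++] = what;
    }
    return NULL;
}
```

For example, suppose the main thread posts MSG_PREPARED, MSG_COMPLETED and then MSG_QUIT to a running msg_loop thread. The loop dispatches the first two in order and exits, and pthread_join waits for it to finish. ijkplayer also increments the player's reference count (ijkmp_inc_ref) before starting the thread, so the player stays alive while the loop runs. The "released in msg_loop" comment refers to this reference.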

    int ffp_prepare_async_l(FFPlayer *ffp, const char *file_name)
    {
        assert(ffp);
        assert(!ffp->is);
        assert(file_name);

        if (av_stristart(file_name, "rtmp", NULL) ||
            av_stristart(file_name, "rtsp", NULL)) {
            // There is total different meaning for 'timeout' option in rtmp
            av_log(ffp, AV_LOG_WARNING, "remove 'timeout' option for rtmp.\n");
            av_dict_set(&ffp->format_opts, "timeout", NULL, 0);
        }

        /* there is a length limit in avformat */
        if (strlen(file_name) + 1 > 1024) {
            av_log(ffp, AV_LOG_ERROR, "%s too long url\n", __func__);
            if (avio_find_protocol_name("ijklongurl:")) {
                av_dict_set(&ffp->format_opts, "ijklongurl-url", file_name, 0);
                file_name = "ijklongurl:";
            }
        }

        av_log(NULL, AV_LOG_INFO, "===== versions =====\n");
        ffp_show_version_str(ffp, "ijkplayer", ijk_version_info());
        ffp_show_version_str(ffp, "FFmpeg", av_version_info());
        ffp_show_version_int(ffp, "libavutil", avutil_version());
        ffp_show_version_int(ffp, "libavcodec", avcodec_version());
        ffp_show_version_int(ffp, "libavformat", avformat_version());
        ffp_show_version_int(ffp, "libswscale", swscale_version());
        ffp_show_version_int(ffp, "libswresample", swresample_version());
        av_log(NULL, AV_LOG_INFO, "===== options =====\n");
        ffp_show_dict(ffp, "player-opts", ffp->player_opts);
        ffp_show_dict(ffp, "format-opts", ffp->format_opts);
        ffp_show_dict(ffp, "codec-opts ", ffp->codec_opts);
        ffp_show_dict(ffp, "sws-opts ", ffp->sws_dict);
        ffp_show_dict(ffp, "swr-opts ", ffp->swr_opts);
        av_log(NULL, AV_LOG_INFO, "===================\n");

        av_opt_set_dict(ffp, &ffp->player_opts);
        if (!ffp->aout) {
            ffp->aout = ffpipeline_open_audio_output(ffp->pipeline, ffp);
            if (!ffp->aout)
                return -1;
        }

    #if CONFIG_AVFILTER
        if (ffp->vfilter0) {
            GROW_ARRAY(ffp->vfilters_list, ffp->nb_vfilters);
            ffp->vfilters_list[ffp->nb_vfilters - 1] = ffp->vfilter0;
        }
    #endif

        VideoState *is = stream_open(ffp, file_name, NULL);
        if (!is) {
            av_log(NULL, AV_LOG_WARNING, "ffp_prepare_async_l: stream_open failed OOM");
            return EIJK_OUT_OF_MEMORY;
        }

        ffp->is = is;
        ffp->input_filename = av_strdup(file_name);
        return 0;
    }

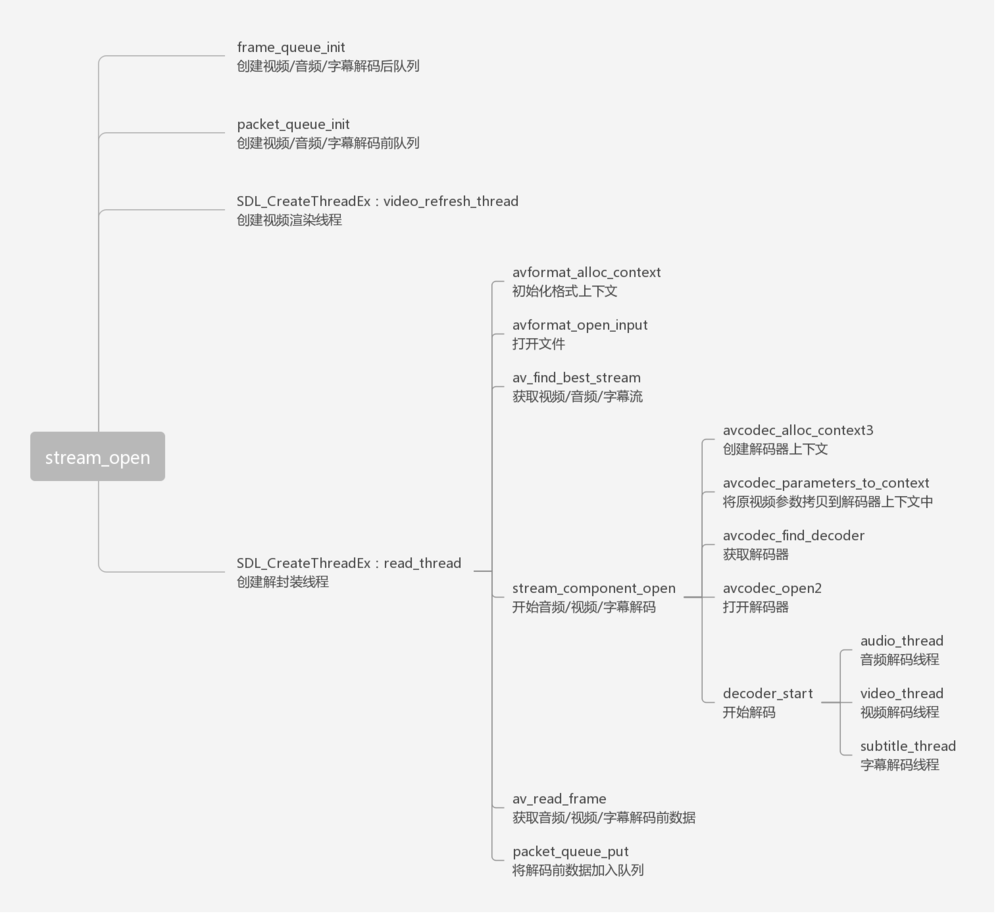

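The first part checks whether the URL uses a live-streaming protocol (rtmp or rtsp). If it does, the 'timeout' option is removed, because it means something different for those protocols. URLs longer than avformat's length limit are rerouted through the ijklongurl: protocol. Most of what follows just logs version and option information. The real core function is stream_open, which opens the media stream at the given URL. Its code is as follows: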
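The two URL checks at the top of ffp_prepare_async_l can be sketched in plain C. This is a hedged illustration, not ijkplayer code. starts_with_ci, plan_url and url_plan are hypothetical names, and starts_with_ci only imitates the yes/no result of FFmpeg's av_stristart. Instead of editing an AVDictionary, the sketch reports what the real function would do.

```c
#include <ctype.h>
#include <stdbool.h>
#include <string.h>

/* Mirrors the check in ffp_prepare_async_l: strlen(url) + 1 > 1024. */
#define URL_LENGTH_LIMIT 1024

/* Case-insensitive prefix test, similar in spirit to av_stristart
   (which can additionally return a pointer just past the prefix). */
static bool starts_with_ci(const char *s, const char *prefix) {
    while (*prefix) {
        if (tolower((unsigned char)*s) != tolower((unsigned char)*prefix))
            return false;  /* mismatch, or s ended before prefix */
        s++;
        prefix++;
    }
    return true;
}

/* What the preprocessing step would decide for a given URL. */
typedef struct {
    bool drop_timeout_option;   /* 'timeout' means something else for rtmp/rtsp */
    bool needs_long_url_proxy;  /* too long for avformat; the real code reroutes via
                                   "ijklongurl:" only if that protocol is registered */
} url_plan;

static url_plan plan_url(const char *url) {
    url_plan p;
    p.drop_timeout_option = starts_with_ci(url, "rtmp") || starts_with_ci(url, "rtsp");
    p.needs_long_url_proxy = strlen(url) + 1 > URL_LENGTH_LIMIT;
    return p;
}
```

Because the match is only on a prefix, "rtmps://" URLs also fall into the rtmp branch. The original av_stristart check behaves the same way.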

    static VideoState *stream_open(FFPlayer *ffp, const char *filename, AVInputFormat *iformat)
    {
        assert(!ffp->is);
        VideoState *is;

        is = av_mallocz(sizeof(VideoState));
        if (!is)
            return NULL;
        is->filename = av_strdup(filename);
        if (!is->filename)
            goto fail;
        is->iformat = iformat;
        is->ytop = 0;
        is->xleft = 0;
    #if defined(__ANDROID__)
        if (ffp->soundtouch_enable) {
            is->handle = ijk_soundtouch_create();
        }
    #endif

        /* start video display */
        if (frame_queue_init(&is->pictq, &is->videoq, ffp->pictq_size, 1) < 0)
            goto fail;
        if (frame_queue_init(&is->subpq, &is->subtitleq, SUBPICTURE_QUEUE_SIZE, 0) < 0)
            goto fail;
        if (frame_queue_init(&is->sampq, &is->audioq, SAMPLE_QUEUE_SIZE, 1) < 0)
            goto fail;

        if (packet_queue_init(&is->videoq) < 0 ||
            packet_queue_init(&is->audioq) < 0 ||
            packet_queue_init(&is->subtitleq) < 0)
            goto fail;

        if (!(is->continue_read_thread = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            goto fail;
        }

        if (!(is->video_accurate_seek_cond = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            ffp->enable_accurate_seek = 0;
        }

        if (!(is->audio_accurate_seek_cond = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            ffp->enable_accurate_seek = 0;
        }

        init_clock(&is->vidclk, &is->videoq.serial);
        init_clock(&is->audclk, &is->audioq.serial);
        init_clock(&is->extclk, &is->extclk.serial);
        is->audio_clock_serial = -1;
        if (ffp->startup_volume < 0)
            av_log(NULL, AV_LOG_WARNING, "-volume=%d < 0, setting to 0\n", ffp->startup_volume);
        if (ffp->startup_volume > 100)
            av_log(NULL, AV_LOG_WARNING, "-volume=%d > 100, setting to 100\n", ffp->startup_volume);
        ffp->startup_volume = av_clip(ffp->startup_volume, 0, 100);
        ffp->startup_volume = av_clip(SDL_MIX_MAXVOLUME * ffp->startup_volume / 100, 0, SDL_MIX_MAXVOLUME);
        is->audio_volume = ffp->startup_volume;
        is->muted = 0;
        is->av_sync_type = ffp->av_sync_type;

        is->play_mutex = SDL_CreateMutex();
        is->accurate_seek_mutex = SDL_CreateMutex();
        ffp->is = is;
        is->pause_req = !ffp->start_on_prepared;

        is->video_refresh_tid = SDL_CreateThreadEx(&is->_video_refresh_tid, video_refresh_thread, ffp, "ff_vout");
        if (!is->video_refresh_tid) {
            av_freep(&ffp->is);
            return NULL;
        }

        is->read_tid = SDL_CreateThreadEx(&is->_read_tid, read_thread, ffp, "ff_read");
        if (!is->read_tid) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateThread(): %s\n", SDL_GetError());
    fail:
            is->abort_request = true;
            if (is->video_refresh_tid)
                SDL_WaitThread(is->video_refresh_tid, NULL);
            stream_close(ffp);
            return NULL;
        }
        return is;
    }

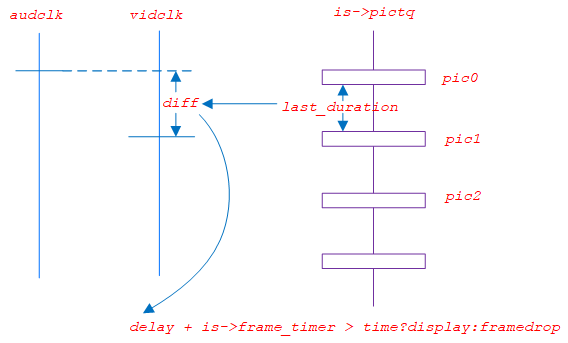

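Here queues are initialized for three media types: video, audio and subtitles. Each type actually gets a pair of queues. The packet queue (videoq, audioq, subtitleq) is initialized by packet_queue_init and holds compressed packets. The frame queue (pictq, sampq, subpq) is initialized by frame_queue_init, holds decoded frames, and is bound to the matching packet queue. Further down, two threads are created: video_refresh_thread and read_thread. As their names suggest, one refreshes the video output and the other reads the input. We start with the read thread. Its code is long, so keep an eye on the variable ic. It is an AVFormatContext, which holds the demuxing state and the information about the input's streams.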
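The frame queues set up by frame_queue_init come from ffplay, which ijkplayer's ff_ffplay.c is based on. Each one is a fixed-size array used as a ring buffer, with a read index, a write index and a count. This caps how many decoded frames can accumulate between the decoder and the renderer. The sketch below shows only that index arithmetic and makes several simplifying assumptions. frame_ring, ring_push and ring_pop are hypothetical names, and a double pts stands in for a decoded AVFrame. The real FrameQueue blocks on a mutex and condition variable and supports keep_last; this single-threaded version just returns false instead of blocking.

```c
#include <stdbool.h>

/* Hypothetical capacity; in the source, the size comes from ffp->pictq_size,
   SUBPICTURE_QUEUE_SIZE or SAMPLE_QUEUE_SIZE. */
#define RING_CAPACITY 3

typedef struct {
    double pts;          /* stand-in for a decoded frame */
} frame_slot;

typedef struct {
    frame_slot slots[RING_CAPACITY];
    int rindex;          /* next slot the renderer reads */
    int windex;          /* next slot the decoder writes */
    int size;            /* number of filled slots */
} frame_ring;

static void ring_init(frame_ring *r) {
    r->rindex = r->windex = r->size = 0;
}

/* Decoder side: returns false when full (the real queue waits instead). */
static bool ring_push(frame_ring *r, double pts) {
    if (r->size >= RING_CAPACITY)
        return false;
    r->slots[r->windex].pts = pts;
    r->windex = (r->windex + 1) % RING_CAPACITY;
    r->size++;
    return true;
}

/* Renderer side: returns false when empty (the real queue waits instead). */
static bool ring_pop(frame_ring *r, double *pts) {
    if (r->size == 0)
        return false;
    *pts = r->slots[r->rindex].pts;
    r->rindex = (r->rindex + 1) % RING_CAPACITY;
    r->size--;
    return true;
}
```

Because both indices wrap modulo the capacity, the decoder can never run more than RING_CAPACITY frames ahead of the renderer. This is the back-pressure that keeps memory use bounded.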

read_thread:

    ......

    ic = avformat_alloc_context();

    ......

    err = avformat_open_input(&ic, is->filename, is->iformat, &ffp->format_opts);

    ......

    err = avformat_find_stream_info(ic, opts);

    ......

    for (i = 0; i < ic->nb_streams; i++) {
        AVStream *st = ic->streams[i];
        enum AVMediaType type = st->codecpar->codec_type;
        st->discard = AVDISCARD_ALL;
        if (type >= 0 && ffp->wanted_stream_spec[type] && st_index[type] == -1)
            if (avformat_match_stream_specifier(ic, st, ffp->wanted_stream_spec[type]) > 0)
                st_index[type] = i;

        // choose first h264
        if (type == AVMEDIA_TYPE_VIDEO) {
            enum AVCodecID codec_id = st->codecpar->codec_id;
            video_stream_count++;
            if (codec_id == AV_CODEC_ID_H264) {
                h264_stream_count++;
                if (first_h264_stream < 0)
                    first_h264_stream = i;
            }
        }
    }

    ......

    /* open the streams */
    if (st_index[AVMEDIA_TYPE_AUDIO] >= 0) {
        stream_component_open(ffp, st_index[AVMEDIA_TYPE_AUDIO]);
    }

    ret = -1;
    if (st_index[AVMEDIA_TYPE_VIDEO] >= 0) {
        ret = stream_component_open(ffp, st_index[AVMEDIA_TYPE_VIDEO]);
    }
    if (is->show_mode == SHOW_MODE_NONE)
        is->show_mode = ret >= 0 ? SHOW_MODE_VIDEO : SHOW_MODE_RDFT;

    if (st_index[AVMEDIA_TYPE_SUBTITLE] >= 0) {
        stream_component_open(ffp, st_index[AVMEDIA_TYPE_SUBTITLE]);
    }

    ......

    /* offset should be seeked*/
    if (ffp->seek_at_start > 0) {
        ffp_seek_to_l(ffp, ffp->seek_at_start);
    }

    for (;;) {
        ......
        ret = av_read_frame(ic, pkt);
        ......
        /* check if packet is in play range specified by user, then queue, otherwise discard */
        stream_start_time = ic->streams[pkt->stream_index]->start_time;
        pkt_ts = pkt->pts == AV_NOPTS_VALUE ? pkt->dts : pkt->pts;
        pkt_in_play_range = ffp->duration == AV_NOPTS_VALUE ||
                (pkt_ts - (stream_start_time != AV_NOPTS_VALUE ? stream_start_time : 0)) *
                av_q2d(ic->streams[pkt->stream_index]->time_base) -
                (double)(ffp->start_time != AV_NOPTS_VALUE ? ffp->start_time : 0) / 1000000
                <= ((double)ffp->duration / 1000000);
        ......
        if (pkt->stream_index == is->audio_stream && pkt_in_play_range) {
            packet_queue_put(&is->audioq, pkt);
        } else if (pkt->stream_index == is->video_stream && pkt_in_play_range
                   && !(is->video_st && (is->video_st->disposition & AV_DISPOSITION_ATTACHED_PIC))) {
            packet_queue_put(&is->videoq, pkt);
        } else if (pkt->stream_index == is->subtitle_stream && pkt_in_play_range) {
            packet_queue_put(&is->subtitleq, pkt);
        } else {
            av_packet_unref(pkt);
        }
    }

    ......

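avformat_alloc_context allocates and initializes the AVFormatContext. avformat_open_input then fills in ic. It opens the input, which for a network URL means connecting and reading the first data, then probes the container format and reads the header. After that, avformat_find_stream_info reads some packets to fill in the parameters of each stream.

The for loop walks over every stream in ic (streams, not frames). For each stream it reads the media type and first marks the stream as discarded (AVDISCARD_ALL). It records the stream's index in st_index[type] if the stream matches the user's wanted_stream_spec, and it counts the video streams and the H.264 streams. Next comes one of the core functions, stream_component_open. It is called for audio, video and subtitles only if a stream of that type was selected (st_index[type] >= 0), and it sets up the decoder for that stream and starts the decoding work.

The actual reading happens in av_read_frame, which reads the next packet into pkt. That packet is still compressed; decoding happens later, on the decoder side. The call sits inside an infinite for (;;) loop that comes after the seek handling, and this loop is easy to miss when skimming. So what exactly does av_read_frame return? The documentation of AVPacket is quite clear: it holds compressed data (for video, typically one frame), and whether the packet is a keyframe is marked in its flags. Whatever else the loop does before and after, every iteration comes down to this step of reading a packet. A large block after it handles errors; we skip it for now.

After that, a section checks whether the packet falls within the play range specified by the user. Packets in range are queued, and the rest are discarded. Starting at stream_start_time = …, my reading of the computation is as follows:

- Take the packet timestamp (pts, or dts if pts is missing) and subtract the stream's start time.
- Convert the result to seconds with the stream's time_base.
- Subtract the user's start_time, which is stored in microseconds (hence the division by 1000000).
- Check that the result does not exceed the user's duration.

If no duration is set (ffp->duration == AV_NOPTS_VALUE), every packet counts as in range. In-range packets are put into the audio, video or subtitle packet queue according to their stream index. Attached pictures such as cover art are kept out of the video queue. Everything else is released with av_packet_unref.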
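The pkt_in_play_range expression can be pulled out into a small standalone function to make its arithmetic easier to follow. In this sketch, NOPTS, rational and q2d are stand-ins defined so the code compiles without FFmpeg headers; the real code uses AV_NOPTS_VALUE, AVRational and av_q2d. in_play_range is a hypothetical name. Its start_time_us and duration_us parameters correspond to ffp->start_time and ffp->duration, both in microseconds.

```c
#include <stdbool.h>
#include <stdint.h>

/* Stand-in for AV_NOPTS_VALUE, which libavutil defines as INT64_MIN. */
#define NOPTS INT64_MIN

/* Stand-in for AVRational and av_q2d. */
typedef struct { int num, den; } rational;
static double q2d(rational r) { return (double)r.num / r.den; }

/* Same arithmetic as pkt_in_play_range in read_thread. Like the original,
   it assumes that at least one of pts and dts is set. */
static bool in_play_range(int64_t pts, int64_t dts, int64_t stream_start,
                          rational time_base, int64_t start_time_us,
                          int64_t duration_us) {
    if (duration_us == NOPTS)
        return true;                              /* no duration limit */
    int64_t ts = (pts == NOPTS) ? dts : pts;      /* fall back to dts */
    /* Packet position in seconds, relative to the stream's own start. */
    double pos = (ts - (stream_start != NOPTS ? stream_start : 0)) * q2d(time_base);
    /* User start offset, converted from microseconds to seconds. */
    double offset = (double)(start_time_us != NOPTS ? start_time_us : 0) / 1000000;
    return pos - offset <= (double)duration_us / 1000000;
}
```

Take a time base of 1/90000 (as in MPEG-TS), a start_time of 5 s and a duration of 10 s. A packet at 14 s is kept, because 14 - 5 = 9 is within 10. A packet at 16 s is dropped, because 16 - 5 = 11 exceeds 10.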

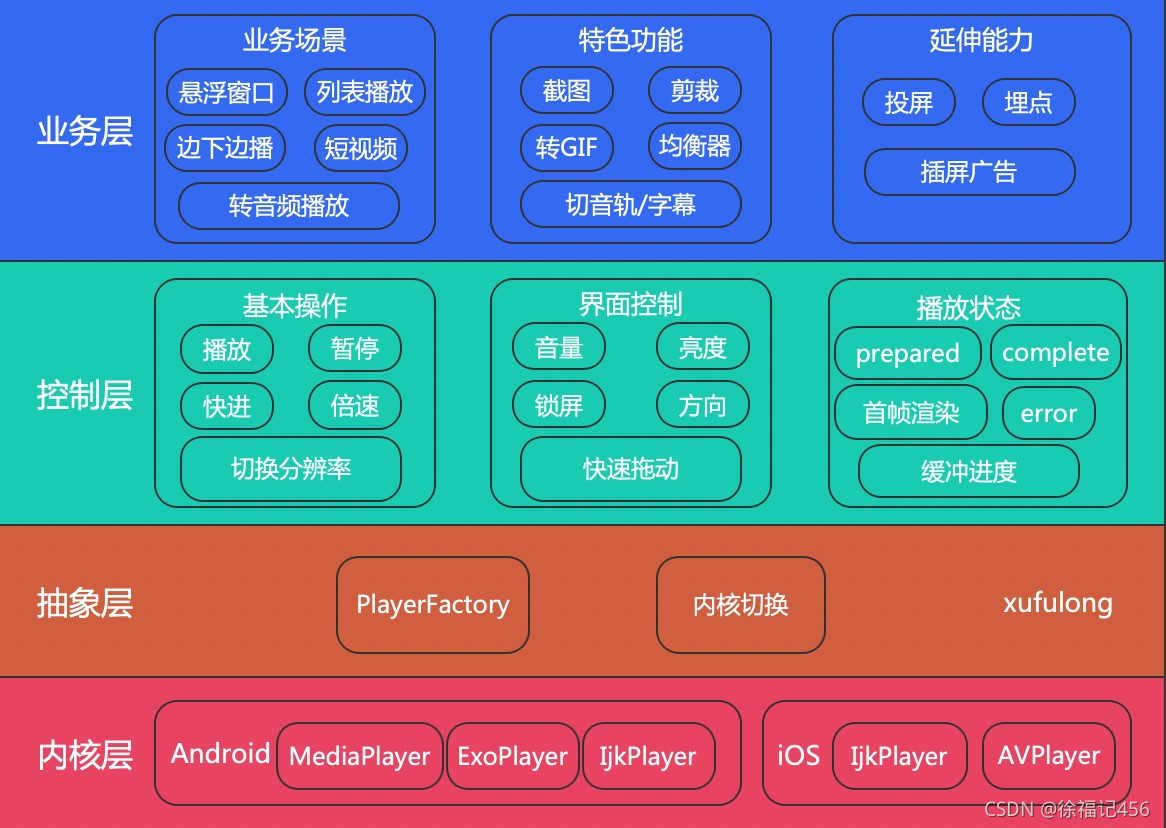

3. Flowchart