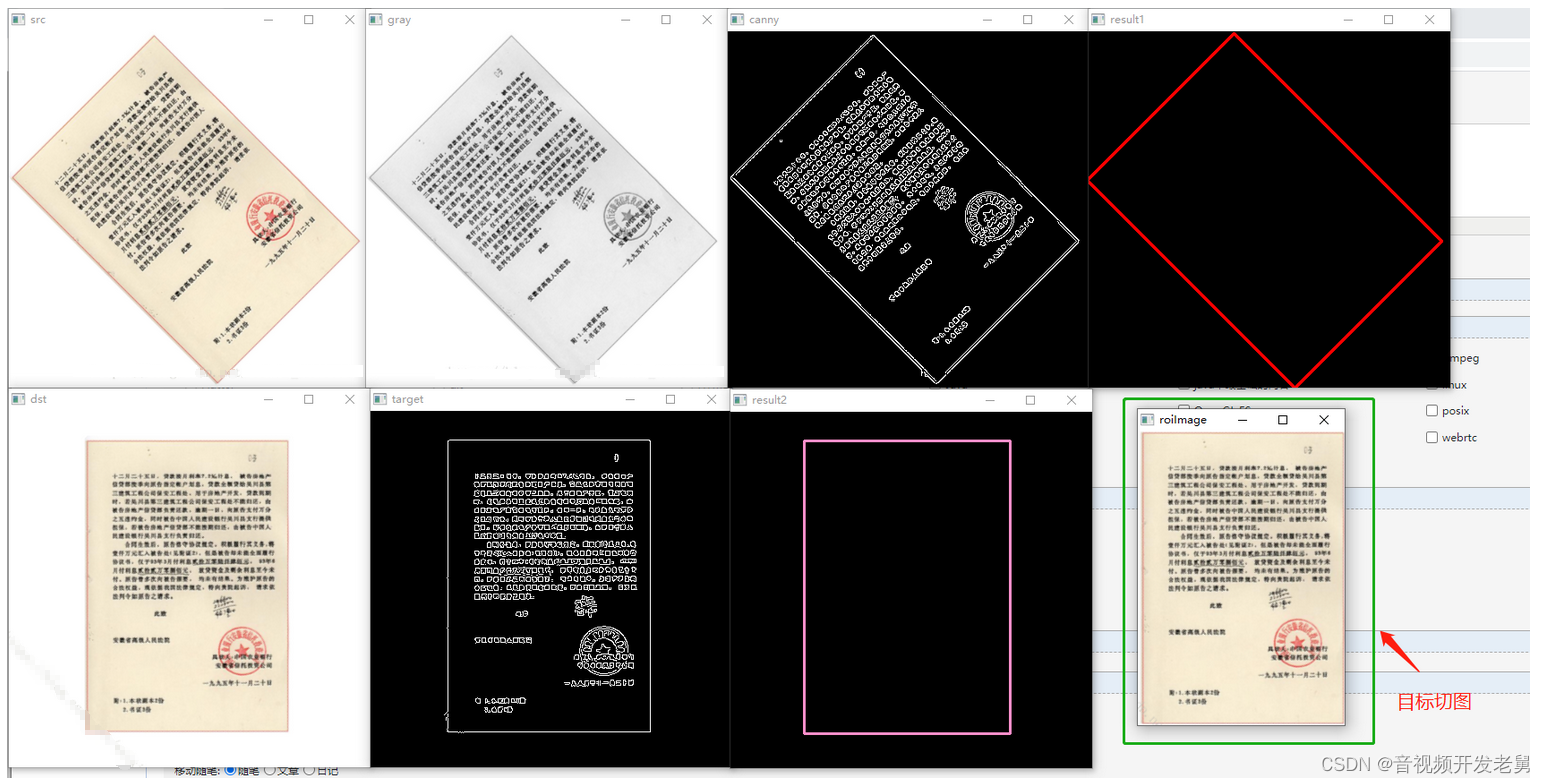

做图像矫正时使用了一下sift算法,尽管sift确实很牛,但还是会出现一些误匹配,直接计

算两张影像的单应矩阵会出现很大误差,因此可以在计算时使用RANSAC算法在单应矩

阵的约束下剔除误匹配,并计算单应矩阵,基本思路为:1、进行sift匹配。2、

RANSAC计算单应矩阵。3、使用插值函数进行重采样。基于OpenCV的c++代码:

int main(int argc,char* argv[])

{Mat img_1=imread("2Rotation5.png",CV_LOAD_IMAGE_GRAYSCALE);//宏定义时CV_LOAD_IMAGE_GRAYSCALE=0,也就是读取灰度图像Mat img_2=imread("2Rotation1.png",CV_LOAD_IMAGE_GRAYSCALE);//一定要记得这里路径的斜线方向,这与Matlab里面是相反的if(!img_1.data || !img_2.data)//如果数据为空{cout<<"opencv error"<<endl;return -1;}cout<<"open right"<<endl;//第一步,用SIFT算子检测关键点SiftFeatureDetector detector;//构造函数采用内部默认的std::vector<KeyPoint> keypoints_1,keypoints_2;//构造2个专门由点组成的点向量用来存储特征点detector.detect(img_1,keypoints_1);//将img_1图像中检测到的特征点存储起来放在keypoints_1中detector.detect(img_2,keypoints_2);//同理//在图像中画出特征点

// Mat img_keypoints_1,img_keypoints_2;

//

// drawKeypoints(img_1,keypoints_1,img_keypoints_1,Scalar::all(-1),DrawMatchesFlags::DEFAULT);//在内存中画出特征点

// drawKeypoints(img_2,keypoints_2,img_keypoints_2,Scalar::all(-1),DrawMatchesFlags::DEFAULT);

//

// imshow("sift_keypoints_1",img_keypoints_1);//显示特征点

// imshow("sift_keypoints_2",img_keypoints_2);//计算特征向量SiftDescriptorExtractor extractor;//定义描述子对象Mat descriptors_1,descriptors_2;//存放特征向量的矩阵extractor.compute(img_1,keypoints_1,descriptors_1);//计算特征向量extractor.compute(img_2,keypoints_2,descriptors_2);//用burte force进行匹配特征向量BruteForceMatcher<L2<float>>matcher;//定义一个burte force matcher对象vector<DMatch>matches;matcher.match(descriptors_1,descriptors_2,matches);double max_dist=0,min_dist=100;for (int i=0;i<descriptors_1.rows;i++){double dst=matches[i].distance;if(dst<min_dist)min_dist=dst;if(dst>max_dist)max_dist=dst;}std::vector<DMatch>good_matches;for (int i=0;i<descriptors_1.rows;i++){if (matches[i].distance<3*min_dist){good_matches.push_back(matches[i]);}}std::vector<Point2f>obj;std::vector<Point2f>scene;for (int i=0;i<good_matches.size();i++){obj.push_back(keypoints_1[good_matches[i].queryIdx].pt);scene.push_back(keypoints_2[good_matches[i].trainIdx].pt);}Mat h=findHomography(obj,scene,CV_RANSAC);Mat ts_img;Mat color_img_1=imread("2Rotation5.png");Mat color_img_2=imread("2Rotation1.png");warpPerspective(color_img_1,ts_img,h,Size(img_1.size().width,img_1.size().height));imshow("res",ts_img);imwrite("result.bmp",ts_img);Point2f *srcTri=new Point2f[matches.size()];Point2f *dstTri=new Point2f[matches.size()];//设置原图像和目标图像的同名点for (int i=0;i<matches.size();i+=20){srcTri[i]=keypoints_1[matches[i].queryIdx].pt;dstTri[i]=keypoints_2[matches[i].trainIdx].pt;}FlannBasedMatcher macher;Mat warp_mat(2,3,CV_32FC1);warp_mat=getAffineTransform(srcTri,dstTri);//cout<<warp_mat;//getchar();//绘制匹配线段Mat img_matches;drawMatches(img_1,keypoints_1,img_2,keypoints_2,matches,img_matches);//将匹配出来的结果放入内存img_matches中//显示匹配线段imshow("sift_Matches",img_matches);//显示的标题为MatcheswaitKey(0);return 0;}