PointNet Code Walkthrough

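I have recently been working on robotic grasping with deep learning on point clouds, and this post collects what I have learned about PointNet along the way.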

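For an overview of PointNet, see the following pages and blog posts; I will not repeat that material here.

"3D Deep Learning: The PointNet Series Explained"

"A Detailed Analysis of the PointNet Network Structure"

"Understanding the PointNet Paper and Analyzing Its Code"

"Reproducing the PointNet Paper, with a Code Walkthrough"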

The focus here is the code itself, specifically the files under pointnet-master\models.

PointNet paper and GitHub code download

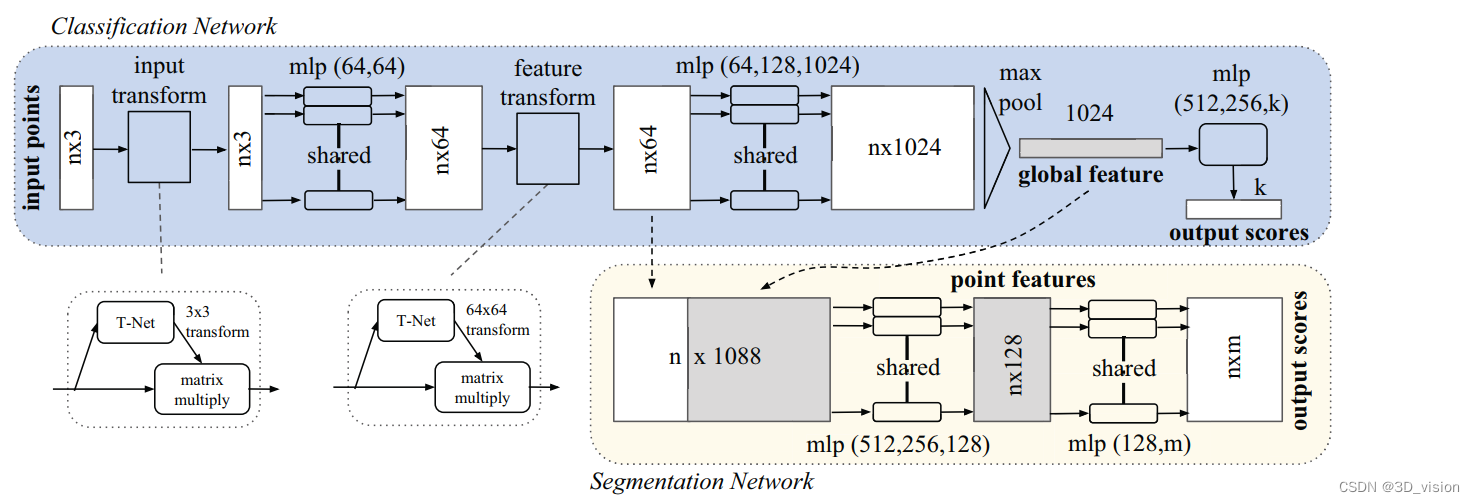

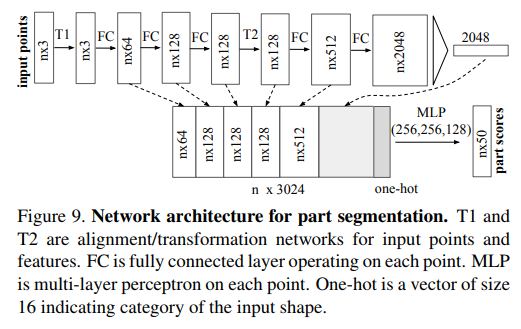

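The detailed network architecture is shown in the diagram.

The main points to keep in mind are:

(1) The network has two parts, classification and segmentation, which branch apart starting at the global feature. For how the two tasks relate, see "Differences and Connections Between Point Cloud Classification and Segmentation".

(2) Tensor dimensions are written as (B, H, W, C): Batch, Height, Width, Channel. The input is a 3D tensor (B, n, 3), where B is the batch size, n is the number of points in the cloud, and 3 holds each point's (x, y, z) coordinates. To make the later convolutions possible, one dimension is appended to give the 4D tensor (B, n, 3, 1). The convolution kernels then produce C feature channels, giving (B, n, 1, C).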

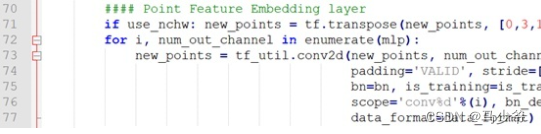

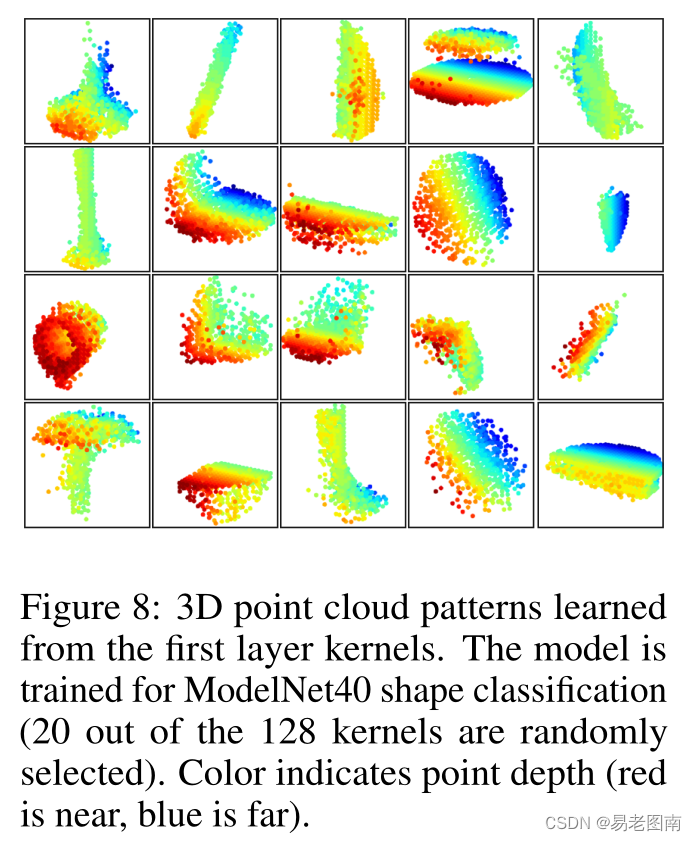

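(3) The first convolution kernel has size (1, 3) because every point has three coordinates (x, y, z). All later kernels have size (1, 1), because after the first convolution the data already has shape (B, n, 1, C).

(4) The network uses two transform sub-networks (T-Net). Each T-Net applies convolutions and fully connected layers to its input to produce a transformation matrix, then multiplies the input by that matrix. This adjusts the internal structure of the point cloud without changing the shape of the data. The first T-Net outputs a 3×3 matrix and the second outputs a 64×64 matrix.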
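To make points (2) and (3) concrete, here is a small dependency-free sketch of a VALID convolution with a 1×k kernel on a single cloud. The helper names `conv_valid_1xk` and `shape`, the two toy points, and the filter weights are all made up for illustration and are not part of the PointNet code. The sketch only shows that a (1, 3) kernel collapses the width axis from 3 to 1, and that a (1, 1) kernel afterwards changes only the channel count.

```python
def conv_valid_1xk(image, filters):
    """VALID convolution of one cloud with 1 x k filters (stride 1).

    image   : n rows; each row has `width` positions; each position has c_in channels
    filters : c_out filters; each has k taps; each tap has c_in weights
    returns : n rows; each row has (width - k + 1) positions of c_out channels
    """
    k = len(filters[0])
    out = []
    for row in image:  # kernel height 1: every point is processed on its own
        out_row = []
        for start in range(len(row) - k + 1):
            window = row[start:start + k]
            out_row.append([
                sum(w * v for tap, pos in zip(f, window) for w, v in zip(tap, pos))
                for f in filters
            ])
        out.append(out_row)
    return out


def shape(t):
    """Shape of a nested list laid out as (n, width, channels)."""
    return (len(t), len(t[0]), len(t[0][0]))


# Two toy points, laid out as (n, 3, 1): width 3 holds x, y, z; one channel.
points = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
image = [[[coord] for coord in p] for p in points]

# Two made-up 1x3 filters: the first copies x, the second sums x + y + z.
first_layer = [
    [[1.0], [0.0], [0.0]],
    [[1.0], [1.0], [1.0]],
]
out1 = conv_valid_1xk(image, first_layer)
print(shape(out1), out1)   # (2, 1, 2) [[[1.0, 6.0]], [[4.0, 15.0]]]

# Three made-up 1x1 filters acting on the 2 channels produced above.
second_layer = [[[1.0, 0.0]], [[0.0, 1.0]], [[1.0, 1.0]]]
out2 = conv_valid_1xk(out1, second_layer)
print(shape(out2), out2)   # (2, 1, 3) [[[1.0, 6.0, 7.0]], [[4.0, 15.0, 19.0]]]
```

Because the kernel height is 1, the same weights are applied to every point independently, which is why the point axis n is untouched by these layers.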

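Before reading the code below, please read this blog post carefully and make sure you understand it:

"A Detailed Analysis of the PointNet Network Structure"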

T-Net

The corresponding file is "pointnet-master\models\transform_nets.py".

According to the architecture diagram, the input is B×n×3. For input_transform, the processing steps are:

Convolution: 64–128–1024

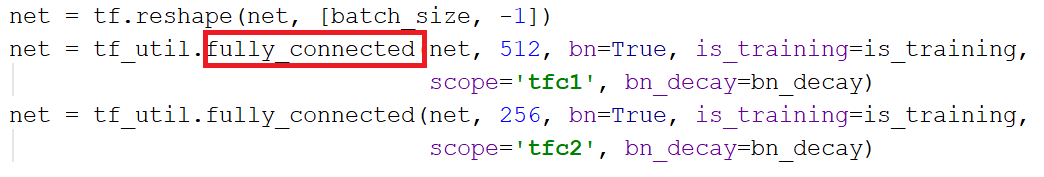

Fully connected: 1024–512–256–3*K (K=3 in the code)

Finally, reshape to obtain the transformation matrix

    import numpy as np
    import tensorflow as tf
    import tf_util  # helper module shipped with the PointNet repository

    def input_transform_net(point_cloud, is_training, bn_decay=None, K=3):
        """ Input (XYZ) Transform Net, input is BxNx3 gray image
            Return:
                Transformation matrix of size 3xK """
        # When reading, it helps to ignore the batch_size dimension and treat the
        # tensor as a 3D array [n, W, C]: n is the number of points (Height),
        # W is the Width, and C is the number of feature channels.
        batch_size = point_cloud.get_shape()[0].value  # batch size
        num_point = point_cloud.get_shape()[1].value   # number of points
        # Expand to a 4D tensor; -1 means the last axis, which holds the channels
        # input_image: [batch_size, num_point, 3, 1]
        input_image = tf.expand_dims(point_cloud, -1)
        # First convolution: 64 kernels of size [1,3]
        # Afterwards net is [batch_size, num_point, 1, 64]
        net = tf_util.conv2d(input_image, 64, [1,3],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='tconv1', bn_decay=bn_decay)
        # Second convolution: 128 kernels of size [1,1]
        # Afterwards net is [batch_size, num_point, 1, 128]
        net = tf_util.conv2d(net, 128, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='tconv2', bn_decay=bn_decay)
        # Third convolution: 1024 kernels of size [1,1]
        # Afterwards net is [batch_size, num_point, 1, 1024]
        net = tf_util.conv2d(net, 1024, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='tconv3', bn_decay=bn_decay)
        # Max pooling with a [num_point, 1] window keeps one value per channel
        # Afterwards net is [batch_size, 1, 1, 1024]
        net = tf_util.max_pool2d(net, [num_point,1],
                                 padding='VALID', scope='tmaxpool')
        # Flatten to [batch_size, 1024]
        net = tf.reshape(net, [batch_size, -1])
        net = tf_util.fully_connected(net, 512, bn=True, is_training=is_training,
                                      scope='tfc1', bn_decay=bn_decay)
        net = tf_util.fully_connected(net, 256, bn=True, is_training=is_training,
                                      scope='tfc2', bn_decay=bn_decay)
        # net is now [batch_size, 256], i.e. 256 features per cloud.
        # The fully connected step below maps it to a [batch_size, 3*K] transform.
        with tf.variable_scope('transform_XYZ') as sc:
            assert(K==3)
            weights = tf.get_variable('weights', [256, 3*K],
                                      initializer=tf.constant_initializer(0.0),
                                      dtype=tf.float32)
            biases = tf.get_variable('biases', [3*K],
                                     initializer=tf.constant_initializer(0.0),
                                     dtype=tf.float32)
            # Adding the flattened identity makes the initial transform the identity
            biases += tf.constant([1,0,0,0,1,0,0,0,1], dtype=tf.float32)
            transform = tf.matmul(net, weights)
            transform = tf.nn.bias_add(transform, biases)
        # Reshape the transform from [batch_size, 3*K] to [batch_size, 3, K]
        transform = tf.reshape(transform, [batch_size, 3, K])
        return transform
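One detail of the last layer deserves a closer look. The weights start at zero and the biases start at zero plus a flattened identity, so at initialization the predicted transform is the identity matrix regardless of the input. The sketch below reproduces that arithmetic in plain Python. The helper names and the four-element feature vector (standing in for the 256-dimensional one) are hypothetical and chosen only to keep the example small.

```python
def identity_bias(k):
    """Flattened k x k identity, the constant added to the zero-initialised biases."""
    return [1.0 if row == col else 0.0 for row in range(k) for col in range(k)]


def predict_transform(features, weights, biases, k):
    """features (length F) times weights (F x k*k) plus biases, reshaped to k x k."""
    flat = [
        sum(f * w_row[j] for f, w_row in zip(features, weights)) + biases[j]
        for j in range(k * k)
    ]
    return [flat[r * k:(r + 1) * k] for r in range(k)]


def apply_transform(points, transform):
    """Multiply an n x k point matrix by a k x k transform (points as row vectors)."""
    k = len(transform)
    return [
        [sum(p[i] * transform[i][j] for i in range(k)) for j in range(k)]
        for p in points
    ]


K = 3
features = [0.5, -1.2, 3.0, 0.7]               # stand-in for the 256-d feature vector
weights = [[0.0] * (K * K) for _ in features]  # zero-initialised, as in the code above
biases = identity_bias(K)                      # zeros + [1,0,0,0,1,0,0,0,1]

transform = predict_transform(features, weights, biases, K)
points = [[1.0, 2.0, 3.0], [-4.0, 0.5, 6.0]]

print(transform)  # [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(apply_transform(points, transform) == points)  # True
```

In other words, before any training step the T-Net leaves the point cloud untouched, and it only departs from the identity as the weights are updated.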

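feature_transform is similar to input_transform. Its processing steps are:

Convolution: 64–128–1024

Fully connected: 1024–512–256–K*K (K=64 in the code)

Finally, reshape to obtain the transformation matrix

I will not annotate this one. You can use the uncommented code below to check whether you understood the previous transform.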

    def feature_transform_net(inputs, is_training, bn_decay=None, K=64):
        """ Feature Transform Net, input is BxNx1xK
            Return:
                Transformation matrix of size KxK """
        batch_size = inputs.get_shape()[0].value
        num_point = inputs.get_shape()[1].value

        net = tf_util.conv2d(inputs, 64, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='tconv1', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 128, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='tconv2', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 1024, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='tconv3', bn_decay=bn_decay)
        net = tf_util.max_pool2d(net, [num_point,1],
                                 padding='VALID', scope='tmaxpool')

        net = tf.reshape(net, [batch_size, -1])
        net = tf_util.fully_connected(net, 512, bn=True, is_training=is_training,
                                      scope='tfc1', bn_decay=bn_decay)
        net = tf_util.fully_connected(net, 256, bn=True, is_training=is_training,
                                      scope='tfc2', bn_decay=bn_decay)

        with tf.variable_scope('transform_feat') as sc:
            weights = tf.get_variable('weights', [256, K*K],
                                      initializer=tf.constant_initializer(0.0),
                                      dtype=tf.float32)
            biases = tf.get_variable('biases', [K*K],
                                     initializer=tf.constant_initializer(0.0),
                                     dtype=tf.float32)
            biases += tf.constant(np.eye(K).flatten(), dtype=tf.float32)
            transform = tf.matmul(net, weights)
            transform = tf.nn.bias_add(transform, biases)

        transform = tf.reshape(transform, [batch_size, K, K])
        return transform
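If you want to check your own reading of feature_transform_net, the following sketch can serve as an answer key. It only does shape bookkeeping in plain Python and does not run TensorFlow. The function name `trace_feature_transform_net` and the sizes 32 and 1024 are assumptions made for the example.

```python
def trace_feature_transform_net(batch_size, num_point, K=64):
    """Return (scope, shape) pairs for each step of feature_transform_net."""
    steps = []
    shape = (batch_size, num_point, 1, K)
    steps.append(("inputs", shape))

    # Stride-1 VALID convolutions with [1,1] kernels keep H and W, change only C.
    for scope, channels in (("tconv1", 64), ("tconv2", 128), ("tconv3", 1024)):
        shape = shape[:3] + (channels,)
        steps.append((scope, shape))

    # A [num_point, 1] pooling window spans the whole point axis.
    shape = (shape[0], 1, shape[2], shape[3])
    steps.append(("tmaxpool", shape))

    # tf.reshape(net, [batch_size, -1]) flattens everything but the batch axis.
    shape = (shape[0], shape[1] * shape[2] * shape[3])
    steps.append(("reshape", shape))

    # Two fully connected layers, then the matmul with the [256, K*K] weights.
    for scope, units in (("tfc1", 512), ("tfc2", 256), ("transform_feat", K * K)):
        shape = (shape[0], units)
        steps.append((scope, shape))

    # Final reshape to one K x K matrix per cloud.
    steps.append(("transform", (batch_size, K, K)))
    return steps


# Hypothetical sizes: 32 clouds of 1024 points each.
for scope, shape in trace_feature_transform_net(32, 1024):
    print(f"{scope:15s} {shape}")
```

With these sizes the trace ends at (32, 4096) before the final reshape and (32, 64, 64) after it.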

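Classification network structure

The classification network is the main block at the top of the PointNet architecture diagram, labeled Classification Network. The corresponding file is "pointnet-master\models\pointnet_cls.py".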

    import tensorflow as tf
    import tf_util  # helper module shipped with the PointNet repository
    from transform_nets import input_transform_net, feature_transform_net

    def get_model(point_cloud, is_training, bn_decay=None):
        """ Classification PointNet, input is BxNx3, output Bx40 """
        batch_size = point_cloud.get_shape()[0].value  # batch size
        num_point = point_cloud.get_shape()[1].value   # number of points
        end_points = {}  # dictionary for auxiliary outputs

        with tf.variable_scope('transform_net1') as sc:
            # The first T-Net produces the input transform
            transform = input_transform_net(point_cloud, is_training, bn_decay, K=3)
        # Multiply the input by the input transform
        point_cloud_transformed = tf.matmul(point_cloud, transform)
        # Expand to a 4D tensor [batch_size, num_point, 3, 1]
        input_image = tf.expand_dims(point_cloud_transformed, -1)

        # 64 kernels of size [1,3] give 64 feature channels
        net = tf_util.conv2d(input_image, 64, [1,3],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv1', bn_decay=bn_decay)
        # 64 kernels of size [1,1] give 64 feature channels
        net = tf_util.conv2d(net, 64, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv2', bn_decay=bn_decay)
        # net is now [batch_size, num_point, 1, 64]

        # Second T-Net transform
        with tf.variable_scope('transform_net2') as sc:
            transform = feature_transform_net(net, is_training, bn_decay, K=64)
        # Store the 64x64 feature transform matrix under the key 'transform'
        end_points['transform'] = transform
        # squeeze removes a dimension of size 1. net is
        # [batch_size, num_point, 1, 64]; counting from 0, axis 2 is dropped,
        # giving [batch_size, num_point, 64], which is then multiplied
        # by the transform.
        net_transformed = tf.matmul(tf.squeeze(net, axis=[2]), transform)
        # Re-insert axis 2 to get a 4D tensor [batch_size, num_point, 1, 64]
        net_transformed = tf.expand_dims(net_transformed, [2])

        # Three convolutions, ending at [batch_size, num_point, 1, 1024]
        net = tf_util.conv2d(net_transformed, 64, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv3', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 128, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv4', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 1024, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv5', bn_decay=bn_decay)

        # Symmetric function: max pooling
        # The [num_point, 1] window keeps one value per feature channel
        # Afterwards net is [batch_size, 1, 1, 1024]
        net = tf_util.max_pool2d(net, [num_point,1],
                                 padding='VALID', scope='maxpool')

        # Flatten to [batch_size, 1024]
        net = tf.reshape(net, [batch_size, -1])
        # Three fully connected layers with two dropouts in between;
        # the last layer outputs scores for 40 classes
        net = tf_util.fully_connected(net, 512, bn=True, is_training=is_training,
                                      scope='fc1', bn_decay=bn_decay)
        net = tf_util.dropout(net, keep_prob=0.7, is_training=is_training,
                              scope='dp1')
        net = tf_util.fully_connected(net, 256, bn=True, is_training=is_training,
                                      scope='fc2', bn_decay=bn_decay)
        net = tf_util.dropout(net, keep_prob=0.7, is_training=is_training,
                              scope='dp2')
        net = tf_util.fully_connected(net, 40, activation_fn=None, scope='fc3')

        # Return the class scores and end_points (holding the feature transform)
        return net, end_points
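The code comment calls max pooling a symmetric function. The practical meaning is that the pooled global feature does not depend on the order in which the points are listed. Here is a minimal plain-Python sketch of that property. The function name `global_max_pool` and the toy sizes (16 points, 8 channels instead of n points and 1024 channels) are assumptions for illustration.

```python
import random


def global_max_pool(features):
    """Column-wise maximum over an n x C list of per-point features."""
    return [max(column) for column in zip(*features)]


random.seed(0)  # fixed seed so the run is repeatable

# 16 points with 8 feature channels each, filled with random values.
features = [[random.random() for _ in range(8)] for _ in range(16)]

# The same points in a different order.
shuffled = features[:]
random.shuffle(shuffled)

print(len(global_max_pool(features)))                           # 8
print(global_max_pool(features) == global_max_pool(shuffled))   # True
```

Each channel keeps only its largest response across all points, so reordering the rows cannot change the result.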

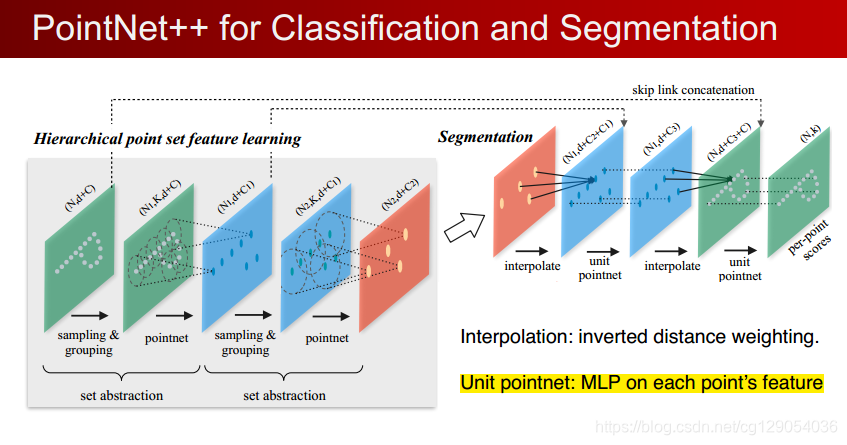

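Segmentation network structure

This is the branch that goes downward from global_feature in the original PointNet architecture diagram, labeled Segmentation Network. The corresponding file is "pointnet-master\models\pointnet_seg.py".

Since its structure is similar to the classification network, it is only briefly annotated here.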

    import tensorflow as tf
    import tf_util  # helper module shipped with the PointNet repository
    from transform_nets import input_transform_net, feature_transform_net

    def get_model(point_cloud, is_training, bn_decay=None):
        """ Segmentation PointNet, input is BxNx3, output BxNx50 """
        batch_size = point_cloud.get_shape()[0].value
        num_point = point_cloud.get_shape()[1].value
        end_points = {}

        # First T-Net transform, then expand to a 4D tensor
        with tf.variable_scope('transform_net1') as sc:
            transform = input_transform_net(point_cloud, is_training, bn_decay, K=3)
        point_cloud_transformed = tf.matmul(point_cloud, transform)
        input_image = tf.expand_dims(point_cloud_transformed, -1)

        # Two convolutions, giving [batch_size, num_point, 1, 64]
        net = tf_util.conv2d(input_image, 64, [1,3],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv1', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 64, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv2', bn_decay=bn_decay)

        # Second T-Net transform; watch the dimension changes here
        with tf.variable_scope('transform_net2') as sc:
            transform = feature_transform_net(net, is_training, bn_decay, K=64)
        end_points['transform'] = transform
        net_transformed = tf.matmul(tf.squeeze(net, axis=[2]), transform)
        point_feat = tf.expand_dims(net_transformed, [2])
        print(point_feat)

        # Three convolutions and one max pooling,
        # ending at [batch_size, 1, 1, 1024]
        net = tf_util.conv2d(point_feat, 64, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv3', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 128, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv4', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 1024, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv5', bn_decay=bn_decay)
        global_feat = tf_util.max_pool2d(net, [num_point,1],
                                         padding='VALID', scope='maxpool')
        print(global_feat)

        # Before tile: [batch_size, 1, 1, 1024]
        # tf.tile repeats the tensor num_point times along axis 1
        # After tile: [batch_size, num_point, 1, 1024]
        global_feat_expand = tf.tile(global_feat, [1, num_point, 1, 1])
        # Concatenate along the channel axis to get the n x 1088 matrix from the
        # architecture diagram (n is num_point; batch_size is ignored here).
        # Note: this is the argument order from before TensorFlow 1.0; on
        # TensorFlow 1.0 and later use tf.concat([point_feat, global_feat_expand], 3).
        concat_feat = tf.concat(3, [point_feat, global_feat_expand])
        print(concat_feat)

        # Four convolutions, ending at 128 channels: [batch_size, num_point, 1, 128]
        net = tf_util.conv2d(concat_feat, 512, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv6', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 256, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv7', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 128, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv8', bn_decay=bn_decay)
        net = tf_util.conv2d(net, 128, [1,1],
                             padding='VALID', stride=[1,1],
                             bn=True, is_training=is_training,
                             scope='conv9', bn_decay=bn_decay)

        # A final [1,1] convolution (not a pooling step) gives 50 output channels
        # [batch_size, num_point, 1, 50]
        net = tf_util.conv2d(net, 50, [1,1],
                             padding='VALID', stride=[1,1], activation_fn=None,
                             scope='conv10')
        # squeeze removes axis 2, which has size 1
        net = tf.squeeze(net, [2])  # BxNxC

        return net, end_points
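The tile-and-concat step is what turns one global descriptor into per-point input for segmentation. The sketch below shows the same idea for a single cloud using plain Python lists. The function name `tile_and_concat` and the toy values are hypothetical; only the widths 64, 1024 and 1088 come from the code above.

```python
def tile_and_concat(point_feat, global_feat):
    """Append the same global feature vector to every per-point feature row."""
    return [row + global_feat for row in point_feat]


num_point = 5                                              # toy value
point_feat = [[float(i)] * 64 for i in range(num_point)]   # n x 64 local features
global_feat = [9.0] * 1024                                 # 1 x 1024 global feature

concat_feat = tile_and_concat(point_feat, global_feat)

print(len(concat_feat), len(concat_feat[0]))               # 5 1088
print(concat_feat[2][:64] == point_feat[2])                # True: local part kept
print(concat_feat[2][64:] == global_feat)                  # True: global part shared
```

Every point therefore sees its own 64 local features plus the same 1024 global features, which is where the 1088 in the architecture diagram comes from.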

That is all for this code walkthrough. If you spot any mistakes, please leave a comment to let me know.