Summary of the pitfalls I hit while implementing multi-angle template matching

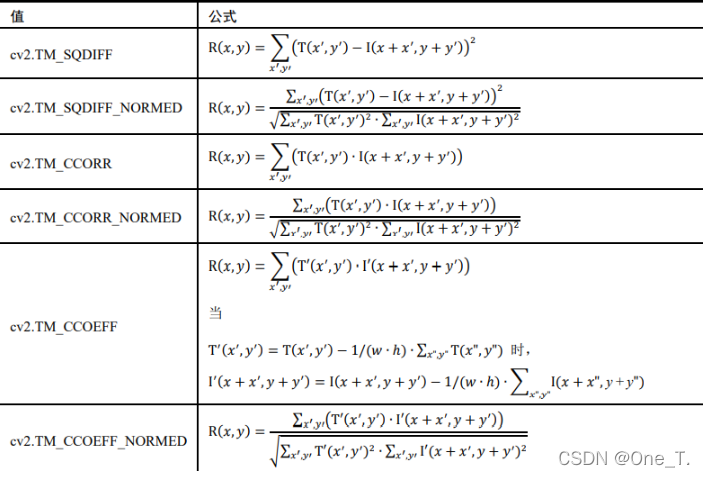

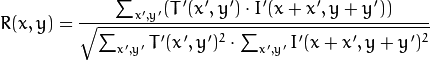

1. Multi-angle matching requires a mask. First, here is the source of OpenCV's built-in matchTemplateMask:

```cpp
static void matchTemplateMask( InputArray _img, InputArray _templ, OutputArray _result, int method, InputArray _mask )
{
    CV_Assert(_mask.depth() == CV_8U || _mask.depth() == CV_32F);
    CV_Assert(_mask.channels() == _templ.channels() || _mask.channels() == 1);
    CV_Assert(_templ.size() == _mask.size());
    CV_Assert(_img.size().height >= _templ.size().height &&
              _img.size().width >= _templ.size().width);

    Mat img = _img.getMat(), templ = _templ.getMat(), mask = _mask.getMat();

    if (img.depth() == CV_8U)
    {
        img.convertTo(img, CV_32F);
    }
    if (templ.depth() == CV_8U)
    {
        templ.convertTo(templ, CV_32F);
    }
    if (mask.depth() == CV_8U)
    {
        // To keep compatibility to other masks in OpenCV: CV_8U masks are binary masks
        threshold(mask, mask, 0/*threshold*/, 1.0/*maxVal*/, THRESH_BINARY);
        mask.convertTo(mask, CV_32F);
    }

    Size corrSize(img.cols - templ.cols + 1, img.rows - templ.rows + 1);
    _result.create(corrSize, CV_32F);
    Mat result = _result.getMat();

    // If mask has only one channel, we repeat it for every image/template channel
    if (templ.type() != mask.type())
    {
        // Assertions above ensured, that depth is the same and only number of channel differ
        std::vector<Mat> maskChannels(templ.channels(), mask);
        merge(maskChannels.data(), templ.channels(), mask);
    }

    if (method == CV_TM_SQDIFF || method == CV_TM_SQDIFF_NORMED)
    {
        Mat temp_result(corrSize, CV_32F);
        Mat img2 = img.mul(img);
        Mat mask2 = mask.mul(mask);
        // If the mul() is ever unnested, declare MatExpr, *not* Mat, to be more efficient.
        // NORM_L2SQR calculates sum of squares
        double templ2_mask2_sum = norm(templ.mul(mask), NORM_L2SQR);
        crossCorr(img2, mask2, temp_result, Point(0,0), 0, 0);
        crossCorr(img, templ.mul(mask2), result, Point(0,0), 0, 0);
        // result and temp_result should not be switched, because temp_result is potentially needed
        // for normalization.
        result = -2 * result + temp_result + templ2_mask2_sum;

        if (method == CV_TM_SQDIFF_NORMED)
        {
            sqrt(templ2_mask2_sum * temp_result, temp_result);
            result /= temp_result;
        }
    }
    else if (method == CV_TM_CCORR || method == CV_TM_CCORR_NORMED)
    {
        // If the mul() is ever unnested, declare MatExpr, *not* Mat, to be more efficient.
        Mat templ_mask2 = templ.mul(mask.mul(mask));
        crossCorr(img, templ_mask2, result, Point(0,0), 0, 0);

        if (method == CV_TM_CCORR_NORMED)
        {
            Mat temp_result(corrSize, CV_32F);
            Mat img2 = img.mul(img);
            Mat mask2 = mask.mul(mask);
            // NORM_L2SQR calculates sum of squares
            double templ2_mask2_sum = norm(templ.mul(mask), NORM_L2SQR);
            crossCorr( img2, mask2, temp_result, Point(0,0), 0, 0 );
            sqrt(templ2_mask2_sum * temp_result, temp_result);
            result /= temp_result;
        }
    }
    else if (method == CV_TM_CCOEFF || method == CV_TM_CCOEFF_NORMED)
    {
        // Do mul() inline or declare MatExpr where possible, *not* Mat, to be more efficient.

        Scalar mask_sum = sum(mask);
        // T' * M where T' = M * (T - 1/sum(M)*sum(M*T))
        Mat templx_mask = mask.mul(mask.mul(templ - sum(mask.mul(templ)).div(mask_sum)));
        Mat img_mask_corr(corrSize, img.type()); // Needs separate channels

        // CCorr(I, T'*M)
        crossCorr(img, templx_mask, result, Point(0, 0), 0, 0);
        // CCorr(I, M)
        crossCorr(img, mask, img_mask_corr, Point(0, 0), 0, 0);

        // CCorr(I', T') = CCorr(I, T'*M) - sum(T'*M)/sum(M)*CCorr(I, M)
        // It does not matter what to use Mat/MatExpr, it should be evaluated to perform assign subtraction
        Mat temp_res = img_mask_corr.mul(sum(templx_mask).div(mask_sum));
        if (img.channels() == 1)
        {
            result -= temp_res;
        }
        else
        {
            // Sum channels of expression
            temp_res = temp_res.reshape(1, result.rows * result.cols);
            // channels are now columns
            reduce(temp_res, temp_res, 1, REDUCE_SUM);
            // transform back, but now with only one channel
            result -= temp_res.reshape(1, result.rows);
        }
        if (method == CV_TM_CCOEFF_NORMED)
        {
            // norm(T')
            double norm_templx = norm(mask.mul(templ - sum(mask.mul(templ)).div(mask_sum)),
                                      NORM_L2);
            // norm(I') = sqrt{ CCorr(I^2, M^2) - 2*CCorr(I, M^2)/sum(M)*CCorr(I, M)
            //                  + sum(M^2)*CCorr(I, M)^2/sum(M)^2 }
            //          = sqrt{ CCorr(I^2, M^2)
            //                  + CCorr(I, M)/sum(M)*{ sum(M^2) / sum(M) * CCorr(I,M)
            //                  - 2 * CCorr(I, M^2) } }
            Mat norm_imgx(corrSize, CV_32F);
            Mat img2 = img.mul(img);
            Mat mask2 = mask.mul(mask);
            Scalar mask2_sum = sum(mask2);
            Mat img_mask2_corr(corrSize, img.type());
            crossCorr(img2, mask2, norm_imgx, Point(0,0), 0, 0);
            crossCorr(img, mask2, img_mask2_corr, Point(0,0), 0, 0);
            temp_res = img_mask_corr.mul(Scalar(1.0, 1.0, 1.0, 1.0).div(mask_sum))
                           .mul(img_mask_corr.mul(mask2_sum.div(mask_sum)) - 2 * img_mask2_corr);
            if (img.channels() == 1)
            {
                norm_imgx += temp_res;
            }
            else
            {
                // Sum channels of expression
                temp_res = temp_res.reshape(1, result.rows*result.cols);
                // channels are now columns
                // reduce sums columns (= channels)
                reduce(temp_res, temp_res, 1, REDUCE_SUM);
                // transform back, but now with only one channel
                norm_imgx += temp_res.reshape(1, result.rows);
            }
            sqrt(norm_imgx, norm_imgx);
            result /= norm_imgx * norm_templx;
        }
    }
}
```

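As you can see, the CV_TM_CCOEFF_NORMED branch computes four DFT-based correlations. Three of them correlate the target image (or its square) with the mask, to get the sum and the sum of squares of the target image inside the template region. The last one, CCorr(I, mask2), can be dropped: because the mask is binary, mask2 equals mask, so it is identical to CCorr(I, mask).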

```cpp
// CCorr(I, T'*M)
crossCorr(img, templx_mask, result, Point(0, 0), 0, 0);
// CCorr(I, M)
crossCorr(img, mask, img_mask_corr, Point(0, 0), 0, 0);
crossCorr(img2, mask2, norm_imgx, Point(0,0), 0, 0);
crossCorr(img, mask2, img_mask2_corr, Point(0,0), 0, 0);
```
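For a binary mask, the CV_TM_CCOEFF_NORMED branch reduces to three correlations. The derivation below only substitutes $M^2 = M$ into the formulas in the source comments, writing $n = \sum M$, $S_1 = \mathrm{CCorr}(I, M)$ and $S_2 = \mathrm{CCorr}(I^2, M)$:

$$
\begin{aligned}
M^2 = M \;&\Rightarrow\; \mathrm{CCorr}(I, M^2) = S_1,\quad \mathrm{CCorr}(I^2, M^2) = S_2,\quad \textstyle\sum M^2 = n\\
T' = M\Bigl(T - \tfrac{1}{n}\textstyle\sum M T\Bigr) \;&\Rightarrow\; T'M = T',\quad \textstyle\sum T' = 0\\
\mathrm{CCorr}(I', T') &= \mathrm{CCorr}(I, T'M) - \frac{\sum T'M}{n}\,S_1 = \mathrm{CCorr}(I, T')\\
\lVert I' \rVert^2 &= S_2 + \frac{S_1}{n}\Bigl(\frac{\sum M^2}{n}\,S_1 - 2S_1\Bigr) = S_2 - \frac{S_1^2}{n}\\
R &= \frac{\mathrm{CCorr}(I, T')}{\lVert T' \rVert\,\sqrt{S_2 - S_1^2/n}}
\end{aligned}
$$

So only $\mathrm{CCorr}(I, T')$, $\mathrm{CCorr}(I, M)$ and $\mathrm{CCorr}(I^2, M)$ depend on the position; $\lVert T' \rVert$ is computed once per rotated template.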

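So the expensive part is these three correlations, and they can be accelerated with SIMD. OpenCV already wraps SIMD instructions; for how to use them, see this blogger's post "OpenCV 4.x provides powerful universal vector instructions (also available in 3.4.x and later)".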
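As a plain-C++ sketch of what a SIMD version has to compute, here are the three masked sums for a single candidate position, fused into one pass. `maskedNccAt` is a hypothetical name, not the author's code; it assumes a binary mask and a precomputed $T'$ and $\lVert T'\rVert$. The inner loop is the part one would vectorise (for example with OpenCV's universal intrinsics); it is written scalar here, with double accumulators, for clarity.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Masked NCC at one candidate position (x, y), computed directly instead of via DFT.
// Hypothetical helper. Assumes: row-major float image of width imgW; binary mask
// (0/1) of size tw x th; tplPrime = M * (T - masked mean of T), zero outside the mask;
// tplNorm = ||tplPrime||, precomputed once per rotated template.
float maskedNccAt(const std::vector<float>& img, int imgW,
                  const std::vector<float>& tplPrime, const std::vector<float>& mask,
                  int tw, int th, float tplNorm, int x, int y) {
    assert(x >= 0 && y >= 0 && x + tw <= imgW);
    assert(static_cast<std::size_t>(y + th) * imgW <= img.size());
    double sIT = 0.0, s1 = 0.0, s2 = 0.0, n = 0.0;
    for (int v = 0; v < th; ++v) {
        const float* row = &img[static_cast<std::size_t>(y + v) * imgW + x];
        const float* t = &tplPrime[static_cast<std::size_t>(v) * tw];
        const float* m = &mask[static_cast<std::size_t>(v) * tw];
        // This inner loop is what a SIMD version would vectorise.
        for (int u = 0; u < tw; ++u) {
            const double iv = row[u];
            sIT += iv * t[u];       // CCorr(I, T')
            s1 += iv * m[u];        // CCorr(I, M)
            s2 += iv * iv * m[u];   // CCorr(I^2, M)
            n += m[u];              // sum(M); constant, could be precomputed
        }
    }
    if (n <= 0.0 || tplNorm <= 0.0f) return 0.0f;
    const double var = s2 - s1 * s1 / n;  // ||I'||^2 for a binary mask
    if (var <= 1e-12) return 0.0f;         // flat image patch: score undefined
    return static_cast<float>(sIT / (tplNorm * std::sqrt(var)));
}
```

Because NCC is invariant to gain and offset, a patch equal to `2 * T + 5` scores 1 and a patch equal to `-T` scores -1.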

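In my tests it is faster to use crossCorr (DFT-based) for the global search at the top (coarsest) pyramid level, and SIMD for the local correlations at the lower levels.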
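A sketch of that coarse-to-fine split, under stated assumptions: a candidate from the coarser level is scaled by 2 and re-scored only in a small window with any direct scorer (for example a SIMD masked NCC). `Hit` and `refineLocally` are hypothetical names, and only the position is refined here; a full implementation would presumably also search a few angles around the coarse angle.

```cpp
#include <cassert>
#include <functional>
#include <limits>

struct Hit { int x; int y; float score; };

// Hypothetical coarse-to-fine step: (cx, cy) is a candidate from pyramid level k+1.
// At level k the same spot is (2*cx, 2*cy); only a (2r+1)^2 window around it is
// re-scored with a direct scorer, which is cheaper than a full DFT correlation.
// maxX / maxY are the last valid indices of the level-k result map.
Hit refineLocally(int cx, int cy, int radius, int maxX, int maxY,
                  const std::function<float(int, int)>& scoreAt) {
    assert(radius >= 0);
    const int bx = 2 * cx, by = 2 * cy;
    Hit best{bx, by, std::numeric_limits<float>::lowest()};
    for (int y = by - radius; y <= by + radius; ++y) {
        for (int x = bx - radius; x <= bx + radius; ++x) {
            if (x < 0 || y < 0 || x > maxX || y > maxY) continue;  // stay inside the map
            const float s = scoreAt(x, y);
            if (s > best.score) best = {x, y, s};
        }
    }
    return best;
}
```

The window only has (2r+1)^2 positions, so the cost per candidate is small and independent of the image size, which is why a direct, vectorised score can beat a full-image DFT at these levels.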

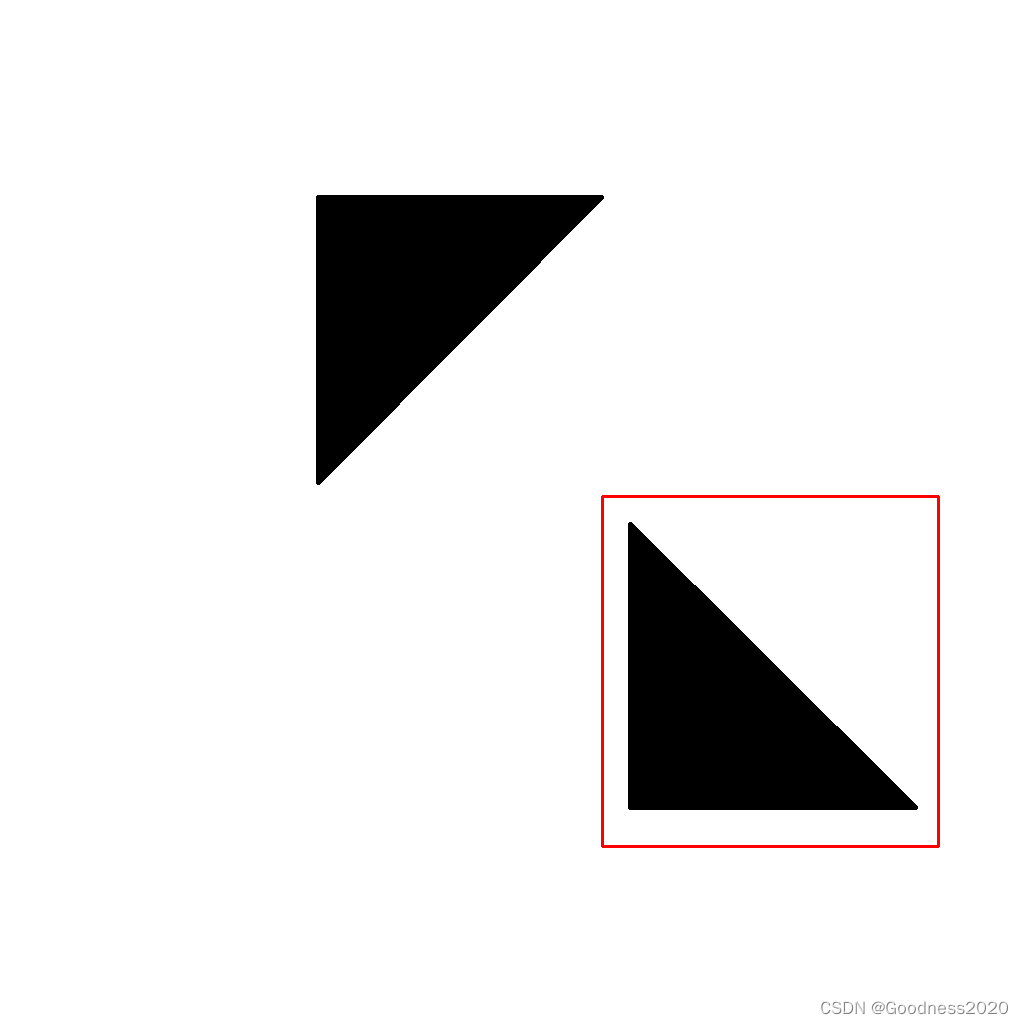

2. Rotating the template

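- Create a paddingImg whose side length is the template's diagonal length + 1, then copy the template into the centre of paddingImg. This way the rotated template never goes out of bounds. The code is below.

- Also, the rotation is best done with INTER_NEAREST interpolation. I tried several other modes, and with them matching at the higher (coarser) pyramid levels sometimes failed to find the target.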

```cpp
void NccMatch::RotateImg(Mat mImg, int nAngle, Mat& outImg, Mat& mask, RotatedRect* ptrMinRect, Point2d pRC)
{
    // Work in float so the -1 / -2 sentinels below can coexist with real pixel values
    if (mImg.depth() != CV_32F) { mImg.convertTo(mImg, CV_32F); }

    // Square canvas whose side is the template diagonal + 1, so no rotation crops the template
    int nDiagonal = sqrt(pow(mImg.cols, 2) + pow(mImg.rows, 2)) + 1;
    Mat paddingImg = -1 * Mat::ones(Size(nDiagonal, nDiagonal), mImg.type());   // padding = -1
    Rect roi(Point((nDiagonal - mImg.cols) * 0.5, (nDiagonal - mImg.rows) * 0.5),
             Size(mImg.cols, mImg.rows));
    mImg.copyTo(paddingImg(roi));

    // Rotate about the canvas centre; pixels mapped from outside the canvas become -2
    Mat M = getRotationMatrix2D(Point2d(paddingImg.cols * 0.5, paddingImg.rows * 0.5), nAngle, 1.0);
    warpAffine(paddingImg, outImg, M, paddingImg.size(), INTER_NEAREST, BORDER_CONSTANT, Scalar::all(-2));

    // Mask: 1 where the value came from the template (> -1), 0 for padding and border
    mask = outImg.clone();
    threshold(mask, mask, -1, 1, THRESH_BINARY);

    //RotatedRect rRect(Point2d(paddingImg.cols * 0.5, paddingImg.rows * 0.5), Size2f(mImg.cols, mImg.rows), -nAngle);
    if (ptrMinRect)
    {
        ptrMinRect->center = Point2d(paddingImg.cols * 0.5, paddingImg.rows * 0.5);
        ptrMinRect->size = Size2f(mImg.cols, mImg.rows);
        ptrMinRect->angle = -nAngle;
    }
    return;
}
```
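The padding and the -1 / -2 sentinels are what make the mask work: after `threshold(mask, mask, -1, 1, THRESH_BINARY)`, only values strictly greater than -1, i.e. real template pixels (including black ones equal to 0), end up in the mask. Below is a dependency-free sketch of the same idea using nearest-neighbour inverse mapping. It is illustrative only: `rotateWithSentinel` is a hypothetical name, it rotates about the canvas pixel centre, and its angle sign convention is not matched to `getRotationMatrix2D`. With nearest-neighbour sampling every output value is either a template pixel or a sentinel, never a blend of the two (bilinear interpolation would mix template values with -1 at the edges).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct RotatedTemplate {
    int side = 0;                     // D = int(diagonal) + 1, as in RotateImg
    std::vector<float> pixels;        // D*D: template values, -1 (padding) or -2 (outside)
    std::vector<unsigned char> mask;  // D*D: 1 where the value came from the template
};

// Hypothetical dependency-free version of the padding + sentinel idea.
// Template values are assumed to be >= 0.
RotatedTemplate rotateWithSentinel(const std::vector<float>& tpl, int w, int h,
                                   double angleDeg) {
    assert(w > 0 && h > 0 && tpl.size() == static_cast<std::size_t>(w) * h);
    const int D = static_cast<int>(std::sqrt(double(w) * w + double(h) * h)) + 1;

    // 1) Canvas filled with -1, template copied into the middle.
    std::vector<float> pad(static_cast<std::size_t>(D) * D, -1.0f);
    const int ox = (D - w) / 2, oy = (D - h) / 2;
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            pad[(y + oy) * D + (x + ox)] = tpl[y * w + x];

    // 2) Inverse mapping + nearest neighbour. Samples from outside the canvas stay -2.
    RotatedTemplate out;
    out.side = D;
    out.pixels.assign(pad.size(), -2.0f);
    out.mask.assign(pad.size(), 0);
    const double a = angleDeg * std::acos(-1.0) / 180.0;
    const double c = (D - 1) / 2.0, ca = std::cos(a), sa = std::sin(a);
    for (int y = 0; y < D; ++y) {
        for (int x = 0; x < D; ++x) {
            const double dx = x - c, dy = y - c;
            const long sx = std::lround(c + ca * dx + sa * dy);
            const long sy = std::lround(c - sa * dx + ca * dy);
            if (sx < 0 || sy < 0 || sx >= D || sy >= D) continue;
            const float v = pad[sy * D + sx];
            out.pixels[y * D + x] = v;
            // 3) Same rule as threshold(mask, mask, -1, 1, THRESH_BINARY): keep v > -1,
            //    so template pixels equal to 0 stay inside the mask.
            out.mask[y * D + x] = v > -1.0f ? 1 : 0;
        }
    }
    return out;
}
```

At 0° and 90° the mapping is exact on the pixel grid, so the mask contains exactly w*h pixels; at other angles the count varies slightly because of nearest-neighbour sampling.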

3. Matching

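Make sure to pad the target image so that the template can slide over every pixel. Otherwise you will find that some images simply refuse to match, no matter what you try.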
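A minimal sketch of the padding arithmetic, assuming the template being slid is the D x D rotated canvas from section 2 and that the goal is to let the template centre sit on every target pixel. `Padding`, `paddingForFullCoverage` and `validSize` are hypothetical names; with OpenCV the four values would be passed to `cv::copyMakeBorder`. After padding, result entry (x, y) is the score with the template centre (offset ((tw-1)/2, (th-1)/2)) on target pixel (x, y). For example, a 214x98 template gives D = int(sqrt(214² + 98²)) + 1 = 236.

```cpp
#include <cassert>

struct Padding { int left, right, top, bottom; };

// Hypothetical helper: border sizes that let the centre of a tw x th template
// (e.g. the D x D rotated canvas) land on every target pixel.
Padding paddingForFullCoverage(int tw, int th) {
    assert(tw > 0 && th > 0);
    const int left = (tw - 1) / 2, top = (th - 1) / 2;  // template centre offset
    return {left, tw - 1 - left, top, th - 1 - top};
}

// Size of a "valid" sliding-window result along one axis, as with cv::matchTemplate.
inline int validSize(int imageSize, int templSize) { return imageSize - templSize + 1; }
```

Without padding, the same 236 x 236 canvas on a 646x492 target yields only 411x257 positions, so an instance whose rotated canvas sticks out of the image can never be evaluated.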

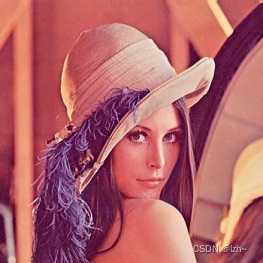

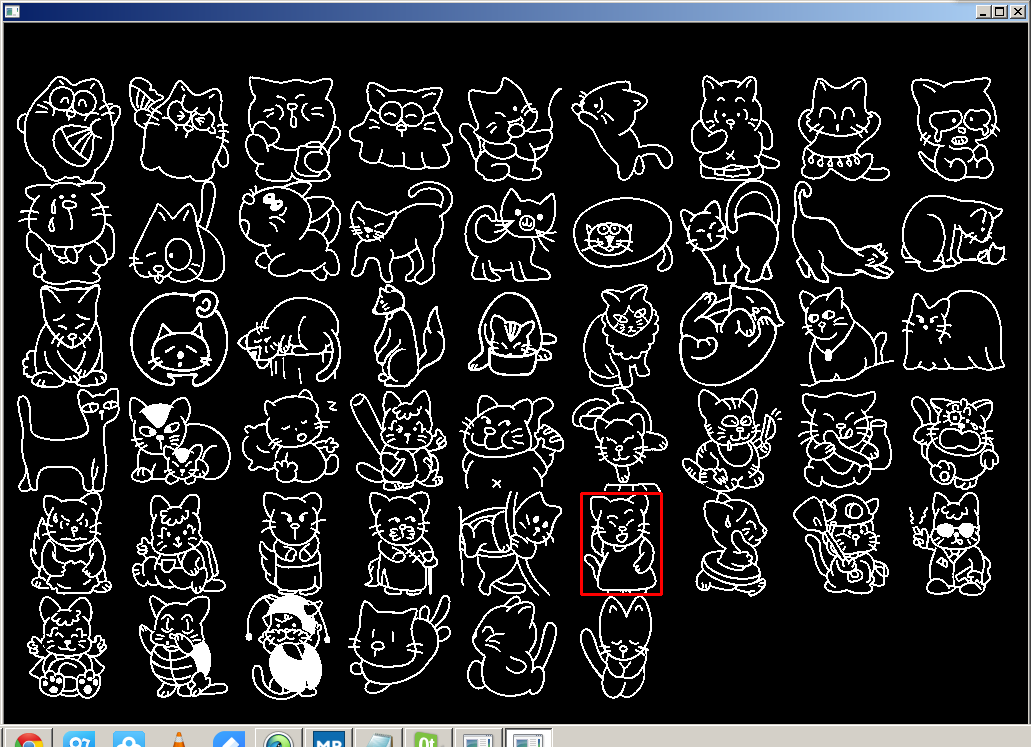

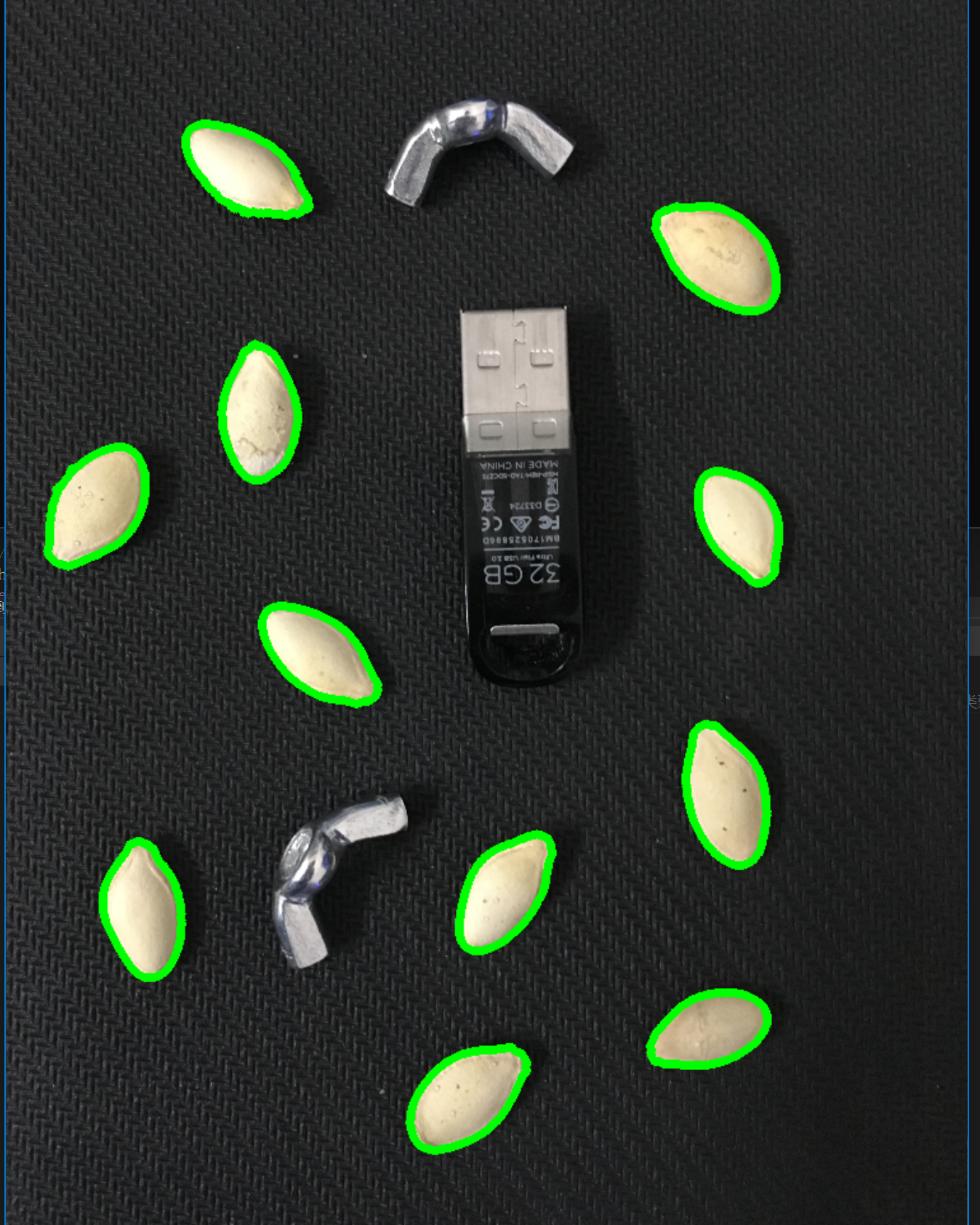

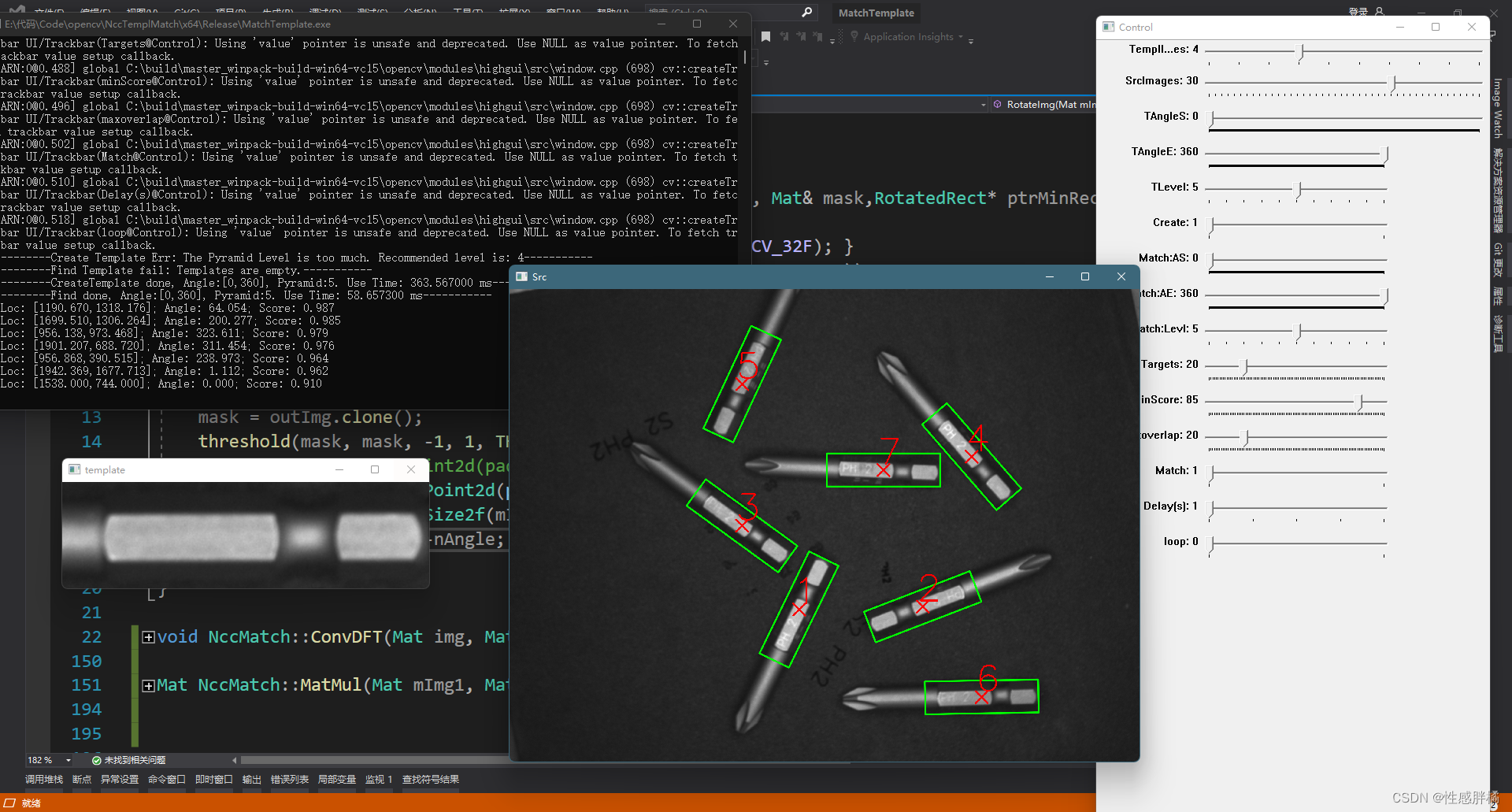

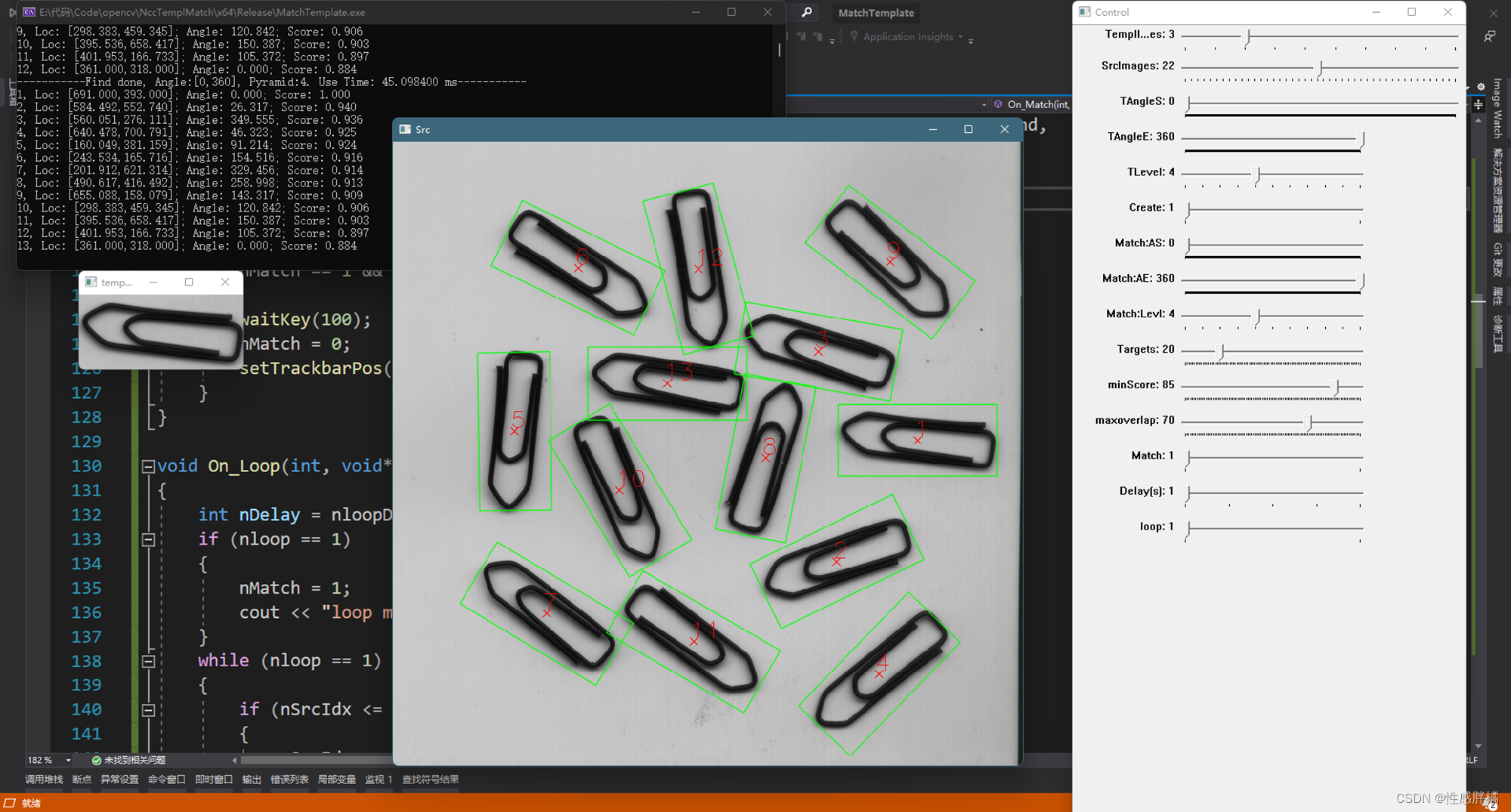

Finally, here are the results. Some of the test images come from this expert's repository: https://github.com/DennisLiu1993/Fastest_Image_Pattern_Matching

The angle step and the subpixel computation are also based on his work.
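Both pieces can be sketched in a few lines. The angle step below is an assumed heuristic (a point at distance max(w, h) from the rotation centre moves roughly 2 px per step), not verified against the referenced repository, and the subpixel part uses a simple 1-D parabolic fit, which may differ from what that repository does. Function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Assumed heuristic: step = atan(2 / max(w, h)), in degrees.
double angleStepDeg(int w, int h) {
    assert(w > 0 && h > 0);
    const double kRadToDeg = 180.0 / std::acos(-1.0);
    return std::atan(2.0 / std::max(w, h)) * kRadToDeg;
}

// 1-D parabolic peak interpolation from three neighbouring scores.
// Fits y = a*t^2 + b*t + c through t = -1, 0, 1 and returns the vertex -b / (2a).
// The result lies in [-0.5, 0.5] when the centre sample is the discrete maximum.
// Apply once along x and once along y (and optionally along the angle axis).
double parabolicOffset(double left, double centre, double right) {
    const double denom = left - 2.0 * centre + right;
    if (std::fabs(denom) < 1e-12) return 0.0;  // flat: keep the integer peak
    return 0.5 * (left - right) / denom;
}
```

With this heuristic the step is coarse where the template is small: about 6° for a 19x19 template at the top pyramid level, versus about 0.76° for a 149x150 template at full resolution.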

| Target image | Template | Angle range | Pyramid levels | Time |
|---|---|---|---|---|
| 2592x1944 | 149x150 | [0, 360] | not stated | ~34 ms |
| 646x492 | 214x98 | [0, 360] | 4 | ~14 ms |
| 2592x1944 | 466x135 | [0, 360] | 5 | ~58 ms |
| 830x822 | 209x95 | [0, 360] | 4 | ~45 ms |

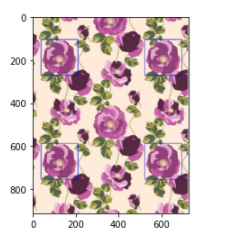

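Update:

Added serialization of template files, packaged the NCC match functionality as a DLL, and built a simple WPF demo, shown below:

The demo shows how to call the DLL interface. Download it here: Demo download (the NCC match source code is not included).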