sogou中文语料库下载地址是:https://download.csdn.net/download/kinas2u/1277550

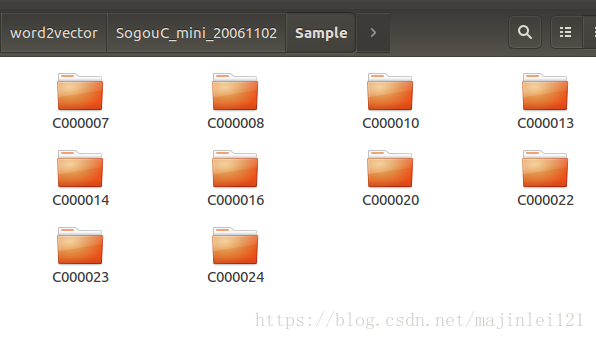

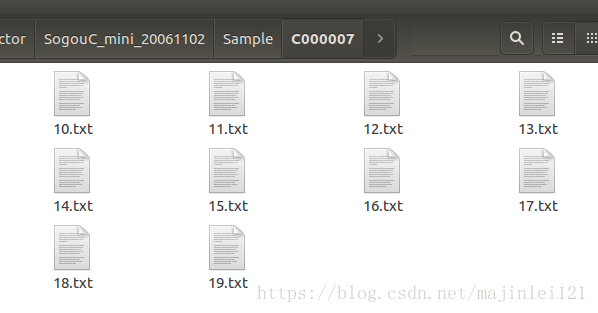

下载下来的文件包含了很多子文件夹,每个子文件夹下又包含了很多txt语料文件,我想把他们都整合到一个txt中(./SogouC_mini_20061102/Sample),并且输出的是已经分好词的txt文件

下面是处理程序

# -*- coding: utf-8 -*-

#!/usr/bin/env python

import sys

reload(sys)

sys.setdefaultencoding('utf8')import pandas as pd

import numpy as np

import lightgbm as lgb

from sklearn.model_selection import StratifiedKFold

from sklearn.metrics import f1_score

from gensim.models import word2vec

import logging, jieba

import os, ioif os.path.exists('sogou_seg.txt'):os.remove('sogou_seg.txt')stop_words_file = "./SogouC_mini_20061102/stop_words.txt"

stop_words = list()

with io.open(stop_words_file, 'r', encoding="gb18030") as stop_words_file_object: contents = stop_words_file_object.readlines() for line in contents: line = line.strip() stop_words.append(line)d_s = []

data_dir = './SogouC_mini_20061102/Sample'

#data_dir = './train'

for folder in os.listdir(data_dir):d = os.path.join(data_dir, folder) if not os.path.isdir(d):continued_s.append(d) data_files = []

for folder_cls in d_s:txt_files = os.listdir(folder_cls)for txt_file in txt_files:data_files.append(os.path.join(folder_cls,txt_file))for data_file in data_files:with io.open(data_file, 'r', encoding='gb18030') as content:for line in content:seg_list = list(jieba.cut(line))out_str = ''for word in seg_list:if word not in stop_words:if word.strip() != "":word = ''.join(word)out_str += wordout_str += ' 'with io.open('sogou_seg.txt', 'a', encoding='utf-8') as output:output.write(unicode(out_str))output.close()

程序中中文语料停用词(stop_words.txt)下载地址为https://download.csdn.net/download/majinlei121/10733352

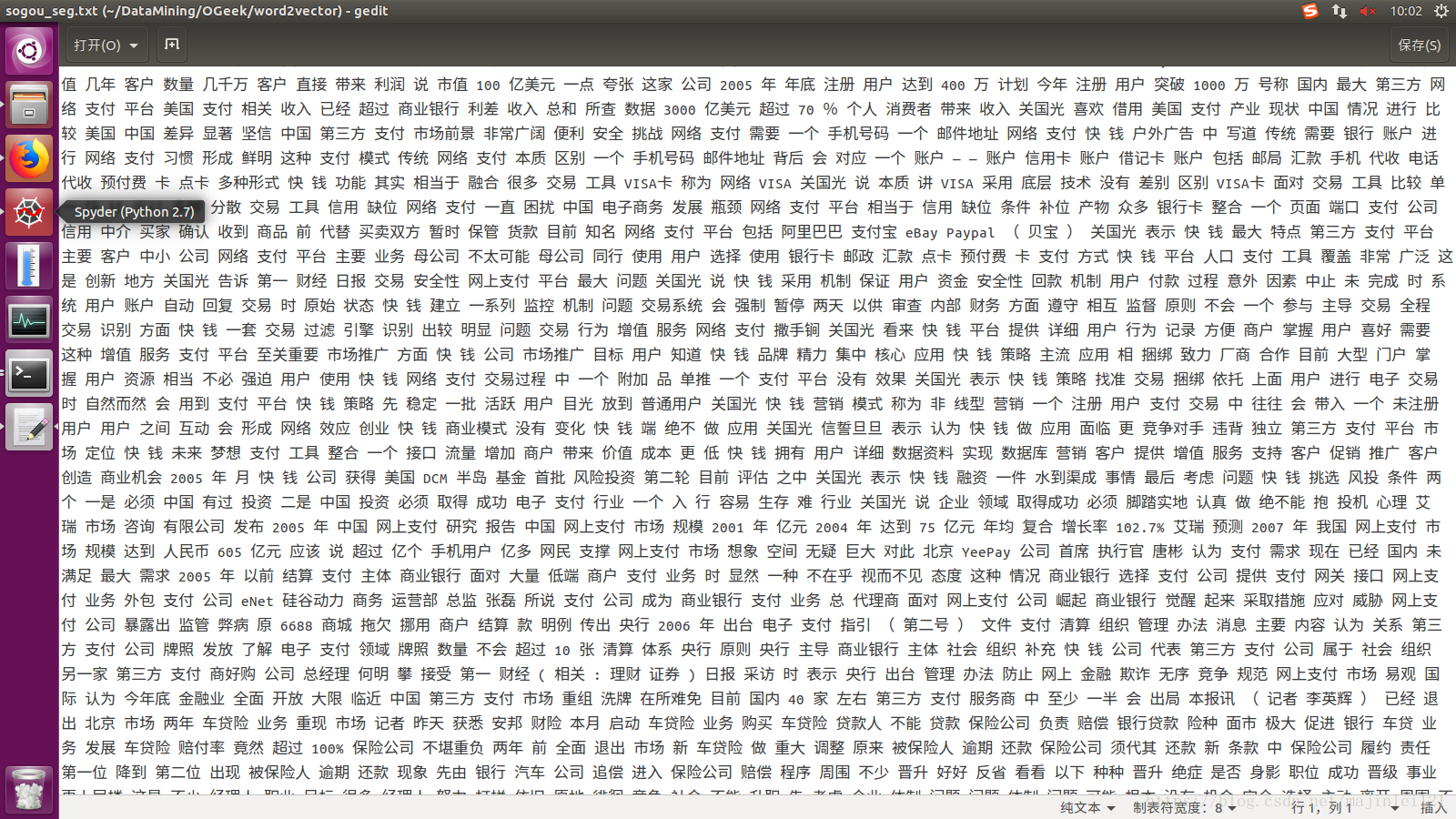

输出文件为sogou_seg.txt(大约309K),打开样式如下