Zuul 2 Thread Model

Reposted from: https://www.jianshu.com/p/cb413fec1632

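Compared with Zuul 1, Zuul 2 moves from a synchronous to an asynchronous model. There is no synchronous blocking, everything is programmed on an event-driven model, and the thread model becomes simpler as a result.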

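As a gateway, Zuul accepts requests from clients, which makes it a server. It also has to establish connections to backend services and forward requests to them, which makes it a client. Below we look at how Zuul does both with a single thread pool, i.e. the server side and the client side share the same thread pool. This is easy to do with Netty, but rather than take it on faith, let's see how Zuul actually implements it.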

Thread pool initialization

The thread counts are set as follows:

```java
public DefaultEventLoopConfig() {
    eventLoopCount = WORKER_THREADS.get() > 0 ? WORKER_THREADS.get() : PROCESSOR_COUNT;
    acceptorCount = ACCEPTOR_THREADS.get();
}
```
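The constructor above falls back to the CPU core count whenever no positive worker count is configured. Below is a minimal stand-alone sketch of that resolution rule. `EventLoopSizing` and `resolveWorkerCount` are hypothetical names. The configured value is passed in as a plain int rather than read from Zuul's dynamic properties.

```java
/** Hypothetical helper mirroring the fallback rule in DefaultEventLoopConfig (not Zuul's API). */
final class EventLoopSizing {
    // Same source as Zuul's PROCESSOR_COUNT: the number of CPUs visible to the JVM.
    static final int PROCESSOR_COUNT = Runtime.getRuntime().availableProcessors();

    private EventLoopSizing() {}

    /** Uses the configured worker count if it is positive, otherwise one event loop per core. */
    static int resolveWorkerCount(int configuredWorkers) {
        return configuredWorkers > 0 ? configuredWorkers : PROCESSOR_COUNT;
    }
}
```

For example, with no worker count configured (0), `resolveWorkerCount(0)` returns the core count, while `resolveWorkerCount(16)` always returns 16.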

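The boss (acceptor) thread count is 1.

Unless WORKER_THREADS is set to a positive value, the worker thread count defaults to PROCESSOR_COUNT, the number of CPU cores. Its definition is shown below.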

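Zuul's asynchronous thread model delivers high throughput. Event loop threads never block on I/O, so running more threads than cores would mostly add context switches. The thread count therefore essentially follows the number of CPU cores, which keeps context-switching overhead low.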

```java
private static final int PROCESSOR_COUNT = Runtime.getRuntime().availableProcessors();
```

Next, let's start from the start method of the Zuul server:

```java
public void start(boolean sync) {
    // ServerGroup is Zuul's thin wrapper around Netty event loops.
    serverGroup = new ServerGroup("Salamander", eventLoopConfig.acceptorCount(),
            eventLoopConfig.eventLoopCount(), eventLoopGroupMetrics);
    // Choose whether events are driven by epoll or by the JDK's select-based multiplexing;
    // epoll is only supported on Linux.
    serverGroup.initializeTransport();
    try {
        List<ChannelFuture> allBindFutures = new ArrayList<>();

        // Setup each of the channel initializers on requested ports.
        for (Map.Entry<Integer, ChannelInitializer> entry : portsToChannelInitializers.entrySet()) {
            allBindFutures.add(setupServerBootstrap(entry.getKey(), entry.getValue()));
        }

        // Once all server bootstraps are successfully initialized, then bind to each port.
        for (ChannelFuture f : allBindFutures) {
            // Wait until the server socket is closed.
            ChannelFuture cf = f.channel().closeFuture();
            if (sync) {
                cf.sync();
            }
        }
    } catch (InterruptedException e) {
        Thread.currentThread().interrupt();
    }
}
```

Epoll or Selector

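At the operating-system level, the I/O multiplexing mechanisms are select, poll, and epoll. select is the oldest. It caps the number of file descriptors it can watch and has to scan all of them on every call to find the ready ones. epoll has no such fixed cap and returns only the ready descriptors, which makes it the most efficient of the three.

The JDK does not expose epoll directly. Its NIO Selector is, however, implemented on top of epoll on Linux, in level-triggered mode. Netty's native epoll transport uses edge-triggered mode, which is generally more efficient. That is why Netty-based servers usually include an adapter that chooses between epoll and the NIO Selector.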
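The adapter mentioned above usually comes down to one decision made at startup. The sketch below models it with a hypothetical `Transport` enum. Epoll is chosen only when configuration enables it and the JVM runs on Linux; otherwise the code falls back to the JDK NIO Selector. In real Netty code the availability check would typically be `Epoll.isAvailable()`, which also verifies that the native library loaded. The `os.name` test here is a simplifying assumption.

```java
import java.util.Locale;

/** Hypothetical transport choice, mirroring the USE_EPOLL switch in the Zuul code. */
enum Transport {
    EPOLL, // Netty's native, edge-triggered epoll transport (Linux only)
    NIO;   // JDK Selector-based transport, portable across operating systems

    /**
     * Picks epoll only if it is enabled and the OS is Linux; otherwise falls back to NIO.
     * Matching on the OS name is a simplification of a real native-availability check.
     */
    static Transport choose(boolean useEpollFlag, String osName) {
        boolean isLinux = osName != null && osName.toLowerCase(Locale.ROOT).contains("linux");
        return (useEpollFlag && isLinux) ? EPOLL : NIO;
    }
}
```

A call such as `Transport.choose(true, System.getProperty("os.name"))` would then drive both sides, as in the Zuul code in this article. On the server side it selects EpollServerSocketChannel or NioServerSocketChannel; on the origin client side it selects EpollSocketChannel or NioSocketChannel.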

Server side

```java
private ChannelFuture setupServerBootstrap(int port, ChannelInitializer channelInitializer)
        throws InterruptedException {
    // Use the two event loop groups created above (boss and worker).
    ServerBootstrap serverBootstrap = new ServerBootstrap().group(
            serverGroup.clientToProxyBossPool,
            serverGroup.clientToProxyWorkerPool);

    // Choose socket options.
    Map<ChannelOption, Object> channelOptions = new HashMap<>();
    channelOptions.put(ChannelOption.SO_BACKLOG, 128);
    //channelOptions.put(ChannelOption.SO_TIMEOUT, SERVER_SOCKET_TIMEOUT.get());
    channelOptions.put(ChannelOption.SO_LINGER, -1);
    channelOptions.put(ChannelOption.TCP_NODELAY, true);
    channelOptions.put(ChannelOption.SO_KEEPALIVE, true);

    // Choose EPoll or NIO.
    if (USE_EPOLL.get()) {
        LOG.warn("Proxy listening with TCP transport using EPOLL");
        serverBootstrap = serverBootstrap.channel(EpollServerSocketChannel.class);
        channelOptions.put(EpollChannelOption.TCP_DEFER_ACCEPT, Integer.valueOf(-1));
    } else {
        LOG.warn("Proxy listening with TCP transport using NIO");
        serverBootstrap = serverBootstrap.channel(NioServerSocketChannel.class);
    }

    // Apply socket options.
    for (Map.Entry<ChannelOption, Object> optionEntry : channelOptions.entrySet()) {
        serverBootstrap = serverBootstrap.option(optionEntry.getKey(), optionEntry.getValue());
    }

    // Set the channelInitializer; this is the entry point for the rest of the analysis.
    serverBootstrap.childHandler(channelInitializer);
    serverBootstrap.validate();

    LOG.info("Binding to port: " + port);

    // Flag status as UP just before binding to the port.
    serverStatusManager.localStatus(InstanceInfo.InstanceStatus.UP);

    // Bind and start to accept incoming connections.
    return serverBootstrap.bind(port).sync();
}
```
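In the bootstrap above, the boss pool only accepts connections. Each accepted child channel is then registered on one event loop from the worker pool and stays there for its whole life. By default, Netty picks that loop via `EventLoopGroup.next()` in round-robin order. The JDK-only model below illustrates the assignment. `WorkerPool`, `register`, and `registerAll` are hypothetical names standing in for Netty's group and chooser.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical model of a worker group: N event loops, chosen round-robin for new channels. */
final class WorkerPool {
    private final int loopCount;
    private final AtomicInteger next = new AtomicInteger();

    WorkerPool(int loopCount) {
        if (loopCount <= 0) throw new IllegalArgumentException("loopCount must be positive");
        this.loopCount = loopCount;
    }

    /** Returns the index of the event loop the next accepted channel is pinned to. */
    int register() {
        // floorMod keeps the index non-negative even after the counter overflows.
        return Math.floorMod(next.getAndIncrement(), loopCount);
    }

    /** Registers `channels` newly accepted connections and returns their loop indices in order. */
    List<Integer> registerAll(int channels) {
        List<Integer> assignments = new ArrayList<>();
        for (int i = 0; i < channels; i++) {
            assignments.add(register());
        }
        return assignments;
    }
}
```

With 4 worker loops, six new connections land on loops 0, 1, 2, 3, 0, 1. Once assigned, all I/O for a connection runs on that loop's single thread.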

Client side

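After the server receives a request, the request passes through a series of filters and is then handed to Zuul's ProxyEndpoint, which forwards it to a backend service. ProxyEndpoint handles routing and establishes connections to the backend, i.e. it implements the Netty client side. The entry point is: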

The proxyRequestToOrigin method of ProxyEndpoint:

```java
attemptNum += 1;
requestStat = createRequestStat();
origin.preRequestChecks(zuulRequest);
concurrentReqCount++;

// The key part: channelCtx.channel().eventLoop(), the inbound channel's event loop, is passed in.
promise = origin.connectToOrigin(zuulRequest, channelCtx.channel().eventLoop(), attemptNum, passport, chosenServer);

logOriginServerIpAddr();
currentRequestAttempt = origin.newRequestAttempt(chosenServer.get(), context, attemptNum);
requestAttempts.add(currentRequestAttempt);
passport.add(PassportState.ORIGIN_CONN_ACQUIRE_START);

if (promise.isDone()) {
    operationComplete(promise);
} else {
    promise.addListener(this);
}
```
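The end of this excerpt handles the connect promise in one of two ways. If the promise is already complete, for example when a pooled connection was available immediately, `operationComplete` is called directly. Otherwise the endpoint registers itself as a listener. The JDK-only sketch below reproduces that shape with `CompletableFuture`. `ConnectResultHandler` and `handle` are hypothetical stand-ins for Netty's Promise and listener interfaces, and the "connection" is just a String.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

/** Hypothetical handler mirroring the "isDone ? complete now : add listener" pattern. */
final class ConnectResultHandler {
    // Records what happened, for illustration; not thread-safe (single-threaded use assumed).
    final List<String> events = new ArrayList<>();

    /** Invoked exactly once with the finished connect result. */
    void operationComplete(CompletableFuture<String> promise) {
        if (promise.isCompletedExceptionally()) {
            events.add("failed");
        } else {
            events.add("complete:" + promise.join());
        }
    }

    void handle(CompletableFuture<String> promise) {
        if (promise.isDone()) {
            // Fast path: the result is already there, so handle it synchronously.
            events.add("sync");
            operationComplete(promise);
        } else {
            // Slow path: run the handler later, on the thread that completes the promise.
            events.add("listener");
            promise.whenComplete((conn, err) -> operationComplete(promise));
        }
    }
}
```

The fast path avoids allocating and scheduling a listener when the connection is already at hand. Both paths end in the same `operationComplete`, so the rest of the logic does not care which one was taken.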

In this line from the code above, channelCtx.channel().eventLoop() is the worker event loop of the current inbound (client-facing) Netty channel:

```java
promise = origin.connectToOrigin(zuulRequest, channelCtx.channel().eventLoop(), attemptNum, passport, chosenServer);
```

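This call mainly does the following:

Load balancing: choose a server.

Create the connection pool for that server.

Acquire a connection from the pool.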
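The three steps above can be modeled in a few lines. In this sketch the server list, the round-robin balancer, the `Connection` class, and all method names are hypothetical; Zuul's real implementation is more involved. Idle connections are additionally keyed by event loop id, so that a reused connection stays on the caller's thread. Treat that detail as an assumption of the sketch.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical origin client: round-robin load balancing plus one connection pool per server. */
final class OriginPools {
    /** Hypothetical connection handle, bound to one server and one event loop. */
    static final class Connection {
        final String server;
        final int eventLoopId;
        final int serial;

        Connection(String server, int eventLoopId, int serial) {
            this.server = server;
            this.eventLoopId = eventLoopId;
            this.serial = serial;
        }
    }

    private final List<String> servers;
    private final AtomicInteger nextServer = new AtomicInteger();
    private final AtomicInteger created = new AtomicInteger();
    // server -> (event loop id -> idle connections). Each inner deque is meant to be
    // touched only by its own event loop thread, so it uses no locking.
    private final Map<String, Map<Integer, Deque<Connection>>> pools = new ConcurrentHashMap<>();

    OriginPools(List<String> servers) {
        if (servers.isEmpty()) throw new IllegalArgumentException("need at least one server");
        this.servers = new ArrayList<>(servers);
    }

    /** Step 1: load balancing (plain round-robin here) picks a server. */
    String chooseServer() {
        return servers.get(Math.floorMod(nextServer.getAndIncrement(), servers.size()));
    }

    /** Step 2: get, or lazily create, the pool for this server and event loop. */
    private Deque<Connection> poolFor(String server, int eventLoopId) {
        return pools.computeIfAbsent(server, s -> new ConcurrentHashMap<>())
                .computeIfAbsent(eventLoopId, id -> new ArrayDeque<>());
    }

    /** Step 3: reuse an idle connection if there is one, otherwise open (simulate) a new one. */
    Connection acquire(String server, int eventLoopId) {
        Connection idle = poolFor(server, eventLoopId).pollFirst();
        return idle != null ? idle : new Connection(server, eventLoopId, created.incrementAndGet());
    }

    /** Puts a connection back into its pool once the response has been fully read. */
    void release(Connection conn) {
        poolFor(conn.server, conn.eventLoopId).addFirst(conn);
    }

    /** Number of connections opened so far (for illustration). */
    int createdCount() {
        return created.get();
    }
}
```

A released connection is handed out again to the next request on the same server and event loop. A request on a different loop opens its own connection, which is the trade-off for never sharing a connection across threads.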

When a connection is established for the first time, the following code is eventually executed:

```java
public ChannelFuture connect(final EventLoop eventLoop, String host, final int port, CurrentPassport passport) {
    // Use the same transport choice (epoll or NIO) as the server side.
    Class socketChannelClass;
    if (Server.USE_EPOLL.get()) {
        socketChannelClass = EpollSocketChannel.class;
    } else {
        socketChannelClass = NioSocketChannel.class;
    }

    final Bootstrap bootstrap = new Bootstrap()
            .channel(socketChannelClass)
            .handler(channelInitializer)
            // Register the outbound channel on the event loop passed in by the caller
            // (in the path traced here, the inbound channel's event loop).
            .group(eventLoop)
            .attr(CurrentPassport.CHANNEL_ATTR, passport)
            .option(ChannelOption.CONNECT_TIMEOUT_MILLIS, connPoolConfig.getConnectTimeout())
            .option(ChannelOption.SO_KEEPALIVE, connPoolConfig.getTcpKeepAlive())
            .option(ChannelOption.TCP_NODELAY, connPoolConfig.getTcpNoDelay())
            .option(ChannelOption.SO_SNDBUF, connPoolConfig.getTcpSendBufferSize())
            .option(ChannelOption.SO_RCVBUF, connPoolConfig.getTcpReceiveBufferSize())
            .option(ChannelOption.WRITE_BUFFER_HIGH_WATER_MARK, connPoolConfig.getNettyWriteBufferHighWaterMark())
            .option(ChannelOption.WRITE_BUFFER_LOW_WATER_MARK, connPoolConfig.getNettyWriteBufferLowWaterMark())
            .option(ChannelOption.AUTO_READ, connPoolConfig.getNettyAutoRead())
            .remoteAddress(new InetSocketAddress(host, port));

    return bootstrap.connect();
}
```

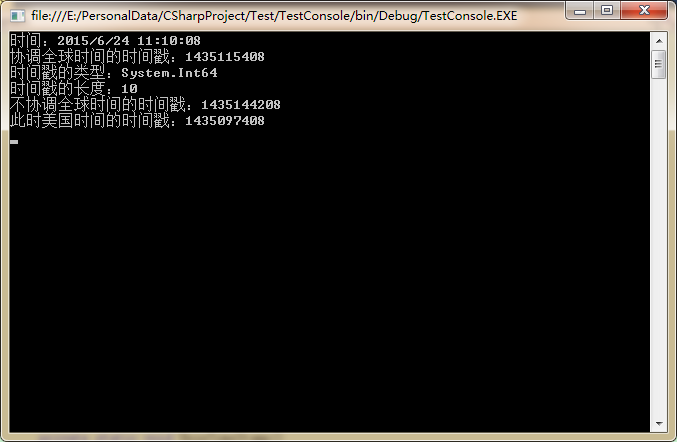

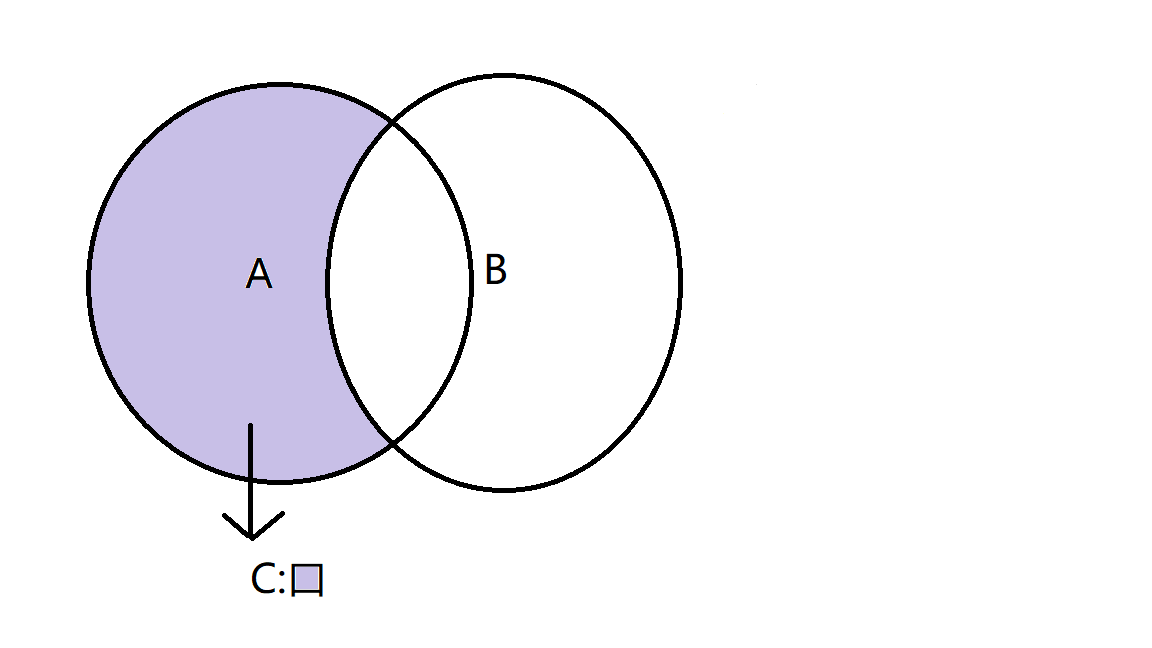

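This code should look familiar: it is an ordinary Netty client. The event loop it is bound to, via group(eventLoop), is the one passed in earlier, i.e. the event loop of the inbound connection. This is how Zuul binds both the I/O of the inbound connection and the reads and writes to the backend service to the same event loop thread.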
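To see why binding the origin connection to the inbound channel's event loop removes thread handoffs, here is a JDK-only simulation. Each "event loop" is a single-thread executor. An inbound request is pinned to one loop, the simulated origin call is scheduled on that same loop, and the response is written back there too. `MiniEventLoopGroup`, `proxy`, and `record` are hypothetical names. The origin call is faked with a string transformation rather than real network I/O.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

/** Hypothetical model: N single-threaded "event loops"; a request never leaves its loop. */
final class MiniEventLoopGroup implements AutoCloseable {
    private final List<ExecutorService> loops = new ArrayList<>();

    MiniEventLoopGroup(int n) {
        for (int i = 0; i < n; i++) {
            final int id = i;
            // One thread per loop, like a Netty EventLoop.
            loops.add(Executors.newSingleThreadExecutor(r -> new Thread(r, "loop-" + id)));
        }
    }

    /**
     * Simulates one proxied request on loop i and returns the names of the threads that ran
     * (1) the inbound read, (2) the simulated origin call, (3) the write-back.
     */
    CompletableFuture<List<String>> proxy(int i, String request) {
        ExecutorService loop = loops.get(i);
        List<String> threads = new ArrayList<>(); // only touched from the loop's single thread
        return CompletableFuture
                .supplyAsync(() -> record(threads, request), loop)
                // Like connectToOrigin(..., inboundEventLoop, ...): origin work goes to the same loop.
                .thenApplyAsync(req -> record(threads, "resp:" + req), loop)
                // Write the response back to the client, again on the same loop.
                .thenApplyAsync(resp -> { record(threads, resp); return threads; }, loop);
    }

    private static String record(List<String> threads, String value) {
        threads.add(Thread.currentThread().getName());
        return value;
    }

    @Override
    public void close() {
        for (ExecutorService l : loops) l.shutdown();
        try {
            for (ExecutorService l : loops) l.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

In a real deployment, the origin response arrives as a read event on the outbound channel. That channel is registered on the same event loop, so its handlers run on the same thread as the inbound channel's handlers. The identical thread names in this model represent exactly that.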

Summary

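Zuul 2's thread model is simple. Apart from the acceptor (boss) thread, one pool of Netty event loop threads handles all incoming requests and all remote calls.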

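As the figures above show, receiving a request, calling the remote service, and writing the response back are all done by the same thread, with no handoff between threads along the way. Zuul 2 handles all of this with a single thread pool, which is possible thanks to Netty's asynchronous programming model.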