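Building on Tuning Record 20, this post increases the number of residual blocks from 27 to 60 and continues to test a deep residual network (ResNet) with the adaptively parametric ReLU (APReLU) activation function on the CIFAR-10 dataset.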

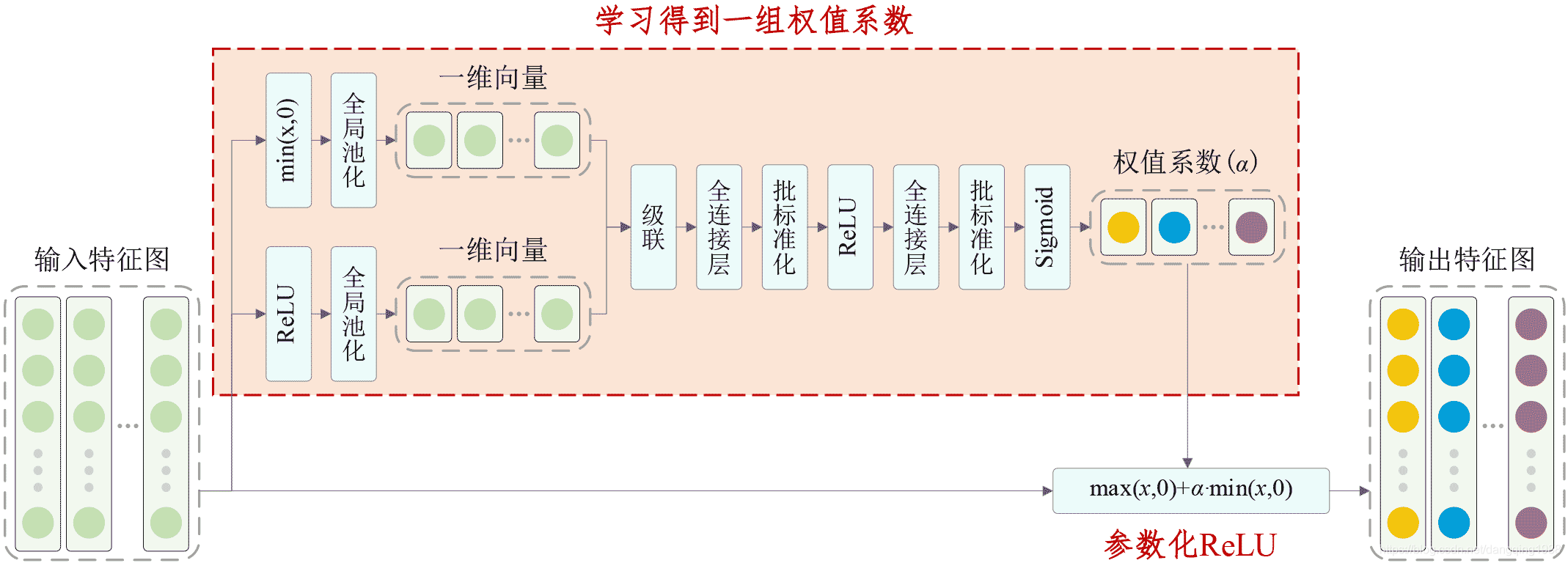

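The adaptively parametric ReLU is placed after the second convolutional layer of each residual block, which is similar to the design of Squeeze-and-Excitation Networks and deep residual shrinkage networks. Its basic principle is illustrated in the accompanying figure.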
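To make the mechanism concrete, here is a minimal NumPy sketch of the forward pass, written under some assumptions: batch normalization is left out, and the function name `aprelu_forward` and the randomly initialized weights `w1`, `b1`, `w2`, `b2` are illustrative stand-ins, not part of the original script. It only shows how a small network turns pooled statistics of the positive and negative parts into one slope per channel, which then scales the negative part.

```python
import numpy as np

def aprelu_forward(x, w1, b1, w2, b2):
    """Forward pass of an APReLU-style activation (sketch, no batch norm).

    x has shape (batch, height, width, channels). w1, b1, w2, b2 are the
    weights of a hypothetical two-layer network that predicts one slope
    per sample and channel.
    """
    pos = np.maximum(x, 0.0)  # positive part of the features
    neg = np.minimum(x, 0.0)  # negative part of the features
    # Global average pooling over the spatial axes, then concatenation.
    stats = np.concatenate(
        [neg.mean(axis=(1, 2)), pos.mean(axis=(1, 2))], axis=-1)
    hidden = np.maximum(stats @ w1 + b1, 0.0)          # dense + ReLU
    alpha = 1.0 / (1.0 + np.exp(-(hidden @ w2 + b2)))  # dense + sigmoid
    # The learned slope is applied to the negative part only.
    return pos + alpha[:, None, None, :] * neg

# Illustrative shapes and random weights (assumptions, not trained values).
rng = np.random.default_rng(0)
batch, size, channels = 2, 4, 16
x = rng.standard_normal((batch, size, size, channels))
w1 = rng.standard_normal((2 * channels, channels // 16)) * 0.1
b1 = np.zeros(channels // 16)
w2 = rng.standard_normal((channels // 16, channels)) * 0.1
b2 = np.zeros(channels)

y = aprelu_forward(x, w1, b1, w2, b2)
print(y.shape)
```

Because the sigmoid keeps every slope between 0 and 1, positive features pass through unchanged while negative features are shrunk toward zero by an input-dependent amount.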

The Keras code is as follows:

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Tue Apr 14 04:17:45 2020
Implemented using TensorFlow 1.10.0 and Keras 2.2.1

Minghang Zhao, Shisheng Zhong, Xuyun Fu, Baoping Tang, Shaojiang Dong, Michael Pecht,
Deep Residual Networks with Adaptively Parametric Rectifier Linear Units for Fault Diagnosis,
IEEE Transactions on Industrial Electronics, 2020, DOI: 10.1109/TIE.2020.2972458

@author: Minghang Zhao
"""

from __future__ import print_function
import keras
import numpy as np
from keras.datasets import cifar10
from keras.layers import Dense, Conv2D, BatchNormalization, Activation, Minimum
from keras.layers import AveragePooling2D, Input, GlobalAveragePooling2D, Concatenate, Reshape
from keras.regularizers import l2
from keras import backend as K
from keras.models import Model
from keras import optimizers
from keras.preprocessing.image import ImageDataGenerator
from keras.callbacks import LearningRateScheduler

K.set_learning_phase(1)

# The data, split between train and test sets
(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# Scale pixels to [0, 1] and subtract the training-set mean from both splits
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.
x_test = x_test-np.mean(x_train)
x_train = x_train-np.mean(x_train)
print('x_train shape:', x_train.shape)
print(x_train.shape[0], 'train samples')
print(x_test.shape[0], 'test samples')

# convert class vectors to binary class matrices
y_train = keras.utils.to_categorical(y_train, 10)
y_test = keras.utils.to_categorical(y_test, 10)

# Schedule the learning rate: multiply by 0.1 every 150 epochs

def scheduler(epoch):
    if epoch % 150 == 0 and epoch != 0:
        lr = K.get_value(model.optimizer.lr)
        K.set_value(model.optimizer.lr, lr * 0.1)
        print("lr changed to {}".format(lr * 0.1))
    return K.get_value(model.optimizer.lr)

# An adaptively parametric rectifier linear unit (APReLU)

def aprelu(inputs):
    # get the number of channels
    channels = inputs.get_shape().as_list()[-1]
    # get a zero feature map
    zeros_input = keras.layers.subtract([inputs, inputs])
    # get a feature map with only positive features
    pos_input = Activation('relu')(inputs)
    # get a feature map with only negative features
    neg_input = Minimum()([inputs,zeros_input])
    # define a network to obtain the scaling coefficients
    scales_p = GlobalAveragePooling2D()(pos_input)
    scales_n = GlobalAveragePooling2D()(neg_input)
    scales = Concatenate()([scales_n, scales_p])
    scales = Dense(channels//16, activation='linear', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(scales)
    scales = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(scales)
    scales = Activation('relu')(scales)
    scales = Dense(channels, activation='linear', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(scales)
    scales = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(scales)
    scales = Activation('sigmoid')(scales)
    scales = Reshape((1,1,channels))(scales)
    # apply a parametric relu
    neg_part = keras.layers.multiply([scales, neg_input])
    return keras.layers.add([pos_input, neg_part])

# Residual Block

def residual_block(incoming, nb_blocks, out_channels, downsample=False,
                   downsample_strides=2):

    residual = incoming
    in_channels = incoming.get_shape().as_list()[-1]

    for i in range(nb_blocks):

        identity = residual

        if not downsample:
            downsample_strides = 1

        residual = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(residual)
        residual = Activation('relu')(residual)
        residual = Conv2D(out_channels, 3, strides=(downsample_strides, downsample_strides),
                          padding='same', kernel_initializer='he_normal',
                          kernel_regularizer=l2(1e-4))(residual)

        residual = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(residual)
        residual = Activation('relu')(residual)
        residual = Conv2D(out_channels, 3, padding='same', kernel_initializer='he_normal',
                          kernel_regularizer=l2(1e-4))(residual)

        residual = aprelu(residual)

        # Downsampling
        if downsample_strides > 1:
            identity = AveragePooling2D(pool_size=(1,1), strides=(2,2))(identity)

        # Zero_padding to match channels
        if in_channels != out_channels:
            zeros_identity = keras.layers.subtract([identity, identity])
            identity = keras.layers.concatenate([identity, zeros_identity])
            in_channels = out_channels

        residual = keras.layers.add([residual, identity])

    return residual

# define and train a model

inputs = Input(shape=(32, 32, 3))
net = Conv2D(16, 3, padding='same', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(inputs)
net = residual_block(net, 20, 32, downsample=False)
net = residual_block(net, 1, 32, downsample=True)
net = residual_block(net, 19, 32, downsample=False)
net = residual_block(net, 1, 64, downsample=True)
net = residual_block(net, 19, 64, downsample=False)
net = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(net)
net = Activation('relu')(net)
net = GlobalAveragePooling2D()(net)
outputs = Dense(10, activation='softmax', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(net)
model = Model(inputs=inputs, outputs=outputs)
sgd = optimizers.SGD(lr=0.1, decay=0., momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# data augmentation

datagen = ImageDataGenerator(
    # randomly rotate images by up to 30 degrees
    rotation_range=30,
    # Range for random zoom
    zoom_range = 0.2,
    # shear angle in counter-clockwise direction in degrees
    shear_range = 30,
    # randomly flip images
    horizontal_flip=True,
    # randomly shift images horizontally
    width_shift_range=0.125,
    # randomly shift images vertically
    height_shift_range=0.125)

reduce_lr = LearningRateScheduler(scheduler)

# fit the model on the batches generated by datagen.flow().
model.fit_generator(datagen.flow(x_train, y_train, batch_size=100),
                    validation_data=(x_test, y_test), epochs=500,
                    verbose=1, callbacks=[reduce_lr], workers=4)

# get results

K.set_learning_phase(0)
DRSN_train_score = model.evaluate(x_train, y_train, batch_size=100, verbose=0)
print('Train loss:', DRSN_train_score[0])
print('Train accuracy:', DRSN_train_score[1])
DRSN_test_score = model.evaluate(x_test, y_test, batch_size=100, verbose=0)
print('Test loss:', DRSN_test_score[0])
print('Test accuracy:', DRSN_test_score[1])
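As a quick sanity check on the step schedule used in the script, the sketch below computes the learning rate that should be in effect at a given epoch, assuming Keras passes zero-based epoch indices to the callback. The helper `lr_at_epoch` and its parameter names are illustrative and do not appear in the original code; it is a closed-form restatement of the schedule, not a replacement for the callback.

```python
def lr_at_epoch(epoch, base_lr=0.1, drop=0.1, every=150):
    """Learning rate in effect during a zero-based epoch index.

    Mirrors a schedule that starts at base_lr and multiplies the rate
    by `drop` each time the epoch index reaches a multiple of `every`.
    """
    return base_lr * drop ** (epoch // every)

# Print the rate just before and just after each scheduled drop.
for epoch in (0, 149, 150, 299, 300, 449, 450, 499):
    print(epoch, lr_at_epoch(epoch))
```

With 500 epochs and a drop every 150, the run passes through four stages, so the last 50 epochs train at one thousandth of the initial rate.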

The experimental results are as follows:

Using TensorFlow backend.

x_train shape: (50000, 32, 32, 3)

50000 train samples

10000 test samples

Epoch 1/500

156s 312ms/step - loss: 3.7450 - acc: 0.4151 - val_loss: 3.1432 - val_acc: 0.5763

Epoch 2/500

113s 226ms/step - loss: 2.9954 - acc: 0.5750 - val_loss: 2.5940 - val_acc: 0.6689

Epoch 3/500

113s 226ms/step - loss: 2.5203 - acc: 0.6476 - val_loss: 2.1871 - val_acc: 0.7254

Epoch 4/500

113s 225ms/step - loss: 2.1855 - acc: 0.6865 - val_loss: 1.9171 - val_acc: 0.7488

Epoch 5/500

113s 225ms/step - loss: 1.9224 - acc: 0.7144 - val_loss: 1.6662 - val_acc: 0.7774

Epoch 6/500

113s 225ms/step - loss: 1.7111 - acc: 0.7331 - val_loss: 1.4882 - val_acc: 0.7915

Epoch 7/500

113s 226ms/step - loss: 1.5472 - acc: 0.7483 - val_loss: 1.3414 - val_acc: 0.7994

Epoch 8/500

113s 226ms/step - loss: 1.4095 - acc: 0.7633 - val_loss: 1.2149 - val_acc: 0.8194

Epoch 9/500

113s 226ms/step - loss: 1.3008 - acc: 0.7739 - val_loss: 1.1264 - val_acc: 0.8234

Epoch 10/500

113s 226ms/step - loss: 1.2077 - acc: 0.7824 - val_loss: 1.0474 - val_acc: 0.8322

Epoch 11/500

113s 225ms/step - loss: 1.1382 - acc: 0.7885 - val_loss: 0.9929 - val_acc: 0.8343

Epoch 12/500

113s 225ms/step - loss: 1.0722 - acc: 0.7955 - val_loss: 0.9418 - val_acc: 0.8400

Epoch 13/500

113s 225ms/step - loss: 1.0242 - acc: 0.8032 - val_loss: 0.9018 - val_acc: 0.8421

Epoch 14/500

113s 225ms/step - loss: 0.9843 - acc: 0.8083 - val_loss: 0.8639 - val_acc: 0.8506

Epoch 15/500

113s 225ms/step - loss: 0.9520 - acc: 0.8101 - val_loss: 0.8522 - val_acc: 0.8491

Epoch 16/500

113s 226ms/step - loss: 0.9313 - acc: 0.8130 - val_loss: 0.8124 - val_acc: 0.8541

Epoch 17/500

113s 226ms/step - loss: 0.9033 - acc: 0.8190 - val_loss: 0.8156 - val_acc: 0.8484

Epoch 18/500

113s 226ms/step - loss: 0.8791 - acc: 0.8223 - val_loss: 0.7796 - val_acc: 0.8572

Epoch 19/500

113s 226ms/step - loss: 0.8628 - acc: 0.8289 - val_loss: 0.7842 - val_acc: 0.8559

Epoch 20/500

113s 225ms/step - loss: 0.8528 - acc: 0.8292 - val_loss: 0.7725 - val_acc: 0.8533

Epoch 21/500

113s 225ms/step - loss: 0.8432 - acc: 0.8292 - val_loss: 0.7405 - val_acc: 0.8687

Epoch 22/500

113s 225ms/step - loss: 0.8260 - acc: 0.8347 - val_loss: 0.7425 - val_acc: 0.8648

Epoch 23/500

113s 225ms/step - loss: 0.8180 - acc: 0.8357 - val_loss: 0.7319 - val_acc: 0.8666

Epoch 24/500

113s 226ms/step - loss: 0.8146 - acc: 0.8385 - val_loss: 0.7158 - val_acc: 0.8761

Epoch 25/500

113s 226ms/step - loss: 0.8029 - acc: 0.8387 - val_loss: 0.7228 - val_acc: 0.8705

Epoch 26/500

113s 225ms/step - loss: 0.7968 - acc: 0.8425 - val_loss: 0.7160 - val_acc: 0.8725

Epoch 27/500

113s 225ms/step - loss: 0.7940 - acc: 0.8433 - val_loss: 0.7176 - val_acc: 0.8747

Epoch 28/500

113s 226ms/step - loss: 0.7904 - acc: 0.8439 - val_loss: 0.7080 - val_acc: 0.8747

Epoch 29/500

113s 225ms/step - loss: 0.7810 - acc: 0.8450 - val_loss: 0.7234 - val_acc: 0.8679

Epoch 30/500

113s 225ms/step - loss: 0.7807 - acc: 0.8457 - val_loss: 0.6999 - val_acc: 0.8754

Epoch 31/500

113s 225ms/step - loss: 0.7795 - acc: 0.8487 - val_loss: 0.7116 - val_acc: 0.8745

Epoch 32/500

113s 225ms/step - loss: 0.7722 - acc: 0.8497 - val_loss: 0.7064 - val_acc: 0.8798

Epoch 33/500

113s 226ms/step - loss: 0.7678 - acc: 0.8533 - val_loss: 0.7148 - val_acc: 0.8709

Epoch 34/500

113s 226ms/step - loss: 0.7634 - acc: 0.8528 - val_loss: 0.7095 - val_acc: 0.8741

Epoch 35/500

113s 225ms/step - loss: 0.7684 - acc: 0.8535 - val_loss: 0.7070 - val_acc: 0.8768

Epoch 36/500

113s 225ms/step - loss: 0.7630 - acc: 0.8540 - val_loss: 0.6935 - val_acc: 0.8804

Epoch 37/500

113s 225ms/step - loss: 0.7557 - acc: 0.8566 - val_loss: 0.6997 - val_acc: 0.8785

Epoch 38/500

113s 225ms/step - loss: 0.7518 - acc: 0.8591 - val_loss: 0.7090 - val_acc: 0.8771

Epoch 39/500

113s 225ms/step - loss: 0.7537 - acc: 0.8581 - val_loss: 0.6784 - val_acc: 0.8879

Epoch 40/500

113s 226ms/step - loss: 0.7537 - acc: 0.8566 - val_loss: 0.6778 - val_acc: 0.8854

Epoch 41/500

113s 226ms/step - loss: 0.7461 - acc: 0.8613 - val_loss: 0.6941 - val_acc: 0.8800

Epoch 42/500

113s 226ms/step - loss: 0.7518 - acc: 0.8586 - val_loss: 0.7230 - val_acc: 0.8731

Epoch 43/500

113s 225ms/step - loss: 0.7562 - acc: 0.8561 - val_loss: 0.6876 - val_acc: 0.8859

Epoch 44/500

113s 225ms/step - loss: 0.7398 - acc: 0.8626 - val_loss: 0.6793 - val_acc: 0.8861

Epoch 45/500

113s 225ms/step - loss: 0.7402 - acc: 0.8638 - val_loss: 0.6860 - val_acc: 0.8857

Epoch 46/500

113s 225ms/step - loss: 0.7430 - acc: 0.8626 - val_loss: 0.6878 - val_acc: 0.8857

Epoch 47/500

113s 225ms/step - loss: 0.7372 - acc: 0.8656 - val_loss: 0.6758 - val_acc: 0.8885

Epoch 48/500

113s 225ms/step - loss: 0.7364 - acc: 0.8649 - val_loss: 0.6837 - val_acc: 0.8849

Epoch 49/500

113s 226ms/step - loss: 0.7374 - acc: 0.8639 - val_loss: 0.6730 - val_acc: 0.8902

Epoch 50/500

113s 226ms/step - loss: 0.7389 - acc: 0.8657 - val_loss: 0.6848 - val_acc: 0.8868

Epoch 51/500

113s 227ms/step - loss: 0.7354 - acc: 0.8654 - val_loss: 0.6788 - val_acc: 0.8892

Epoch 52/500

113s 227ms/step - loss: 0.7286 - acc: 0.8691 - val_loss: 0.6942 - val_acc: 0.8800

Epoch 53/500

113s 225ms/step - loss: 0.7365 - acc: 0.8653 - val_loss: 0.6929 - val_acc: 0.8820

Epoch 54/500

113s 226ms/step - loss: 0.7295 - acc: 0.8685 - val_loss: 0.6761 - val_acc: 0.8892

Epoch 55/500

113s 226ms/step - loss: 0.7319 - acc: