基于图像处理相关技术的高级车道线检测(可适用于弯道,车道线颜色不固定,路面阴影,亮光)

pipeline:

1.校准摄像头的畸变,使拍摄照片能够较完整的反映3D世界的情况

2.对每一帧图片做透视转换(perspective transform),将摄像头的照片转换到鸟瞰图视角(图片见正文),方便计算车道线曲率,从而控制车辆运动

3.对鸟瞰图二值化,通过二值的像素点进一步区分左右两条车道线,从而拟合出车道线曲线

4.用滑窗的方法检测第一帧的车道线像素点,然后拟合车道线曲线

5.从第一帧的曲线周围寻找接下来的车道线像素点,然后拟合车道线曲线,这样可以节约计算资源,加快检测速度

6.有了车道线曲线方程之后,可以计算斜率和车道线偏离中心的位置

正文

1.校准摄像头畸变

摄像头畸变主要分两种,径向畸变和切向畸变,径向畸变是由于光线经过摄像机的镜头时,边缘的光线会更多或更少的弯曲,所以边缘的物体成像时会有畸变。切向畸变主要是由于镜头和成像胶卷或传感器不平行导致的。

关于由5个参数矫正畸变,k1-k5,畸变越严重,所需参数越多,径向畸变矫正公式如下:

切向畸变矫正公式如下:

其中x,y为原图任一点,x,y(corrected)为其对应的没有畸变的图像上的坐标,r为点到中心的距离,K1,K2,K3为径向畸变参数,P1,P2为切向畸变参数,矫正参数由opencv的API来获得。这里用棋盘图的原因是计算点坐标相对容易,一般计算要多拍一些棋盘图在不同角度的照片,找到每一张棋盘图的角点坐标(图中的全部(x,y)和(x,y)(corrected)坐标),和其对应的未畸变的角点坐标,然后计算矫正参数进行矫正。

#创建objpoints和imgpoints来接收来自无畸变图片和相机拍摄畸变图片的角点,存储多张图片角点增加矫正的准确率。

def calibrate_camera(nx,ny):objp = np.zeros((nx*ny,3), np.float32)objp[:,:2] = np.mgrid[0:nx,0:ny].T.reshape(-1,2)objpoints = []imgpoints = []images = glob.glob('camera_cal/calibration*.jpg')for idx, fname in enumerate(images):#convert image to gray that the 'cv2.findChessboardCorners' neededimg = mpimg.imread(fname)gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)#Finding chessboard corners (for an 9×️6 board)ret, corners = cv2.findChessboardCorners(gray, (nx,ny), None)if ret == True:objpoints.append(objp)imgpoints.append(corners)#mtx represents 3D to 2D transformation, dist represents undistortion coef, rvecs the spin of camera#and tvecs the offset(偏移量)of the camera in the real world.return objpoints, imgpoints

有了目标点和图像点的角点信息之后,利用opencv的API自动计算矫正系数来矫正每一帧图片,返回值是无畸变图像,图1为矫正前图像,图2为矫正后图像。

def undistort_image(img):objectpoints, imagepoints = calibrate_camera(9,6)img_size = img.shape[1::-1]#get the distortion coef and other parametersret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objectpoints, imagepoints, img_size, None, None)#Undistort a imagedst = cv2.undistort(img, mtx, dist, None, mtx)return dst

接着就是为了计算曲率,将摄像头的视角进行转换,转换的结果最好是转换成从上向下的视角,造成摄像机从空中垂直拍摄车道线的效果,使用转换前图片任意多边形的边界点和转换以后多边形的边界点作为输入,调用opencv的cv2.getPerspectiveTransform函数可以返回转换的矩阵M,Minv是反转换矩阵,warped就是转换后车道线鸟瞰图。

def perspective_transform(img):# Vertices extracted manually for performing a perspective transformleftupperpoint = [568, 470]rightupperpoint = [717, 470]leftlowerpoint = [260, 680]rightlowerpoint = [1043, 680]src = np.float32([leftupperpoint, leftlowerpoint, rightupperpoint, rightlowerpoint])dst = np.float32([[200, 0], [200, 680], [1000, 0], [1000, 680]])img_size = img.shape[1::-1]#Compute the perspective transform, M, given source and destination pointsM = cv2.getPerspectiveTransform(src, dst)#Compute the inverse perspective transformMinv = cv2.getPerspectiveTransform(dst, src)#Warp an image using the perspective transform, Mwarped = cv2.warpPerspective(img, M, img_size, flags=cv2.INTER_LINEAR)return M, Minv, warpeddef perspective_transform(img):

有了鸟瞰图,还是要让程序自己辨别左右两条车道线,车道线在图像上是两条垂直线,可以用边缘检测的方法检测出,关于Sobel边缘检测,用sobel算子在x方向求导数可以很好的检测出垂直的车道线,但是为了让车道线检测效果更鲁棒,这里还需要结合一些图像颜色空间的知识,实验表明,HLS颜色中的S(饱和度)空间对阴影,光照的结果很鲁棒,因为饱和度通常反应物体颜色的鲜艳程度,与物体颜色及颜色亮暗无关,所以采用x方向的sobel边缘检测和饱和度阈值结合的方法可以使车道线检测结果更鲁棒

def pipeline(img, s_thresh=(170, 255), sx_thresh=(20, 100)):img = np.copy(img)# Convert to HLS color space and separate the V channelhls = cv2.cvtColor(img, cv2.COLOR_RGB2HLS)l_channel = hls[:,:,1]s_channel = hls[:,:,2]# Sobel xsobelx = cv2.Sobel(l_channel, cv2.CV_64F, 1, 0) # Take the derivative in xabs_sobelx = np.absolute(sobelx) # Absolute x derivative to accentuate lines away from horizontalscaled_sobel = np.uint8(255*abs_sobelx/np.max(abs_sobelx))# Threshold x gradientsxbinary = np.zeros_like(scaled_sobel)sxbinary[(scaled_sobel >= sx_thresh[0]) & (scaled_sobel <= sx_thresh[1])] = 1# Threshold color channels_binary = np.zeros_like(s_channel)s_binary[(s_channel >= s_thresh[0]) & (s_channel <= s_thresh[1])] = 1# Stack each channelcolor_binary = np.dstack(( np.zeros_like(sxbinary), sxbinary, s_binary)) *255return color_binary

有了二进制的车道线图像,就可以进一步拟合车道线曲线,首先画车道线二进制图的像素直方图,取下半张图片,像素最多的位置作为车道线的起始位置,然后自定义窗口大小和个数向上做滑窗操作,求出每个窗口中像素点的x和y坐标作为车道线的x,y坐标,当前窗口像素的平均x坐标作为下一个滑窗的中心位置,有了全部滑窗和车道线坐标用cv2.fitpoly函数拟合车道线曲线方程

def find_line_pixels(binary_warped):# Take a histogram of the bottom half of the imagehistogram = np.sum(binary_warped[binary_warped.shape[0] // 2:, :], axis=0)# Find the peak of the left and right halves of the histogram# These will be the starting point for the left and right linesmidpoint = np.int(histogram.shape[0] / 2)leftx_base = np.argmax(histogram[:midpoint])rightx_base = np.argmax(histogram[midpoint:]) + midpoint# Choose the number of sliding windowsnwindows = 9# Set height of windowswindow_height = np.int(binary_warped.shape[0] / nwindows)# Identify the x and y positions of all nonzero pixels in the imagenonzero = binary_warped.nonzero()nonzeroy = np.array(nonzero[0])nonzerox = np.array(nonzero[1])# Current positions to be updated for each windowleftx_current = leftx_baserightx_current = rightx_base# Set the width of the windows +/- marginmargin = 100# Set minimum number of pixels found to recenter windowminpix = 50# Create empty lists to receive left and right lane pixel indicesleft_lane_inds = []right_lane_inds = []# Step through the windows one by onefor window in range(nwindows):# Identify window boundaries in x and y (and right and left)win_y_low = binary_warped.shape[0] - (window + 1) * window_heightwin_y_high = binary_warped.shape[0] - window * window_heightwin_xleft_low = leftx_current - marginwin_xleft_high = leftx_current + marginwin_xright_low = rightx_current - marginwin_xright_high = rightx_current + margin# Identify the nonzero pixels in x and y within the windowgood_left_inds = ((nonzeroy >= win_y_low) & (nonzeroy < win_y_high) &(nonzerox >= win_xleft_low) & (nonzerox < win_xleft_high)).nonzero()[0]good_right_inds = ((nonzeroy >= win_y_low) & (nonzeroy < win_y_high) &(nonzerox >= win_xright_low) & (nonzerox < win_xright_high)).nonzero()[0]# Append these indices to the listsleft_lane_inds.append(good_left_inds)right_lane_inds.append(good_right_inds)# If you found > minpix pixels, recenter next window on their mean positionif len(good_left_inds) > minpix:leftx_current = np.int(np.mean(nonzerox[good_left_inds]))if len(good_right_inds) > minpix:rightx_current = np.int(np.mean(nonzerox[good_right_inds]))# Concatenate the arrays of indicesleft_lane_inds = np.concatenate(left_lane_inds)right_lane_inds = np.concatenate(right_lane_inds)# Extract left and right line pixel positionsleftx = nonzerox[left_lane_inds]lefty = nonzeroy[left_lane_inds]rightx = nonzerox[right_lane_inds]righty = nonzeroy[right_lane_inds]# Fit a second order polynomial to eachleft_fit = np.polyfit(lefty, leftx, 2)right_fit = np.polyfit(righty, rightx, 2)return left_fit, right_fit, left_lane_inds, right_lane_inds, lefty, leftx, righty, rightx滑窗的方法通常用于第一帧或者检测失败重新开始的检测,因为对计算资源浪费过多,检测时间长,由于连续帧图像之间相差不大,之后几帧的图像可以只对第一帧拟合的曲线周围检测,设置周围的margin,然后在该范围内寻找下一帧曲线的像素点从而拟合曲线。但是再最后处理每一帧图像时要设置标志位检测是否检测到拟合的曲线,检测到的话用search_from_previous方法,否则的话要用滑窗的方法重新寻找车道线。

def search_from_previous(binary_warped, left_fit, right_fit):nonzero = binary_warped.nonzero()nonzeroy = np.array(nonzero[0])nonzerox = np.array(nonzero[1])margin = 100left_lane_inds = ((nonzerox > (left_fit[0] * (nonzeroy ** 2) + left_fit[1] * nonzeroy +left_fit[2] - margin)) & (nonzerox < (left_fit[0] * (nonzeroy ** 2) +left_fit[1] * nonzeroy + left_fit[2] + margin)))right_lane_inds = ((nonzerox > (right_fit[0] * (nonzeroy ** 2) + right_fit[1] * nonzeroy +right_fit[2] - margin)) & (nonzerox < (right_fit[0] * (nonzeroy ** 2) +right_fit[1] * nonzeroy + right_fit[2] + margin)))# Again, extract left and right line pixel positionsleftx = nonzerox[left_lane_inds]lefty = nonzeroy[left_lane_inds]rightx = nonzerox[right_lane_inds]righty = nonzeroy[right_lane_inds]# Fit a second order polynomial to eachleft_fit = np.polyfit(lefty, leftx, 2)right_fit = np.polyfit(righty, rightx, 2)return left_fit, right_fit, left_lane_inds, right_lane_inds,lefty,leftx,righty,rightx

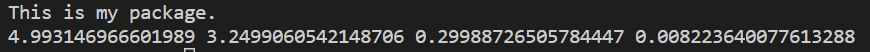

有了曲线的方程,则可以根据曲线曲率半径的公式计算曲率,dx/dy是因为我们拟合的是x对y的方程,因为车道线基本垂直,y对x的函数可能会存在一个y队形多个x的情况,这里还要注意自己计算的车道线曲线是根据像素值计算的,还要转换为其对应的实际道路距离(m)。

对于车辆的偏移,我们可以假设摄像头安装在车辆的正中心,那么道路中心就是检测到的图像中两条车道线的中点,车道线中心和图像中心的偏移就是车辆相对于车道线的偏移。同样记得把像素值转换为实际道路记录(m)。

def measure_radius_of_curvature(warped_img, lefty, leftx, righty, rightx):ym_per_pix = 20 / 720 # meters per pixel in y dimensionxm_per_pix = 3.7 / 800 # meters per pixel in x dimension# Fit a second order polynomial to pixel positions in each fake lane line# Fit new polynomials to x,y in world spaceleft_fit_cr = np.polyfit(lefty*ym_per_pix, leftx*xm_per_pix, 2)right_fit_cr = np.polyfit(righty*ym_per_pix, rightx*xm_per_pix, 2)ploty = np.linspace(0, warped_img.shape[0] - 1, warped_img.shape[0])# Define y-value where we want radius of curvature# We'll choose the maximum y-value, corresponding to the bottom of the imagey_eval = np.max(ploty)#Implement the calculation of R_curve (radius of curvature)left_curverad = ((1 + (2 * left_fit_cr[0] * y_eval * ym_per_pix + left_fit_cr[1]) ** 2) ** 1.5) / np.absolute(2 * left_fit_cr[0])right_curverad = ((1 + (2 * right_fit_cr[0] * y_eval * ym_per_pix + right_fit_cr[1]) ** 2) ** 1.5) / np.absolute(2 * right_fit_cr[0])left_lane_bottom = left_fit_cr[0] * (y_eval* ym_per_pix) ** 2 + left_fit_cr[0] * y_eval* ym_per_pix + left_fit_cr[2]right_lane_bottom = right_fit_cr[0] * (y_eval* ym_per_pix) ** 2 + right_fit_cr[0] * y_eval* ym_per_pix + right_fit_cr[2]# Lane center as mid of left and right lane bottomlane_center = (left_lane_bottom + right_lane_bottom) / 2.center_image = np.float(1280/2*xm_per_pix)center = lane_center - center_image # Convert to metersposition = "left" if center < 0 else "right"center = "Vehicle is {:.2f}m {}".format(center, position)# Now our radius of curvature is in metersreturn left_curverad, right_curverad, center

这就是完整的车道线检测流程了,对于一些连续弯道导致车道线超出图像边界的情况日后解决了会更新。