Note that older versions of Keras import some modules differently from newer versions; see the code comments in Section 1.

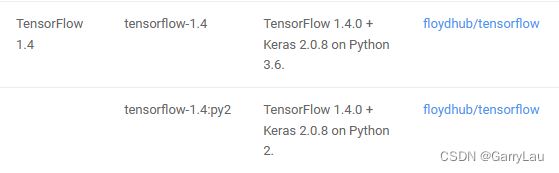
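If one script has to run under both old and new Keras installs, one option is to try the new import path first and fall back to the old one. The helper below is a minimal sketch of that pattern; `import_first` is a hypothetical name, and the module paths in the usage comment are assumptions to check against your TensorFlow version. A plain `try: from tensorflow.keras import layers` / `except ImportError: from tensorflow.python.keras import layers` does the same job for a single import.

```python
import importlib
from types import ModuleType


def import_first(*module_names: str) -> ModuleType:
    """Return the first module in module_names that imports successfully."""
    errors = []
    for name in module_names:
        try:
            return importlib.import_module(name)
        except ImportError as exc:  # ModuleNotFoundError is a subclass of ImportError
            errors.append(f"{name}: {exc}")
    raise ImportError("none of the candidate modules could be imported:\n" + "\n".join(errors))


# Hypothetical usage (module paths are assumptions; verify them in your environment):
# layers = import_first("tensorflow.keras.layers", "tensorflow.python.keras.layers")
```

Trying the newer path first means a current install never touches the private `tensorflow.python` package.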

TensorFlow and Keras versions also have to match each other; see the compatibility table at https://master--floydhub-docs.netlify.app/guides/environments/.

For example:

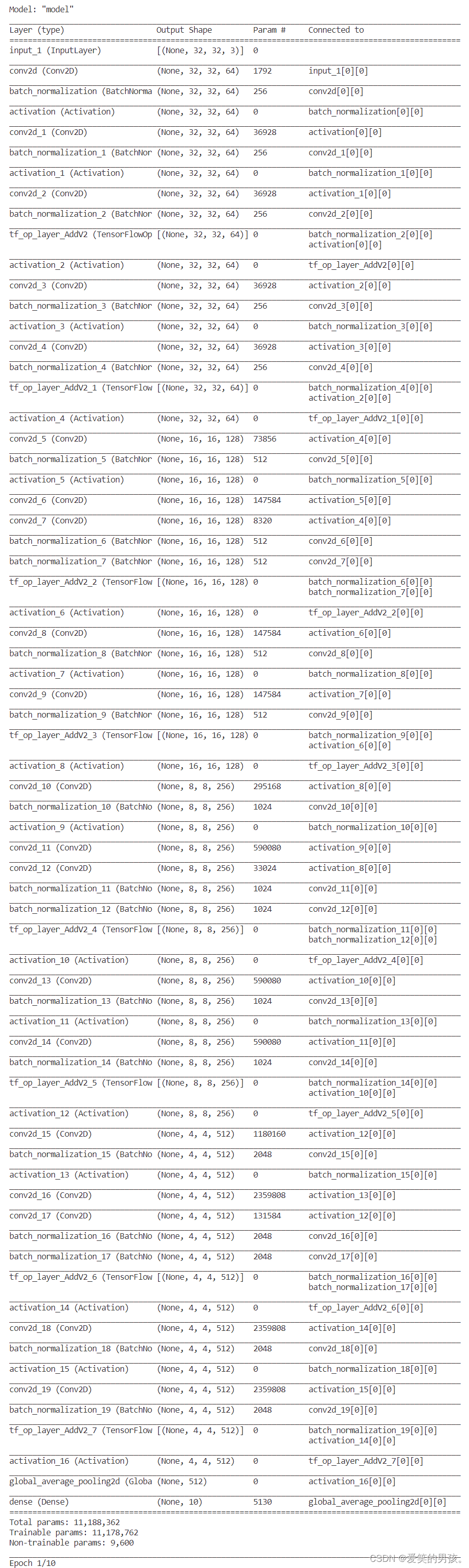

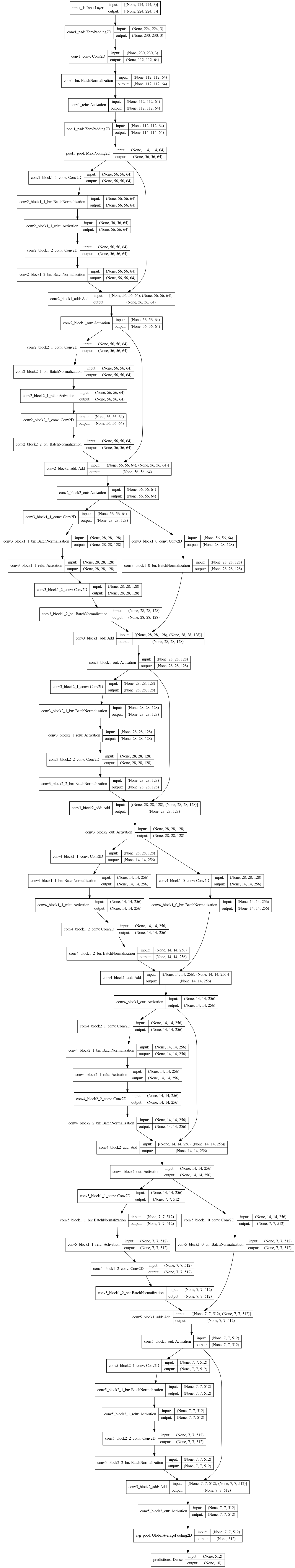

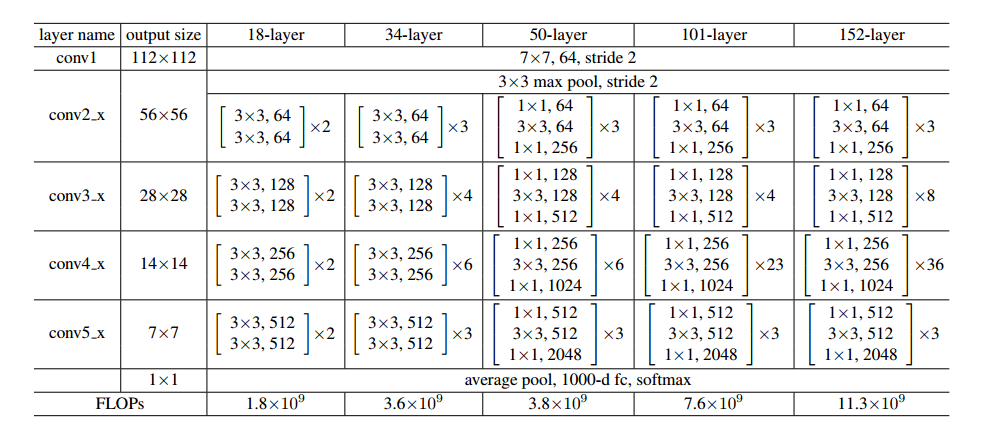
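To compare your environment against such a table, you can read the installed versions from package metadata without importing TensorFlow itself. A minimal sketch, assuming Python 3.8+ (`importlib.metadata`) and that the distributions are named `tensorflow` and `keras` (older GPU installs may be named `tensorflow-gpu` instead); `installed_version` is a hypothetical helper:

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed distribution version, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Print the versions to look up in the TensorFlow/Keras compatibility table
    for pkg in ("tensorflow", "tensorflow-gpu", "keras"):
        print(pkg, installed_version(pkg))
```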

1.ResNet18

ResNet18

```python
from tensorflow import keras
from tensorflow.keras import layers
# For keras==2.0.8 use the following import instead (pip install keras==2.0.8 -i https://pypi.tuna.tsinghua.edu.cn/simple):
# from tensorflow.python.keras import layers

INPUT_SIZE = 224
CLASS_NUM = 1000

# stage_name=2,3,4,5; block_name=a,b,c


def ConvBlock(input_tensor, num_output, stride, stage_name, block_name):
    # Basic residual block with a projection shortcut (1x1 conv + BN),
    # used as the first block of each stage
    filter1, filter2 = num_output

    x = layers.Conv2D(filter1, 3, strides=stride, padding='same', name='res'+stage_name+block_name+'_branch2a')(input_tensor)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2a')(x)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2a_relu')(x)

    x = layers.Conv2D(filter2, 3, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2b')(x)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2b')(x)
    # Note: the original ResNet basic block has no ReLU here; it applies ReLU only after the addition
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2b_relu')(x)

    shortcut = layers.Conv2D(filter2, 1, strides=stride, padding='same', name='res'+stage_name+block_name+'_branch1')(input_tensor)
    shortcut = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch1')(shortcut)

    x = layers.add([x, shortcut], name='res'+stage_name+block_name)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_relu')(x)
    return x


def IdentityBlock(input_tensor, num_output, stage_name, block_name):
    # Basic residual block with an identity shortcut (input and output shapes match)
    filter1, filter2 = num_output

    x = layers.Conv2D(filter1, 3, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2a')(input_tensor)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2a')(x)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2a_relu')(x)

    x = layers.Conv2D(filter2, 3, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2b')(x)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2b')(x)
    # Note: as in ConvBlock, the original ResNet applies no ReLU before the addition
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2b_relu')(x)

    shortcut = input_tensor

    x = layers.add([x, shortcut], name='res'+stage_name+block_name)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_relu')(x)
    return x


def ResNet18(input_shape, class_num):
    inputs = keras.Input(shape=input_shape, name='input')

    # conv1
    x = layers.Conv2D(64, 7, strides=(2, 2), padding='same', name='conv1')(inputs)  # 7×7, 64, stride 2
    x = layers.BatchNormalization(name='bn_conv1')(x)
    x = layers.Activation('relu', name='conv1_relu')(x)
    x = layers.MaxPooling2D((3, 3), strides=2, padding='same', name='pool1')(x)  # 3×3 max pool, stride 2

    # conv2_x
    x = ConvBlock(input_tensor=x, num_output=(64, 64), stride=(1, 1), stage_name='2', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(64, 64), stage_name='2', block_name='b')

    # conv3_x
    x = ConvBlock(input_tensor=x, num_output=(128, 128), stride=(2, 2), stage_name='3', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(128, 128), stage_name='3', block_name='b')

    # conv4_x
    x = ConvBlock(input_tensor=x, num_output=(256, 256), stride=(2, 2), stage_name='4', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(256, 256), stage_name='4', block_name='b')

    # conv5_x
    x = ConvBlock(input_tensor=x, num_output=(512, 512), stride=(2, 2), stage_name='5', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(512, 512), stage_name='5', block_name='b')

    # average pool, 1000-d fc, softmax
    x = layers.AveragePooling2D((7, 7), strides=(1, 1), name='pool5')(x)
    x = layers.Flatten(name='flatten')(x)
    x = layers.Dense(class_num, activation='softmax', name='fc1000')(x)

    model = keras.Model(inputs, x, name='resnet18')
    # For keras==2.0.8 use the line below instead, otherwise you get
    # AttributeError: module 'tensorflow.python.keras' has no attribute 'Model'
    # model = keras.models.Model(inputs, x, name='resnet18')
    model.summary()
    return model


if __name__ == '__main__':
    model = ResNet18((INPUT_SIZE, INPUT_SIZE, 3), CLASS_NUM)
    print('Done.')
```

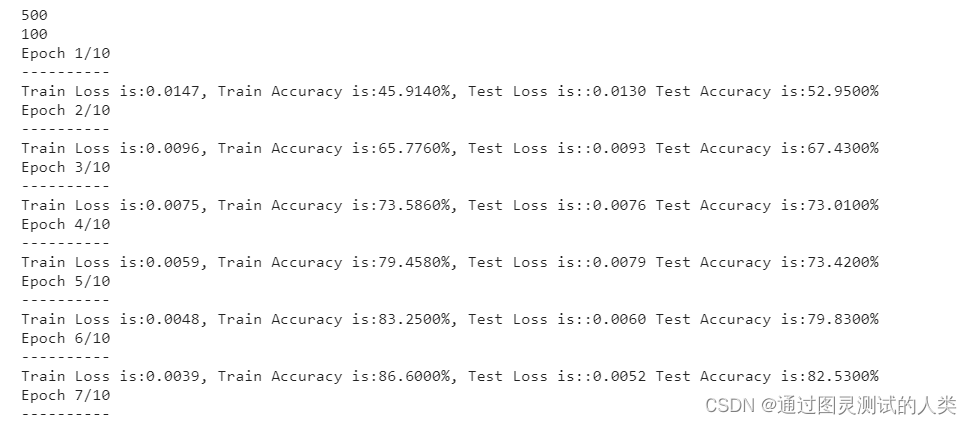
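The final `AveragePooling2D((7, 7))` only works if the conv5_x feature map is at least 7×7. The sketch below traces the spatial size through the strides used in the model code, assuming Keras `'same'` padding yields `ceil(input / stride)` per axis and the default `'valid'` pooling with stride 1 yields `input - 7 + 1`; `resnet_feature_sizes` is a hypothetical helper, not part of Keras.

```python
import math
from typing import Dict, Optional


def same_out(size: int, stride: int) -> int:
    # With padding='same', Keras produces ceil(input / stride) outputs per axis
    return math.ceil(size / stride)


def resnet_feature_sizes(input_size: int) -> Dict[str, Optional[int]]:
    """Trace the spatial size (height = width) through ResNet18/50 as defined above."""
    sizes: Dict[str, Optional[int]] = {}
    s = same_out(input_size, 2)  # conv1: 7x7, stride 2
    sizes['conv1'] = s
    s = same_out(s, 2)           # pool1: 3x3 max pool, stride 2
    sizes['pool1'] = s
    # Only the first block of each stage (ConvBlock) carries the stride
    for stage, stride in (('conv2_x', 1), ('conv3_x', 2), ('conv4_x', 2), ('conv5_x', 2)):
        s = same_out(s, stride)
        sizes[stage] = s
    # pool5: 7x7 average pool, stride 1, padding='valid'; None means the layer cannot be built
    sizes['pool5'] = s - 7 + 1 if s >= 7 else None
    return sizes


if __name__ == '__main__':
    print(resnet_feature_sizes(224))
    print(resnet_feature_sizes(160))
```

For a 224×224 input this gives a 7×7 map before pool5 and 1×1 after it, which is why `INPUT_SIZE = 224` pairs with the 7×7 pool. For 160×160, conv5_x is only 5×5, so pool5 would typically fail with a negative-dimension error.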

train_resnet18.py

```python
from tensorflow import keras
from tensorflow.keras import layers
# For keras==2.0.8 write: from tensorflow.python.keras import layers
# pip install keras==2.0.8 -i https://pypi.tuna.tsinghua.edu.cn/simple

INPUT_SIZE = 224
CLASS_NUM = 2

# stage_name=2,3,4,5; block_name=a,b,c


def ConvBlock(input_tensor, num_output, stride, stage_name, block_name):
    # Basic residual block with a projection shortcut (1x1 conv + BN),
    # used as the first block of each stage
    filter1, filter2 = num_output

    x = layers.Conv2D(filter1, 3, strides=stride, padding='same', name='res'+stage_name+block_name+'_branch2a')(input_tensor)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2a')(x)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2a_relu')(x)

    x = layers.Conv2D(filter2, 3, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2b')(x)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2b')(x)
    # Note: the original ResNet basic block has no ReLU here; it applies ReLU only after the addition
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2b_relu')(x)

    shortcut = layers.Conv2D(filter2, 1, strides=stride, padding='same', name='res'+stage_name+block_name+'_branch1')(input_tensor)
    shortcut = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch1')(shortcut)

    x = layers.add([x, shortcut], name='res'+stage_name+block_name)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_relu')(x)
    return x


def IdentityBlock(input_tensor, num_output, stage_name, block_name):
    # Basic residual block with an identity shortcut (input and output shapes match)
    filter1, filter2 = num_output

    x = layers.Conv2D(filter1, 3, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2a')(input_tensor)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2a')(x)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2a_relu')(x)

    x = layers.Conv2D(filter2, 3, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2b')(x)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2b')(x)
    # Note: as in ConvBlock, the original ResNet applies no ReLU before the addition
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2b_relu')(x)

    shortcut = input_tensor

    x = layers.add([x, shortcut], name='res'+stage_name+block_name)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_relu')(x)
    return x


def ResNet18(input_shape, class_num):
    inputs = keras.Input(shape=input_shape, name='input')

    # conv1
    x = layers.Conv2D(64, 7, strides=(2, 2), padding='same', name='conv1')(inputs)  # 7×7, 64, stride 2
    x = layers.BatchNormalization(name='bn_conv1')(x)
    x = layers.Activation('relu', name='conv1_relu')(x)
    x = layers.MaxPooling2D((3, 3), strides=2, padding='same', name='pool1')(x)  # 3×3 max pool, stride 2

    # conv2_x
    x = ConvBlock(input_tensor=x, num_output=(64, 64), stride=(1, 1), stage_name='2', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(64, 64), stage_name='2', block_name='b')

    # conv3_x
    x = ConvBlock(input_tensor=x, num_output=(128, 128), stride=(2, 2), stage_name='3', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(128, 128), stage_name='3', block_name='b')

    # conv4_x
    x = ConvBlock(input_tensor=x, num_output=(256, 256), stride=(2, 2), stage_name='4', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(256, 256), stage_name='4', block_name='b')

    # conv5_x
    x = ConvBlock(input_tensor=x, num_output=(512, 512), stride=(2, 2), stage_name='5', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(512, 512), stage_name='5', block_name='b')

    # average pool, fc with class_num outputs, softmax
    x = layers.AveragePooling2D((7, 7), strides=(1, 1), name='pool5')(x)
    x = layers.Flatten(name='flatten')(x)
    x = layers.Dense(class_num, activation='softmax', name='fc1000')(x)

    model = keras.Model(inputs, x, name='resnet18')
    model.summary()
    return model


if __name__ == '__main__':
    model = ResNet18((INPUT_SIZE, INPUT_SIZE, 3), CLASS_NUM)
    print('Done.')
```
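As listed, train_resnet18.py only redefines the network with `CLASS_NUM = 2`; the data loading and training calls are not shown. If the training list uses the same `image_path;label` format that predict_resnet18.py reads from test.txt (an assumption), its lines can be parsed as below. `parse_annotation_lines` is a hypothetical helper; stripping each line before calling `int()` keeps the trailing `'\n'` out of the labels.

```python
from typing import Iterable, List, Tuple


def parse_annotation_lines(lines: Iterable[str]) -> Tuple[List[str], List[int]]:
    """Split 'image_path;label' lines into image paths and integer class ids."""
    paths: List[str] = []
    labels: List[int] = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # skip blank lines, e.g. an empty last line
        fields = line.split(";")
        paths.append(fields[0])
        labels.append(int(fields[1]))  # int() fails early on a malformed label
    return paths, labels


if __name__ == '__main__':
    # Hypothetical sample lines, in the format read by predict_resnet18.py
    sample = ["cat.0.jpg;0\n", "dog.0.jpg;1\n", "\n"]
    print(parse_annotation_lines(sample))
```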

predict_resnet18.py

```python
import os

import cv2
import matplotlib.pyplot as plt
import numpy as np
from tensorflow.keras import backend as K  # K.set_image_dim_ordering('tf')
from tensorflow.keras.utils import to_categorical

from ResNet18 import ResNet18  # the model file from Section 1, saved as ResNet18.py

INPUT_IMG_SIZE = 224
NUM_CLASSES = 2

label_dict = {0: 'CAT', 1: 'DOG'}


def show_predict_probability(y_gts, predictions, x_imgs, predict_probabilitys, idx):
    # Print every class probability for sample idx, then show the image
    for i in range(len(label_dict)):
        print(label_dict[i] + ', Probability:%1.9f' % (predict_probabilitys[idx][i]))
    print('label: ', label_dict[int(y_gts[idx])], ', predict: ', label_dict[predictions[idx]])
    plt.figure(figsize=(2, 2))
    plt.imshow(np.reshape(x_imgs[idx], (INPUT_IMG_SIZE, INPUT_IMG_SIZE, 3)))
    plt.show()


def plot_images_labels_prediction(images, labels, prediction, idx, num):
    # Show num images starting at idx, titled with ground truth and prediction
    fig = plt.gcf()
    fig.set_size_inches(12, 14)
    if num > 10:
        num = 10  # the 2x5 subplot grid holds at most 10 images
    for i in range(0, num):
        ax = plt.subplot(2, 5, 1 + i)
        ax.imshow(images[idx], cmap='binary')
        title = 'labels=' + str(labels[idx])
        if len(prediction) > 0:
            title += ', prediction=' + str(prediction[idx])
        ax.set_title(title, fontsize=10)
        idx += 1
    plt.show()


if __name__ == '__main__':
    log_path = r"D:\02.Work\00.LearnML\003.Net\ResNet\log"
    model = ResNet18((INPUT_IMG_SIZE, INPUT_IMG_SIZE, 3), NUM_CLASSES)
    model.load_weights(os.path.join(log_path, "resnet18.h5"))

    ### cat dog dataset
    root_path = r"D:\03.Data\01.CatDog"
    # Each line of test.txt has the form: image_path;label
    with open(os.path.join(root_path, "test.txt")) as f:
        lines = f.readlines()

    x_images_normalize = []
    y_labels_onehot = []
    y_labels = []
    for line in lines:
        line = line.strip()  # drop the trailing newline so the label parses cleanly
        if not line:
            continue
        fields = line.split(";")
        img_path, label_id = fields[0], int(fields[1])

        img = cv2.imread(img_path)
        if img is None:
            raise FileNotFoundError(img_path)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR; the model expects RGB
        img = cv2.resize(img, (INPUT_IMG_SIZE, INPUT_IMG_SIZE))
        img = img / 255  # scale pixel values to [0, 1]
        x_images_normalize.append(img)

        y_labels_onehot.append(to_categorical(label_id, num_classes=NUM_CLASSES))
        y_labels.append(label_id)

    x_images_normalize = np.array(x_images_normalize)
    # x_images_normalize = x_images_normalize.reshape(-1, INPUT_IMG_SIZE, INPUT_IMG_SIZE, 3)
    y_labels_onehot = np.array(y_labels_onehot)

    predict_probability = model.predict(x_images_normalize, verbose=1)  # shape (N, NUM_CLASSES)
    predict = np.argmax(predict_probability, axis=1)  # index of the most probable class

    plot_images_labels_prediction(x_images_normalize, y_labels, predict, 0, 10)
    show_predict_probability(y_labels, predict, x_images_normalize, predict_probability, 0)
    print('done')
```
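The prediction step reduces to an `np.argmax` over the softmax outputs, and `to_categorical` is a one-hot encoding of integer labels. The NumPy-only sketch below mirrors both so the decoding logic can be checked without a trained model; `one_hot` and `decode_predictions` are hypothetical helpers, and the probabilities in the usage are made-up numbers, not results from this model.

```python
import numpy as np

label_dict = {0: 'CAT', 1: 'DOG'}


def one_hot(labels, num_classes):
    """One-hot encode 1-D integer labels (like keras.utils.to_categorical for this case)."""
    return np.eye(num_classes, dtype=np.float32)[np.asarray(labels, dtype=int)]


def decode_predictions(probabilities, labels):
    """Map softmax rows to class names and compute accuracy against integer labels."""
    predicted = np.argmax(probabilities, axis=1)  # most probable class per row
    names = [label_dict[int(i)] for i in predicted]
    accuracy = float(np.mean(predicted == np.asarray(labels)))
    return names, accuracy


if __name__ == '__main__':
    fake_probs = np.array([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]])  # made-up softmax outputs
    print(decode_predictions(fake_probs, [0, 1, 1]))
    print(one_hot([1, 0], 2))
```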

2.ResNet50

ResNet50

```python
from tensorflow import keras
from tensorflow.keras import layers

INPUT_SIZE = 224
CLASS_NUM = 1000

# stage_name=2,3,4,5; block_name=a,b,c


def ConvBlock(input_tensor, num_output, stride, stage_name, block_name):
    # Bottleneck block (1x1 -> 3x3 -> 1x1) with a projection shortcut (1x1 conv + BN),
    # used as the first block of each stage
    filter1, filter2, filter3 = num_output

    x = layers.Conv2D(filter1, 1, strides=stride, padding='same', name='res'+stage_name+block_name+'_branch2a')(input_tensor)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2a')(x)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2a_relu')(x)

    x = layers.Conv2D(filter2, 3, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2b')(x)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2b')(x)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2b_relu')(x)

    x = layers.Conv2D(filter3, 1, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2c')(x)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2c')(x)  # no ReLU before the addition

    shortcut = layers.Conv2D(filter3, 1, strides=stride, padding='same', name='res'+stage_name+block_name+'_branch1')(input_tensor)
    shortcut = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch1')(shortcut)

    x = layers.add([x, shortcut], name='res'+stage_name+block_name)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_relu')(x)
    return x


def IdentityBlock(input_tensor, num_output, stage_name, block_name):
    # Bottleneck block with an identity shortcut (input and output shapes match)
    filter1, filter2, filter3 = num_output

    x = layers.Conv2D(filter1, 1, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2a')(input_tensor)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2a')(x)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2a_relu')(x)

    x = layers.Conv2D(filter2, 3, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2b')(x)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2b')(x)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_branch2b_relu')(x)

    x = layers.Conv2D(filter3, 1, strides=(1, 1), padding='same', name='res'+stage_name+block_name+'_branch2c')(x)
    x = layers.BatchNormalization(name='bn'+stage_name+block_name+'_branch2c')(x)

    shortcut = input_tensor

    x = layers.add([x, shortcut], name='res'+stage_name+block_name)
    x = layers.Activation('relu', name='res'+stage_name+block_name+'_relu')(x)
    return x


def ResNet50(input_shape, class_num):
    inputs = keras.Input(shape=input_shape, name='input')

    # conv1
    x = layers.Conv2D(64, 7, strides=(2, 2), padding='same', name='conv1')(inputs)  # 7×7, 64, stride 2
    x = layers.BatchNormalization(name='bn_conv1')(x)
    x = layers.Activation('relu', name='conv1_relu')(x)
    x = layers.MaxPooling2D((3, 3), strides=2, padding='same', name='pool1')(x)  # 3×3 max pool, stride 2

    # conv2_x
    x = ConvBlock(input_tensor=x, num_output=(64, 64, 256), stride=(1, 1), stage_name='2', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(64, 64, 256), stage_name='2', block_name='b')
    x = IdentityBlock(input_tensor=x, num_output=(64, 64, 256), stage_name='2', block_name='c')

    # conv3_x
    x = ConvBlock(input_tensor=x, num_output=(128, 128, 512), stride=(2, 2), stage_name='3', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(128, 128, 512), stage_name='3', block_name='b')
    x = IdentityBlock(input_tensor=x, num_output=(128, 128, 512), stage_name='3', block_name='c')
    x = IdentityBlock(input_tensor=x, num_output=(128, 128, 512), stage_name='3', block_name='d')

    # conv4_x
    x = ConvBlock(input_tensor=x, num_output=(256, 256, 1024), stride=(2, 2), stage_name='4', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(256, 256, 1024), stage_name='4', block_name='b')
    x = IdentityBlock(input_tensor=x, num_output=(256, 256, 1024), stage_name='4', block_name='c')
    x = IdentityBlock(input_tensor=x, num_output=(256, 256, 1024), stage_name='4', block_name='d')
    x = IdentityBlock(input_tensor=x, num_output=(256, 256, 1024), stage_name='4', block_name='e')
    x = IdentityBlock(input_tensor=x, num_output=(256, 256, 1024), stage_name='4', block_name='f')

    # conv5_x
    x = ConvBlock(input_tensor=x, num_output=(512, 512, 2048), stride=(2, 2), stage_name='5', block_name='a')
    x = IdentityBlock(input_tensor=x, num_output=(512, 512, 2048), stage_name='5', block_name='b')
    x = IdentityBlock(input_tensor=x, num_output=(512, 512, 2048), stage_name='5', block_name='c')

    # average pool, 1000-d fc, softmax
    x = layers.AveragePooling2D((7, 7), strides=(1, 1), name='pool5')(x)
    x = layers.Flatten(name='flatten')(x)
    x = layers.Dense(class_num, activation='softmax', name='fc1000')(x)

    model = keras.Model(inputs, x, name='resnet50')
    model.summary()
    return model


if __name__ == '__main__':
    model = ResNet50((INPUT_SIZE, INPUT_SIZE, 3), CLASS_NUM)
    print('Done.')
```
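The names ResNet18 and ResNet50 count weighted layers: conv1, the convolutions on the residual branches, and the final fully connected layer (the 1×1 shortcut convolutions are not counted). The sketch below checks that arithmetic for the block counts used in the two listings ([2, 2, 2, 2] basic blocks vs. [3, 4, 6, 3] bottleneck blocks) and compares the parameter count of one identity block of each kind. It assumes Keras defaults: `Conv2D` has a bias (`use_bias=True`) and `BatchNormalization` stores 4 values per channel (gamma, beta, moving mean, moving variance). All function names are hypothetical.

```python
from typing import Sequence


def conv_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    # k*k*c_in*c_out weights plus one bias per output channel
    return k * k * c_in * c_out + (c_out if bias else 0)


def bn_params(c: int) -> int:
    # gamma, beta (trainable) + moving_mean, moving_variance (non-trainable)
    return 4 * c


def basic_identity_block_params(c: int) -> int:
    # ResNet18 IdentityBlock: two 3x3 convs, each followed by BN
    return 2 * (conv_params(3, c, c) + bn_params(c))


def bottleneck_identity_block_params(c_in: int, f1: int, f2: int, f3: int) -> int:
    # ResNet50 IdentityBlock: 1x1 (f1) -> 3x3 (f2) -> 1x1 (f3), each followed by BN
    return (conv_params(1, c_in, f1) + bn_params(f1)
            + conv_params(3, f1, f2) + bn_params(f2)
            + conv_params(1, f2, f3) + bn_params(f3))


def weighted_depth(blocks_per_stage: Sequence[int], convs_per_block: int) -> int:
    # conv1 + residual-branch convs + final fc layer
    return 1 + sum(blocks_per_stage) * convs_per_block + 1


if __name__ == '__main__':
    print('ResNet18 depth:', weighted_depth([2, 2, 2, 2], 2))
    print('ResNet50 depth:', weighted_depth([3, 4, 6, 3], 3))
    print('basic block, 64 ch:', basic_identity_block_params(64))
    print('bottleneck, 256-64-64-256:', bottleneck_identity_block_params(256, 64, 64, 256))
```

Under these assumptions a 64-channel basic identity block has 74,368 parameters, while the 256-64-64-256 bottleneck identity block of conv2_x has 71,552: the bottleneck carries 4× wider features at roughly the same cost, which is what lets ResNet50 go deeper.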