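Preface

After the previous post on VGG16, I could not get VGG16 to train successfully. It turned out that my computer did not have enough available GPU memory, so I gave up on it.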

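In the 2015 ILSVRC and COCO competitions, the ResNet network proposed by Kaiming He's team took first place, and it has since become a classic. Mu Li (李沐) has said that if you are going to study one CNN, it should be the residual network ResNet. ResNet outperforms VGG and laid the groundwork for later research.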

For reference, here is a translation of the ResNet paper: https://blog.csdn.net/weixin_42858575/article/details/93305238

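Earlier neural networks had two problems:

Convergence is slow. When I trained VGG16 for cifar classification, it converged several times, even up to ten times, more slowly than ResNet. Moreover, once gradients explode or vanish, convergence suffers.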

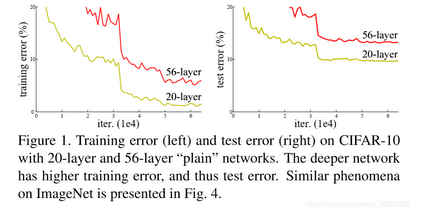

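As the network gets deeper, accuracy saturates and then starts to drop. This is called degradation.

The figure above shows that, for a given number of training iterations, a suitably shallow network has lower training error and test error than a deeper one.

At the time, ResNet overturned the consensus that a deeper network always performs better. In addition, the residual structure speeds up learning, makes the model easier to train, and effectively guards against exploding or vanishing gradients.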
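As a rough illustration of the vanishing-gradient point, here is a toy sketch rather than the paper's analysis. It assumes a chain of scalar layers that all share the same local derivative `d` (a hypothetical value chosen for illustration). A plain chain multiplies these derivatives together, whereas a residual chain `y = x + f(x)` multiplies `1 + d`, so the identity path keeps the gradient from shrinking towards zero.

```python
def plain_chain_grad(d, depth):
    """Gradient of the output w.r.t. the input through `depth` plain layers."""
    grad = 1.0
    for _ in range(depth):
        grad *= d            # each layer contributes its local derivative d
    return grad


def residual_chain_grad(d, depth):
    """Same chain, but every layer is y = x + f(x), so its derivative is 1 + d."""
    grad = 1.0
    for _ in range(depth):
        grad *= 1.0 + d      # the shortcut adds the constant 1
    return grad


depth = 20   # hypothetical depth
d = 0.1      # hypothetical local derivative of each layer
plain = plain_chain_grad(d, depth)
residual = residual_chain_grad(d, depth)
print(plain, residual)
```

With these toy values the plain gradient is vanishingly small, while the residual one stays above 1. The sketch only speaks to vanishing gradients; it does not show anything about exploding ones.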

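Why do residual networks learn features more easily?

- According to the paper, ResNet does not use Dropout. Instead it relies on BN (batch normalization) layers and an average pooling layer for regularization, which effectively speeds up training.

- It uses very few pooling layers, which indirectly speeds up training.

- The residual structure reduces the learning burden. During training, the shortcut connections can carry the highly redundant parts, so overall the network no longer depends on learning the entire mapping and can therefore learn better.

- Another possibility is that ResNet behaves like ensemble learning, combining the residual modules in some weighted fashion. This is only a guess. I am noting it here so that I can check it against the literature later.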
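The third point can be made concrete with a small NumPy sketch. It is a simplification: it assumes dense layers in place of the convolutions and omits batch normalization, and `residual_block`, `w1`, and `w2` are illustrative names that do not appear in the code later in this post. The block computes `relu(F(x) + x)`, so when the residual branch `F` outputs zero the block reduces to an identity mapping, and the layers only have to learn the deviation from identity.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """y = relu(F(x) + x), with F(x) = relu(x @ w1) @ w2 (dense layers for brevity)."""
    fx = relu(x @ w1) @ w2
    return relu(fx + x)


x = np.array([[1.0, 2.0, 3.0]])
zeros = np.zeros((3, 3))

# With all-zero weights F(x) = 0, so the block passes x straight through.
# (x is non-negative here, so the final ReLU leaves it unchanged.)
y = residual_block(x, zeros, zeros)
print(y)
```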

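Distinguishing degradation from overfitting:

Degradation: as network depth increases, accuracy saturates and may even drop.

Overfitting: the network fits the training set well but performs poorly on the unseen test set.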
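The two definitions can be turned into a rough rule of thumb. The helper below is a hypothetical sketch, not a standard diagnostic: `diagnose`, its arguments, and the `gap` threshold are all assumptions made for illustration. The idea is that degradation shows up as a deeper network having a higher training error than a shallower one, while overfitting shows up as a large gap between training and test error.

```python
def diagnose(shallow_train_err, deep_train_err, deep_test_err, gap=0.05):
    """Label the behaviour of a deeper network relative to a shallower one."""
    if deep_train_err > shallow_train_err:
        # The deeper network cannot even fit the training set as well
        return "degradation"
    if deep_test_err - deep_train_err > gap:
        # Fits the training set, but does not generalize
        return "overfitting"
    return "neither"


# Made-up error rates, for illustration only
print(diagnose(shallow_train_err=0.10, deep_train_err=0.15, deep_test_err=0.17))
print(diagnose(shallow_train_err=0.10, deep_train_err=0.02, deep_test_err=0.20))
```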

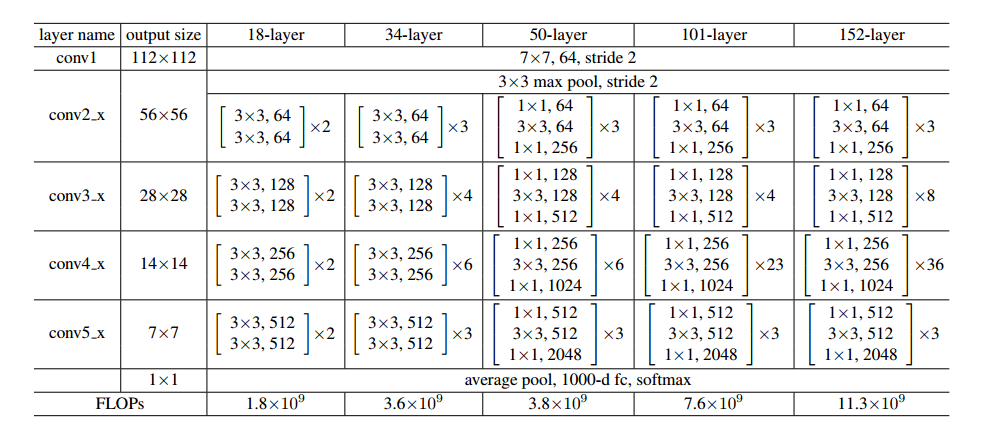

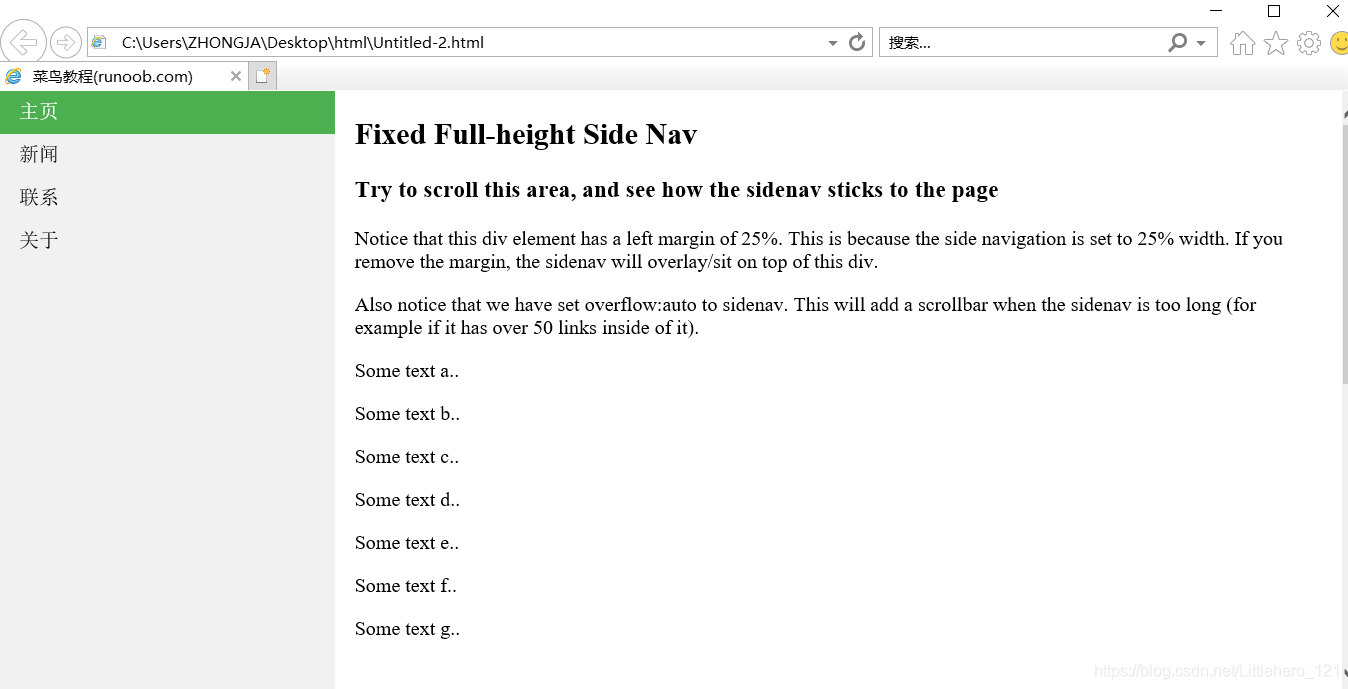

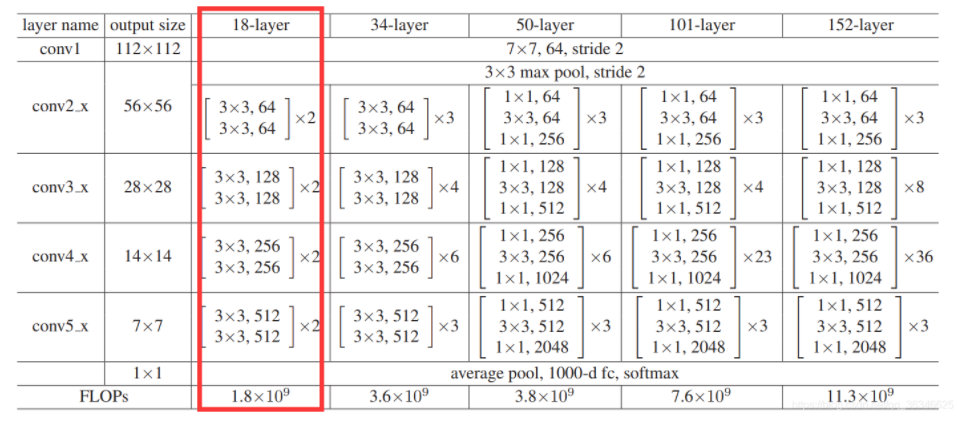

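The figure shows the ResNet family: Resnet18, Resnet34, Resnet50, Resnet101, and Resnet152.

I could not find a Resnet18 network in keras, so Resnet18 is what I reproduce here. If you spot a problem, please contact me. I checked my version against several posts and the pytorch source, and the code itself follows the keras source.

Code

Resnet18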

from keras import backend
from keras.applications import imagenet_utils
from keras.engine import training
from keras.layers import VersionAwareLayers

layers = VersionAwareLayers()


def ResNet18(input_tensor=None, input_shape=None, classes=1000, use_bias=True,
             classifier_activation='softmax', weights=None):
    bn_axis = 3 if backend.image_data_format() == 'channels_last' else 1

    if input_tensor is None:
        img_input = layers.Input(shape=input_shape)
    else:
        if not backend.is_keras_tensor(input_tensor):
            img_input = layers.Input(tensor=input_tensor, shape=input_shape)
        else:
            img_input = input_tensor

    # conv1_conv
    x = layers.ZeroPadding2D(padding=((3, 3), (3, 3)), name='conv1_pad')(img_input)
    x = layers.Conv2D(64, 7, strides=2, use_bias=use_bias, name='conv1_conv')(x)
    x = layers.BatchNormalization(axis=bn_axis, epsilon=1.001e-5, name='conv1_bn')(x)
    x = layers.Activation('relu', name='conv1_relu')(x)
    x = layers.ZeroPadding2D(padding=((1, 1), (1, 1)), name='pool1_pad')(x)
    x = layers.MaxPooling2D(3, strides=2, name='pool1_pool')(x)

    # stage 2 convolutions
    x = block1(x, filters=64, kernel_size=3, conv_shortcut=False, name="conv2_block1")
    x = block1(x, filters=64, kernel_size=3, conv_shortcut=False, name="conv2_block2")

    # stage 3 convolutions
    x = block1(x, filters=128, kernel_size=3, stride=2, conv_shortcut=True, name="conv3_block1")
    x = block1(x, filters=128, kernel_size=3, conv_shortcut=False, name="conv3_block2")

    # stage 4 convolutions
    x = block1(x, filters=256, kernel_size=3, stride=2, conv_shortcut=True, name="conv4_block1")
    x = block1(x, filters=256, kernel_size=3, conv_shortcut=False, name="conv4_block2")

    # stage 5 convolutions
    x = block1(x, filters=512, kernel_size=3, stride=2, conv_shortcut=True, name="conv5_block1")
    x = block1(x, filters=512, kernel_size=3, conv_shortcut=False, name="conv5_block2")

    x = layers.GlobalAveragePooling2D(name='avg_pool')(x)
    imagenet_utils.validate_activation(classifier_activation, weights)
    x = layers.Dense(classes, activation=classifier_activation, name='predictions')(x)

    model = training.Model(img_input, x, name="Resnet18")
    return model


def block1(x, filters, kernel_size=3, stride=1, conv_shortcut=True, name=None):
    bn_axis = 3 if backend.image_data_format() == 'channels_last' else 1

    if conv_shortcut:
        # 1x1 projection so the shortcut matches the new shape
        shortcut = layers.Conv2D(filters, 1, strides=stride, name=name + '_0_conv')(x)
        shortcut = layers.BatchNormalization(axis=bn_axis, epsilon=1.001e-5, name=name + '_0_bn')(shortcut)
    else:
        # identity shortcut
        shortcut = x

    x = layers.Conv2D(filters, kernel_size, strides=stride, padding='SAME', name=name + '_1_conv')(x)
    x = layers.BatchNormalization(axis=bn_axis, epsilon=1.001e-5, name=name + '_1_bn')(x)
    x = layers.Activation('relu', name=name + '_1_relu')(x)

    x = layers.Conv2D(filters, kernel_size, padding='SAME', name=name + '_2_conv')(x)
    x = layers.BatchNormalization(axis=bn_axis, epsilon=1.001e-5, name=name + '_2_bn')(x)

    x = layers.Add(name=name + '_add')([x, shortcut])
    x = layers.Activation('relu', name=name + '_out')(x)
    return x

from resnet18_version1.resnet import ResNet18
from keras.utils.vis_utils import plot_model

# Save the structure of the keras Resnet18 model with plot_model
model = ResNet18(input_shape=(224, 224, 3), classes=10)
model.summary()
plot_model(model, to_file="./resnet18.png", show_shapes=True)

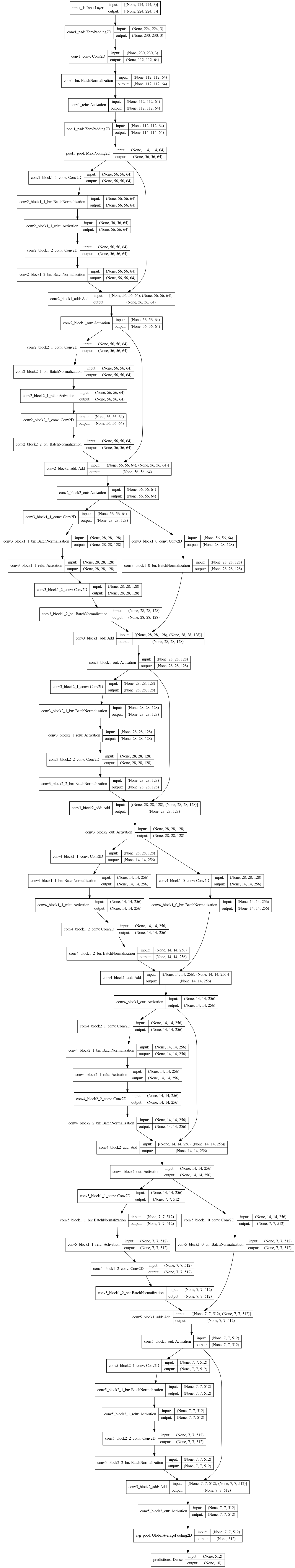

The figure shows the Resnet18 structure saved by the plot_model function. Feel free to point out any problems.
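The spatial sizes in the structure can also be checked by hand. The sketch below applies the usual output-size formula `(size + 2 * pad - kernel) // stride + 1` to the padding, kernel, and stride values from the `ResNet18` code above. `conv_out` and `resnet18_feature_sizes` are helper names introduced here for illustration only.

```python
def conv_out(size, kernel, stride, pad):
    """Output size of a convolution or pooling layer along one spatial dimension."""
    return (size + 2 * pad - kernel) // stride + 1


def resnet18_feature_sizes(size):
    """Spatial size after each stage for a square input of side `size`."""
    sizes = {}
    size = conv_out(size, kernel=7, stride=2, pad=3)   # conv1_pad + conv1_conv
    sizes["conv1"] = size
    size = conv_out(size, kernel=3, stride=2, pad=1)   # pool1_pad + pool1_pool
    sizes["pool1"] = size
    sizes["conv2"] = size                              # stride 1 with 'SAME' padding
    for stage in ("conv3", "conv4", "conv5"):
        size = -(-size // 2)                           # stride 2 with 'SAME' padding: ceil(size / 2)
        sizes[stage] = size
    return sizes


# 1 stem convolution + 4 stages x 2 blocks x 2 convolutions + 1 dense layer
weighted_layers = 1 + 4 * 2 * 2 + 1

print(resnet18_feature_sizes(224))
print(resnet18_feature_sizes(32))
print(weighted_layers)
```

For a 224x224 input the final feature map is 7x7. For a 32x32 cifar10 image it is already 1x1 before global average pooling. The count of 18 follows the usual convention of leaving out the 1x1 shortcut convolutions.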

Training

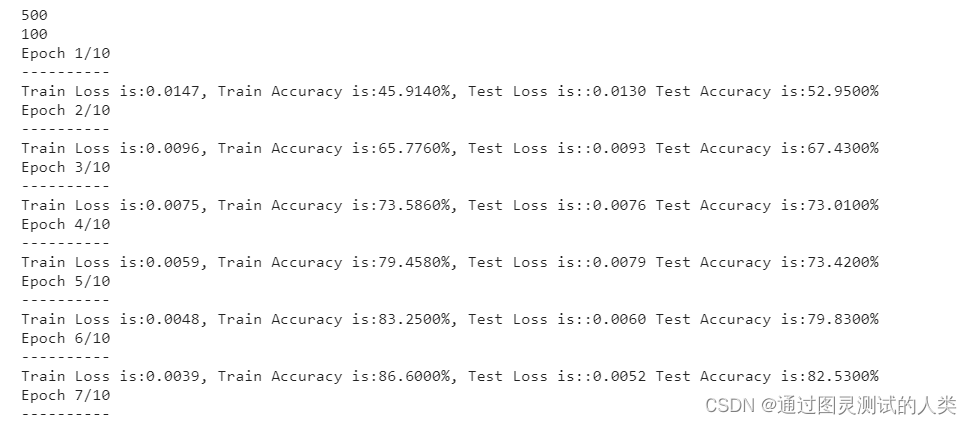

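Once again I train on cifar10, split into training, validation, and test sets. The code follows. The training procedure is the same as in the previous VGG16 post, which you can refer to. According to others' experience and competition records, the test accuracy should be 85%+ without major changes to the model.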
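The split itself can be sketched without touching the file system. The `gen_data` function in the script below shuffles the files of each class and moves the first 10% into a validation folder. The helper here mirrors that logic on in-memory lists. `split_per_class`, `valid_fraction`, `seed`, and the placeholder file names are assumptions made for this sketch.

```python
import random


def split_per_class(files_by_class, valid_fraction=0.1, seed=0):
    """Shuffle each class and move the first `valid_fraction` of it to validation."""
    rng = random.Random(seed)
    train, valid = {}, {}
    for label, files in files_by_class.items():
        shuffled = rng.sample(files, len(files))
        n_valid = int(len(shuffled) * valid_fraction)
        valid[label] = shuffled[:n_valid]
        train[label] = shuffled[n_valid:]
    return train, valid


# cifar10 has 5,000 training images per class; the file names are placeholders
files_by_class = {label: [f"{label}_{i}.jpg" for i in range(5000)] for label in range(10)}
train_files, valid_files = split_per_class(files_by_class)

n_train = sum(len(v) for v in train_files.values())
n_valid = sum(len(v) for v in valid_files.values())
print(n_train, n_valid)
```

This leaves 45,000 images for training and 5,000 for validation, with the 10,000 cifar10 test images kept as the test set.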

import glob
import os, sys
import random
from concurrent.futures import ThreadPoolExecutor

import cv2
import numpy as np
import tensorflow as tf
from keras.applications.resnet import ResNet, ResNet50
from keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.applications.vgg16 import VGG16

from resnet18_version1.resnet import ResNet18

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

# tensorflow 2.0 style
config = tf.compat.v1.ConfigProto()
config.gpu_options.per_process_gpu_memory_fraction = 0.98
config.gpu_options.allow_growth = True
sess = tf.compat.v1.Session(config=config)

cifarlabel = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']
train_path = r""
test_path = r""
valid_path = r""
batch_size = 8


def train():
    # Data augmentation
    transform = ImageDataGenerator(rescale=1. / 255, rotation_range=random.randint(0, 30),
                                   width_shift_range=random.randint(0, 5),
                                   height_shift_range=random.randint(0, 5))
    train_data = transform.flow_from_directory(train_path, target_size=(32, 32), shuffle=True, batch_size=batch_size)
    valid_data = transform.flow_from_directory(valid_path, target_size=(32, 32), shuffle=True, batch_size=batch_size)
    model = ResNet18(weights=None, classes=10, input_shape=(32, 32, 3))
    early_stopping = tf.keras.callbacks.EarlyStopping(monitor='loss', min_delta=0.001, patience=3, verbose=0,
                                                      mode='auto', baseline=None, restore_best_weights=False)
    model.compile(optimizer="Adam", loss=tf.keras.losses.categorical_crossentropy, metrics=['accuracy'])
    # fit_generator is deprecated in TF 2.x; model.fit accepts generators as well
    model.fit_generator(train_data, epochs=50, callbacks=[early_stopping], validation_data=valid_data)
    model.save("./resnet18.h5")


def write_img(path, img, label, index):
    print(index)
    label = str(label)
    label_dir = os.path.join(path, label)
    if not os.path.exists(label_dir):
        os.makedirs(label_dir)
    img_path = os.path.join(label_dir, str(index) + ".jpg")
    cv2.imwrite(img_path, img)


def gen_data():
    (train_image, train_label), (test_image, test_label) = tf.keras.datasets.cifar10.load_data()
    print(train_image[3].shape, cifarlabel[int(train_label[3])])  # inspect the data format

    path = train_path
    with ThreadPoolExecutor(max_workers=None) as t:
        for index, i in enumerate(range(len(train_image))):
            t.submit(write_img, path, train_image[index], int(train_label[index]), index)

    path = test_path
    with ThreadPoolExecutor(max_workers=None) as t:
        for index, i in enumerate(range(len(test_image))):
            t.submit(write_img, path, test_image[index], int(test_label[index]), index)

    # Move a random 10% of each training class into the validation folder
    valid_path = os.path.join(os.path.dirname(train_path), "valid")
    for i in os.listdir(train_path):
        dirname = os.path.join(train_path, i)
        valid_dir = os.path.join(valid_path, i)
        if not os.path.exists(valid_dir):
            os.makedirs(valid_dir)
        files = os.listdir(dirname)
        files = random.sample(files, len(files))
        for j in files[:int(len(files) * 0.1)]:
            os.rename(os.path.join(dirname, j), os.path.join(valid_dir, j))


# keras flow_from_directory yields batches of images in an endless loop.
# Here is a pseudo-iterator that imitates flow_from_directory.
# It has many shortcomings compared with the original, and it only handles a two-level directory layout.
# My class names are numeric, which is what the code here relies on.
# For a custom dataset you need to adapt this code yourself.
def loader(path, batch_size, target_size=(224, 224), shuffle=True):
    files = glob.glob(os.path.join(path, "*", "*.jpg"))
    if shuffle:
        random.shuffle(files)
    labels = [os.path.basename(os.path.dirname(file)) for file in files]
    # print(files[0],labels[0])
    for i in range(0, len(files), batch_size):
        imgs = files[i:i + batch_size]
        imgs = [cv2.imread(img) for img in imgs]
        imgs = [cv2.resize(img, target_size) for img in imgs]
        imgs = [tf.convert_to_tensor(img) for img in imgs]
        imgs = tf.convert_to_tensor(imgs)
        labelss = labels[i:i + batch_size]
        yield (imgs, labelss)


def test():
    # Preprocessing: at first I did not normalize the images, and the accuracy stayed around 10%
    transform = ImageDataGenerator(rescale=1. / 255)
    # target_size should match the input size the model was trained with
    test_data = transform.flow_from_directory(test_path, target_size=(224, 224), shuffle=True, batch_size=batch_size,
                                              class_mode="sparse")
    model = tf.keras.models.load_model("/data/kile/other/vgg16/resnet18_version1/model/0.415565_resnet18.h5",
                                       compile=False)
    allCount = 0
    trueCount = 0
    for index, i in enumerate(test_data):
        # the generator loops forever, so stop after one pass
        if index >= len(test_data):
            break
        predict_result = model.predict(i[0])
        # predicted labels
        predict_label = np.argmax(predict_result, axis=1).tolist()
        # ground-truth labels
        label = i[1]
        print(allCount, predict_label, label)
        for a, b in zip(predict_label, label):
            allCount += 1
            if a == int(b):
                trueCount += 1
    print(f"allCount:{allCount} trueCount:{trueCount} true_percent:{trueCount / allCount}")


if __name__ == '__main__':
    # gen_data()  # only needed the first time, no need to repeat
    train()
    test()

Training results: I trained for 20 epochs in total, and the model reached fairly good results around epochs 15-18.

Epoch 1/20

2022-01-02 13:55:16.442882: I tensorflow/stream_executor/cuda/cuda_dnn.cc:369] Loaded cuDNN version 8204

2813/2813 [==============================] - 472s 166ms/step - loss: 1.4426 - accuracy: 0.4791 - lr: 0.0100 - val_loss: 1.2521 - val_accuracy: 0.5508 - val_lr: 0.0100

/root/anaconda3/envs/py36/lib/python3.6/site-packages/keras/utils/generic_utils.py:497: CustomMaskWarning: Custom mask layers require a config and must override get_config. When loading, the custom mask layer must be passed to the custom_objects argument.category=CustomMaskWarning)

Epoch 2/20

2813/2813 [==============================] - 468s 166ms/step - loss: 0.9934 - accuracy: 0.6509 - lr: 0.0100 - val_loss: 1.1318 - val_accuracy: 0.6276 - val_lr: 0.0100

Epoch 3/20

2813/2813 [==============================] - 465s 165ms/step - loss: 0.7994 - accuracy: 0.7200 - lr: 0.0100 - val_loss: 1.0890 - val_accuracy: 0.6560 - val_lr: 0.0100

Epoch 4/20

2813/2813 [==============================] - 465s 165ms/step - loss: 0.6785 - accuracy: 0.7641 - lr: 0.0100 - val_loss: 1.0904 - val_accuracy: 0.6406 - val_lr: 0.0100

Epoch 5/20

2813/2813 [==============================] - 466s 166ms/step - loss: 0.5909 - accuracy: 0.7921 - lr: 0.0100 - val_loss: 0.7205 - val_accuracy: 0.7576 - val_lr: 0.0100

Epoch 6/20

2813/2813 [==============================] - 469s 167ms/step - loss: 0.5240 - accuracy: 0.8202 - lr: 0.0100 - val_loss: 0.6743 - val_accuracy: 0.7686 - val_lr: 0.0100

Epoch 7/20

2813/2813 [==============================] - 468s 166ms/step - loss: 0.4629 - accuracy: 0.8409 - lr: 0.0100 - val_loss: 0.7149 - val_accuracy: 0.7650 - val_lr: 0.0100

Epoch 8/20

2813/2813 [==============================] - 464s 165ms/step - loss: 0.4110 - accuracy: 0.8576 - lr: 0.0100 - val_loss: 0.7091 - val_accuracy: 0.7670 - val_lr: 0.0100

Epoch 9/20

2813/2813 [==============================] - 466s 166ms/step - loss: 0.3740 - accuracy: 0.8717 - lr: 0.0100 - val_loss: 0.6305 - val_accuracy: 0.7976 - val_lr: 0.0100

Epoch 10/20

2813/2813 [==============================] - 470s 167ms/step - loss: 0.3314 - accuracy: 0.8846 - lr: 0.0100 - val_loss: 0.7267 - val_accuracy: 0.7786 - val_lr: 0.0100

Epoch 11/20

2813/2813 [==============================] - 463s 165ms/step - loss: 0.3009 - accuracy: 0.8947 - lr: 0.0100 - val_loss: 0.6524 - val_accuracy: 0.7990 - val_lr: 0.0100

Epoch 12/20

2813/2813 [==============================] - 463s 165ms/step - loss: 0.2707 - accuracy: 0.9073 - lr: 0.0100 - val_loss: 0.6691 - val_accuracy: 0.7934 - val_lr: 0.0100

Epoch 13/20

2813/2813 [==============================] - 464s 165ms/step - loss: 0.1561 - accuracy: 0.9498 - lr: 9.9999e-04 - val_loss: 0.4140 - val_accuracy: 0.8664 - val_lr: 1.0000e-03

Epoch 14/20

2813/2813 [==============================] - 463s 165ms/step - loss: 0.1306 - accuracy: 0.9608 - lr: 9.9999e-04 - val_loss: 0.4191 - val_accuracy: 0.8628 - val_lr: 1.0000e-03

Epoch 15/20

2813/2813 [==============================] - 472s 168ms/step - loss: 0.1198 - accuracy: 0.9646 - lr: 9.9999e-04 - val_loss: 0.4149 - val_accuracy: 0.8644 - val_lr: 1.0000e-03

Epoch 16/20

2813/2813 [==============================] - 463s 165ms/step - loss: 0.1080 - accuracy: 0.9682 - lr: 9.9999e-04 - val_loss: 0.4156 - val_accuracy: 0.8644 - val_lr: 1.0000e-03

Epoch 17/20

2813/2813 [==============================] - 472s 168ms/step - loss: 0.1019 - accuracy: 0.9697 - lr: 9.9999e-04 - val_loss: 0.4225 - val_accuracy: 0.8684 - val_lr: 1.0000e-03

Epoch 18/20

2813/2813 [==============================] - 464s 165ms/step - loss: 0.0959 - accuracy: 0.9721 - lr: 9.9999e-04 - val_loss: 0.4228 - val_accuracy: 0.8670 - val_lr: 1.0000e-03

Epoch 19/20

2813/2813 [==============================] - 470s 167ms/step - loss: 0.0883 - accuracy: 0.9750 - lr: 9.9999e-04 - val_loss: 0.4216 - val_accuracy: 0.8654 - val_lr: 1.0000e-03

Epoch 20/20

2813/2813 [==============================] - 476s 169ms/step - loss: 0.0868 - accuracy: 0.9752 - lr: 9.9999e-04 - val_loss: 0.4166 - val_accuracy: 0.8664 - val_lr: 1.0000e-03
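The `2813` at the start of each progress line is the number of batches per epoch. As a quick sanity check, assume 45,000 training images remain after 10% of the 50,000 cifar10 training images are moved to the validation set. Then 2813 steps corresponds to a batch size of 16, whereas the `batch_size = 8` in the script above would give 5625 steps. The batch size of 16 is an inference from the log, not something stated in the post, and `steps_per_epoch` is a helper name introduced for this sketch.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of batches needed to cover the dataset once (last batch may be partial)."""
    return math.ceil(num_samples / batch_size)


print(steps_per_epoch(45000, 16))  # 2813
print(steps_per_epoch(45000, 8))   # 5625
```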

Next, I take the model from epoch 16 for testing. The resulting accuracy is about 88%.

9968 [3, 4, 6, 7, 4, 9, 2, 5] [3. 4. 6. 7. 4. 2. 5. 5.]

9976 [9, 9, 3, 4, 4, 7, 4, 5] [9. 9. 3. 4. 4. 7. 4. 5.]

9984 [1, 9, 1, 3, 4, 0, 2, 8] [1. 9. 1. 3. 4. 0. 2. 8.]

9992 [3, 1, 9, 1, 9, 5, 1, 4] [3. 1. 9. 9. 9. 5. 1. 7.]

allCount:10000 trueCount:8792 true_percent:0.8792
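The counting loop in `test()` is equivalent to a vectorized comparison. As a small sketch, the snippet below recomputes the accuracy over only the four batches printed above. The `accuracy` helper is introduced here for illustration and is not part of the original script.

```python
import numpy as np


def accuracy(pred, true):
    """Fraction of predictions that match the ground-truth labels."""
    pred = np.asarray(pred)
    true = np.asarray(true).astype(int)
    return float(np.mean(pred == true))


# The four batches printed above: predictions, then ground-truth labels
preds = [[3, 4, 6, 7, 4, 9, 2, 5], [9, 9, 3, 4, 4, 7, 4, 5],
         [1, 9, 1, 3, 4, 0, 2, 8], [3, 1, 9, 1, 9, 5, 1, 4]]
labels = [[3., 4., 6., 7., 4., 2., 5., 5.], [9., 9., 3., 4., 4., 7., 4., 5.],
          [1., 9., 1., 3., 4., 0., 2., 8.], [3., 1., 9., 9., 9., 5., 1., 7.]]

acc = accuracy(np.concatenate(preds), np.concatenate(labels))
print(acc)  # 0.875 (28 of 32 correct)
```

Over the full test set the same ratio is 8792 / 10000 = 0.8792, which is the `true_percent` reported above.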

Addendum: there is another way to draw the structure of a keras model, and it runs in the browser.

https://netron.app/

I have uploaded the resulting model to a cloud drive. You are welcome to download it and test it.

https://url25.ctfile.com/f/34628125-532522905-23a4e6

(Access password: 3005)