1、主要参考

(1)摄像头参数

https://blog.csdn.net/crazty/article/details/107365147

(2)双目标定方法,过程参照了一下

双目三维重建系统(双目标定+立体校正+双目测距+点云显示)Python_AI吃大瓜的博客-CSDN博客_双目三维重建

(3)直接放源码的都是好人啊,下面的大佬代码参照了一下,大家仔细看看

python、opencv 双目视觉测距代码 - 灰信网(软件开发博客聚合)

(4)另一个大佬的文章

使用OpenCV/Python进行双目测距_程序员老华的博客-CSDN博客

(5)左右摄像头联合标定,下面的代码一定要看一下!!!

【ZED】从零开始使用ZED相机(五):Opencv+Python实现相机标定(双目)_摇曳的树的博客-CSDN博客_python 双目标定

(6)wls滤波的代码的作者地址

https://blog.csdn.net/weixin_40524689/article/details/124929419

2、双目摄像头图像的获取和分割

2.1 某宝买的摄像头

采集分别率可达3840*1080,但是是拼接好的一幅图

2.2 对摄像头进行采集和分割

(1)以下代码将采集的图片分为左右两个摄像头图像,各位1920*1080

#加载opencv模块

import cv2 as cv

import copy

#获取摄像头

cap = cv.VideoCapture(0)# https://blog.csdn.net/crazty/article/details/107365147

# cap.set(cv.CV_CAP_PROP_FRAME_WIDTH, 3840)

# cap.set(cv.CV_CAP_PROP_FRAME_HEIGHT, 1080)

width = 3840

height = 1080

# widht_split = width//2 #注意不能用/这是浮点除法, 要用//,整除法

cap.set(3, width)

cap.set(4, height)

cv.namedWindow('camera', cv.WINDOW_NORMAL)

cv.namedWindow('camera_left', cv.WINDOW_NORMAL)

cv.namedWindow('camera_right', cv.WINDOW_NORMAL)

print("start cap")left_img = None

right_img = Nonewhile (cap.isOpened()):ret, frame = cap.read() #捕获图片if ret == False or frame is None:print("can't get img")breakframe = cv.flip(frame,1) #镜像操作# print(frame.shape)#注意不能用/这是浮点除法, 要用//,整除法left_img = copy.deepcopy(frame[:,:width//2,:]) right_img = copy.deepcopy(frame[:,width//2:,:])cv.imshow("camera", frame)cv.imshow("camera_left", left_img)cv.imshow("camera_right", right_img)key = cv.waitKey(30)if key == ord('q'): #如果是按键r,则退出breakcap.release()

cv.destroyAllWindows()

2.3 对标定板的图片分左右摄像头采集记录

(1)标定板的生成,参照我前面的教程

(7)点云数据处理学习——单摄像头深度估计_chencaw的博客-CSDN博客

(2)图像采集记录程序

#加载opencv模块

import cv2 as cv

import copy

#获取摄像头

cap = cv.VideoCapture(0)# https://blog.csdn.net/crazty/article/details/107365147

# cap.set(cv.CV_CAP_PROP_FRAME_WIDTH, 3840)

# cap.set(cv.CV_CAP_PROP_FRAME_HEIGHT, 1080)

width = 3840

height = 1080

# widht_split = width//2 #注意不能用/这是浮点除法, 要用//,整除法

cap.set(3, width)

cap.set(4, height)

cv.namedWindow('camera', cv.WINDOW_NORMAL)

cv.namedWindow('camera_left', cv.WINDOW_NORMAL)

cv.namedWindow('camera_right', cv.WINDOW_NORMAL)

print("start cap")left_img = None

right_img = None

record_file_basepath = 'D:/RGBD_CAMERA/1chen_code/stereo_vision/'

imgcount = 1

while (cap.isOpened()):ret, frame = cap.read() #捕获图片if ret == False or frame is None:print("can't get img")breakframe = cv.flip(frame,1) #镜像操作# print(frame.shape)#注意不能用/这是浮点除法, 要用//,整除法left_img = copy.deepcopy(frame[:,:width//2,:]) right_img = copy.deepcopy(frame[:,width//2:,:])cv.imshow("camera", frame)cv.imshow("camera_left", left_img)cv.imshow("camera_right", right_img)key = cv.waitKey(10)if key == ord('q'): #如果是按键q,则退出breakif key == ord('r'): #如果是按键r,则分别记录左右摄像头图片# cv.imwrite('D:/RGBD_CAMERA/1chen_code/stereo_vision/leftimg/1.jpg',left_img)cv.imwrite(record_file_basepath+"leftimg/"+str(imgcount)+".jpg",left_img)cv.imwrite(record_file_basepath+"rightimg/"+str(imgcount)+".jpg",right_img)imgcount = imgcount+1cap.release()

cv.destroyAllWindows()

3、左右摄像头联合标定

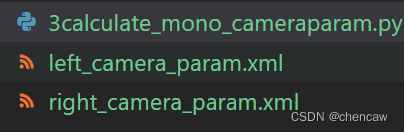

3.1 左右摄像头单独标定(注意,最后没用!)

(1)简单封装了一下,代码如下,转载要备注啊!

import cv2

import numpy as np

import glob#摄像头标定

def calculate_mono_camera_param(imgfile_path,checkerboard_w_num,checkerboard_h_num,checkerboard_real_size,xmfile_name):#(一) 第一步:找棋盘格角点# 设置寻找亚像素角点的参数,采用的停止准则是最大循环次数30和最大误差容限0.001criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001) # 阈值#棋盘格模板规格# w = 8 # 9 - 1 # h = 5 # 6 - 1w = checkerboard_w_num h = checkerboard_h_num # 世界坐标系中的棋盘格点,例如(0,0,0), (1,0,0), (2,0,0) ....,(8,5,0),去掉Z坐标,记为二维矩阵objp = np.zeros((w*h,3), np.float32)objp[:,:2] = np.mgrid[0:w,0:h].T.reshape(-1,2)# objp = objp*18.1 # 18.1 mmobjp = objp * checkerboard_real_size # 30 mm #实际打印方格尺寸# 储存棋盘格角点的世界坐标和图像坐标对objpoints = [] # 在世界坐标系中的三维点imgpoints = [] # 在图像平面的二维点#加载pic文件夹下所有的jpg图像images = glob.glob(imgfile_path + '*.jpg') # 拍摄的十几张棋盘图片所在目录i=0cv2.namedWindow('findCorners', cv2.WINDOW_NORMAL)for fname in images:img = cv2.imread(fname)# 获取画面中心点#获取图像的长宽h1, w1 = img.shape[0], img.shape[1]gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)u, v = img.shape[:2]# 找到棋盘格角点ret, corners = cv2.findChessboardCorners(gray, (w,h),None)# 如果找到足够点对,将其存储起来if ret == True:print("i:", i)i = i+1# 在原角点的基础上寻找亚像素角点cv2.cornerSubPix(gray,corners,(11,11),(-1,-1),criteria)#追加进入世界三维点和平面二维点中objpoints.append(objp)imgpoints.append(corners)# 将角点在图像上显示cv2.drawChessboardCorners(img, (w,h), corners, ret)cv2.resizeWindow('findCorners', 640, 480)cv2.imshow('findCorners',img)cv2.waitKey(200)# cv2.waitKey(2000)cv2.destroyAllWindows()#(二) 第二步:标定print('正在计算......')#标定ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)print('ret',ret) #ret也就是retval,表示的是重投影误差print("mtx:\n",mtx) # 内参数矩阵print("dist畸变值:\n",dist) # 畸变系数 distortion cofficients = (k_1,k_2,p_1,p_2,k_3)print("rvecs旋转(向量)外参:\n",rvecs) # 旋转向量 # 外参数print("tvecs平移(向量)外参:\n",tvecs ) # 平移向量 # 外参数#(三) 第三步:保存摄像机参数print('保存摄像头参数,重要!!')cv_file = cv2.FileStorage(xmfile_name,cv2.FILE_STORAGE_WRITE)cv_file.write('intrinsic_mtx',mtx)print(type(mtx))cv_file.write('intrinsic_dist',dist)print(type(dist))cv_file.release()if __name__ == '__main__':checkerboard_real_size = 30#(1)左摄像头imgfile_path = 'D:/RGBD_CAMERA/1chen_code/stereo_vision/leftimg/'xmfile_name = 'left_camera_param.xml'calculate_mono_camera_param(imgfile_path, 8, 5, checkerboard_real_size,xmfile_name)#(2)右摄像头imgfile_path = 'D:/RGBD_CAMERA/1chen_code/stereo_vision/rightimg/'xmfile_name = 'right_camera_param.xml'calculate_mono_camera_param(imgfile_path, 8, 5, checkerboard_real_size,xmfile_name)(2)标定结果以xml形式保存下来

这次标定的参数如下

<?xml version="1.0"?>

<opencv_storage>

<intrinsic_mtx type_id="opencv-matrix"><rows>3</rows><cols>3</cols><dt>d</dt><data>1.5096201160215364e+03 0. 8.9049227410684671e+02 0.1.5015318633100094e+03 5.5143964705119470e+02 0. 0. 1.</data></intrinsic_mtx>

<intrinsic_dist type_id="opencv-matrix"><rows>1</rows><cols>5</cols><dt>d</dt><data>1.0748359938372364e-01 -1.0045565960216591e+004.4327918123757016e-03 -9.0274743236329804e-032.9483429325418737e+00</data></intrinsic_dist>

</opencv_storage>

3.2 左右摄像头联合标定(opencv最后使用的,还是有问题!)

(1)参照了大佬的代码

【ZED】从零开始使用ZED相机(五):Opencv+Python实现相机标定(双目)_摇曳的树的博客-CSDN博客_python 双目标定

(2)代码实现如下

import cv2

import numpy as np

import globclass Stereo_calibrate(): # 执行校正def __init__(self,checkerboard_w_num,checkerboard_h_num,checkerboard_real_size,xmfile_name):# 终止条件self.criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)# 准备对象点,棋盘方块交界点排列:6行9列 self.row = checkerboard_w_num #w 8self.col = checkerboard_h_num #h 5self.real_size = checkerboard_real_size # 30 mmself.xmfile_name = xmfile_name #保存的xml参数文件名self.objpoints = np.zeros((self.row * self.col, 3), np.float32)self.objpoints[:, :2] = np.mgrid[0:self.row, 0:self.col].T.reshape(-1, 2)self.objpoints = self.objpoints * checkerboard_real_size # 30 mm #实际打印方格尺寸def exe(self,dir_l,dir_r):objectpoints = [] # 真实世界中的3d点imgpoints_l = []imgpoints_r = []# 标定所用图像images_l = glob.glob('%s/*'%dir_l)images_r = glob.glob('%s/*' % dir_r)for i in range(len(images_l)):img_l = cv2.imread(images_l[i])gray_l = cv2.cvtColor(img_l, cv2.COLOR_BGR2GRAY)img_r = cv2.imread(images_r[i])gray_r = cv2.cvtColor(img_r, cv2.COLOR_BGR2GRAY)# 寻找到棋盘角点ret1, corners_l = cv2.findChessboardCorners(img_l, (self.row, self.col), None)ret2, corners_r = cv2.findChessboardCorners(img_r, (self.row, self.col), None)# 如果找到,添加对象点,图像点(细化之后)if ret1 == True and ret2 == True:# 添加每幅图的对应3D-2D坐标objectpoints.append(self.objpoints)corners_l = cv2.cornerSubPix(gray_l, corners_l, (11, 11), (-1, -1),self.criteria)imgpoints_l.append(corners_l)corners_r = cv2.cornerSubPix(gray_r, corners_r, (11, 11), (-1, -1), self.criteria)imgpoints_r.append(corners_r)# # 绘制并显示拐角# cv2.drawChessboardCorners(img_l, (self.row, self.col), corners_l, ret)# cv2.drawChessboardCorners(img_r, (self.row, self.col), corners_r, ret)# view = np.concatenate((img_l, img_r), axis=1)# cv2.namedWindow('View')# cv2.imshow("View", cv2.resize(view,(1920,540)))# cv2.waitKey(0)# cv2.destroyAllWindows()# 利用单目校正函数实现相机内参初始化ret, m1, d1, _, _ = cv2.calibrateCamera(objectpoints, imgpoints_l, gray_l.shape[::-1], None, None)ret, m2, d2, _, _= cv2.calibrateCamera(objectpoints, imgpoints_l, gray_l.shape[::-1], None, None)# configflags = 0# flags |= cv2.CALIB_FIX_ASPECT_RATIOflags |= cv2.CALIB_USE_INTRINSIC_GUESS# flags |= cv2.CALIB_SAME_FOCAL_LENGTH# flags |= cv2.CALIB_ZERO_TANGENT_DISTflags |= cv2.CALIB_RATIONAL_MODEL# flags |= cv2.CALIB_FIX_K1# flags |= cv2.CALIB_FIX_K2# flags |= cv2.CALIB_FIX_K3# flags |= cv2.CALIB_FIX_K4# flags |= cv2.CALIB_FIX_K5# flags |= cv2.CALIB_FIX_K6stereocalib_criteria = (cv2.TERM_CRITERIA_COUNT +cv2.TERM_CRITERIA_EPS, 100, 1e-5)# 输入参数:真实3d坐标点,左相机像素点、右相机像素点、左内参、左畸变、右内参、右畸变、图像尺寸、一些配置# 输出值:未知、左内参、左畸变、右内参、右畸变(迭代优化后的)、旋转矩阵、平移向量、本质矩阵、基础矩阵ret, m1, d1,m2, d2, R, t, E, F = cv2.stereoCalibrate(objectpoints,imgpoints_l,imgpoints_r,m1, d1,m2, d2, gray_l.shape[::-1],criteria=stereocalib_criteria, flags=flags)print(ret,'\n左相机矩阵:%s\n左相机畸变:%s\n右相机矩阵:%s\n右相机畸变:%s\n旋转矩阵:%s\n平移向量:%s''\n本质矩阵E:%s\n基础矩阵F:%s\n'%(m1, d1, m2, d2, R, t, E, F))#保存摄像机参数print('保存摄像头参数,重要!!')cv_file = cv2.FileStorage(self.xmfile_name,cv2.FILE_STORAGE_WRITE)cv_file.write('left_m1',m1)cv_file.write('left_d1',d1)cv_file.write('right_m2',m2)cv_file.write('right_d2',d2)cv_file.write('stereo_r',R)cv_file.write('stereo_t',t)cv_file.release()if __name__ == "__main__":# (1) 初始化参数xmlfile_name = 'stereo_param.xml'cal = Stereo_calibrate(8,5,30,xmlfile_name)#(2)立体视觉标定left_img_file_path = 'D:/RGBD_CAMERA/1chen_code/stereo_vision/leftimg/'right_img_file_path = 'D:/RGBD_CAMERA/1chen_code/stereo_vision/leftimg/'cal.exe(left_img_file_path, right_img_file_path)

(3)标定结果如下

<?xml version="1.0"?>

<opencv_storage>

<left_m1 type_id="opencv-matrix"><rows>3</rows><cols>3</cols><dt>d</dt><data>1.5096198348992518e+03 0. 8.9049227714927781e+02 0.1.5015316270463043e+03 5.5143964513223204e+02 0. 0. 1.</data></left_m1>

<left_d1 type_id="opencv-matrix"><rows>1</rows><cols>14</cols><dt>d</dt><data>1.0750374792687890e-01 -1.0035692712049433e+004.4327603241579574e-03 -9.0274500455266634e-032.9573781021023886e+00 9.5808444418142185e-06 1.1258969063255248e-038.5100846188187560e-03 0. 0. 0. 0. 0. 0.</data></left_d1>

<right_m2 type_id="opencv-matrix"><rows>3</rows><cols>3</cols><dt>d</dt><data>1.5096198378360564e+03 0. 8.9049227891398687e+02 0.1.5015316300540694e+03 5.5143964504318376e+02 0. 0. 1.</data></right_m2>

<right_d2 type_id="opencv-matrix"><rows>1</rows><cols>14</cols><dt>d</dt><data>1.0750374675083163e-01 -1.0035692594357535e+004.4327601917556643e-03 -9.0274494471253627e-032.9573780872480153e+00 9.5820382961066939e-06 1.1258851317497449e-038.5100988514177738e-03 0. 0. 0. 0. 0. 0.</data></right_d2>

<stereo_r type_id="opencv-matrix"><rows>3</rows><cols>3</cols><dt>d</dt><data>1. -4.7614064551882831e-11 -1.1988571142849994e-094.7614064551882831e-11 1. 2.1823626572323024e-101.1988571142849994e-09 -2.1823626572323024e-10 1.</data></stereo_r>

<stereo_t type_id="opencv-matrix"><rows>3</rows><cols>1</cols><dt>d</dt><data>1.0755947703661318e-08 -9.0097844983336702e-081.1366280383436105e-06</data></stereo_t>

</opencv_storage>

(4)结论:参数有问题,有空接着研究一下!!!!!

3.3 左右摄像头matlab标定,看起来可以!

(0)标定过程和参数导出,主要参考

Matlab双目相机标定_indigo love的博客-CSDN博客_matlab双目相机标定

(1)

matlab2016,命令窗口中输入:

stereoCameraCalibrator

(2) 标定结果

#注意,陈备注:以下数据由matlab2016的工具箱stereoCameraCalibrator标注获得left_camera_matrix = np.array([[ 1504.835951, 0, 903.7996248],[0, 1496.764636, 561.0768711],[0, 0, 1]]) left_distortion = np.array([[0.0570108746206312, -0.477832004663628, 0.00673968704282051, -0.00388206741248358,1.12821673355428]])right_camera_matrix = np.array([[1508.16718253864, 0, 868.820482265892],[ 0, 1497.3685484015, 574.72759642089],[ 0, 0, 1]])right_distortion = np.array([[0.0619253328320556, -1.3099240242802, 0.00734199071917032, -0.00389020681922442,7.50657175779989]])R = np.matrix([[0.999999429, -0.001009115, -0.000350325],[0.001007939, 0.999993912, 0],[0.000353694, 0.00334044, 0.999994358],]) T = np.array([-49.53961963, -0.245581652, 2.526004856]) # 平移关系向量

4、双目立体视觉的检测

(1)基础方法检测,测试代码

import cv2

import numpy as np

import copy

import globthreeD = None

#函数一,获得立体视觉的参数

def get_stereo_param(xmlfie_path,size = (1920, 1080)):#方法(一)陈备注:opencv双目标注的方法使用有误,有空接着研究# cv_file = cv2.FileStorage()# cv_file.open(xmlfie_path,cv2.FileStorage_READ)# left_camera_matrix = cv_file.getNode('left_m1').mat()# left_distortion = cv_file.getNode('left_d1').mat()# right_camera_matrix = cv_file.getNode('right_m2').mat()# right_distortion = cv_file.getNode('right_d2').mat()# R = cv_file.getNode('stereo_r').mat()# T = cv_file.getNode('stereo_t').mat()# print("left_camera_matrix",left_camera_matrix)#注意,陈备注:以下数据由matlab2016的工具箱stereoCameraCalibrator标注获得left_camera_matrix = np.array([[ 1504.835951, 0, 903.7996248],[0, 1496.764636, 561.0768711],[0, 0, 1]]) left_distortion = np.array([[0.0570108746206312, -0.477832004663628, 0.00673968704282051, -0.00388206741248358,1.12821673355428]])right_camera_matrix = np.array([[1508.16718253864, 0, 868.820482265892],[ 0, 1497.3685484015, 574.72759642089],[ 0, 0, 1]])right_distortion = np.array([[0.0619253328320556, -1.3099240242802, 0.00734199071917032, -0.00389020681922442,7.50657175779989]])R = np.matrix([[0.999999429, -0.001009115, -0.000350325],[0.001007939, 0.999993912, 0],[0.000353694, 0.00334044, 0.999994358],]) T = np.array([-49.53961963, -0.245581652, 2.526004856]) # 平移关系向量# size = (640, 480) # 图像尺寸# 进行立体更正R1, R2, P1, P2, Q, validPixROI1, validPixROI2 = cv2.stereoRectify(left_camera_matrix, left_distortion,right_camera_matrix, right_distortion, size, R,T)# 计算更正mapleft_map1, left_map2 = cv2.initUndistortRectifyMap(left_camera_matrix, left_distortion, R1, P1, size, cv2.CV_16SC2)right_map1, right_map2 = cv2.initUndistortRectifyMap(right_camera_matrix, right_distortion, R2, P2, size, cv2.CV_16SC2)# print("leftmap1",left_map1)# print("right_map1",right_map1)return left_map1, left_map2, right_map1, right_map2, Q#立体视觉待测试视频--20221212,重要!!!!

# https://blog.csdn.net/m0_72557783/article/details/128256932# 添加点击事件,打印当前点的距离

def callbackFunc(e, x, y, f, p):global threeDif e == cv2.EVENT_LBUTTONDOWN: # print threeD[y][x]print(threeD[y][x])#获取立体视觉并计算函数

def stereo_vision(cap_id=0,width = 3840,height = 1080):global threeDxmlfie_path = 'D:/RGBD_CAMERA/1chen_code/stereo_vision/stereo_param.xml' # left_map1, left_map2, right_map1, right_map2, sterro_Q = get_stereo_param(xmlfie_path)left_map1, left_map2, right_map1, right_map2, sterro_Q = get_stereo_param(xmlfie_path,size=(width//2,height))#获取摄像头cap = cv2.VideoCapture(cap_id)cv2.namedWindow("left", cv2.WINDOW_NORMAL)cv2.namedWindow("right", cv2.WINDOW_NORMAL)cv2.namedWindow("depth", cv2.WINDOW_NORMAL)cv2.moveWindow("left", 0, 0)cv2.moveWindow("right", 600, 0)cv2.createTrackbar("num", "depth", 0, 10, lambda x: None)cv2.createTrackbar("blockSize", "depth", 5, 255, lambda x: None)cv2.setMouseCallback("depth", callbackFunc, None)# widht_split = width//2 #注意不能用/这是浮点除法, 要用//,整除法cap.set(3, width) # CV_CAP_PROP_FRAME_WIDTHcap.set(4, height) # CV_CAP_PROP_FRAME_HEIGHT# cv2.namedWindow('camera', cv2.WINDOW_NORMAL)# cv2.namedWindow('camera_left', cv2.WINDOW_NORMAL)# cv2.namedWindow('camera_right', cv2.WINDOW_NORMAL)print("start cap")left_img = Noneright_img = Nonewhile (cap.isOpened()):ret, frame = cap.read() #捕获图片if ret == False or frame is None:print("can't get img")breakframe = cv2.flip(frame,1) #镜像操作# print(frame.shape)#注意不能用/这是浮点除法, 要用//,整除法left_img = copy.deepcopy(frame[:,:width//2,:]) right_img = copy.deepcopy(frame[:,width//2:,:])#噪声多,不稳定,陈添加了滤波left_img = cv2.GaussianBlur(left_img,(9,9),0,0)right_img = cv2.GaussianBlur(right_img,(9,9),0,0)# cv2.imshow("camera", frame)# cv2.imshow("camera_left", left_img)# cv2.imshow("camera_right", right_img)# 根据更正map对图片进行重构img1_rectified = cv2.remap(left_img, left_map1, left_map2, cv2.INTER_LINEAR)img2_rectified = cv2.remap(right_img, right_map1, right_map2, cv2.INTER_LINEAR)# 将图片置为灰度图,为StereoBM作准备imgL = cv2.cvtColor(img1_rectified, cv2.COLOR_BGR2GRAY)imgR = cv2.cvtColor(img2_rectified, cv2.COLOR_BGR2GRAY)cv2.imshow("chentest imgL",imgL)cv2.imshow("chentest imgR",imgR)# 两个trackbar用来调节不同的参数查看效果num = cv2.getTrackbarPos("num", "depth")blockSize = cv2.getTrackbarPos("blockSize", "depth")if blockSize % 2 == 0:blockSize += 1if blockSize < 5:blockSize = 5# 根据Block Maching方法生成差异图(opencv里也提供了SGBM/Semi-Global Block Matching算法,有兴趣可以试试)# stereo = cv2.StereoBM_create(numDisparities=16*num, blockSize=blockSize)stereo = cv2.StereoSGBM_create(numDisparities=16, blockSize=11)# numDisparities:disparity的搜索范围,即最大差异减去最小差异。该值始终大于零。在当前的实现中,这个参数必须可以被16整除# blockSize:块的线性大小。大小应该是奇数(因为块位于当前像素的中心)。更大的块大小意味着更平滑# stereo = cv2.StereoBM_create(numDisparities=16, blockSize=11)# speckleWindowSize:考虑其噪声斑点的平滑差距区域的最大尺寸,并使之无效。把它设置为0以禁用斑点过滤。否则,将它设置在50-200范围内的某个地方。# stereo = cv2.StereoSGBM_create(minDisparity=1,# numDisparities=16,# blockSize=11,# uniquenessRatio = 5,# speckleWindowSize = 100,# speckleRange = 1,# disp12MaxDiff = 200,# P1 = 8*3*blockSize**2,# P2 = 32*3*blockSize**2) disparity = stereo.compute(imgL, imgR)disp = cv2.normalize(disparity, disparity, alpha=0, beta=255, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)# 将图片扩展至3d空间中,其z方向的值则为当前的距离threeD = cv2.reprojectImageTo3D(disparity.astype(np.float32)/16., sterro_Q)cv2.imshow("left", img1_rectified)cv2.imshow("right", img2_rectified)cv2.imshow("depth", disp)key = cv2.waitKey(1)if key == ord("q"):breakelif key == ord("s"):cv2.imwrite("snapshot/BM_left.jpg", imgL)cv2.imwrite("snapshot/BM_right.jpg", imgR)cv2.imwrite("snapshot/BM_depth.jpg", disp)if key == ord('q'): #如果是按键r,则退出breakcap.release()cv2.destroyAllWindows()if __name__ == '__main__':# stereo_vision(cap_id=0,width = 1280,height = 480)stereo_vision(cap_id=0,width = 3840,height = 1080)

(2)测试结果,画面抖动很厉害,噪点很多,很不理想

5、双目立体视觉的检测基于WLS滤波

(1)安装opencv-contrib

pip install opencv-contrib-python -i https://pypi.tuna.tsinghua.edu.cn/simple(2)测试,速度很慢

import cv2

import numpy as np

import copy

import glob

#pip install opencv-contrib-python -i https://pypi.tuna.tsinghua.edu.cn/simple#函数一,获得立体视觉的参数

def get_stereo_param(xmlfie_path,size = (1920, 1080)):#方法(二)设定自己的摄像机外部调整参数# cv_file = cv2.FileStorage()# cv_file.open(xmlfie_path,cv2.FileStorage_READ)# left_camera_matrix = cv_file.getNode('left_m1').mat()# left_distortion = cv_file.getNode('left_d1').mat()# right_camera_matrix = cv_file.getNode('right_m2').mat()# right_distortion = cv_file.getNode('right_d2').mat()# R = cv_file.getNode('stereo_r').mat()# T = cv_file.getNode('stereo_t').mat()# print("left_camera_matrix",left_camera_matrix)#注意,陈备注:以下数据由matlab2016的工具箱stereoCameraCalibrator标注获得left_camera_matrix = np.array([[ 1504.835951, 0, 903.7996248],[0, 1496.764636, 561.0768711],[0, 0, 1]]) left_distortion = np.array([[0.0570108746206312, -0.477832004663628, 0.00673968704282051, -0.00388206741248358,1.12821673355428]])right_camera_matrix = np.array([[1508.16718253864, 0, 868.820482265892],[ 0, 1497.3685484015, 574.72759642089],[ 0, 0, 1]])right_distortion = np.array([[0.0619253328320556, -1.3099240242802, 0.00734199071917032, -0.00389020681922442,7.50657175779989]])R = np.matrix([[0.999999429, -0.001009115, -0.000350325],[0.001007939, 0.999993912, 0],[0.000353694, 0.00334044, 0.999994358],]) T = np.array([-49.53961963, -0.245581652, 2.526004856]) # 平移关系向量# size = (640, 480) # 图像尺寸# 进行立体更正R1, R2, P1, P2, Q, validPixROI1, validPixROI2 = cv2.stereoRectify(left_camera_matrix, left_distortion,right_camera_matrix, right_distortion, size, R,T)# 计算更正mapleft_map1, left_map2 = cv2.initUndistortRectifyMap(left_camera_matrix, left_distortion, R1, P1, size, cv2.CV_16SC2)right_map1, right_map2 = cv2.initUndistortRectifyMap(right_camera_matrix, right_distortion, R2, P2, size, cv2.CV_16SC2)# print("leftmap1",left_map1)# print("right_map1",right_map1)return left_map1, left_map2, right_map1, right_map2, Q#立体视觉待测试视频--20221212,重要!!!!

# https://blog.csdn.net/m0_72557783/article/details/128256932# 添加点击事件,打印当前点的距离

def callbackFunc(e, x, y, f, p):if e == cv2.EVENT_LBUTTONDOWN: # print threeD[y][x]print("chest")#获取立体视觉并计算函数

def stereo_vision(cap_id=0,width = 3840,height = 1080):#重要参数,20221213### 设置参数#块大小必须为奇数(3-11)blockSize = 5img_channels = 2num_disp = 16 * 8param = {'preFilterCap': 63, #映射滤波器大小,默认15"minDisparity" : 0, #最小视差"numDisparities" : num_disp, #视差的搜索范围,16的整数倍"blockSize" : blockSize,"uniquenessRatio" : 10, #唯一检测性参数,匹配区分度不够,则误匹配(5-15)"speckleWindowSize" : 0, #视差连通区域像素点个数的大小(噪声点)(50-200)或用0禁用斑点过滤"speckleRange" : 1, #认为不连通(1-2)"disp12MaxDiff" : 2, #左右一致性检测中最大容许误差值"P1" : 8 * img_channels * blockSize** 2, #值越大,视差越平滑,相邻像素视差+/-1的惩罚系数"P2" : 32 * img_channels * blockSize** 2, #同上,相邻像素视差变化值>1的惩罚系数# 'mode': cv2.STEREO_SGBM_MODE_SGBM_3WAY}# ————————————————# wls这段代码的原文链接:https://blog.csdn.net/weixin_40524689/article/details/124929419xmlfie_path = 'D:/RGBD_CAMERA/1chen_code/stereo_vision/stereo_param.xml' left_map1, left_map2, right_map1, right_map2, sterro_Q = get_stereo_param(xmlfie_path,size=(width//2,height))#获取摄像头cap = cv2.VideoCapture(cap_id)cv2.namedWindow("left", cv2.WINDOW_NORMAL)cv2.namedWindow("right", cv2.WINDOW_NORMAL)cv2.namedWindow("depth", cv2.WINDOW_NORMAL)cv2.moveWindow("left", 0, 0)cv2.moveWindow("right", 600, 0)cv2.createTrackbar("num", "depth", 0, 10, lambda x: None)cv2.createTrackbar("blockSize", "depth", 5, 255, lambda x: None)cv2.setMouseCallback("depth", callbackFunc, None)# widht_split = width//2 #注意不能用/这是浮点除法, 要用//,整除法cap.set(3, width) # CV_CAP_PROP_FRAME_WIDTHcap.set(4, height) # CV_CAP_PROP_FRAME_HEIGHT# cv2.namedWindow('camera', cv2.WINDOW_NORMAL)# cv2.namedWindow('camera_left', cv2.WINDOW_NORMAL)# cv2.namedWindow('camera_right', cv2.WINDOW_NORMAL)print("start cap")left_img = Noneright_img = Nonewhile (cap.isOpened()):ret, frame = cap.read() #捕获图片if ret == False or frame is None:print("can't get img")breakframe = cv2.flip(frame,1) #镜像操作# print(frame.shape)#注意不能用/这是浮点除法, 要用//,整除法left_img = copy.deepcopy(frame[:,:width//2,:]) right_img = copy.deepcopy(frame[:,width//2:,:])# cv2.imshow("camera", frame)# cv2.imshow("camera_left", left_img)# cv2.imshow("camera_right", right_img)# 根据更正map对图片进行重构img1_rectified = cv2.remap(left_img, left_map1, left_map2, cv2.INTER_LINEAR)img2_rectified = cv2.remap(right_img, right_map1, right_map2, cv2.INTER_LINEAR)# 将图片置为灰度图,为StereoBM作准备imgL_gray = cv2.cvtColor(img1_rectified, cv2.COLOR_BGR2GRAY)imgR_gray = cv2.cvtColor(img2_rectified, cv2.COLOR_BGR2GRAY)cv2.imshow("chentest imgL",imgL_gray)cv2.imshow("chentest imgR",imgR_gray)left_matcher = cv2.StereoSGBM_create(**param)left_disp = left_matcher.compute(imgL_gray, imgR_gray)# 得到深度图# disp = cv2.normalize(dispL, dispL, alpha=0, beta=255, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)## 接上面的参数left_matcher = cv2.StereoSGBM_create(**param)right_matcher = cv2.ximgproc.createRightMatcher(left_matcher)left_disp = left_matcher.compute(imgL_gray, imgR_gray)right_disp = right_matcher.compute(imgR_gray, imgL_gray)wls_filter = cv2.ximgproc.createDisparityWLSFilter(left_matcher)# sigmaColor典型范围值为0.8-2.0wls_filter.setLambda(8000.)wls_filter.setSigmaColor(1.3)wls_filter.setLRCthresh(24)wls_filter.setDepthDiscontinuityRadius(3)filtered_disp = wls_filter.filter(left_disp, imgL_gray, disparity_map_right=right_disp)disp = cv2.normalize(filtered_disp, filtered_disp, alpha=0, beta=255, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)# 两个trackbar用来调节不同的参数查看效果# num = cv2.getTrackbarPos("num", "depth")# blockSize = cv2.getTrackbarPos("blockSize", "depth")# if blockSize % 2 == 0:# blockSize += 1# if blockSize < 5:# blockSize = 5# # 根据Block Maching方法生成差异图(opencv里也提供了SGBM/Semi-Global Block Matching算法,有兴趣可以试试)# # stereo = cv2.StereoBM_create(numDisparities=16*num, blockSize=blockSize)# stereo = cv2.StereoBM_create(numDisparities=16, blockSize=11)# disparity = stereo.compute(imgL, imgR)# disp = cv2.normalize(disparity, disparity, alpha=0, beta=255, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)# 将图片扩展至3d空间中,其z方向的值则为当前的距离# threeD = cv2.reprojectImageTo3D(disparity.astype(np.float32)/16., sterro_Q)threeD = cv2.reprojectImageTo3D(filtered_disp.astype(np.float32)/16., sterro_Q)cv2.imshow("left", img1_rectified)cv2.imshow("right", img2_rectified)cv2.imshow("depth", disp)key = cv2.waitKey(1)if key == ord("q"):breakelif key == ord("s"):cv2.imwrite("snapshot/BM_left.jpg", imgL_gray)cv2.imwrite("snapshot/BM_right.jpg", imgR_gray)cv2.imwrite("snapshot/BM_depth.jpg", disp)if key == ord('q'): #如果是按键r,则退出breakcap.release()cv2.destroyAllWindows()if __name__ == '__main__':# stereo_vision(cap_id=0,width = 1280,height = 480)stereo_vision(cap_id=0,width = 3840,height = 1080)