OpenPose ships an official binary that can process video directly from the shell,

and it also provides a set of demo scripts for processing image files.

So how can one of the official demos be modified to process video?

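Most of the tutorials I found do this by loading the weights with OpenCV through

cv2.dnn.readNetFromCaffe(protoFile, weightsFile),

for example "OpenCV Gesture Recognition" (OpenCV手势识别).

Others do it by modifying flags.hpp,

as in "OpenPose Study Notes" (OpenPose学习笔记).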

I did not want anything that complicated. I only wanted to process video by modifying the official 01_body_from_image.py.

The modified script, openpose_body_from_video.py, is shown below:

# From Python

# It requires OpenCV installed for Python

import sys

import cv2

import os

from sys import platform

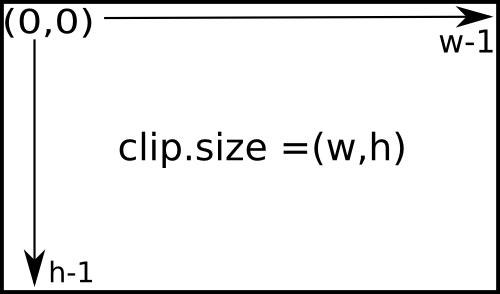

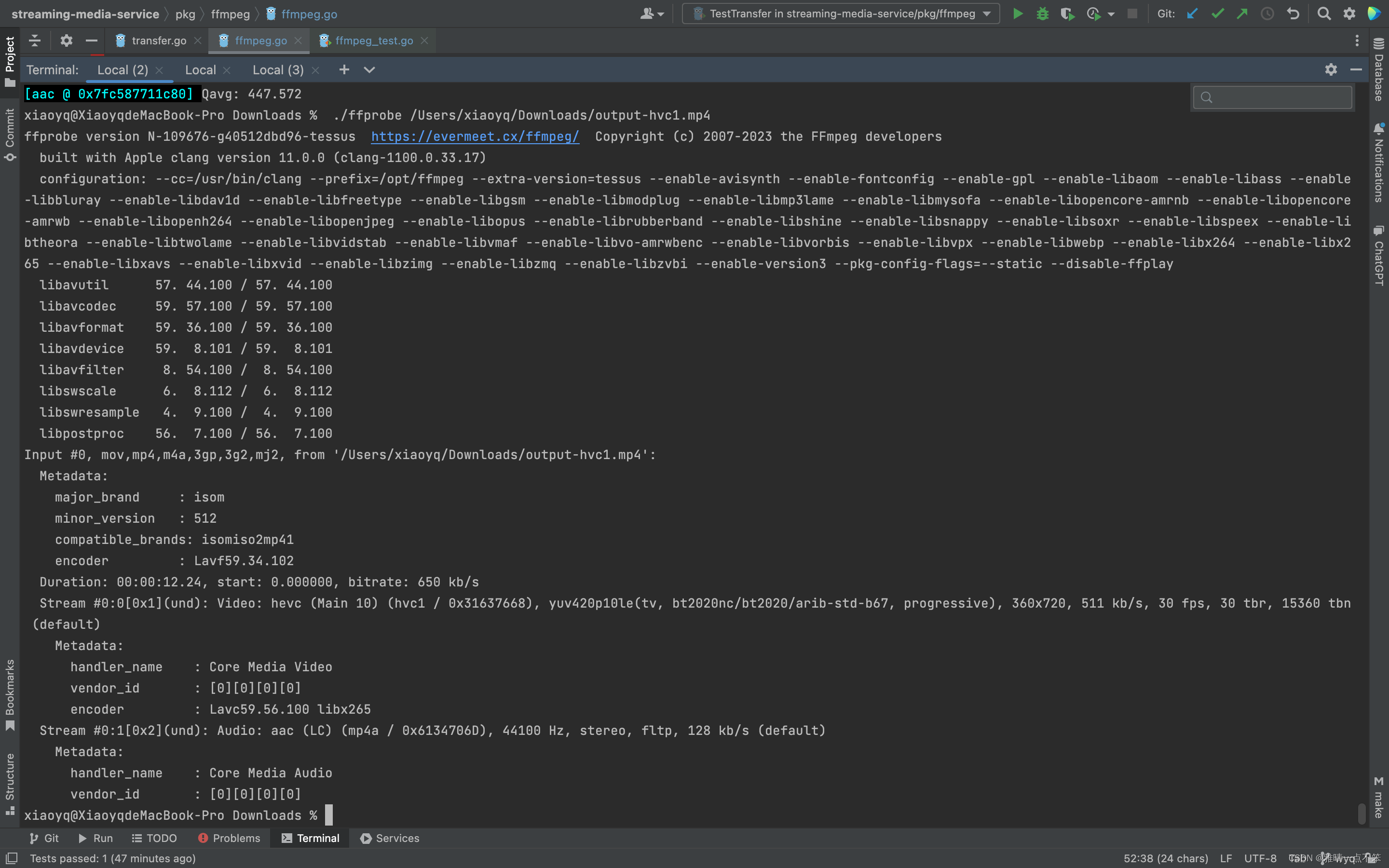

import argparsetry:# Import Openpose (Windows/Ubuntu/OSX)dir_path = os.path.dirname(os.path.realpath(__file__))try:# Windows Importif platform == "win32":# Change these variables to point to the correct folder (Release/x64 etc.)sys.path.append(dir_path + '/../../python/openpose/Release');os.environ['PATH'] = os.environ['PATH'] + ';' + dir_path + '/../../x64/Release;' + dir_path + '/../../bin;'import pyopenpose as opelse:# Change these variables to point to the correct folder (Release/x64 etc.)sys.path.append('../../python');# If you run `make install` (default path is `/usr/local/python` for Ubuntu), you can also access the OpenPose/python module from there. This will install OpenPose and the python library at your desired installation path. Ensure that this is in your python path in order to use it.# sys.path.append('/usr/local/python')from openpose import pyopenpose as opexcept ImportError as e:print('Error: OpenPose library could not be found. Did you enable `BUILD_PYTHON` in CMake and have this Python script in the right folder?')raise e# # Flags# parser = argparse.ArgumentParser()# parser.add_argument("--image_path", default="../../../examples/media/COCO_val2014_000000000192.jpg", help="Process an image. 
Read all standard formats (jpg, png, bmp, etc.).")# args = parser.parse_known_args()# Custom Params (refer to include/openpose/flags.hpp for more parameters)params = dict()params["model_folder"] = "../../../models/"params["hand"] = Trueparams["number_people_max"] = 1params["disable_blending"] = True # for black background# params["display"] = 0# # Add others in path?# for i in range(0, len(args[1])):# curr_item = args[1][i]# if i != len(args[1]) - 1:# next_item = args[1][i + 1]# else:# next_item = "1"# if "--" in curr_item and "--" in next_item:# key = curr_item.replace('-', '')# if key not in params: params[key] = "1"# elif "--" in curr_item and "--" not in next_item:# key = curr_item.replace('-', '')# if key not in params: params[key] = next_item# Construct it from system arguments# op.init_argv(args[1])# oppython = op.OpenposePython()# Starting OpenPoseopWrapper = op.WrapperPython()opWrapper.configure(params)opWrapper.start()# Process Imagedatum = op.Datum()cap = cv2.VideoCapture("../../../examples/media/jzh/720P.flv")fps = cap.get(cv2.CAP_PROP_FPS)size = (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)))framecount = cap.get(cv2.CAP_PROP_FRAME_COUNT)print('Total frames in this video: ' + str(framecount))videoWriter = cv2.VideoWriter("op720_2.avi", cv2.VideoWriter_fourcc('D', 'I', 'V', 'X'), fps, size)while cap.isOpened():hasFrame, frame = cap.read()if hasFrame:datum.cvInputData = frameopWrapper.emplaceAndPop(op.VectorDatum([datum]))opframe = datum.cvOutputDatacv2.imshow("main", opframe)videoWriter.write(opframe)if cv2.waitKey(1) & 0xFF == ord('q'):breakelse:breakcap.release()cv2.destroyAllWindows()except Exception as e:print(e)sys.exit(-1)
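The read/process/write loop in the script can also be pulled into a small helper so that the capture and the writer are released even if a frame fails midway, and the hard-coded paths can be turned into command-line flags. The following is only a minimal sketch under assumptions: the names `parse_paths` and `process_stream` and the flags `--video_path` and `--output_path` are hypothetical and are not part of OpenPose or of the original script. The helper relies only on `read()`, `write()` and `release()`, which `cv2.VideoCapture` and `cv2.VideoWriter` both provide.

```python
import argparse


def parse_paths(argv=None):
    """Parse the hypothetical --video_path / --output_path flags.

    Unknown flags are ignored, so extra options can still be passed along.
    The defaults are the paths hard-coded in the script.
    """
    parser = argparse.ArgumentParser()
    parser.add_argument("--video_path", default="../../../examples/media/jzh/720P.flv")
    parser.add_argument("--output_path", default="op720_2.avi")
    args, _unknown = parser.parse_known_args(argv)
    return args


def process_stream(cap, writer, process_frame):
    """Read frames from cap, transform each one, and write the result.

    cap must offer read() -> (ok, frame) and release();
    writer must offer write(frame) and release().
    Returns the number of frames written.
    """
    written = 0
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break  # end of stream or read error
            writer.write(process_frame(frame))
            written += 1
    finally:
        # Always release both ends, even if process_frame raised
        cap.release()
        writer.release()
    return written
```

In the script, `process_frame` would be a function that assigns the frame to `datum.cvInputData`, calls `opWrapper.emplaceAndPop(op.VectorDatum([datum]))`, and returns `datum.cvOutputData`. The preview window and the `q` key check are left out of this sketch.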

For details, see openpose_body_from_video on GitHub.

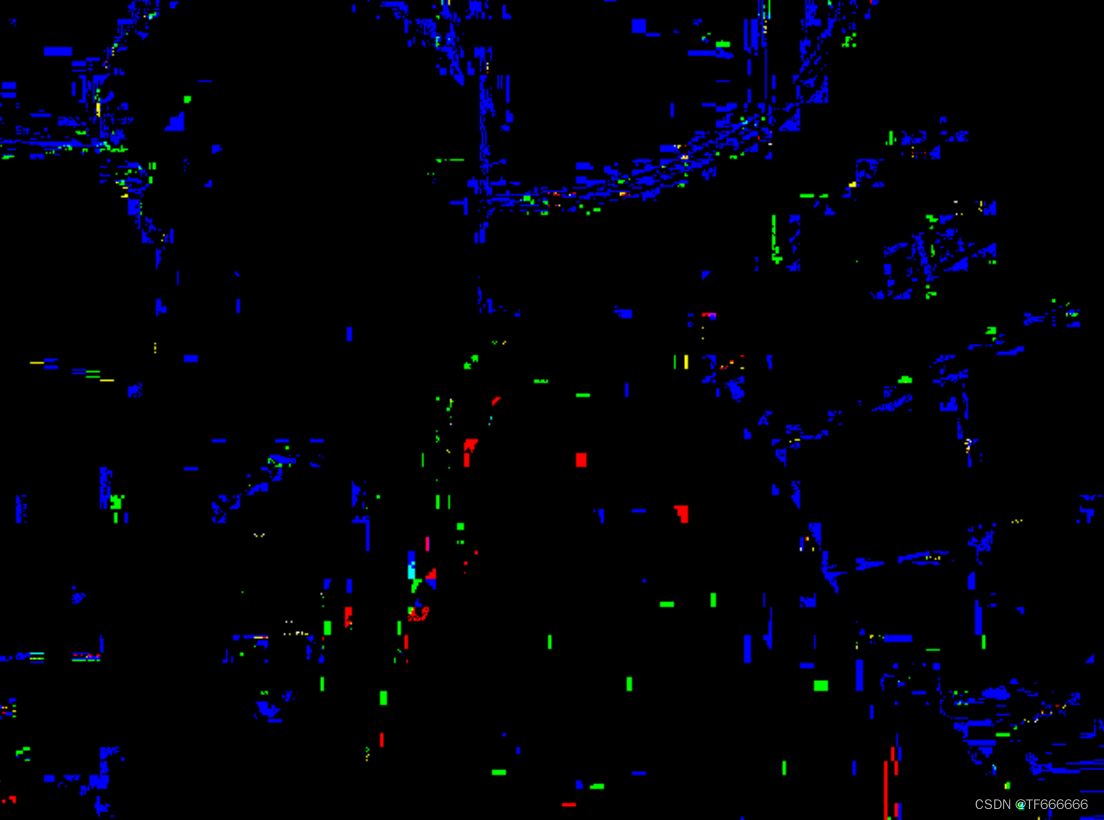

Video demos

OpenPose human pose recognition, result demo: 金子涵

OpenPose human pose recognition with hand keypoints added, result demo: 金子涵