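Preface: a basic introduction to Ceph

Ceph is a unified distributed storage system designed to deliver good performance, reliability, and scalability.

Ceph features:

1. High performance:

a. It abandons the traditional scheme of locating data through centralized metadata and uses the CRUSH algorithm instead. This distributes data evenly and allows a high degree of parallelism.

b. It takes failure-domain isolation into account and can express replica placement rules for different workloads, such as spreading replicas across data centers or across racks.

c. It scales to thousands of storage nodes and to data volumes from terabytes to petabytes.

2. High availability:

a. The number of replicas is flexibly configurable.

b. It supports failure-domain separation and strong data consistency.

c. It repairs and heals itself automatically in many failure scenarios.

d. It has no single point of failure and is managed automatically.

3. High scalability:

a. Decentralized.

b. Flexible expansion.

c. Capacity and performance grow roughly linearly as nodes are added.

4. Rich features:

a. Three storage interfaces: block storage, file storage, and object storage.

b. Custom interfaces and client drivers for multiple languages.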

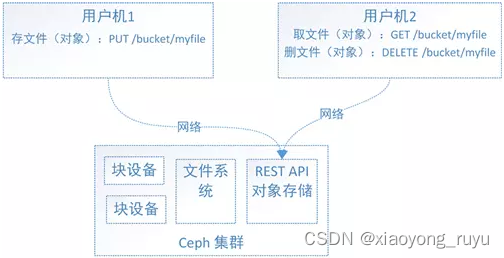

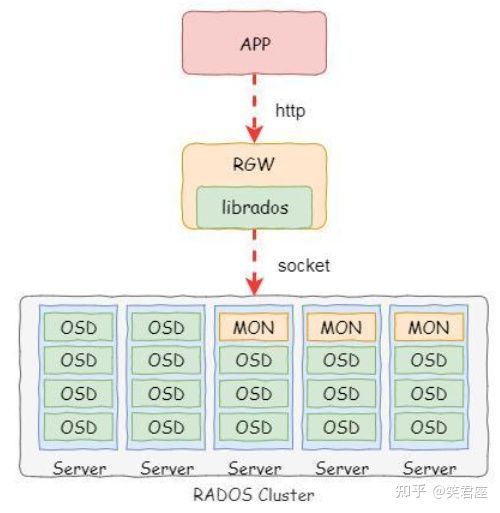

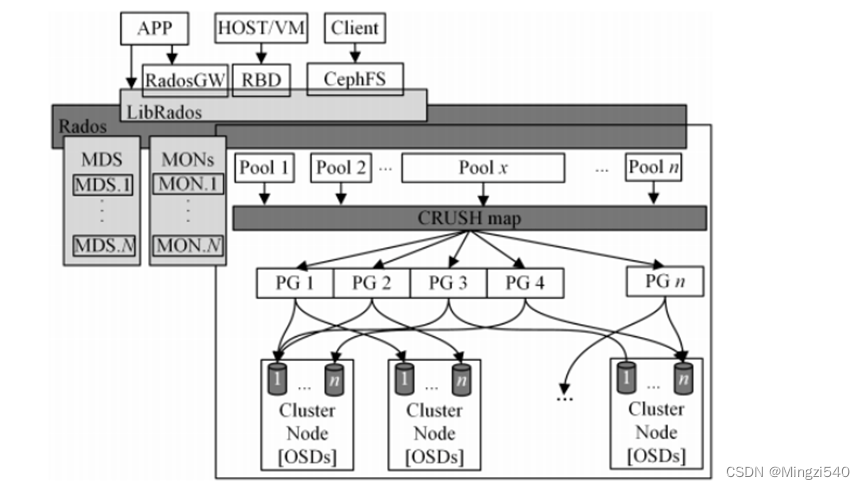

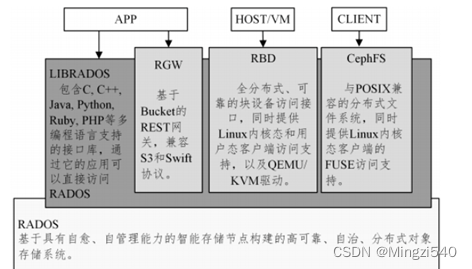

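The core of Ceph is RADOS, which provides highly reliable, high-performance, fully distributed object storage.

Object placement can follow the real-time state of each node in the cluster, and you can also define custom failure domains to adjust how data is distributed. Block devices and files are both wrapped as objects, and an object is an abstract data type with safe, strongly consistent semantics. This is what lets RADOS balance data and load dynamically across a large, heterogeneous storage cluster.

The Object Storage Device (OSD) is the basic storage unit of a RADOS cluster. Its main jobs are storing, replicating, and recovering data, and it exchanges heartbeats with other OSDs and balances load with them. Usually one disk corresponds to one OSD, which manages that disk's storage, although a single partition can also serve as an OSD. Every OSD provides complete local object storage with strong consistency semantics.

The MDS (Metadata Server) handles the metadata requests issued by CephFS clients and translates a client's file requests into object requests. A cluster can run several MDS daemons to share the metadata workload.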

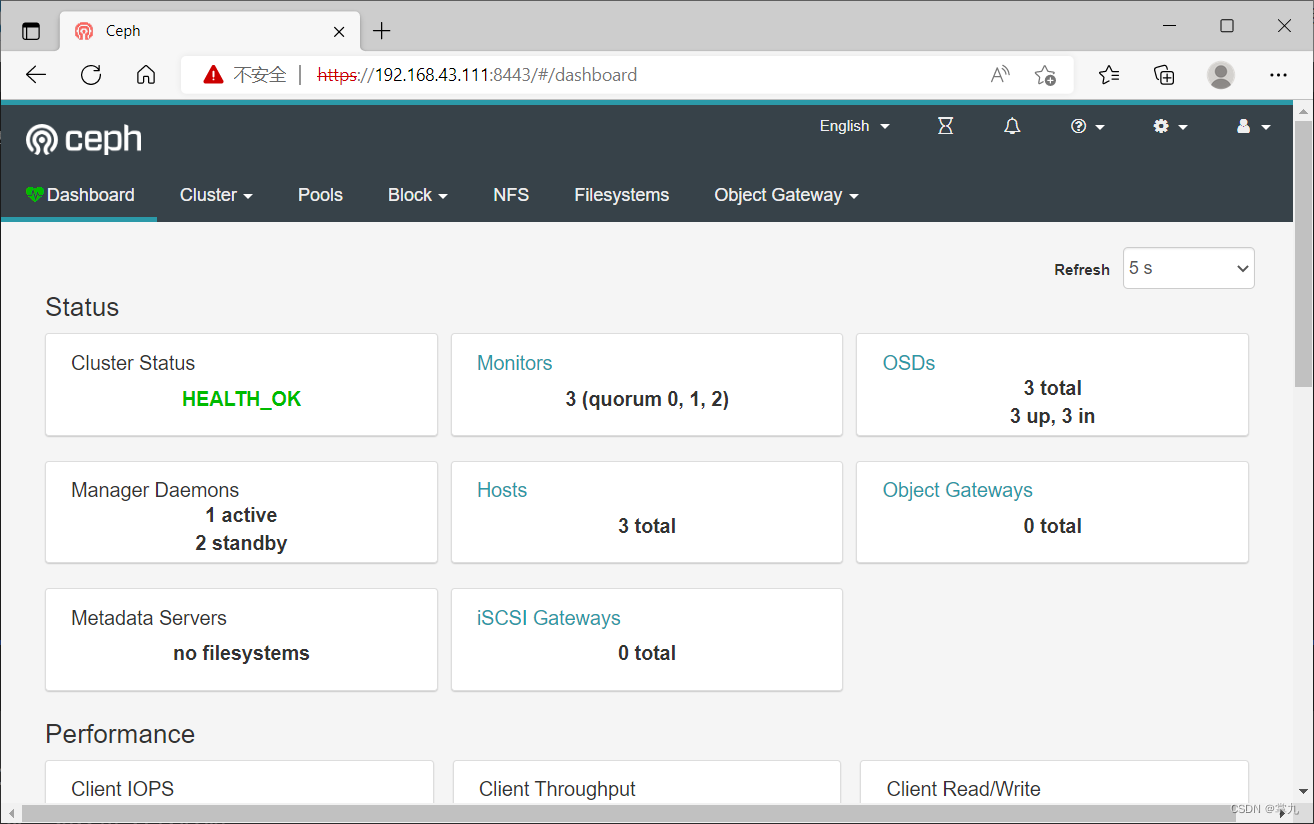

Topology of the working environment:

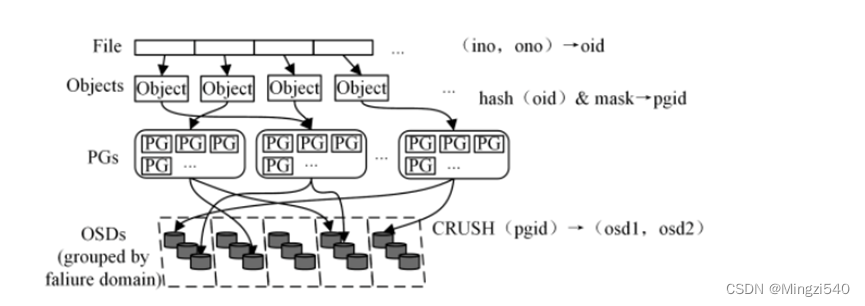

Data placement algorithm:

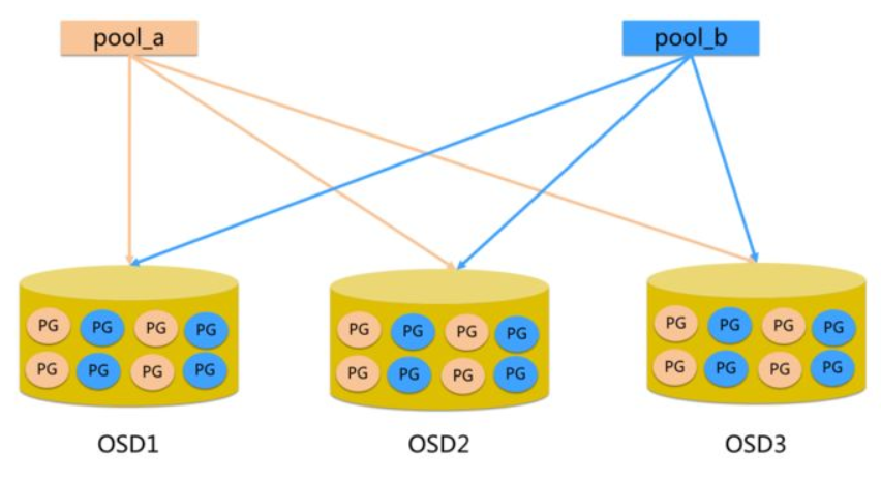

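The key to RADOS's scalability is that it drops the central metadata node of traditional storage systems entirely. In its place it uses CRUSH (Controlled Replication Under Scalable Hashing), a controlled replica-distribution algorithm based on scalable hashing. With CRUSH, a client computes which OSD holds the object it wants, instead of looking the location up.

Compared with earlier approaches, CRUSH manages data better because it spreads the work across all clients and OSDs in the cluster, which makes it highly scalable. CRUSH also uses intelligent data replication to ensure resilience and is well suited to very large storage systems.

As the figure shows, the mappings from file to objects and from objects to PGs (Placement Groups) are logical. The mapping from PGs to OSDs uses CRUSH, so the location of the data can still be computed when nodes are added to or removed from the cluster.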
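The two-step mapping described above can be illustrated with a toy shell sketch. It is not Ceph's implementation:

- `cksum` (CRC-32) stands in for Ceph's rjenkins hash.
- Rendezvous hashing (every OSD gets a score and the highest scores win) stands in for CRUSH. Real CRUSH also weighs OSDs and walks the failure-domain hierarchy.

All function names are hypothetical.

```bash
#!/bin/bash
# Toy model of Ceph data placement: object -> PG -> OSDs.
# ASSUMPTIONS: cksum (CRC-32) replaces Ceph's rjenkins hash, and
# rendezvous hashing replaces CRUSH (no weights, no failure domains).
# All function names are hypothetical.

# hash_str STRING: print an unsigned 32-bit CRC of STRING
hash_str() {
    printf '%s' "$1" | cksum | cut -d ' ' -f 1
}

# object_to_pg OBJECT PG_NUM: print a PG index in [0, PG_NUM)
object_to_pg() {
    echo $(( $(hash_str "$1") % $2 ))
}

# pg_to_osds PG REPLICAS OSD_ID...: print REPLICAS distinct OSD ids.
# Each OSD is scored with hash("PG-OSD") and the highest scores win, so
# adding or removing one OSD only remaps PGs whose set gains or loses it.
pg_to_osds() {
    local pg=$1 replicas=$2 osd
    shift 2
    for osd in "$@"; do
        echo "$(hash_str "$pg-$osd") $osd"
    done | sort -rn | head -n "$replicas" | cut -d ' ' -f 2 | paste -s -d ' ' -
}
```

For example, `object_to_pg myobject 64` picks one of 64 PGs, and `pg_to_osds 2.1f 3 0 1 2 3 4 5` picks 3 of 6 OSDs for that PG. Because placement is computed rather than looked up, a client only needs the cluster map. On a live cluster, `ceph osd map <pool> <object>` prints the real mapping.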

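Three access interfaces:

Object: a native API, plus compatibility with the Swift and S3 APIs.

Block: supports thin provisioning, snapshots, and clones.

File: a POSIX interface with snapshot support.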

Next, prepare the environment for deployment. A typical deployment uses 5 servers: a master plus four nodes. To save cost, we use 3 here.

| Role | Hostname | IP |
| --- | --- | --- |
| master | ceph1 | 192.168.80.10 |
| node1 | ceph2 | 192.168.80.7 |
| node2 | ceph3 | 192.168.80.11 |

Add 2 disks to each host; the disk size is up to you.

Offline package and image mirror: Baidu Netdisk share, extraction code iiii

Preparation (perform on all 3 hosts)

First install the common tool packages:

[root@ceph1 ~]# yum install -y net-tools

[root@ceph1 ~]# yum -y install lrzsz unzip zip

[root@ceph1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.80.10 ceph1

192.168.80.7 ceph2

192.168.80.11 ceph3
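These entries must be added on all 3 hosts, so an idempotent helper avoids duplicate lines when the step is re-run. The following is a minimal sketch that assumes a hypothetical `add_host_entry` function, which is not part of Ceph or CentOS.

```bash
#!/bin/bash
# Append a host entry only if the name is not already mapped, so the
# preparation step can be re-run safely on every node.
# ASSUMPTION: add_host_entry is a hypothetical helper.

# add_host_entry IP NAME [FILE]: add "IP NAME" unless NAME already appears
add_host_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    # NAME must appear as a whole word on a non-comment line
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on each node:
#   add_host_entry 192.168.80.10 ceph1
#   add_host_entry 192.168.80.7 ceph2
#   add_host_entry 192.168.80.11 ceph3
```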

// All 3 hosts need the same /etc/hosts entries.

systemctl stop firewalld && systemctl disable firewalld

setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

swapoff -a && sed -ri 's/.*swap.*/#&/' /etc/fstab
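The `sed` one-liner for swap is not idempotent. Running `s/.*swap.*/#&/` a second time turns `#` into `##`, and it also comments out any unrelated line that merely mentions "swap". The sketch below shows safer, re-runnable versions. The helper names are hypothetical, and `sed -i` assumes GNU sed as shipped with CentOS.

```bash
#!/bin/bash
# Idempotent SELinux and swap edits (hypothetical helpers).

# disable_selinux_config [FILE]: force SELINUX=disabled (SELINUXTYPE untouched)
disable_selinux_config() {
    local file=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# comment_out_swap [FILE]: comment out active fstab entries whose type is swap
comment_out_swap() {
    local file=${1:-/etc/fstab}
    # Field 3 of an fstab entry is the filesystem type; skip commented lines
    awk '!/^[[:space:]]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' \
        "$file" > "$file.tmp" && cat "$file.tmp" > "$file" && rm -f "$file.tmp"
}

# Usage:
#   setenforce 0; disable_selinux_config
#   swapoff -a;   comment_out_swap
```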

// Firewall, SELinux, and swap are now disabled.

[root@ceph1 ~]# ulimit -SHn 65535

[root@ceph1 ~]# cat /etc/security/limits.conf

# End of file

* soft nofile 65535

* hard nofile 65535

* soft nproc 65535

* hard nproc 65535

// Append these parameters to the end of limits.conf.

[root@ceph1 ~]# cat /etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

kernel.pid_max = 4194303

net.ipv4.tcp_tw_recycle = 0

net.ipv4.tcp_tw_reuse = 1

net.ipv4.ip_local_port_range = 1024 65000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_tw_buckets = 20480

net.ipv4.tcp_max_syn_backlog = 20480

net.core.netdev_max_backlog = 262144

net.ipv4.tcp_fin_timeout = 20

// Append these parameters to the end of sysctl.conf, then apply them:

[root@ceph1 ~]# sysctl -p

kernel.pid_max = 4194303

net.ipv4.tcp_tw_recycle = 0

net.ipv4.tcp_tw_reuse = 1

net.ipv4.ip_local_port_range = 1024 65000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_tw_buckets = 20480

net.ipv4.tcp_max_syn_backlog = 20480

net.core.netdev_max_backlog = 262144

net.ipv4.tcp_fin_timeout = 20
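Instead of editing `/etc/security/limits.conf` and `/etc/sysctl.conf` in place, you can keep the same values in drop-in files under `/etc/security/limits.d/` and `/etc/sysctl.d/`. That keeps the tuning in one small file that is easy to copy to the other nodes. This sketch assumes the hypothetical file name `99-ceph.conf`.

```bash
#!/bin/bash
# Render the tuning from this step as drop-in files (hypothetical helpers).

render_sysctl_conf() {
    # net.ipv4.tcp_tw_recycle only exists on kernels older than 4.12,
    # such as the CentOS 7 3.10 kernel used here
    cat <<'EOF'
kernel.pid_max = 4194303
net.ipv4.tcp_tw_recycle = 0
net.ipv4.tcp_tw_reuse = 1
net.ipv4.ip_local_port_range = 1024 65000
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_tw_buckets = 20480
net.ipv4.tcp_max_syn_backlog = 20480
net.core.netdev_max_backlog = 262144
net.ipv4.tcp_fin_timeout = 20
EOF
}

render_limits_conf() {
    cat <<'EOF'
* soft nofile 65535
* hard nofile 65535
* soft nproc 65535
* hard nproc 65535
EOF
}

# Usage:
#   render_sysctl_conf > /etc/sysctl.d/99-ceph.conf && sysctl --system
#   render_limits_conf > /etc/security/limits.d/99-ceph.conf
```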

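// sysctl -p prints each value it applies, which verifies the settings.

Add a local yum repo on the master node ceph1:

[root@ceph1 ~]# cd /opt/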

[root@ceph1 opt]# ls

ceph_images ceph-pkg containerd data

// Copy the Ceph package archive into /opt and extract it beforehand; the images are extracted later.

mkdir -p /etc/yum.repos.d.bak/

mv /etc/yum.repos.d/* /etc/yum.repos.d.bak/

[root@ceph1 ~]# cd /etc/yum.repos.d

[root@ceph1 yum.repos.d]# cat ceph.repo

[ceph]

name=ceph

baseurl=file:///opt/ceph-pkg/

gpgcheck=0

enabled=1

[root@ceph1 opt]# yum makecache

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

ceph | 2.9 kB 00:00:00

Metadata Cache Created

// Build the metadata cache.

yum install -y vsftpd

echo "anon_root=/opt/" >> /etc/vsftpd/vsftpd.conf

systemctl enable --now vsftpd

// vsftpd on ceph1 shares /opt over FTP. On the other two nodes, switch to ceph1's repo:

mkdir /etc/yum.repos.d.bak/ -p

mv /etc/yum.repos.d/* /etc/yum.repos.d.bak/

[root@ceph2 ~]# cd /etc/yum.repos.d

[root@ceph2 yum.repos.d]# cat ceph.repo

[ceph]

name=ceph

baseurl=ftp://192.168.80.10/ceph-pkg/

gpgcheck=0

enabled=1

[root@ceph3 ~]# cat /etc/yum.repos.d/ceph.repo

[ceph]

name=ceph

baseurl=ftp://192.168.80.10/ceph-pkg/

gpgcheck=0

enabled=1

yum clean all

yum makecache
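The three `ceph.repo` files differ only in `baseurl`:

- ceph1 reads `/opt/ceph-pkg/` directly.
- ceph2 and ceph3 fetch the same directory through vsftpd. Because of `anon_root=/opt/`, `ftp://192.168.80.10/ceph-pkg/` points at `/opt/ceph-pkg/`.

A small generator keeps the files consistent. `write_ceph_repo` is a hypothetical helper.

```bash
#!/bin/bash
# Generate the ceph.repo file for either the local path or the FTP share.
# ASSUMPTION: write_ceph_repo is a hypothetical helper.

# write_ceph_repo BASEURL [FILE]: write a [ceph] repo section to FILE
write_ceph_repo() {
    local baseurl=$1 file=${2:-/etc/yum.repos.d/ceph.repo}
    cat > "$file" <<EOF
[ceph]
name=ceph
baseurl=$baseurl
gpgcheck=0
enabled=1
EOF
}

# ceph1:       write_ceph_repo file:///opt/ceph-pkg/
# ceph2/ceph3: write_ceph_repo ftp://192.168.80.10/ceph-pkg/
# then:        yum clean all && yum makecache
```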

Install the time service (chrony) on ceph1, ceph2, and ceph3 and configure all three at the same time:

yum install -y chrony

[root@ceph1 ~]# cat /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

server 192.168.80.10 iburst

allow all

local stratum 10

[root@ceph2 ~]# cat /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

server 192.168.80.10 iburst

allow all

local stratum 10

[root@ceph3 ~]# cat /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

server 192.168.80.10 iburst

systemctl restart chronyd
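In the files above, every node lists both the public pool servers and 192.168.80.10. In an isolated lab network, a simpler layout is to let ceph1 serve time to the subnet and point ceph2 and ceph3 only at ceph1. The sketch below renders such files. `render_chrony_conf` is hypothetical, and restricting `allow` to 192.168.80.0/24 instead of `allow all` is a choice made here, not something the original setup requires.

```bash
#!/bin/bash
# Render a minimal chrony.conf: "server" role for ceph1, "client" role
# for ceph2/ceph3. ASSUMPTION: render_chrony_conf is hypothetical.

# render_chrony_conf server|client [SERVER_IP]
render_chrony_conf() {
    local role=$1 server_ip=${2:-192.168.80.10}
    if [ "$role" = server ]; then
        # Upstream source if Internet access exists; otherwise the local
        # clock (stratum 10) is served to the subnet
        echo "server 0.centos.pool.ntp.org iburst"
        echo "allow 192.168.80.0/24"
        echo "local stratum 10"
    else
        echo "server $server_ip iburst"
    fi
    # Settings also present in the CentOS 7 default chrony.conf
    echo "driftfile /var/lib/chrony/drift"
    echo "makestep 1.0 3"
}

# ceph1:       render_chrony_conf server > /etc/chrony.conf
# ceph2/ceph3: render_chrony_conf client > /etc/chrony.conf
# then:        systemctl restart chronyd && chronyc sources -v
```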

# Write the synchronized system time to the hardware clock

clock -w

// Install Docker on ceph1, ceph2, and ceph3:

yum install -y yum-utils device-mapper-persistent-data lvm2

yum -y install docker-ce python3

systemctl enable --now docker

[root@ceph1 ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since Sat 2022-10-01 12:06:58 CST; 12min ago

Docs: https://docs.docker.com

Main PID: 1411 (dockerd)

Tasks: 24

Memory: 138.6M

CGroup: /system.slice/docker.service

└─1411 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Oct 01 12:07:24 ceph1 dockerd[1411]: time="2022-10-01T12:07:24.692606616+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:07:25 ceph1 dockerd[1411]: time="2022-10-01T12:07:25.280448528+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:07:27 ceph1 dockerd[1411]: time="2022-10-01T12:07:27.053867172+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:07:27 ceph1 dockerd[1411]: time="2022-10-01T12:07:27.697494308+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:07:28 ceph1 dockerd[1411]: time="2022-10-01T12:07:28.274449001+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:07:28 ceph1 dockerd[1411]: time="2022-10-01T12:07:28.811756697+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:17:32 ceph1 dockerd[1411]: time="2022-10-01T12:17:32.608800965+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:17:33 ceph1 dockerd[1411]: time="2022-10-01T12:17:33.219899151+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:17:33 ceph1 dockerd[1411]: time="2022-10-01T12:17:33.758334094+08:00" level=info msg="ignoring event" cont...elete"

Oct 01 12:17:34 ceph1 dockerd[1411]: time="2022-10-01T12:17:34.296629723+08:00" level=info msg="ignoring event" cont...elete"

Hint: Some lines were ellipsized, use -l to show in full.

// Install cephadm:

yum install -y cephadm

// Load the Ceph container images into Docker:

[root@ceph1 ~]# cd /opt/ceph_images/

[root@ceph1 ceph_images]# for i in *; do docker load -i "$i"; done

Loaded image: quay.io/ceph/ceph-grafana:6.7.4

Loaded image: quay.io/ceph/ceph:v15

Loaded image: quay.io/prometheus/alertmanager:v0.20.0

Loaded image: quay.io/prometheus/node-exporter:v0.18.1

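Loaded image: quay.io/prometheus/prometheus:v2.18.1

// All 3 hosts need to perform the steps above.

// Bootstrap the first monitor node. Run this on ceph1 only, using ceph1's own IP address:

mkdir -p /etc/ceph

cephadm bootstrap --mon-ip 192.168.80.10 --skip-pull

// Bootstrap writes ceph.conf, the admin keyring, and the cluster SSH public key (ceph.pub) into /etc/ceph, so create that directory first. The output ends with a line referring to https://docs.ceph.com/docs/master/mgr/telemetry/; reaching that line means the bootstrap succeeded.

// Install the ceph-common tools (on ceph1):

yum install -y ceph-common

// Set up passwordless SSH from ceph1 to the other nodes:

[root@ceph1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph2

[root@ceph1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph3

// Add the hosts to the cluster: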

[root@ceph1 ~]# ceph orch host add ceph1

Added host 'ceph1'

[root@ceph1 ~]# ceph orch host add ceph2

Added host 'ceph2'

[root@ceph1 ~]# ceph orch host add ceph3

Added host 'ceph3'
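The key distribution and host registration above repeat the same two commands for each host. The sketch below loops over the host names. It includes a hypothetical `DRY_RUN=1` switch that prints the commands instead of running them; `add_ceph_hosts` and `run` are also hypothetical.

```bash
#!/bin/bash
# Copy the cluster SSH key to each host and register it with the
# orchestrator. ASSUMPTION: run/add_ceph_hosts are hypothetical helpers.

# run CMD...: execute CMD, or just print it when DRY_RUN=1
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# add_ceph_hosts HOST...: distribute /etc/ceph/ceph.pub, then add the host
add_ceph_hosts() {
    local host
    for host in "$@"; do
        run ssh-copy-id -f -i /etc/ceph/ceph.pub "root@$host"
        run ceph orch host add "$host"
    done
}

# Preview: DRY_RUN=1 add_ceph_hosts ceph2 ceph3
# Run:     add_ceph_hosts ceph2 ceph3   (on ceph1)
```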

// The master and the 2 nodes now form one cluster.

ceph config set mon public_network 192.168.80.0/24

// Run this command to set the monitors' public network.

[root@ceph1 ~]# ceph orch apply mon ceph1,ceph2,ceph3

Scheduled mon update...

[root@ceph1 ~]# ceph orch host label add ceph1 mon

[root@ceph1 ~]# ceph orch host label add ceph2 mon

[root@ceph1 ~]# ceph orch host label add ceph3 mon

// Add the mon label to each host.

# On node2 and node3, check the processes:

ps -ef | grep docker

// Verify:

[root@ceph1 ~]# ceph orch device ls

Hostname Path Type Serial Size Health Ident Fault Available

ceph1 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

ceph2 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

ceph3 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

systemctl restart ceph.target

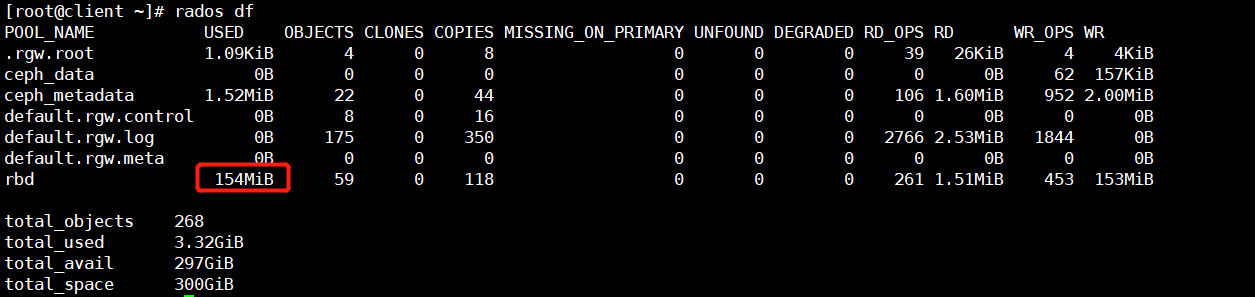

// Restart the services.

11. Deploy OSDs

(OSDs store the data.)

# List the available disk devices

[root@ceph1 ~]# ceph orch device ls

Hostname Path Type Serial Size Health Ident Fault Available

ceph1 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

ceph2 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

ceph3 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

# Add the disks to the Ceph cluster: create OSDs automatically on all unused devices

[root@ceph1 ~]# ceph orch apply osd --all-available-devices

Scheduled osd.all-available-devices update...

# Check the OSD disks

ceph -s

ceph df

[root@ceph1 ~]# ceph -s

cluster:

id: 2b0a47ba-3e32-11ed-87cb-000c29bdbaa4

health: HEALTH_WARN

2 failed cephadm daemon(s)

1 filesystem is degraded

1 MDSs report slow metadata IOs

1 osds down

1 host (1 osds) down

Reduced data availability: 97 pgs inactive

Degraded data redundancy: 44/66 objects degraded (66.667%), 13 pgs degraded, 97 pgs undersized

2 slow ops, oldest one blocked for 1636 sec, mon.ceph1 has slow ops

services:

mon: 1 daemons, quorum ceph1 (age 27m)

mgr: ceph3.ikzpva(active, since 27m), standbys: ceph1.xixxhu

mds: cephfs:1/1 {0=cephfs.ceph1.ffzbyq=up:replay} 2 up:standby

osd: 3 osds: 1 up (since 27m), 2 in (since 3d)

data:

pools: 3 pools, 97 pgs

objects: 22 objects, 6.9 KiB

usage: 2.0 GiB used, 38 GiB / 40 GiB avail

pgs: 100.000% pgs not active

44/66 objects degraded (66.667%)

84 undersized+peered

13 undersized+degraded+peered

// Note: this snapshot shows a degraded cluster (1 host down, only 1 of 3 OSDs up), which is why it reports HEALTH_WARN.

[root@ceph1 ~]# ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 40 GiB 38 GiB 39 MiB 2.0 GiB 5.10

TOTAL 40 GiB 38 GiB 39 MiB 2.0 GiB 5.10

--- POOLS ---

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

cephfs-metadata 1 32 113 KiB 22 1.6 MiB 0 54 GiB

device_health_metrics 2 1 0 B 0 0 B 0 18 GiB

cephfs-data 3 64 0 B 0 0 B 0 18 GiB

12. Deploy MDS

(The MDS serves the file system metadata.)

CephFS needs two pools, cephfs-data and cephfs-metadata, which store the file data and the file metadata respectively:

ceph osd pool create cephfs-metadata 32 32

ceph osd pool create cephfs-data 64 64

ceph fs new cephfs cephfs-metadata cephfs-data

# View the CephFS file system

[root@ceph1 ~]# ceph fs ls

name: cephfs, metadata pool: cephfs-metadata, data pools: [cephfs-data ]
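The `32` and `64` placement-group counts above are fixed choices; together with the device_health_metrics pool, the three pools total 97 PGs. A commonly cited rule of thumb from the Ceph documentation sizes the total PG count for a cluster as follows:

- Take (number of OSDs × 100) divided by the replica count.
- Round the result up to a power of two.
- Share the total among the pools.

The sketch below implements only that arithmetic. `suggest_pg_total` is hypothetical, and 100 PGs per OSD is the traditional target, not a hard requirement.

```bash
#!/bin/bash
# Rule-of-thumb PG total: (OSDs * target_per_osd) / replicas,
# rounded up to the next power of two.
# ASSUMPTION: suggest_pg_total is a hypothetical helper.

# suggest_pg_total OSDS REPLICAS [TARGET_PER_OSD]
suggest_pg_total() {
    local osds=$1 replicas=$2 target=${3:-100}
    # Ceiling division so a remainder still counts
    local raw=$(( (osds * target + replicas - 1) / replicas ))
    local pg=1
    while [ "$pg" -lt "$raw" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}
```

With the 3 OSDs and the default replica count of 3 used here, `suggest_pg_total 3 3` gives 128, so the 97 PGs in this cluster stay within that budget.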

[root@ceph1 ~]# ceph orch apply mds cephfs --placement="3 ceph1 ceph2 ceph3"

Scheduled mds.cephfs update...

# There should be three MDS daemons: one active and two on standby

ceph -s
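`ceph orch apply` only schedules daemons, and they start asynchronously, so `ceph -s` may still show them starting. For scripted setups, a polling helper can wait until `ceph health` reports `HEALTH_OK`. In the sketch below, `wait_for_health_ok` is hypothetical, and the `CEPH` variable exists only so the command can be stubbed for testing.

```bash
#!/bin/bash
# Poll "ceph health" until it reports HEALTH_OK or a timeout expires.
# ASSUMPTION: wait_for_health_ok is hypothetical; CEPH may name a stub.

# wait_for_health_ok TIMEOUT_SECONDS [INTERVAL_SECONDS]
wait_for_health_ok() {
    local timeout=$1 interval=${2:-5} waited=0 status
    # Guard against an interval of 0, which would never advance the timer
    [ "$interval" -ge 1 ] || interval=1
    while :; do
        status=$(${CEPH:-ceph} health 2>/dev/null)
        case $status in
            HEALTH_OK*)
                echo "$status"
                return 0
                ;;
        esac
        if [ "$waited" -ge "$timeout" ]; then
            echo "timed out, last status: ${status:-<none>}" >&2
            return 1
        fi
        sleep "$interval"
        waited=$(( waited + interval ))
    done
}

# Usage: wait_for_health_ok 600 10 && ceph orch ps
```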

# Deploy the RGW object gateway (realm rgw01, zone zone01) on all three hosts

[root@ceph1 ~]# ceph orch apply rgw rgw01 zone01 --placement="3 ceph1 ceph2 ceph3"

Scheduled rgw.rgw01.zone01 update...

[root@ceph1 ~]# ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 31s ago 3d count:1 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f

crash 3/3 56s ago 3d * quay.io/ceph/ceph:v15 93146564743f

grafana 1/1 31s ago 3d count:1 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646

mds.cephfs 3/3 56s ago 58s ceph1;ceph2;ceph3;count:3 quay.io/ceph/ceph:v15 93146564743f

mgr 2/2 31s ago 3d count:2 quay.io/ceph/ceph:v15 93146564743f

mon 1/3 56s ago 10m ceph1;ceph2;ceph3 quay.io/ceph/ceph:v15 mix

node-exporter 1/3 31s ago 3d * quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf

osd.all-available-devices 3/3 56s ago 3m * quay.io/ceph/ceph:v15 93146564743f

prometheus 1/1 31s ago 3d count:1 quay.io/prometheus/prometheus:v2.18.1 de242295e225

rgw.rgw01.zone01 2/3 56s ago 33s ceph1;ceph2;ceph3;count:3 quay.io/ceph/ceph:v15 93146564743f

# Create an authorized account for clients to use

[root@ceph1 ~]# ceph auth get-or-create client.fsclient mon 'allow r' mds 'allow rw' osd 'allow rwx pool=cephfs-data' -o ceph.client.fsclient.keyring

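// -o saves the client keyring to a file. This is one approach: create the keyring file, then export the key from it and hand the key to the clients.

# Get the key

[root@ceph1 ~]# ceph auth print-key client.fsclient > fsclient.key

# Send the key to the clients (a client may not have an /etc/ceph directory yet; create it with mkdir /etc/ceph/)

[root@ceph1 ~]# scp fsclient.key root@ceph2:/etc/ceph/

[root@ceph1 ~]# scp fsclient.key root@ceph3:/etc/ceph/

Client usage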

# Mount CephFS (on ceph2 or ceph3)

yum -y install ceph-common

# Check the ceph kernel module

[root@ceph1 ~]# modinfo ceph

filename: /lib/modules/3.10.0-1160.el7.x86_64/kernel/fs/ceph/ceph.ko.xz

license: GPL

description: Ceph filesystem for Linux

author: Patience Warnick <patience@newdream.net>

author: Yehuda Sadeh <yehuda@hq.newdream.net>

author: Sage Weil <sage@newdream.net>

alias: fs-ceph

retpoline: Y

rhelversion: 7.9

srcversion: EB765DDC1F7F8219F09D34C

depends: libceph

intree: Y

vermagic: 3.10.0-1160.el7.x86_64 SMP mod_unload modversions

signer: CentOS Linux kernel signing key

sig_key: E1:FD:B0:E2:A7:E8:61:A1:D1:CA:80:A2:3D:CF:0D:BA:3A:A4:AD:F5

sig_hashalgo: sha256

# Check that the key authorized above is present; without it, the mount cannot authenticate

[root@ceph1 ~]# ls /etc/ceph/

ceph.client.admin.keyring ceph.client.fsclient.keyring ceph.conf ceph.pub fsclient.key rbdmap

# Create a mount point (/cephfs in this example)

mkdir /cephfs

# Mount

[root@ceph1 ~]# mount -t ceph ceph1:6789,ceph2:6789,ceph3:6789:/ /cephfs -o name=fsclient,secretfile=/etc/ceph/fsclient.key

# Check whether the mount succeeded

[root@ceph1 ~]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 38M 2.0G 2% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 54G 11G 43G 21% /

/dev/sda1 xfs 1.1G 158M 906M 15% /boot

/dev/mapper/centos-home xfs 45G 34M 45G 1% /home

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/45a533a79429e5b246e6def07f055ab74f5dc61285bd3c11545dc9c916196651/merged

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/9aee54ff92cad9256ab432d3b3faba73889d1deb6d27bd970cd5cf17c3223ff7/merged

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/2554e96032aeca13ed847b587dfc8633398cb808a0b27cb9962f669acf8775b1/merged

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/958178579d45c6cbadad049097d057ec6a4547d6afbe5d1b9abec8b8aed8a64f/merged

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/6477aa1cb3b34f694d1092a2b6f0dd198db45bf74f3203de49916e18e567c4ea/merged

tmpfs tmpfs 396M 0 396M 0% /run/user/0

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/aa0f011762164ffe210eb54d25bce0da97d58e284a4b00ff9e633be43c5babef/merged

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/2bcca822e8dd204d897abdae6e954b9ca35681c890782661877a6724c4c152dd/merged

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/2fcc501129c6abb0c93a0fe078eb588239ec323353bfb32639bf0a0855d94e38/merged

overlay overlay 54G 11G 43G 21% /var/lib/docker/overlay2/e78a4e1045a64349c08be6575f0df1b7152960e697933d838fd7103edc49cd26/merged

# Configure a permanent mount

vim /etc/fstab

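ceph1:6789,ceph2:6789,ceph3:6789:/ /cephfs ceph name=fsclient,secretfile=/etc/ceph/fsclient.key,_netdev,noatime 0 0

# _netdev marks this as a network file system, so it is mounted only once the network is available

# noatime improves file performance by not updating the access timestamp on every read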
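The fstab entry must list only monitors that actually exist in this 3-node cluster. The sketch below builds the line from a host list and appends it only if the mount point is not already configured. Both helper names are hypothetical, and port 6789 is the monitor port used in the mount command above.

```bash
#!/bin/bash
# Build and install a CephFS fstab entry once.
# ASSUMPTION: cephfs_fstab_line/add_cephfs_fstab are hypothetical helpers.

# cephfs_fstab_line MOUNTPOINT USER SECRETFILE HOST...
cephfs_fstab_line() {
    local mnt=$1 user=$2 secret=$3 mons="" h
    shift 3
    for h in "$@"; do
        # Join "host:6789" entries with commas
        mons="${mons:+$mons,}$h:6789"
    done
    printf '%s:/ %s ceph name=%s,secretfile=%s,_netdev,noatime 0 0\n' \
        "$mons" "$mnt" "$user" "$secret"
}

# add_cephfs_fstab FSTAB LINE: append LINE unless its mount point is in use
add_cephfs_fstab() {
    local fstab=$1 line=$2 mnt
    mnt=$(printf '%s\n' "$line" | awk '{ print $2 }')
    # awk exits 0 if an active (non-comment) entry already uses this mount point
    if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
        return 0
    fi
    printf '%s\n' "$line" >> "$fstab"
}

# Usage:
#   add_cephfs_fstab /etc/fstab \
#     "$(cephfs_fstab_line /cephfs fsclient /etc/ceph/fsclient.key ceph1 ceph2 ceph3)"
```

After editing, `mount -a` mounts every fstab entry that is not yet mounted, which tests the new line without a reboot.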