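Table of Contents

- Approach

- Source code walkthrough

- Initializing Chrome

- Collecting the page links returned by the search engine

- Looping over the page links and filtering for movie links

- Second filtering pass and output

- Finally, add the program entry point

- Complete source, ready to copy

- Result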

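Approach

Finding movie links by hand with a search engine is slow and inconvenient, and the number of links you turn up is limited. The big movie sites either offer too few links or have had them taken down over copyright.

However, movie links almost always come in one of three forms: thunder (Xunlei cloud drive), magnet (BT), or ed2k (eDonkey).

So I had an idea: use selenium to search for the movie on a search engine, loop over the page links that the results pages return, and then filter all the movie links out of each page's HTML.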

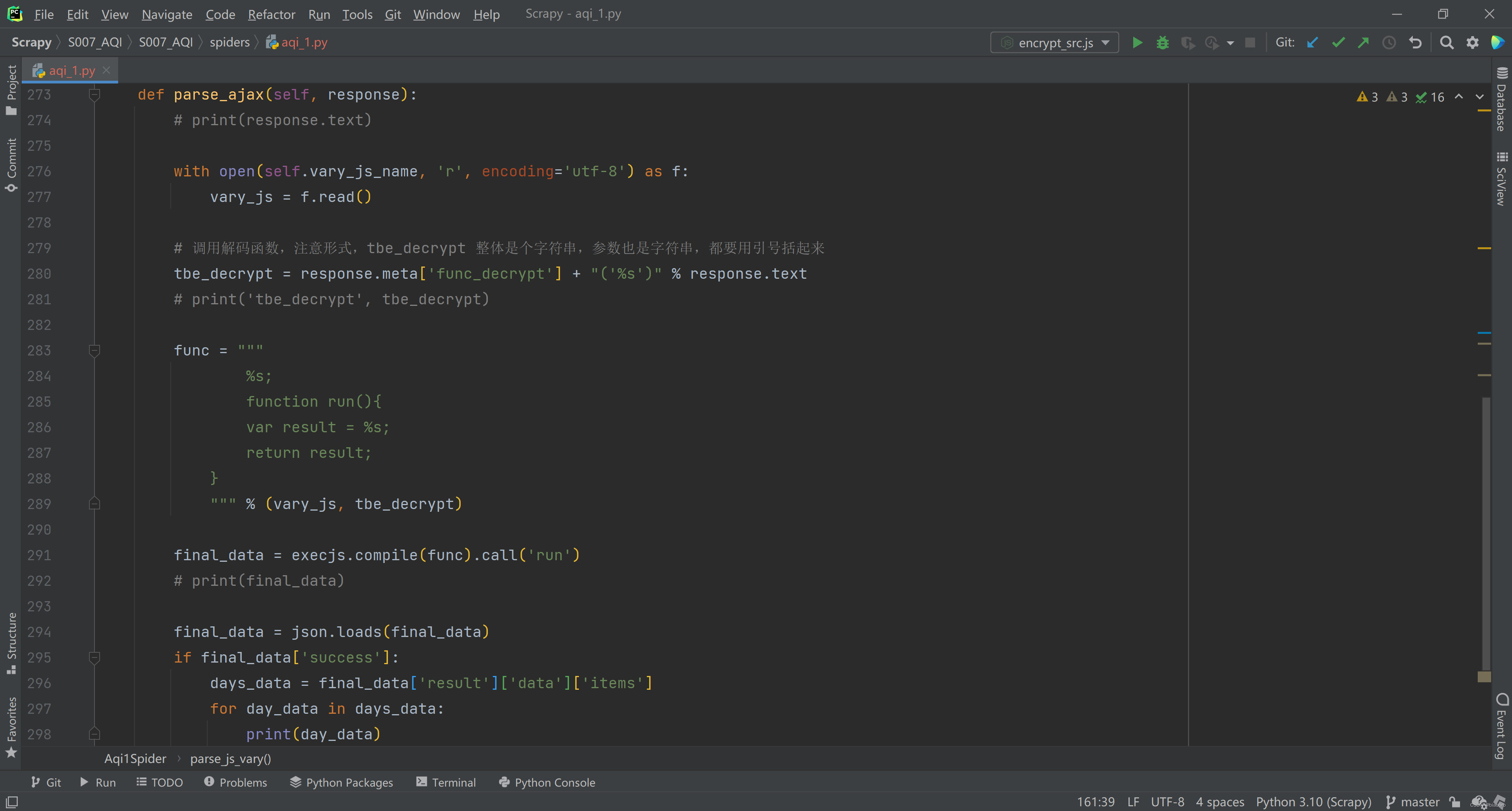
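To make the three link forms concrete, here is a minimal sketch that pulls them out of a string with one regular expression. The pattern and the helper name `find_download_links` are illustrative and not part of the original script, which uses plain substring checks; the sketch assumes a link runs from its scheme up to the next whitespace, quote, or angle bracket.

```python
import re

# Assumption: a link starts at its scheme and ends at the next
# whitespace character, quote, or angle bracket.
LINK_PATTERN = re.compile(r'(?:magnet:\?|thunder://|ed2k://)[^\s"\'<>]+')


def find_download_links(text):
    """Return every magnet, thunder, or ed2k link found in text, in order."""
    return LINK_PATTERN.findall(text)


sample = 'HD: magnet:?xt=urn:btih:abc123 mirror <a href="thunder://QUFodHRwWlo=">x</a>'
print(find_download_links(sample))
```

A regular expression is stricter than checking for `'magnet:' in text`, so it may skip malformed links that the substring check would keep.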

Source code walkthrough

Initializing Chrome

    def start_crawl(name):
        agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36 Edg/109.0.1518.78'
        list1 = ['0.5', '1']
        options = webdriver.ChromeOptions()
        prefs = {'profile.default_content_setting_values': {'images': 2, 'javascript': 2}}
        # options.add_experimental_option('prefs', prefs)
        # This was meant to disable image and JavaScript loading, but the search
        # engine may then treat the client as a crawler and ban the IP, so the
        # setting is left unused.
        options.add_argument('--headless')
        options.add_argument('--disable-gpu')
        options.add_argument('--ignore-certificate-errors')
        options.add_argument("user-agent=" + agent)  # str(UserAgent().random))
        # Selenium 3 style call; newer Selenium 4 releases take the driver path
        # through a Service object instead.
        d = webdriver.Chrome(r'chromedriver.exe', options=options)
        d.get('https://cn.bing.com/')  # send the request to the search engine

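Collecting the page links returned by the search engine

        time.sleep(2)
        # Simulate typing the movie name into the search box and pressing Enter.
        # The appended keywords mean "download" and "Xunlei download".
        d.find_element(By.CSS_SELECTOR, 'input[id="sb_form_q"]').send_keys(str(name) + ' 下载 迅雷下载\n')
        urls = []  # stores every result page URL
        time.sleep(2)
        for i in range(5):  # go through five result pages
            time.sleep(1)
            for j in d.find_elements(By.CSS_SELECTOR, 'li div h2 a'):
                urls.append(j.get_attribute('href'))  # add the page link to urls
            # scroll toward the bottom of the page
            d.execute_script('var t=document.documentElement.scrollTop=2000;')
            time.sleep(0.1)
            # wait for the "next page" button to load
            WebDriverWait(d, 10, 1).until(expected_conditions.visibility_of_element_located((By.CSS_SELECTOR, 'li a[title="下一页"]')))
            next_page = d.find_element(By.CSS_SELECTOR, 'li a[title="下一页"]')
            next_page.click()  # click the button to move to the next page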
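The collected `urls` list can contain repeats across result pages, and `get_attribute('href')` can return `None` for an anchor without an `href`. As a hedged sketch, a small cleanup step before crawling might look like this; `clean_result_urls` is a hypothetical helper that does not appear in the original script.

```python
from urllib.parse import urlsplit


def clean_result_urls(hrefs):
    """Keep http(s) links only, drop empty values and duplicates, keep order."""
    seen = set()
    cleaned = []
    for href in hrefs:
        if not href:
            continue  # anchor had no href attribute
        if urlsplit(href).scheme not in ('http', 'https'):
            continue  # e.g. javascript: pseudo-links
        if href not in seen:
            seen.add(href)
            cleaned.append(href)
    return cleaned


print(clean_result_urls(['https://a.example/1', None, 'https://a.example/1']))
```

Calling `urls = clean_result_urls(urls)` before the request loop would avoid fetching the same page twice.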

Looping over the page links and filtering for movie links

        print(len(urls))
        list2 = []
        se = requests.session()
        se.keep_alive = False
        requests.packages.urllib3.disable_warnings()
        # requests setup
        print('Starting to crawl the URLs!')
        for i in urls:
            # Request and parse each page URL.
            try:
                time.sleep(0.2)
                print(str(i))
                r = se.get(url=str(i), timeout=5, headers={'User-Agent': agent, 'content-type': 'charset=utf8'}, verify=False)
                encoding = cchardet.detect(r.content)['encoding']
                r.encoding = encoding  # detect the page encoding to avoid garbled text
                s = BeautifulSoup(r.text, "lxml")
                if r.status_code == 200:
                    all_element = s.select('*')
                    for j in all_element:  # walk every element in the page
                        # try to get the element's text content
                        try:
                            text = j.text
                        except Exception:
                            text = ''
                        try:
                            if text != '':
                                if ('magnet:' in j.text) or ('thunder:' in j.text) or ('ed2k:' in j.text):
                                    list2.append(j.text)
                            for z in j.attrs:
                                if ('magnet:' in j.attrs[str(z)]) or ('thunder:' in j.attrs[str(z)]) or ('ed2k:' in j.attrs[str(z)]):
                                    list2.append(j.attrs[str(z)])
                        except KeyError:
                            pass
                        except AttributeError:
                            pass
            except Exception as e:
                print(e)
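The scan above checks both element text and attribute values. The same idea can be sketched with only the standard library's `html.parser`, which visits each text node once instead of re-reading nested text for every ancestor element. This is an alternative illustration, not the author's method; `LinkCollector` and `collect_candidates` are hypothetical names.

```python
from html.parser import HTMLParser

SCHEMES = ('magnet:', 'thunder:', 'ed2k:')


class LinkCollector(HTMLParser):
    """Collect attribute values and text chunks that mention a download scheme."""

    def __init__(self):
        super().__init__()
        self.candidates = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None
        for _name, value in attrs:
            if value and any(s in value for s in SCHEMES):
                self.candidates.append(value)

    def handle_data(self, data):
        if any(s in data for s in SCHEMES):
            self.candidates.append(data)


def collect_candidates(html):
    """Return raw strings from html that contain one of the schemes."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()  # flush any buffered text
    return parser.candidates


page = '<p>Link: magnet:?xt=urn:btih:abc</p><a href="ed2k://|file|a|1|H|/">get</a>'
print(collect_candidates(page))
```

The returned strings are still raw candidates that may include surrounding words, so they need a purification pass afterwards.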

Second filtering pass and output

        list3 = []
        os.system('cls')
        print('\n\n--------Links--------\n\n')
        # Clean up and print the links.
        for i in list2:
            for j in i.split('\n'):
                if ('magnet:' in j) or ('thunder:' in j) or ('ed2k:' in j):
                    list3.append(j)  # split on newlines
        list4 = []
        for i in list3:
            for j in i.split(' '):
                if ('magnet:' in j) or ('thunder:' in j) or ('ed2k:' in j):
                    list4.append(j)  # split on spaces
        list4 = list(set(list4))  # deduplicate
        for i in list4:
            print(str(i))
            print('\n')
        try:
            d.quit()  # shut down the driver
            os.system('pause')  # pause the program; added for packaging as an exe
            time.sleep(10000)
        except Exception as e:
            print(e)
            time.sleep(10000)
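The two splitting passes and the `set` deduplication can be folded into one helper. The sketch below is a simplified variant under two assumptions that differ from the original: it splits on any whitespace via `str.split()` rather than only on newlines and spaces, and it uses `dict.fromkeys` so the links keep their first-seen order. `purify_links` is a hypothetical name.

```python
SCHEMES = ('magnet:', 'thunder:', 'ed2k:')


def purify_links(candidates):
    """Split raw strings on whitespace, keep link tokens, dedupe in order."""
    links = []
    for chunk in candidates:
        for token in chunk.split():  # splits on spaces, tabs, and newlines
            if any(s in token for s in SCHEMES):
                links.append(token)
    # dict keys are unique and remember insertion order
    return list(dict.fromkeys(links))


raw = ['Link: magnet:?xt=a\nthunder://b  more', 'magnet:?xt=a']
print(purify_links(raw))
```

Keeping the order stable makes the printed list reproducible between runs, which `list(set(...))` does not guarantee.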

Finally, add the program entry point

    if __name__ == '__main__':
        name = input('Enter the movie name: ')
        start_crawl(name=name)

Complete source, ready to copy

    import os
    import random
    import time

    import cchardet
    from requests.exceptions import ConnectionError
    from selenium import webdriver
    # from fake_useragent import UserAgent
    import requests
    from bs4 import BeautifulSoup
    from selenium.common.exceptions import StaleElementReferenceException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions
    from selenium.webdriver.support.wait import WebDriverWait


    def start_crawl(name):
        agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36 Edg/109.0.1518.78'
        # name = input('Enter the movie name: ')
        list1 = ['0.5', '1']
        options = webdriver.ChromeOptions()
        prefs = {'profile.default_content_setting_values': {'images': 2, 'javascript': 2}}
        # options.add_experimental_option('prefs', prefs)
        options.add_argument('--headless')
        options.add_argument('--disable-gpu')
        options.add_argument('--ignore-certificate-errors')
        options.add_argument("user-agent=" + agent)  # str(UserAgent().random))
        # Selenium 3 style call; newer Selenium 4 releases take the driver path
        # through a Service object instead.
        d = webdriver.Chrome(r'chromedriver.exe', options=options)
        d.get('https://cn.bing.com/')
        time.sleep(2)
        d.find_element(By.CSS_SELECTOR, 'input[id="sb_form_q"]').send_keys(str(name) + ' 下载 迅雷下载\n')
        urls = []  # stores every result page URL
        time.sleep(2)
        for i in range(5):  # five result pages by default
            time.sleep(1)
            for j in d.find_elements(By.CSS_SELECTOR, 'li div h2 a'):
                urls.append(j.get_attribute('href'))
            d.execute_script('var t=document.documentElement.scrollTop=2000;')
            time.sleep(0.1)
            WebDriverWait(d, 10, 1).until(expected_conditions.visibility_of_element_located((By.CSS_SELECTOR, 'li a[title="下一页"]')))
            next_page = d.find_element(By.CSS_SELECTOR, 'li a[title="下一页"]')
            next_page.click()
        print(len(urls))
        list2 = []
        se = requests.session()
        se.keep_alive = False
        requests.packages.urllib3.disable_warnings()
        # requests setup
        print('Starting to crawl the URLs!')
        for i in urls:
            # Request and parse each page URL.
            try:
                time.sleep(0.2)
                print(str(i))
                # print(i.get_attribute('href'))
                r = se.get(url=str(i), timeout=5, headers={'User-Agent': agent, 'content-type': 'charset=utf8'}, verify=False)
                encoding = cchardet.detect(r.content)['encoding']
                r.encoding = encoding
                s = BeautifulSoup(r.text, "lxml")
                if r.status_code == 200:
                    all_element = s.select('*')
                    for j in all_element:
                        try:
                            text = j.text
                        except Exception:
                            text = ''
                        try:
                            if text != '':
                                if ('magnet:' in j.text) or ('thunder:' in j.text) or ('ed2k:' in j.text):
                                    # print(''.join(j.text.split(' ')))
                                    # print(j.text.replace('\n',''))
                                    list2.append(j.text)
                            for z in j.attrs:
                                if ('magnet:' in j.attrs[str(z)]) or ('thunder:' in j.attrs[str(z)]) or ('ed2k:' in j.attrs[str(z)]):
                                    # print(j.attrs[str(z)].replace('\n',''))
                                    list2.append(j.attrs[str(z)])
                        except KeyError:
                            pass
                        except AttributeError:
                            pass
            except Exception as e:
                print(e)
        list3 = []
        os.system('cls')
        print('\n\n--------Links--------\n\n')
        # Clean up and print the links.
        for i in list2:
            for j in i.split('\n'):
                if ('magnet:' in j) or ('thunder:' in j) or ('ed2k:' in j):
                    list3.append(j)
        list4 = []
        for i in list3:
            for j in i.split(' '):
                if ('magnet:' in j) or ('thunder:' in j) or ('ed2k:' in j):
                    list4.append(j)
        list4 = list(set(list4))
        for i in list4:
            print(str(i))
            print('\n')
        try:
            d.quit()
            os.system('pause')
            time.sleep(10000)
        except Exception as e:
            print(e)
            time.sleep(100)


    if __name__ == '__main__':
        try:
            name = input('Enter the movie name: ')
            start_crawl(name=name)
        except Exception as e:
            print(e)
            time.sleep(100)

Result

A run returns roughly a hundred or more links, and about 80% of them work.
