Custom Hive function examples:

Contents

- Custom UDF
    - 1. Requirement
    - 2. Maven project setup
    - 3. Implementation
    - 4. Package the jar
    - 5. Load it into Hive
- Custom UDTF
    - 1. Requirement
    - 2. Implementation
    - 3. Load it into Hive

Custom UDF

1. Requirement

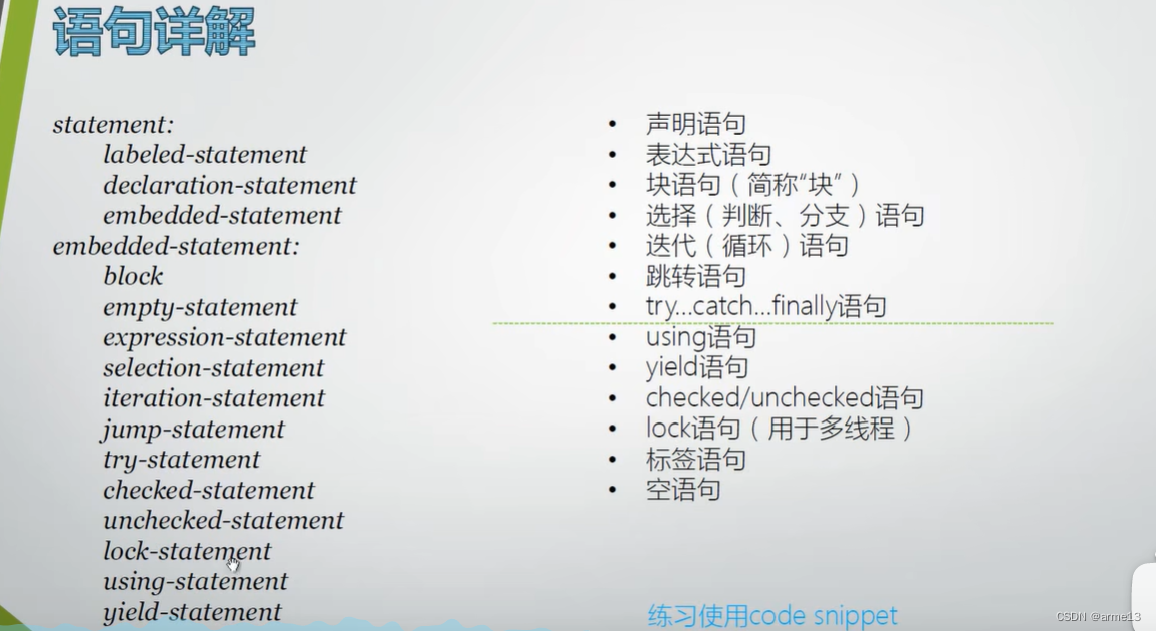

Write a custom UDF that returns the length of a given string, for example:

![my_len example](https://img-blog.csdnimg.cn/ca9cd5e75e134228b615d5405ef7cf99.png)
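To pin down the expected behaviour without a Hive cluster, here is a plain-Java sketch of the rule the `MyStringLength.evaluate` method in this article applies: a NULL argument yields 0, anything else yields the length of its string form. The class `StringLengthSketch` and the code-point variant are hypothetical additions for illustration only. Note that `String.length()` counts UTF-16 code units, so a character outside the Basic Multilingual Plane (such as an emoji) counts as 2.

```java
// Plain-Java sketch of the my_len rule; no Hive dependency.
// StringLengthSketch and myLenCodePoints are hypothetical names, not part of the article's code.
public class StringLengthSketch {

    // Same rule as the UDF: NULL -> 0, otherwise length of toString().
    public static int myLen(Object arg) {
        if (arg == null) {
            return 0;
        }
        return arg.toString().length();
    }

    // Alternative (NOT what the UDF does): count Unicode code points instead of UTF-16 units.
    public static int myLenCodePoints(Object arg) {
        if (arg == null) {
            return 0;
        }
        String s = arg.toString();
        return s.codePointCount(0, s.length());
    }

    public static void main(String[] args) {
        System.out.println(myLen("hive"));           // 4
        System.out.println(myLen(null));             // 0
        System.out.println(myLen(12345));            // 5: non-string values go through toString()
        String emoji = "\uD83D\uDE00";               // one emoji stored as a surrogate pair
        System.out.println(myLen(emoji));            // 2
        System.out.println(myLenCodePoints(emoji));  // 1
    }
}
```

If the UDF should count visible characters rather than UTF-16 units, the code-point variant is the one to port into `evaluate`.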

2. Maven project setup

Create a Maven project and add the following dependency:

    <dependencies>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-exec</artifactId>
            <version>3.1.2</version>
        </dependency>
    </dependencies>

3. Implementation

Write the implementation class:

package com.yingzi.hive1;import org.apache.hadoop.hive.ql.udf.generic.GenericUDF;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.exec.UDFArgumentLengthException;

import org.apache.hadoop.hive.ql.exec.UDFArgumentTypeException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

/*** @author 影子* @create 2022-01-23-14:08**//*** 自定义UDF函数,继承GenericUDF类* 需求:计算指定字符串的长度*/

public class MyStringLength extends GenericUDF {/**** @param objectInspectors 输入参数类型的鉴别器对象* @return 返回值类型的鉴别器对象* @throws UDFArgumentException*/@Overridepublic ObjectInspector initialize(ObjectInspector[] objectInspectors) throws UDFArgumentException {// 判断输入参数的个数if (objectInspectors.length != 1){throw new UDFArgumentLengthException("Input Args Length Error!!!");}// 判断输入参数的类型if (!objectInspectors[0].getCategory().equals(ObjectInspector.Category.PRIMITIVE)){throw new UDFArgumentTypeException(0,"Input Args Type Error!!!");}// 函数本身返回值为int,需要返回int类型的鉴别器对象return PrimitiveObjectInspectorFactory.javaIntObjectInspector;}/*** 函数的逻辑处理* @param deferredObjects 输入的参数* @return 返回值* @throws HiveException*/@Overridepublic Object evaluate(DeferredObject[] deferredObjects) throws HiveException {if (deferredObjects[0].get() == null){return 0;}return deferredObjects[0].get().toString().length();}@Overridepublic String getDisplayString(String[] strings) {return "";}

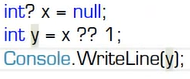

}4.导包

![Packaging the jar](https://img-blog.csdnimg.cn/e048f19742d44c6fa5d817a4ac89aeff.png)

Copy the resulting jar to the hive/lib directory on the virtual machine.

5. Load it into Hive

1) Add the jar to Hive's classpath:

    add jar /opt/module/hive/lib/HIVE_test-1.0-SNAPSHOT.jar;

2) Create a temporary function bound to the compiled Java class:

    create temporary function my_len as "com.yingzi.hive1.MyStringLength";

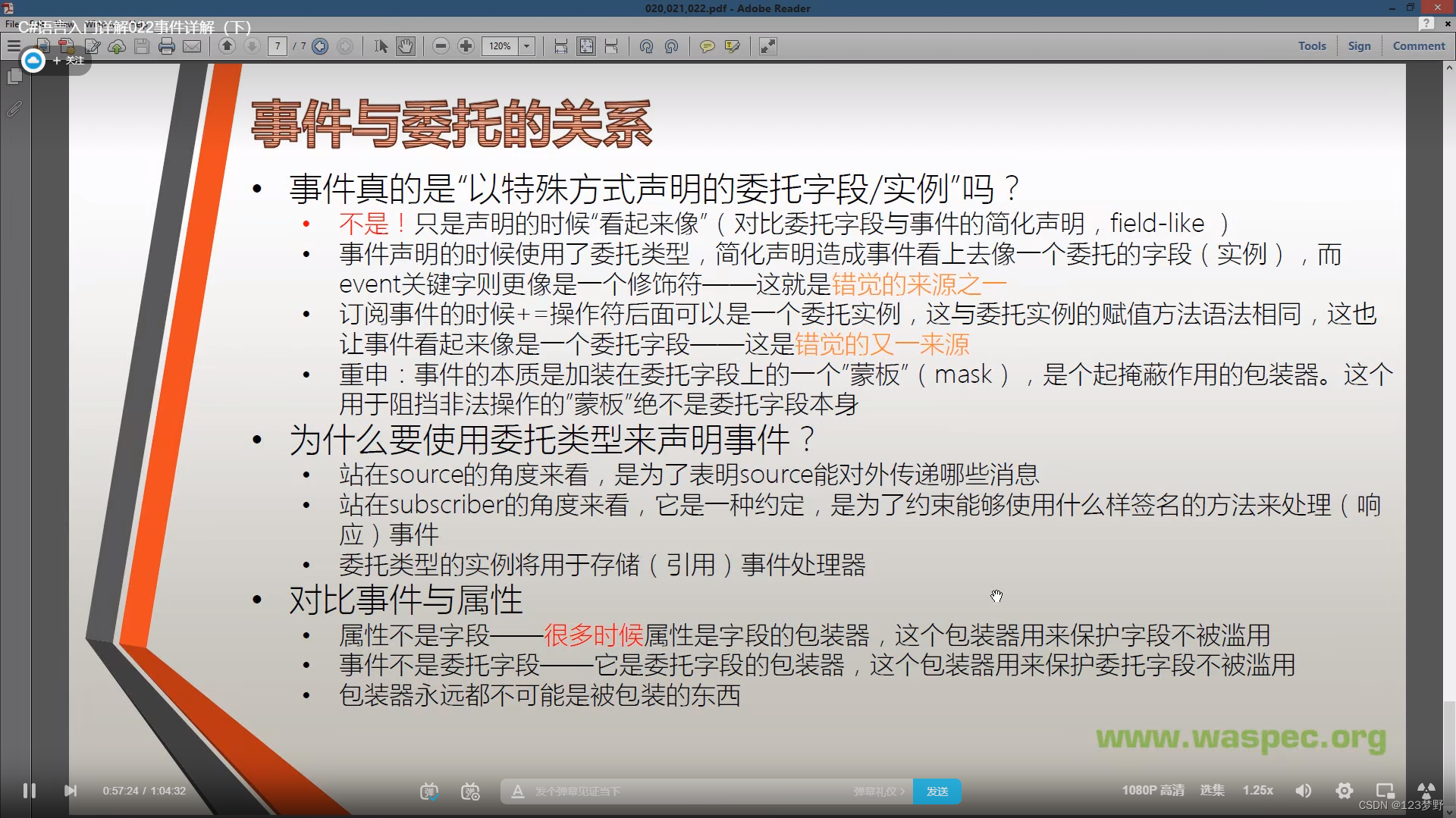

3) Verify that the function was registered and works:

![my_len verification](https://img-blog.csdnimg.cn/cb0fcf7ada3f4c15b5a99a56ff539123.png)

Custom UDTF

1. Requirement

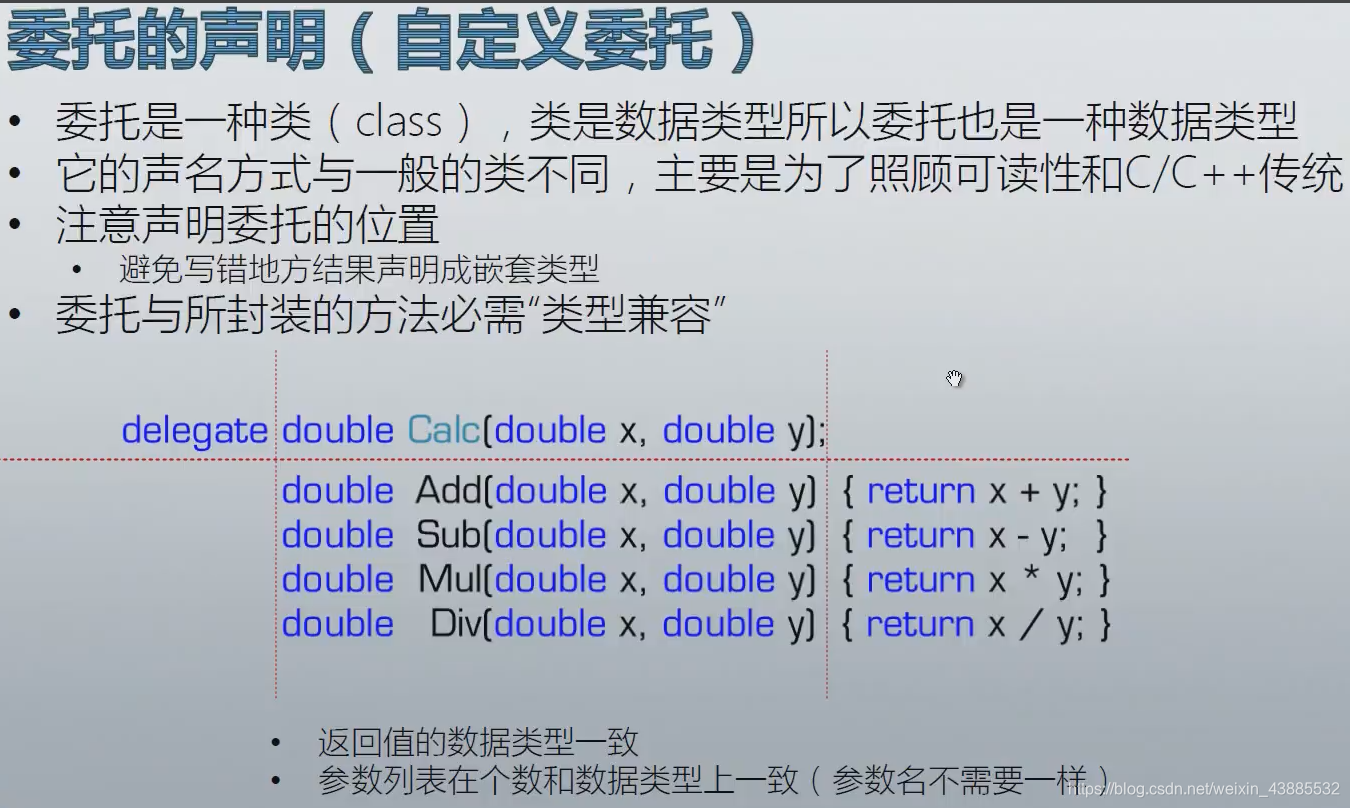

Write a custom UDTF that splits a string on an arbitrary delimiter into individual words, for example:

![UDTF example](https://img-blog.csdnimg.cn/e341f75293524108812a7f4c41cbadab.png)
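One caveat for "arbitrary" delimiters: Java's `String.split` treats its argument as a regular expression, so characters such as `.` or `|` do not split literally. The plain-Java sketch contrasts a raw `split(delimiter)` call with a `Pattern.quote` variant. `SplitSketch`, `splitRaw` and `splitLiteral` are hypothetical names used only for this illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper class contrasting regex and literal splitting (no Hive dependency).
public class SplitSketch {

    // Raw call: the delimiter is interpreted as a regex.
    public static List<String> splitRaw(String line, String delimiter) {
        return Arrays.asList(line.split(delimiter));
    }

    // Pattern.quote makes the delimiter match literally, so ".", "|" or "$" behave as expected.
    public static List<String> splitLiteral(String line, String delimiter) {
        return Arrays.asList(line.split(Pattern.quote(delimiter)));
    }

    public static void main(String[] args) {
        System.out.println(splitRaw("hello,world,hadoop", ","));     // [hello, world, hadoop]
        System.out.println(splitRaw("hello.world.hadoop", "."));     // []  ("." matches every character)
        System.out.println(splitLiteral("hello.world.hadoop", ".")); // [hello, world, hadoop]
        System.out.println(splitLiteral("a|b|c", "|"));              // [a, b, c]
    }
}
```

For plain delimiters like `,` both calls agree; quoting only matters once the delimiter contains regex metacharacters.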

2. Implementation

    package com.yingzi.hive1;

    import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
    import org.apache.hadoop.hive.ql.exec.UDFArgumentLengthException;
    import org.apache.hadoop.hive.ql.metadata.HiveException;
    import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;
    import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
    import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;
    import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;
    import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

    import java.util.ArrayList;
    import java.util.List;
    import java.util.regex.Pattern;

    /**
     * @author 影子
     * @create 2022-01-23-15:14
     */
    public class MYUDTF extends GenericUDTF {

        // Reused output row holding a single column
        private final ArrayList<String> outList = new ArrayList<>();

        @Override
        public StructObjectInspector initialize(StructObjectInspector argOIs) throws UDFArgumentException {
            // Expect exactly two arguments: the string and the delimiter
            if (argOIs.getAllStructFieldRefs().size() != 2) {
                throw new UDFArgumentLengthException("MYUDTF takes exactly two arguments");
            }
            // 1. Define the output column names and types
            List<String> fieldNames = new ArrayList<>();
            List<ObjectInspector> fieldOIs = new ArrayList<>();
            // 2. Add the output column name and its type
            fieldNames.add("lineToWord");
            fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);
            return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);
        }

        @Override
        public void process(Object[] objects) throws HiveException {
            // Emit nothing when the input string or the delimiter is NULL
            if (objects[0] == null || objects[1] == null) {
                return;
            }
            // 1. Get the input string
            String line = objects[0].toString();
            // 2. Get the second argument, the delimiter
            String splitKey = objects[1].toString();
            // 3. Split on the delimiter; split() expects a regex, so quote it to match literally
            String[] fields = line.split(Pattern.quote(splitKey));
            // 4. Emit each word as its own output row
            for (String field : fields) {
                // The list is reused, so clear it first
                outList.clear();
                // Add the current word
                outList.add(field);
                // Forward the row
                forward(outList);
            }
        }

        @Override
        public void close() throws HiveException {
        }
    }
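`MYUDTF` reuses a single `outList`, clearing it before every `forward` call. The plain-Java sketch replaces Hive's `forward` with a hypothetical `Consumer` to show why that reuse is only safe when each row is consumed or copied before the next call; it assumes Hive's collector behaves like the first, copying consumer. `RowReuseSketch` and `explode` are illustrative names, not Hive API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.regex.Pattern;

// Simulation of the process()/forward() row-reuse pattern, without Hive.
// The Consumer stands in for GenericUDTF.forward; this is an assumption, not Hive's implementation.
public class RowReuseSketch {

    // One input line becomes one single-column row per word; the same buffer is refilled each time.
    public static void explode(String line, String delimiter, Consumer<List<Object>> forward) {
        List<Object> row = new ArrayList<>();
        for (String word : line.split(Pattern.quote(delimiter))) {
            row.clear();
            row.add(word);
            forward.accept(row);
        }
    }

    public static void main(String[] args) {
        // Safe consumer: copies each row before explode() reuses the buffer.
        List<List<Object>> copied = new ArrayList<>();
        explode("hello,world,hadoop", ",", row -> copied.add(new ArrayList<>(row)));
        System.out.println(copied);   // [[hello], [world], [hadoop]]

        // Unsafe consumer: keeps references, so every entry is the same final buffer.
        List<List<Object>> aliased = new ArrayList<>();
        explode("hello,world,hadoop", ",", aliased::add);
        System.out.println(aliased);  // [[hadoop], [hadoop], [hadoop]]
    }
}
```

Allocating a fresh list per word would sidestep the issue at the cost of one small allocation per output row.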

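3. Load it into Hive

Same as for the UDF above: add the jar, then create a temporary function bound to com.yingzi.hive1.MYUDTF.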