Introduction

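References: CDH6.3.2 Hive on Tez setup process (我不是橙子's blog, CSDN)

Building the Tez execution engine on CDH 6.3.2 (虎啸千峰, 博客园)

Complete guide to the pitfalls of Hive on Tez integration, including tez-ui and secured environments (匆匆z2's blog, CSDN)

If the downloads are slow, the steps in this post may help: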

https://blog.csdn.net/ly8951677/article/details/124151146

Environment:

Server: CentOS 7.9.2009

JDK: 1.8.0_181

CDH: 6.3.2

Tez: 0.9.2

Steps

1. Download

1.1 Download Tez

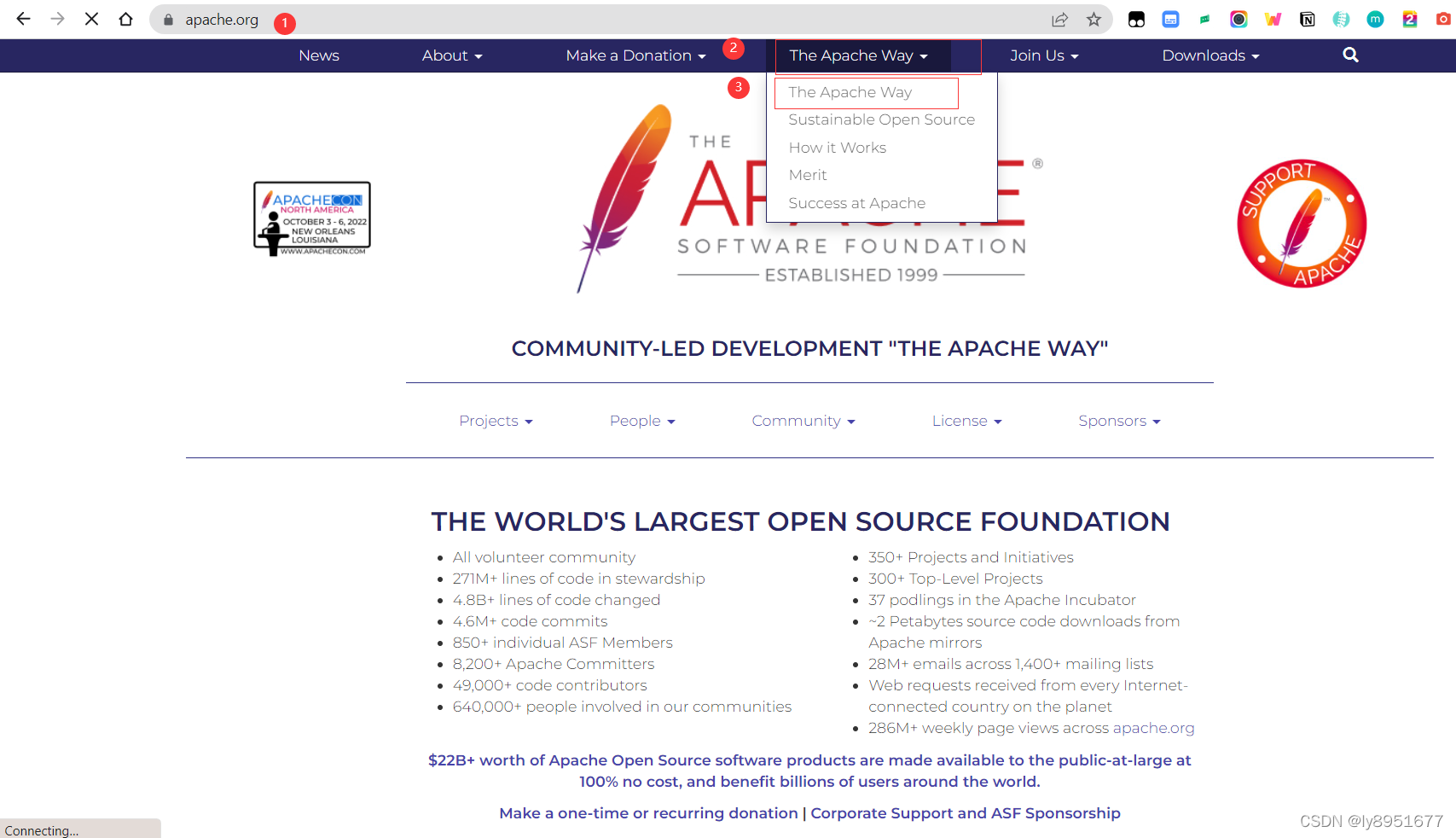

Official site: Apache Tez – Welcome to Apache TEZ®

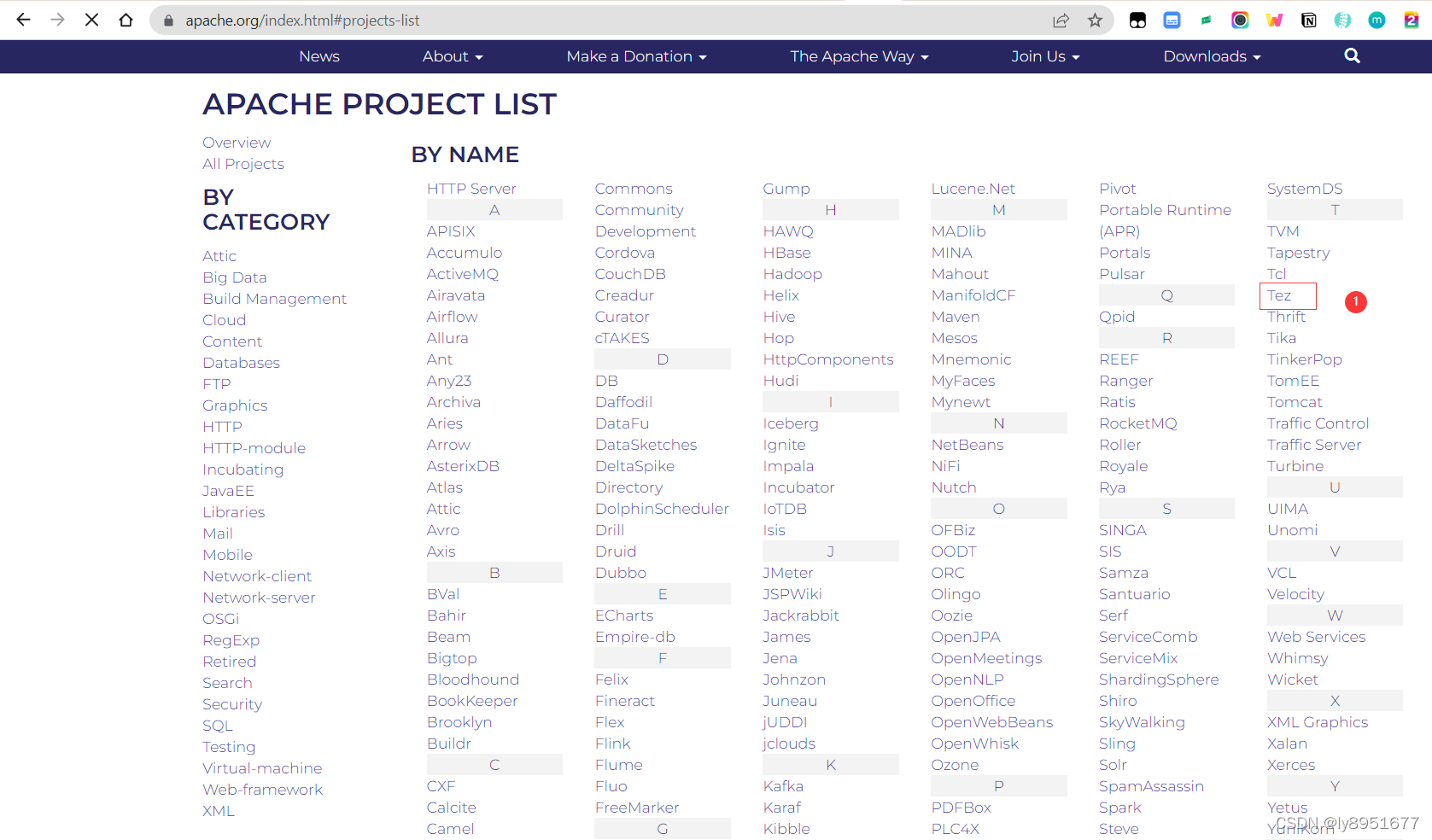

Browse to the release through the Apache download path: Welcome to The Apache Software Foundation!

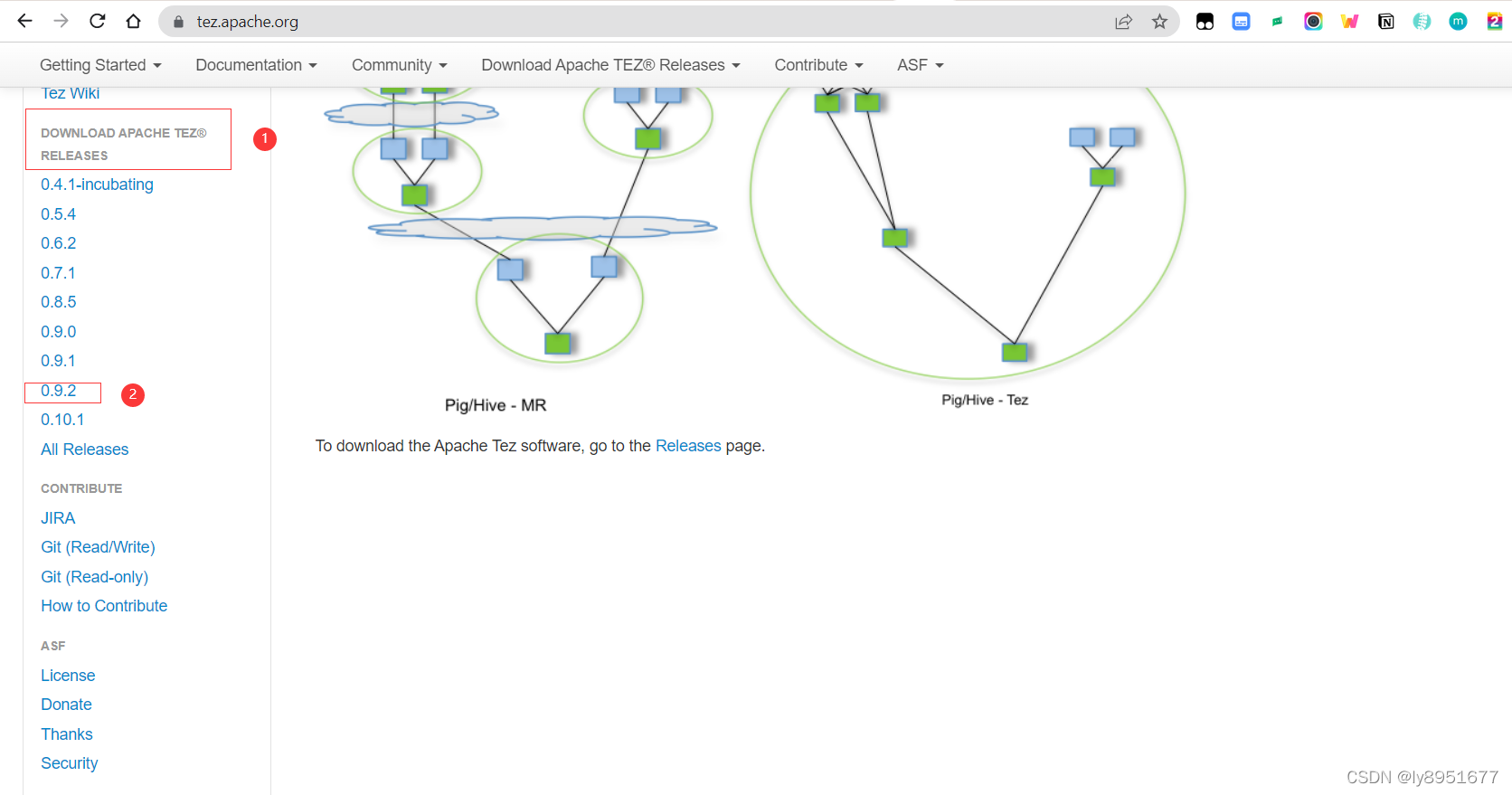

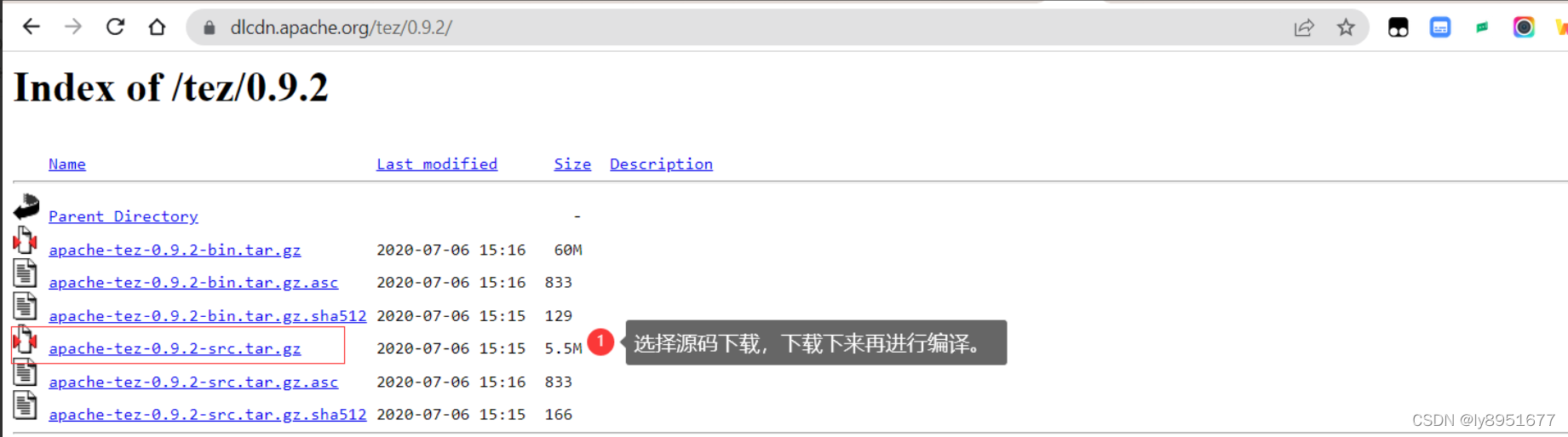

Choose the binary or the source release from Index of /tez/0.9.2: https://dlcdn.apache.org/tez/0.9.2/

Direct download of the source release: https://dlcdn.apache.org/tez/0.9.2/apache-tez-0.9.2-src.tar.gz

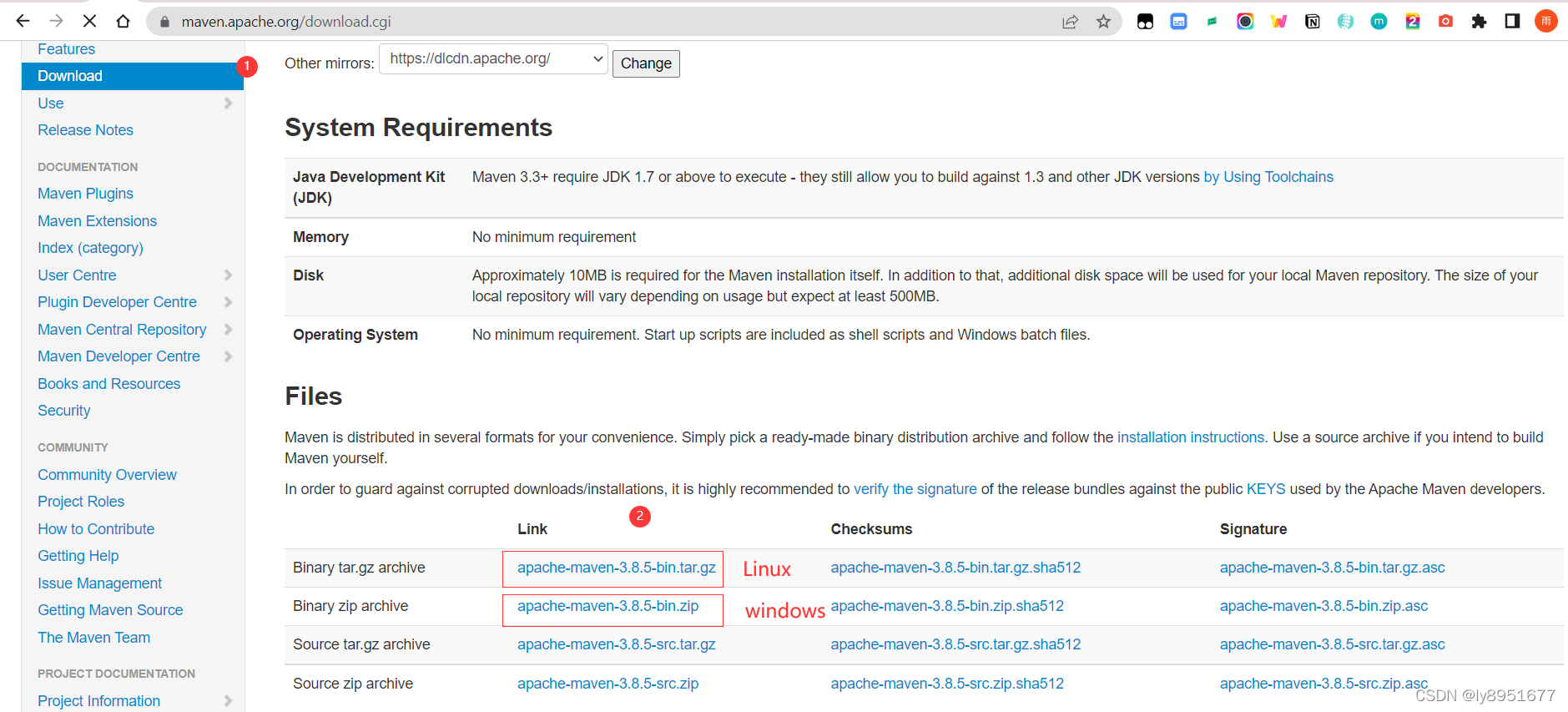
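Before building from a downloaded tarball it is worth checking that the file is intact. The following is a minimal sketch, not part of the original procedure: `verify_sha512` is a hypothetical helper name, and it assumes the expected digest has been copied by hand from a checksum file published next to the tarball.

```shell
# verify_sha512 FILE EXPECTED_HEX
# Succeeds only if the SHA-512 digest of FILE equals EXPECTED_HEX
# (the comparison ignores upper/lower case in EXPECTED_HEX).
verify_sha512() (
    file=$1
    expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    actual=$(sha512sum "$file" | awk '{print $1}')
    [ "$actual" = "$expected" ]
)

# Usage (the digest placeholder must be replaced with the published value):
#   verify_sha512 apache-tez-0.9.2-src.tar.gz "<digest>" && echo "checksum OK"
```

The function runs in a subshell, so its temporary variables do not leak into the calling shell.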

1.2 Download Maven

Official site: Maven – Welcome to Apache Maven

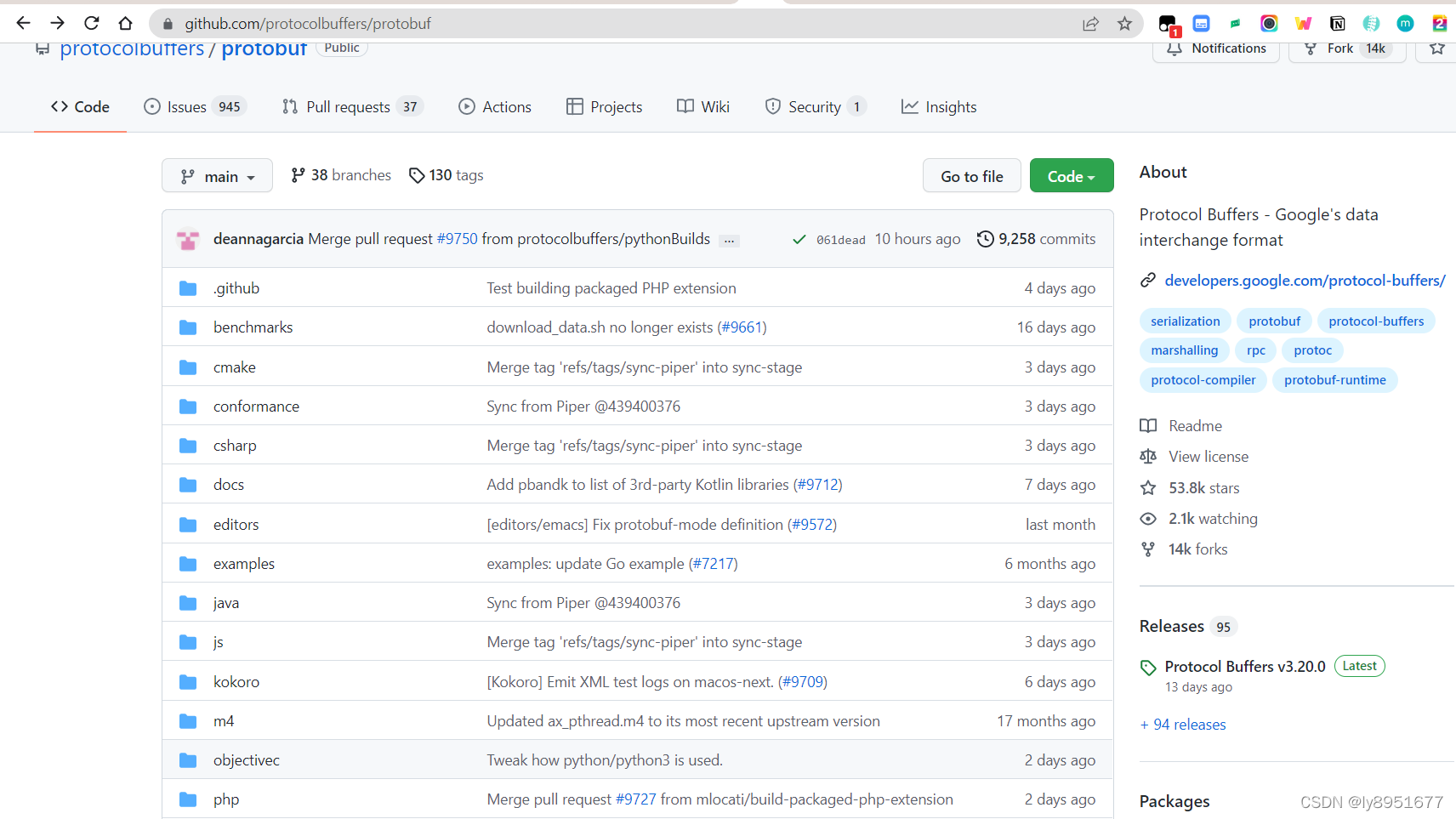

1.3 Download protobuf

Note: the Hadoop modules use protobuf during the source build, so protobuf-2.5.0 has to be downloaded.

Download page: Releases · protocolbuffers/protobuf · GitHub

2. Install

2.1 Install Maven

- Extract

      [root@node02 ~]# cd /data1/software/tez/
      [root@node02 tez]# tar -zxvf apache-maven-3.8.5-bin.tar.gz -C /data1/program/

- Configure /etc/profile

      [root@node02 tez]# vim /etc/profile
      # Append the following at the end of the file
      #maven
      export MAVEN_HOME=/data1/program/apache-maven-3.8.5
      export PATH=$PATH:$MAVEN_HOME/bin

- Apply the configuration and verify

      [root@node02 tez]# source /etc/profile
      [root@node02 tez]# mvn --version
      Apache Maven 3.8.5 (3599d3414f046de2324203b78ddcf9b5e4388aa0)
      Maven home: /data1/program/apache-maven-3.8.5
      Java version: 1.8.0_181, vendor: Oracle Corporation, runtime: /usr/java/jdk1.8.0_181-cloudera/jre
      Default locale: en_US, platform encoding: UTF-8
      OS name: "linux", version: "3.10.0-862.el7.x86_64", arch: "amd64", family: "unix"

  Note: if a newer Maven was installed alongside an older one, the current shell keeps reporting the old version. Log out and back in, and the new version is shown correctly.
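Editing /etc/profile by hand makes it easy to append the same export twice when the procedure is repeated. As a sketch under assumptions, the hypothetical helper `append_once` below adds a line only when it is not already present; it is demonstrated on a scratch file created with `mktemp`, not on the real /etc/profile.

```shell
# append_once FILE LINE
# Appends LINE to FILE unless an identical line is already present.
append_once() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Demonstration against a scratch file rather than the real /etc/profile.
profile=$(mktemp)
append_once "$profile" 'export MAVEN_HOME=/data1/program/apache-maven-3.8.5'
append_once "$profile" 'export PATH=$PATH:$MAVEN_HOME/bin'
# Repeating the first call changes nothing.
append_once "$profile" 'export MAVEN_HOME=/data1/program/apache-maven-3.8.5'
cat "$profile"
```

To use it for real, pass /etc/profile as the first argument (as root) and then run `source /etc/profile` as in the step above.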

2.2 Install protobuf (I use version 2.5.0 because that is what the earlier guides used)

- Extract

      [root@node02 tez]# tar -zxvf protobuf-2.5.0.tar.gz -C /data1/program/

- Configure and apply

      [root@node02 tez]# vim /etc/profile
      # Append the following at the end of the file
      #protobuf-2.5.0
      export POF_HOME=/data1/program/protobuf-2.5.0
      export PATH=$PATH:$POF_HOME/bin
      [root@node02 tez]# source /etc/profile

- Compile and install

      [root@node02 tez]# cd /data1/program/protobuf-2.5.0/
      [root@node02 protobuf-2.5.0]# ./configure
      [root@node02 protobuf-2.5.0]# make && make install

  Note: if the build fails because a C or C++ compiler is missing, install it with yum and retry.

- Verify

      [root@node02 protobuf-2.5.0]# protoc --version
      libprotoc 2.5.0

  Note: when configure fails its checks, install whichever packages it reports as missing; usually that is gcc.
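The notes above say to install whatever configure reports as missing. A quick pre-check can save one failed run. This is only a sketch: `missing_tools` is a hypothetical helper, and the list of tools checked (gcc, g++, make) is an assumption about what this build needs.

```shell
# missing_tools CMD...
# Prints the name of each CMD that is not found on PATH, one per line.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

missing=$(missing_tools gcc g++ make)
if [ -n "$missing" ]; then
    echo "Missing build tools:" $missing
    echo "On CentOS 7 they can usually be installed with: yum install -y gcc gcc-c++ make"
else
    echo "Build tools found."
fi
```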

2.3 tez编译0.9.2版本

-

解压

[root@node02 protobuf-2.5.0]# cd /data1/software/tez/ [root@node02 tez]# tar -zxvf apache-tez-0.9.2-src.tar.gz -

修改配置文件pom.xml

##进行第二步配置文件命令 [root@node02 tez]# cd /data1/software/tez/apache-tez-0.9.2-src [root@node02 apache-tez-0.9.2-src]# vim pom.xml <!--以下是修改不走 --> <!--第一处:这里才是修改,后续2,3,4都是增加--> <hadoop.version>3.0.0-cdh6.3.2</hadoop.version> <!--第二处:repositorys标签内最后增加--> <repository><id>cloudera</id><url><https://repository.cloudera.com/artifactory/cloudera-repos/></url><name>Cloudera Repositories</name><snapshots><enabled>false</enabled></snapshots> </repository> <!--第三处:pluginRepositories标签内最后增加--> <pluginRepository><id>cloudera</id><name>Cloudera Repositories</name><url><https://repository.cloudera.com/artifactory/cloudera-repos/></url> </pluginRepository> <!--第四处:dependencyManagement标签里的dependencies标签内最后一行。不过这里有个1.9版本,我暂时不理,有兴趣可以验证下需不需要加这个。成功可以留言告知下--> <dependency><groupId>com.sun.jersey</groupId><artifactId>jersey-client</artifactId><version>1.19</version> </dependency> <!--第五处:--> <!--<module>tez-ext-service-tests</module> <module>tez-ui</module>--> <!--注:将这两个注释掉,如果有需要可以不用注释,赶时间的建议最好注释,要不maven编译很耗时。--> -

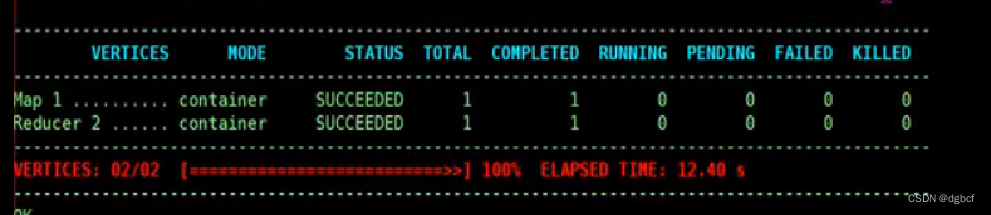

maven编译

[root@node02 apache-tez-0.9.2-src]# mvn clean package -Dmaven.javadoc.skip=true -Dmaven.test.skip=true ##注:这样会跳过test编译,很快就编译完成 ##经过一段时间等待,所有输出选项均为SUCCESS则表示为编译通过##Maven编译后的压缩包在项目的tez-dist/target目录下,没有编译成功是没有target这个目录的 ##查看是否成功 [root@node02 apache-tez-0.9.2-src]# cd /data1/software/tez/apache-tez-0.9.2-src/tez-dist/target [root@node02 apache-tez-0.9.2-src]# ll drwxr-xr-x 2 root root 6 Apr 8 19:41 archive-tmp drwxr-xr-x 3 root root 4096 Apr 8 19:41 tez-0.9.2 drwxr-xr-x 3 root root 4096 Apr 8 19:41 tez-0.9.2-minimal -rw-r--r-- 1 root root 13421050 Apr 8 19:41 tez-0.9.2-minimal.tar.gz -rw-r--r-- 1 root root 68025360 Apr 8 19:41 tez-0.9.2.tar.gz
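The check for a successful build (both tarballs present under tez-dist/target) can also be scripted. A minimal sketch, with `check_tez_dist` as a hypothetical helper name; it only tests that the two files exist and are non-empty, not that their contents are valid.

```shell
# check_tez_dist TARGET_DIR VERSION
# Succeeds only if both tarballs produced by the build exist and are non-empty.
check_tez_dist() {
    for f in "$1/tez-$2.tar.gz" "$1/tez-$2-minimal.tar.gz"; do
        if [ ! -s "$f" ]; then
            echo "missing or empty: $f" >&2
            return 1
        fi
    done
}

# Usage, with the paths from this post:
#   check_tez_dist /data1/software/tez/apache-tez-0.9.2-src/tez-dist/target 0.9.2 \
#       && echo "build artifacts present"
```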

3. Configuration

- Upload to HDFS

      [root@node02 target]# su hdfs
      bash-4.2$ hdfs dfs -mkdir /tez
      bash-4.2$ hdfs dfs -put tez-0.9.2.tar.gz /tez

- Configure the client. This is required on every machine in the cluster, so do not skip any node; once one node is done, the result can be copied to the others with scp. The client steps are the following four items.

- Create the tez directory under the CDH lib directory

      [root@node02 target]# mkdir -p /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/conf

- Create tez-site.xml

      [root@node02 target]# vim /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/conf/tez-site.xml

  The ${fs.defaultFS} prefix is mandatory, otherwise the archive is not found. The path is the archive uploaded to HDFS in the first step.

      <configuration>
        <property>
          <name>tez.lib.uris</name>
          <value>${fs.defaultFS}/tez/tez-0.9.2.tar.gz</value>
        </property>
        <property>
          <name>tez.use.cluster.hadoop-libs</name>
          <value>false</value>
        </property>
        <property>
          <name>tez.am.launch.env</name>
          <value>LD_LIBRARY_PATH=${PARCELS_ROOT}/CDH/lib/hadoop/lib/native</value>
        </property>
        <property>
          <name>tez.task.launch.env</name>
          <value>LD_LIBRARY_PATH=${PARCELS_ROOT}/CDH/lib/hadoop/lib/native</value>
        </property>
      </configuration>

- Copy the jars and the lib directory

      [root@node02 target]# cd tez-0.9.2-minimal
      [root@node02 tez-0.9.2-minimal]# cp ./*.jar /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/
      [root@node02 tez-0.9.2-minimal]# cp -r ./lib /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/

- Avoid the kryo error

      [root@node02 tez-0.9.2-minimal]# cd /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/auxlib
      [root@node02 auxlib]# mv hive-exec-2.1.1-cdh6.3.2-core.jar hive-exec-2.1.1-cdh6.3.2-core.jar.bak
      [root@node02 auxlib]# mv hive-exec-core.jar hive-exec-core.jar.bak

  Note: without this step queries fail with the exception described in 5.2, so it must be done.

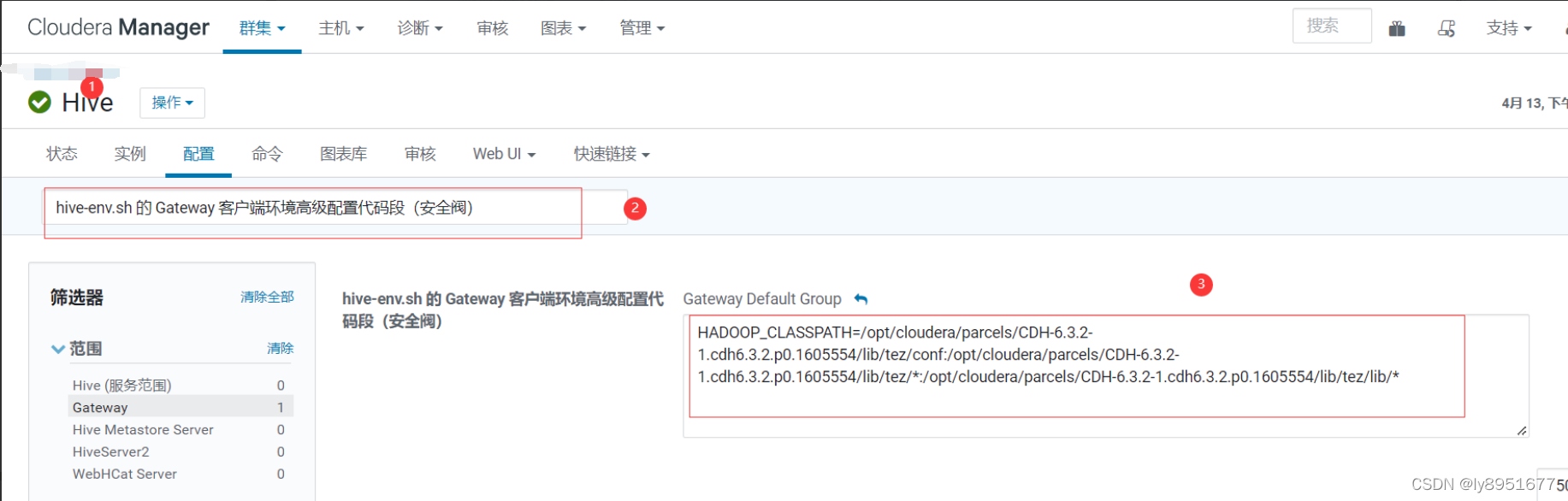

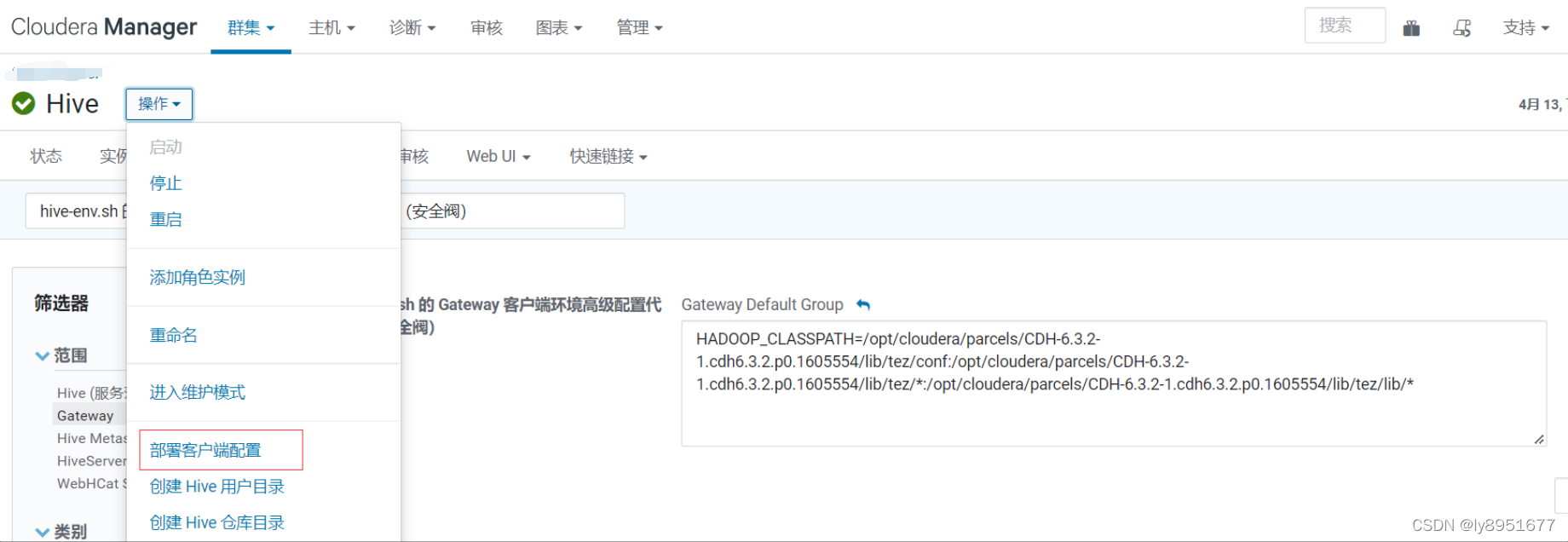
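Because the client setup has to exist on every node, the scp copy mentioned above can be wrapped in a small loop. This is a sketch under assumptions: it presumes SSH access as root to each host, `sync_tez_dir` is a hypothetical helper, and the host names passed to it are placeholders for your own node list. By default it only prints the commands (dry run).

```shell
# Parcel path used throughout this post; adjust it to your installation.
TEZ_DIR=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez

# sync_tez_dir HOST...
# Copies $TEZ_DIR into the same parent directory on each HOST.
# With DRY_RUN=1 (the default here) the scp commands are only printed.
sync_tez_dir() {
    for host in "$@"; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "scp -r $TEZ_DIR root@$host:$(dirname "$TEZ_DIR")/"
        else
            scp -r "$TEZ_DIR" "root@$host:$(dirname "$TEZ_DIR")/"
        fi
    done
}

# Placeholder host names; replace with the other nodes of your cluster.
sync_tez_dir node01 node03
```

Set `DRY_RUN=0` to perform the copy. The kryo rename in hive/auxlib is a separate step and still has to be carried out on each node.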

Configure HADOOP_CLASSPATH. Doing this in the CDH management console is enough: add the following to the Gateway Client Environment Advanced Configuration Snippet (Safety Valve) for hive-env.sh.

    HADOOP_CLASSPATH=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/conf:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/*:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/lib/*

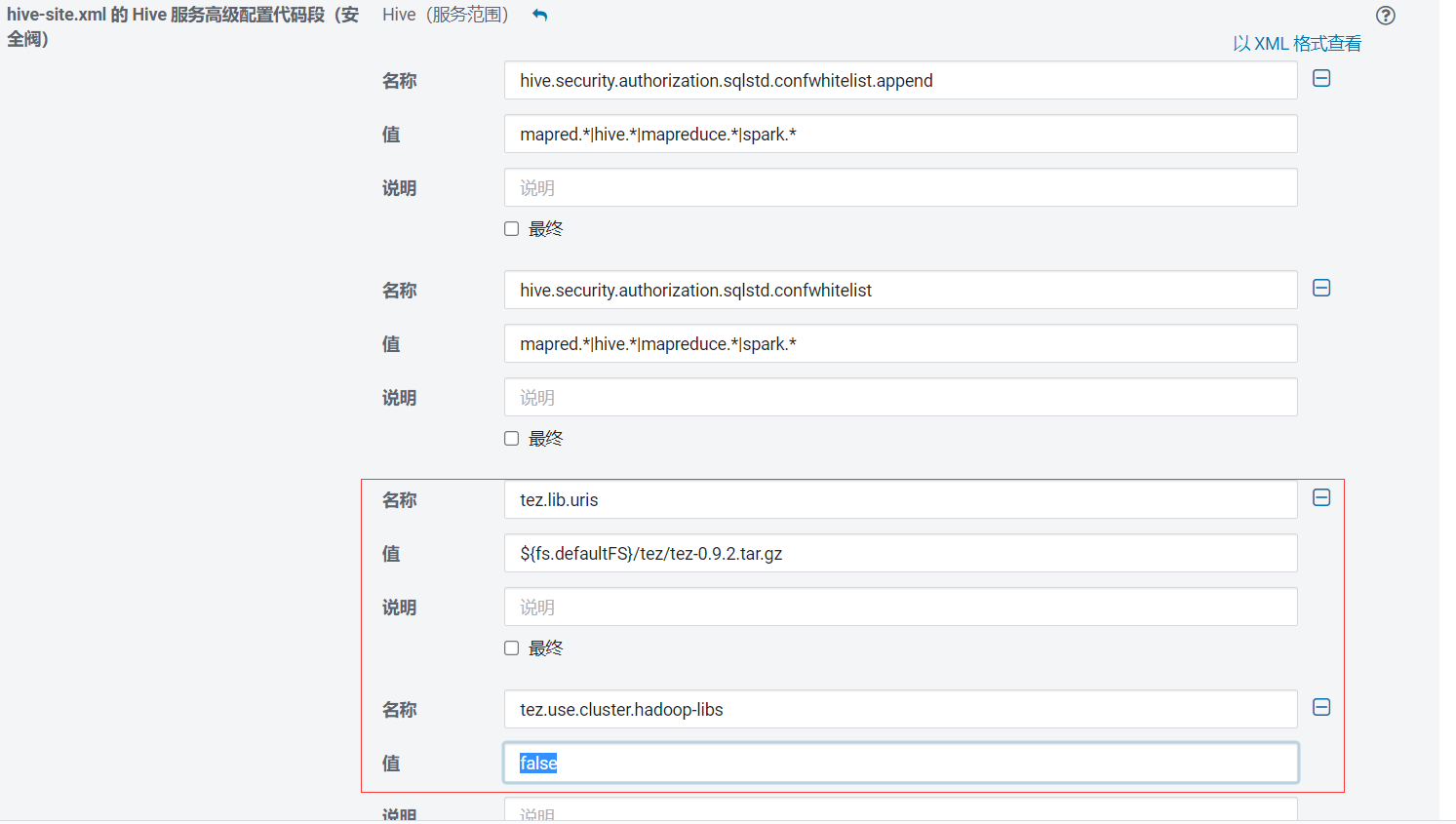
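The HADOOP_CLASSPATH value is long, but it is just three entries under one Tez directory: conf, the top-level jars, and lib. As an illustration only, the hypothetical helper `tez_classpath` below builds the same string from that directory, which makes it easier to adapt when the parcel version in the path differs.

```shell
# tez_classpath TEZ_HOME
# Prints the three classpath entries for a Tez client directory, joined by ':'.
# The '*' characters are literal; Java expands them, not the shell.
tez_classpath() {
    printf '%s\n' "$1/conf:$1/*:$1/lib/*"
}

tez_classpath /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez
```

The printed string is what goes after `HADOOP_CLASSPATH=` in the safety valve.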

(Optional; skip this step if you do not need it.) If JDBC clients cannot connect, add the following to the Hive Service Advanced Configuration Snippet (Safety Valve) for hive-site.xml in the Hive configuration:

    <property>
      <name>tez.lib.uris</name>
      <value>${fs.defaultFS}/tez/tez-0.9.2.tar.gz</value>
    </property>
    <property>
      <name>tez.use.cluster.hadoop-libs</name>
      <value>false</value>
    </property>

- If exceptions are still reported, copy the Tez jars prepared earlier into /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/lib/. These are the jars I copied:

      -rw-r--r-- 1 root root   58160 May 11 17:07 commons-codec-1.4.jar
      -rw-r--r-- 1 root root   41123 May 11 17:07 commons-cli-1.2.jar
      -rw-r--r-- 1 root root  535731 May 11 17:07 async-http-client-1.8.16.jar
      -rw-r--r-- 1 root root  588337 May 11 17:07 commons-collections-3.2.2.jar
      -rw-r--r-- 1 root root  751238 May 11 17:07 commons-collections4-4.1.jar
      -rw-r--r-- 1 root root 1599627 May 11 17:07 commons-math3-3.1.1.jar
      -rw-r--r-- 1 root root 1648200 May 11 17:07 guava-11.0.2.jar
      -rw-r--r-- 1 root root  770504 May 11 17:07 hadoop-mapreduce-client-common-3.0.0-cdh6.3.2.jar
      -rw-r--r-- 1 root root 1644597 May 11 17:07 hadoop-mapreduce-client-core-3.0.0-cdh6.3.2.jar
      -rw-r--r-- 1 root root   81743 May 11 17:07 jettison-1.3.4.jar
      -rw-r--r-- 1 root root  147952 May 11 17:07 jersey-json-1.9.jar
      -rw-r--r-- 1 root root    5450 May 11 17:07 hadoop-shim-2.7-0.9.2.jar
      -rw-r--r-- 1 root root    8803 May 11 17:07 hadoop-shim-0.9.2.jar
      -rw-r--r-- 1 root root  458642 May 11 17:07 jetty-util-9.3.24.v20180605.jar
      -rw-r--r-- 1 root root  520813 May 11 17:07 jetty-server-9.3.24.v20180605.jar
      -rw-r--r-- 1 root root    8866 May 11 17:07 slf4j-log4j12-1.7.10.jar
      -rw-r--r-- 1 root root   32119 May 11 17:07 slf4j-api-1.7.10.jar
      -rw-r--r-- 1 root root  105112 May 11 17:07 servlet-api-2.5.jar
      -rw-r--r-- 1 root root  206280 May 11 17:07 RoaringBitmap-0.5.21.jar
      -rw-r--r-- 1 root root    5362 May 11 17:07 tez-build-tools-0.9.2.jar
      -rw-r--r-- 1 root root 1032647 May 11 17:07 tez-api-0.9.2.jar
      -rw-r--r-- 1 root root   85864 May 11 17:07 tez-common-0.9.2.jar
      -rw-r--r-- 1 root root   56795 May 11 17:07 tez-examples-0.9.2.jar
      -rw-r--r-- 1 root root 1412824 May 11 17:07 tez-dag-0.9.2.jar
      -rw-r--r-- 1 root root   76042 May 11 17:07 tez-protobuf-history-plugin-0.9.2.jar
      -rw-r--r-- 1 root root  296849 May 11 17:07 tez-mapreduce-0.9.2.jar
      -rw-r--r-- 1 root root   78934 May 11 17:07 tez-job-analyzer-0.9.2.jar
      -rw-r--r-- 1 root root   15265 May 11 17:07 tez-javadoc-tools-0.9.2.jar
      -rw-r--r-- 1 root root   79097 May 11 17:07 tez-history-parser-0.9.2.jar
      -rw-r--r-- 1 root root  198892 May 11 17:07 tez-runtime-internals-0.9.2.jar
      -rw-r--r-- 1 root root    7741 May 11 17:07 tez-yarn-timeline-history-with-acls-0.9.2.jar
      -rw-r--r-- 1 root root   28162 May 11 17:07 tez-yarn-timeline-history-0.9.2.jar
      -rw-r--r-- 1 root root  158966 May 11 17:07 tez-tests-0.9.2.jar
      -rw-r--r-- 1 root root  768820 May 11 17:07 tez-runtime-library-0.9.2.jar

JDBC code example:

    package com.test.controller;

    import java.sql.*;
    import java.util.Properties;

    /**
     * @Author xxxx
     * @Date 2022-04-27 14:46
     * @Version 1.0
     */
    public class TEZTestTwo {
        public static void main(String[] args) throws SQLException {
            String driverName = "org.apache.hive.jdbc.HiveDriver";
            try {
                Class.forName(driverName);
            } catch (ClassNotFoundException e) {
                e.printStackTrace();
                System.exit(1);
            }
            // JDBC URL format for HiveServer:
            // Connection con = DriverManager.getConnection("jdbc:hive://ip:10000/default", "", "");
            // JDBC URL format for HiveServer2:
            // Connection con = DriverManager.getConnection("jdbc:hive2://ip:10000/default", "root", "");
            Properties properties = new Properties();
            properties.setProperty("user", "root");
            properties.setProperty("password", "");
            // The block below overlaps with the "set ..." statements further down; either approach works.
            /*
            properties.setProperty("hiveconf:hive.execution.engine", "tez");
            properties.setProperty("hiveconf:tez.job.name", "lytez");
            // Set the queue
            properties.setProperty("hiveconf:tez.queue.name", "root.default");
            // Not always required; set it only if the query fails without it
            properties.setProperty("hiveconf:hive.tez.container.size", "1024");
            */
            Connection con = DriverManager.getConnection("jdbc:hive2://ip:10000/default", properties);
            Statement stmt = con.createStatement();
            // Parameter-setting test
            boolean resHivePropertyTest = stmt.execute("set hive.execution.engine=tez");
            // Name displayed in YARN. For this to take effect the source code has to be modified and
            // repackaged, replacing hive-exec-2.1.1-cdh6.3.2.jar under /opt/cloudera/parcels/CDH/jars/
            boolean resHivePropertyTestName = stmt.execute("set tez.job.name=test");
            // Not always required; set it only if the query fails without it
            boolean resHivePropertyTestContainer = stmt.execute("set hive.tez.container.size=1024");
            // CDH dynamic resource pool
            boolean setHivePropertyQUEUE = stmt.execute("set tez.queue.name=root.default");
            System.out.println("resHivePropertyTestContainer=" + resHivePropertyTestContainer);
            System.out.println("resHivePropertyTest=" + resHivePropertyTest);
            String tableName = "test";
            ResultSet res = stmt.executeQuery("select count(1) AS a from " + tableName);
            while (res.next()) {
                System.out.println(res.getString("a"));
            }
            /*
            tableName = "area_type";
            res = stmt.executeQuery("select count(1) AS a from " + tableName);
            while (res.next()) {
                System.out.println(res.getString("a"));
            }
            */
            // Release the JDBC resources
            res.close();
            stmt.close();
            con.close();
        }
    }

4. Start

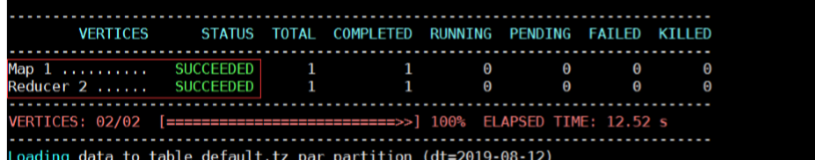

    hive> set hive.execution.engine=tez;
    hive> set hive.tez.container.size=1024;

The first query is slow because the Tez jars have to be loaded, so be patient. Example:

    hive> select count(1) from cwqejg_test2 ;
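The same check can be run non-interactively, which is handy after changing the configuration on several nodes. The sketch below assumes the `hive` CLI is on PATH and uses its `-e` option to run a quoted script; `tez_smoke_sql` is a hypothetical helper, and the table name is the one from this post. When no `hive` command is found, it only prints the statements.

```shell
# tez_smoke_sql TABLE
# Prints the statements from the interactive session as one script for TABLE.
tez_smoke_sql() {
    printf 'set hive.execution.engine=tez;\nset hive.tez.container.size=1024;\nselect count(1) from %s;\n' "$1"
}

if command -v hive >/dev/null 2>&1; then
    hive -e "$(tez_smoke_sql cwqejg_test2)"
else
    # No hive CLI on this machine: just show what would be run.
    tez_smoke_sql cwqejg_test2
fi
```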

5. Exceptions

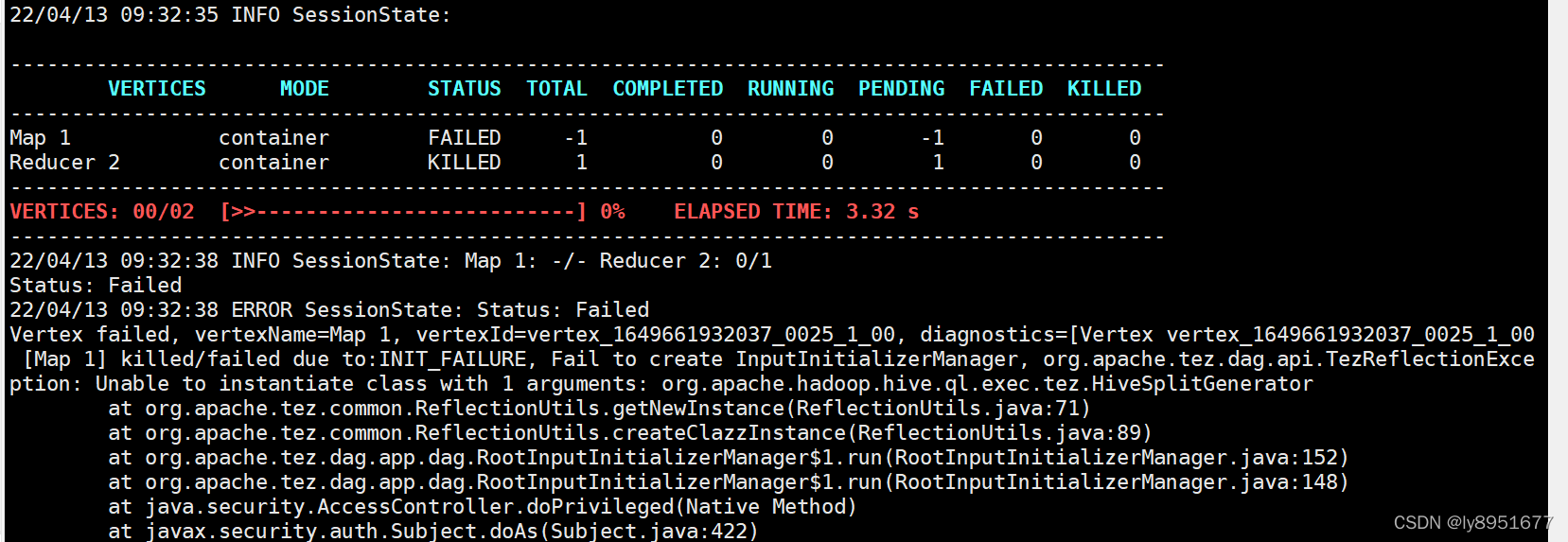

5.1 HiveSplitGenerator exception

- Exception message

      org.apache.tez.dag.api.TezReflectionException: Unable to instantiate class with 1 arguments: org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator

- Solution

      hive> set hive.tez.container.size=1024;

5.2 kryo version mismatch exception

- Exception message

      FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.tez.TezTask. Vertex failed, vertexName=Map 1, vertexId=vertex_1649661932037_0034_3_00, diagnostics=[Vertex vertex_1649661932037_0034_3_00 [Map 1] killed/failed due to:INIT_FAILURE, Fail to create InputInitializerManager, org.apache.tez.dag.api.TezReflectionException: Unable to instantiate class with 1 arguments: org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator
          at org.apache.tez.common.ReflectionUtils.getNewInstance(ReflectionUtils.java:71)
          at org.apache.tez.common.ReflectionUtils.createClazzInstance(ReflectionUtils.java:89)
          at org.apache.tez.dag.app.dag.RootInputInitializerManager$1.run(RootInputInitializerManager.java:152)
          at org.apache.tez.dag.app.dag.RootInputInitializerManager$1.run(RootInputInitializerManager.java:148)
          at java.security.AccessController.doPrivileged(Native Method)
          at javax.security.auth.Subject.doAs(Subject.java:422)
          at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)
          at org.apache.tez.dag.app.dag.RootInputInitializerManager.createInitializer(RootInputInitializerManager.java:148)
          at org.apache.tez.dag.app.dag.RootInputInitializerManager.runInputInitializers(RootInputInitializerManager.java:121)
          at org.apache.tez.dag.app.dag.impl.VertexImpl.setupInputInitializerManager(VertexImpl.java:4120)
          at org.apache.tez.dag.app.dag.impl.VertexImpl.access$3100(VertexImpl.java:206)
          at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.handleInitEvent(VertexImpl.java:2931)
          at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.transition(VertexImpl.java:2878)
          at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.transition(VertexImpl.java:2860)
          at org.apache.hadoop.yarn.state.StateMachineFactory$MultipleInternalArc.doTransition(StateMachineFactory.java:385)
          at org.apache.hadoop.yarn.state.StateMachineFactory.doTransition(StateMachineFactory.java:302)
          at org.apache.hadoop.yarn.state.StateMachineFactory.access$500(StateMachineFactory.java:46)
          at org.apache.hadoop.yarn.state.StateMachineFactory$InternalStateMachine.doTransition(StateMachineFactory.java:487)
          at org.apache.tez.state.StateMachineTez.doTransition(StateMachineTez.java:59)
          at org.apache.tez.dag.app.dag.impl.VertexImpl.handle(VertexImpl.java:1956)
          at org.apache.tez.dag.app.dag.impl.VertexImpl.handle(VertexImpl.java:205)
          at org.apache.tez.dag.app.DAGAppMaster$VertexEventDispatcher.handle(DAGAppMaster.java:2317)
          at org.apache.tez.dag.app.DAGAppMaster$VertexEventDispatcher.handle(DAGAppMaster.java:2303)
          at org.apache.tez.common.AsyncDispatcher.dispatch(AsyncDispatcher.java:180)
          at org.apache.tez.common.AsyncDispatcher$1.run(AsyncDispatcher.java:115)
          at java.lang.Thread.run(Thread.java:748)
      Caused by: java.lang.reflect.InvocationTargetException
          at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
          at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
          at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
          at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
          at org.apache.tez.common.ReflectionUtils.getNewInstance(ReflectionUtils.java:68)
          ... 25 more
      Caused by: java.lang.NoClassDefFoundError: com/esotericsoftware/kryo/Serializer
          at org.apache.hadoop.hive.ql.exec.Utilities.getBaseWork(Utilities.java:404)
          at org.apache.hadoop.hive.ql.exec.Utilities.getMapWork(Utilities.java:317)
          at org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator.<init>(HiveSplitGenerator.java:131)
          ... 30 more
      Caused by: java.lang.ClassNotFoundException: com.esotericsoftware.kryo.Serializer
          at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
          at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
          at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)
          at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
          ... 33 more

- Solution

      [root@node01 ~]# cd /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/auxlib
      [root@node01 auxlib]# mv hive-exec-2.1.1-cdh6.3.2-core.jar hive-exec-2.1.1-cdh6.3.2-core.jar.bak
      [root@node01 auxlib]# mv hive-exec-core.jar hive-exec-core.jar.bak
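Since this rename has to be repeated on every node, a version that can be re-run safely may help. A minimal sketch: `backup_jars` is a hypothetical helper, and the symlink check is there in case one of the two jars is a link to the other (an assumption, not something verified here), because a link whose target was just renamed would otherwise look like a missing file.

```shell
# backup_jars DIR JAR...
# Renames each JAR in DIR to JAR.bak. Jars that are absent (for example
# because they were already renamed) are skipped, so re-running is harmless.
backup_jars() {
    dir=$1
    shift
    for jar in "$@"; do
        # -L also catches a symlink whose target no longer exists.
        if [ -e "$dir/$jar" ] || [ -L "$dir/$jar" ]; then
            mv "$dir/$jar" "$dir/$jar.bak"
            echo "renamed $jar"
        else
            echo "skipped $jar (not found)"
        fi
    done
}

# Usage, with the directory and jar names from this post:
#   backup_jars /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/auxlib \
#       hive-exec-2.1.1-cdh6.3.2-core.jar hive-exec-core.jar
```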

5.2.1 Exception after the kryo fix

- Exception message

      22/04/13 10:22:22 ERROR exec.Task: Failed to execute tez graph.
      java.io.FileNotFoundException: File file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/auxlib/hive-exec-2.1.1-cdh6.3.2-core.jar does not exist
          at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:641)
          at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:867)
          at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:631)
          at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:442)
          at org.apache.hadoop.hive.ql.exec.tez.DagUtils.checkPreExisting(DagUtils.java:974)
          at org.apache.hadoop.hive.ql.exec.tez.DagUtils.localizeResource(DagUtils.java:991)
          at org.apache.hadoop.hive.ql.exec.tez.DagUtils.addTempResources(DagUtils.java:902)
          at org.apache.hadoop.hive.ql.exec.tez.DagUtils.localizeTempFilesFromConf(DagUtils.java:845)
          at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.refreshLocalResourcesFromConf(TezSessionState.java:466)
          at org.apache.hadoop.hive.ql.exec.tez.TezTask.updateSession(TezTask.java:296)
          at org.apache.hadoop.hive.ql.exec.tez.TezTask.execute(TezTask.java:157)
          at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199)
          at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97)
          at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200)
          at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843)
          at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563)
          at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339)
          at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1328)
          at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:239)
          at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:187)
          at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:409)
          at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:836)
          at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:772)
          at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:699)
          at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
          at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
          at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
          at java.lang.reflect.Method.invoke(Method.java:498)
          at org.apache.hadoop.util.RunJar.run(RunJar.java:313)
          at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

- Solution

  Exit the Hive client and start it again. The session that was already open still references the jar that has just been renamed.