文章目录

- 杂谈

- 实现步骤

- 核心算法

- 交互界面

- 界面代码

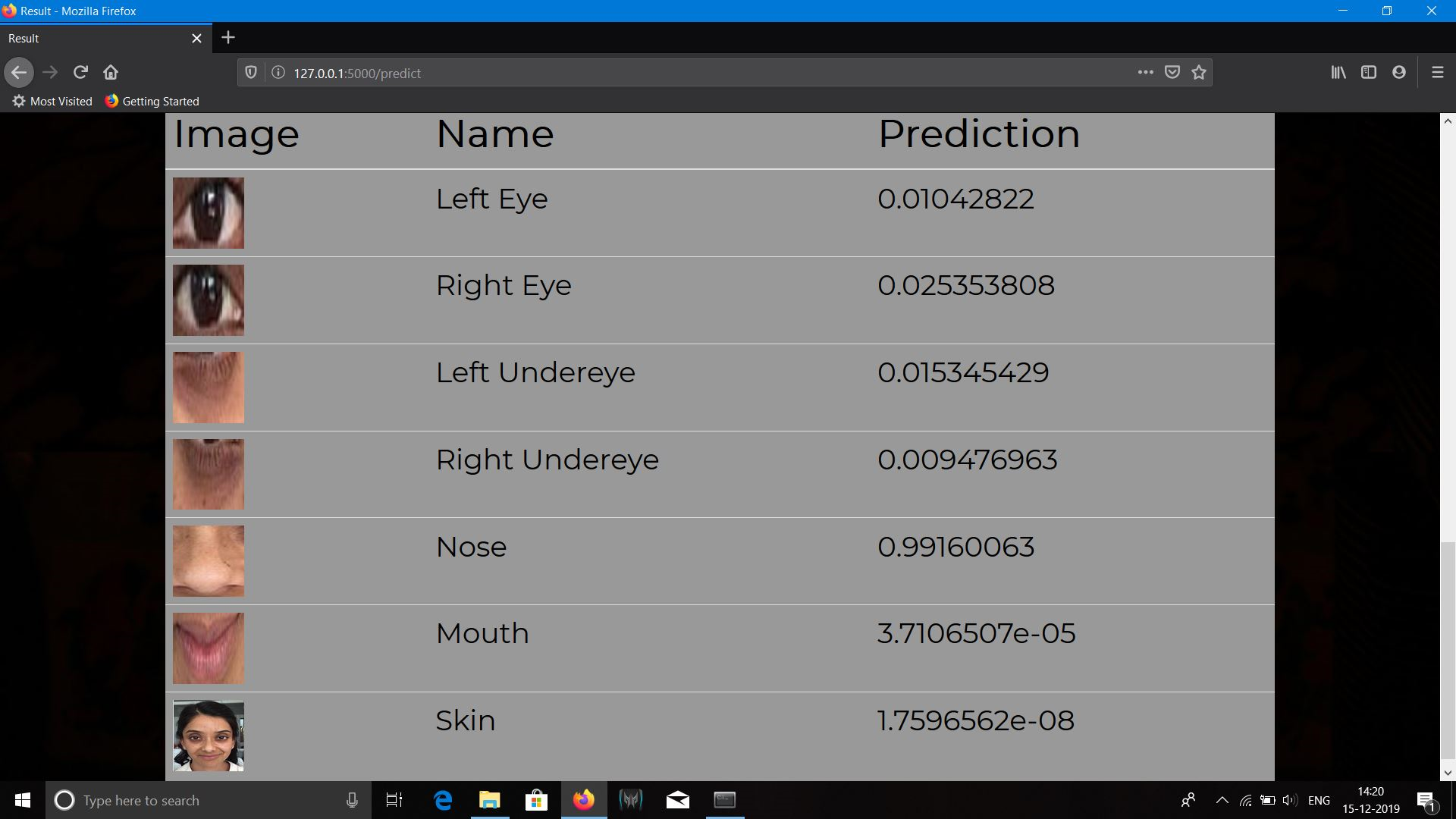

- 检测效果

- 源代码

杂谈

最近发现视力下降严重, 可能跟我的过度用眼有关,于是想着能不能做一个检测用眼疲劳的,灵感来自特斯拉的疲劳检测系统。

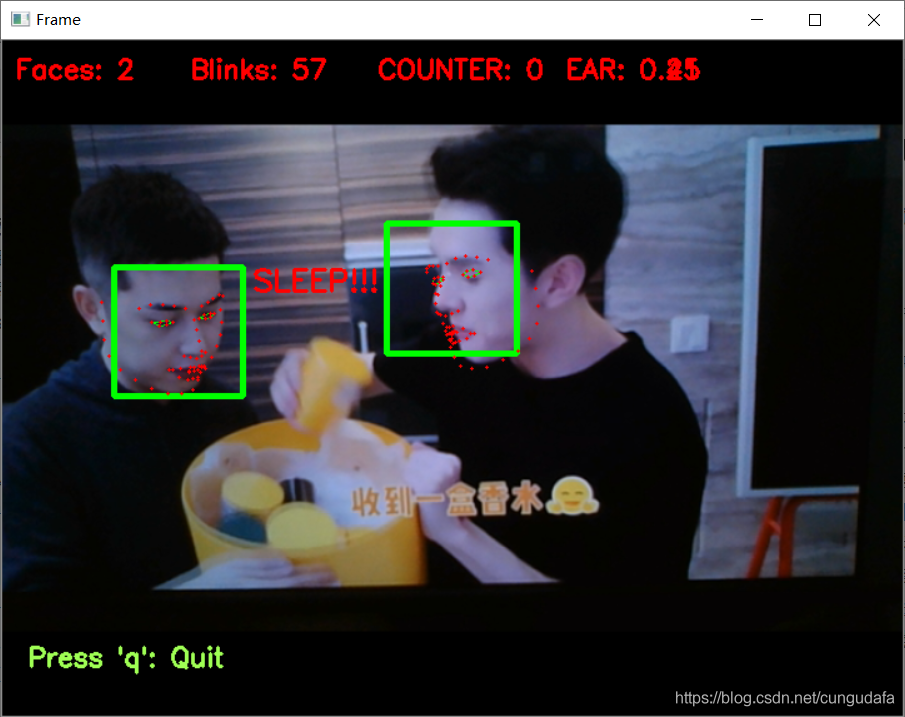

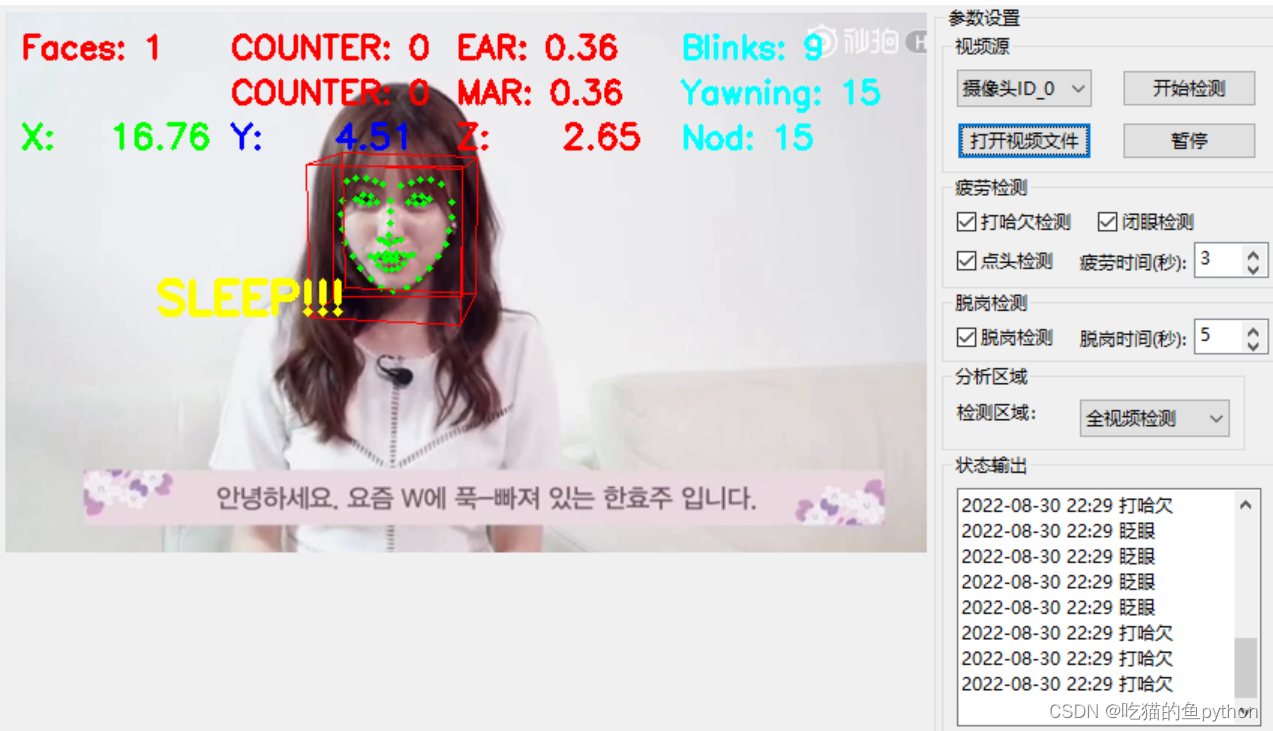

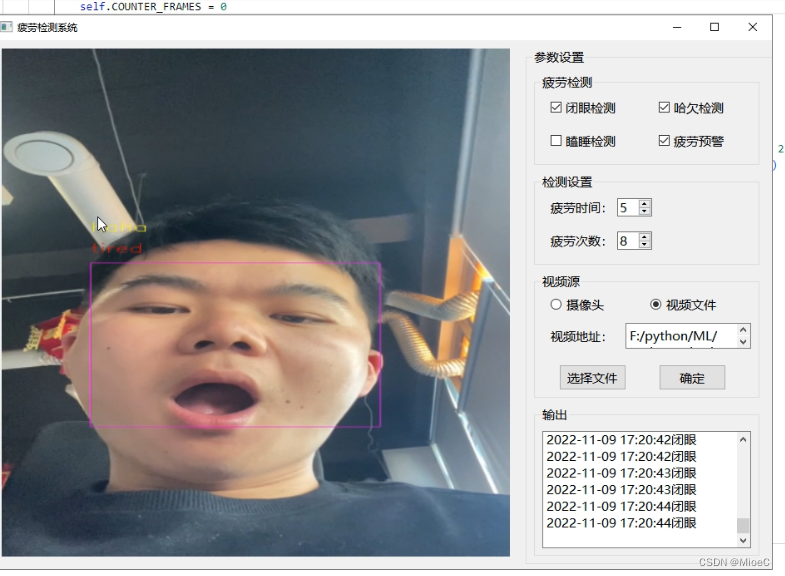

效果如下:

实现步骤

- 实现核心算法

- 制作交互界面

- 设计交互逻辑

核心算法

疲劳检测算法讲解:

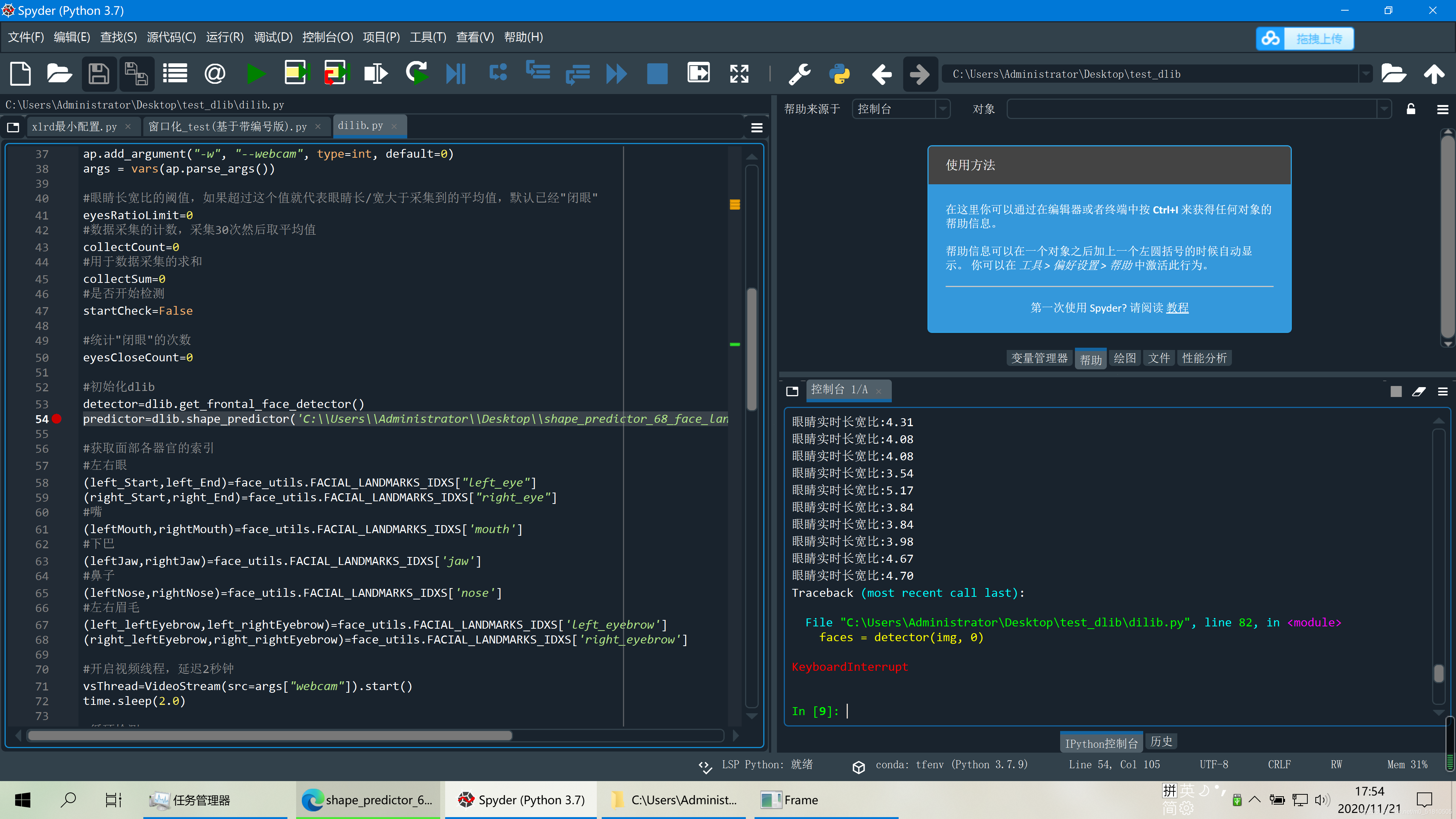

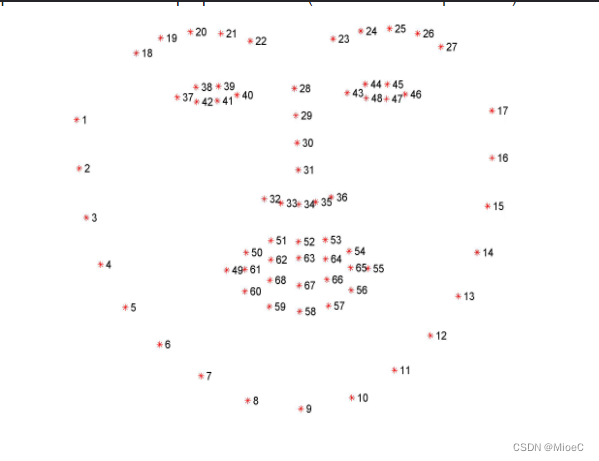

利用dlib 人脸检测算法来捕获人脸的关键点数(68个关键点)

参考文章:https://blog.csdn.net/monk96/article/details/127751414?spm=1001.2014.3001.5502

获取眼睛和嘴巴的点位置

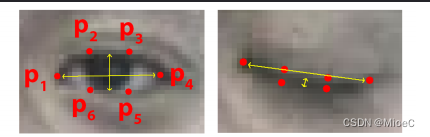

眼睛疲劳计算公式

利用欧拉距离计算

dist = (||P2 - P6|| + ||P3 - P5||)/ 2 * ||P1 - P4||

对应就是上下距离 与左右的比值, 然后我们设定一个阈值,比如说0.3, 另外设置帧数,如3帧,超过3帧则 检测为闭眼, 在闭眼总数上加1,如果闭眼次数超过设定的阈值(6次),判断为疲劳状态。

哈欠疲劳计算公式

哈欠用于眼睛相同的计算方式来计算打哈欠, 同样设置阈值和帧数, 不同点是在于哈欠是设定为0.8。

tips: 这里需要设置多少时间内没闭眼,这去除计数器,不然长时间的检测,肯定会超过阈值

这里需要拿到眼睛的位置进行计算,引入欧拉距离工具

from scipy.spatial import distance as dist

from collections import OrderedDict

设定点位(固定的)

self.LANDMARKS = OrderedDict([("mouth", (48, 68)),("right_eyebrow", (17, 22)),("left_eyebrow", (22, 27)),("right_eye", (36, 42)),("left_eye", (42, 48)),("nose", (27, 36)),("jaw", (0, 17))])

self.EYE_THRESH = 0.3self.EYE_FRAMES = 3self.COUNTER_FRAMES = 0self.TOTAL = 0self.MOUSE_UP_FRAMES = 5self.MOUSE_COUNTER_FRAMES = 0self.MOUSE_RATE = 0.8(self.lStart, self.lEnd) = self.LANDMARKS['left_eye'](self.rStart, self.rEnd) = self.LANDMARKS['right_eye'](self.mStart, self.mEnd) = self.LANDMARKS['mouth']

计算大小 这里计算欧拉距离,然后再把距离进行平均,减少误差

def eye_aspect_ratio(self, eye):A = dist.euclidean(eye[1], eye[5])B = dist.euclidean(eye[2], eye[4])C = dist.euclidean(eye[0], eye[3])return (A + B) / (2 * C)

def cal_height(self, points):leftEye = points[self.lStart: self.lEnd]rightEye = points[self.rStart: self.rEnd]leftEAR = self.eye_aspect_ratio(leftEye)rightEAR = self.eye_aspect_ratio(rightEye)return (leftEAR + rightEAR)/2

设定检测的方法:这里用来每一帧检测图片,并返回信息给到交互界面

def skim_video(self, img, ha, eye, warn):img_gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)# 人脸数rectsrects = self.detector(img_gray, 0)close_eye = Falsefor i in range(len(rects)):faces = self.predictor(img, rects[i]).parts()points = np.matrix([[p.x, p.y] for p in faces])rate = self.cal_height(points) # 闭眼rate_mouse = self.cal_mouse_height(points) # 哈欠if rate_mouse > self.MOUSE_RATE and ha:self.MOUSE_COUNTER_FRAMES += 1if self.MOUSE_COUNTER_FRAMES >= self.MOUSE_UP_FRAMES:print('打哈欠')cv2.putText(img, "haha", (rects[i].left(), rects[i].top() - 60), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 255)) if rate < self.EYE_THRESH and eye:self.COUNTER_FRAMES += 1# print('闭眼检测到了,第%s次'%COUNTER_FRAMES)if self.COUNTER_FRAMES >= 5:self.TOTAL += 1self.COUNTER_FRAMES = 0close_eye = Trueelse:self.COUNTER_FRAMES = 0# for idx, point in enumerate(points):# pos = (point[0, 0], point[0, 1])# cv2.circle(img, pos, 2, (0, 0, 255), 1)# cv2.putText(img, str(idx + 1), pos, cv2.FONT_HERSHEY_SIMPLEX, 0.3, (0, 255, 255)) if self.TOTAL >= 10 and warn:cv2.rectangle(img, (rects[i].left(), rects[i].top()), (rects[i].right(), rects[i].bottom()), color= (255, 0, 255))cv2.putText(img, "tired", (rects[i].left(), rects[i].top() - 20), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 0, 255)) print('warning, 您已疲劳,请尽快休息') return "%s闭眼"%time.strftime("%Y-%m-%d %H:%M:%S", time.localtime()) if close_eye else "", img完整的数据处理类

import numpy as np

import dlib

import cv2

import sys

import time

sys.path.append("..")

from scipy.spatial import distance as dist

from collections import OrderedDictclass Recognize():def __init__(self):self.init_data()self.init_model()def init_data(self):self.LANDMARKS = OrderedDict([("mouth", (48, 68)),("right_eyebrow", (17, 22)),("left_eyebrow", (22, 27)),("right_eye", (36, 42)),("left_eye", (42, 48)),("nose", (27, 36)),("jaw", (0, 17))])self.EYE_THRESH = 0.3self.EYE_FRAMES = 3self.COUNTER_FRAMES = 0self.TOTAL = 0self.MOUSE_UP_FRAMES = 5self.MOUSE_COUNTER_FRAMES = 0self.MOUSE_RATE = 0.8(self.lStart, self.lEnd) = self.LANDMARKS['left_eye'](self.rStart, self.rEnd) = self.LANDMARKS['right_eye'](self.mStart, self.mEnd) = self.LANDMARKS['mouth']def cal_height(self, points):leftEye = points[self.lStart: self.lEnd]rightEye = points[self.rStart: self.rEnd]leftEAR = self.eye_aspect_ratio(leftEye)rightEAR = self.eye_aspect_ratio(rightEye)return (leftEAR + rightEAR)/2def cal_mouse_height(self, points):mouse = points[self.mStart: self.mEnd]mouse_rate = self.mouse_aspect_ratio(mouse)return mouse_ratedef mouse_aspect_ratio(self, mouse):A = dist.euclidean(mouse[2],mouse[9])B = dist.euclidean(mouse[4],mouse[7])C = dist.euclidean(mouse[0],mouse[6])return (A+ B) / (2 * C)def eye_aspect_ratio(self, eye):A = dist.euclidean(eye[1], eye[5])B = dist.euclidean(eye[2], eye[4])C = dist.euclidean(eye[0], eye[3])return (A + B) / (2 * C)def init_model(self):self.detector = dlib.get_frontal_face_detector()self.predictor = dlib.shape_predictor('F:/python/ML/11-learn/tired/model_data/shape_predictor_68_face_landmarks.dat')def init_video_capture(self, method):if method == 0:self.capture = cv2.VideoCapture(0)else:self.capture = cv2.VideoCapture(method)def skim_video(self, img, ha, eye, warn):img_gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)# 人脸数rectsrects = self.detector(img_gray, 0)close_eye = Falsefor i in range(len(rects)):faces = self.predictor(img, rects[i]).parts()points = np.matrix([[p.x, p.y] for p in faces])rate = self.cal_height(points) # 闭眼rate_mouse = self.cal_mouse_height(points) # 哈欠if rate_mouse > self.MOUSE_RATE and ha:self.MOUSE_COUNTER_FRAMES += 1if self.MOUSE_COUNTER_FRAMES >= self.MOUSE_UP_FRAMES:print('打哈欠')cv2.putText(img, "haha", (rects[i].left(), rects[i].top() - 60), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 255)) if rate < self.EYE_THRESH and eye:self.COUNTER_FRAMES += 1# print('闭眼检测到了,第%s次'%COUNTER_FRAMES)if self.COUNTER_FRAMES >= 5:self.TOTAL += 1self.COUNTER_FRAMES = 0close_eye = Trueelse:self.COUNTER_FRAMES = 0# for idx, point in enumerate(points):# pos = (point[0, 0], point[0, 1])# cv2.circle(img, pos, 2, (0, 0, 255), 1)# cv2.putText(img, str(idx + 1), pos, cv2.FONT_HERSHEY_SIMPLEX, 0.3, (0, 255, 255)) if self.TOTAL >= 10 and warn:cv2.rectangle(img, (rects[i].left(), rects[i].top()), (rects[i].right(), rects[i].bottom()), color= (255, 0, 255))cv2.putText(img, "tired", (rects[i].left(), rects[i].top() - 20), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 0, 255)) print('warning, 您已疲劳,请尽快休息') return "%s闭眼"%time.strftime("%Y-%m-%d %H:%M:%S", time.localtime()) if close_eye else "", img

# print('结束检测,检测到了%s次疲劳闭眼'%TOTAL)交互界面

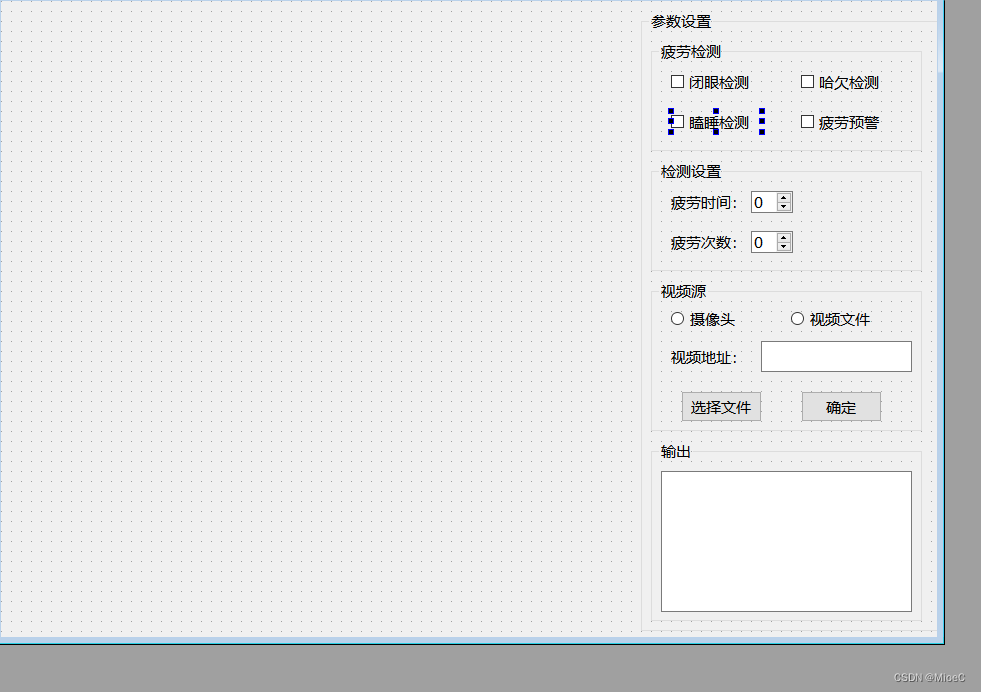

交互界面利用qtdesigner, 可视化的设计, 再转化成python语言进行操作。

交互代码

# 控件绑定相关操作def init_slots(self):self.ui.tired_time.setValue(3)self.ui.tired_count.setValue(6)self.ui.eye.setChecked(True)self.ui.video.setChecked(True)self.ui.select_video.clicked.connect(self.button_video_open)self.ui.start_skim.clicked.connect(self.toggleState)self.ui.camera.clicked.connect(partial(self.change_method, METHOD.CAMERA))self.ui.video.clicked.connect(partial(self.change_method, METHOD.VIDEO))

暂停和开始: 这里利用了QtCore.QTimer() 的方法,里面有开始和暂停的api可以调用

import argparse

import random

import sys

import timesys.path.append("..")

from ui import detect

from logic.recognize import Recognize

import torch

from PyQt5.QtWidgets import *

from PyQt5 import QtCore, QtGui, QtWidgets

from PyQt5.QtWidgets import QApplication,QMainWindow

from functools import partial

import torch.backends.cudnn as cudnn

import cv2 as cv

import numpy as np

class METHOD():CAMERA = 0VIDEO = 1

# pyuic5 -o name.py test.ui

class UI_Logic_Window(QtWidgets.QMainWindow):def __init__(self, parent = None):super(UI_Logic_Window, self).__init__(parent)self.timer_video = QtCore.QTimer() # 创建定时器#创建一个窗口self.w = QMainWindow()self.ui = detect.Ui_DREAM_EYE()self.ui.setupUi(self)self.init_slots()self.output_folder = 'output/'self.cap = cv.VideoCapture()# 日志self.logging = ''self.recognize = Recognize() # 控件绑定相关操作def init_slots(self):self.ui.tired_time.setValue(3)self.ui.tired_count.setValue(6)self.ui.eye.setChecked(True)self.ui.video.setChecked(True)self.ui.select_video.clicked.connect(self.button_video_open)self.ui.start_skim.clicked.connect(self.toggleState)self.ui.camera.clicked.connect(partial(self.change_method, METHOD.CAMERA))self.ui.video.clicked.connect(partial(self.change_method, METHOD.VIDEO))# self.ui.capScan.clicked.connect(self.button_camera_open)# self.ui.loadWeight.clicked.connect(self.open_model)# self.ui.initModel.clicked.connect(self.model_init)# self.ui.start_skim.clicked.connect(self.toggleState)# self.ui.end.clicked.connect(self.endVideo)# # self.ui.pushButton_stop.clicked.connect(self.button_video_stop)# # self.ui.pushButton_finish.clicked.connect(self.finish_detect)self.timer_video.timeout.connect(self.show_video_frame) # 定时器超时,将槽绑定至show_video_framedef change_method(self, type):if type == METHOD.CAMERA:self.ui.select_video.setDisabled(True)else:self.ui.select_video.setDisabled(False)def button_image_open(self):print('button_image_open')name_list = []try:img_name, _ = QtWidgets.QFileDialog.getOpenFileName(self, "选择文件")except OSError as reason:print('文件出错啦')QtWidgets.QMessageBox.warning(self, 'Warning', '文件出错', buttons=QtWidgets.QMessageBox.Ok)else:if not img_name:QtWidgets.QMessageBox.warning(self,"Warning", '文件出错', buttons=QtWidgets.QMessageBox.Ok)self.log('文件出错')else:img = cv.imread(img_name)info_show = self.recognize.skim_video(img)date = time.strftime('%Y-%m-%d-%H-%M-%S', time.localtime(time.time())) # 当前时间file_extaction = img_name.split('.')[-1]new_fileName = date + '.' + file_extactionfile_path = self.output_folder + 'img_output/' + new_fileNamecv.imwrite(file_path, img)self.show_img(info_show, img)# self.log(info_show) #检测信息# self.result = cv.cvtColor(img, cv.COLOR_BGR2BGRA)# self.result = letterbox(self.result, new_shape=self.opt.img_size)[0] #cv.resize(self.result, (640, 480), interpolation=cv.INTER_AREA)# self.QtImg = QtGui.QImage(self.result.data, self.result.shape[1], self.result.shape[0], QtGui.QImage.Format_RGB32)# print(type(self.ui.show))# self.ui.show.setPixmap(QtGui.QPixmap.fromImage(self.QtImg))# self.ui.show.setScaledContents(True) # 设置图像自适应界面大小def show_img(self, info_show, img):if info_show:self.log(info_show)show = cv.resize(img, (640, 480)) # 直接将原始img上的检测结果进行显示self.result = cv.cvtColor(show, cv.COLOR_BGR2RGB)showImage = QtGui.QImage(self.result.data, self.result.shape[1], self.result.shape[0],QtGui.QImage.Format_RGB888)self.ui.capture.setPixmap(QtGui.QPixmap.fromImage(showImage))self.ui.capture.setScaledContents(True) # 设置图像自适应界面大小def toggleState(self):print('toggle')state = self.timer_video.signalsBlocked()self.timer_video.blockSignals(not state)text = '继续' if not state else '暂停'self.ui.start_skim.setText(text)def endVideo(self):print('end')self.timer_video.blockSignals(True)self.releaseRes()def button_video_open(self):video_path, _ = QtWidgets.QFileDialog.getOpenFileName(self, '选择检测视频', './', filter="*.mp4;;*.avi;;All Files(*)")self.ui.video_path.setText(video_path)flag = self.cap.open(video_path)if not flag:QtWidgets.QMessageBox.warning(self,"Warning", '打开视频失败', buttons=QtWidgets.QMessageBox.Ok)else: self.timer_video.start(1000/self.cap.get(cv.CAP_PROP_FPS)) # 以30ms为间隔,启动或重启定时器# if self.opt.save:# fps, w, h, path = self.set_video_name_and_path()# self.vid_writer = cv.VideoWriter(path, cv.VideoWriter_fourcc(*'mp4v'), fps, (w, h))def set_video_name_and_path(self):# 获取当前系统时间,作为img和video的文件名now = time.strftime("%Y-%m-%d-%H-%M-%S", time.localtime(time.time()))# if vid_cap: # videofps = self.cap.get(cv.CAP_PROP_FPS)w = int(self.cap.get(cv.CAP_PROP_FRAME_WIDTH))h = int(self.cap.get(cv.CAP_PROP_FRAME_HEIGHT))# 视频检测结果存储位置save_path = self.output_folder + 'video/' + now + '.mp4'return fps, w, h, save_pathdef button_camera_open(self):camera_num = 0self.cap = cv.VideoCapture(camera_num)if not self.cap.isOpened():QtWidgets.QMessageBox.warning(self, u"Warning", u'摄像头打开失败', buttons=QtWidgets.QMessageBox.Ok)else:self.timer_video.start(1000/60)if self.opt.save:fps, w, h, path = self.set_video_name_and_path()self.vid_writer = cv.VideoWriter(path, cv.VideoWriter_fourcc(*'mp4v'), fps, (w, h))def open_model(self):self.openfile_name_model, _ = QFileDialog.getOpenFileName(self, '选择权重文件', directory='./yolov5\yolo\YoloV5_PyQt5-main\weights')print(self.openfile_name_model)if not self.openfile_name_model:# QtWidgets.QMessageBox.warning(self, u"Warning" u'未选择权重文件,请重试', buttons=QtWidgets.QMessageBox.Ok)self.log("warining 未选择权重文件,请重试")else :print(self.openfile_name_model)self.log("权重文件路径为:%s"%self.openfile_name_model)passdef show_video_frame(self):name_list = []flag, img = self.cap.read()if img is None:self.releaseRes() else:close_eye, img = self.recognize.skim_video(img, self.ui.ha.checkState(), self.ui.eye.checkState(), self.ui.tired.checkState())# if self.opt.save:# self.vid_writer.write(img) # 检测结果写入视频self.show_img(close_eye, img)def releaseRes(self):print('读取结束')self.log('检测结束')self.timer_video.stop()self.cap.release() # 释放video_capture资源self.ui.show.clear()if self.opt.save:self.vid_writer.release() def log(self, msg):self.logging += '%s\n'%msgself.ui.log.setText(self.logging)self.ui.log.moveCursor(QtGui.QTextCursor.End)

if __name__=='__main__':# 创建QApplication实例app=QApplication(sys.argv)#获取命令行参数current_ui = UI_Logic_Window()current_ui.show()sys.exit(app.exec_())界面代码

# -*- coding: utf-8 -*-# Form implementation generated from reading ui file 'detect_ui.ui'

#

# Created by: PyQt5 UI code generator 5.9.2

#

# WARNING! All changes made in this file will be lost!from PyQt5 import QtCore, QtGui, QtWidgetsclass Ui_DREAM_EYE(object):def setupUi(self, DREAM_EYE):DREAM_EYE.setObjectName("DREAM_EYE")DREAM_EYE.resize(936, 636)icon = QtGui.QIcon()icon.addPixmap(QtGui.QPixmap("../../ui_img/icon.jpg"), QtGui.QIcon.Normal, QtGui.QIcon.Off)DREAM_EYE.setWindowIcon(icon)self.centralwidget = QtWidgets.QWidget(DREAM_EYE)self.centralwidget.setEnabled(True)sizePolicy = QtWidgets.QSizePolicy(QtWidgets.QSizePolicy.Preferred, QtWidgets.QSizePolicy.Fixed)sizePolicy.setHorizontalStretch(0)sizePolicy.setVerticalStretch(0)sizePolicy.setHeightForWidth(self.centralwidget.sizePolicy().hasHeightForWidth())self.centralwidget.setSizePolicy(sizePolicy)self.centralwidget.setObjectName("centralwidget")self.groupBox = QtWidgets.QGroupBox(self.centralwidget)self.groupBox.setGeometry(QtCore.QRect(640, 10, 311, 621))font = QtGui.QFont()font.setFamily("Microsoft YaHei")font.setPointSize(11)self.groupBox.setFont(font)self.groupBox.setObjectName("groupBox")self.groupBox_2 = QtWidgets.QGroupBox(self.groupBox)self.groupBox_2.setGeometry(QtCore.QRect(10, 270, 271, 151))self.groupBox_2.setObjectName("groupBox_2")self.camera = QtWidgets.QRadioButton(self.groupBox_2)self.camera.setGeometry(QtCore.QRect(20, 30, 89, 16))self.camera.setObjectName("camera")self.video = QtWidgets.QRadioButton(self.groupBox_2)self.video.setGeometry(QtCore.QRect(140, 30, 89, 16))self.video.setObjectName("video")self.label = QtWidgets.QLabel(self.groupBox_2)self.label.setGeometry(QtCore.QRect(20, 60, 81, 31))self.label.setObjectName("label")self.select_video = QtWidgets.QPushButton(self.groupBox_2)self.select_video.setGeometry(QtCore.QRect(30, 110, 81, 31))self.select_video.setObjectName("select_video")self.start_skim = QtWidgets.QPushButton(self.groupBox_2)self.start_skim.setGeometry(QtCore.QRect(150, 110, 81, 31))self.start_skim.setObjectName("start_skim")self.video_path = QtWidgets.QTextEdit(self.groupBox_2)self.video_path.setGeometry(QtCore.QRect(110, 60, 151, 31))self.video_path.setObjectName("video_path")self.groupBox_3 = QtWidgets.QGroupBox(self.groupBox)self.groupBox_3.setGeometry(QtCore.QRect(10, 30, 271, 111))self.groupBox_3.setObjectName("groupBox_3")self.eye = QtWidgets.QCheckBox(self.groupBox_3)self.eye.setGeometry(QtCore.QRect(20, 30, 91, 21))self.eye.setObjectName("eye")self.ha = QtWidgets.QCheckBox(self.groupBox_3)self.ha.setGeometry(QtCore.QRect(150, 30, 91, 21))self.ha.setObjectName("ha")self.head = QtWidgets.QCheckBox(self.groupBox_3)self.head.setGeometry(QtCore.QRect(20, 70, 91, 21))self.head.setObjectName("head")self.tired = QtWidgets.QCheckBox(self.groupBox_3)self.tired.setGeometry(QtCore.QRect(150, 70, 91, 21))self.tired.setObjectName("tired")self.groupBox_5 = QtWidgets.QGroupBox(self.groupBox)self.groupBox_5.setGeometry(QtCore.QRect(10, 430, 271, 181))self.groupBox_5.setObjectName("groupBox_5")self.log = QtWidgets.QTextBrowser(self.groupBox_5)self.log.setGeometry(QtCore.QRect(10, 30, 251, 141))self.log.setObjectName("log")self.groupBox_4 = QtWidgets.QGroupBox(self.groupBox)self.groupBox_4.setGeometry(QtCore.QRect(10, 150, 271, 111))self.groupBox_4.setObjectName("groupBox_4")self.label_3 = QtWidgets.QLabel(self.groupBox_4)self.label_3.setGeometry(QtCore.QRect(20, 30, 81, 21))self.label_3.setObjectName("label_3")self.tired_time = QtWidgets.QSpinBox(self.groupBox_4)self.tired_time.setGeometry(QtCore.QRect(100, 30, 42, 22))self.tired_time.setObjectName("tired_time")self.label_4 = QtWidgets.QLabel(self.groupBox_4)self.label_4.setGeometry(QtCore.QRect(20, 70, 81, 21))self.label_4.setObjectName("label_4")self.tired_count = QtWidgets.QSpinBox(self.groupBox_4)self.tired_count.setGeometry(QtCore.QRect(100, 70, 42, 22))self.tired_count.setObjectName("tired_count")self.capture = QtWidgets.QLabel(self.centralwidget)self.capture.setGeometry(QtCore.QRect(10, 10, 611, 611))self.capture.setText("")self.capture.setObjectName("capture")DREAM_EYE.setCentralWidget(self.centralwidget)self.retranslateUi(DREAM_EYE)QtCore.QMetaObject.connectSlotsByName(DREAM_EYE)def retranslateUi(self, DREAM_EYE):_translate = QtCore.QCoreApplication.translateDREAM_EYE.setWindowTitle(_translate("DREAM_EYE", "疲劳检测系统"))self.groupBox.setTitle(_translate("DREAM_EYE", "参数设置"))self.groupBox_2.setTitle(_translate("DREAM_EYE", "视频源"))self.camera.setText(_translate("DREAM_EYE", "摄像头"))self.video.setText(_translate("DREAM_EYE", "视频文件"))self.label.setText(_translate("DREAM_EYE", "视频地址:"))self.select_video.setText(_translate("DREAM_EYE", "选择文件"))self.start_skim.setText(_translate("DREAM_EYE", "确定"))self.groupBox_3.setTitle(_translate("DREAM_EYE", "疲劳检测"))self.eye.setText(_translate("DREAM_EYE", "闭眼检测"))self.ha.setText(_translate("DREAM_EYE", "哈欠检测"))self.head.setText(_translate("DREAM_EYE", "瞌睡检测"))self.tired.setText(_translate("DREAM_EYE", "疲劳预警"))self.groupBox_5.setTitle(_translate("DREAM_EYE", "输出"))self.groupBox_4.setTitle(_translate("DREAM_EYE", "检测设置"))self.label_3.setText(_translate("DREAM_EYE", "疲劳时间:"))self.label_4.setText(_translate("DREAM_EYE", "疲劳次数:"))检测效果

效果还不错,不过对于不是那么明显的情况可能就不是很好了,如光线不好,人脸不全的情况。

接下来可以放到你笔记本上,让他定时提醒你休息。

源代码

https://github.com/cdmstrong/tried