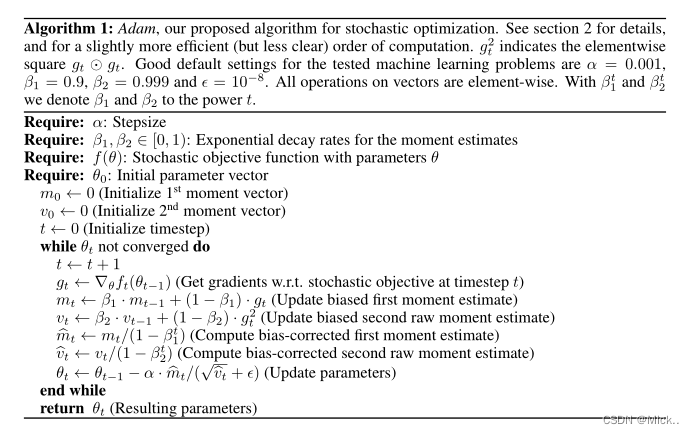

Adam: A method for stochastic optimization

Adam是通过梯度的一阶矩和二阶矩自适应的控制每个参数的学习率的大小。

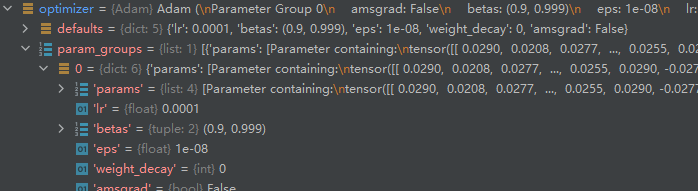

adam的初始化

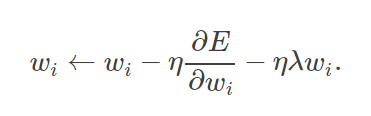

def __init__(self, params, lr=1e-3, betas=(0.9, 0.999), eps=1e-8,weight_decay=0, amsgrad=False):Args:params (iterable): iterable of parameters to optimize or dicts definingparameter groupslr (float, optional): learning rate (default: 1e-3)betas (Tuple[float, float], optional): coefficients used for computingrunning averages of gradient and its square (default: (0.9, 0.999))eps (float, optional): term added to the denominator to improvenumerical stability (default: 1e-8)weight_decay (float, optional): weight decay (