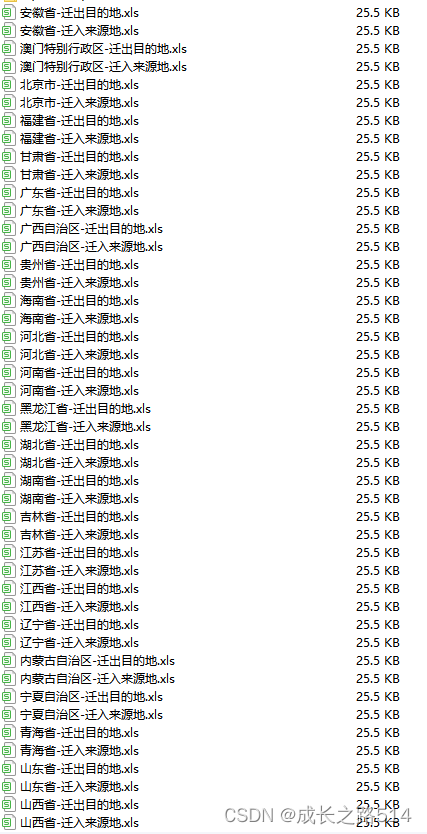

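I've recently been working on a COVID-19 course project that needs population migration data between provinces. Building on the city-to-city flow scraper from https://blog.csdn.net/qq_44315987/article/details/104118498, I adapted the code to scrape province-level data from Baidu Migration (百度迁徙) and to save the data for all dates in a single table.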

Inbound migration

# coding:utf-8

import urllib.request

import pandas as pddef get_code_city():code_str = """北京|110000,天津|120000,广西壮族自治区|450000,内蒙古自治区|150000,宁夏回族自治区|640000,新疆维吾尔自治区|650000,西藏自治区|540000,上海|310000,浙江|330000,重庆|500000,安徽|340000,福建|350000,甘肃|620000,广东|440000,贵州|520000,海南|460000,河北|130000,黑龙江|230000,河南|410000,湖北|420000,湖南|430000,江苏|320000,江西|360000,吉林|220000,辽宁|210000,青海|630000,山东|370000,山西|140000,陕西|610000,四川|510000,云南|530000"""code_dict = {}for mapping in code_str.split(","):name, number = mapping.split("|")code_dict[name] = numberreturn code_dictdef conserve(data, time, work):province = []value = []for item in data['list']:province.append(item['province_name'])value.append(item['value'])res = {'省份': province, '比例': value}res = pd.DataFrame(res)res.to_excel(excel_writer=work, sheet_name=time)data_type = "move_in"

f = pd.DataFrame()

f.to_excel('{}.xlsx'.format(data_type)) # 保存的文件名

work = pd.ExcelWriter('{}.xlsx'.format(data_type))

time_slots = list(range(20200115, 20200132)) + list(range(20200201, 20200230)) + list(range(20200301, 20200316))# 用到的时间片段provinces = ["上海市", "北京市", "重庆市", "天津市", "内蒙古自治区", "广西壮族自治区", "西藏自治区", "新疆维吾尔自治区", "宁夏回族自治区", "河北省", "山西省", "辽宁省", "吉林省", "黑龙江省", "江苏省", "浙江省", "安徽省", "福建省", "江西省", "山东省", "河南省", "湖北省", "湖南省", "广东省", "海南省", "四川省", "贵州省", "云南省", "陕西省", "甘肃省", "青海省"]code = get_code_city() # 获取城市和编码。这里去除了港澳台

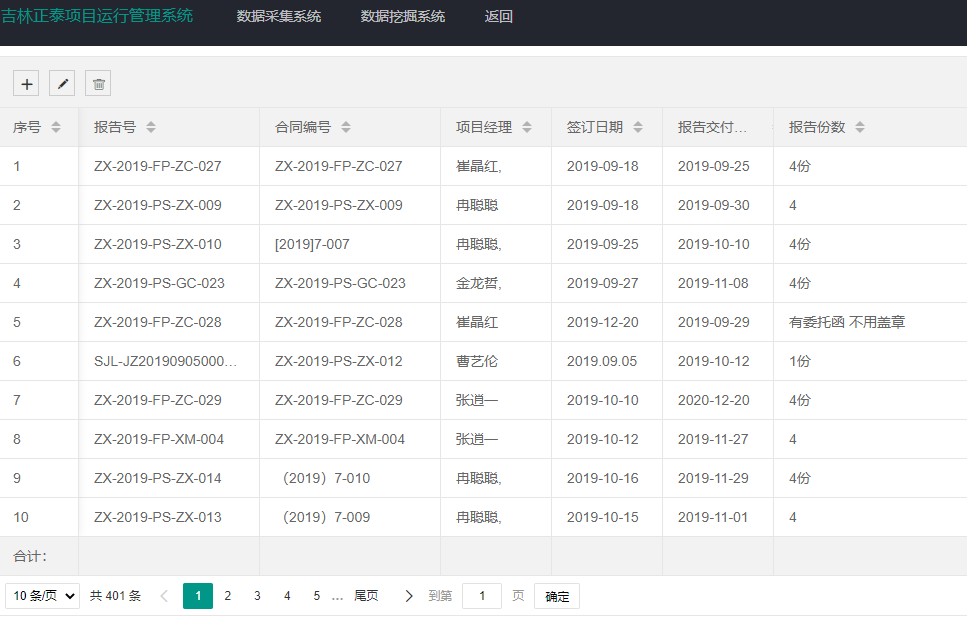

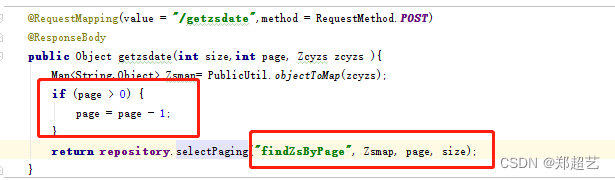

for name, num in code.items():province_ratio_dict = {}province_ratio_dict['省份'] = provincesfor t in time_slots:print(name, t)url = 'http://huiyan.baidu.com/migration/provincerank.jsonp?' \'dt=province&id={}&type=move_in&date={}'.format(str(num), str(t)) # 设置url,这里比较重要。如果选择迁出,改为type=move_outhead = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) ''AppleWebKit/537.36 (KHTML, like Gecko) ''Chrome/74.0.3729.169 Safari/537.36'}req = urllib.request.Request(url, headers=head)response = urllib.request.urlopen(url)html = response.read().decode('unicode_escape')if html.startswith("cb"):html = html[3:-1]data = eval(html)["data"]ratios = []province_ratio_temp = {item['province_name']: item['value'] for item in data['list']}for province in provinces:if province not in province_ratio_temp: # 这一段是为了确保每个日期下保存的省市数目都一致,因而可以都保存在一张表格内ratios.append(0)else:ratios.append(province_ratio_temp[province])province_ratio_dict[str(t)] = ratiosres = pd.DataFrame(province_ratio_dict)res.to_excel(excel_writer=work, sheet_name=name)work.save()
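The request URL above is assembled by string formatting, and switching between inbound and outbound flows means editing `type=move_in` by hand. A minimal sketch of a small URL builder using `urllib.parse.urlencode`; `migration_url` is a hypothetical helper, and the endpoint name and query parameters (`dt`, `id`, `type`, `date`) are simply the ones used in this post, not a documented API:

```python
from urllib.parse import urlencode

# Base path taken from the request URLs used in this post (assumed, not documented)
BASE = "http://huiyan.baidu.com/migration/"


def migration_url(endpoint, code, date, direction="move_in"):
    """Build a province-level query URL, e.g. for the 'provincerank' endpoint.

    direction is 'move_in' (inbound) or 'move_out' (outbound), matching the
    type parameter described in the comment in the script above.
    """
    if direction not in ("move_in", "move_out"):
        raise ValueError("direction must be 'move_in' or 'move_out'")
    # urlencode keeps the dict's insertion order and converts ints with str()
    query = urlencode({"dt": "province", "id": code, "type": direction, "date": date})
    return "{}{}.jsonp?{}".format(BASE, endpoint, query)
```

For example, `migration_url("provincerank", "420000", 20200123, "move_out")` gives the outbound-share URL for Hubei (420000) on 2020-01-23, so the direction becomes a function argument instead of a hand edit.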

Migration scale crawler

```python
# coding:utf-8
import json
import urllib.request

import pandas as pd


def get_code_city():
    """Return a {province name: administrative division code} mapping."""
    code_str = """北京|110000,天津|120000,广西壮族自治区|450000,内蒙古自治区|150000,宁夏回族自治区|640000,新疆维吾尔自治区|650000,西藏自治区|540000,上海|310000,浙江|330000,重庆|500000,安徽|340000,福建|350000,甘肃|620000,广东|440000,贵州|520000,海南|460000,河北|130000,黑龙江|230000,河南|410000,湖北|420000,湖南|430000,江苏|320000,江西|360000,吉林|220000,辽宁|210000,青海|630000,山东|370000,山西|140000,陕西|610000,四川|510000,云南|530000"""
    code_dict = {}
    for mapping in code_str.split(","):
        name, number = mapping.split("|")
        code_dict[name] = number
    return code_dict


data_type = "scale"
# Output workbook; its contents are written when work.close() is called
work = pd.ExcelWriter('{}.xlsx'.format(data_type))

t = 20200115  # date parameter sent with each request
time_slots = (list(range(20200115, 20200132))
              + list(range(20200201, 20200230))
              + list(range(20200301, 20200316)))
code = get_code_city()  # province name -> code; Hong Kong, Macau and Taiwan are excluded

head = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                      'AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/74.0.3729.169 Safari/537.36'}

province_scale_dict = {"时间": time_slots}
for name, num in code.items():
    url = ('http://huiyan.baidu.com/migration/historycurve.jsonp?'
           'dt=province&id={}&type=move_in&date={}'.format(num, t))
    req = urllib.request.Request(url, headers=head)
    response = urllib.request.urlopen(req)  # pass req (not url) so the User-Agent is sent
    html = response.read().decode('utf-8')
    if html.startswith("cb"):
        html = html[3:-1]  # strip the JSONP wrapper cb( ... )
    data = json.loads(html)["data"]
    # data["list"] maps "YYYYMMDD" -> scale index. Look each date up explicitly so the
    # column lines up with the 时间 column (None if the response lacks a date)
    province_scale_dict[name] = [data["list"].get(str(d)) for d in time_slots]
res = pd.DataFrame(province_scale_dict)
res.to_excel(excel_writer=work)
work.close()  # ExcelWriter.save() was removed in pandas 2.0
```
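Both scripts unwrap the JSONP response by checking for a `cb` prefix and slicing off `cb(` and `)`, which breaks if the callback name changes or a trailing `;` or newline appears. A slightly more tolerant sketch using `re` and `json`; `parse_jsonp` is a hypothetical helper that could stand in for the `startswith`/slice/parse lines in either loop:

```python
import json
import re

# Matches "callbackName( ... )" with an optional trailing semicolon and whitespace
_JSONP_RE = re.compile(r"^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$", re.DOTALL)


def parse_jsonp(text):
    """Return the decoded JSON payload of a JSONP string.

    Text that has no callback wrapper is parsed as plain JSON. \\uXXXX escapes
    inside the payload are decoded by json.loads itself.
    """
    match = _JSONP_RE.match(text)
    payload = match.group(1) if match else text
    return json.loads(payload)
```

With it, `data = parse_jsonp(response.read().decode('utf-8'))["data"]` would replace the three unwrapping lines.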

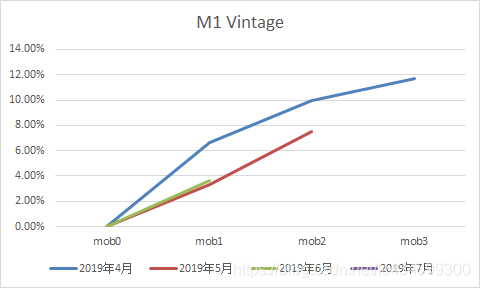

The scale crawler's results are saved in scale.xlsx.
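Both scripts hard-code the date window as three integer ranges, which works only because the upper bounds (`20200132`, `20200230`, `20200316`) were picked by hand with February 2020 having 29 days. A sketch that generates the same `YYYYMMDD` integers from two `datetime.date` endpoints instead, so the window can be changed without counting days; `date_slots` is a hypothetical helper:

```python
from datetime import date, timedelta


def date_slots(start, end):
    """Return every day from start to end (inclusive) as a YYYYMMDD integer."""
    n_days = (end - start).days
    return [int((start + timedelta(days=i)).strftime("%Y%m%d"))
            for i in range(n_days + 1)]


# Same window as the scripts: 2020-01-15 through 2020-03-15 (61 days)
time_slots = date_slots(date(2020, 1, 15), date(2020, 3, 15))
```

Leap years and month lengths are handled by `datetime`, so extending the window only means changing the two endpoint dates.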