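Table of Contents

- Introduction

- Topology diagram

- Requirements

- First, build the MHA cluster

- Update the host time

- Change the hostnames

- Configure passwordless SSH between all hosts

- Send the public key to all hosts (including the local host)

- Upload the downloaded packages to the host

- Configure a local yum repository

- Extract the package archive

- Install the dependency packages on the manager host and every node

- Install the Perl modules that MHA manager depends on

- Install the MHA manager package

- Build the master-slave replication environment

- Log in to mysql-01 (create a test database)

- Grant privileges

- Check the status

- Export the data and send it to the other two MySQL hosts

- Import the data

- Add privileges

- Edit the configuration file (same steps on mysql-02 and mysql-03)

- Set up the master-slave relationship

- Check whether replication was set up successfully

- Set read_only on the two slave servers

- Configure MHA

- Create the MHA working directories and configuration file

- Edit

- Check the SSH configuration

- Check the state of the entire replication environment

- Check the MHA manager status

- View the startup log

- Stop monitoring

- Create the VIP on the master

- Enable the script in the main configuration file

- Write the script /usr/bin/master_ip_failover (requires knowing Perl)

- Make the script executable

- Check the SSH configuration

- Check the entire replication environment

- Start monitoring

- Check that MHA manager is healthy

- View the startup log

- Open a new log window and watch whether the VIP and the master role fail over

- Build the Ceph cluster

- Update the host time

- Edit the hosts file

- Set up passwordless SSH login

- Send the key to all hosts

- Upload and extract the packages

- Configure the Ceph yum repository

- Send the extracted packages and the yum repository file

- Install epel-release (all nodes)

- Deploy Ceph on all hosts

- Deploy the services on the admin node

- Change the replica count

- Install the Ceph monitor

- Gather the nodes' keyring files

- View the keys

- Deploy the OSD service

- Let Ceph partition the disks automatically

- Add the OSD nodes

- Check the OSD status

- Deploy the mgr management service

- Unify the cluster configuration

- Change the permissions of ceph.client.admin.keyring on every node

- Deploy the MDS service

- Check the MDS service

- Check the cluster status

- Now create the Ceph file system

- Create the storage pools

- Create the file system

- View the Ceph file system

- Check the MDS node status

- Back up MySQL data to Ceph

- Create the Ceph RBD

- Create the rbd storage pool

- Create a block device of a given size to use as a disk

- View the information for test1

- Map the block device, i.e. use rbd to map the image through the kernel module

- Check the result

- Create the mount directory

- Format the partition

- Mount

- Write test data

- Check the result

- Install Ansible

- Configure the yum repository

- Upload the packages

- Install Ansible

- Set the host time

- Configure the host inventory

- Test it

- Configure passwordless access

- Edit the host inventory

- Install the services

- Install

- Mount the Ceph file system on the web servers

- Edit the file

- Install the packages

- Mount

- Check the result

- Build LVS + keepalived

- Install the dependency packages

- Upload the packages

- Extract the packages

- Configure the build

- Compile and install

- Configure keepalived + LVS in DR mode

- Add symbolic links

- Create the directory

- Copy the configuration file into the directory just created

- Edit the configuration file

- Restart keepalived and enable it at boot

- Check the result

- Configure the backup node s_director

- Edit the configuration file

- Restart and enable at boot

- Check the result

- Test master/backup switchover

- Modify the nginx service (repeat the same steps on nginx-01 and nginx-02)

- Apply the configuration file

- Configure the VIP for nginx

- Restart the network interface and check the VIP

- Edit the home page file (for testing)

- Install the ipvsadm command and add rules

- Add the server nodes

- Restart the service

- Check the result

- Access test

- Build the Discuz forum

- Upload the packages

- Resolve dependencies

- Install libmcrypt

- Extract the PHP package

- Install PHP

- Compile and install

- Generate the php.ini file

- Rename the FPM configuration file php-fpm.conf.default

- Edit the configuration file

- Copy the startup script to init.d

- Grant execute permission

- Enable at boot

- Start the service

- Check the listening ports

- Edit the nginx.conf configuration file

- Apply the configuration file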

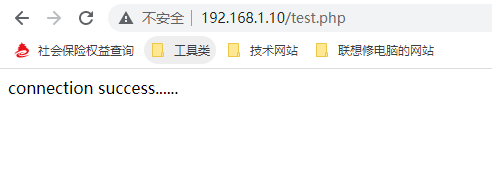

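- Create the index.php and test.php files

- Test

- Change the default run-as account

- Download the package

- Create the site directory

- Extract the package

- Set up the virtual host

- Add permissions

- Restart nginx

- Create the database

- Open the site and run the installer

- Install Zabbix (on nginx02)

- Extract the packages and configure the Zabbix repository

- Resolve dependencies

- Install libmcrypt

- Install PHP

- Edit the configuration file

- Create the php-fpm service startup script

- Edit the configuration file

- Start the php-fpm service

- Edit the nginx configuration file to support PHP

- Reload the configuration file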

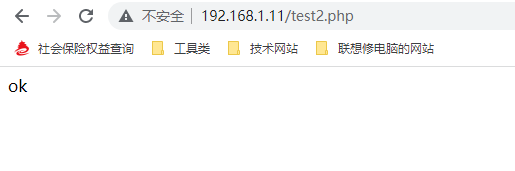

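- Create a test page

- Test

- Create the database used by Zabbix

- Import the database

- Resolve dependencies

- Create the zabbix user

- Configure the build

- Install

- Add symbolic links

- Configure zabbix_server.conf

- Configure Zabbix to monitor itself

- Start

- Add the Zabbix startup scripts

- Configure the Zabbix web interface

- Start zabbix_agentd

- Configure the web page

- Switch the interface to Chinese

- Fix garbled Chinese characters

- Build the DNS service

- Start named and enable it at boot

- Check the port

- Edit the configuration file

- Check it

- Edit the forward-lookup zone file

- Check the forward- and reverse-lookup zone files

- Change the group ownership

- Test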

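Introduction

The company currently needs to build a technical forum that serves the public internet. The site must be highly available, must handle high load, and must include monitoring.

Topology diagram

Requirements

1. Use LVS + keepalived for load balancing

2. Use MHA to build the MySQL cluster

3. Use a Ceph cluster to keep the web site content consistent

4. Build the Discuz forum

5. Build DNS to resolve the site's domain name

6. Use Zabbix to monitor each server's hardware metrics and service ports

7. Back up the MySQL database to the Ceph cluster

8. Use Ansible to batch-deploy nginx and Apache; both must be installed from source packages.

First, build the MHA cluster

| Hostname | IP |
|---|---|
| mysql-01 | 192.168.1.2 |
| mysql-02 | 192.168.1.3 |
| mysql-03 | 192.168.1.4 |
| mha | 192.168.1.5 |

Update the host time

The time must be updated on all hosts.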

[root@mysql-01 ~]# ntpdate ntp1.aliyun.com

6 Apr 15:34:50 ntpdate[1467]: step time server 120.25.115.20 offset -28798.923817 sec

[root@mysql-01 ~]#

Set up a cron job so that the clock is resynchronized every hour, at minute 30

[root@mysql-01 ~]# crontab -l

30 * * * * ntpdate ntp1.aliyun.com
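As an optional sketch, the same cron entry can be added from a script instead of an editor. The helper name `ensure_cron_line` is an assumption made for this example and is not part of the original steps. It only filters text: it prints the crontab it receives on stdin and appends the entry when an identical line is not already there.

```shell
#!/usr/bin/env bash
# ensure_cron_line ENTRY: read an existing crontab on stdin and print it,
# appending ENTRY only if an identical line is not already present.
ensure_cron_line() {
    local entry="$1" current
    current="$(cat)"
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # -F: fixed string, -x: whole-line match, so "*" is not treated as a pattern
    if ! printf '%s\n' "$current" | grep -Fxq -- "$entry"; then
        printf '%s\n' "$entry"
    fi
}

# Demo with an empty crontab as input: prints just the new entry.
printf '' | ensure_cron_line '30 * * * * ntpdate ntp1.aliyun.com'
```

On a real host this could be wired up as `(crontab -l 2>/dev/null || true) | ensure_cron_line '30 * * * * ntpdate ntp1.aliyun.com' | crontab -`, assuming root's crontab is the one being managed.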

Change the hostnames

[root@mysql ~]# hostnamectl set-hostname mysql-01

[root@mysql ~]# bash # start a new bash so the prompt shows the new hostname

[root@mysql-01 ~]#

[root@mysql ~]# hostnamectl set-hostname mysql-02

[root@mysql ~]# bash # start a new bash so the prompt shows the new hostname

[root@mysql-02 ~]#

[root@mysql ~]# hostnamectl set-hostname mysql-03

[root@mysql ~]# bash # start a new bash so the prompt shows the new hostname

[root@mysql-03 ~]#

[root@mysql ~]# hostnamectl set-hostname mha

[root@mysql ~]# bash # start a new bash so the prompt shows the new hostname

[root@mha ~]#

Configure passwordless SSH between all hosts

Every host must be able to SSH to every other host without a password.

[root@mysql-01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa): # type nothing here, just press Enter

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase): # type nothing here, just press Enter

Enter same passphrase again: # type nothing here, just press Enter

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:u1htfi6dAAP6Fx4plXeAfvGJfmANLO9Gcde8GflzpPk root@mysql-01

The key's randomart image is:

+---[RSA 2048]----+

| +.. o.|

| . = * o .o+|

| . + = X o +=|

| . . B B + o+o|

| . oS@ . .o|

| . oo= . E|

| .o.o+ . |

| o +. + |

| . . .+. |

+----[SHA256]-----+

[root@mysql-01 ~]#

The key pair has now been created.

Send the public key to all hosts (including the local host)

[root@mysql-01 ~]# for i in 2 3 4 5;do ssh-copy-id 192.168.1.$i;done

Test it

[root@mysql-01 ~]# for i in 2 3 4 5;do ssh root@192.168.1.$i hostname;done

mysql-01

mysql-02

mysql-03

mha

The output is as expected.

Upload the downloaded packages to the host

Link: https://pan.baidu.com/s/1hRiV4jF7w9WaG5brhdRRkA

Extraction code: agp6


[root@mysql-01 ~]# ls

auto_install_mysql_cpu4.sh mha4mysql-manager-0.57-0.el7.noarch.rpm mhapath.tar.gz mysql-community-5.7.26-1.el7.src.rpm

boost_1_59_0 mha4mysql-node-0.57-0.el7.noarch.rpm mysql-5.7.26 rpmbuild

[root@mysql-01 ~]#

The three packages mha4mysql-manager-0.57-0.el7.noarch.rpm, mha4mysql-node-0.57-0.el7.noarch.rpm, and mhapath.tar.gz have now been uploaded.

Configure a local yum repository

[root@mysql-01 ~]# vim /etc/yum.repos.d/mhapath.repo

[mhapath]

name=mhapath

baseurl=file:///root/mhapath

enabled=1

gpgcheck=0
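The repository file can also be written without an editor. This is a minimal sketch: `write_mha_repo` is a hypothetical helper, and the demo writes to a temporary file rather than /etc/yum.repos.d/mhapath.repo so that it can be tried safely.

```shell
#!/usr/bin/env bash
# write_mha_repo FILE [PKG_DIR]: write the local repository definition to FILE.
# PKG_DIR defaults to /root/mhapath, the directory used in this walkthrough.
write_mha_repo() {
    local repo_file="$1" pkg_dir="${2:-/root/mhapath}"
    cat > "$repo_file" <<EOF
[mhapath]
name=mhapath
baseurl=file://${pkg_dir}
enabled=1
gpgcheck=0
EOF
}

# Demo: write to a scratch file, show it, and clean up.
demo_repo="$(mktemp)"
write_mha_repo "$demo_repo"
cat "$demo_repo"
rm -f "$demo_repo"
```

Pointing the first argument at /etc/yum.repos.d/mhapath.repo would produce the same file as the manual edit above.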

Extract the package archive

[root@mysql-01 ~]# tar -zxvf mhapath.tar.gz

Send the extracted packages and the yum repository file to the other hosts

[root@mysql-01 ~]# for i in 3 4 5;do scp -r /root/mhapath root@192.168.1.$i:~;done

[root@mysql-01 ~]# for i in 3 4 5;do scp -r /etc/yum.repos.d/ root@192.168.1.$i:/etc/;done

[root@mysql-01 ~]# for i in 3 4 5;do scp mha4mysql-node-0.57-0.el7.noarch.rpm root@192.168.1.$i:~;done

mha4mysql-node-0.57-0.el7.noarch.rpm 100% 35KB 13.8MB/s 00:00

mha4mysql-node-0.57-0.el7.noarch.rpm 100% 35KB 14.6MB/s 00:00

mha4mysql-node-0.57-0.el7.noarch.rpm 100% 35KB 18.6MB/s 00:00

Install the dependency packages on the manager host and every node

Run both of these commands on all hosts.

[root@mysql-01 ~]# yum -y install perl-DBD-MySQL perl-Config-Tiny perl-Log-Dispatch perl-Parallel-ForkManager --skip-broken --nogpgcheck

[root@mysql-01 ~]# rpm -ivh mha4mysql-node-0.57-0.el7.noarch.rpm

Preparing... ################################# [100%]

Updating / installing...

1:mha4mysql-node-0.57-0.el7 ################################# [100%]

[root@mysql-01 ~]#

Install the Perl modules that MHA manager depends on

[root@mha ~]# yum -y install perl-DBD-MySQL perl-Config-Tiny perl-Log-Dispatch perl-Parallel-ForkManager perl-Time-HiRes perl-ExtUtils-CBuilder perl-ExtUtils-MakeMaker perl-CPAN

Install the MHA manager package

[root@mysql-01 ~]# scp mha4mysql-manager-0.57-0.el7.noarch.rpm root@192.168.1.5:~

mha4mysql-manager-0.57-0.el7.noarch.rpm

[root@mha ~]# rpm -ivh mha4mysql-manager-0.57-0.el7.noarch.rpm

Preparing... ################################# [100%]

Updating / installing...

1:mha4mysql-manager-0.57-0.el7 ################################# [100%]

[root@mha ~]#

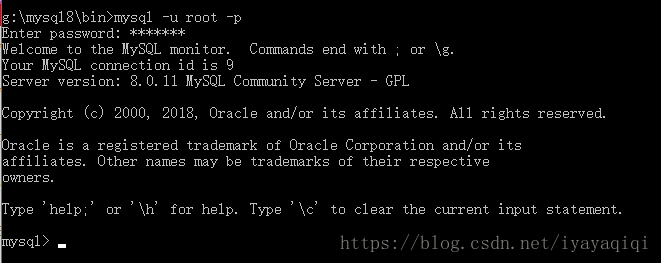

Build the master-slave replication environment

First install the semi-synchronous replication plugins on all MySQL hosts. Then stop the database on mysql-01, edit its configuration file, and start MySQL again.

[root@mysql-01 ~]# mysql -uroot -p

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.26 Source distribution

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> install plugin rpl_semi_sync_master soname 'semisync_master.so';

Query OK, 0 rows affected (0.01 sec)

mysql> install plugin rpl_semi_sync_slave soname 'semisync_slave.so';

Query OK, 0 rows affected (0.00 sec)

mysql>

[root@mysql-01 ~]# systemctl stop mysql

[root@mysql-01 ~]# vim /etc/my.cnf

datadir=/data/mysql/data

port=3306

socket=/usr/local/mysql/mysql.sock

symbolic-links=0

character-set-server=utf8

log-error=/data/mysql/log/mysqld.log

pid-file=/usr/local/mysql/mysqld.pid

server-id=1 # add the lines from here down

log-bin=/data/mysql/log/mysql-bin

log-bin-index=/data/mysql/log/mysql-bin.index

binlog_format=mixed

rpl_semi_sync_master_enabled=1

rpl_semi_sync_master_timeout=10000

rpl_semi_sync_slave_enabled=1

relay_log_purge=0

relay-log=/data/mysql/log/relay-bin

relay-log-index=/data/mysql/log/slave-relay-bin.index

log_slave_updates=1

[root@mysql-01 ~]# systemctl restart mysql

Log in to mysql-01 (create a test database)

Create the HA database, create the stu table in it, and insert a row.

mysql> create database HA;

Query OK, 1 row affected (10.01 sec)

mysql> use HA;

Database changed

mysql> create table stu(id int,name varchar(20));

Query OK, 0 rows affected (0.00 sec)

mysql> insert into stu values(1,'lisi');

Query OK, 1 row affected (0.02 sec)

mysql>

Grant privileges

Create the user used for master-slave replication and grant it the replication privilege, then flush privileges so the change takes effect.

mysql> grant replication slave on *.* to hello@'192.168.1.%' identified by '1';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql>

Grant privileges to the manager host

mysql> grant all privileges on *.* to manager@'192.168.1.%' identified by '1';

Query OK, 0 rows affected, 1 warning (0.01 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql>

Check the status

mysql> show master status;

+------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+------------------+-------------------+

| mysql-bin.000001 | 1655 | | | |

+------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

mysql>

Export the data and send it to the other two MySQL hosts

[root@mysql-01 ~]# mysqldump -uroot -p1 -B HA>HA.sql

mysqldump: [Warning] Using a password on the command line interface can be insecure.

[root@mysql-01 ~]# for i in 3 4;do scp HA.sql root@192.168.1.$i:~;done

HA.sql 100% 1940 1.2MB/s 00:00

HA.sql 100% 1940 2.1MB/s 00:00

[root@mysql-01 ~]#

Import the data

Import the data into the MySQL database on each of the other two hosts.

[root@mysql-02 ~]# mysql -uroot -p1 < HA.sql

mysql: [Warning] Using a password on the command line interface can be insecure.

[root@mysql-02 ~]# mysql -uroot -p1

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 3

Server version: 5.7.26 Source distribution

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show tables;

ERROR 1046 (3D000): No database selected

mysql> use HA;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+--------------+

| Tables_in_HA |

+--------------+

| stu |

+--------------+

1 row in set (0.00 sec)

mysql>

Add privileges

Add the same privileges on each of the other two MySQL hosts.

mysql> grant replication slave on *.* to hello@'192.168.1.%' identified by '1';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> grant all privileges on *.* to manager@'192.168.1.%' identified by '1';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql>

Edit the configuration file (same steps on mysql-02 and mysql-03)

Edit the configuration file on each of the two MySQL hosts: stop MySQL first, edit the file, then restart MySQL.

[root@mysql-02 ~]# systemctl stop mysql

[root@mysql-02 ~]# vim /etc/my.cnf

[mysqld]

basedir=/usr/local/mysql

datadir=/data/mysql/data

port=3306

socket=/usr/local/mysql/mysql.sock

symbolic-links=0

character-set-server=utf8

log-error=/data/mysql/log/mysqld.log

pid-file=/usr/local/mysql/mysqld.pid

server-id=2 # note: the three MySQL servers must each have a different id

log-bin=/data/mysql/log/mysql-bin

log-bin-index=/data/mysql/log/mysql-bin.index

binlog_format=mixed

rpl_semi_sync_master_enabled=1

rpl_semi_sync_master_timeout=10000

rpl_semi_sync_slave_enabled=1

relay_log_purge=0

relay-log=/data/mysql/log/relay-bin

relay-log-index=/data/mysql/log/slave-relay-bin.index

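log_slave_updates=1

[root@mysql-02 ~]# systemctl restart mysql

If the restart fails here, the semi-synchronous plugins have not been installed on this MySQL instance. Comment out the newly added lines in the configuration file, start MySQL, log in and install the semi-synchronous plugins, then remove the comment markers from the configuration file and restart MySQL.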
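Because the three servers must not share a server-id, it can help to compare the values before restarting. The sketch below assumes the my.cnf files are readable from wherever it runs; `server_id_of` and `ids_are_unique` are illustrative names invented for this example, not MySQL tools.

```shell
#!/usr/bin/env bash
# server_id_of FILE: print the numeric server-id set in a my.cnf-style file,
# ignoring anything after the number (such as a trailing "# comment").
server_id_of() {
    sed -n 's/^[[:space:]]*server[-_]id[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" | head -n 1
}

# ids_are_unique ID...: succeed only if no ID appears more than once.
ids_are_unique() {
    local dup
    dup="$(printf '%s\n' "$@" | sort | uniq -d)"
    [ -z "$dup" ]
}

# Demo with the ids used in this walkthrough.
ids_are_unique 1 2 3 && echo "server-id values are unique"
```

With the three files collected locally, a call such as `ids_are_unique "$(server_id_of a.cnf)" "$(server_id_of b.cnf)" "$(server_id_of c.cnf)"` would flag a copy-paste mistake; the file names here are placeholders.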

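Set up the master-slave relationship

Log in to MySQL and stop slave replication. Then specify the master's IP address, the replication user on the master, that user's password, the master's binlog file, and the starting position in that binlog file.

Finally, start slave replication again.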
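The file name and position come from `show master status` on the master, so the statement can be assembled rather than typed by hand. This is a sketch under assumptions: `parse_master_status` expects the tab-separated batch output (a header line plus one row, as printed by `mysql -e`), and both function names are made up for this example.

```shell
#!/usr/bin/env bash
# parse_master_status: read tab-separated SHOW MASTER STATUS batch output
# (header line plus one row) on stdin and print "FILE POSITION".
parse_master_status() {
    awk -F'\t' 'NR == 2 { print $1, $2 }'
}

# build_change_master HOST USER PASSWORD LOG_FILE LOG_POS:
# print the CHANGE MASTER TO statement for a slave.
build_change_master() {
    printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_LOG_FILE='%s', MASTER_LOG_POS=%d;\n" \
        "$1" "$2" "$3" "$4" "$5"
}

# Demo with the values used in this walkthrough.
build_change_master 192.168.1.2 hello 1 mysql-bin.000001 1655
```

The printed statement is the same one entered at the mysql> prompt on each slave, between `stop slave;` and `start slave;`.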

[root@mysql-02 ~]# mysql -uroot -p1

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.26-log Source distribution

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> stop slave;

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> change master to master_host='192.168.1.2',master_user='hello',master_password='1',master_log_file='mysql-bin.000001',master_log_pos=1655;

Query OK, 0 rows affected, 2 warnings (0.00 sec)

mysql> start slave;

Query OK, 0 rows affected (0.01 sec)

Check whether replication was set up successfully

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.2

Master_User: hello

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000001

Read_Master_Log_Pos: 1655

Relay_Log_File: relay-bin.000002

Relay_Log_Pos: 320

Relay_Master_Log_File: mysql-bin.000001

Slave_IO_Running: Yes # Yes here means the I/O thread is working

Slave_SQL_Running: Yes # Yes here means the SQL thread is working

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 1655

Relay_Log_Space: 521

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 1

Master_UUID: f9cf2bb0-9f99-11ec-9e14-000c294a561e

Master_Info_File: /data/mysql/data/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.00 sec)

mysql>

If Slave_IO_Running or Slave_SQL_Running shows No, re-create the replication grant and point the slave at the master again.
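Checking the two thread states by eye is easy to get wrong in such a long listing. The following sketch filters the `show slave status\G` text instead; `replication_ok` is a name invented for this example, and it deliberately matches `Slave_SQL_Running:` with the colon so that `Slave_SQL_Running_State` is not picked up by mistake.

```shell
#!/usr/bin/env bash
# replication_ok: read SHOW SLAVE STATUS\G output on stdin; succeed only if
# both Slave_IO_Running and Slave_SQL_Running report "Yes".
replication_ok() {
    awk -F': *' '
        /^[ \t]*Slave_IO_Running:/  { io = $2;  sub(/[ \t\r]+$/, "", io) }
        /^[ \t]*Slave_SQL_Running:/ { sql = $2; sub(/[ \t\r]+$/, "", sql) }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Demo on a two-line sample of the status output.
printf 'Slave_IO_Running: Yes\nSlave_SQL_Running: Yes\n' | replication_ok \
    && echo "replication threads are running"
```

On a slave it could be fed with `mysql -uroot -p1 -e 'show slave status\G' | replication_ok`, using the same credentials as the rest of this walkthrough.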

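Set read_only on the two slave servers

The slaves serve read traffic. read_only is set at runtime instead of being written into the configuration file because a slave may be promoted to master at any time.

mysql> set global read_only=1;

Query OK, 0 rows affected (0.00 sec)

The cluster environment is now fully built; what remains is configuring the MHA software.

Configure MHA

Create the MHA working directories and configuration file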

[root@mha ~]# mkdir -p /var/log/masterha/app1

[root@mha ~]# mkdir -p /etc/masterha

Edit

[root@mha ~]# vim /etc/masterha/app1.cnf

[server default]

manager_workdir=/var/log/masterha/app1

master_binlog_dir=/data/mysql/log

#master_ip_failover_script=/usr/bin/master_ip_failover

#master_ip_online_change_script=/usr/bin/master_ip_online_change

user=manager

password=1

ping_interval=1

remote_workdir=/tmp

# repl_user is the replication account, i.e. the user the slaves connect as

repl_user=hello

# password of the replication account

repl_password=1

report_script=/usr/local/send_report

shutdown_script=""

ssh_user=root

[server1]

hostname=192.168.1.2

port=3306

[server2]

hostname=192.168.1.3

port=3306

[server3]

hostname=192.168.1.4

port=3306
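The `[serverN]` sections differ only in the address, so they can be generated from a host list. This is only a sketch: `print_server_stanzas` is a hypothetical helper, and its output would still need to be appended to the `[server default]` part of app1.cnf by hand.

```shell
#!/usr/bin/env bash
# print_server_stanzas PORT HOST...: emit one [serverN] section per host,
# numbered from 1 in the order given.
print_server_stanzas() {
    local port="$1" n=1 host
    shift
    for host in "$@"; do
        printf '[server%d]\nhostname=%s\nport=%s\n\n' "$n" "$host" "$port"
        n=$((n + 1))
    done
}

# Demo with the three MySQL hosts from the table at the top.
print_server_stanzas 3306 192.168.1.2 192.168.1.3 192.168.1.4
```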

Check the SSH configuration

[root@mha ~]# masterha_check_ssh --conf=/etc/masterha/app1.cnf

Wed Apr 6 19:46:50 2022 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Apr 6 19:46:50 2022 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Apr 6 19:46:50 2022 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Apr 6 19:46:50 2022 - [info] Starting SSH connection tests..

Wed Apr 6 19:46:51 2022 - [debug]

Wed Apr 6 19:46:50 2022 - [debug] Connecting via SSH from root@192.168.1.2(192.168.1.2:22) to root@192.168.1.3(192.168.1.3:22)..

Wed Apr 6 19:46:50 2022 - [debug] ok.

Wed Apr 6 19:46:50 2022 - [debug] Connecting via SSH from root@192.168.1.2(192.168.1.2:22) to root@192.168.1.4(192.168.1.4:22)..

Wed Apr 6 19:46:50 2022 - [debug] ok.

Wed Apr 6 19:46:51 2022 - [debug]

Wed Apr 6 19:46:51 2022 - [debug] Connecting via SSH from root@192.168.1.3(192.168.1.3:22) to root@192.168.1.2(192.168.1.2:22)..

Wed Apr 6 19:46:51 2022 - [debug] ok.

Wed Apr 6 19:46:51 2022 - [debug] Connecting via SSH from root@192.168.1.3(192.168.1.3:22) to root@192.168.1.4(192.168.1.4:22)..

Wed Apr 6 19:46:51 2022 - [debug] ok.

Wed Apr 6 19:46:52 2022 - [debug]

Wed Apr 6 19:46:51 2022 - [debug] Connecting via SSH from root@192.168.1.4(192.168.1.4:22) to root@192.168.1.2(192.168.1.2:22)..

Wed Apr 6 19:46:51 2022 - [debug] ok.

Wed Apr 6 19:46:51 2022 - [debug] Connecting via SSH from root@192.168.1.4(192.168.1.4:22) to root@192.168.1.3(192.168.1.3:22)..

Wed Apr 6 19:46:51 2022 - [debug] ok.

Wed Apr 6 19:46:52 2022 - [info] All SSH connection tests passed successfully.

Seeing "All SSH connection tests passed successfully." means the check passed.

Check the state of the entire replication environment

[root@mha ~]# masterha_check_repl --conf=/etc/masterha/app1.cnf

Wed Apr 6 21:18:00 2022 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Apr 6 21:18:00 2022 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Apr 6 21:18:00 2022 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Apr 6 21:18:00 2022 - [info] MHA::MasterMonitor version 0.57.

Wed Apr 6 21:18:01 2022 - [info] GTID failover mode = 0

Wed Apr 6 21:18:01 2022 - [info] Dead Servers:

Wed Apr 6 21:18:01 2022 - [info] Alive Servers:

Wed Apr 6 21:18:01 2022 - [info] 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 21:18:01 2022 - [info] 192.168.1.3(192.168.1.3:3306)

Wed Apr 6 21:18:01 2022 - [info] 192.168.1.4(192.168.1.4:3306)

Wed Apr 6 21:18:01 2022 - [info] Alive Slaves:

Wed Apr 6 21:18:01 2022 - [info] 192.168.1.3(192.168.1.3:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 21:18:01 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 21:18:01 2022 - [info] 192.168.1.4(192.168.1.4:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 21:18:01 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 21:18:01 2022 - [info] Current Alive Master: 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 21:18:01 2022 - [info] Checking slave configurations..

Wed Apr 6 21:18:01 2022 - [info] Checking replication filtering settings..

Wed Apr 6 21:18:01 2022 - [info] binlog_do_db= , binlog_ignore_db=

Wed Apr 6 21:18:01 2022 - [info] Replication filtering check ok.

Wed Apr 6 21:18:01 2022 - [info] GTID (with auto-pos) is not supported

Wed Apr 6 21:18:01 2022 - [info] Starting SSH connection tests..

Wed Apr 6 21:18:03 2022 - [info] All SSH connection tests passed successfully.

Wed Apr 6 21:18:03 2022 - [info] Checking MHA Node version..

Wed Apr 6 21:18:03 2022 - [info] Version check ok.

Wed Apr 6 21:18:03 2022 - [info] Checking SSH publickey authentication settings on the current master..

Wed Apr 6 21:18:03 2022 - [info] HealthCheck: SSH to 192.168.1.2 is reachable.

Wed Apr 6 21:18:04 2022 - [info] Master MHA Node version is 0.57.

Wed Apr 6 21:18:04 2022 - [info] Checking recovery script configurations on 192.168.1.2(192.168.1.2:3306)..

Wed Apr 6 21:18:04 2022 - [info] Executing command: save_binary_logs --command=test --start_pos=4 --binlog_dir=/data/mysql/log --output_file=/tmp/save_binary_logs_test --manager_version=0.57 --start_file=mysql-bin.000001

Wed Apr 6 21:18:04 2022 - [info] Connecting to root@192.168.1.2(192.168.1.2:22)..

Creating /tmp if not exists.. ok.

Checking output directory is accessible or not..

ok.

Binlog found at /data/mysql/log, up to mysql-bin.000001

Wed Apr 6 21:18:04 2022 - [info] Binlog setting check done.

Wed Apr 6 21:18:04 2022 - [info] Checking SSH publickey authentication and checking recovery script configurations on all alive slave servers..

Wed Apr 6 21:18:04 2022 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='manager' --slave_host=192.168.1.3 --slave_ip=192.168.1.3 --slave_port=3306 --workdir=/tmp --target_version=5.7.26-log --manager_version=0.57 --relay_log_info=/data/mysql/data/relay-log.info --relay_dir=/data/mysql/data/ --slave_pass=xxx

Wed Apr 6 21:18:04 2022 - [info] Connecting to root@192.168.1.3(192.168.1.3:22)..

Checking slave recovery environment settings..

Opening /data/mysql/data/relay-log.info ... ok.

Relay log found at /data/mysql/log, up to relay-bin.000002

Temporary relay log file is /data/mysql/log/relay-bin.000002

Testing mysql connection and privileges..mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Wed Apr 6 21:18:04 2022 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='manager' --slave_host=192.168.1.4 --slave_ip=192.168.1.4 --slave_port=3306 --workdir=/tmp --target_version=5.7.26-log --manager_version=0.57 --relay_log_info=/data/mysql/data/relay-log.info --relay_dir=/data/mysql/data/ --slave_pass=xxx

Wed Apr 6 21:18:04 2022 - [info] Connecting to root@192.168.1.4(192.168.1.4:22)..

Checking slave recovery environment settings..

Opening /data/mysql/data/relay-log.info ... ok.

Relay log found at /data/mysql/log, up to relay-bin.000002

Temporary relay log file is /data/mysql/log/relay-bin.000002

Testing mysql connection and privileges..mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Wed Apr 6 21:18:04 2022 - [info] Slaves settings check done.

Wed Apr 6 21:18:04 2022 - [info]

192.168.1.2(192.168.1.2:3306) (current master)

+--192.168.1.3(192.168.1.3:3306)

+--192.168.1.4(192.168.1.4:3306)

Wed Apr 6 21:18:04 2022 - [info] Checking replication health on 192.168.1.3..

Wed Apr 6 21:18:04 2022 - [info] ok.

Wed Apr 6 21:18:04 2022 - [info] Checking replication health on 192.168.1.4..

Wed Apr 6 21:18:04 2022 - [info] ok.

Wed Apr 6 21:18:04 2022 - [warning] master_ip_failover_script is not defined.

Wed Apr 6 21:18:04 2022 - [warning] shutdown_script is not defined.

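Wed Apr 6 21:18:04 2022 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

"MySQL Replication Health is OK." means the check passed. (If it reports "NOT OK", the check failed; try re-creating the grants and setting up replication again.)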
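Since the verdict is the last line of a long log, a small filter can turn it into an exit status for scripts. This is a sketch only: `repl_health_ok` is a name invented here, and it matches the "is OK" / "is NOT OK" wording quoted above.

```shell
#!/usr/bin/env bash
# repl_health_ok: read masterha_check_repl output on stdin; succeed only if
# it reports that replication health is OK.
repl_health_ok() {
    local out
    out="$(cat)"
    case "$out" in
        *"MySQL Replication Health is NOT OK"*) return 1 ;;
        *"MySQL Replication Health is OK"*)     return 0 ;;
        *)                                      return 1 ;;
    esac
}

# Demo on the final line of the log shown above.
echo 'MySQL Replication Health is OK.' | repl_health_ok && echo "replication check passed"
```

On the manager host the input would come from `masterha_check_repl --conf=/etc/masterha/app1.cnf 2>&1 | repl_health_ok`.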

Check the MHA manager status

Start MHA monitoring

[root@mha ~]# nohup masterha_manager --conf=/etc/masterha/app1.cnf \

> --remove_dead_master_conf --ignore_last_failover < /dev/null > \

> /var/log/masterha/app1/manager.log 2>&1 &

[1] 5180

[root@mha ~]# masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:5180) is running(0:PING_OK), master:192.168.1.2

[root@mha ~]#

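The status is normal, and the master's IP address is shown.

Note: when the manager is healthy the command reports "PING_OK"; otherwise it reports "NOT_RUNNING", which means MHA monitoring has not been started.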
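The status line also carries the current master address, which is handy after a failover. The sketch below extracts it; `current_master` is a hypothetical helper that relies on the line format shown above (`... is running(0:PING_OK), master:ADDRESS`).

```shell
#!/usr/bin/env bash
# current_master: read masterha_check_status output on stdin; print the
# master address if the manager reports PING_OK, otherwise fail.
current_master() {
    local line
    line="$(cat)"
    case "$line" in
        *PING_OK*) ;;
        *) return 1 ;;
    esac
    # Strip everything up to and including the last "master:".
    printf '%s\n' "${line##*master:}"
}

# Demo on the status line from this walkthrough.
echo 'app1 (pid:5180) is running(0:PING_OK), master:192.168.1.2' | current_master
```

It could be combined with the real command as `masterha_check_status --conf=/etc/masterha/app1.cnf | current_master`.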

View the startup log

[root@mha ~]# tail -20 /var/log/masterha/app1/manager.log

Checking slave recovery environment settings..

Opening /data/mysql/data/relay-log.info ... ok.

Relay log found at /data/mysql/log, up to relay-bin.000002

Temporary relay log file is /data/mysql/log/relay-bin.000002

Testing mysql connection and privileges..mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Wed Apr 6 21:22:57 2022 - [info] Slaves settings check done.

Wed Apr 6 21:22:57 2022 - [info]

192.168.1.2(192.168.1.2:3306) (current master)

+--192.168.1.3(192.168.1.3:3306)

+--192.168.1.4(192.168.1.4:3306)

Wed Apr 6 21:22:57 2022 - [warning] master_ip_failover_script is not defined.

Wed Apr 6 21:22:57 2022 - [warning] shutdown_script is not defined.

Wed Apr 6 21:22:57 2022 - [info] Set master ping interval 1 seconds.

Wed Apr 6 21:22:57 2022 - [warning] secondary_check_script is not defined. It is highly recommended setting it to check master reachability from two or more routes.

Wed Apr 6 21:22:57 2022 - [info] Starting ping health check on 192.168.1.2(192.168.1.2:3306)..

Wed Apr 6 21:22:57 2022 - [info] Ping(SELECT) succeeded, waiting until MySQL doesn't respond..

[root@mha ~]#

The line "Ping(SELECT) succeeded, waiting until MySQL doesn't respond.." shows that the whole system is now being monitored.

The whole MHA setup is now complete. Next, create the VIP.

Stop monitoring

[root@mha ~]# masterha_stop --conf=/etc/masterha/app1.cnf

Stopped app1 successfully.

[1]+ Exit 1 nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1

[root@mha ~]#

Create the VIP on the master

Create the VIP and check it

[root@mysql-01 ~]# ifconfig ens33:1 192.168.1.200 netmask 255.255.255.0 up

[root@mysql-01 ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.1.2 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::8513:8f3a:aa86:c310 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:4a:56:1e txqueuelen 1000 (Ethernet)

RX packets 17075 bytes 7135592 (6.8 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 25434 bytes 46457937 (44.3 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.1.200 netmask 255.255.255.0 broadcast 192.168.1.255

ether 00:0c:29:4a:56:1e txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 193 bytes 38912 (38.0 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 193 bytes 38912 (38.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@mysql-01 ~]#
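ifconfig comes from the older net-tools package; the same labelled address can also be managed with `ip` from iproute2. The sketch below only prints the commands (a dry run), so nothing changes when it is executed. `vip_commands` is a name invented for this example, and the interface, address, and label are the ones used above.

```shell
#!/usr/bin/env bash
# vip_commands IFACE CIDR LABEL: print the iproute2 commands that would add
# and later remove a labelled secondary address. Nothing is executed.
vip_commands() {
    local iface="$1" cidr="$2" label="$3"
    printf 'ip addr add %s dev %s label %s\n' "$cidr" "$iface" "$label"
    printf 'ip addr del %s dev %s\n' "$cidr" "$iface"
}

# Demo: the VIP from this walkthrough on ens33.
vip_commands ens33 192.168.1.200/24 ens33:1
```

The failover script later in this walkthrough still uses ifconfig; this is only an alternative way to add or remove the address by hand.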

Enable the script in the main configuration file

[root@mha ~]# vim /etc/masterha/app1.cnf

[server default]

manager_workdir=/var/log/masterha/app1

manager_log=/var/log/masterha/app1/manager.log

master_binlog_dir=/data/mysql/log

# uncomment the following line

master_ip_failover_script=/usr/bin/master_ip_failover

#master_ip_online_change_script=/usr/bin/master_ip_online_change

user=manager

password=1

ping_interval=1

remote_workdir=/tmp

repl_user=hello

repl_password=1

report_script=/usr/local/send_report

shutdown_script=""

ssh_user=root

[server1]

hostname=192.168.1.2

port=3306

[server2]

hostname=192.168.1.3

port=3306

[server3]

hostname=192.168.1.4

port=3306

Write the script /usr/bin/master_ip_failover (requires knowing Perl)

[root@mha ~]# vim /usr/bin/master_ip_failover

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

    $command, $ssh_user, $orig_master_host, $orig_master_ip,

    $orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

my $vip = '192.168.1.200/24'; # this address must be the VIP configured above

my $key = '1';

my $ssh_start_vip = "/sbin/ifconfig ens33:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig ens33:$key down";

GetOptions(

    'command=s' => \$command,

    'ssh_user=s' => \$ssh_user,

    'orig_master_host=s' => \$orig_master_host,

    'orig_master_ip=s' => \$orig_master_ip,

    'orig_master_port=i' => \$orig_master_port,

    'new_master_host=s' => \$new_master_host,

    'new_master_ip=s' => \$new_master_ip,

    'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

    print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

    if ( $command eq "stop" || $command eq "stopssh" ) {

        my $exit_code = 1;

        eval {

            print "Disabling the VIP on old master: $orig_master_host \n";

            &stop_vip();

            $exit_code = 0;

        };

        if ($@) {

            warn "Got Error: $@\n";

            exit $exit_code;

        }

        exit $exit_code;

    }

    elsif ( $command eq "start" ) {

        my $exit_code = 10;

        eval {

            print "Enabling the VIP - $vip on the new master - $new_master_host \n";

            &start_vip();

            $exit_code = 0;

        };

        if ($@) {

            warn $@;

            exit $exit_code;

        }

        exit $exit_code;

    }

    elsif ( $command eq "status" ) {

        print "Checking the Status of the script.. OK \n";

        #`ssh $ssh_user\@cluster1 \" $ssh_start_vip \"`;

        exit 0;

    }

    else {

        &usage();

        exit 1;

    }

}

# A simple system call that enables the VIP on the new master

sub start_vip() {

    `ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

# A simple system call that disables the VIP on the old master

sub stop_vip() {

    `ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

    print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

Make the script executable

[root@mha ~]# chmod +x /usr/bin/master_ip_failover

Check the SSH configuration

[root@mha ~]# masterha_check_ssh --conf=/etc/masterha/app1.cnf

Wed Apr 6 22:00:36 2022 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Apr 6 22:00:36 2022 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Apr 6 22:00:36 2022 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Apr 6 22:00:36 2022 - [info] Starting SSH connection tests..

Wed Apr 6 22:00:36 2022 - [debug]

Wed Apr 6 22:00:36 2022 - [debug] Connecting via SSH from root@192.168.1.2(192.168.1.2:22) to root@192.168.1.3(192.168.1.3:22)..

Wed Apr 6 22:00:36 2022 - [debug] ok.

Wed Apr 6 22:00:36 2022 - [debug] Connecting via SSH from root@192.168.1.2(192.168.1.2:22) to root@192.168.1.4(192.168.1.4:22)..

Wed Apr 6 22:00:36 2022 - [debug] ok.

Wed Apr 6 22:00:37 2022 - [debug]

Wed Apr 6 22:00:36 2022 - [debug] Connecting via SSH from root@192.168.1.3(192.168.1.3:22) to root@192.168.1.2(192.168.1.2:22)..

Wed Apr 6 22:00:37 2022 - [debug] ok.

Wed Apr 6 22:00:37 2022 - [debug] Connecting via SSH from root@192.168.1.3(192.168.1.3:22) to root@192.168.1.4(192.168.1.4:22)..

Wed Apr 6 22:00:37 2022 - [debug] ok.

Wed Apr 6 22:00:37 2022 - [debug]

Wed Apr 6 22:00:37 2022 - [debug] Connecting via SSH from root@192.168.1.4(192.168.1.4:22) to root@192.168.1.2(192.168.1.2:22)..

Wed Apr 6 22:00:37 2022 - [debug] ok.

Wed Apr 6 22:00:37 2022 - [debug] Connecting via SSH from root@192.168.1.4(192.168.1.4:22) to root@192.168.1.3(192.168.1.3:22)..

Wed Apr 6 22:00:37 2022 - [debug] ok.

Wed Apr 6 22:00:37 2022 - [info] All SSH connection tests passed successfully.

[root@mha ~]#

Check the entire replication environment

[root@mha ~]# masterha_check_repl --conf=/etc/masterha/app1.cnf

Wed Apr 6 22:03:46 2022 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Apr 6 22:03:46 2022 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Apr 6 22:03:46 2022 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Apr 6 22:03:46 2022 - [info] MHA::MasterMonitor version 0.57.

Wed Apr 6 22:03:47 2022 - [info] GTID failover mode = 0

Wed Apr 6 22:03:47 2022 - [info] Dead Servers:

Wed Apr 6 22:03:47 2022 - [info] Alive Servers:

Wed Apr 6 22:03:47 2022 - [info] 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:03:47 2022 - [info] 192.168.1.3(192.168.1.3:3306)

Wed Apr 6 22:03:47 2022 - [info] 192.168.1.4(192.168.1.4:3306)

Wed Apr 6 22:03:47 2022 - [info] Alive Slaves:

Wed Apr 6 22:03:47 2022 - [info] 192.168.1.3(192.168.1.3:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:03:47 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:03:47 2022 - [info] 192.168.1.4(192.168.1.4:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:03:47 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:03:47 2022 - [info] Current Alive Master: 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:03:47 2022 - [info] Checking slave configurations..

Wed Apr 6 22:03:47 2022 - [info] Checking replication filtering settings..

Wed Apr 6 22:03:47 2022 - [info] binlog_do_db= , binlog_ignore_db=

Wed Apr 6 22:03:47 2022 - [info] Replication filtering check ok.

Wed Apr 6 22:03:47 2022 - [info] GTID (with auto-pos) is not supported

Wed Apr 6 22:03:47 2022 - [info] Starting SSH connection tests..

Wed Apr 6 22:03:49 2022 - [info] All SSH connection tests passed successfully.

Wed Apr 6 22:03:49 2022 - [info] Checking MHA Node version..

Wed Apr 6 22:03:49 2022 - [info] Version check ok.

Wed Apr 6 22:03:49 2022 - [info] Checking SSH publickey authentication settings on the current master..

Wed Apr 6 22:03:49 2022 - [info] HealthCheck: SSH to 192.168.1.2 is reachable.

Wed Apr 6 22:03:49 2022 - [info] Master MHA Node version is 0.57.

Wed Apr 6 22:03:49 2022 - [info] Checking recovery script configurations on 192.168.1.2(192.168.1.2:3306)..

Wed Apr 6 22:03:49 2022 - [info] Executing command: save_binary_logs --command=test --start_pos=4 --binlog_dir=/data/mysql/log --output_file=/tmp/save_binary_logs_test --manager_version=0.57 --start_file=mysql-bin.000001

Wed Apr 6 22:03:49 2022 - [info] Connecting to root@192.168.1.2(192.168.1.2:22)..

Creating /tmp if not exists.. ok.

Checking output directory is accessible or not..

ok.

Binlog found at /data/mysql/log, up to mysql-bin.000001

Wed Apr 6 22:03:49 2022 - [info] Binlog setting check done.

Wed Apr 6 22:03:49 2022 - [info] Checking SSH publickey authentication and checking recovery script configurations on all alive slave servers..

Wed Apr 6 22:03:49 2022 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='manager' --slave_host=192.168.1.3 --slave_ip=192.168.1.3 --slave_port=3306 --workdir=/tmp --target_version=5.7.26-log --manager_version=0.57 --relay_log_info=/data/mysql/data/relay-log.info --relay_dir=/data/mysql/data/ --slave_pass=xxx

Wed Apr 6 22:03:49 2022 - [info] Connecting to root@192.168.1.3(192.168.1.3:22)..

Checking slave recovery environment settings..

Opening /data/mysql/data/relay-log.info ... ok.

Relay log found at /data/mysql/log, up to relay-bin.000002

Temporary relay log file is /data/mysql/log/relay-bin.000002

Testing mysql connection and privileges..mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Wed Apr 6 22:03:50 2022 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='manager' --slave_host=192.168.1.4 --slave_ip=192.168.1.4 --slave_port=3306 --workdir=/tmp --target_version=5.7.26-log --manager_version=0.57 --relay_log_info=/data/mysql/data/relay-log.info --relay_dir=/data/mysql/data/ --slave_pass=xxx

Wed Apr 6 22:03:50 2022 - [info] Connecting to root@192.168.1.4(192.168.1.4:22)..

Checking slave recovery environment settings..

Opening /data/mysql/data/relay-log.info ... ok.

Relay log found at /data/mysql/log, up to relay-bin.000002

Temporary relay log file is /data/mysql/log/relay-bin.000002

Testing mysql connection and privileges..mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Wed Apr 6 22:03:50 2022 - [info] Slaves settings check done.

Wed Apr 6 22:03:50 2022 - [info]

192.168.1.2(192.168.1.2:3306) (current master)

+--192.168.1.3(192.168.1.3:3306)

+--192.168.1.4(192.168.1.4:3306)

Wed Apr 6 22:03:50 2022 - [info] Checking replication health on 192.168.1.3..

Wed Apr 6 22:03:50 2022 - [info] ok.

Wed Apr 6 22:03:50 2022 - [info] Checking replication health on 192.168.1.4..

Wed Apr 6 22:03:50 2022 - [info] ok.

Wed Apr 6 22:03:50 2022 - [info] Checking master_ip_failover_script status:

Wed Apr 6 22:03:50 2022 - [info] /usr/bin/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.1.2 --orig_master_ip=192.168.1.2 --orig_master_port=3306

IN SCRIPT TEST====/sbin/ifconfig ens33:1 down==/sbin/ifconfig ens33:1 192.168.1.200/24===

Checking the Status of the script.. OK

Wed Apr 6 22:03:50 2022 - [info] OK.

Wed Apr 6 22:03:50 2022 - [warning] shutdown_script is not defined.

Wed Apr 6 22:03:50 2022 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

[root@mha ~]#
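For scripted use, the check above can be reduced to a pass/fail result. The following is a minimal bash sketch, assuming a healthy run ends with the line "MySQL Replication Health is OK." as in the output above. The function name repl_health_ok is hypothetical and is not part of MHA.

```shell
# Hypothetical helper (not part of MHA): reads masterha_check_repl output on
# stdin and succeeds only if the health summary line shown above is present.
repl_health_ok() {
  grep -q 'MySQL Replication Health is OK\.'
}

# Usage on the manager host (same config path as in the command above):
#   masterha_check_repl --conf=/etc/masterha/app1.cnf 2>&1 | repl_health_ok \
#     && echo "replication healthy" || echo "replication check failed"
```

Matching on the summary line rather than on individual checks keeps the helper short, at the cost of depending on the exact wording of that line.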

Start monitoring

[root@mha ~]# nohup masterha_manager --conf=/etc/masterha/app1.cnf \

> --remove_dead_master_conf --ignore_last_failover < /dev/null > \

> /var/log/masterha/app1/manager.log 2>&1 &

[1] 5738

Check that the MHA manager is running normally

[root@mha ~]# masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:5738) is running(0:PING_OK), master:192.168.1.2

[root@mha ~]#
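If another script needs to know which host the manager currently treats as master, the status line can be parsed. This is a small bash sketch that assumes the line format shown above; mha_current_master is a hypothetical name, not an MHA command.

```shell
# Hypothetical helper: extract the current master from a masterha_check_status
# line such as "app1 (pid:5738) is running(0:PING_OK), master:192.168.1.2".
# Prints nothing if the line does not report PING_OK.
mha_current_master() {
  sed -n 's/^.* is running(0:PING_OK), master:\([^[:space:]]*\).*$/\1/p'
}

# Usage:
#   masterha_check_status --conf=/etc/masterha/app1.cnf | mha_current_master
```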

View the startup log

[root@mha ~]# tail -20 /var/log/masterha/app1/manager.log

Cleaning up test file(s).. done.

Wed Apr 6 22:04:44 2022 - [info] Slaves settings check done.

Wed Apr 6 22:04:44 2022 - [info]

192.168.1.2(192.168.1.2:3306) (current master)

+--192.168.1.3(192.168.1.3:3306)

+--192.168.1.4(192.168.1.4:3306)

Wed Apr 6 22:04:44 2022 - [info] Checking master_ip_failover_script status:

Wed Apr 6 22:04:44 2022 - [info] /usr/bin/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.1.2 --orig_master_ip=192.168.1.2 --orig_master_port=3306

IN SCRIPT TEST====/sbin/ifconfig ens33:1 down==/sbin/ifconfig ens33:1 192.168.1.200/24===

Checking the Status of the script.. OK

Wed Apr 6 22:04:44 2022 - [info] OK.

Wed Apr 6 22:04:44 2022 - [warning] shutdown_script is not defined.

Wed Apr 6 22:04:44 2022 - [info] Set master ping interval 1 seconds.

Wed Apr 6 22:04:44 2022 - [warning] secondary_check_script is not defined. It is highly recommended setting it to check master reachability from two or more routes.

Wed Apr 6 22:04:44 2022 - [info] Starting ping health check on 192.168.1.2(192.168.1.2:3306)..

Wed Apr 6 22:04:44 2022 - [info] Ping(SELECT) succeeded, waiting until MySQL doesn't respond..

[root@mha ~]#

Open a new terminal window and follow the log to watch whether the VIP and the master role fail over

[root@mha ~]# tail -0f /var/log/masterha/app1/manager.log

Wed Apr 6 22:13:40 2022 - [warning] Got error on MySQL select ping: 2006 (MySQL server has gone away)

Wed Apr 6 22:13:40 2022 - [info] Executing SSH check script: save_binary_logs --command=test --start_pos=4 --binlog_dir=/data/mysql/log --output_file=/tmp/save_binary_logs_test --manager_version=0.57 --binlog_prefix=mysql-bin

Wed Apr 6 22:13:40 2022 - [info] HealthCheck: SSH to 192.168.1.2 is reachable.

Wed Apr 6 22:13:41 2022 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '192.168.1.2' (111))

Wed Apr 6 22:13:41 2022 - [warning] Connection failed 2 time(s)..

Wed Apr 6 22:13:42 2022 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '192.168.1.2' (111))

Wed Apr 6 22:13:42 2022 - [warning] Connection failed 3 time(s)..

Wed Apr 6 22:13:43 2022 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '192.168.1.2' (111))

Wed Apr 6 22:13:43 2022 - [warning] Connection failed 4 time(s)..

Wed Apr 6 22:13:43 2022 - [warning] Master is not reachable from health checker!

Wed Apr 6 22:13:43 2022 - [warning] Master 192.168.1.2(192.168.1.2:3306) is not reachable!

Wed Apr 6 22:13:43 2022 - [warning] SSH is reachable.

Wed Apr 6 22:13:43 2022 - [info] Connecting to a master server failed. Reading configuration file /etc/masterha_default.cnf and /etc/masterha/app1.cnf again, and trying to connect to all servers to check server status..

Wed Apr 6 22:13:43 2022 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Apr 6 22:13:43 2022 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Apr 6 22:13:43 2022 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Apr 6 22:13:44 2022 - [info] GTID failover mode = 0

Wed Apr 6 22:13:44 2022 - [info] Dead Servers:

Wed Apr 6 22:13:44 2022 - [info] 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:44 2022 - [info] Alive Servers:

Wed Apr 6 22:13:44 2022 - [info] 192.168.1.3(192.168.1.3:3306)

Wed Apr 6 22:13:44 2022 - [info] 192.168.1.4(192.168.1.4:3306)

Wed Apr 6 22:13:44 2022 - [info] Alive Slaves:

Wed Apr 6 22:13:44 2022 - [info] 192.168.1.3(192.168.1.3:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:13:44 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:44 2022 - [info] 192.168.1.4(192.168.1.4:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:13:44 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:44 2022 - [info] Checking slave configurations..

Wed Apr 6 22:13:44 2022 - [info] read_only=1 is not set on slave 192.168.1.3(192.168.1.3:3306).

Wed Apr 6 22:13:44 2022 - [info] read_only=1 is not set on slave 192.168.1.4(192.168.1.4:3306).

Wed Apr 6 22:13:44 2022 - [info] Checking replication filtering settings..

Wed Apr 6 22:13:44 2022 - [info] Replication filtering check ok.

Wed Apr 6 22:13:44 2022 - [info] Master is down!

Wed Apr 6 22:13:44 2022 - [info] Terminating monitoring script.

Wed Apr 6 22:13:44 2022 - [info] Got exit code 20 (Master dead).

Wed Apr 6 22:13:44 2022 - [info] MHA::MasterFailover version 0.57.

Wed Apr 6 22:13:44 2022 - [info] Starting master failover.

Wed Apr 6 22:13:44 2022 - [info]

Wed Apr 6 22:13:44 2022 - [info] * Phase 1: Configuration Check Phase..

Wed Apr 6 22:13:44 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] GTID failover mode = 0

Wed Apr 6 22:13:45 2022 - [info] Dead Servers:

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:45 2022 - [info] Checking master reachability via MySQL(double check)...

Wed Apr 6 22:13:45 2022 - [info] ok.

Wed Apr 6 22:13:45 2022 - [info] Alive Servers:

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.3(192.168.1.3:3306)

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.4(192.168.1.4:3306)

Wed Apr 6 22:13:45 2022 - [info] Alive Slaves:

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.3(192.168.1.3:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:13:45 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.4(192.168.1.4:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:13:45 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:45 2022 - [info] Starting Non-GTID based failover.

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] ** Phase 1: Configuration Check Phase completed.

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] * Phase 2: Dead Master Shutdown Phase..

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] Forcing shutdown so that applications never connect to the current master..

Wed Apr 6 22:13:45 2022 - [info] Executing master IP deactivation script:

Wed Apr 6 22:13:45 2022 - [info] /usr/bin/master_ip_failover --orig_master_host=192.168.1.2 --orig_master_ip=192.168.1.2 --orig_master_port=3306 --command=stopssh --ssh_user=root

IN SCRIPT TEST====/sbin/ifconfig ens33:1 down==/sbin/ifconfig ens33:1 192.168.1.200/24===

Disabling the VIP on old master: 192.168.1.2

SIOCSIFFLAGS: Cannot assign requested address

Wed Apr 6 22:13:45 2022 - [info] done.

Wed Apr 6 22:13:45 2022 - [warning] shutdown_script is not set. Skipping explicit shutting down of the dead master.

Wed Apr 6 22:13:45 2022 - [info] * Phase 2: Dead Master Shutdown Phase completed.

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] * Phase 3: Master Recovery Phase..

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] * Phase 3.1: Getting Latest Slaves Phase..

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] The latest binary log file/position on all slaves is mysql-bin.000002:154

Wed Apr 6 22:13:45 2022 - [info] Latest slaves (Slaves that received relay log files to the latest):

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.3(192.168.1.3:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:13:45 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.4(192.168.1.4:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:13:45 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:45 2022 - [info] The oldest binary log file/position on all slaves is mysql-bin.000002:154

Wed Apr 6 22:13:45 2022 - [info] Oldest slaves:

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.3(192.168.1.3:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:13:45 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:45 2022 - [info] 192.168.1.4(192.168.1.4:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Wed Apr 6 22:13:45 2022 - [info] Replicating from 192.168.1.2(192.168.1.2:3306)

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] * Phase 3.2: Saving Dead Master's Binlog Phase..

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] Fetching dead master's binary logs..

Wed Apr 6 22:13:45 2022 - [info] Executing command on the dead master 192.168.1.2(192.168.1.2:3306): save_binary_logs --command=save --start_file=mysql-bin.000002 --start_pos=154 --binlog_dir=/data/mysql/log --output_file=/tmp/saved_master_binlog_from_192.168.1.2_3306_20220406221344.binlog --handle_raw_binlog=1 --disable_log_bin=0 --manager_version=0.57

Creating /tmp if not exists.. ok.

Concat binary/relay logs from mysql-bin.000002 pos 154 to mysql-bin.000002 EOF into /tmp/saved_master_binlog_from_192.168.1.2_3306_20220406221344.binlog ..

Binlog Checksum enabled

Dumping binlog format description event, from position 0 to 154.. ok.

No need to dump effective binlog data from /data/mysql/log/mysql-bin.000002 (pos starts 154, filesize 154). Skipping.

Binlog Checksum enabled

/tmp/saved_master_binlog_from_192.168.1.2_3306_20220406221344.binlog has no effective data events.

Event not exists.

Wed Apr 6 22:13:45 2022 - [info] Additional events were not found from the orig master. No need to save.

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] * Phase 3.3: Determining New Master Phase..

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] Finding the latest slave that has all relay logs for recovering other slaves..

Wed Apr 6 22:13:45 2022 - [info] All slaves received relay logs to the same position. No need to resync each other.

Wed Apr 6 22:13:45 2022 - [info] Searching new master from slaves..

Wed Apr 6 22:13:45 2022 - [info] Candidate masters from the configuration file:

Wed Apr 6 22:13:45 2022 - [info] Non-candidate masters:

Wed Apr 6 22:13:45 2022 - [info] New master is 192.168.1.3(192.168.1.3:3306)

Wed Apr 6 22:13:45 2022 - [info] Starting master failover..

Wed Apr 6 22:13:45 2022 - [info]

From:

192.168.1.2(192.168.1.2:3306) (current master)

+--192.168.1.3(192.168.1.3:3306)

+--192.168.1.4(192.168.1.4:3306)

To:

192.168.1.3(192.168.1.3:3306) (new master)

+--192.168.1.4(192.168.1.4:3306)

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] * Phase 3.3: New Master Diff Log Generation Phase..

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] This server has all relay logs. No need to generate diff files from the latest slave.

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] * Phase 3.4: Master Log Apply Phase..

Wed Apr 6 22:13:45 2022 - [info]

Wed Apr 6 22:13:45 2022 - [info] *NOTICE: If any error happens from this phase, manual recovery is needed.

Wed Apr 6 22:13:45 2022 - [info] Starting recovery on 192.168.1.3(192.168.1.3:3306)..

Wed Apr 6 22:13:45 2022 - [info] This server has all relay logs. Waiting all logs to be applied..

Wed Apr 6 22:13:45 2022 - [info] done.

Wed Apr 6 22:13:45 2022 - [info] All relay logs were successfully applied.

Wed Apr 6 22:13:45 2022 - [info] Getting new master's binlog name and position..

Wed Apr 6 22:13:45 2022 - [info] mysql-bin.000002:154

Wed Apr 6 22:13:45 2022 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='192.168.1.3', MASTER_PORT=3306, MASTER_LOG_FILE='mysql-bin.000002', MASTER_LOG_POS=154, MASTER_USER='hello', MASTER_PASSWORD='xxx';

Wed Apr 6 22:13:45 2022 - [info] Executing master IP activate script:

Wed Apr 6 22:13:45 2022 - [info] /usr/bin/master_ip_failover --command=start --ssh_user=root --orig_master_host=192.168.1.2 --orig_master_ip=192.168.1.2 --orig_master_port=3306 --new_master_host=192.168.1.3 --new_master_ip=192.168.1.3 --new_master_port=3306 --new_master_user='manager' --new_master_password=xxx

Unknown option: new_master_user

Unknown option: new_master_password

IN SCRIPT TEST====/sbin/ifconfig ens33:1 down==/sbin/ifconfig ens33:1 192.168.1.200/24===

Enabling the VIP - 192.168.1.200/24 on the new master - 192.168.1.3

bash: /sbin/ifconfig: No such file or directory

Wed Apr 6 22:13:46 2022 - [info] OK.

Wed Apr 6 22:13:46 2022 - [info] ** Finished master recovery successfully.

Wed Apr 6 22:13:46 2022 - [info] * Phase 3: Master Recovery Phase completed.

Wed Apr 6 22:13:46 2022 - [info]

Wed Apr 6 22:13:46 2022 - [info] * Phase 4: Slaves Recovery Phase..

Wed Apr 6 22:13:46 2022 - [info]

Wed Apr 6 22:13:46 2022 - [info] * Phase 4.1: Starting Parallel Slave Diff Log Generation Phase..

Wed Apr 6 22:13:46 2022 - [info]

Wed Apr 6 22:13:46 2022 - [info] -- Slave diff file generation on host 192.168.1.4(192.168.1.4:3306) started, pid: 1291. Check tmp log /var/log/masterha/app1/192.168.1.4_3306_20220406221344.log if it takes time..

Wed Apr 6 22:13:47 2022 - [info]

Wed Apr 6 22:13:47 2022 - [info] Log messages from 192.168.1.4 ...

Wed Apr 6 22:13:47 2022 - [info]

Wed Apr 6 22:13:46 2022 - [info] This server has all relay logs. No need to generate diff files from the latest slave.

Wed Apr 6 22:13:47 2022 - [info] End of log messages from 192.168.1.4.

Wed Apr 6 22:13:47 2022 - [info] -- 192.168.1.4(192.168.1.4:3306) has the latest relay log events.

Wed Apr 6 22:13:47 2022 - [info] Generating relay diff files from the latest slave succeeded.

Wed Apr 6 22:13:47 2022 - [info]

Wed Apr 6 22:13:47 2022 - [info] * Phase 4.2: Starting Parallel Slave Log Apply Phase..

Wed Apr 6 22:13:47 2022 - [info]

Wed Apr 6 22:13:47 2022 - [info] -- Slave recovery on host 192.168.1.4(192.168.1.4:3306) started, pid: 1293. Check tmp log /var/log/masterha/app1/192.168.1.4_3306_20220406221344.log if it takes time..

Wed Apr 6 22:13:48 2022 - [info]

Wed Apr 6 22:13:48 2022 - [info] Log messages from 192.168.1.4 ...

Wed Apr 6 22:13:48 2022 - [info]

Wed Apr 6 22:13:47 2022 - [info] Starting recovery on 192.168.1.4(192.168.1.4:3306)..

Wed Apr 6 22:13:47 2022 - [info] This server has all relay logs. Waiting all logs to be applied..

Wed Apr 6 22:13:47 2022 - [info] done.

Wed Apr 6 22:13:47 2022 - [info] All relay logs were successfully applied.

Wed Apr 6 22:13:47 2022 - [info] Resetting slave 192.168.1.4(192.168.1.4:3306) and starting replication from the new master 192.168.1.3(192.168.1.3:3306)..

Wed Apr 6 22:13:47 2022 - [info] Executed CHANGE MASTER.

Wed Apr 6 22:13:47 2022 - [info] Slave started.

Wed Apr 6 22:13:48 2022 - [info] End of log messages from 192.168.1.4.

Wed Apr 6 22:13:48 2022 - [info] -- Slave recovery on host 192.168.1.4(192.168.1.4:3306) succeeded.

Wed Apr 6 22:13:48 2022 - [info] All new slave servers recovered successfully.

Wed Apr 6 22:13:48 2022 - [info]

Wed Apr 6 22:13:48 2022 - [info] * Phase 5: New master cleanup phase..

Wed Apr 6 22:13:48 2022 - [info]

Wed Apr 6 22:13:48 2022 - [info] Resetting slave info on the new master..

Wed Apr 6 22:13:48 2022 - [info] 192.168.1.3: Resetting slave info succeeded.

Wed Apr 6 22:13:48 2022 - [info] Master failover to 192.168.1.3(192.168.1.3:3306) completed successfully.

Wed Apr 6 22:13:48 2022 - [info] Deleted server1 entry from /etc/masterha/app1.cnf .

Wed Apr 6 22:13:48 2022 - [info]

----- Failover Report -----

app1: MySQL Master failover 192.168.1.2(192.168.1.2:3306) to 192.168.1.3(192.168.1.3:3306) succeeded

Master 192.168.1.2(192.168.1.2:3306) is down!

Check MHA Manager logs at mha:/var/log/masterha/app1/manager.log for details.

Started automated(non-interactive) failover.

Invalidated master IP address on 192.168.1.2(192.168.1.2:3306)

The latest slave 192.168.1.3(192.168.1.3:3306) has all relay logs for recovery.

Selected 192.168.1.3(192.168.1.3:3306) as a new master.

192.168.1.3(192.168.1.3:3306): OK: Applying all logs succeeded.

192.168.1.3(192.168.1.3:3306): OK: Activated master IP address.

192.168.1.4(192.168.1.4:3306): This host has the latest relay log events.

Generating relay diff files from the latest slave succeeded.

192.168.1.4(192.168.1.4:3306): OK: Applying all logs succeeded. Slave started, replicating from 192.168.1.3(192.168.1.3:3306)

192.168.1.3(192.168.1.3:3306): Resetting slave info succeeded.

Master failover to 192.168.1.3(192.168.1.3:3306) completed successfully.

Wed Apr 6 22:13:48 2022 - [info] Sending mail..

sh: /usr/local/send_report: No such file or directory

Wed Apr 6 22:13:48 2022 - [error][/usr/share/perl5/vendor_perl/MHA/MasterFailover.pm, ln2066] Failed to send mail with return code 127:0

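The log shows that the failover completed: the master role moved from 192.168.1.2 to 192.168.1.3, and 192.168.1.4 now replicates from the new master. Note, however, that the VIP activation step printed "bash: /sbin/ifconfig: No such file or directory" on the new master, so confirm on 192.168.1.3 that 192.168.1.200 is actually bound before treating the VIP as moved. The mail error at the end only means that the report script /usr/local/send_report does not exist; it does not affect the failover itself. With that, the MHA setup is complete.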
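The result of the failover can be double-checked from the manager host. The sketch below is an illustration in bash under two assumptions: the log contains a "New master is ..." line in the format shown above, and the iproute2 "ip" command is available on the MySQL hosts. Both function names are hypothetical.

```shell
# Hypothetical helpers, not part of MHA.

# Print the host that MHA promoted, read from manager.log text on stdin.
new_master_from_log() {
  sed -n 's/^.*\[info\] New master is \([0-9.]*\)(.*$/\1/p'
}

# Succeed if the given address appears in "ip -o -4 addr show" output on stdin.
has_vip() {
  grep -qF " inet $1/"
}

# Usage from the manager host:
#   new=$(new_master_from_log < /var/log/masterha/app1/manager.log | tail -n 1)
#   ssh "root@$new" ip -o -4 addr show | has_vip 192.168.1.200 \
#     && echo "VIP is on $new" || echo "VIP is missing on $new"
```

Checking the address list directly is more reliable than relying on the "OK." that MHA logs after the activation script, because that message only reflects the script's exit status.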

Set up the Ceph cluster

| Hostname | IP |
|---|---|
| ceph01 | 192.168.1.6 |
| ceph02 | 192.168.1.7 |
| ceph03 | 192.168.1.8 |

Add three 100 GB disks to each of these three servers.

Update the host time

The time must be synchronized on every host.

[root@ceph01 ~]# ntpdate ntp1.aliyun.com

6 Apr 15:34:50 ntpdate[1467]: step time server 120.25.115.20 offset -28798.923817 sec

[root@ceph01 ~]#

Set up a scheduled task

[root@ceph01 ~]# crontab -l

30 * * * * ntpdate ntp1.aliyun.com
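Because the same cron entry is needed on every host, it can be convenient to add it without opening an editor. The following bash sketch shows one way to do that idempotently; ensure_cron_line is a hypothetical helper name, and the usage line assumes the entry shown above.

```shell
# Hypothetical helper: reads an existing crontab on stdin and prints it with
# the given entry appended, unless an identical line is already present.
ensure_cron_line() {
  local entry=$1 current
  current=$(cat)
  if [ -n "$current" ]; then
    printf '%s\n' "$current"
  fi
  if ! printf '%s\n' "$current" | grep -qxF -- "$entry"; then
    printf '%s\n' "$entry"
  fi
  return 0
}

# Usage (run on each host):
#   crontab -l 2>/dev/null | ensure_cron_line '30 * * * * ntpdate ntp1.aliyun.com' | crontab -
```

Running the usage line twice leaves a single copy of the entry, so it is safe to repeat.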

Edit the hosts file

First, set the hostname on each host.

[root@ceph01 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.6 ceph01

192.168.1.7 ceph02

192.168.1.8 ceph03
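Hand-typed hosts entries are easy to get wrong, so they can also be generated. This is a minimal bash sketch, assuming the nodes are numbered consecutively as in the table above (ceph01 at .6, ceph02 at .7, ceph03 at .8); the function name ceph_hosts_entries is hypothetical.

```shell
# Hypothetical helper: print hosts-file lines for consecutively numbered nodes.
# $1 = network prefix, $2 = last octet of the first node, $3 = node count.
ceph_hosts_entries() {
  local prefix=$1 start=$2 count=$3 i
  for ((i = 0; i < count; i++)); do
    printf '%s.%d ceph%02d\n' "$prefix" "$((start + i))" "$((i + 1))"
  done
}

# Usage (appends the three entries on the current host):
#   ceph_hosts_entries 192.168.1 6 3 >> /etc/hosts
```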

Set up passwordless SSH login

A key pair must be generated on every host.

[root@ceph01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa): # leave blank and press Enter

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase): # leave blank and press Enter

Enter same passphrase again: # leave blank and press Enter

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:LFxoaPQyaj5aU37aPwiuXKJ/R+/I8HG7eNPTmvYHHHs root@ceph01

The key's randomart image is:

+---[RSA 2048]----+

| . |

| . o . |

| = + . |

| o = o . |

| o . o S . o |

| o o. .. + E |

| *.+ooo.. . o |

| = =oBo==.+.. . |

|o.+o..*+==o+.. |

+----[SHA256]-----+

[root@ceph01 ~]#

Copy the public key to all hosts

[root@ceph01 ~]# for i in 6 7 8;do ssh-copy-id 192.168.1.$i;done
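Before continuing, it is worth confirming that key-based login really works for every node, because ceph-deploy depends on it. The following bash sketch is one way to check. The function name check_passwordless_ssh and the SSH_CMD override (used only to make the function testable without a network) are assumptions of this example.

```shell
# Hypothetical helper: try a non-interactive SSH login to each host given as
# an argument. BatchMode=yes makes ssh fail instead of prompting for a password.
# SSH_CMD may be set to replace the ssh binary (for example in tests).
check_passwordless_ssh() {
  local ssh_cmd=${SSH_CMD:-ssh} host failed=0
  for host in "$@"; do
    if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true; then
      echo "$host: ok"
    else
      echo "$host: FAILED"
      failed=1
    fi
  done
  return "$failed"
}

# Usage:
#   check_passwordless_ssh 192.168.1.6 192.168.1.7 192.168.1.8
```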

Upload and extract the software package

Link: https://pan.baidu.com/s/1DwCtwDd5_tv4uVQE-PxITQ

Extraction code: mlzc


[root@ceph01 ~]# tar -zxvf ceph-12.2.12.tar.gz

Configure the Ceph yum repository

[root@ceph01 ~]# vim /etc/yum.repos.d/ceph.repo

[ceph]

name=ceph

baseurl=file:///root/ceph

enabled=1

gpgcheck=0
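The same repository file can be written without an editor, which is handy when repeating the step. This is a small bash sketch that reproduces the file content shown above; write_ceph_repo is a hypothetical helper name.

```shell
# Hypothetical helper: write the local Ceph repo definition shown above.
# $1 = target repo file, $2 = directory holding the extracted packages.
write_ceph_repo() {
  local target=$1 pkg_dir=$2
  cat > "$target" <<EOF
[ceph]
name=ceph
baseurl=file://$pkg_dir
enabled=1
gpgcheck=0
EOF
}

# Usage:
#   write_ceph_repo /etc/yum.repos.d/ceph.repo /root/ceph
```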

Copy the extracted packages and the yum repository configuration to the other nodes

[root@ceph01 ~]# for i in 7 8;do scp -r /root/ceph root@192.168.1.$i:~;done

[root@ceph01 ~]# for i in 7 8;do scp -r /etc/yum.repos.d/ root@192.168.1.$i:/etc/;done

Install epel-release (all nodes)

[root@ceph01 ~]# yum -y install epel-release yum-plugin-priorities yum-utils ntpdate

Install Ceph on all hosts

[root@ceph01 ~]# yum -y install ceph-deploy ceph ceph-radosgw snappy leveldb gdisk python-argparse gperftools-libs

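Deploy services on the admin node

Note: monitors can also be deployed on ceph02 and ceph03 for high availability. A production environment should run at least three monitors on separate hosts.

Working in the /etc/ceph directory, create a new cluster and set ceph01 as the mon node.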

[root@ceph01 ~]# cd /etc/ceph/

[root@ceph01 ceph]# ceph-deploy new ceph01

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy new ceph01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func : <function new at 0x7f8dc29cce60>

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f8dc2351368>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['ceph01']

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph01][DEBUG ] connected to host: ceph01

[ceph01][DEBUG ] detect platform information from remote host

[ceph01][DEBUG ] detect machine type

[ceph01][DEBUG ] find the location of an executable

[ceph01][INFO ] Running command: /usr/sbin/ip link show

[ceph01][INFO ] Running command: /usr/sbin/ip addr show

[ceph01][DEBUG ] IP addresses found: [u'192.168.1.6']

[ceph_deploy.new][DEBUG ] Resolving host ceph01

[ceph_deploy.new][DEBUG ] Monitor ceph01 at 192.168.1.6

[ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph01']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['192.168.1.6']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

[root@ceph01 ceph]#

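When the command finishes, three new files appear in /etc/ceph: the Ceph configuration file (ceph.conf), where tuning parameters can be set; the ceph-deploy log (ceph-deploy-ceph.log); and the monitor keyring (ceph.mon.keyring). Note that once the OSD daemons have been created and are in use, editing the configuration file alone no longer takes effect on them and changes have to be applied with the command-line tools, so plan the tuning parameters in advance.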

[root@ceph01 ceph]# ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring rbdmap

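Change the replica count

Change the default replica count in the configuration file from 3 to 2, so that the cluster can reach the active+clean state with only two OSDs.

[root@ceph01 ceph]# vim ceph.conf

[global]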

fsid = be4ef425-886e-44fb-90bc-77d2575fa0e1

mon_initial_members = ceph01

mon_host = 192.168.1.6

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

osd_pool_default_size = 2 # add this line
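The same change can be made non-interactively. The bash sketch below assumes a freshly generated ceph.conf that contains only the [global] section, as shown above, so appending at the end of the file places the option in that section. The function name set_ceph_option is hypothetical, and it only recognizes the underscore spelling of the key.

```shell
# Hypothetical helper: set "key = value" in a ceph.conf that has a single
# [global] section. Any existing assignment of the key is dropped first, so
# repeated calls do not create duplicates.
set_ceph_option() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp) || return 1
  grep -v -E "^[[:space:]]*${key}[[:space:]]*=" "$file" > "$tmp"
  printf '%s = %s\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage:
#   set_ceph_option /etc/ceph/ceph.conf osd_pool_default_size 2
```

Writing back with cat rather than mv keeps the original file's owner and permissions.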

Install the Ceph monitor

[root@ceph01 ceph]# ceph-deploy mon create ceph01

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mon create ceph01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ff3d15ede18>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] mon : ['ceph01']

[ceph_deploy.cli][INFO ] func : <function mon at 0x7ff3d185f488>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph01

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph01 ...

[ceph01][DEBUG ] connected to host: ceph01

[ceph01][DEBUG ] detect platform information from remote host

[ceph01][DEBUG ] detect machine type

[ceph01][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.5.1804 Core

[ceph01][DEBUG ] determining if provided host has same hostname in remote

[ceph01][DEBUG ] get remote short hostname

[ceph01][DEBUG ] deploying mon to ceph01

[ceph01][DEBUG ] get remote short hostname

[ceph01][DEBUG ] remote hostname: ceph01

[ceph01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph01][DEBUG ] create the mon path if it does not exist

[ceph01][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph01/done

[ceph01][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph01/done

[ceph01][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph01.mon.keyring

[ceph01][DEBUG ] create the monitor keyring file

[ceph01][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i ceph01 --keyring /var/lib/ceph/tmp/ceph-ceph01.mon.keyring --setuser 167 --setgroup 167

[ceph01][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph01.mon.keyring

[ceph01][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph01][DEBUG ] create the init path if it does not exist

[ceph01][INFO ] Running command: systemctl enable ceph.target

[ceph01][INFO ] Running command: systemctl enable ceph-mon@ceph01

[ceph01][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph01.service to /usr/lib/systemd/system/ceph-mon@.service.

[ceph01][INFO ] Running command: systemctl start ceph-mon@ceph01

[ceph01][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph01.asok mon_status

[ceph01][DEBUG ] ********************************************************************************

[ceph01][DEBUG ] status for monitor: mon.ceph01

[ceph01][DEBUG ] {

[ceph01][DEBUG ] "election_epoch": 3,

[ceph01][DEBUG ] "extra_probe_peers": [],

[ceph01][DEBUG ] "feature_map": {

[ceph01][DEBUG ] "mon": {

[ceph01][DEBUG ] "group": {

[ceph01][DEBUG ] "features": "0x3ffddff8eeacfffb",

[ceph01][DEBUG ] "num": 1,

[ceph01][DEBUG ] "release": "luminous"

[ceph01][DEBUG ] }

[ceph01][DEBUG ] }

[ceph01][DEBUG ] },

[ceph01][DEBUG ] "features": {

[ceph01][DEBUG ] "quorum_con": "4611087853746454523",

[ceph01][DEBUG ] "quorum_mon": [

[ceph01][DEBUG ] "kraken",

[ceph01][DEBUG ] "luminous"

[ceph01][DEBUG ] ],

[ceph01][DEBUG ] "required_con": "153140804152475648",

[ceph01][DEBUG ] "required_mon": [

[ceph01][DEBUG ] "kraken",

[ceph01][DEBUG ] "luminous"

[ceph01][DEBUG ] ]

[ceph01][DEBUG ] },

[ceph01][DEBUG ] "monmap": {

[ceph01][DEBUG ] "created": "2022-04-06 16:16:46.287081",

[ceph01][DEBUG ] "epoch": 1,

[ceph01][DEBUG ] "features": {

[ceph01][DEBUG ] "optional": [],

[ceph01][DEBUG ] "persistent": [

[ceph01][DEBUG ] "kraken",

[ceph01][DEBUG ] "luminous"

[ceph01][DEBUG ] ]

[ceph01][DEBUG ] },

[ceph01][DEBUG ] "fsid": "be4ef425-886e-44fb-90bc-77d2575fa0e1",

[ceph01][DEBUG ] "modified": "2022-04-06 16:16:46.287081",

[ceph01][DEBUG ] "mons": [

[ceph01][DEBUG ] {

[ceph01][DEBUG ] "addr": "192.168.1.6:6789/0",

[ceph01][DEBUG ] "name": "ceph01",

[ceph01][DEBUG ] "public_addr": "192.168.1.6:6789/0",

[ceph01][DEBUG ] "rank": 0

[ceph01][DEBUG ] }

[ceph01][DEBUG ] ]

[ceph01][DEBUG ] },

[ceph01][DEBUG ] "name": "ceph01",

[ceph01][DEBUG ] "outside_quorum": [],

[ceph01][DEBUG ] "quorum": [

[ceph01][DEBUG ] 0

[ceph01][DEBUG ] ],

[ceph01][DEBUG ] "rank": 0,

[ceph01][DEBUG ] "state": "leader",

[ceph01][DEBUG ] "sync_provider": []

[ceph01][DEBUG ] }

[ceph01][DEBUG ] ********************************************************************************

[ceph01][INFO ] monitor: mon.ceph01 is running

[ceph01][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph01.asok mon_status

[root@ceph01 ceph]#
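The mon_status output above is long. When only the monitor's role matters, the "state" field can be pulled out. This is a rough bash sketch that uses sed rather than a JSON parser, so it assumes the field appears on its own line as in the output above; mon_state is a hypothetical name.

```shell
# Hypothetical helper: print the value of the "state" field from mon_status
# output on stdin (for example "leader"). Relies on the one-field-per-line
# layout shown above instead of real JSON parsing.
mon_state() {
  sed -n 's/.*"state": *"\([a-z]*\)".*/\1/p'
}

# Usage (the same admin-socket command that ceph-deploy ran above):
#   ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph01.asok mon_status | mon_state
```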

Gather the node's keyring files

[root@ceph01 ceph]# ceph-deploy gatherkeys ceph01

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy gatherkeys ceph01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fd4e783fbd8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] mon : ['ceph01']

[ceph_deploy.cli][INFO ] func : <function gatherkeys at 0x7fd4e7a93a28>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.gatherkeys][INFO ] Storing keys in temp directory /tmp/tmpcFRJma

[ceph01][DEBUG ] connected to host: ceph01

[ceph01][DEBUG ] detect platform information from remote host

[ceph01][DEBUG ] detect machine type

[ceph01][DEBUG ] get remote short hostname

[ceph01][DEBUG ] fetch remote file

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.ceph01.asok mon_status

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.admin

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get-or-create client.admin osd allow * mds allow * mon allow * mgr allow *

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.bootstrap-mds

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get-or-create client.bootstrap-mds mon allow profile bootstrap-mds

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.bootstrap-mgr

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get-or-create client.bootstrap-mgr mon allow profile bootstrap-mgr

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.bootstrap-osd

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get-or-create client.bootstrap-osd mon allow profile bootstrap-osd

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.bootstrap-rgw

[ceph01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get-or-create client.bootstrap-rgw mon allow profile bootstrap-rgw

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmpcFRJma

[root@ceph01 ceph]#

Check the result

[root@ceph01 ceph]# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log rbdmap

ceph.bootstrap-mgr.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring

The listing shows that ceph.client.admin.keyring now exists.
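Rather than reading the `ls` output by eye, the same check can be scripted. The following is a minimal sketch, not part of ceph-deploy: `check_keyrings` is a hypothetical helper name, and the file list is simply the set of keyrings shown in the listing above.

```shell
# Hypothetical helper: confirm that every keyring produced by
# `ceph-deploy gatherkeys` is present in the given directory.
# Returns 0 when all files exist, 1 otherwise.
check_keyrings() {
    local dir="${1:-.}" missing=0 name
    for name in ceph.client.admin.keyring \
                ceph.bootstrap-mds.keyring \
                ceph.bootstrap-mgr.keyring \
                ceph.bootstrap-osd.keyring \
                ceph.bootstrap-rgw.keyring \
                ceph.mon.keyring; do
        if [ ! -f "$dir/$name" ]; then
            # Report each missing file on stderr and remember the failure.
            echo "missing: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Run from the directory where gatherkeys was executed, `check_keyrings . && echo "all keyrings present"` prints the message only when nothing is missing.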

View the key

[root@ceph01 ceph]# cat ceph.client.admin.keyring

[client.admin]

	key = AQDgyk1i5gPqMRAAQa0dPgyF71C5Psiq/ebuQQ==

[root@ceph01 ceph]#
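A keyring file stores the entity name in square brackets on one line and the secret as `key = ...` on a following line. If only the secret is needed (for a mount option, say), it can be pulled out with awk. This is a rough sketch under that format assumption; `keyring_key` is a hypothetical helper, not a Ceph command.

```shell
# Hypothetical helper: print the secret stored for one entity in a keyring file.
# Usage: keyring_key <keyring-file> <entity>
keyring_key() {
    awk -v section="[$2]" '
        # A line starting with "[" opens a new section; remember whether it is ours.
        /^\[/ { in_section = ($1 == section) }
        # Inside our section, print the value of the "key = <secret>" line.
        in_section && $1 == "key" && $2 == "=" { print $3 }
    ' "$1"
}
```

For example, `keyring_key ceph.client.admin.keyring client.admin` prints only the base64 string that follows `key =`.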

Deploy the OSD service

Let Ceph prepare the disks automatically

Each of the following commands needs to be run once:

ceph-deploy disk zap ceph01 /dev/sdb

ceph-deploy disk zap ceph02 /dev/sdb

ceph-deploy disk zap ceph03 /dev/sdb

All three commands must be run on ceph01.

[root@ceph01 ceph]# ceph-deploy disk zap ceph01 /dev/sdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap ceph01 /dev/sdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f1565785290>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ceph01

[ceph_deploy.cli][INFO ] func : <function disk at 0x7f15659db9b0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/sdb']

[ceph_deploy.osd][DEBUG ] zapping /dev/sdb on ceph01

[ceph01][DEBUG ] connected to host: ceph01

[ceph01][DEBUG ] detect platform information from remote host

[ceph01][DEBUG ] detect machine type

[ceph01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.5.1804 Core

[ceph01][DEBUG ] zeroing last few blocks of device

[ceph01][DEBUG ] find the location of an executable

[ceph01][INFO ] Running command: /usr/sbin/ceph-volume lvm zap /dev/sdb

[ceph01][DEBUG ] --> Zapping: /dev/sdb

[ceph01][DEBUG ] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph01][DEBUG ] Running command: wipefs --all /dev/sdb

[ceph01][DEBUG ] Running command: dd if=/dev/zero of=/dev/sdb bs=1M count=10

[ceph01][DEBUG ] --> Zapping successful for: <Raw Device: /dev/sdb>

You have mail in /var/spool/mail/root

[root@ceph01 ceph]#
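Since the same zap command is repeated for ceph01, ceph02 and ceph03, it can be wrapped in a loop on the admin node. The sketch below assumes the same device name on every host; `zap_all` and the `DRY_RUN` variable are illustrative names, not ceph-deploy features. Because zapping removes the partition table, the sketch only prints the commands unless `DRY_RUN=0` is set.

```shell
# Hypothetical wrapper: zap one device on each listed host from the admin node.
# Usage: zap_all <device> <host>...
# DRY_RUN defaults to 1, which prints the commands instead of running them.
zap_all() {
    local dev="$1" host
    shift
    for host in "$@"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ceph-deploy disk zap $host $dev"
        else
            # Stop at the first host that fails so the problem is not buried.
            ceph-deploy disk zap "$host" "$dev" || return 1
        fi
    done
}

# Print the three commands used in this walkthrough.
zap_all /dev/sdb ceph01 ceph02 ceph03
```

Once the printed commands look right, running `DRY_RUN=0 zap_all /dev/sdb ceph01 ceph02 ceph03` from the ceph-deploy working directory on ceph01 executes them for real.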

Add the OSD nodes

[root@ceph01 ceph]# ceph-deploy osd create ceph01 --data /dev/sdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create ceph01 --data /dev/sdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f95b1d403b0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : ceph01

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0x7f95b1f91938>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/sdb

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdb

[ceph01][DEBUG ] connected to host: ceph01

[ceph01][DEBUG ] detect platform information from remote host

[ceph01][DEBUG ] detect machine type

[ceph01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.5.1804 Core

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph01

[ceph01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph01][DEBUG ] find the location of an executable

[ceph01][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdb

[ceph01][DEBUG ] Running command: /bin/ceph-authtool --gen-print-key

[ceph01][DEBUG ] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 2d5cfb18-85fc-49a3-b829-cce6b7e39e12

[ceph01][DEBUG ] Running command: vgcreate --force --yes ceph-8434389e-41f0-4d97-aec0-386ceae136f5 /dev/sdb

[ceph01][DEBUG ] stdout: Physical volume "/dev/sdb" successfully created.

[ceph01][DEBUG ] stdout: Volume group "ceph-8434389e-41f0-4d97-aec0-386ceae136f5" successfully created

[ceph01][DEBUG ] Running command: lvcreate --yes -l 100%FREE -n osd-block-2d5cfb18-85fc-49a3-b829-cce6b7e39e12 ceph-8434389e-41f0-4d97-aec0-386ceae136f5

[ceph01][DEBUG ] stdout: Logical volume "osd-block-2d5cfb18-85fc-49a3-b829-cce6b7e39e12" created.

[ceph01][DEBUG ] Running command: /bin/ceph-authtool --gen-print-key

[ceph01][DEBUG ] Running command: mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0

[ceph01][DEBUG ] Running command: restorecon /var/lib/ceph/osd/ceph-0

[ceph01][DEBUG ] Running command: chown -h ceph:ceph /dev/ceph-8434389e-41f0-4d97-aec0-386ceae136f5/osd-block-2d5cfb18-85fc-49a3-b829-cce6b7e39e12

[ceph01][DEBUG ] Running command: chown -R ceph:ceph /dev/dm-2

[ceph01][DEBUG ] Running command: ln -s /dev/ceph-8434389e-41f0-4d97-aec0-386ceae136f5/osd-block-2d5cfb18-85fc-49a3-b829-cce6b7e39e12 /var/lib/ceph/osd/ceph-0/block

[ceph01][DEBUG ] Running command: ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap

[ceph01][DEBUG ] stderr: got monmap epoch 1

[ceph01][DEBUG ] Running command: ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQAjzE1iLK8LLBAAdcsplCzkGVOD8khpaomgzw==

[ceph01][DEBUG ] stdout: creating /var/lib/ceph/osd/ceph-0/keyring

[ceph01][DEBUG ] added entity osd.0 auth auth(auid = 18446744073709551615 key=AQAjzE1iLK8LLBAAdcsplCzkGVOD8khpaomgzw== with 0 caps)

[ceph01][DEBUG ] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring

[ceph01][DEBUG ] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/

[ceph01][DEBUG ] Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid 2d5cfb18-85fc-49a3-b829-cce6b7e39e12 --setuser ceph --setgroup ceph

[ceph01][DEBUG ] --> ceph-volume lvm prepare successful for: /dev/sdb

[ceph01][DEBUG ] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

[ceph01][DEBUG ] Running command: ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-8434389e-41f0-4d97-aec0-386ceae136f5/osd-block-2d5cfb18-85fc-49a3-b829-cce6b7e39e12 --path /var/lib/ceph/osd/ceph-0