title: Docker&K8S Tutorial
date: 2023-03-13 18:33:19
tags: [K8S,Docker]
categories: [K8S]

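Network Policies

NetworkPolicy has been available since Kubernetes 1.3.

It provides policy-based network control, used to isolate applications and reduce the attack surface.

Whether two pods may communicate is determined by a combination of the following three kinds of identifiers:

- other pods that are allowed (a pod cannot block access to itself)
- namespaces that are allowed
- IP blocks (CIDR ranges); exception: traffic to and from the node a pod runs on is always allowed

By default, pods are non-isolated and accept traffic from any source.

Ingress: inbound traffic

Egress: outbound traffic

Network plugins that support network policies include Calico, Weave Net, Romana and Trireme.

Like other objects, a NetworkPolicy needs the apiVersion, kind and metadata fields.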

The NetworkPolicy object

spec.podSelector

```yaml
spec:
  podSelector:
    matchLabels:
      role: db
```

If podSelector is empty, the policy selects all pods in the namespace.

spec.policyTypes

```yaml
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - ipBlock:
        cidr: 172.17.0.0/16
        except:
        - 172.17.1.0/24
    - namespaceSelector:
        matchLabels:
          project: myproject
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:
    - protocol: TCP
      port: 5978
```

policyTypes states whether the policy applies to ingress traffic, egress traffic, or both for the selected pods.

If policyTypes is not specified, Ingress is always set by default. Egress is set only if the policy has egress rules.
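The defaulting rule for policyTypes is easy to get wrong, so here is a minimal sketch of it. It assumes the NetworkPolicy `spec` has already been parsed into a Python dict (for example by a YAML loader). The helper name `effective_policy_types` is hypothetical and not part of any Kubernetes client library.

```python
from typing import Any, Dict, List


def effective_policy_types(spec: Dict[str, Any]) -> List[str]:
    """Return the policyTypes a NetworkPolicy spec ends up with (hypothetical helper)."""
    if "policyTypes" in spec:
        # An explicit value always wins.
        return list(spec["policyTypes"])
    types = ["Ingress"]            # Ingress is always set by default
    if spec.get("egress"):         # Egress only if at least one egress rule exists
        types.append("Egress")
    return types


print(effective_policy_types({"podSelector": {}, "egress": [{"to": []}]}))
# ['Ingress', 'Egress']
```

A consequence worth noting: an egress-only policy written without policyTypes still gets "Ingress". Because it has no ingress rules, it also blocks all inbound traffic to the selected pods.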

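Summary of this network policy (assuming it is created in the default namespace):

- It isolates the pods labeled role=db in the default namespace, for both ingress and egress.
- Ingress rules: connections to TCP port 6379 of all pods labeled role=db in the default namespace are allowed from:
  - any pod labeled role=frontend in the default namespace
  - any pod in a namespace labeled project=myproject
  - IP addresses in the ranges 172.17.0.0–172.17.0.255 and 172.17.2.0–172.17.255.255 (that is, all of 172.17.0.0/16 except 172.17.1.0/24)
- Egress rules: pods labeled role=db in the default namespace may connect to TCP port 5978 on any address in the CIDR 10.0.0.0/24.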
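The address ranges in the summary can be checked mechanically. The sketch below uses Python's standard `ipaddress` module to test whether an IP falls inside an ipBlock, and to list the contiguous ranges an ipBlock with `except` admits. The function names `in_ip_block` and `allowed_ranges` are hypothetical, chosen for illustration only.

```python
import ipaddress
from typing import Iterable, List, Tuple


def in_ip_block(ip: str, cidr: str, excepts: Iterable[str] = ()) -> bool:
    """True if `ip` is inside `cidr` but not inside any of the `except` CIDRs."""
    addr = ipaddress.ip_address(ip)
    if addr not in ipaddress.ip_network(cidr):
        return False
    return not any(addr in ipaddress.ip_network(e) for e in excepts)


def allowed_ranges(cidr: str, excepts: Iterable[str] = ()) -> List[Tuple[str, str]]:
    """Contiguous (first, last) address ranges admitted by an ipBlock."""
    nets = [ipaddress.ip_network(cidr)]
    for e in excepts:
        ex = ipaddress.ip_network(e)
        remaining = []
        for n in nets:
            if ex.subnet_of(n):
                remaining.extend(n.address_exclude(ex))  # cut the hole out of n
            elif not n.subnet_of(ex):
                remaining.append(n)                      # disjoint: keep n unchanged
            # otherwise n lies entirely inside the excluded block and is dropped
        nets = remaining
    ranges: List[list] = []
    for n in sorted(nets):
        first, last = n.network_address, n.broadcast_address
        if ranges and int(first) == int(ranges[-1][1]) + 1:
            ranges[-1][1] = last                         # merge adjacent networks
        else:
            ranges.append([first, last])
    return [(str(a), str(b)) for a, b in ranges]


print(allowed_ranges("172.17.0.0/16", ["172.17.1.0/24"]))
# [('172.17.0.0', '172.17.0.255'), ('172.17.2.0', '172.17.255.255')]
```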

spec.ingress

Rules that allow inbound traffic.

from

The traffic source: other pods, namespaces, IP addresses or CIDR ranges.

ports

Restricts the protocols and ports of the traffic.

spec.egress

Rules that allow outbound traffic.

to

The traffic destination: other pods, namespaces, IP addresses or CIDR ranges.

ports

Restricts the protocols and ports of the traffic.

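Taints and Tolerations

Setting a taint on a node (equivalent to `kubectl taint nodes node1 example.com/role=worker:NoSchedule`):

```yaml
apiVersion: v1
kind: Node
metadata:
  name: node1
spec:
  taints:
  - key: "example.com/role"
    value: "worker"
    effect: "NoSchedule"
```

Setting a toleration on a pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  tolerations:
  - key: "example.com/role"
    operator: "Equal"
    value: "worker"
    effect: "NoSchedule"
  containers:
  - name: example-container
    image: nginx:1.22
```

For a Deployment, put the tolerations under spec.template.spec.tolerations.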
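To show how a toleration is matched against a taint, here is a simplified sketch of the matching rules. It assumes the behavior described in the Kubernetes taints-and-tolerations documentation:

- keys must be equal, unless the toleration has an empty key with `Exists`, which matches every taint;
- `Exists` ignores the value, while `Equal` (the default operator) compares it;
- an empty toleration effect matches every effect.

The class and function names are hypothetical. Only the hard effects `NoSchedule` and `NoExecute` are treated as blocking; `PreferNoSchedule` is a soft preference.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Taint:
    key: str
    value: str = ""
    effect: str = ""            # NoSchedule / PreferNoSchedule / NoExecute


@dataclass
class Toleration:
    key: str = ""
    operator: str = "Equal"     # Kubernetes default operator
    value: str = ""
    effect: str = ""            # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key == "":
        # An empty key is only meaningful with Exists and then matches any key/value.
        return tol.operator == "Exists"
    if tol.key != taint.key:
        return False
    return tol.operator == "Exists" or tol.value == taint.value


def schedulable(tolerations: List[Toleration], taints: List[Taint]) -> bool:
    """A pod may be placed on the node only if every hard taint is tolerated."""
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


taint = Taint("example.com/role", "worker", "NoSchedule")
print(schedulable([Toleration("example.com/role", "Equal", "worker", "NoSchedule")], [taint]))  # True
```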

Affinity and Anti-Affinity

Node affinity using node labels

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: example.com/region
            operator: In
            values:
            - zone-1
  containers:
  - name: example-container
    image: nginx:1.22
```

example-pod can only run on nodes labeled example.com/region=zone-1.

Pod affinity using pod labels

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - example-app
        topologyKey: kubernetes.io/hostname
  containers:
  - name: example-container
    image: nginx:1.22
```

example-pod can only run on nodes that are already running pods labeled app=example-app.

Pod anti-affinity

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - example-app
        topologyKey: kubernetes.io/hostname
  containers:
  - name: example-container
    image: nginx:1.22
```

This ensures example-pod is never scheduled onto a node that is already running pods labeled app=example-app.
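In all three examples the topologyKey decides what "the same place" means. The sketch below evaluates a single required `key In values` term. It assumes that every node carries the topology label, and it ignores scheduler special cases such as the first pod of a self-affine group. The data layout (dicts of node labels, a list of running pods) and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

NodeLabels = Dict[str, Dict[str, str]]          # node name -> node labels
RunningPods = List[Tuple[str, Dict[str, str]]]  # (node name, pod labels)


def domain_has_match(candidate: str, nodes: NodeLabels, pods: RunningPods,
                     key: str, values: List[str], topology_key: str) -> bool:
    """True if a pod matching `key In values` runs in the candidate's topology domain."""
    domain = nodes[candidate][topology_key]
    return any(
        labels.get(key) in values and nodes[node][topology_key] == domain
        for node, labels in pods
    )


def fits(candidate: str, nodes: NodeLabels, pods: RunningPods, key: str,
         values: List[str], topology_key: str, anti: bool = False) -> bool:
    match = domain_has_match(candidate, nodes, pods, key, values, topology_key)
    return not match if anti else match     # anti-affinity inverts the requirement


nodes = {
    "node1": {"kubernetes.io/hostname": "node1", "topology.kubernetes.io/zone": "a"},
    "node2": {"kubernetes.io/hostname": "node2", "topology.kubernetes.io/zone": "a"},
}
pods = [("node1", {"app": "example-app"})]
print(fits("node2", nodes, pods, "app", ["example-app"], "kubernetes.io/hostname"))  # False
```

Switching topologyKey to a zone label would let node2 satisfy the affinity term, because both nodes share zone "a".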

Ingress

Installing an ingress controller. Common choices:

- NGINX (used below)
- Traefik
- HAProxy

```shell
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.41.2/deploy/static/provider/cloud/deploy.yaml
```

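Creating an Ingress object

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: example.com
    http:
      paths:
      - path: /path1
        backend:
          serviceName: service1
          servicePort: 80
      - path: /path2
        backend:
          serviceName: service2
          servicePort: 80
```

The networking.k8s.io/v1beta1 Ingress API was removed in Kubernetes 1.22. On newer clusters, use apiVersion: networking.k8s.io/v1. There, each path also needs a pathType, and the backend is written as service.name / service.port.number.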

Requests for example.com/path1 are forwarded to port 80 of service1.

Requests for example.com/path2 are forwarded to port 80 of service2.
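The v1beta1 object above has no pathType, so each controller interprets paths in its own way. The sketch below assumes the `pathType: Prefix` semantics of networking.k8s.io/v1 to show how a request would be routed:

- paths are compared element by element, split on `/`;
- the longest matching rule path wins.

The rule tuple layout and the function names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Rule = Tuple[str, str, int]   # (path, service name, service port)


def prefix_match(rule_path: str, request_path: str) -> bool:
    """Element-wise prefix match: /path1 matches /path1 and /path1/x, not /path10."""
    rule = [p for p in rule_path.split("/") if p]
    req = [p for p in request_path.split("/") if p]
    return req[:len(rule)] == rule


def route(rules: Dict[str, List[Rule]], host: str, path: str) -> Optional[Tuple[str, int]]:
    """Pick the backend for host + path; the longest matching rule path wins."""
    candidates = [r for r in rules.get(host, []) if prefix_match(r[0], path)]
    if not candidates:
        return None   # the controller would send this to its default backend
    best = max(candidates, key=lambda r: len([p for p in r[0].split("/") if p]))
    return best[1], best[2]


rules = {"example.com": [("/path1", "service1", 80), ("/path2", "service2", 80)]}
print(route(rules, "example.com", "/path2/api"))   # ('service2', 80)
```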

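Using GPUs in K8S

Install the NVIDIA GPU driver

Install it on each GPU node.

Install nvidia-docker

- Install docker-ce.
- Install the node driver.
- Install nvidia-docker.

Add the repository:

```shell
curl -s -L https://nvidia.github.io/nvidia-docker/ubuntu18.04/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list
```

Run the installation:

```shell
sudo apt update && sudo apt install -y nvidia-docker2
sudo systemctl restart docker
```

Verify the installation:

```shell
sudo docker run --rm nvidia/cuda:9.0-base nvidia-smi
```

Configuring GPU resources in a pod

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  containers:
  - name: gpu-container
    image: nvidia/cuda:9.0-base
    resources:
      limits:
        nvidia.com/gpu: 1
```

This limits the container to one NVIDIA GPU. The nvidia.com/gpu resource is only advertised after the NVIDIA device plugin has been deployed to the cluster.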

Setting the command a container runs at startup

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: command-pod
  labels:
    app: command
spec:
  containers:
  - name: command-container
    image: debian
    env:
    - name: MSG
      value: "hello world"
    command: ["printenv"]
    args: ["HOSTNAME", "KUBERNETES_PORT", "$(MSG)"]
  restartPolicy: OnFailure
```

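If the configuration file sets a command for the container, the command and arguments built into the image (ENTRYPOINT and CMD) are overridden and are not executed.

If the YAML sets only args and no command, the image's built-in command (ENTRYPOINT) runs with the new args.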

For example, to run a shell loop:

```yaml
command: ["/bin/sh"]
args: ["-c", "while true; do echo hello; sleep 10; done"]
```

K8S command → Docker ENTRYPOINT

K8S args → Docker CMD
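The override rules above, plus the `$(MSG)` expansion used in command-pod, can be summarized as a small function. This is a minimal sketch assuming the rules in the Kubernetes "Define a Command and Arguments for a Container" documentation. References to undefined variables are left unchanged, and the `$$(VAR)` escape is not handled. The image defaults in the example (`/docker-entrypoint.sh`, `nginx`) are hypothetical, and so are the function names.

```python
import re
from typing import Dict, List, Optional


def effective_argv(entrypoint: List[str], cmd: List[str],
                   command: Optional[List[str]], args: Optional[List[str]]) -> List[str]:
    """Combine image ENTRYPOINT/CMD with the pod's command/args."""
    if command is not None:
        # command set: image ENTRYPOINT and CMD are both ignored
        return command + (args or [])
    if args is not None:
        # only args set: image ENTRYPOINT runs with the new args
        return entrypoint + args
    return entrypoint + cmd          # neither set: image defaults


def expand(arg: str, env: Dict[str, str]) -> str:
    """Replace $(VAR) with the container env value; unknown references stay unchanged."""
    return re.sub(r"\$\(([A-Za-z_][A-Za-z0-9_]*)\)",
                  lambda m: env.get(m.group(1), m.group(0)), arg)


# Hypothetical image defaults; command, args and env come from command-pod above.
argv = effective_argv(["/docker-entrypoint.sh"], ["nginx"],
                      ["printenv"], ["HOSTNAME", "KUBERNETES_PORT", "$(MSG)"])
print([expand(a, {"MSG": "hello world"}) for a in argv])
# ['printenv', 'HOSTNAME', 'KUBERNETES_PORT', 'hello world']
```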

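Introduction to Kata Containers

- A runtime that uses container images to create containers as ultra-lightweight virtual machines
- Supports different hardware platforms: x86, ARM
- Compliant with the OCI specification
- Compatible with the Kubernetes CRI specification
- Components: runtime, agent, proxy, shim, kernel
- The component that actually starts Docker containers is runc, the default implementation of the OCI runtime specification. Kata Containers sits at the same level as runc. The difference is that Kata gives each container or pod its own Linux kernel instead of sharing the host kernel, which gives containers better isolation and security.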

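What is a container runtime?

From the Kubernetes point of view:

- docker
- containerd
- CRI-O

From the point of view of docker, containerd, etc.:

- runc, the low-level container runtime tool

Kata Containers can act as a replacement for runc.

Call chains from Kubernetes through the CRI (dockershim, cri-containerd, CRI-O) down to the container:

- dockershim → dockerd → containerd → containerd-shim → runc → container (dockershim was removed in Kubernetes 1.24)
- cri-containerd → containerd → containerd-shim → runc → container
- CRI-O → conmon → runc → container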

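Components and their functions

runtime

An OCI-compliant container runtime command-line tool. It mainly creates the lightweight virtual machines and controls the lifecycle of the containers inside them through the agent.

agent

A runtime agent that runs inside the virtual machine. It executes the instructions passed from the runtime and manages the lifecycle of the containers inside the VM.

shim

Taking the Docker integration as an example, the shim acts as an adapter for containerd-shim. It handles the container process's stdio and signals.

kernel

Provides the Linux kernel for the lightweight virtual machine. Several kernels are offered for different needs; the smallest is just over 4 MB.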

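Architecture changes

Since v1.5.0, with the containerd integration (shim v2), the architecture was restructured. The functions of kata-shim, kata-proxy, kata-runtime and containerd-shim were merged into a single binary, containerd-shim-kata-v2.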

Using Kata Containers with Docker

Kata Containers is a lightweight virtualization technology that further strengthens container security and isolation.

Installing Kata Containers

```shell
# Optional: route the download through a local proxy
export https_proxy=http://localhost:20171
curl -fsSL https://github.com/kata-containers/kata-containers/releases/download/3.0.2/kata-static-3.0.2-x86_64.tar.xz -o kata-static-3.0.2-x86_64.tar.xz
xz -d kata-static-3.0.2-x86_64.tar.xz
sudo tar xvf kata-static-3.0.2-x86_64.tar -C /
sudo cp /opt/kata/bin/containerd-shim-kata-v2 /usr/bin
sudo cp /opt/kata/bin/kata-runtime /usr/bin
rm -f kata-static-3.0.2-x86_64.tar
```

Fixing the following error, then running the check:

```shell
zxl@linux:/D/Workspace/Docker/kata_containers-about$ docker run -it --rm --runtime kata-runtime nginx ls /
docker: Error response from daemon: failed to create shim task: OCI runtime create failed: /opt/kata/share/defaults/kata-containers/configuration-qemu.toml: host system doesn't support vsock: stat /dev/vhost-vsock: no such file or directory: unknown.

# Check whether the current system supports vhost-vsock
zxl@linux:~$ grep CONFIG_VIRTIO_VSOCKETS /boot/config-$(uname -r)
CONFIG_VIRTIO_VSOCKETS=m
CONFIG_VIRTIO_VSOCKETS_COMMON=m
zxl@linux:~$ sudo modprobe vhost_vsock
zxl@linux:~$ sudo lsmod | grep vsock
vhost_vsock 28672 0
vmw_vsock_virtio_transport_common 40960 1 vhost_vsock
vhost 53248 2 vhost_vsock,vhost_net
vsock 49152 2 vmw_vsock_virtio_transport_common,vhost_vsock
zxl@linux:~$ kata-runtime check
No newer release available
System is capable of running Kata Containers
```
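The manual diagnosis above (read the kernel config, load the module, confirm the device node) can be scripted. This is a minimal sketch, not part of Kata's tooling.

The option to look for is a parameter. The session above greps CONFIG_VIRTIO_VSOCKETS. The sketch defaults to CONFIG_VHOST_VSOCK, the kernel option that builds the host-side vhost_vsock module. The function names and the returned status strings are hypothetical.

```python
import os
from typing import Dict


def parse_kconfig(text: str) -> Dict[str, str]:
    """Parse /boot/config-$(uname -r) style text into {option: value}."""
    opts = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue                      # also skips '# CONFIG_X is not set'
        key, sep, value = line.partition("=")
        if sep:
            opts[key] = value
    return opts


def vsock_status(config_text: str, option: str = "CONFIG_VHOST_VSOCK",
                 device: str = "/dev/vhost-vsock") -> str:
    value = parse_kconfig(config_text).get(option)
    if value is None:
        return "unsupported"              # kernel built without the option
    if os.path.exists(device):
        return "ready"
    if value == "m":
        return "load-module"              # e.g. sudo modprobe vhost_vsock
    return "missing-device"


# Usage on a real host:
# with open(f"/boot/config-{os.uname().release}") as f:
#     print(vsock_status(f.read()))
```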

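Integrating Kata Containers with Docker as a runtime

Edit /etc/docker/daemon.json (sudo vim /etc/docker/daemon.json) and register the runtime in its top-level object:

```json
{
  "runtimes": {
    "kata-runtime": {
      "path": "/usr/bin/kata-runtime"
    }
  }
}
```

Reload and restart Docker, then check the registered runtimes:

```shell
sudo systemctl daemon-reload
sudo systemctl restart docker
docker info
```

`docker info` now lists the runtime:

```
Runtimes: io.containerd.runc.v2 kata-runtime runc
```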
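daemon.json often already contains other settings, and hand-editing it can leave invalid JSON that stops dockerd from starting. The sketch below merges the `runtimes` entry into whatever is already there and keeps all other keys. The helper name `add_runtime` is hypothetical. Writing the result back to /etc/docker/daemon.json (as root) and restarting Docker is left to the caller.

```python
import json


def add_runtime(daemon_json: str, name: str, path: str) -> str:
    """Merge a runtime entry into daemon.json text, keeping all other settings."""
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    config.setdefault("runtimes", {})[name] = {"path": path}
    return json.dumps(config, indent=2)


existing = '{"registry-mirrors": ["https://mirror.example"]}'
print(add_runtime(existing, "kata-runtime", "/usr/bin/kata-runtime"))
```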

Integrating Kata Containers with containerd as a runtime

Check

```shell
zxl@linux:~$ sudo modprobe vhost_vsock
zxl@linux:~$ sudo lsmod | grep vsock
vhost_vsock 28672 0
vmw_vsock_virtio_transport_common 40960 1 vhost_vsock
vhost 53248 2 vhost_vsock,vhost_net
vsock 49152 2 vmw_vsock_virtio_transport_common,vhost_vsock
zxl@linux:~$ sudo kata-runtime check
WARN[0000] Not running network checks as super user arch=amd64 name=kata-runtime pid=49537 source=runtime
System is capable of running Kata Containers
System can currently create Kata Containers
```

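Configuring containerd

Add the Kata runtime to the containerd configuration (typically /etc/containerd/config.toml):

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata]
    runtime_type = "io.containerd.kata.v2"
```

Restart containerd:

```shell
sudo systemctl restart containerd
```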

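Test

```shell
zxl@linux:~$ sudo ctr run --rm --runtime "io.containerd.kata.v2" -t docker.io/library/nginx:latest test uname -r
5.19.2
zxl@linux:~$ uname -r
5.15.0-67-generic
zxl@linux:~$ sudo ctr run --rm -t docker.io/library/nginx:latest test uname -r
5.15.0-67-generic
```

The container started with the Kata runtime reports its own guest kernel (5.19.2). The container started with the default runc runtime reports the host kernel (5.15.0-67-generic).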

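Viewing the Docker service logs

All logs:

```shell
sudo journalctl -u docker.service
```

The first ten error lines from today:

```shell
sudo journalctl -u docker.service --since today --until now | grep -i error | head -n 10
```

Follow the logs in real time:

```shell
sudo journalctl -u docker.service -f
```

Filter the logs by date and time range:

```shell
sudo journalctl -u docker.service --since "2023-03-15 00:00:00" --until "2023-03-16 23:00:00"
```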

Kuboard Dashboard tutorial

Installation

```shell
# Default login: admin / Kuboard123
sudo docker run -d \
  --restart=unless-stopped \
  --name=kuboard-local \
  -p 30080:80/tcp \
  -p 30081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://192.168.122.1:30080" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="30081" \
  -e KUBOARD_DISABLE_AUDIT=true \
  -v /D/CACHE/docker/kuboard-data:/data \
  swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3
# The image eipwork/kuboard:v3 can also be used; swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3 downloads faster.
# Do not use 127.0.0.1 or localhost as the internal IP in KUBOARD_ENDPOINT.
# Kuboard does not need to be on the same network segment as K8S; the Kuboard Agent can even reach the Kuboard Server through a proxy.
# KUBOARD_DISABLE_AUDIT=true disables the audit feature.
```

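Adding a K8S cluster

On the master node, print the kubeconfig to paste into Kuboard:

```shell
cat /root/.kube/config
# Note: the address of apiserver.cluster.local must be the master node's IP address
```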

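Creating an NFS StorageClass

Setting up the NFS service

Server side:

```shell
yum install rpcbind nfs-server
systemctl enable rpcbind
systemctl enable nfs-server
systemctl start rpcbind
systemctl start nfs-server
vim /etc/exports
```

Add the export to /etc/exports:

```
/D/CACHE/NFS *(rw,sync,no_root_squash,no_subtree_check)
```

Reload and list the exports:

```shell
exportfs -r
exportfs
showmount -e
```

Client side:

```shell
yum install -y nfs-utils
[root@node1 ~]# showmount -e 192.168.122.1
Export list for 192.168.122.1:
/D/CACHE/NFS *
```

Creating the NFS StorageClass in Kuboard

Overview → Create StorageClass. The resulting resources: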

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  finalizers:
  - kubernetes.io/pv-protection
  name: nfs-pv-nfs-storage
spec:
  accessModes:
  - ReadWriteMany
  capacity:
    storage: 100Gi
  mountOptions: []
  nfs:
    path: /D/CACHE/NFS
    server: 192.168.122.1
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-storageclass-provisioner
  volumeMode: Filesystem
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  finalizers:
  - kubernetes.io/pvc-protection
  name: nfs-pvc-nfs-storage
  namespace: kube-system
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 100Gi
  storageClassName: nfs-storageclass-provisioner
  volumeMode: Filesystem
  volumeName: nfs-pv-nfs-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: eip-nfs-client-provisioner
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: eip-nfs-client-provisioner-runner
rules:
- apiGroups:
  - ''
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - persistentvolumes
  verbs:
  - get
  - list
  - watch
  - create
  - delete
- apiGroups:
  - ''
  resources:
  - persistentvolumeclaims
  verbs:
  - get
  - list
  - watch
  - update
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - update
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: eip-run-nfs-client-provisioner
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: eip-nfs-client-provisioner-runner
subjects:
- kind: ServiceAccount
  name: eip-nfs-client-provisioner
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: eip-leader-locking-nfs-client-provisioner
  namespace: kube-system
rules:
- apiGroups:
  - ''
  resources:
  - endpoints
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: eip-leader-locking-nfs-client-provisioner
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: eip-leader-locking-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: eip-nfs-client-provisioner
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: eip-nfs-nfs-storage
  name: eip-nfs-nfs-storage
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: eip-nfs-nfs-storage
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: eip-nfs-nfs-storage
    spec:
      containers:
      - env:
        - name: PROVISIONER_NAME
          value: nfs-nfs-storage
        - name: NFS_SERVER
          value: 192.168.122.1
        - name: NFS_PATH
          value: /D/CACHE/NFS
        image: >-
          swr.cn-east-2.myhuaweicloud.com/kuboard-dependency/nfs-subdir-external-provisioner:v4.0.2
        name: nfs-client-provisioner
        volumeMounts:
        - mountPath: /persistentvolumes
          name: nfs-client-root
      serviceAccountName: eip-nfs-client-provisioner
      volumes:
      - name: nfs-client-root
        persistentVolumeClaim:
          claimName: nfs-pvc-nfs-storage
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    k8s.kuboard.cn/storageNamespace: 'mysql-space,default,kuboard'
    k8s.kuboard.cn/storageType: nfs_client_provisioner
  name: nfs-storage
mountOptions: []
parameters:
  archiveOnDelete: 'false'
provisioner: nfs-nfs-storage
reclaimPolicy: Delete
volumeBindingMode: Immediate
```

Importing the sample microservices in Kuboard

- Create the namespace
- Import/upload the YAML file
- Run apply

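Diagnosing applications in K8S

Pod stuck in Pending

- Usually the pod cannot be scheduled onto any node.
- The cluster lacks enough (or suitable) resources → delete some pods, lower the pod's resource requests, or add nodes.
- Check the Events section of `kubectl describe pod POD_NAME`.
- A resource the pod depends on, such as a ConfigMap or PVC, is not ready.

Pod stuck in Waiting

- Is the container image name correct?
- Has the image been pushed to the registry?
- Can the node pull it manually with `docker pull` / `ctr -n k8s.io image pull`?

Pod is Running but not working

- Check the YAML for syntax and formatting errors:

  ```shell
  kubectl apply -f xxx.yaml --validate
  ```

- Does the pod match what you expected? Dump the pod as stored on the API server and check it:

  ```shell
  kubectl get pod mypod -o yaml > mypod.yaml
  kubectl apply -f mp.yaml
  ```

Istio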

Installation

```shell
curl -L https://istio.io/downloadIstio | sh -
cd istio-1.17.1
export PATH=$PATH:$PWD/bin
unset https_proxy
istioctl install --set profile=demo
```

Deploying MySQL on K8S

Create the resources in this order: ConfigMap → PVC → Service → Deployment.

Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-mysql
  namespace: mysql-space
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-mysql
  template:
    metadata:
      labels:
        app: my-mysql
    spec:
      containers:
      - name: my-mysql
        image: mysql:8.0.30
        imagePullPolicy: IfNotPresent
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: root
        - name: MYSQL_USER
          value: test
        - name: MYSQL_PASSWORD
          value: test
        ports:
        - containerPort: 3306
          protocol: TCP
          name: http
        volumeMounts:
        - name: my-mysql-data
          mountPath: /var/lib/mysql
        - name: mysql-conf
          mountPath: /etc/mysql/mysql.conf.d
      volumes:
      - name: mysql-conf
        configMap:
          name: mysql-conf
      - name: my-mysql-data
        persistentVolumeClaim:
          claimName: my-mysql-data
```

PVC

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-mysql-data
  namespace: mysql-space
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```

ConfigMap

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-conf
  namespace: mysql-space
data:
  mysql.cnf: |
    [mysqld]
    pid-file = /var/run/mysqld/mysqld.pid
    socket = /var/run/mysqld/mysqld.sock
    datadir = /var/lib/mysql
    symbolic-links=0
    sql-mode=ONLY_FULL_GROUP_BY,STRICT_TRANS_TABLES,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION
```

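Service

The Service must live in the same namespace as the Deployment (mysql-space), because a Service selector only matches pods in its own namespace.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-export
  namespace: mysql-space
spec:
  type: NodePort
  selector:
    app: my-mysql
  ports:
  - port: 3306
    targetPort: 3306
    nodePort: 32306
```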
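A fixed nodePort such as 32306 must lie inside the API server's NodePort range. The default `--service-node-port-range` is 30000-32767, which is why every NodePort in this tutorial is a 3xxxx number. The sketch below checks a port against such a range string. The function names are hypothetical, and a cluster started with a custom range would pass that string instead.

```python
DEFAULT_RANGE = "30000-32767"   # default --service-node-port-range of kube-apiserver


def parse_port_range(spec: str = DEFAULT_RANGE) -> range:
    """Turn an inclusive 'low-high' string into a Python range."""
    low, high = (int(x) for x in spec.split("-"))
    return range(low, high + 1)


def valid_node_port(port: int, spec: str = DEFAULT_RANGE) -> bool:
    return port in parse_port_range(spec)


# nodePort values used in this tutorial
for port in (32306, 31200, 31300, 31065, 32080):
    assert valid_node_port(port), port
print(valid_node_port(3306))   # False
```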

Connection test

Connect with DBeaver to port 32306 on any node's IP address.

Deploying MongoDB on K8S

StatefulSet

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mongo
  namespace: my-space
spec:
  serviceName: mongo
  replicas: 3
  selector:
    matchLabels:
      role: mongo
      environment: test
  template:
    metadata:
      labels:
        role: mongo
        environment: test
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: mongo
        image: 'mongo:3.4'
        command:
        - mongod
        - '--replSet'
        - rs0
        - '--bind_ip'
        - 0.0.0.0
        - '--smallfiles'
        - '--noprealloc'
        ports:
        - containerPort: 27017
        volumeMounts:
        - name: mongo-persistent-storage
          mountPath: /data/db
      - name: mongo-sidecar
        image: cvallance/mongo-k8s-sidecar
        env:
        - name: MONGO_SIDECAR_POD_LABELS
          value: 'role=mongo,environment=test'
  volumeClaimTemplates:
  - metadata:
      name: mongo-persistent-storage
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
```

Service (headless)

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mongo
  namespace: my-space
  labels:
    name: mongo
spec:
  ports:
  - port: 27017
    targetPort: 27017
  clusterIP: None
  selector:
    role: mongo
```
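The headless Service (clusterIP: None) gives each StatefulSet pod a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`, where the pod name is `<statefulset>-<ordinal>`. The MongoDB connection string that follows is built from these names. The sketch below generates them. It assumes the default cluster domain `cluster.local`, and the function names are hypothetical. Short names such as `mongo-0.mongo` only resolve from pods in the same namespace.

```python
from typing import List


def statefulset_hosts(sts: str, service: str, replicas: int,
                      namespace: str = "", cluster_domain: str = "cluster.local") -> List[str]:
    """Stable DNS names of StatefulSet pods behind a headless Service."""
    hosts = []
    for ordinal in range(replicas):
        name = f"{sts}-{ordinal}.{service}"
        if namespace:                              # fully qualified form
            name += f".{namespace}.svc.{cluster_domain}"
        hosts.append(name)
    return hosts


def mongo_uri(hosts: List[str], db: str, port: int = 27017) -> str:
    return "mongodb://" + ",".join(f"{h}:{port}" for h in hosts) + f"/{db}"


print(mongo_uri(statefulset_hosts("mongo", "mongo", 3), "dbname"))
# mongodb://mongo-0.mongo:27017,mongo-1.mongo:27017,mongo-2.mongo:27017/dbname
```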

Connection (from a pod in the my-space namespace)

```
mongodb://mongo-0.mongo,mongo-1.mongo,mongo-2.mongo:27017/dbname
```

Deploying ZooKeeper on K8S

Deploy

```yaml
apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  labels:
    app: zk
spec:
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
  clusterIP: None
  selector:
    app: zk
---
apiVersion: v1
kind: Service
metadata:
  name: zk-cs
  labels:
    app: zk
spec:
  ports:
  - port: 2181
    name: client
  selector:
    app: zk
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  selector:
    matchLabels:
      app: zk
  maxUnavailable: 1 # or: minAvailable: 2
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  template:
    metadata:
      labels:
        app: zk
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "app"
                operator: In
                values:
                - zk
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: kubernetes-zookeeper
        imagePullPolicy: IfNotPresent
        image: "kubebiz/zookeeper:3.4.10"
        resources:
          requests:
            memory: "1Gi"
            cpu: "0.5"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "start-zookeeper \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data/log \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=512M \
          --max_client_cnxns=60 \
          --snap_retain_count=3 \
          --purge_interval=12 \
          --max_session_timeout=40000 \
          --min_session_timeout=4000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/zookeeper
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: datadir
    spec:
      storageClassName: "nfs-storage"
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
```
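The ensemble is started with `--servers=3`, and zk-pdb allows `maxUnavailable: 1` (equivalently `minAvailable: 2`). Both numbers follow from majority quorum. The sketch below computes the quorum and the number of members that can be down. It is a simplification: a PodDisruptionBudget only limits voluntary disruptions such as drains and evictions, so `pdb_is_safe` says nothing about node crashes. The function names are hypothetical.

```python
def quorum(servers: int) -> int:
    """Smallest majority of an ensemble of `servers` voting members."""
    return servers // 2 + 1


def tolerated_failures(servers: int) -> int:
    """How many members can be down while a majority is still available."""
    return servers - quorum(servers)


def pdb_is_safe(servers: int, max_unavailable: int) -> bool:
    """Voluntary evictions allowed by the PDB never break quorum."""
    return max_unavailable <= tolerated_failures(servers)


print(quorum(3), tolerated_failures(3), pdb_is_safe(3, 1))   # 2 1 True
```

The same arithmetic shows why ensembles use odd sizes: a 4-member ensemble still tolerates only one failure.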

Test

```shell
# Connect
zxl@linux:/D/Develop/apache-zookeeper-3.6.2-bin/bin$ ./zkCli.sh -server localhost:30281
# Create a znode with data, read the data, delete a node, list child nodes
[zk: localhost:30281(CONNECTED) 0] create /zk_test
Created /zk_test
[zk: localhost:30281(CONNECTED) 1] delete /zk_test
[zk: localhost:30281(CONNECTED) 2] create /zk_test test111
Created /zk_test
[zk: localhost:30281(CONNECTED) 3] get /zk_test
test111
[zk: localhost:30281(CONNECTED) 4] ls /zk_test
[]
[zk: localhost:30281(CONNECTED) 5] create /zk_test/test2 test222
Created /zk_test/test2
[zk: localhost:30281(CONNECTED) 6] ls /zk_test
[test2]
[zk: localhost:30281(CONNECTED) 7] get /zk_test/test2
test222
[zk: localhost:30281(CONNECTED) 8]
```

Deploying Kafka on K8S

Deploy

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: kafka-pdb
spec:
  selector:
    matchLabels:
      app: kafka
  maxUnavailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kafka
spec:
  serviceName: kafka-hs
  replicas: 3
  podManagementPolicy: Parallel
  updateStrategy:
    type: RollingUpdate
  selector:
    matchLabels:
      app: kafka
  template:
    metadata:
      labels:
        app: kafka
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "app"
                operator: In
                values:
                - kafka
            topologyKey: "kubernetes.io/hostname"
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: "app"
                  operator: In
                  values:
                  - zk
              topologyKey: "kubernetes.io/hostname"
      terminationGracePeriodSeconds: 300
      containers:
      - name: k8skafka
        imagePullPolicy: IfNotPresent
        image: kubebiz/kafka:0.10.2.1
        resources:
          requests:
            memory: "1Gi"
            cpu: "0.5"
        ports:
        - containerPort: 9093
          name: server
        command:
        - sh
        - -c
        - "exec kafka-server-start.sh /opt/kafka/config/server.properties \
          --override broker.id=${HOSTNAME##*-} \
          --override listeners=PLAINTEXT://:9093 \
          --override zookeeper.connect=zk-cs.default.svc.cluster.local:2181 \
          --override log.dirs=/var/lib/kafka \
          --override auto.create.topics.enable=true \
          --override auto.leader.rebalance.enable=true \
          --override background.threads=10 \
          --override compression.type=producer \
          --override delete.topic.enable=false \
          --override leader.imbalance.check.interval.seconds=300 \
          --override leader.imbalance.per.broker.percentage=10 \
          --override log.flush.interval.messages=9223372036854775807 \
          --override log.flush.offset.checkpoint.interval.ms=60000 \
          --override log.flush.scheduler.interval.ms=9223372036854775807 \
          --override log.retention.bytes=-1 \
          --override log.retention.hours=168 \
          --override log.roll.hours=168 \
          --override log.roll.jitter.hours=0 \
          --override log.segment.bytes=1073741824 \
          --override log.segment.delete.delay.ms=60000 \
          --override message.max.bytes=1000012 \
          --override min.insync.replicas=1 \
          --override num.io.threads=8 \
          --override num.network.threads=3 \
          --override num.recovery.threads.per.data.dir=1 \
          --override num.replica.fetchers=1 \
          --override offset.metadata.max.bytes=4096 \
          --override offsets.commit.required.acks=-1 \
          --override offsets.commit.timeout.ms=5000 \
          --override offsets.load.buffer.size=5242880 \
          --override offsets.retention.check.interval.ms=600000 \
          --override offsets.retention.minutes=1440 \
          --override offsets.topic.compression.codec=0 \
          --override offsets.topic.num.partitions=50 \
          --override offsets.topic.replication.factor=3 \
          --override offsets.topic.segment.bytes=104857600 \
          --override queued.max.requests=500 \
          --override quota.consumer.default=9223372036854775807 \
          --override quota.producer.default=9223372036854775807 \
          --override replica.fetch.min.bytes=1 \
          --override replica.fetch.wait.max.ms=500 \
          --override replica.high.watermark.checkpoint.interval.ms=5000 \
          --override replica.lag.time.max.ms=10000 \
          --override replica.socket.receive.buffer.bytes=65536 \
          --override replica.socket.timeout.ms=30000 \
          --override request.timeout.ms=30000 \
          --override socket.receive.buffer.bytes=102400 \
          --override socket.request.max.bytes=104857600 \
          --override socket.send.buffer.bytes=102400 \
          --override unclean.leader.election.enable=true \
          --override zookeeper.session.timeout.ms=6000 \
          --override zookeeper.set.acl=false \
          --override broker.id.generation.enable=true \
          --override connections.max.idle.ms=600000 \
          --override controlled.shutdown.enable=true \
          --override controlled.shutdown.max.retries=3 \
          --override controlled.shutdown.retry.backoff.ms=5000 \
          --override controller.socket.timeout.ms=30000 \
          --override default.replication.factor=1 \
          --override fetch.purgatory.purge.interval.requests=1000 \
          --override group.max.session.timeout.ms=300000 \
          --override group.min.session.timeout.ms=6000 \
          --override inter.broker.protocol.version=0.10.2-IV0 \
          --override log.cleaner.backoff.ms=15000 \
          --override log.cleaner.dedupe.buffer.size=134217728 \
          --override log.cleaner.delete.retention.ms=86400000 \
          --override log.cleaner.enable=true \
          --override log.cleaner.io.buffer.load.factor=0.9 \
          --override log.cleaner.io.buffer.size=524288 \
          --override log.cleaner.io.max.bytes.per.second=1.7976931348623157E308 \
          --override log.cleaner.min.cleanable.ratio=0.5 \
          --override log.cleaner.min.compaction.lag.ms=0 \
          --override log.cleaner.threads=1 \
          --override log.cleanup.policy=delete \
          --override log.index.interval.bytes=4096 \
          --override log.index.size.max.bytes=10485760 \
          --override log.message.timestamp.difference.max.ms=9223372036854775807 \
          --override log.message.timestamp.type=CreateTime \
          --override log.preallocate=false \
          --override log.retention.check.interval.ms=300000 \
          --override max.connections.per.ip=2147483647 \
          --override num.partitions=1 \
          --override producer.purgatory.purge.interval.requests=1000 \
          --override replica.fetch.backoff.ms=1000 \
          --override replica.fetch.max.bytes=1048576 \
          --override replica.fetch.response.max.bytes=10485760 \
          --override reserved.broker.max.id=1000 "
        env:
        - name: KAFKA_HEAP_OPTS
          value: "-Xmx512M -Xms512M"
        - name: KAFKA_OPTS
          value: "-Dlogging.level=INFO"
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/kafka
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "/opt/kafka/bin/kafka-broker-api-versions.sh --bootstrap-server=localhost:9093"
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: datadir
    spec:
      storageClassName: "nfs-storage"
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 10Gi
```

The StatefulSet sets serviceName: kafka-hs, but no Service named kafka-hs is defined here. The brokers only get stable per-pod DNS names if a headless Service with that name exists (clusterIP: None, selector app: kafka).
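Each broker derives its `broker.id` from its StatefulSet ordinal through the shell expansion `${HOSTNAME##*-}`, which deletes the longest prefix ending in `-`. The sketch below mirrors that expansion in Python.

It also checks the limit implied by the manifest. With `broker.id.generation.enable=true`, Kafka requires a configured broker.id to be no greater than `reserved.broker.max.id`, which is 1000 here. The function name is hypothetical.

```python
def broker_id(hostname: str, reserved_max_id: int = 1000) -> int:
    """Mimic ${HOSTNAME##*-}: drop everything up to the last '-', keep the ordinal."""
    bid = int(hostname.rsplit("-", 1)[-1])
    # A configured broker.id may not exceed reserved.broker.max.id when
    # broker.id.generation.enable=true (both set in the manifest above).
    if bid > reserved_max_id:
        raise ValueError(f"broker.id {bid} exceeds reserved.broker.max.id {reserved_max_id}")
    return bid


print([broker_id(f"kafka-{i}") for i in range(3)])   # [0, 1, 2]
```

Because StatefulSet ordinals are unique and stable, each broker keeps the same ID across restarts.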

Test

```shell
kafka@kafka-0:/$ kafka-topics.sh --create --zookeeper zk-cs.default.svc.cluster.local:2181 --replication-factor 1 --partitions 1 --topic test-topic
Created topic "test-topic".
kafka@kafka-0:/$ kafka-topics.sh --delete --zookeeper zk-cs.default.svc.cluster.local:2181 --topic test-topic
Topic test-topic is marked for deletion.
Note: This will have no impact if delete.topic.enable is not set to true.
kafka@kafka-0:/$
```

The brokers are started with delete.topic.enable=false, so the delete command only marks the topic for deletion and the topic is not actually removed.

Deploying Harbor on K8S

Deploying with Helm

Version: 2.7.1

```shell
root@linux:~/harbor-helm# helm repo add harbor https://helm.goharbor.io
root@linux:~/harbor-helm# helm fetch harbor/harbor --untar
root@linux:~/harbor-helm# export KUBECONFIG=/etc/rancher/k3s/k3s.yaml
root@linux:~/harbor-helm# helm install myharbor harbor -n harbor
```

Accessing Harbor through Ingress

Add a hosts entry (vim /etc/hosts):

```
127.0.0.1 core.harbor.domain
```

Then open http://core.harbor.domain.

Generating a self-signed HTTPS certificate

```shell
# Generate a Certificate Authority certificate
openssl genrsa -out ca.key 4096
openssl req -x509 -new -nodes -sha512 -days 3650 \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=core.harbor.domain" \
  -key ca.key \
  -out ca.crt

# Generate a server certificate
openssl genrsa -out core.harbor.domain.key 4096
openssl req -sha512 -new \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=core.harbor.domain" \
  -key core.harbor.domain.key \
  -out core.harbor.domain.csr

cat > v3.ext <<-EOF
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
DNS.1=core.harbor.domain
DNS.2=core.harbor
DNS.3=hostname
EOF

openssl x509 -req -sha512 -days 3650 \
  -extfile v3.ext \
  -CA ca.crt -CAkey ca.key -CAcreateserial \
  -in core.harbor.domain.csr \
  -out core.harbor.domain.crt
```

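Configuring the certificate

Replace the myharbor-ingress secret with the new certificate (k is a shell alias for kubectl):

```shell
k delete secret myharbor-ingress -n harbor
k create secret -n harbor generic myharbor-ingress --from-file=ca.crt=ca.crt --from-file=tls.crt=core.harbor.domain.crt --from-file=tls.key=core.harbor.domain.key
```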

Access test

Open https://core.harbor.domain.

Deploying Elasticsearch on K8S

StatefulSet

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: elk
spec:
  serviceName: es-cluster
  replicas: 3
  selector:
    matchLabels:
      app: es-cluster
  template:
    metadata:
      name: es-cluster
      labels:
        app: es-cluster
    spec:
      initContainers:
      - name: init-sysctl
        image: busybox
        command:
        - sysctl
        - '-w'
        - vm.max_map_count=262144
        imagePullPolicy: IfNotPresent
        securityContext:
          privileged: true
      containers:
      - image: 'docker.elastic.co/elasticsearch/elasticsearch:7.17.5'
        name: es
        env:
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: cluster.name
          value: my-cluster
        - name: cluster.initial_master_nodes
          value: 'es-cluster-0,es-cluster-1,es-cluster-2'
        - name: discovery.seed_hosts
          value: es-cluster
        - name: network.host
          value: _site_
        - name: ES_JAVA_OPTS
          value: '-Xms512m -Xmx512m'
        volumeMounts:
        - name: es-cluster-data
          mountPath: /usr/share/elasticsearch/data
      volumes:
      - name: es-cluster-data
        emptyDir: {}
```

The privileged init container raises vm.max_map_count on the node, because Elasticsearch requires at least 262144. The data volume is an emptyDir, so index data is lost whenever a pod is deleted or rescheduled. Use volumeClaimTemplates if the data must persist.

Headless Service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: es-cluster
  namespace: elk
spec:
  clusterIP: None
  ports:
  - name: http
    port: 9200
  - name: tcp
    port: 9300
  selector:
    app: es-cluster
```

NodePort Service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: es-cluster-nodeport
  namespace: elk
spec:
  type: NodePort
  selector:
    app: es-cluster
  ports:
  - name: http
    port: 9200
    targetPort: 9200
    nodePort: 31200
  - name: tcp
    port: 9300
    targetPort: 9300
    nodePort: 31300
```

Deploying Filebeat on K8S

DaemonSet

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: elk
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: filebeat
        image: 'docker.elastic.co/beats/filebeat:7.17.5'
        args:
        - '-c'
        - /etc/filebeat.yml
        - '-e'
        env:
        - name: ELASTICSEARCH_HOST
          value: es-cluster
        - name: ELASTICSEARCH_PORT
          value: '9200'
        - name: ELASTICSEARCH_USERNAME
          value: elastic
        - name: ELASTICSEARCH_PASSWORD
          value: "123456"
        - name: ELASTIC_CLOUD_ID
          value: null
        - name: ELASTIC_CLOUD_AUTH
          value: null
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 416 # 0640 in octal
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: varlog
        hostPath:
          path: /var/log
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
```

ConfigMap

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: elk
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: container
      paths:
        - /var/log/containers/*.log
      processors:
        - add_kubernetes_metadata:
            host: ${NODE_NAME}
            matchers:
            - logs_path:
                logs_path: "/var/log/containers/"

    # To enable hints based autodiscover, remove `filebeat.inputs` configuration and uncomment this:
    #filebeat.autodiscover:
    #  providers:
    #    - type: kubernetes
    #      node: ${NODE_NAME}
    #      hints.enabled: true
    #      hints.default_config:
    #        type: container
    #        paths:
    #          - /var/log/containers/*${data.kubernetes.container.id}.log

    processors:
      - add_cloud_metadata:
      - add_host_metadata:

    cloud.id: ${ELASTIC_CLOUD_ID}
    cloud.auth: ${ELASTIC_CLOUD_AUTH}

    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
      username: ${ELASTICSEARCH_USERNAME}
      password: ${ELASTICSEARCH_PASSWORD}
```

filebeat.yml is stored as a literal block scalar (|-) so its line breaks are preserved. A folded scalar (>-) would join the top-level lines and break the Filebeat configuration.

RBAC

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: elk
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: elk
  labels:
    k8s-app: filebeat
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups:
  - ''
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
```

Deploying Kibana on K8S

Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kb
  namespace: elk
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kb
  template:
    metadata:
      name: kb
      labels:
        app: kb
    spec:
      containers:
      - name: kb
        image: 'docker.elastic.co/kibana/kibana:7.17.5'
        env:
        - name: ELASTICSEARCH_HOSTS
          value: '["http://es-cluster:9200"]'
        ports:
        - name: http
          containerPort: 5601
```

Service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kb-svc
  namespace: elk
spec:
  type: NodePort
  selector:
    app: kb
  ports:
  - port: 5601
    targetPort: 5601
    nodePort: 31065
```

Deploying GitLab on K8S

StatefulSet

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: gitlab
  namespace: my-space
spec:
  serviceName: gitlab
  replicas: 1
  selector:
    matchLabels:
      app: gitlab
  template:
    metadata:
      labels:
        app: gitlab
    spec:
      containers:
      - name: gitlab
        image: 'gitlab/gitlab-ce:15.2.3-ce.0'
        ports:
        - containerPort: 80
          name: web
```

Service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: gitlab-svc
  namespace: my-space
spec:
  type: NodePort
  selector:
    app: gitlab
  ports:
  - port: 80
    targetPort: 80
```

Getting the initial root password

```shell
k exec gitlab-0 -n my-space -- cat /etc/gitlab/initial_root_password
```

Login: root / C5b1sQhAfMQpHDqDsBBaB5S5BXXU95uP6u7Jj7Tfo2M=

Deploying Prometheus + Grafana on K8S

Download

```shell
git clone https://github.com/prometheus-operator/kube-prometheus.git -b release-0.12
```

Install

```shell
cd /D/Workspace/K8S/learning-demo/22-prometheus-deploy/kube-prometheus
# Install the CRDs and the namespace
k apply --server-side -f manifests/setup
# Wait until the CRDs are served before creating the other resources
until k get servicemonitors -A ; do date; sleep 1; echo ""; done
# (Commented out) rewrite registry.k8s.io images to a mirror registry:
# find /D/Workspace/K8S/learning-demo/22-prometheus-deploy/kube-prometheus/manifests -name "*.yaml" |xargs perl -pi -e 's|registry.k8s.io|registry.cn-hangzhou.aliyuncs.com/google_containers|g'
```

Change the image addresses:

- prometheusAdapter-deployment.yaml → thinkingdata/prometheus-adapter:v0.10.0
- kubeStateMetrics-deployment.yaml → bitnami/kube-state-metrics:2.7.0

Create the other resources:

```shell
k apply -f manifests
```
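The commented-out perl one-liner swaps the registry prefix in every manifest. The sketch below does the same in Python with two small changes:

- `re.escape` makes the dots literal (in the perl pattern an unescaped `.` matches any character);
- a lookahead only rewrites the prefix when it is followed by `/`, as in an image reference.

The function names are hypothetical. This is a sketch of the technique, not a tool shipped with kube-prometheus.

```python
import re
from pathlib import Path


def rewrite_registry(text: str, old: str, new: str) -> str:
    """Replace the registry prefix `old` (followed by '/') with `new` in image references."""
    return re.sub(re.escape(old) + r"(?=/)", lambda _m: new, text)


def rewrite_manifests(directory: str, old: str, new: str) -> int:
    """Apply rewrite_registry to every *.yaml under `directory`; return files changed."""
    changed = 0
    for path in Path(directory).rglob("*.yaml"):
        text = path.read_text()
        updated = rewrite_registry(text, old, new)
        if updated != text:
            path.write_text(updated)
            changed += 1
    return changed


print(rewrite_registry("image: registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.7.0",
                       "registry.k8s.io", "registry.cn-hangzhou.aliyuncs.com/google_containers"))
```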

Deploying Jenkins on K8S

Namespace

```shell
k create ns jenkins
```

PVC

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-pvc-claim
  namespace: jenkins
spec:
  storageClassName: "nfs-storage"
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```

Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins-deployment
  namespace: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      securityContext:
        fsGroup: 1000
      containers:
        - name: jenkins
          image: 'jenkins/jenkins:2.346.3-2-jdk8'
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080
              name: web
              protocol: TCP
            - containerPort: 50000
              name: agent
              protocol: TCP
          volumeMounts:
            - name: jenkins-storage
              mountPath: /var/jenkins_home
      volumes:
        - name: jenkins-storage
          persistentVolumeClaim:
            claimName: jenkins-pvc-claim

svc

apiVersion: v1
kind: Service
metadata:
  name: jenkins-export
  namespace: jenkins
spec:
  selector:
    app: jenkins
  type: NodePort
  ports:
    - name: http
      port: 8080
      targetPort: 8080
      nodePort: 32080

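Get the initial admin password

# Option 1: read the file inside the pod (use the pod name shown by k get po)
k get po -n jenkins
k exec -it jenkins-deployment-6fb7cc656f-6mvrp -n jenkins -- cat /var/jenkins_home/secrets/initialAdminPassword
# 14095a60f7124247beeb3d1989a1b08c
# Option 2: the password is also printed in the pod log on first startup
k logs -f -n jenkins jenkins-deployment-6fb7cc656f-6mvrp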

rbac

Create a ServiceAccount and grant it specific permissions; it is used later when configuring the jenkins-slave (agent).

apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins2
  namespace: jenkins
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkins2
  namespace: jenkins
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
  - apiGroups: ["extensions", "apps"]
    resources: ["deployments"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jenkins2
  namespace: jenkins
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: jenkins2
subjects:
  - kind: ServiceAccount
    name: jenkins2
    namespace: jenkins
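To check what the jenkins2 Role above actually grants, here is a minimal Go sketch of RBAC rule evaluation under simplifying assumptions: a request is allowed if any single rule lists its API group, resource, and verb (RBAC is purely additive, there are no deny rules), `*` is treated as a wildcard, and features of the real authorizer such as resourceNames and non-resource URLs are ignored. `PolicyRule`, `allowed`, and `jenkins2Rules` are illustrative names, not the Kubernetes API types.

```go
package main

import "fmt"

// PolicyRule mirrors the apiGroups/resources/verbs fields of an RBAC rule.
type PolicyRule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

// contains reports whether list holds v or the "*" wildcard.
func contains(list []string, v string) bool {
	for _, s := range list {
		if s == v || s == "*" {
			return true
		}
	}
	return false
}

// allowed reports whether any rule grants verb on group/resource.
func allowed(rules []PolicyRule, group, resource, verb string) bool {
	for _, r := range rules {
		if contains(r.APIGroups, group) && contains(r.Resources, resource) && contains(r.Verbs, verb) {
			return true
		}
	}
	return false
}

// jenkins2Rules copies the rules of the jenkins2 Role.
var jenkins2Rules = []PolicyRule{
	{[]string{""}, []string{"pods"}, []string{"create", "delete", "get", "list", "patch", "update", "watch"}},
	{[]string{""}, []string{"pods/exec"}, []string{"create", "delete", "get", "list", "patch", "update", "watch"}},
	{[]string{""}, []string{"pods/log"}, []string{"get", "list", "watch"}},
	{[]string{""}, []string{"secrets"}, []string{"get"}},
	{[]string{"extensions", "apps"}, []string{"deployments"}, []string{"get", "list", "watch", "create", "update", "patch", "delete"}},
}

func main() {
	checks := [][3]string{
		{"", "pods", "create"},
		{"", "pods/exec", "create"},
		{"", "secrets", "list"},
		{"apps", "deployments", "delete"},
	}
	for _, c := range checks {
		fmt.Printf("group=%q resource=%q verb=%q -> %v\n", c[0], c[1], c[2], allowed(jenkins2Rules, c[0], c[1], c[2]))
	}
}
```

With these rules the agent can create pods and exec into them, but listing secrets is denied because the Role only grants get on secrets.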

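Install the Kubernetes plugin

The Kubernetes plugin can dynamically create Pods for Jenkins slaves (agents).

Installing the plugin is fairly slow, so be patient; because it installs online, the cluster needs internet access.

Note: this plugin currently only supports standard K8S clusters; k3s, k0s, and similar distributions are not supported.

Manage Jenkins–>Manage Plugins–>Available–>search for Kubernetes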

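Configure the Kubernetes plugin

This lets Jenkins connect to the K8S cluster and call its API to dynamically create jenkins slave pods that run the build jobs.

Manage Jenkins–>Manage Nodes and Clouds–>Configure Clouds–>

If the K8S cluster was installed as the root user, the kubeconfig file is located at

/root/.kube/config

Deploying the RocketMQ Operator on K8S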

Go version requirement: 1.16.x

cd /D/Workspace/K8S/learning-demo/24-rocketmq-operator/
./01-git-clone.sh
./02-deploy.sh

Verify

# Deploy the cluster (storage defaults to EmptyDir)
k apply -f example/rocketmq_v1alpha1_rocketmq_cluster.yaml
# Expose it for access on port 30000
k apply -f example/rocketmq_v1alpha1_cluster_service.yaml

Deploying Redis on K8S

ConfigMap

apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-config
data:
  redis-config: |
    maxmemory 2mb
    maxmemory-policy allkeys-lru
    dir /data
    appendonly yes
    save 900 1
    save 300 10
    save 60 10000
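The maxmemory-policy allkeys-lru setting tells Redis to evict keys from the whole keyspace in least-recently-used order once maxmemory (2mb here) is reached. Redis itself uses an approximated LRU that samples a few keys rather than keeping an exact ordering, so the Go sketch below swaps in a plain exact LRU just to illustrate the policy. The byte budget counts only key and value lengths, which is an assumption and not how Redis accounts memory; `LRU`, `NewLRU`, `Get`, and `Set` are illustrative names.

```go
package main

import (
	"container/list"
	"fmt"
)

type entry struct {
	key, value string
}

// LRU is an exact least-recently-used cache bounded by a byte budget.
type LRU struct {
	maxBytes int
	used     int
	order    *list.List               // front = most recently used
	items    map[string]*list.Element // key -> element in order
}

func NewLRU(maxBytes int) *LRU {
	return &LRU{maxBytes: maxBytes, order: list.New(), items: map[string]*list.Element{}}
}

func size(e *entry) int { return len(e.key) + len(e.value) }

// Get returns the value and marks the key as recently used.
func (c *LRU) Get(key string) (string, bool) {
	el, ok := c.items[key]
	if !ok {
		return "", false
	}
	c.order.MoveToFront(el)
	return el.Value.(*entry).value, true
}

// Set stores the key, then evicts least-recently-used keys until under budget.
func (c *LRU) Set(key, value string) {
	if el, ok := c.items[key]; ok {
		e := el.Value.(*entry)
		c.used += len(value) - len(e.value)
		e.value = value
		c.order.MoveToFront(el)
	} else {
		e := &entry{key, value}
		c.items[key] = c.order.PushFront(e)
		c.used += size(e)
	}
	for c.used > c.maxBytes && c.order.Len() > 0 {
		oldest := c.order.Back()
		e := oldest.Value.(*entry)
		c.order.Remove(oldest)
		delete(c.items, e.key)
		c.used -= size(e)
	}
}

func main() {
	c := NewLRU(8) // tiny budget: each "kN"/"vN" pair costs 4 bytes
	c.Set("k1", "v1")
	c.Set("k2", "v2")
	c.Get("k1")       // k1 becomes the most recently used key
	c.Set("k3", "v3") // over budget: evicts k2, the least recently used key
	_, ok1 := c.Get("k1")
	_, ok2 := c.Get("k2")
	fmt.Println(ok1, ok2) // true false
}
```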

Pod

apiVersion: v1
kind: Pod
metadata:
  name: redis
  labels:
    app: redis
spec:
  containers:
    - name: redis
      image: 'redis'
      command:
        - redis-server
        - /redis-conf/redis.conf
      env:
        - name: MASTER
          value: 'true'
      ports:
        - containerPort: 6379
      resources:
        limits:
          cpu: '0.1'
      volumeMounts:
        - mountPath: /data
          name: data
        - mountPath: /redis-conf
          name: config
  volumes:
    - name: data
      emptyDir: {}
    - name: config
      configMap:
        name: redis-config
        items:
          - key: redis-config
            path: redis.conf

Service

apiVersion: v1
kind: Service
metadata:
  name: redis-master
  labels:
    app: redis
spec:
  selector:
    app: redis
  ports:
    - port: 6379
      targetPort: 6379

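Deploying a Redis Cluster on K8S

Redis Proxy is one way to build a Redis cluster: it is a proxy layer that speaks the Redis protocol and forwards client requests to multiple Redis instances, providing high availability and load balancing. Its advantages are that neither the client code nor the Redis instances need to change, a cluster can be set up quickly, and dynamic scale-out and scale-in are supported.

The steps for building a Redis cluster with a Redis Proxy are:

- Install a Redis Proxy; you can choose the open-source Twemproxy or the commercial Redis Enterprise Proxy.

- Configure the Redis Proxy, including the listening port, the addresses and ports of the Redis instances, weights, and other parameters.

- Start the Redis Proxy so that it listens for client requests and forwards them to the Redis instances.

- Set up master-replica replication on the Redis instances so that data on each master is synchronized to its replicas.

- Use Redis Cluster or other tools to scale the Redis instances out or in horizontally.

Note that with a Redis Proxy you still have to ensure the data consistency and reliability of the Redis instances; Redis Sentinel or other monitoring tools can be used to monitor and manage them. Network latency, load balancing, and similar factors also need to be considered to improve the cluster's performance and availability.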
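The redis.conf in this setup enables cluster mode (cluster-enabled yes), so a cluster-aware proxy such as redis-cluster-proxy routes each command by Redis Cluster hash slot. Per the Redis Cluster specification, a key maps to one of 16384 slots via CRC16 (XMODEM variant) modulo 16384, and if the key contains a non-empty `{...}` hash tag only the tag is hashed. A minimal Go sketch of that lookup, assuming a hypothetical three-master layout with the even slot split that redis-cli --cluster create produces for three masters; the node names are illustrative and the real master assignment depends on how the cluster was created.

```go
package main

import (
	"fmt"
	"strings"
)

// crc16 implements CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0.
func crc16(s string) uint16 {
	var crc uint16
	for i := 0; i < len(s); i++ {
		crc ^= uint16(s[i]) << 8
		for b := 0; b < 8; b++ {
			if crc&0x8000 != 0 {
				crc = crc<<1 ^ 0x1021
			} else {
				crc <<= 1
			}
		}
	}
	return crc
}

// keySlot maps a key to one of the 16384 hash slots. If the key contains a
// non-empty {hash tag}, only the tag is hashed, so related keys share a slot.
func keySlot(key string) int {
	if start := strings.IndexByte(key, '{'); start >= 0 {
		if end := strings.IndexByte(key[start+1:], '}'); end > 0 {
			key = key[start+1 : start+1+end]
		}
	}
	return int(crc16(key) % 16384)
}

// ranges is a hypothetical slot layout for three masters.
var ranges = []struct {
	lo, hi int
	node   string
}{
	{0, 5460, "redis-node-0"},
	{5461, 10922, "redis-node-1"},
	{10923, 16383, "redis-node-2"},
}

// nodeFor returns the master that owns the key's slot.
func nodeFor(key string) string {
	slot := keySlot(key)
	for _, r := range ranges {
		if slot >= r.lo && slot <= r.hi {
			return r.node
		}
	}
	return ""
}

func main() {
	for _, k := range []string{"foo", "user:{42}:name", "user:{42}:age"} {
		fmt.Printf("%-16s slot=%-5d node=%s\n", k, keySlot(k), nodeFor(k))
	}
}
```

The two user:{42} keys share a slot, which is what allows multi-key commands on them to be served by a single node.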

cm

# Redis configuration file
apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-conf
data:
  redis.conf: |
    port 6379
    masterauth 123456
    requirepass 123456
    appendonly yes
    dir /var/lib/redis
    cluster-enabled yes
    cluster-config-file /var/lib/redis/nodes.conf
    cluster-node-timeout 5000
---
# redis-proxy configuration file
apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-proxy
data:
  proxy.conf: |
    cluster redis-cluster:6379
    bind 0.0.0.0
    port 7777
    threads 8
    daemonize no
    auth 123456
    enable-cross-slot yes
    log-level error

sts

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis-node
  annotations:
    reloader.stakater.com/auto: "true"
spec:
  serviceName: redis-cluster
  replicas: 6
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
        appCluster: redis-cluster
    spec:
      terminationGracePeriodSeconds: 20
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - redis
                topologyKey: kubernetes.io/hostname
      containers:
        - name: redis
          image: redis
          command:
            - "redis-server"
          args:
            - "/etc/redis/redis.conf"
            - "--protected-mode"
            - "no"
            - "--cluster-announce-ip"
            - "$(POD_IP)"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          resources:
            requests:
              cpu: "100m"
              memory: "100Mi"
          ports:
            - name: redis
              containerPort: 6379
              protocol: "TCP"
            - name: cluster
              containerPort: 16379
              protocol: "TCP"
          volumeMounts:
            - name: "redis-conf"
              mountPath: "/etc/redis"
            - name: "redis-data"
              mountPath: "/var/lib/redis"
      volumes:
        - name: "redis-conf"
          configMap:
            name: "redis-conf"
            items:
              - key: "redis.conf"
                path: "redis.conf"
  volumeClaimTemplates:
    - metadata:
        name: redis-data
      spec:
        accessModes: [ "ReadWriteMany","ReadWriteOnce"]
        resources:
          requests:
            storage: 1G
        storageClassName: nfs-storage
---
apiVersion: v1
kind: Service
metadata:
  name: redis-cluster
  labels:
    app: redis
spec:
  ports:
    - name: redis-port
      port: 6379
  clusterIP: None
  selector:
    app: redis
    appCluster: redis-cluster

proxy

apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-proxy
  annotations:
    reloader.stakater.com/auto: "true"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis-proxy
  template:
    metadata:
      labels:
        app: redis-proxy
    spec:
      imagePullSecrets:
        - name: harbor
      containers:
        - name: redis-proxy
          image: nuptaxin/redis-cluster-proxy:v1.0.0
          imagePullPolicy: IfNotPresent
          command: ["redis-cluster-proxy"]
          args:
            - -c
            - /data/proxy.conf # path to the startup configuration file
          ports:
            - name: redis-proxy
              containerPort: 7777
              protocol: TCP
          volumeMounts:
            - name: redis-proxy-conf
              mountPath: /data/
      volumes:
        - name: redis-proxy-conf
          configMap:
            name: redis-proxy

svc

apiVersion: v1
kind: Service
metadata:
  name: redis-access-service
  labels:
    app: redis
spec:
  ports:
    - name: redis-port
      protocol: "TCP"
      port: 6379
      targetPort: 6379
  type: NodePort
  selector:
    app: redis
    appCluster: redis-cluster
---
# External access to Redis (through the proxy)
apiVersion: v1
kind: Service
metadata:
  name: redis-proxy-service
  labels:
    name: redis-proxy
spec:
  type: NodePort
  ports:
    - port: 7777
      protocol: TCP
      targetPort: 7777
      name: http
      nodePort: 30939
  selector:
    app: redis-proxy

Deploying Kaniko on K8S

configmap

apiVersion: v1
kind: ConfigMap
metadata:
  name: dockerfile-storage
  namespace: default
data:
  dockerfile: |
    FROM alpine
    RUN echo "created from standard input"

pod

apiVersion: v1
kind: Pod
metadata:
  name: kaniko
  namespace: default
spec:
  containers:
    - name: kaniko
      image: kubebiz/kaniko:executor-v1.9.1
      args:
        - '--dockerfile=/workspace/dockerfile'
        - '--context=dir:///workspace' # dir:// followed by the absolute path /workspace
        - '--no-push'
      volumeMounts:
        - name: dockerfile-storage
          mountPath: /workspace
  restartPolicy: Never
  volumes:
    - name: dockerfile-storage
      configMap:
        name: dockerfile-storage

Deploying PostgreSQL on K8S

PVC

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-data
spec:
  storageClassName: "nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres-sonar
  labels:
    app: postgres-sonar
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres-sonar
  template:
    metadata:
      labels:
        app: postgres-sonar
    spec:
      containers:
        - name: postgres-sonar
          image: 'postgres:11.4'
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_DB
              value: sonarDB
            - name: POSTGRES_USER
              value: sonarUser
            - name: POSTGRES_PASSWORD
              value: '123456'
          resources:
            limits:
              cpu: 1000m
              memory: 2048Mi
            requests:
              cpu: 500m
              memory: 1024Mi
          volumeMounts:
            - name: data
              mountPath: /var/lib/postgresql/data
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: postgres-data

SVC

apiVersion: v1
kind: Service
metadata:
  name: postgres-sonar
  labels:
    app: postgres-sonar
spec:
  clusterIP: None
  ports:
    - port: 5432
      protocol: TCP
      targetPort: 5432
  selector:
    app: postgres-sonar

Test

# Connect to the given database (run inside the postgres-sonar pod, hence -h localhost)
psql -U sonarUser -h localhost -p 5432 -d sonarDB
# List all databases
\l

Deploying SonarQube on K8S

PVC

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: sonarqube-data
  namespace: my-space # must be in the same namespace as the SonarQube Deployment
spec:
  storageClassName: "nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sonarqube
  namespace: my-space
  labels:
    app: sonarqube
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sonarqube
  template:
    metadata:
      labels:
        app: sonarqube
    spec:
      initContainers: # init container that runs a system command
        - name: init-sysctl
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sysctl", "-w", "vm.max_map_count=262144"] # vm.max_map_count must be raised, otherwise SonarQube may fail to start
          securityContext:
            privileged: true # privileged so it can change kernel parameters
      containers:
        - name: sonarqube
          image: 'sonarqube:8.5.1-community'
          ports:
            - containerPort: 9000
          env:
            - name: SONARQUBE_JDBC_USERNAME
              value: sonarUser
            - name: SONARQUBE_JDBC_PASSWORD
              value: '123456'
            - name: SONARQUBE_JDBC_URL
              # the short name postgres-sonar only resolves if PostgreSQL runs in the same namespace
              value: 'jdbc:postgresql://postgres-sonar:5432/sonarDB'
          livenessProbe:
            httpGet:
              path: /sessions/new
              port: 9000
            initialDelaySeconds: 60
            periodSeconds: 30
          readinessProbe:
            httpGet:
              path: /sessions/new
              port: 9000
            initialDelaySeconds: 60
            periodSeconds: 30
            failureThreshold: 6
          volumeMounts:
            - mountPath: /opt/sonarqube/conf
              name: data
              subPath: conf
            - mountPath: /opt/sonarqube/data
              name: data
              subPath: data
            - mountPath: /opt/sonarqube/extensions
              name: data
              subPath: extensions
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: sonarqube-data

SVC

apiVersion: v1
kind: Service
metadata:
  name: sonarqube
  namespace: my-space
  labels:
    app: sonarqube
spec:
  type: NodePort
  ports:
    - name: sonarqube
      port: 9000
      targetPort: 9000
      protocol: TCP
  selector:
    app: sonarqube

Deploying MinIO on K8S

apiVersion: apps/v1
kind: Deployment
metadata:
  name: minio
spec:
  replicas: 1
  selector:
    matchLabels:
      tier: minio
  template:
    metadata:
      labels:
        tier: minio
    spec:
      containers:
        - name: minio
          image: 'bitnami/minio:2022.8.13-debian-11-r0'
          imagePullPolicy: IfNotPresent
          env:
            - name: MINIO_ROOT_USER
              value: 'admin'
            - name: MINIO_ROOT_PASSWORD
              value: '12345678' # MinIO rejects root passwords shorter than 8 characters
          volumeMounts:
            - name: data
              mountPath: /data
      volumes:
        - name: data
          emptyDir: {}

Deploying Nexus3 on K8S

deploy

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nexus3
  namespace: default
spec:
  selector:
    matchLabels:
      app: nexus3
  template:
    metadata:
      labels:
        app: nexus3
    spec:
      initContainers:
        - name: volume-mount-hack
          image: busybox
          command:
            - sh
            - '-c'
            - 'chown -R 200:200 /nexus-data'
          volumeMounts:
            - name: nexus-data
              mountPath: /nexus-data
      containers:
        - image: sonatype/nexus3:3.41.1
          name: nexus3
          ports:
            - containerPort: 8081
              name: nexus3
          volumeMounts:
            - name: nexus-data
              mountPath: /nexus-data
      volumes:
        - name: nexus-data
          emptyDir: {}

svc

kind: Service
apiVersion: v1
metadata:
  name: nexus3
  labels:
    app: nexus3
spec:
  type: NodePort
  selector:
    app: nexus3
  ports:
    - port: 8081
      targetPort: 8081

Deploying CentOS 7 with Docker (with a graphical desktop)

Deploy

sudo docker run --rm -it --name kasmweb-centos7 --shm-size=512m -p 6901:6901 -e VNC_PW=123456 kasmweb/centos-7-desktop:1.12.0

VNC_PW sets the access password (here 123456)

The default username is kasm_user

https://localhost:6901

Documentation

https://hub.docker.com/r/kasmweb/centos-7-desktop

Deploying Ubuntu 18.04 with Docker (with a graphical desktop)

Deploy

sudo docker run --rm -it --shm-size=512m -p 6902:6901 -e VNC_PW=123456 kasmweb/ubuntu-bionic-desktop:1.10.0

https://localhost:6902

Username: kasm_user, password: 123456

Documentation

https://hub.docker.com/r/kasmweb/ubuntu-bionic-desktop

Running arm64 Docker containers on an x86_64 host

amd64

# Install qemu-aarch64-static
curl -L https://github.com/multiarch/qemu-user-static/releases/download/v7.2.0-1/qemu-aarch64-static -o /D/Workspace/Docker/qemu-aarch64-static
chmod +x /D/Workspace/Docker/qemu-aarch64-static
sudo ln -s /D/Workspace/Docker/qemu-aarch64-static /usr/local/bin/qemu-aarch64-static
# Register qemu with the kernel's binfmt_misc (must be re-run after every reboot)
docker run --rm --privileged multiarch/qemu-user-static:register
# Test: run an arm64 image
docker run --rm -it \
  -v /usr/local/bin/qemu-aarch64-static:/usr/bin/qemu-aarch64-static \
  -v /etc/timezone:/etc/timezone:ro \
  -v /etc/localtime:/etc/localtime:ro \
  arm64v8/ubuntu:20.04 \
  bash
# Inside the container:
uname -m  # aarch64

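The PodDisruptionBudget object in K8S

PDB

A PDB protects availability during voluntary disruptions such as maintenance, upgrades, or node drains: it limits how many of an application's pods can be disrupted at any given time, keeping the system available and reliable.

A PDB has two fields, each of which can be an absolute number or a percentage, set as needed. Only one of them can be specified in a single PDB.

- minAvailable: the minimum number of pods that must remain available at any given time

- maxUnavailable: the maximum number of pods that may be unavailable (disrupted) at any given time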
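When an eviction is requested (for example by kubectl drain), the API server refuses it if it would exceed the budget. A minimal Go sketch of that arithmetic, assuming it mirrors the disruption controller: percentages are scaled against the expected pod count and rounded up, the desired healthy count is minAvailable or expected minus maxUnavailable, and the allowed disruptions are current healthy minus desired, floored at zero. The function names are illustrative, and controller details such as unhealthy-pod eviction policies are left out.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// resolve turns an absolute value ("2") or a percentage ("50%") into a pod
// count relative to total; percentages are rounded up.
func resolve(v string, total int) (int, error) {
	if strings.HasSuffix(v, "%") {
		p, err := strconv.Atoi(strings.TrimSuffix(v, "%"))
		if err != nil {
			return 0, err
		}
		return (p*total + 99) / 100, nil // integer ceil(p*total/100)
	}
	return strconv.Atoi(v)
}

// disruptionsAllowed estimates how many more pods may be evicted right now.
// Exactly one of minAvailable / maxUnavailable is expected to be non-empty.
func disruptionsAllowed(minAvailable, maxUnavailable string, expected, healthy int) (int, error) {
	var desiredHealthy int
	if minAvailable != "" {
		n, err := resolve(minAvailable, expected)
		if err != nil {
			return 0, err
		}
		desiredHealthy = n
	} else {
		n, err := resolve(maxUnavailable, expected)
		if err != nil {
			return 0, err
		}
		desiredHealthy = expected - n
		if desiredHealthy < 0 {
			desiredHealthy = 0
		}
	}
	allowed := healthy - desiredHealthy
	if expected <= 0 || allowed < 0 {
		return 0, nil
	}
	return allowed, nil
}

func main() {
	// 5 replicas, all healthy.
	cases := []struct{ minA, maxU string }{{"50%", ""}, {"", "30%"}, {"4", ""}}
	for _, c := range cases {
		n, err := disruptionsAllowed(c.minA, c.maxU, 5, 5)
		if err != nil {
			panic(err)
		}
		fmt.Printf("minAvailable=%q maxUnavailable=%q -> %d\n", c.minA, c.maxU, n) // 2, 2, 1
	}
}
```

Because percentages round up, minAvailable "50%" of 5 pods keeps 3 pods running, so only 2 may be evicted.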

Installing K8S 1.26.2 with kubeadm

Prepare the base environment

https://mirrors.aliyun.com

# CentOS 7.9.2009
yum install -y vim wget ntpdate
# Edit hosts
cat >> /etc/hosts <<-EOF
192.168.122.101 node1
192.168.122.102 node2
EOF
# Time synchronization
ntpdate ntp1.aliyun.com
systemctl start ntpdate
systemctl enable ntpdate
# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld
systemctl status firewalld
# Disable swap, and comment out only the swap entry in /etc/fstab so it stays off after a reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
# Disable SELinux
getenforce
setenforce 0
sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

Install containerd

# Step 1: install some required system tools
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
# Step 2: add the repository
sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Step 3: point the repository at the Aliyun mirror
sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo
# Step 4: refresh the cache and install Docker-CE (this also installs containerd.io)
sudo yum makecache fast
sudo yum -y install docker-ce
# Step 5: start the Docker service
sudo service docker start
systemctl enable docker

# Note:
# The official repository enables only the latest stable packages by default; edit the repository file to get other versions. For example, the test channel is not enabled by default and can be enabled as follows (other channels work the same way).
# vim /etc/yum.repos.d/docker-ce.repo
#   change enabled=0 to enabled=1 under [docker-ce-test]
#
# Install a specific version of Docker-CE:
# Step 1: list the available Docker-CE versions:
# yum list docker-ce.x86_64 --showduplicates | sort -r
#   Loading mirror speeds from cached hostfile
#   Loaded plugins: branch, fastestmirror, langpacks
#   docker-ce.x86_64    17.03.1.ce-1.el7.centos    docker-ce-stable
#   docker-ce.x86_64    17.03.1.ce-1.el7.centos    @docker-ce-stable
#   docker-ce.x86_64    17.03.0.ce-1.el7.centos    docker-ce-stable
#   Available Packages
# Step 2: install the chosen version (VERSION is, for example, 17.03.0.ce-1.el7.centos from the list above)
# sudo yum -y install docker-ce-[VERSION]

Configure containerd

sudo cp /etc/containerd/config.toml /etc/containerd/config.toml.bak
sudo containerd config default > $HOME/config.toml
sudo cp $HOME/config.toml /etc/containerd/config.toml
# Note: after modifying /etc/containerd/config.toml, stop docker and containerd, then start them again
sudo sed -i "s#registry.k8s.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml
# Make sure cri is not listed in disabled_plugins in /etc/containerd/config.toml
sudo sed -i "s#SystemdCgroup = false#SystemdCgroup = true#g" /etc/containerd/config.toml
systemctl stop docker
systemctl stop containerd
systemctl start docker
systemctl start containerd
sudo systemctl enable --now containerd.service
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://hnkfbj7x.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker

Add the Aliyun K8S yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
# whether this repository is enabled
enabled=1
# whether to verify package GPG signatures (0 = no)
gpgcheck=0
# whether to verify the repository metadata GPG signature (0 = no)
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Install the K8S components

# Load the br_netfilter kernel module; the net.bridge.* settings below require it
sudo modprobe br_netfilter
# Set the required sysctl parameters; they persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
# Apply the sysctl parameters without rebooting
sudo sysctl --system

# List the available versions:
# yum --showduplicates list kubelet --nogpgcheck
# yum --showduplicates list kubeadm --nogpgcheck
# yum --showduplicates list kubectl --nogpgcheck

# 2023-02-07: tested, version 1.24.0 also works with this article
# sudo yum install -y kubelet-1.24.0-0 kubeadm-1.24.0-0 kubectl-1.24.0-0 --disableexcludes=kubernetes --nogpgcheck

# You may read that worker nodes do not need kubectl; that is wrong. kubectl is installed as a dependency, and if its version is not pinned, the latest kubectl gets installed, which may cause problems.
sudo yum install -y kubelet-1.25.3-0 kubeadm-1.25.3-0 kubectl-1.25.3-0 --disableexcludes=kubernetes --nogpgcheck

# 2022-11-18: tested, version 1.25.4 also works with this article
# sudo yum install -y kubelet-1.25.4-0 kubeadm-1.25.4-0 kubectl-1.25.4-0 --disableexcludes=kubernetes --nogpgcheck
# 2023-02-07: tested, version 1.25.5 also works with this article
# sudo yum install -y kubelet-1.25.5-0 kubeadm-1.25.5-0 kubectl-1.25.5-0 --disableexcludes=kubernetes --nogpgcheck
# 2023-02-07: tested, version 1.25.6 also works with this article
# sudo yum install -y kubelet-1.25.6-0 kubeadm-1.25.6-0 kubectl-1.25.6-0 --disableexcludes=kubernetes --nogpgcheck
# 2023-02-07: tested, version 1.26.0 also works with this article
# sudo yum install -y kubelet-1.26.0-0 kubeadm-1.26.0-0 kubectl-1.26.0-0 --disableexcludes=kubernetes --nogpgcheck
# 2023-02-07: tested, version 1.26.1 also works with this article
# sudo yum install -y kubelet-1.26.1-0 kubeadm-1.26.1-0 kubectl-1.26.1-0 --disableexcludes=kubernetes --nogpgcheck
# 2023-03-02: tested, version 1.26.2 (the version in this article's title) also works
# sudo yum install -y kubelet-1.26.2-0 kubeadm-1.26.2-0 kubectl-1.26.2-0 --disableexcludes=kubernetes --nogpgcheck
# 2023-03-21: tested, version 1.26.3 also works with this article
# sudo yum install -y kubelet-1.26.3-0 kubeadm-1.26.3-0 kubectl-1.26.3-0 --disableexcludes=kubernetes --nogpgcheck
# Install the latest version (not recommended for production)
# sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes --nogpgcheck

systemctl daemon-reload
sudo systemctl restart kubelet
sudo systemctl enable kubelet
# Check the kubelet logs and status
# Before the cluster is initialized, kubelet may fail to start
journalctl -xefu kubelet

Initialize the K8S cluster

kubeadm init --image-repository=registry.aliyuncs.com/google_containers
# To specify the cluster (API server advertise) IP:
# kubeadm init --image-repository=registry.aliyuncs.com/google_containers --apiserver-advertise-address=192.168.80.60

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# Alternatively, add this to the environment variables: export KUBECONFIG=/etc/kubernetes/admin.conf
# After adding it, reload the environment: source /etc/profile
kubectl cluster-info
# If initialization fails, reset with: kubeadm reset
# On success, output similar to the following is printed:
# kubeadm join 192.168.80.60:6443 --token f9lvrz.59mykzssqw6vjh32 \
#   --discovery-token-ca-cert-hash sha256:4e23156e2f71c5df52dfd2b9b198cce5db27c47707564684ea74986836900107
# In this environment the output was:
# kubeadm join 192.168.122.101:6443 --token zep3dz.8ft4mebhg7fslqbu \
#   --discovery-token-ca-cert-hash sha256:dffe5efbf0e908471040ea4f6ef790c36fcee39c0b03afb906fd697c70f4152f
#
# If the token has expired (tokens are valid for 24 hours by default), print a new join command with:
# kubeadm token create --print-join-command

Join the worker nodes to the cluster

kubeadm join 192.168.122.101:6443 --token zep3dz.8ft4mebhg7fslqbu \
  --discovery-token-ca-cert-hash sha256:dffe5efbf0e908471040ea4f6ef790c36fcee39c0b03afb906fd697c70f4152f
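The --discovery-token-ca-cert-hash value lets a joining node verify the control plane's CA. According to the kubeadm documentation, it is the SHA-256 of the CA certificate's DER-encoded Subject Public Key Info, and on a kubeadm control-plane node the CA lives at /etc/kubernetes/pki/ca.crt. A minimal Go sketch that computes the value from a PEM file passed as an argument; without an argument it generates a throwaway demo CA (a hypothetical helper, `demoCA`), so that printed hash will not match any real cluster.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/sha256"
	"crypto/x509"
	"crypto/x509/pkix"
	"encoding/hex"
	"encoding/pem"
	"errors"
	"fmt"
	"math/big"
	"os"
	"time"
)

// caCertHash returns "sha256:<hex>" computed over the DER-encoded
// SubjectPublicKeyInfo of the first certificate in pemBytes.
func caCertHash(pemBytes []byte) (string, error) {
	block, _ := pem.Decode(pemBytes)
	if block == nil || block.Type != "CERTIFICATE" {
		return "", errors.New("no CERTIFICATE PEM block found")
	}
	cert, err := x509.ParseCertificate(block.Bytes)
	if err != nil {
		return "", err
	}
	sum := sha256.Sum256(cert.RawSubjectPublicKeyInfo)
	return "sha256:" + hex.EncodeToString(sum[:]), nil
}

// demoCA creates a throwaway self-signed CA so the sketch runs without a cluster.
func demoCA() ([]byte, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber:          big.NewInt(1),
		Subject:               pkix.Name{CommonName: "kubernetes"},
		NotBefore:             time.Now(),
		NotAfter:              time.Now().Add(24 * time.Hour),
		IsCA:                  true,
		BasicConstraintsValid: true,
		KeyUsage:              x509.KeyUsageCertSign,
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, tmpl, &key.PublicKey, key)
	if err != nil {
		return nil, err
	}
	return pem.EncodeToMemory(&pem.Block{Type: "CERTIFICATE", Bytes: der}), nil
}

func main() {
	var data []byte
	var err error
	if len(os.Args) > 1 {
		data, err = os.ReadFile(os.Args[1]) // e.g. /etc/kubernetes/pki/ca.crt
	} else {
		data, err = demoCA()
	}
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	hash, err := caCertHash(data)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println("--discovery-token-ca-cert-hash", hash)
}
```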

Install the calico network plugin

# Download
wget --no-check-certificate https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml
vim calico.yaml
# Below "- name: CLUSTER_TYPE", add the following
- name: CLUSTER_TYPE
  value: "k8s,bgp"
# the lines below are new
- name: IP_AUTODETECTION_METHOD
  value: "interface=<NIC name>" # e.g. eth0
# Or do the same with sed:
# INTERFACE_NAME=ens33
# sed -i '/k8s,bgp/a \ - name: IP_AUTODETECTION_METHOD\n value: "interface=INTERFACE_NAME"' calico.yaml
# sed -i "s#INTERFACE_NAME#$INTERFACE_NAME#g" calico.yaml
# Apply the network configuration
kubectl apply -f calico.yaml

Verify the cluster

kubectl run nginx --image=nginx:latest --port=80
kubectl expose pod/nginx --target-port=80 --type=NodePort

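Renewing kubeadm certificates

The certificates of the main components are valid for 1 year by default.

If the K8S cluster is upgraded to a new version (kubeadm upgrade), the certificates are renewed automatically.

Check the expiration dates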

kubeadm certs check-expiration

Renew the certificates

kubeadm certs renew all
# Output: Done renewing certificates. You must restart the kube-apiserver, kube-controller-manager, kube-scheduler and etcd, so that they can use the new certificates.

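Compiling kubeadm to extend certificate validity

To extend the validity to 100 years, modify kubeadm's Go source code and compile the binary before running kubeadm init.

Helm

Helm is a package manager for K8S, analogous to yum, apt, pip, and npm.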

Common Helm repositories

Commonly used Helm repositories include the official repository and third-party ones; the official repository is https://charts.helm.sh/stable (now deprecated and no longer updated).

Third-party repositories include:

- Bitnami: https://charts.bitnami.com/bitnami
  - chart sources: https://github.com/bitnami/charts/tree/main/bitnami

- Rancher: https://charts.rancher.io

- Aliyun: https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

- Microsoft mirror: http://mirror.azure.cn/kubernetes/charts

To install zookeeper with Helm, you can use the zookeeper chart from the official incubator repository:

helm repo add incubator https://charts.helm.sh/incubator
helm install my-zookeeper incubator/zookeeper

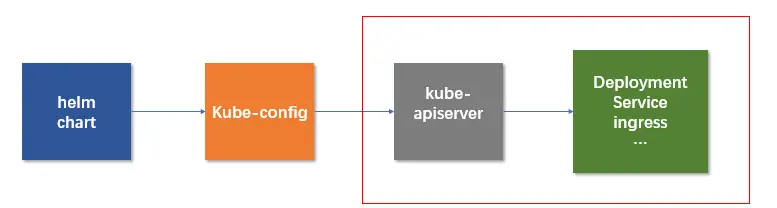

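Binding Helm to a K8S cluster

Helm has to be pointed at the target K8S cluster; this is what allows Helm to talk to K8S. This step configures the KUBECONFIG environment variable.

Temporary (current shell only): export KUBECONFIG=/root/.kube/config

Permanent: add export KUBECONFIG=/root/.kube/config to /etc/profile (vim /etc/profile), then reload it:

source /etc/profile

For k3s

If Helm reports the following error:

Error: Kubernetes cluster unreachable: Get "http://localhost:8080/version?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

point KUBECONFIG at the k3s kubeconfig:

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

Helm command completion

source <(helm completion bash)
echo "source <(helm completion bash)" >> ~/.bashrc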

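Creating custom application Helm charts

A chart relates to a release the way an image relates to a container: a release is an installed, running instance of a chart.

You can create a new chart for deploying nginx with the helm create command. First, run the following on the command line:

helm create my-nginx

This creates a new chart named my-nginx in the current directory. Next, edit the chart's template files to define the Kubernetes resources used to deploy nginx.

For example, edit my-nginx/templates/deployment.yaml to define a Deployment for nginx. Here is a simple example: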

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "my-nginx.fullname" . }}
  labels:
    {{- include "my-nginx.labels" . | nindent 4 }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      {{- include "my-nginx.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      labels:
        {{- include "my-nginx.selectorLabels" . | nindent 8 }}
    spec:
      containers:
        - name: nginx
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          ports:
            - name: http
              containerPort: 80
              protocol: TCP

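You can also edit other template files to define further Kubernetes resources, such as a Service or a ConfigMap.

When you are done editing, install the chart with helm install to deploy nginx to the Kubernetes cluster:

helm install my-release my-nginx/

This command creates a new release named my-release in the Kubernetes cluster from the chart in the my-nginx/ directory.

Introduction to Helm template functions

Helm's template language is a combination of the Go template language, some extra functions, and a variety of wrappers that expose certain objects to templates. Helm has over 60 available functions; some are defined by the Go template language itself, and most of the others are part of the Sprig template library [1].

A powerful feature of the template language is its concept of pipelines. Borrowed from UNIX, a pipeline chains a series of template commands together to express a series of transformations compactly; in other words, pipelines are an efficient way to do several things in sequence. For example, the following ConfigMap template uses pipelines [1]: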

apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-configmap
data:
  myvalue: "Hello World"
  drink: {{ .Values.favorite.drink | quote }}
  food: {{ .Values.favorite.food | upper | quote }}

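In this example, the pipe symbol (|) "sends" an argument to a function: .Values.favorite.drink | quote. With pipelines, several functions can be chained together [1].

The Helm quote function

The quote function wraps a string in double quotes in the rendered output. When injecting strings from the .Values object into a template, it is best to quote them. For example, you can call quote directly in a template directive: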

apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-configmap
data:
  myvalue: "Hello World"
  drink: {{ quote .Values.favorite.drink }}
  food: {{ quote .Values.favorite.food }}

In the example above, quote .Values.favorite.drink calls the quote function with a single argument, so when the template is evaluated it produces a quoted string value.

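HelmRequest CRD

A K8S Operator HelmRequest is a custom resource definition (CRD) for deploying Helm charts; it manages a chart's lifecycle, including installation, upgrade, and uninstallation. Here is a usage example:

Install the K8S Operator HelmRequest

First, install the operator infrastructure. The following command installs OLM (Operator Lifecycle Manager):

kubectl apply -f https://raw.githubusercontent.com/operator-framework/operator-lifecycle-manager/master/deploy/upstream/quickstart/olm.yaml

Create the Helm chart

Next, prepare a Helm chart. Taking prometheus-operator as an example:

helm fetch stable/prometheus-operator
tar -zxvf prometheus-operator-*.tgz
cd prometheus-operator

Create the HelmRequest

Create a resource that deploys the Helm chart. The manifest below is written in the format of a Flux HelmRelease: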

apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: prometheus-operator
  namespace: prometheus
spec:
  releaseName: prometheus-operator
  interval: 5m
  chart:
    spec:
      chart: ./prometheus-operator
      sourceRef:
        kind: GitRepository
        name: prometheus-operator
        namespace: prometheus
        apiVersion: source.toolkit.fluxcd.io/v1beta1
  values:
    prometheusOperator:
      createCustomResource: false
    alertmanager:
      enabled: true
      ingress:
        enabled: true
        annotations:
          nginx.ingress.kubernetes.io/proxy-body-size: "0"
        hosts:
          - prometheus-alertmanager.example.com
      service:
        type: ClusterIP
    grafana:
      enabled: true
      ingress:
        enabled: true
        annotations:
          nginx.ingress.kubernetes.io/proxy-body-size: "0"
        hosts:
          - prometheus-grafana.example.com
      service:
        type: ClusterIP

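This YAML file defines a HelmRelease named prometheus-operator that deploys the chart at ./prometheus-operator with releaseName prometheus-operator. The chart is taken from the GitRepository source named prometheus-operator in the prometheus namespace, and the release is reconciled every 5 minutes (interval: 5m).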

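Introduction to networks in docker-compose

In docker-compose, the networks key defines and configures networks; you can use it to specify how containers are connected to each other.

The following simple example defines two services, web and db, and connects both to a custom network named backend: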

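version: '3'
services:
  web:
    image: nginx:alpine
    ports:
      - "8000:80"
    networks:
      - backend
  db:
    image: postgres:latest
    environment:
      POSTGRES_PASSWORD: example # the postgres image refuses to start without a password
    networks:
      - backend
networks:
  backend: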

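In this example, we define a network named backend, and the networks key in the web and db services specifies that both connect to it.

When these services are started with docker-compose up, they are attached to the same network and can reach each other; for example, web can connect to the database using the hostname db.

More options are available for configuring networks, such as the network driver and IP address allocation; see the Docker documentation for details on using and configuring networks.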

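Installing and using protobuf in Go

Protocol Buffers (Protobuf) is a language-neutral, platform-neutral, extensible mechanism for serializing data, developed by Google for serializing structured data. You define the structure of your data once, then use specially generated source code to easily write and read that structured data to and from a variety of data streams, in a variety of languages [1].

Compared with XML, Protobuf is smaller, faster, and simpler. It currently supports code generation for languages such as Java, Python, Objective-C, and C++; with the newer proto3 language version you can also use Kotlin, Dart, Go, Ruby, PHP, and C# [1].

Details on using Protocol Buffers can be found in Google's developer documentation [1].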

user.proto

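syntax = "proto3";

option go_package = "./service";

package service;

message User {
  string username = 1;
  int32 age = 2;
}

# Requires protoc plus the Go plugin: go install google.golang.org/protobuf/cmd/protoc-gen-go@latest
protoc --go_out=./ user.proto   # generates service/user.pb.go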

main.go

package main

import (
	"fmt"

	"google.golang.org/protobuf/proto"

	"proto-demo/service"
)

func main() {
	user := &service.User{Username: "zxl", Age: 18}

	// Serialize to the protobuf wire format.
	data, err := proto.Marshal(user)
	if err != nil {
		panic(err)
	}

	// Deserialize into a fresh message.
	newUser := &service.User{}
	if err := proto.Unmarshal(data, newUser); err != nil {
		panic(err)
	}
	fmt.Println(newUser.String())
}

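Sending and consuming messages with RocketMQ in Go

This is a simple example program that uses Go to send and receive messages with RocketMQ:

package main

import (
	"context"
	"fmt"
	"time"

	"github.com/apache/rocketmq-client-go/v2"
	"github.com/apache/rocketmq-client-go/v2/consumer"
	"github.com/apache/rocketmq-client-go/v2/primitive"
	"github.com/apache/rocketmq-client-go/v2/producer"
)

func main() {
	// Create the producer
	p, err := rocketmq.NewProducer(
		producer.WithNameServer([]string{"127.0.0.1:9876"}),
		producer.WithRetry(2),
	)
	if err != nil {
		panic(err)
	}
	// Start the producer
	if err := p.Start(); err != nil {
		panic(err)
	}
	// Send a message synchronously
	res, err := p.SendSync(context.Background(), primitive.NewMessage("test", []byte("Hello RocketMQ")))
	if err != nil {
		fmt.Printf("send failed: %s\n", err)
	} else {
		fmt.Printf("send succeeded: %s\n", res.String())
	}
	// Shut down the producer
	_ = p.Shutdown()

	// Create the consumer
	c, err := rocketmq.NewPushConsumer(
		consumer.WithNameServer([]string{"127.0.0.1:9876"}),
		consumer.WithGroupName("testGroup"),
		consumer.WithConsumeFromWhere(consumer.ConsumeFromFirstOffset),
	)
	if err != nil {
		panic(err)
	}
	// Subscribe to the topic (must be done before Start)
	err = c.Subscribe("test", consumer.MessageSelector{}, func(ctx context.Context,
		msgs ...*primitive.MessageExt) (consumer.ConsumeResult, error) {
		for _, msg := range msgs {
			fmt.Printf("received message: %s\n", msg.Body)
		}
		return consumer.ConsumeSuccess, nil
	})
	if err != nil {
		panic(err)
	}
	// Start the consumer and keep the process alive to receive messages
	if err := c.Start(); err != nil {
		panic(err)
	}
	time.Sleep(time.Hour)
	// Shut down the consumer
	_ = c.Shutdown()
}

In this code we first create a producer and start it, then send a message with SendSync. Next we create a consumer and subscribe to the test topic. Finally we start the consumer and wait to receive messages.

Note that you need to change the NameServer address in the code to match your environment.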

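RocketMQ transactional message example in Go

The following example, written in Go, sends a RocketMQ transactional message:

package main

import (
	"context"
	"fmt"
	"time"

	"github.com/apache/rocketmq-client-go/v2"
	"github.com/apache/rocketmq-client-go/v2/consumer"
	"github.com/apache/rocketmq-client-go/v2/primitive"
	"github.com/apache/rocketmq-client-go/v2/producer"
)

// txListener implements primitive.TransactionListener.
type txListener struct{}

// ExecuteLocalTransaction runs after the half (prepared) message is stored on
// the broker; do the local business work here, then commit or roll back.
func (txListener) ExecuteLocalTransaction(msg *primitive.Message) primitive.LocalTransactionState {
	return primitive.CommitMessageState
}

// CheckLocalTransaction is called back by the broker when the state of a half message is unknown.
func (txListener) CheckLocalTransaction(msg *primitive.MessageExt) primitive.LocalTransactionState {
	return primitive.CommitMessageState
}

func main() {
	p, err := rocketmq.NewTransactionProducer(
		txListener{},
		producer.WithNameServer([]string{"localhost:9876"}),
		producer.WithGroupName("test_group"),
	)
	if err != nil {
		fmt.Printf("Create producer error: %s\n", err)
		return
	}
	if err := p.Start(); err != nil {
		fmt.Printf("Start producer error: %s\n", err)
		return
	}
	defer p.Shutdown()

	c, err := rocketmq.NewPushConsumer(
		consumer.WithNameServer([]string{"localhost:9876"}),
		consumer.WithGroupName("test_group"),
	)
	if err != nil {
		fmt.Printf("Create consumer error: %s\n", err)
		return
	}
	err = c.Subscribe("test_topic", consumer.MessageSelector{}, func(ctx context.Context,
		msgs ...*primitive.MessageExt) (consumer.ConsumeResult, error) {
		for _, msg := range msgs {
			fmt.Printf("Got message: %s\n", msg.Body)
		}
		return consumer.ConsumeSuccess, nil
	})
	if err != nil {
		fmt.Printf("Subscribe error: %s\n", err)
		return
	}
	if err := c.Start(); err != nil {
		fmt.Printf("Start consumer error: %s\n", err)
		return
	}
	defer c.Shutdown()

	// A message needs a non-empty body; tag and keys are set with helper methods.
	msg := primitive.NewMessage("test_topic", []byte("Hello transactional message"))
	msg.WithTag("TagA")
	msg.WithKeys([]string{"12345"})
	res, err := p.SendMessageInTransaction(context.Background(), msg)
	if err != nil {
		fmt.Printf("Send message error: %s\n", err)
	} else {
		fmt.Printf("Send message success. Result=%s\n", res.String())
	}
	// Give the consumer time to receive the committed message before the deferred shutdowns run.
	time.Sleep(10 * time.Second)
}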


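Sprig (Go template function library) usage example

Sprig is a Go template function library that provides over 100 commonly used template functions. It was inspired by the template functions in Twig and in various JavaScript libraries such as underscore.js. Its source code is on GitHub [1]. Here is an example of using Sprig:

package main

import (
	"os"
	"text/template"

	"github.com/Masterminds/sprig"
)

func main() {
	// Register Sprig's functions, then parse a template that calls trimAll.
	tmpl := template.Must(template.New("test").
		Funcs(sprig.TxtFuncMap()).
		Parse(`{{ trimAll " \t\n" " foo bar \n\t " }}`))
	if err := tmpl.Execute(os.Stdout, nil); err != nil {
		panic(err)
	}
}

This example demonstrates Sprig's trimAll function, which removes the given characters (here space, tab, and newline) from both ends of a string; characters inside the string are kept, so the output is foo bar [1].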

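How RocketMQ works and common commands

RocketMQ is an open-source distributed messaging middleware that transfers messages from producers to consumers. Its basic principle: producers send messages to a Broker, the Broker stores them under a Topic, and consumers subscribe to the Topic from the Broker and consume the messages [1].

RocketMQ consists of several components: NameServer, Broker, Producer, and Consumer. The NameServer is a simple naming service that manages Broker address information. The Broker is the core component, responsible for storing and delivering messages. The Producer sends messages to the Broker. The Consumer subscribes to Topics from the Broker and consumes messages [2].

Some commonly used RocketMQ commands:

- Start the NameServer:

  nohup sh mqnamesrv &

- Start the Broker:

  nohup sh mqbroker -n localhost:9876 &

- Check the running status of the NameServer and Brokers:

  sh mqadmin clusterList -n localhost:9876

- List all Topics:

  sh mqadmin topicList -n localhost:9876

- Show information about a Topic:

  sh mqadmin topicStatus -t TopicTest -n localhost:9876

- Show information about a Consumer Group:

  sh mqadmin consumerProgress -g ConsumerGroupTest -n localhost:9876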


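Multi-cluster K8S management with Federation

Federation is a Kubernetes sub-project that provides a way to manage multiple Kubernetes clusters by combining them into one large logical cluster. Note that this original Federation (v1) has been deprecated; its successor was KubeFed (Federation v2). The following example shows how Federation can be used to manage multiple Kubernetes clusters:

Install Federation

First, install Federation with the following commands:

curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/kubernetes-client-linux-amd64.tar.gz
tar -zxvf kubernetes-client-linux-amd64.tar.gz
sudo mv kubernetes/client/bin/kubefed /usr/local/bin/

Create the Federation configuration file

Create a file named federation.yaml that defines the Federation configuration: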

apiVersion: federation/v1beta1
kind: Federation
metadata:
  name: my-federation
spec:
  apiServer:
    host: <API_SERVER_ADDRESS>
    certData: <BASE64_ENCODED_CERTIFICATE>
    keyData: <BASE64_ENCODED_PRIVATE_KEY>
  placement:
    clusters:
      - name: cluster1
        clusterAPIEndpoint:
          host: <CLUSTER_1_API_SERVER_ADDRESS>
          certData: <BASE64_ENCODED_CERTIFICATE>
          keyData: <BASE64_ENCODED_PRIVATE_KEY>
        placement:
          labelSelector:
            matchExpressions:
              - key: federation
                operator: In
                values:
                  - cluster1
      - name: cluster2
        clusterAPIEndpoint:
          host: <CLUSTER_2_API_SERVER_ADDRESS>
          certData: <BASE64_ENCODED_CERTIFICATE>
          keyData: <BASE64_ENCODED_PRIVATE_KEY>
        placement:
          labelSelector:
            matchExpressions:
              - key: federation
                operator: In
                values:
                  - cluster2

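This YAML file defines a Federation resource named my-federation and specifies the address and certificate data of the Federation API server. It also lists two Kubernetes clusters, cluster1 and cluster2, each with its own API server address and certificate data; for each cluster, a label selector is used to select that cluster.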