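Contents

- 1. Overview of physical memory allocation

- 2. The core allocation function (__alloc_pages_nodemask)

- (2-1) Key function 1: get_page_from_freelist()

- (2-2-1) for_each_zone_zonelist_nodemask{}

- (2-2) Key function 2: __alloc_pages_slowpath()

- 3. Allocation masks

- (3-1) Allocation mask macro definitions

- (3-2) Macro combinations

- 4. Summary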

👀 Note: the code snippets below are taken from Linux kernel version 4.1.15.

1. Overview of physical memory allocation

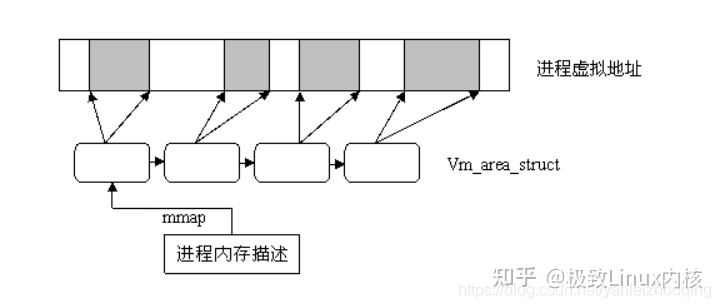

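In the Linux kernel, the page allocator hands out one or more physically contiguous pages. The number of pages in a single allocation must be a power of two (2^order). Allocating contiguous physical pages does more to limit memory fragmentation than allocating scattered ones.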
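The power-of-two rule means a request is rounded up to the next order. The sketch below is a user-space illustration, not kernel code: `SKETCH_PAGE_SIZE`, `pages_for_order()` and `order_for_size()` are hypothetical names, and a 4 KiB page size is assumed.

```c
#include <assert.h>
#include <stddef.h>

#define SKETCH_PAGE_SIZE 4096UL /* assumed page size, for illustration only */

/* Number of pages in a block of the given order: 2^order. */
static unsigned long pages_for_order(unsigned int order)
{
    assert(order < 8 * sizeof(unsigned long));
    return 1UL << order;
}

/* Smallest order whose block is large enough to hold `bytes` bytes. */
static unsigned int order_for_size(size_t bytes)
{
    unsigned long pages = (bytes + SKETCH_PAGE_SIZE - 1) / SKETCH_PAGE_SIZE;
    unsigned int order = 0;

    while (pages_for_order(order) < pages)
        order++;
    return order;
}
```

For example, a request for five pages cannot be served exactly; it is rounded up to order 3, that is, eight pages.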

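The page allocation APIs most commonly used in the Linux kernel are:

alloc_page() // allocates a single page; returns a pointer to its struct page

alloc_pages() // allocates 2^order pages; returns a pointer to the struct page of the first page

__get_free_page() // allocates a single page; returns the kernel virtual address of that page

__get_free_pages() // allocates 2^order pages; returns the kernel virtual address of the first page

get_zeroed_page() // allocates a single zero-filled page; returns the kernel virtual address of that page

__get_dma_pages() // allocates 2^order pages from the DMA zone; returns the kernel virtual address of the first page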

All of the functions above end up calling one common function, __alloc_pages_nodemask(), which is the core of the Linux page allocator.

2. The core allocation function (__alloc_pages_nodemask)

Prototype of __alloc_pages_nodemask():

struct page *__alloc_pages_nodemask(
    gfp_t gfp_mask,            // allocation mask
    unsigned int order,        // allocation order
    struct zonelist *zonelist, // zone list
    nodemask_t *nodemask       // node mask
)

Function definition (/mm/page_alloc.c):

struct page *
__alloc_pages_nodemask(gfp_t gfp_mask, unsigned int order,
            struct zonelist *zonelist, nodemask_t *nodemask)
{
    struct zoneref *preferred_zoneref;
    struct page *page = NULL;
    unsigned int cpuset_mems_cookie;
    int alloc_flags = ALLOC_WMARK_LOW|ALLOC_CPUSET|ALLOC_FAIR;
    gfp_t alloc_mask; /* The gfp_t that was actually used for allocation */
    // struct alloc_context holds the parameters of this allocation.
    struct alloc_context ac = {
        // gfp_zone() derives the zone index from the allocation mask; it is stored in high_zoneidx.
        .high_zoneidx = gfp_zone(gfp_mask),
        // Save the nodemask in ac.
        .nodemask = nodemask,
        // Convert gfp_mask into a MIGRATE_TYPES value and store it in migratetype.
        .migratetype = gfpflags_to_migratetype(gfp_mask),
    };

    gfp_mask &= gfp_allowed_mask;

    lockdep_trace_alloc(gfp_mask);

    might_sleep_if(gfp_mask & __GFP_WAIT);

    if (should_fail_alloc_page(gfp_mask, order))
        return NULL;

    /*
     * Check the zones suitable for the gfp_mask contain at least one
     * valid zone. It's possible to have an empty zonelist as a result
     * of __GFP_THISNODE and a memoryless node
     */
    if (unlikely(!zonelist->_zonerefs->zone))
        return NULL;

    if (IS_ENABLED(CONFIG_CMA) && ac.migratetype == MIGRATE_MOVABLE)
        alloc_flags |= ALLOC_CMA;

retry_cpuset:
    cpuset_mems_cookie = read_mems_allowed_begin();

    // Store the zonelist in ac here, because __alloc_pages_slowpath() may have changed it.
    ac.zonelist = zonelist;
    /* The preferred zone is used for statistics later */
    preferred_zoneref = first_zones_zonelist(ac.zonelist, ac.high_zoneidx,
                ac.nodemask ? : &cpuset_current_mems_allowed,
                &ac.preferred_zone);
    if (!ac.preferred_zone)
        goto out;
    ac.classzone_idx = zonelist_zone_idx(preferred_zoneref);

    // Set up the allocation mask for the first attempt.
    alloc_mask = gfp_mask|__GFP_HARDWALL;
    page = get_page_from_freelist(alloc_mask, order, alloc_flags, &ac);
    if (unlikely(!page)) {
        // Apply the noio flags to the mask: I/O on the device might not have
        // completed, so runtime PM, block IO and its error handling path could deadlock.
        alloc_mask = memalloc_noio_flags(gfp_mask);

        page = __alloc_pages_slowpath(alloc_mask, order, &ac);
    }

    if (kmemcheck_enabled && page)
        kmemcheck_pagealloc_alloc(page, order, gfp_mask);

    trace_mm_page_alloc(page, order, alloc_mask, ac.migratetype);

out:
    /*
     * When updating a task's mems_allowed, it is possible to race with
     * parallel threads in such a way that an allocation can fail while
     * the mask is being updated. If a page allocation is about to fail,
     * check if the cpuset changed during allocation and if so, retry.
     */
    if (unlikely(!page && read_mems_allowed_retry(cpuset_mems_cookie)))
        goto retry_cpuset;

    return page;
}

The function above first calls get_page_from_freelist(alloc_mask, order, alloc_flags, &ac) to try to allocate the pages.

If that attempt fails, execution falls through to the following code:

alloc_mask = memalloc_noio_flags(gfp_mask);

page = __alloc_pages_slowpath(alloc_mask, order, &ac);

__alloc_pages_slowpath() handles many special cases and is fairly complex, so it is not expanded here.
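The "fast path first, slow path only on failure" shape can be shown with a toy user-space model. Everything here is hypothetical (`struct toy_allocator`, `toy_fast_path()`, `toy_slow_path()`, `toy_alloc_pages()`), and the slow path is reduced to a single stand-in for reclaim; the real slow path does far more.

```c
#include <assert.h>
#include <stddef.h>

struct toy_page {
    unsigned int order;
};

/* Hypothetical allocator state: pages that are free right now, plus
 * pages that only become free after some extra work (e.g. reclaim). */
struct toy_allocator {
    unsigned long free_pages;
    unsigned long reclaimable;
    struct toy_page page; /* single slot standing in for the result */
};

/* Fast path: succeed only if enough pages are free right now. */
static struct toy_page *toy_fast_path(struct toy_allocator *a, unsigned int order)
{
    unsigned long need = 1UL << order;

    if (a->free_pages < need)
        return NULL;
    a->free_pages -= need;
    a->page.order = order;
    return &a->page;
}

/* Slow path: do the extra work, then retry the fast path once. */
static struct toy_page *toy_slow_path(struct toy_allocator *a, unsigned int order)
{
    a->free_pages += a->reclaimable;
    a->reclaimable = 0;
    return toy_fast_path(a, order);
}

/* Same control flow as the snippet above: try fast, fall back to slow. */
static struct toy_page *toy_alloc_pages(struct toy_allocator *a, unsigned int order)
{
    struct toy_page *page;

    assert(a != NULL);
    page = toy_fast_path(a, order);
    if (!page)
        page = toy_slow_path(a, order);
    return page;
}
```

The point of the split is that the common case pays nothing for the expensive machinery; it is only entered once the cheap attempt has already failed.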

(2-1) Key function 1: get_page_from_freelist()

get_page_from_freelist() is defined as follows (/mm/page_alloc.c):

static struct page *
get_page_from_freelist(gfp_t gfp_mask, unsigned int order, int alloc_flags,
                        const struct alloc_context *ac)
{
    struct zonelist *zonelist = ac->zonelist;
    struct zoneref *z;
    struct page *page = NULL;
    struct zone *zone;
    nodemask_t *allowednodes = NULL; /* zonelist_cache approximation */
    int zlc_active = 0; /* set if using zonelist_cache */
    int did_zlc_setup = 0; /* just call zlc_setup() one time */
    bool consider_zone_dirty = (alloc_flags & ALLOC_WMARK_LOW) &&
                (gfp_mask & __GFP_WRITE);
    int nr_fair_skipped = 0;
    bool zonelist_rescan;

zonelist_scan:
    zonelist_rescan = false;

    /*
     * Scan zonelist, looking for a zone with enough free.
     * See also __cpuset_node_allowed() comment in kernel/cpuset.c.
     */
    for_each_zone_zonelist_nodemask(zone, z, zonelist, ac->high_zoneidx,
                                ac->nodemask) {
        unsigned long mark;

        if (IS_ENABLED(CONFIG_NUMA) && zlc_active &&
            !zlc_zone_worth_trying(zonelist, z, allowednodes))
                continue;
        if (cpusets_enabled() &&
            (alloc_flags & ALLOC_CPUSET) &&
            !cpuset_zone_allowed(zone, gfp_mask))
                continue;
        /*
         * Distribute pages in proportion to the individual
         * zone size to ensure fair page aging. The zone a
         * page was allocated in should have no effect on the
         * time the page has in memory before being reclaimed.
         */
        if (alloc_flags & ALLOC_FAIR) {
            if (!zone_local(ac->preferred_zone, zone))
                break;
            if (test_bit(ZONE_FAIR_DEPLETED, &zone->flags)) {
                nr_fair_skipped++;
                continue;
            }
        }
        /*
         * When allocating a page cache page for writing, we
         * want to get it from a zone that is within its dirty
         * limit, such that no single zone holds more than its
         * proportional share of globally allowed dirty pages.
         * The dirty limits take into account the zone's
         * lowmem reserves and high watermark so that kswapd
         * should be able to balance it without having to
         * write pages from its LRU list.
         *
         * This may look like it could increase pressure on
         * lower zones by failing allocations in higher zones
         * before they are full. But the pages that do spill
         * over are limited as the lower zones are protected
         * by this very same mechanism. It should not become
         * a practical burden to them.
         *
         * XXX: For now, allow allocations to potentially
         * exceed the per-zone dirty limit in the slowpath
         * (ALLOC_WMARK_LOW unset) before going into reclaim,
         * which is important when on a NUMA setup the allowed
         * zones are together not big enough to reach the
         * global limit. The proper fix for these situations
         * will require awareness of zones in the
         * dirty-throttling and the flusher threads.
         */
        if (consider_zone_dirty && !zone_dirty_ok(zone))
            continue;

        mark = zone->watermark[alloc_flags & ALLOC_WMARK_MASK];
        if (!zone_watermark_ok(zone, order, mark,
                       ac->classzone_idx, alloc_flags)) {
            int ret;

            /* Checked here to keep the fast path fast */
            BUILD_BUG_ON(ALLOC_NO_WATERMARKS < NR_WMARK);
            if (alloc_flags & ALLOC_NO_WATERMARKS)
                goto try_this_zone;

            if (IS_ENABLED(CONFIG_NUMA) &&
                    !did_zlc_setup && nr_online_nodes > 1) {
                /*
                 * we do zlc_setup if there are multiple nodes
                 * and before considering the first zone allowed
                 * by the cpuset.
                 */
                allowednodes = zlc_setup(zonelist, alloc_flags);
                zlc_active = 1;
                did_zlc_setup = 1;
            }

            if (zone_reclaim_mode == 0 ||
                !zone_allows_reclaim(ac->preferred_zone, zone))
                goto this_zone_full;

            /*
             * As we may have just activated ZLC, check if the first
             * eligible zone has failed zone_reclaim recently.
             */
            if (IS_ENABLED(CONFIG_NUMA) && zlc_active &&
                !zlc_zone_worth_trying(zonelist, z, allowednodes))
                continue;

            ret = zone_reclaim(zone, gfp_mask, order);
            switch (ret) {
            case ZONE_RECLAIM_NOSCAN:
                /* did not scan */
                continue;
            case ZONE_RECLAIM_FULL:
                /* scanned but unreclaimable */
                continue;
            default:
                /* did we reclaim enough */
                if (zone_watermark_ok(zone, order, mark,
                        ac->classzone_idx, alloc_flags))
                    goto try_this_zone;

                /*
                 * Failed to reclaim enough to meet watermark.
                 * Only mark the zone full if checking the min
                 * watermark or if we failed to reclaim just
                 * 1<<order pages or else the page allocator
                 * fastpath will prematurely mark zones full
                 * when the watermark is between the low and
                 * min watermarks.
                 */
                if (((alloc_flags & ALLOC_WMARK_MASK) == ALLOC_WMARK_MIN) ||
                    ret == ZONE_RECLAIM_SOME)
                    goto this_zone_full;

                continue;
            }
        }

try_this_zone:
        page = buffered_rmqueue(ac->preferred_zone, zone, order,
                        gfp_mask, ac->migratetype);
        if (page) {
            if (prep_new_page(page, order, gfp_mask, alloc_flags))
                goto try_this_zone;
            return page;
        }
this_zone_full:
        if (IS_ENABLED(CONFIG_NUMA) && zlc_active)
            zlc_mark_zone_full(zonelist, z);
    }
    /* end of for_each_zone_zonelist_nodemask */

    /*
     * The first pass makes sure allocations are spread fairly within the
     * local node. However, the local node might have free pages left
     * after the fairness batches are exhausted, and remote zones haven't
     * even been considered yet. Try once more without fairness, and
     * include remote zones now, before entering the slowpath and waking
     * kswapd: prefer spilling to a remote zone over swapping locally.
     */
    if (alloc_flags & ALLOC_FAIR) {
        alloc_flags &= ~ALLOC_FAIR;
        if (nr_fair_skipped) {
            zonelist_rescan = true;
            reset_alloc_batches(ac->preferred_zone);
        }
        if (nr_online_nodes > 1)
            zonelist_rescan = true;
    }

    if (unlikely(IS_ENABLED(CONFIG_NUMA) && zlc_active)) {
        /* Disable zlc cache for second zonelist scan */
        zlc_active = 0;
        zonelist_rescan = true;
    }

    if (zonelist_rescan)
        goto zonelist_scan;

    return NULL;
}

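The code above is long, but it falls into two parts:

(1) The body of the for_each_zone_zonelist_nodemask{} loop.

(2) The follow-up handling after the loop, which decides whether the zonelist should be scanned again.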

(2-2-1) for_each_zone_zonelist_nodemask{}

for_each_zone_zonelist_nodemask is in fact a macro:

#define for_each_zone_zonelist_nodemask(zone, z, zlist, highidx, nodemask) \
    for (z = first_zones_zonelist(zlist, highidx, nodemask, &zone); \
        zone; \
        z = next_zones_zonelist(++z, highidx, nodemask), \
            zone = zonelist_zone(z))

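- zone: the current zone in the iteration

- z: the current position (a zoneref pointer) within the zonelist being iterated

- zlist: the zonelist being iterated

- highidx: the zone index of the highest zone that may be returned

- nodemask: the nodemask allowed by the allocator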

The macro acts as an iterator: it walks the valid zones in a zonelist that are at or below the given zone index and that belong to the given nodemask.

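- first_zones_zonelist(): returns a pointer to the first zoneref in the zonelist that is at or below the highest zoneidx and within the allowed nodemask (note: a zoneref holds a zone and its zoneidx).

- next_zones_zonelist(): returns the next zone in the zonelist that is at or below the highest zoneidx.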
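The filtering behaviour of this kind of iterator macro can be reproduced in a few lines of user-space C. This is a simplified sketch: the nodemask filter is left out, the list is terminated by a NULL name rather than a NULL zone pointer, and all `toy_` names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-in for a zoneref: a zone (just its name here) and its index.
 * An entry with name == NULL terminates the list. */
struct toy_zoneref {
    const char *name;
    int zone_idx;
};

/* Skip entries whose zone index is above highidx. */
static const struct toy_zoneref *
toy_next_zone(const struct toy_zoneref *z, int highidx)
{
    while (z->name && z->zone_idx > highidx)
        z++;
    return z;
}

/* Visit every zone in zlist whose index is at or below highidx. */
#define toy_for_each_zone(z, zlist, highidx)              \
    for ((z) = toy_next_zone((zlist), (highidx));         \
         (z)->name;                                       \
         (z) = toy_next_zone((z) + 1, (highidx)))

/* Example user of the macro: count the zones that would be visited. */
static int toy_count_zones(const struct toy_zoneref *zlist, int highidx)
{
    const struct toy_zoneref *z;
    int n = 0;

    assert(zlist != NULL);
    toy_for_each_zone(z, zlist, highidx)
        n++;
    return n;
}
```

With a list ordered HighMem (2), Normal (1), DMA (0), a `highidx` of 1 visits only Normal and DMA, which mirrors how the zone index derived from the allocation mask limits the zones an allocation may use.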

Once for_each_zone_zonelist_nodemask has produced a zone, zone_watermark_ok() is called to check whether the zone has enough free pages relative to its watermark.
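A watermark check of this kind can be sketched as follows. This is a simplified user-space model loosely based on the 4.1-era logic, not the kernel function itself: the ALLOC_HIGH/ALLOC_HARDER and CMA adjustments are omitted, and `struct wm_zone`, `TOY_MAX_ORDER` and `toy_watermark_ok()` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

#define TOY_MAX_ORDER 11 /* assumed number of orders, for illustration */

struct wm_zone {
    long free_pages;                      /* total free pages in the zone */
    long lowmem_reserve;                  /* pages held back for lower zones */
    unsigned long nr_free[TOY_MAX_ORDER]; /* free blocks at each order */
};

/* Return true if a 2^order allocation leaves the zone above `mark`. */
static bool toy_watermark_ok(const struct wm_zone *z, unsigned int order, long mark)
{
    long free_pages = z->free_pages;
    long min = mark;
    unsigned int o;

    assert(order < TOY_MAX_ORDER);

    /* Account for the pages this request would take. */
    free_pages -= (1L << order) - 1;
    if (free_pages <= min + z->lowmem_reserve)
        return false;

    /* Blocks smaller than the request cannot satisfy it, so discount
     * them order by order while relaxing the threshold. */
    for (o = 0; o < order; o++) {
        free_pages -= (long)(z->nr_free[o] << o);
        min >>= 1;
        if (free_pages <= min)
            return false;
    }
    return true;
}
```

The loop is what makes the check fragmentation-aware: a zone with plenty of free memory can still fail an order-1 request if all of that memory sits in isolated order-0 pages.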

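If the zone passes the watermark check, buffered_rmqueue() is called to take pages from the buddy system. buffered_rmqueue() allocates a page block from the given zone. Note: order-0 allocations are served from the pcplist (the per-CPU page list, which will be covered in a later article).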
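Putting the two steps together, the scan can be pictured as "walk the zones in preference order, skip any zone that fails its watermark, allocate from the first one that passes". The sketch below is a heavily simplified user-space model: cpuset, fairness, dirty-limit and reclaim handling are all left out, the watermark test is a single comparison, and `struct scan_zone` and `toy_get_page_from_freelist()` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

struct scan_zone {
    const char *name;
    unsigned long free_pages;
    unsigned long watermark_low;
};

/* Return the zone the pages were taken from, or NULL if every zone
 * failed its watermark check. */
static struct scan_zone *
toy_get_page_from_freelist(struct scan_zone *zones, size_t nr_zones,
                           unsigned int order)
{
    unsigned long need = 1UL << order;
    size_t i;

    assert(zones != NULL || nr_zones == 0);

    for (i = 0; i < nr_zones; i++) {
        struct scan_zone *zone = &zones[i];

        /* Simplified watermark test: not enough pages at all, or the
         * request would leave the zone at or below its low watermark. */
        if (zone->free_pages < need ||
            zone->free_pages - (need - 1) <= zone->watermark_low)
            continue;

        /* Stand-in for taking the block out of the buddy system. */
        zone->free_pages -= need;
        return zone;
    }
    return NULL;
}
```

Because the array is ordered by preference, a nearly exhausted preferred zone is skipped and the allocation spills over into the next zone instead of failing outright.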

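(2-2) Key function 2: __alloc_pages_slowpath()

__alloc_pages_slowpath() is a long function that is called only after get_page_from_freelist() has failed to allocate the pages. It ends in one of two ways:

(1) Case 1: a retry succeeds, and the corresponding page is returned.

(2) Case 2: the allocation fails; warn_alloc_failed() is called to report the failure and prints a message similar to:

%s: page allocation failure: order:%d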

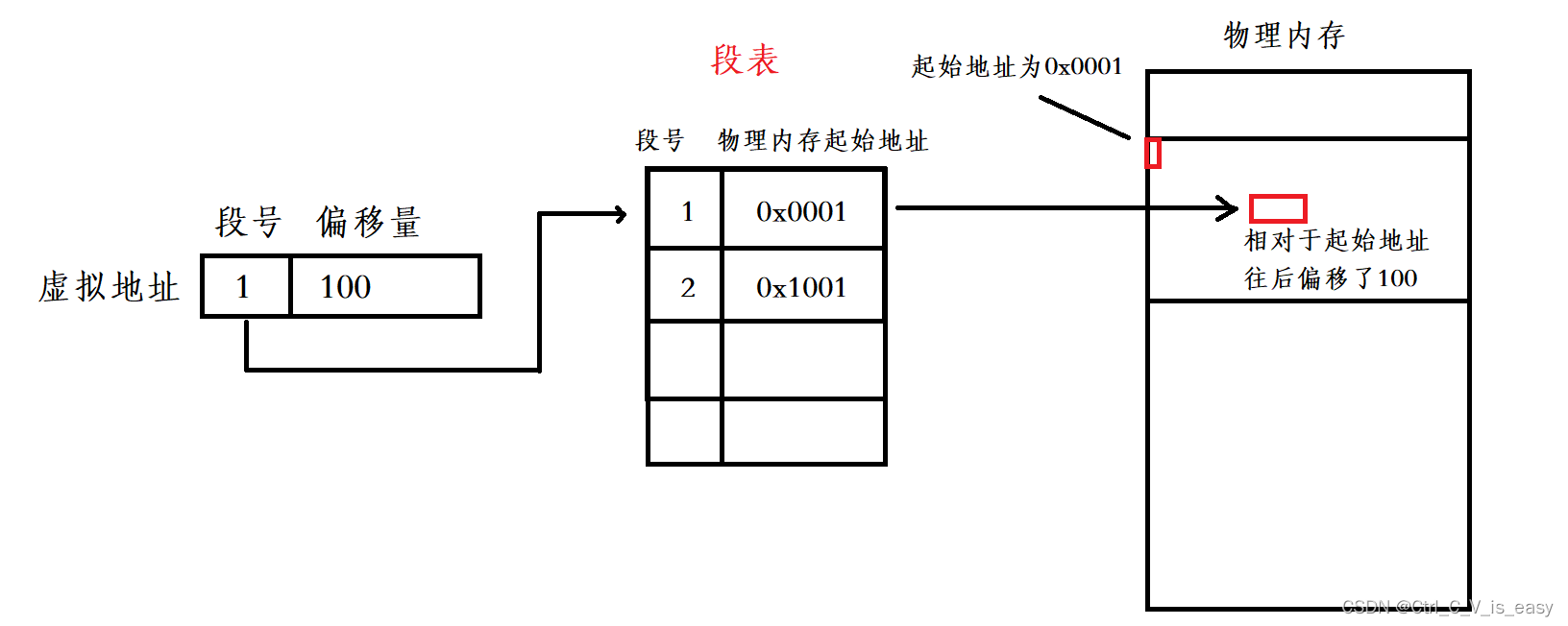

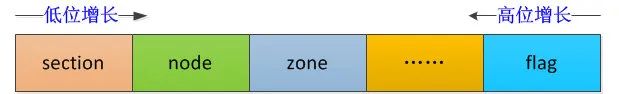

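3. Allocation masks

(3-1) Allocation mask macro definitions

The allocation mask (gfp_mask) is an important parameter that must be passed whenever the Linux kernel allocates memory; it controls how the page allocator behaves for that request. The flags are defined in (/include/linux/gfp.h):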

#define ___GFP_DMA 0x01u

#define ___GFP_HIGHMEM 0x02u

#define ___GFP_DMA32 0x04u

#define ___GFP_MOVABLE 0x08u

#define ___GFP_WAIT 0x10u

#define ___GFP_HIGH 0x20u

#define ___GFP_IO 0x40u

#define ___GFP_FS 0x80u

#define ___GFP_COLD 0x100u

#define ___GFP_NOWARN 0x200u

#define ___GFP_REPEAT 0x400u

#define ___GFP_NOFAIL 0x800u

#define ___GFP_NORETRY 0x1000u

#define ___GFP_MEMALLOC 0x2000u

#define ___GFP_COMP 0x4000u

#define ___GFP_ZERO 0x8000u

#define ___GFP_NOMEMALLOC 0x10000u

#define ___GFP_HARDWALL 0x20000u

#define ___GFP_THISNODE 0x40000u

#define ___GFP_RECLAIMABLE 0x80000u

#define ___GFP_NOACCOUNT 0x100000u

#define ___GFP_NOTRACK 0x200000u

#define ___GFP_NO_KSWAPD 0x400000u

#define ___GFP_OTHER_NODE 0x800000u

#define ___GFP_WRITE 0x1000000u

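Kernel developers advise against using the raw macros above directly; the wrapped forms and their combinations below should be used instead.

In the Linux kernel, allocation mask flags fall into two categories:

(1) zone_modifiers: specify which zone the pages are allocated from. They occupy the lowest 4 bits of the allocation mask. The following macros are available (/include/linux/gfp.h):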

#define __GFP_DMA ((__force gfp_t)___GFP_DMA)

#define __GFP_HIGHMEM ((__force gfp_t)___GFP_HIGHMEM)

#define __GFP_DMA32 ((__force gfp_t)___GFP_DMA32)

#define __GFP_MOVABLE ((__force gfp_t)___GFP_MOVABLE) /* Page is movable */

#define GFP_ZONEMASK (__GFP_DMA|__GFP_HIGHMEM|__GFP_DMA32|__GFP_MOVABLE)
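Since the four zone modifiers are the values 0x01 to 0x08, GFP_ZONEMASK covers exactly the lowest four bits of the mask. The sketch below restates those values under `TOY_` names (hypothetical, to avoid implying these are the kernel headers) and shows how a mask can be split into its zone part and the remaining bits.

```c
#include <assert.h>

/* Values copied from the raw definitions shown earlier. */
#define TOY_GFP_DMA      0x01u
#define TOY_GFP_HIGHMEM  0x02u
#define TOY_GFP_DMA32    0x04u
#define TOY_GFP_MOVABLE  0x08u
#define TOY_GFP_ZONEMASK \
    (TOY_GFP_DMA | TOY_GFP_HIGHMEM | TOY_GFP_DMA32 | TOY_GFP_MOVABLE)

/* The zone-selecting part of a mask: its lowest four bits. */
static unsigned int toy_zone_bits(unsigned int gfp_mask)
{
    return gfp_mask & TOY_GFP_ZONEMASK;
}

/* Everything else: the bits that modify allocator behaviour. */
static unsigned int toy_action_bits(unsigned int gfp_mask)
{
    return gfp_mask & ~TOY_GFP_ZONEMASK;
}
```

For instance, a mask of 0xd2 splits into zone bits 0x02 (the HIGHMEM modifier) and behaviour bits 0xd0.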

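(2) action_modifiers: control how the allocator behaves while allocating; they do not restrict which zone the pages come from. They occupy the bits above the lowest 4 bits, which are reserved for the zone modifiers __GFP_DMA, __GFP_HIGHMEM, __GFP_DMA32 and __GFP_MOVABLE. The following macros are available (/include/linux/gfp.h):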

#define __GFP_WAIT ((__force gfp_t)___GFP_WAIT) /* Can wait and reschedule? */

#define __GFP_HIGH ((__force gfp_t)___GFP_HIGH) /* Should access emergency pools? */

#define __GFP_IO ((__force gfp_t)___GFP_IO) /* Can start physical IO? */

#define __GFP_FS ((__force gfp_t)___GFP_FS) /* Can call down to low-level FS? */

#define __GFP_COLD ((__force gfp_t)___GFP_COLD) /* Cache-cold page required */

#define __GFP_NOWARN ((__force gfp_t)___GFP_NOWARN) /* Suppress page allocation failure warning */

#define __GFP_REPEAT ((__force gfp_t)___GFP_REPEAT) /* See above */

#define __GFP_NOFAIL ((__force gfp_t)___GFP_NOFAIL) /* See above */

#define __GFP_NORETRY ((__force gfp_t)___GFP_NORETRY) /* See above */

#define __GFP_MEMALLOC ((__force gfp_t)___GFP_MEMALLOC)/* Allow access to emergency reserves */

#define __GFP_COMP ((__force gfp_t)___GFP_COMP) /* Add compound page metadata */

#define __GFP_ZERO ((__force gfp_t)___GFP_ZERO) /* Return zeroed page on success */

#define __GFP_NOMEMALLOC ((__force gfp_t)___GFP_NOMEMALLOC) /* Don't use emergency reserves.*/

#define __GFP_HARDWALL ((__force gfp_t)___GFP_HARDWALL) /* Enforce hardwall cpuset memory allocs */

#define __GFP_THISNODE ((__force gfp_t)___GFP_THISNODE)/* No fallback, no policies */

#define __GFP_RECLAIMABLE ((__force gfp_t)___GFP_RECLAIMABLE) /* Page is reclaimable */

#define __GFP_NOACCOUNT ((__force gfp_t)___GFP_NOACCOUNT) /* Don't account to kmemcg */

#define __GFP_NOTRACK ((__force gfp_t)___GFP_NOTRACK) /* Don't track with kmemcheck */

#define __GFP_NO_KSWAPD ((__force gfp_t)___GFP_NO_KSWAPD)

#define __GFP_OTHER_NODE ((__force gfp_t)___GFP_OTHER_NODE) /* On behalf of other node */

#define __GFP_WRITE ((__force gfp_t)___GFP_WRITE) /* Allocator intends to dirty page */

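(3-2) Macro combinations

A macro combination ORs two or more allocation mask flags together into a single macro, so that one name carries several behaviours. The commonly used combinations are (/include/linux/gfp.h):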

#define GFP_ATOMIC (__GFP_HIGH)

#define GFP_NOIO (__GFP_WAIT)

#define GFP_NOFS (__GFP_WAIT | __GFP_IO)

#define GFP_KERNEL (__GFP_WAIT | __GFP_IO | __GFP_FS)

#define GFP_TEMPORARY (__GFP_WAIT | __GFP_IO | __GFP_FS | \
            __GFP_RECLAIMABLE)

#define GFP_USER (__GFP_WAIT | __GFP_IO | __GFP_FS | __GFP_HARDWALL)

#define GFP_HIGHUSER (GFP_USER | __GFP_HIGHMEM)

#define GFP_HIGHUSER_MOVABLE (GFP_HIGHUSER | __GFP_MOVABLE)

#define GFP_IOFS (__GFP_IO | __GFP_FS)

#define GFP_TRANSHUGE (GFP_HIGHUSER_MOVABLE | __GFP_COMP | \
            __GFP_NOMEMALLOC | __GFP_NORETRY | __GFP_NOWARN | \
            __GFP_NO_KSWAPD)

(Note: not all of the definitions are reproduced here; see the kernel source for the full list.)
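Because each combination is just an OR of individual bits, code can ask what a given mask permits by testing single flags, much as __alloc_pages_nodemask() does with might_sleep_if(gfp_mask & __GFP_WAIT). The sketch below restates a few of the 4.1.15 values under hypothetical `TOY_` names; the helper functions are illustrative and are not kernel APIs.

```c
#include <assert.h>
#include <stdbool.h>

/* Individual flags, values as in the raw definitions shown earlier. */
#define TOY_GFP_WAIT 0x10u
#define TOY_GFP_HIGH 0x20u
#define TOY_GFP_IO   0x40u
#define TOY_GFP_FS   0x80u

/* Combinations, mirroring the definitions listed above. */
#define TOY_GFP_ATOMIC (TOY_GFP_HIGH)
#define TOY_GFP_NOIO   (TOY_GFP_WAIT)
#define TOY_GFP_NOFS   (TOY_GFP_WAIT | TOY_GFP_IO)
#define TOY_GFP_KERNEL (TOY_GFP_WAIT | TOY_GFP_IO | TOY_GFP_FS)

/* May the allocation sleep and reschedule? */
static bool toy_may_sleep(unsigned int gfp)
{
    return (gfp & TOY_GFP_WAIT) != 0;
}

/* May the allocation start physical I/O? */
static bool toy_may_start_io(unsigned int gfp)
{
    return (gfp & TOY_GFP_IO) != 0;
}

/* May the allocation call down into the filesystem? */
static bool toy_may_call_fs(unsigned int gfp)
{
    return (gfp & TOY_GFP_FS) != 0;
}
```

Read this way, the names explain themselves: the GFP_ATOMIC combination never sets the wait bit, GFP_NOIO may wait but not start I/O, GFP_NOFS may start I/O but not enter the filesystem, and GFP_KERNEL allows all three.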

四、总结

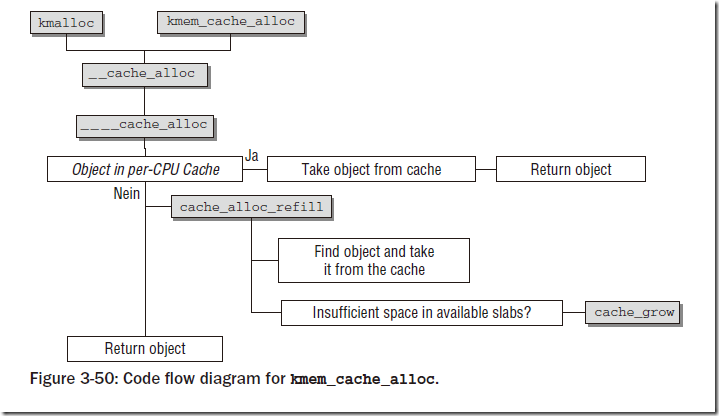

linux分配物理页面的过程比较复杂,用一张图来总结一下: