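This post covers two Kafka authentication methods.

Kafka authentication methods:

- SASL/PLAIN: users cannot be added dynamically; usernames and passwords are hard-coded in a configuration file.

- SASL/SCRAM: users can be added dynamically.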

SASL/PLAIN method

cd /usr/local/kafka/kafka_2.12-3.0.1/bin/

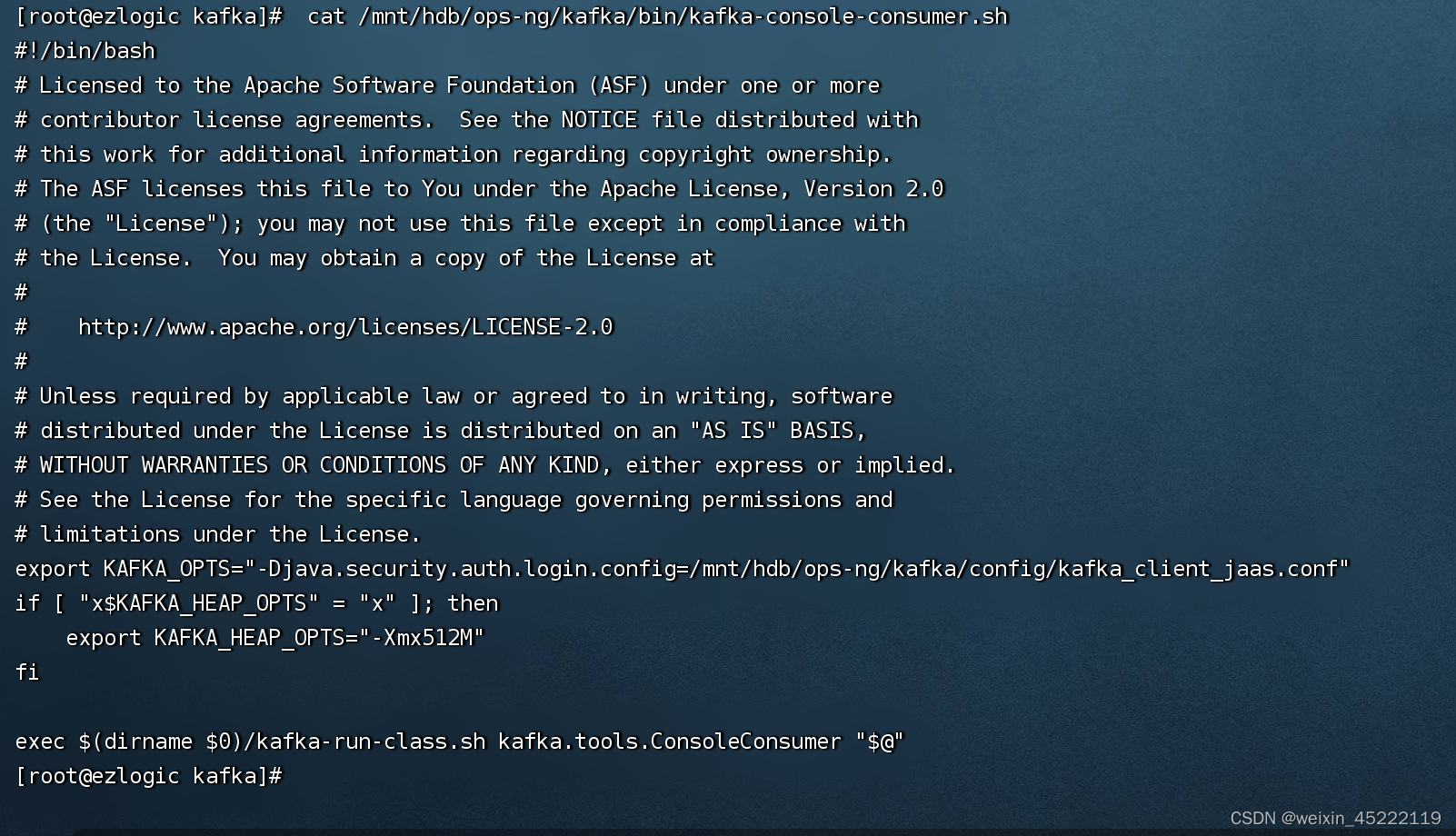

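Make a copy of the start script for SASL:

cp kafka-server-start.sh kafka-server-start-sasl.sh

Change the last line of kafka-server-start-sasl.sh to:

exec $base_dir/kafka-run-class.sh $EXTRA_ARGS -Djava.security.auth.login.config=/usr/local/kafka/kafka_2.12-3.0.1/config/kafka-server-jaas.conf kafka.Kafka "$@"

Alternatively, set the option through an environment variable by appending the following to /etc/profile (vim /etc/profile):

if [ -z "$KAFKA_OPTS" ]; then

export KAFKA_OPTS="-Djava.security.auth.login.config=/usr/local/kafka/kafka_2.12-3.0.1/config/kafka-server-jaas.conf"

fi

Create the file kafka-server-jaas.conf in the config directory. The username and password entries are the credentials the broker itself uses for inter-broker connections; each user_<name>="<password>" entry defines an account that clients can log in with.

touch kafka-server-jaas.conf

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="admin"

user_admin="admin"

user_rex="123456"

user_alice="123456"

user_lucy="123456";

};

In the config directory, copy server.properties to server-sasl.properties and add the following configuration: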

listeners=SASL_PLAINTEXT://localhost:9092

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.enabled.mechanisms=PLAIN

sasl.mechanism.inter.broker.protocol=PLAIN

# On Kafka versions below 3.0, use kafka.security.auth.SimpleAclAuthorizer instead. Kafka 3.0 removed SimpleAclAuthorizer in favor of AclAuthorizer, so configuring SimpleAclAuthorizer there fails at startup with a ClassNotFoundException.

authorizer.class.name=kafka.security.authorizer.AclAuthorizer

super.users=User:admin

Start Kafka with SASL authentication

./bin/kafka-server-start-sasl.sh -daemon config/server-sasl.properties

SASL/SCRAM method

Create the Kafka users

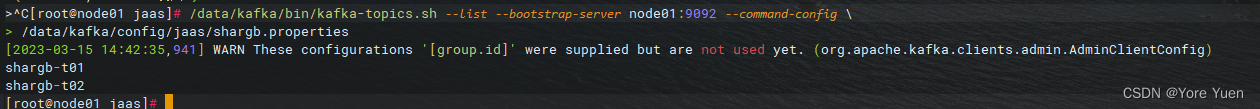

bin/kafka-configs.sh --zookeeper 127.0.0.1:2181/kafka --alter --add-config 'SCRAM-SHA-256=[password=admin],SCRAM-SHA-512=[password=admin]' --entity-type users --entity-name admin # system user

bin/kafka-configs.sh --zookeeper 127.0.0.1:2181/kafka --alter --add-config 'SCRAM-SHA-256=[password=chan_test],SCRAM-SHA-512=[password=chan_test]' --entity-type users --entity-name chan # test user

The corresponding user entries now exist in ZooKeeper, and the passwords are stored salted and hashed rather than in plain text.
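The two user-creation commands above differ only in the user name and password, so they can be wrapped in small helpers. The following is a minimal sketch, not part of the original setup: the function names and the `KAFKA_CONFIGS` and `ZK_CONNECT` variables are assumptions introduced here for illustration, while the `kafka-configs.sh` flags are the ones used above plus `--describe` and `--delete-config`.

```shell
# Hypothetical variables: path to the tool and the ZooKeeper connect string.
KAFKA_CONFIGS="${KAFKA_CONFIGS:-bin/kafka-configs.sh}"
ZK_CONNECT="${ZK_CONNECT:-127.0.0.1:2181/kafka}"

# Build the --add-config value that sets one password for both SCRAM mechanisms.
scram_add_config() {
  printf 'SCRAM-SHA-256=[password=%s],SCRAM-SHA-512=[password=%s]' "$1" "$1"
}

# Create or update the SCRAM credentials of a user.
scram_add_user() {
  local user="$1"
  local password="$2"
  "$KAFKA_CONFIGS" --zookeeper "$ZK_CONNECT" --alter \
    --add-config "$(scram_add_config "$password")" \
    --entity-type users --entity-name "$user"
}

# Show the stored credentials of a user.
scram_describe_user() {
  "$KAFKA_CONFIGS" --zookeeper "$ZK_CONNECT" --describe \
    --entity-type users --entity-name "$1"
}

# Remove the SCRAM-SHA-256 credentials of a user.
scram_delete_sha256() {
  "$KAFKA_CONFIGS" --zookeeper "$ZK_CONNECT" --alter \
    --delete-config 'SCRAM-SHA-256' \
    --entity-type users --entity-name "$1"
}
```

With these loaded from the Kafka home directory, `scram_add_user chan chan_test` would run the same command as the second line above, and `scram_describe_user chan` can be used to check the result.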

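Create the JAAS file in Kafka's config directory

touch kafka_server_jaas_scram.conf

vim kafka_server_jaas_scram.conf

KafkaServer {

org.apache.kafka.common.security.scram.ScramLoginModule required

username="admin"

password="admin";

};

Then add the following to the broker properties file (server-scram.properties in the start command used later):

# Enable both the SCRAM and PLAIN mechanisms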

sasl.enabled.mechanisms=SCRAM-SHA-256,PLAIN

# Enable SCRAM for inter-broker communication, using the SCRAM-SHA-256 algorithm

sasl.mechanism.inter.broker.protocol=SCRAM-SHA-256

# Inter-broker communication uses SASL_PLAINTEXT

security.inter.broker.protocol=SASL_PLAINTEXT

# Configure listeners to use SASL_PLAINTEXT

listeners=SASL_PLAINTEXT://:9092

# Configure advertised.listeners

advertised.listeners=SASL_PLAINTEXT://127.0.0.1:9092

# ACL configuration

allow.everyone.if.no.acl.found=false

# Super users; separate multiple users with semicolons

super.users=User:admin;

authorizer.class.name=kafka.security.authorizer.AclAuthorizer

Complete configuration

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# see kafka.server.KafkaConfig for additional details and defaults

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

############################# Socket Server Settings #############################

# The address the socket server listens on. It will get the value returned from

# java.net.InetAddress.getCanonicalHostName() if not configured.

# FORMAT:

# listeners = listener_name://host_name:port

# EXAMPLE:

# listeners = PLAINTEXT://your.host.name:9092

#listeners=PLAINTEXT://:9092

# Hostname and port the broker will advertise to producers and consumers. If not set,

# it uses the value for "listeners" if configured. Otherwise, it will use the value

# returned from java.net.InetAddress.getCanonicalHostName().

#advertised.listeners=PLAINTEXT://your.host.name:9092

# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

#listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

# The number of threads that the server uses for receiving requests from the network and sending responses to the network

num.network.threads=3

# The number of threads that the server uses for processing requests, which may include disk I/O

num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server

socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server

socket.receive.buffer.bytes=102400

# The maximum size of a request that the socket server will accept (protection against OOM)

socket.request.max.bytes=104857600

############################# Log Basics #############################

# A comma separated list of directories under which to store log files

log.dirs=/usr/local/kafka/data/kafka-logs

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=1

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

# This value is recommended to be increased for installations with data dirs located in RAID array.

num.recovery.threads.per.data.dir=1

############################# Internal Topic Settings #############################

# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

############################# Log Flush Policy #############################

# Messages are immediately written to the filesystem but by default we only fsync() to sync

# the OS cache lazily. The following configurations control the flush of data to disk.

# There are a few important trade-offs here:

# 1. Durability: Unflushed data may be lost if you are not using replication.

# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.

# The settings below allow one to configure the flush policy to flush data after a period of time or

# every N messages (or both). This can be done globally and overridden on a per-topic basis.

# The number of messages to accept before forcing a flush of data to disk

#log.flush.interval.messages=10000

# The maximum amount of time a message can sit in a log before we force a flush

#log.flush.interval.ms=1000

############################# Log Retention Policy #############################

# The following configurations control the disposal of log segments. The policy can

# be set to delete segments after a period of time, or after a given size has accumulated.

# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

# from the end of the log.

# The minimum age of a log file to be eligible for deletion due to age

log.retention.hours=168

# A size-based retention policy for logs. Segments are pruned from the log unless the remaining

# segments drop below log.retention.bytes. Functions independently of log.retention.hours.

#log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.

log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according

# to the retention policies

log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=127.0.0.1:2181/kafka

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=18000

############################# Group Coordinator Settings #############################

# The following configuration specifies the time, in milliseconds, that the GroupCoordinator will delay the initial consumer rebalance.

# The rebalance will be further delayed by the value of group.initial.rebalance.delay.ms as new members join the group, up to a maximum of max.poll.interval.ms.

# The default value for this is 3 seconds.

# We override this to 0 here as it makes for a better out-of-the-box experience for development and testing.

# However, in production environments the default value of 3 seconds is more suitable as this will help to avoid unnecessary, and potentially expensive, rebalances during application startup.

group.initial.rebalance.delay.ms=0

# Enable both the SCRAM and PLAIN mechanisms

sasl.enabled.mechanisms=SCRAM-SHA-256,PLAIN

# Enable SCRAM for inter-broker communication, using the SCRAM-SHA-256 algorithm

sasl.mechanism.inter.broker.protocol=SCRAM-SHA-256

# Inter-broker communication uses SASL_PLAINTEXT

security.inter.broker.protocol=SASL_PLAINTEXT

# Configure listeners to use SASL_PLAINTEXT

listeners=SASL_PLAINTEXT://:9092

# Configure advertised.listeners

advertised.listeners=SASL_PLAINTEXT://127.0.0.1:9092

# ACL configuration

allow.everyone.if.no.acl.found=false

# Super users; separate multiple users with semicolons

super.users=User:admin;

# On Kafka versions below 3.x, change this to kafka.security.auth.SimpleAclAuthorizer

authorizer.class.name=kafka.security.authorizer.AclAuthorizer

Copy the start script and name the copy kafka-server-start-scram.sh. In the copy, comment out the last line and add the following:

#exec $base_dir/kafka-run-class.sh $EXTRA_ARGS kafka.Kafka "$@"

exec $base_dir/kafka-run-class.sh $EXTRA_ARGS -Djava.security.auth.login.config=/usr/local/kafka/kafka_2.12-3.0.1/config/kafka_server_jaas_scram.conf kafka.Kafka "$@"

Start Kafka

./kafka-server-start-scram.sh -daemon ../config/server-scram.properties

Note: the ZooKeeper address that Kafka connects to must be the same address and node under which the users were created. Otherwise Kafka fails to start with an authentication error: failed authentication due to: Authentication failed during authentication due to invalid credentials with SASL mechanism SCRAM-SHA-256

For example, if server.properties sets zookeeper.connect=127.0.0.1:2181/kafka, the users must be created with bin/kafka-configs.sh --zookeeper 127.0.0.1:2181/kafka. If the kafka node does not exist in ZooKeeper, create it there yourself first.
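One way to avoid this mismatch is to read the connect string from the broker properties file instead of typing it twice. This is a minimal sketch under that assumption; the helper names `zk_connect_of` and `zk_chroot_of` are hypothetical and not part of Kafka.

```shell
# Print the value of zookeeper.connect from a broker properties file.
# If the key appears more than once, the last occurrence is printed.
zk_connect_of() {
  sed -n 's/^[[:space:]]*zookeeper\.connect[[:space:]]*=[[:space:]]*//p' "$1" | tail -n 1
}

# Print the chroot part of a connect string ("/" when there is none).
zk_chroot_of() {
  case "$1" in
    */*) printf '/%s\n' "${1#*/}" ;;
    *)   printf '/\n' ;;
  esac
}

# Example use (commented out so the helpers can be sourced on their own):
# ZK_CONNECT="$(zk_connect_of config/server-scram.properties)"
# bin/kafka-configs.sh --zookeeper "$ZK_CONNECT" --describe --entity-type users --entity-name admin
```

Because the same value feeds both the broker and the user-creation command, the credentials end up under the node the broker actually reads.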

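Start a producer and a consumer

Because the broker was started with security authentication enabled, producers and consumers must also authenticate as clients.

- Create producer.conf in the config directory and add the following configuration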

security.protocol=SASL_PLAINTEXT

sasl.mechanism=SCRAM-SHA-256

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="admin" password="admin";

Start the producer with this configuration

[root@hub kafka_2.12-3.0.1]# bin/kafka-console-producer.sh --bootstrap-server 127.0.0.1:9092 --topic test --producer.config config/producer.conf

>1

>2

>3

>

Start a producer as user chan

Create producer-chan.conf in the config directory and add the following configuration

security.protocol=SASL_PLAINTEXT

sasl.mechanism=SCRAM-SHA-256

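sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="chan" password="chan_test";

Start the producer as chan. As the output shows, user chan has not been authorized on the topic test, so chan cannot produce messages to it; the user must first be granted permission.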

[root@hub kafka_2.12-3.0.1]# bin/kafka-console-producer.sh --bootstrap-server 192.168.67.142:9092 --topic test --producer.config config/producer-chan.conf

>1

[2022-04-13 20:32:43,651] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 4 : {test=TOPIC_AUTHORIZATION_FAILED} (org.apache.kafka.clients.NetworkClient)

[2022-04-13 20:32:43,654] ERROR [Producer clientId=console-producer] Topic authorization failed for topics [test] (org.apache.kafka.clients.Metadata)

[2022-04-13 20:32:43,657] ERROR Error when sending message to topic test with key: null, value: 1 bytes with error: (org.apache.kafka.clients.producer.internals.ErrorLoggingCallback)

org.apache.kafka.common.errors.TopicAuthorizationException: Not authorized to access topics: [test]

Start a consumer

[root@hub config]# cat consumer.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=SCRAM-SHA-256

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="admin" password="admin";

bin/kafka-console-consumer.sh --bootstrap-server 192.168.67.142:9092 --topic test --group 'test_group' --from-beginning --consumer.config config/consumer.conf

ACL authorization

Grant user chan permission to write to the topic test:

[root@hub kafka_2.12-3.0.1]# bin/kafka-acls.sh --authorizer-properties zookeeper.connect=127.0.0.1:2181/kafka --add --allow-principal User:chan --operation Write --topic 'test'

Adding ACLs for resource `ResourcePattern(resourceType=TOPIC, name=test, patternType=LITERAL)`:

(principal=User:chan, host=*, operation=WRITE, permissionType=ALLOW)

Current ACLs for resource `ResourcePattern(resourceType=TOPIC, name=test, patternType=LITERAL)`:

(principal=User:chan, host=*, operation=WRITE, permissionType=ALLOW)

[root@hub kafka_2.12-3.0.1]# bin/kafka-console-producer.sh --bootstrap-server 192.168.67.142:9092 --topic test --producer.config config/producer-chan.conf

>1

>2

>3

>

User chan can now write data to this topic.
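The Write ACL only covers producing. If chan should also consume from test, the user additionally needs read access to the topic and to the consumer group. The sketch below is one possible way to set that up and is not part of the original walkthrough: the helper names, the `KAFKA_ACLS` and `ZK_CONNECT` variables, and the file name consumer-chan.conf are assumptions for illustration. It relies on the `--consumer` convenience option of kafka-acls.sh.

```shell
# Hypothetical variables: path to the tool and the ZooKeeper connect string.
KAFKA_ACLS="${KAFKA_ACLS:-bin/kafka-acls.sh}"
ZK_CONNECT="${ZK_CONNECT:-127.0.0.1:2181/kafka}"

# Write a client properties file with the same three keys used in producer.conf.
# Note: a password containing a double quote would break the JAAS line.
write_scram_client_conf() {
  local file="$1"
  local user="$2"
  local password="$3"
  cat > "$file" <<EOF
security.protocol=SASL_PLAINTEXT
sasl.mechanism=SCRAM-SHA-256
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="$user" password="$password";
EOF
}

# Grant the ACLs a consumer needs on one topic and one consumer group.
grant_consume() {
  local user="$1"
  local topic="$2"
  local group="$3"
  "$KAFKA_ACLS" --authorizer-properties "zookeeper.connect=$ZK_CONNECT" --add \
    --allow-principal "User:$user" --consumer --topic "$topic" --group "$group"
}

# List the ACLs currently set on a topic.
list_topic_acls() {
  "$KAFKA_ACLS" --authorizer-properties "zookeeper.connect=$ZK_CONNECT" --list --topic "$1"
}
```

For example, `write_scram_client_conf config/consumer-chan.conf chan chan_test` followed by `grant_consume chan test test_group` should let the console consumer run as chan when started with `--consumer.config config/consumer-chan.conf` and `--group test_group`.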