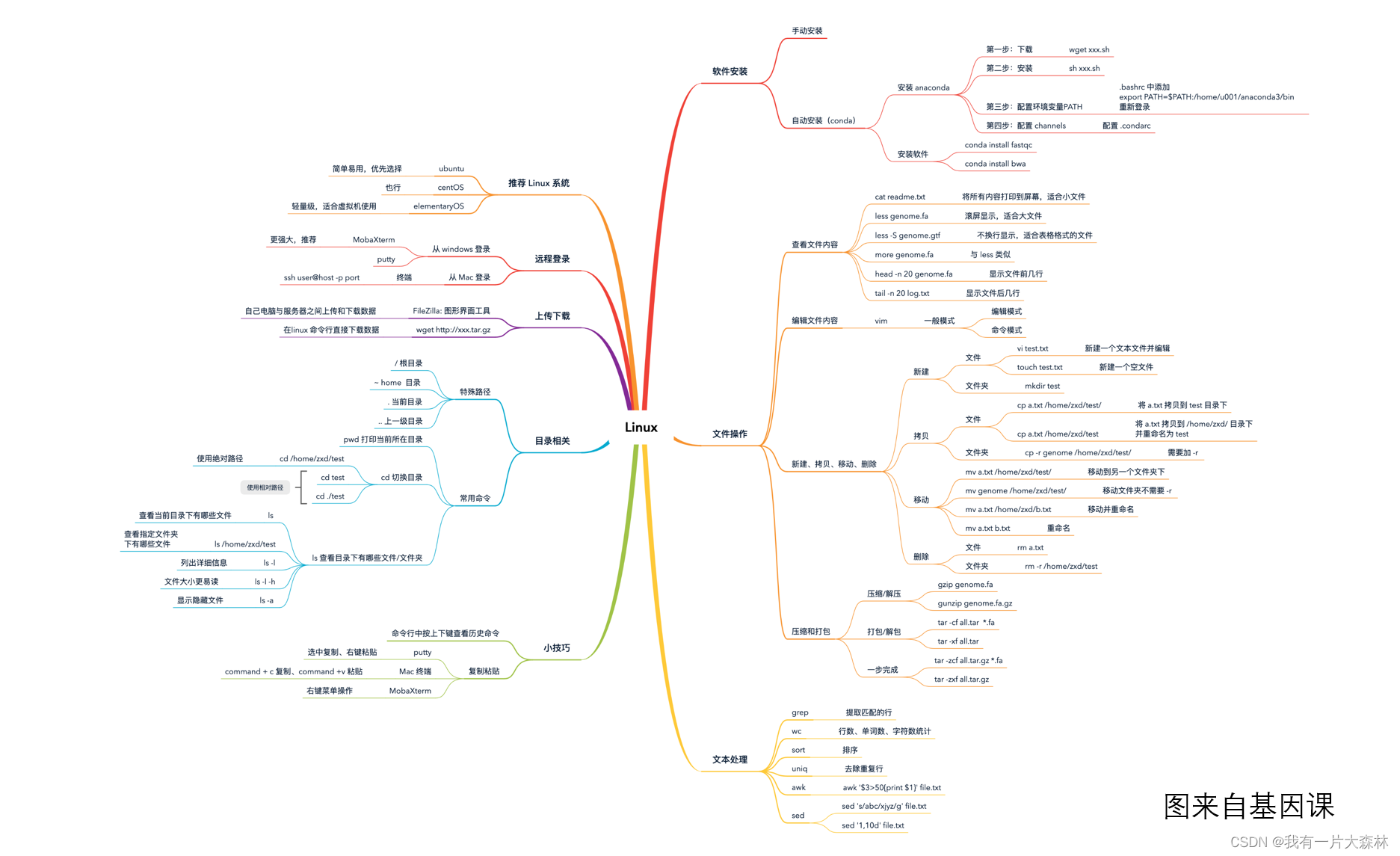

I. What is ELK

ELK is shorthand for the Elasticsearch + Logstash + Kibana stack.

II. Common ELK architectures

Elasticsearch + Logstash + Kibana

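This is the simplest architecture. Logstash collects the logs, Elasticsearch stores and analyzes them, and Kibana (the web UI) displays them. Although this is the setup described on the official website, it is rarely used in production.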

Elasticsearch + Logstash + filebeat + Kibana

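Compared with the previous architecture, this one adds a filebeat module. filebeat is a lightweight log collection agent deployed on the client side, and its advantage is that it consumes far fewer resources than logstash, so this is the architecture usually chosen in production. It has one drawback: when logstash fails, logs are lost.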

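Elasticsearch + Logstash + filebeat + redis (or another middleware, such as a clustered rabbitmq) + Kibana

This architecture is a more complete version of the previous one: adding a middleware layer prevents data loss. When Logstash fails, the logs remain in the middleware, and once Logstash starts again it reads the backlog that has accumulated there.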
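The difference between the last two architectures can be illustrated with a toy model. The sketch below is not a real integration: `BufferedPipelineDemo` and all of its members are hypothetical names, an in-memory queue stands in for redis/rabbitmq, and a list stands in for Elasticsearch. It only mirrors the claim above, that events shipped while Logstash is down are lost without a middleware and are kept as a backlog with one.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/** Toy model of a log pipeline with an optional in-memory "broker" in front of the consumer. */
final class BufferedPipelineDemo {
    private final boolean useBroker;
    private final Queue<String> broker = new ArrayDeque<>(); // stands in for redis/rabbitmq
    private final List<String> indexed = new ArrayList<>();  // stands in for Elasticsearch
    private boolean consumerUp = true;                       // stands in for Logstash
    private int lost = 0;

    BufferedPipelineDemo(boolean useBroker) {
        this.useBroker = useBroker;
    }

    /** Stops or restarts the consumer; on restart it reads whatever has piled up. */
    void setConsumerUp(boolean up) {
        consumerUp = up;
        if (up) {
            drain();
        }
    }

    /** Ships one log line through the pipeline. */
    void ship(String line) {
        if (useBroker) {
            broker.add(line);          // the broker always accepts the event
            if (consumerUp) {
                drain();
            }
        } else if (consumerUp) {
            indexed.add(line);         // direct hand-off to the consumer
        } else {
            lost++;                    // nothing holds the event while the consumer is down
        }
    }

    private void drain() {
        String line;
        while ((line = broker.poll()) != null) {
            indexed.add(line);
        }
    }

    int indexedCount() { return indexed.size(); }

    int lostCount() { return lost; }

    public static void main(String[] args) {
        for (boolean withBroker : new boolean[] {false, true}) {
            BufferedPipelineDemo p = new BufferedPipelineDemo(withBroker);
            p.ship("a");
            p.setConsumerUp(false);    // simulated Logstash outage
            p.ship("b");
            p.ship("c");
            p.setConsumerUp(true);     // Logstash comes back
            p.ship("d");
            System.out.println("broker=" + withBroker
                    + " indexed=" + p.indexedCount() + " lost=" + p.lostCount());
        }
    }
}
```

Without the broker the run ends with 2 lines indexed and 2 lost; with the broker all 4 are indexed. A real broker adds its own concerns (persistence, queue limits) that this model ignores.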

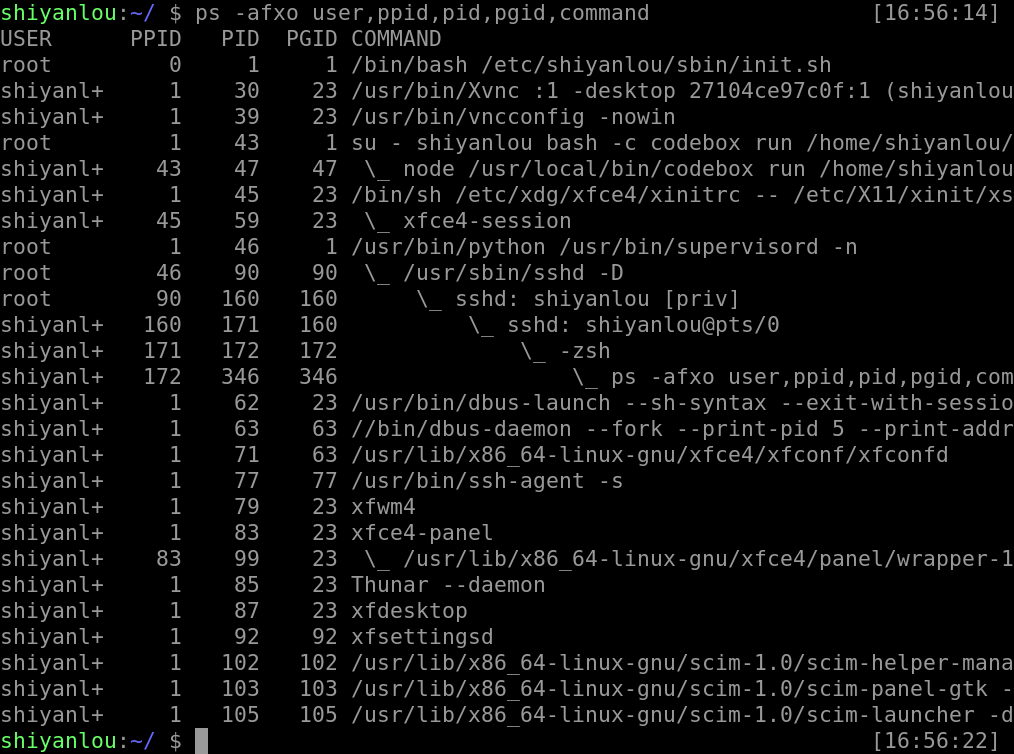

How to set it up:

1. Setting up Elasticsearch

1. Create a file called elasticsearch.repo in the /etc/yum.repos.d/ directory:

[elasticsearch]
name=Elasticsearch repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=0
autorefresh=1
type=rpm-md

2. Install:

sudo yum install --enablerepo=elasticsearch elasticsearch

3. Configure: vim /etc/elasticsearch/elasticsearch.yml

path.data: /data/elasticsearch
#
# Path to log files:
#
path.logs: /var/log/elasticsearch

network.host: 0.0.0.0
#
# By default Elasticsearch listens for HTTP traffic on the first free port it
# finds starting at 9200. Set a specific HTTP port here:
#
http.port: 9200

xpack.security.enabled: false

4. In the same directory, edit jvm.options and set -Xms and -Xmx to no more than half of the system memory, or to whatever size suits your server.
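The heap rule in step 4 can be turned into a small calculation. The sketch below is only an illustration: `HeapSizing` and its methods are hypothetical names, and the 31 GB cap is my own added assumption (it reflects the common advice to keep the heap below the JVM's compressed-pointer threshold); the text above only asks for half of system memory or less.

```java
/** Suggests -Xms/-Xmx values for jvm.options from the machine's total RAM. */
final class HeapSizing {
    // Assumption, not from the text above: cap the heap near 31 GB.
    static final long CAP_MB = 31L * 1024;

    /** Half of total RAM, never more than the cap. */
    static long suggestedHeapMb(long totalRamMb) {
        return Math.min(totalRamMb / 2, CAP_MB);
    }

    /** Renders the two lines to place in jvm.options, with equal min and max heap. */
    static String jvmOptions(long totalRamMb) {
        long heapMb = suggestedHeapMb(totalRamMb);
        return "-Xms" + heapMb + "m\n-Xmx" + heapMb + "m";
    }

    public static void main(String[] args) {
        // An 8 GB server gets a 4096 MB heap.
        System.out.println(jvmOptions(8 * 1024));
    }
}
```

Setting -Xms and -Xmx to the same value avoids heap resizing at runtime; on a small shared server a value well below half may be the better fit.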

5. Create the directory configured as path.data above and give the elasticsearch user ownership of it:

mkdir -p /data/elasticsearch
chown -R elasticsearch:elasticsearch /data/elasticsearch/

6. Start:

sudo systemctl daemon-reload
sudo systemctl enable elasticsearch.service
sudo systemctl start elasticsearch.service
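Once the service has started, it is worth confirming that Elasticsearch answers on the configured HTTP port. The following is a minimal sketch using the JDK's built-in HTTP client (Java 11+); `EsHealthCheck` is a hypothetical helper name, and it relies on security being disabled as configured above, so plain HTTP without credentials is enough.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Checks whether an Elasticsearch node answers an HTTP GET on its root URL. */
final class EsHealthCheck {

    /** Returns true only if the node replies with HTTP 200 within the timeouts. */
    static boolean isUp(String baseUrl) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(3))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        try {
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200;
        } catch (IOException e) {
            return false; // connection refused, timeout, and similar failures
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        String url = args.length > 0 ? args[0] : "http://localhost:9200";
        System.out.println(url + " up: " + isUp(url));
    }
}
```

If this prints false right after startup, the node may still be initializing; the Elasticsearch log under path.logs is the place to look next.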

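2. Download Logstash from the official website (installing the RabbitMQ middleware is skipped here; search for a guide yourself)

1. Choose the package for your operating system, download it, and extract it

2. Edit the configuration file config/logstash-sample.conf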

# Sample Logstash configuration for creating a simple
# Beats -> Logstash -> Elasticsearch pipeline.

input {
  # Filebeat input; not used here because we connect from Java
  beats {
    port => 5044
  }
  # TCP input
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 4560
    codec => json_lines
  }
  # RabbitMQ input
  rabbitmq {
    host => "localhost"
    vhost => "/"
    port => 5672
    user => "guest"
    password => "guest"
    queue => "station_Route"
    durable => true
    codec => json
  }
}

output {
  elasticsearch {
    hosts => ["http://ip:9200"]
    index => "rabbitmq-%{+YYYY.MM.dd}"
    #user => "elastic"
    #password => "changeme"
  }
}
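The index setting in the output block produces one index per day. Logstash fills in the date from the event's timestamp in UTC, so the day boundary may not match local time. The sketch below reproduces that naming in plain Java so it is easy to predict which index an event lands in; `DailyIndexName` is a hypothetical helper, not part of Logstash.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

/** Computes the daily index name that a pattern like rabbitmq-%{+YYYY.MM.dd} yields. */
final class DailyIndexName {
    // Formatted in UTC, matching how Logstash resolves the date from the event timestamp.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    static String forTimestamp(String prefix, Instant timestamp) {
        return prefix + "-" + DAY.format(timestamp);
    }

    public static void main(String[] args) {
        // 23:00 UTC on 19 May is already 07:00 on 20 May in UTC+8,
        // but the event still goes to the 2022.05.19 index.
        Instant event = Instant.parse("2022-05-19T23:00:00Z");
        System.out.println(forTimestamp("rabbitmq", event)); // rabbitmq-2022.05.19
    }
}
```

In Kibana, a data view with the pattern rabbitmq-* covers all of these daily indices.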

3. Start Logstash with the configuration file: logstash -f logstash.conf
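With Logstash running, the tcp input on port 4560 can be exercised directly from Java. The json_lines codec expects one JSON document per line, each terminated by a newline. The following is a minimal sketch of that framing; `JsonLinesSender` and its methods are hypothetical names, and the host and port in the usage note are taken from the configuration above.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Writes newline-delimited JSON, the framing used by a json_lines TCP input. */
final class JsonLinesSender {

    /** Writes one JSON document followed by a newline, encoded as UTF-8. */
    static void writeLine(OutputStream out, String json) {
        if (json.indexOf('\n') >= 0 || json.indexOf('\r') >= 0) {
            throw new IllegalArgumentException("JSON must fit on a single line");
        }
        try {
            out.write((json + "\n").getBytes(StandardCharsets.UTF_8));
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Opens a TCP connection, sends one document, and closes the connection. */
    static void send(String host, int port, String json) {
        try (Socket socket = new Socket(host, port)) {
            writeLine(socket.getOutputStream(), json);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Prints the framed line; swap in send("localhost", 4560, ...) to hit Logstash.
        writeLine(System.out, "{\"deviceCode\":\"code123\"}");
    }
}
```

Calling `JsonLinesSender.send("localhost", 4560, json)` against the running pipeline should produce one document in that day's index. Opening a socket per message is fine for a smoke test but too slow for real traffic.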

3. Install Kibana (download it from the official website)

1. After extracting it, edit the configuration file config/kibana.yml

i18n.locale: "zh-CN"
elasticsearch.hosts: ["http://localhost:9200"]
server.name: "test-kin"
server.port: 5601
# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.
# The default is 'localhost', which usually means remote machines will not be able to connect.
# To allow connections from remote users, set this parameter to a non-loopback address.
server.host: "0.0.0.0"

2. Start:

nohup ./kibana --allow-root >/dev/null &

3. Check that the firewall allows access to port 5601, then open the Kibana address in a browser.

4. Spring Boot project integration

1. Add the dependency

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-amqp</artifactId>
    <version>2.5.5</version>
</dependency>

2. Configure the yml

spring:
  application:
    name: test
  mvc:
    static-path-pattern: /**
  rabbitmq:
    host: localhost
    port: 5672
    username: guest
    password: guest

3. Edit logback-spring.xml and define a custom Logback filter, so that an event is recorded only when it is logged with the Marker

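package com.chenkang.test.config;

import ch.qos.logback.classic.spi.ILoggingEvent;
import ch.qos.logback.core.filter.Filter;
import ch.qos.logback.core.spi.FilterReply;
import org.slf4j.Marker;
import org.slf4j.MarkerFactory;

import java.util.Optional;

/**
 * @author chenkang
 * @date 2022/5/19 13:24
 */
public class LogStashFilter extends Filter<ILoggingEvent> {

    public final static Marker LOGSTASH = MarkerFactory.getMarker("logstash");

    // Accept only events logged with the LOGSTASH marker; deny everything else.
    @Override
    public FilterReply decide(ILoggingEvent iLoggingEvent) {
        Marker marker = iLoggingEvent.getMarker();
        return Optional.ofNullable(marker)
                .filter(m -> m.equals(LOGSTASH))
                .map(m -> FilterReply.ACCEPT)
                .orElse(FilterReply.DENY);
    }
}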

<springProperty name="rabbitmqHost" source="spring.rabbitmq.host"/>
<springProperty name="rabbitmqPort" source="spring.rabbitmq.port"/>
<springProperty name="rabbitmqUsername" source="spring.rabbitmq.username"/>
<springProperty name="rabbitmqPassword" source="spring.rabbitmq.password"/>
<appender name="AMQP" class="org.springframework.amqp.rabbit.logback.AmqpAppender">
    <!-- Layout (plain text) rather than formatted JSON -->
    <filter class="com.chenkang.test.config.LogStashFilter" />
    <layout>
        <pattern><![CDATA[%msg]]></pattern>
    </layout>
    <host>${rabbitmqHost}</host>
    <port>${rabbitmqPort}</port>
    <username>${rabbitmqUsername}</username>
    <password>${rabbitmqPassword}</password>
    <declareExchange>false</declareExchange>
    <exchangeType>direct</exchangeType>
    <exchangeName>exchanges.route</exchangeName>
    <routingKeyPattern>route_exchange</routingKeyPattern>
    <generateId>true</generateId>
    <charset>UTF-8</charset>
    <durable>false</durable>
    <deliveryMode>NON_PERSISTENT</deliveryMode>
</appender>

Finally, the test:

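Message message = new Message();
message.setDeviceCode("code123");
message.setDeviceName("deviceName3345");
message.setIndex("1024");
// Logged with the LOGSTASH marker: accepted by LogStashFilter and sent to RabbitMQ
log.info(LogStashFilter.LOGSTASH, JSON.toJSONString(message));
// Logged without the marker: denied by LogStashFilter, so it is not sent
log.info(JSON.toJSONString(message));

The logged message is pushed to RabbitMQ, Logstash subscribes to the queue and consumes it, and the result is stored in Elasticsearch.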
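Because the appender's pattern is just %msg and the Logstash rabbitmq input uses the json codec, the log message itself has to be a single JSON document. The Message class is not shown in this post, so the sketch below uses a hypothetical stand-in, `DeviceMessage`, with hand-written serialization, only to make the payload shape visible without a JSON library. The field names are inferred from the setters used in the test.

```java
/** Hypothetical stand-in for the Message class used in the test above. */
final class DeviceMessage {
    private final String deviceCode;
    private final String deviceName;
    private final String index;

    DeviceMessage(String deviceCode, String deviceName, String index) {
        this.deviceCode = deviceCode;
        this.deviceName = deviceName;
        this.index = index;
    }

    /** Serializes to one-line JSON; only backslash and double quote are escaped here. */
    String toJson() {
        return "{\"deviceCode\":\"" + escape(deviceCode)
                + "\",\"deviceName\":\"" + escape(deviceName)
                + "\",\"index\":\"" + escape(index) + "\"}";
    }

    private static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) {
        // Prints {"deviceCode":"code123","deviceName":"deviceName3345","index":"1024"}
        System.out.println(new DeviceMessage("code123", "deviceName3345", "1024").toJson());
    }
}
```

Each top-level key should then show up as a separate field on the document in Elasticsearch. In a real project a JSON library is the safer choice, since this escaping ignores control characters.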

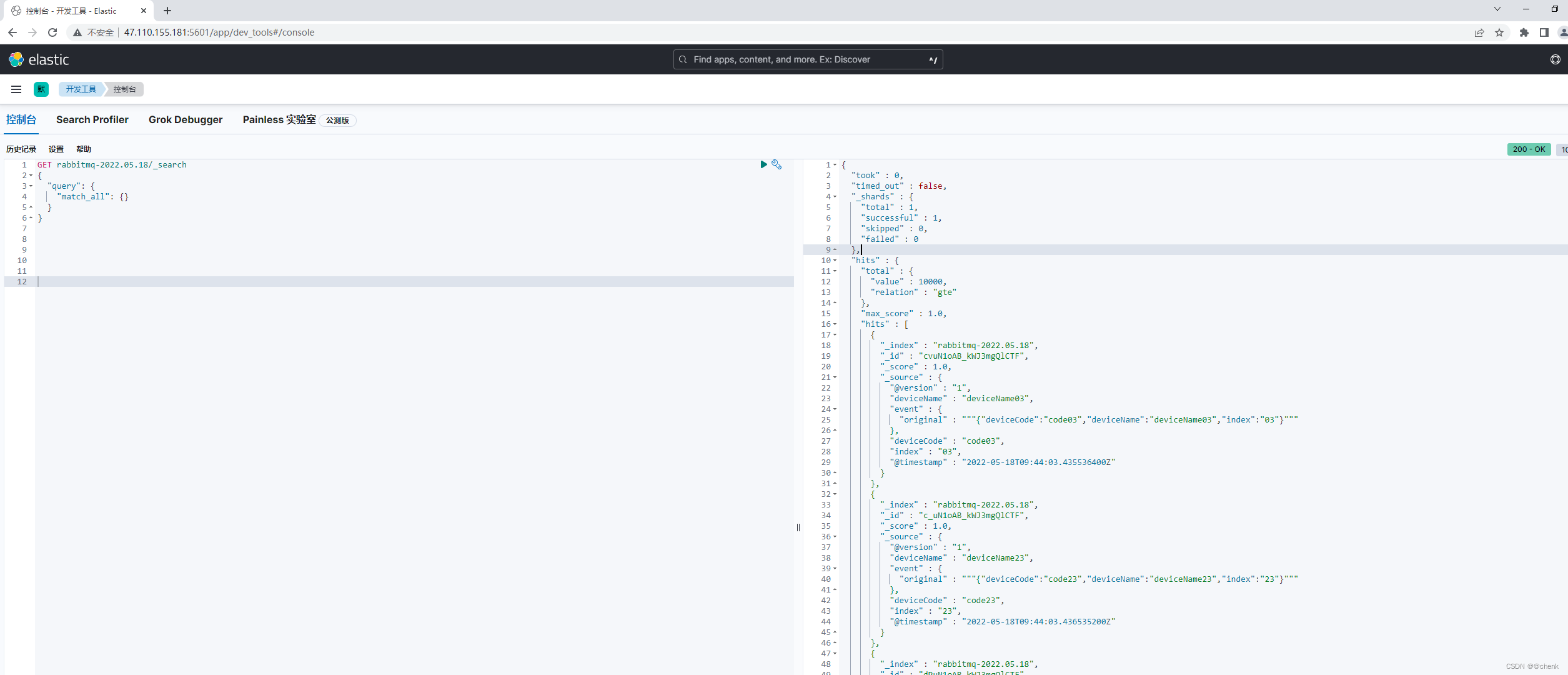

Query the data in Kibana. In my own local test, 1,000 to 2,000 messages per second was no problem, but at 10,000 per second it broke down and Logstash hung.
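One possible mitigation for that limit, which is not part of the setup tested here, is to cap how many marker-tagged messages the application publishes per second. The sketch below is a simple fixed-window limiter; `FixedWindowLimiter` is a hypothetical class, and the clock is passed in as a parameter so the behavior is easy to verify.

```java
/** Allows at most a fixed number of events in each one-second window. */
final class FixedWindowLimiter {
    private final int limitPerSecond;
    private long currentWindow = Long.MIN_VALUE; // index of the current one-second window
    private int usedInWindow = 0;

    FixedWindowLimiter(int limitPerSecond) {
        if (limitPerSecond <= 0) {
            throw new IllegalArgumentException("limitPerSecond must be positive");
        }
        this.limitPerSecond = limitPerSecond;
    }

    /** Returns true if an event at the given time (in milliseconds) may be sent. */
    synchronized boolean tryAcquire(long nowMillis) {
        long window = Math.floorDiv(nowMillis, 1000L);
        if (window != currentWindow) {
            currentWindow = window;  // a new second has started: reset the counter
            usedInWindow = 0;
        }
        if (usedInWindow < limitPerSecond) {
            usedInWindow++;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        FixedWindowLimiter limiter = new FixedWindowLimiter(2000);
        int sent = 0;
        for (int i = 0; i < 10000; i++) {
            if (limiter.tryAcquire(0L)) { // all 10,000 attempts fall in the same second
                sent++;
            }
        }
        System.out.println("sent " + sent + " of 10000"); // sent 2000 of 10000
    }
}
```

In the application the call would guard the logging statement, for example `if (limiter.tryAcquire(System.currentTimeMillis())) log.info(LogStashFilter.LOGSTASH, json);`. Messages over the limit are dropped, which trades completeness for stability; the limit of 2,000 simply mirrors the rate that worked in the local test.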