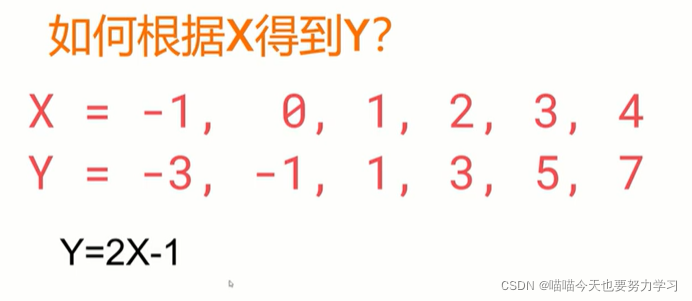

Training a linear function

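```python
import numpy as np
from tensorflow import keras

# Build the model: a single Dense layer with one neuron and one input value
model = keras.Sequential([keras.layers.Dense(units=1, input_shape=[1])])

# optimizer: how the weights are updated; loss: how the error is measured
model.compile(optimizer='sgd', loss='mean_squared_error')

# Prepare the training data
xs = np.array([-1.0, 0, 1, 2, 3, 4], dtype=float)
ys = np.array([-3, -1, 1, 3, 5, 7], dtype=float)

# Train the model; epochs is the number of passes over the training data
model.fit(xs, ys, epochs=500)

# Use the model to predict the output for x = 10
# (predict expects a batch of inputs, here with shape (1, 1))
print(model.predict(np.array([[10.0]])))
```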
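The training data above follow y = 2x - 1, so the exact answer for x = 10 is 19; the trained model prints a value close to 19 rather than exactly 19. A single Dense unit computes y = w·x + b, and the 'sgd' optimizer with a mean squared error loss repeatedly moves w and b against the gradient. The sketch below reproduces that idea in plain NumPy. It assumes full-batch gradient descent, zero initial weights, and a hand-picked learning rate of 0.05 (not the Keras default); `fit_linear_gd` is a hypothetical helper, not a Keras API.

```python
import numpy as np

def fit_linear_gd(xs, ys, lr=0.05, epochs=500):
    """Fit y = w*x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start from zero (Keras initializes the kernel randomly)
    for _ in range(epochs):
        err = (w * xs + b) - ys           # prediction error for every sample
        grad_w = 2.0 * np.mean(err * xs)  # d(MSE)/dw
        grad_b = 2.0 * np.mean(err)       # d(MSE)/db
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0])
ys = np.array([-3.0, -1.0, 1.0, 3.0, 5.0, 7.0])
w, b = fit_linear_gd(xs, ys)
print(w, b, w * 10.0 + b)  # w close to 2, b close to -1, prediction close to 19
```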

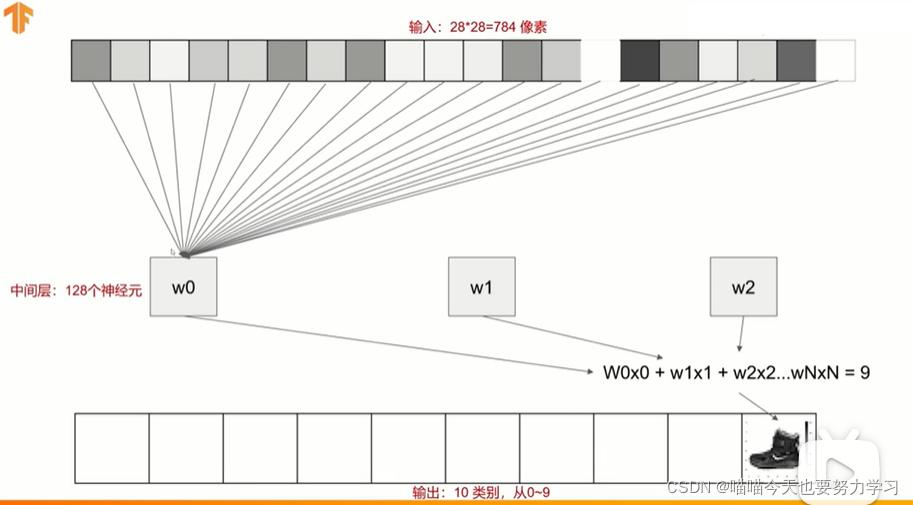

Computer vision

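```python
import tensorflow as tf
from tensorflow import keras

fashion_mnist = keras.datasets.fashion_mnist
(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

# Build the neural network model: a fully connected network.
# The input is a 28*28 image, the hidden layer has 128 neurons with the relu activation,
# and the last layer is the output layer, which classifies into 10 classes.
model = keras.Sequential()
model.add(keras.layers.Flatten(input_shape=(28, 28)))
model.add(keras.layers.Dense(128, activation=tf.nn.relu))
model.add(keras.layers.Dense(10, activation=tf.nn.softmax))
model.summary()
```

Parameter count: each image has 784 pixels, and each of the 128 neurons in the hidden layer has one weight per pixel plus one bias, so that layer has (784 + 1) × 128 = 100,480 parameters. The output layer has 10 neurons, each connected to the 128 hidden neurons plus a bias: (128 + 1) × 10 = 1,290 parameters.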
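Both counts follow one rule: a Dense layer with n_in inputs and n_out neurons has one weight per input-neuron pair plus one bias per neuron, (n_in + 1) × n_out in total, while Flatten has no parameters. A minimal sketch of that arithmetic (the helper name `dense_params` is hypothetical):

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights (n_in * n_out) plus one bias per output neuron."""
    return (n_in + 1) * n_out

hidden = dense_params(28 * 28, 128)  # Flatten turns a 28*28 image into 784 inputs
output = dense_params(128, 10)
print(hidden, output, hidden + output)  # 100480 1290 101770
```

The total, 101,770, is what model.summary() reports for this network.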

```python
import tensorflow as tf
import numpy as np
from tensorflow import keras

fashion_mnist = keras.datasets.fashion_mnist
(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

# Same fully connected model: 28*28 input, 128 relu neurons, 10-class output layer
model = keras.Sequential()
model.add(keras.layers.Flatten(input_shape=(28, 28)))
model.add(keras.layers.Dense(128, activation=tf.nn.relu))
model.add(keras.layers.Dense(10, activation=tf.nn.softmax))

# Scale pixel values from 0-255 into the range 0-1; this usually gives higher accuracy
train_images = train_images / 255

# Specify the optimizer, the loss function and the metric to report
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=['accuracy'])

# Train on the training set
model.fit(train_images, train_labels, epochs=5)

# Evaluate the model on the (equally scaled) test set
test_images_scaled = test_images / 255
model.evaluate(test_images_scaled, test_labels)

# Classify a single image: predict expects a batch, so slice out a batch of one
print(np.argmax(model.predict(test_images_scaled[0:1])))
print(test_labels[0])
```
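The softmax output layer turns 10 raw scores into probabilities that sum to 1, np.argmax picks the index of the most probable class, and sparse_categorical_crossentropy takes the integer label directly (no one-hot vector) and returns -log of the probability assigned to that label. A NumPy sketch of those three steps; the scores are made-up illustrative numbers, not model output, and the helper names are hypothetical.

```python
import numpy as np

def softmax(logits):
    # Subtract the max for numerical stability; it does not change the result
    z = np.exp(logits - np.max(logits))
    return z / z.sum()

def sparse_categorical_crossentropy(probs, label):
    # The integer label indexes the probability of the true class directly
    return -np.log(probs[label])

# Illustrative scores for the 10 Fashion-MNIST classes
logits = np.array([0.1, 0.3, 2.5, 0.0, 0.2, 0.1, 0.4, 0.0, 0.1, 0.2])
probs = softmax(logits)
predicted = int(np.argmax(probs))
loss = sparse_categorical_crossentropy(probs, label=2)
print(predicted, probs.sum(), loss)
```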

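Stopping training automatically

This uses a callback: Keras calls it at the end of every epoch. In this example, training stops as soon as the loss drops below 0.4.

The steps are: define a callback class, create an instance of it, and pass the instance to model.fit().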
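The mechanism itself is simple: the training loop calls the callback's on_epoch_end hook with that epoch's logs, then checks a stop_training flag before starting the next epoch. The framework-free sketch below mimics that protocol. ThresholdStopper, train and the fake loss values are hypothetical stand-ins, not Keras classes; in Keras you subclass tf.keras.callbacks.Callback and set self.model.stop_training = True, as in the code that follows.

```python
class ThresholdStopper:
    """Hypothetical callback: request a stop once the loss falls below a threshold."""
    def __init__(self, threshold=0.4):
        self.threshold = threshold
        self.stop_training = False

    def on_epoch_end(self, epoch, logs):
        if logs.get("loss", float("inf")) < self.threshold:
            print(f"\nLoss is low so cancelling training! (epoch {epoch})")
            self.stop_training = True

def train(epoch_losses, callback, epochs):
    """Hypothetical training loop; epoch_losses stands in for real per-epoch losses."""
    completed = 0
    for epoch in range(epochs):
        logs = {"loss": epoch_losses[epoch]}  # a real loop would compute this by training
        completed += 1
        callback.on_epoch_end(epoch, logs)
        if callback.stop_training:  # the flag is checked after every epoch
            break
    return completed

fake_losses = [0.55, 0.45, 0.39, 0.35, 0.30]
print(train(fake_losses, ThresholdStopper(0.4), epochs=5))  # stops after the third epoch
```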

import tensorflow as tf

import numpy as np

from tensorflow import keras

#定义callback,也就是loss小于0.4的时候终止

class myCallback(tf.keras.callbacks.Callback):def on_epoch_end(self,epoch,logs={}):if(logs.get('loss')<0.4):print('\nLoss is low so cancelling training!')self.model.stop_training=True#调用实例

callbacks=myCallback()

fashion_mnist = keras.datasets.fashion_mnist

(train_images,train_labels),(test_images,test_labels)=fashion_mnist.load_data()

train_images_scaled=train_images/255.0

test_labels_scaled=test_labels/255.0

model=keras.Sequential()

model.add(keras.layers.Flatten())

model.add(keras.layers.Dense(512,activation=tf.nn.relu))

model.add(keras.layers.Dense(10,activation=tf.nn.softmax))

model.compile(optimizer="adam",loss="sparse_categorical_crossentropy",metrics=['accuracy'])

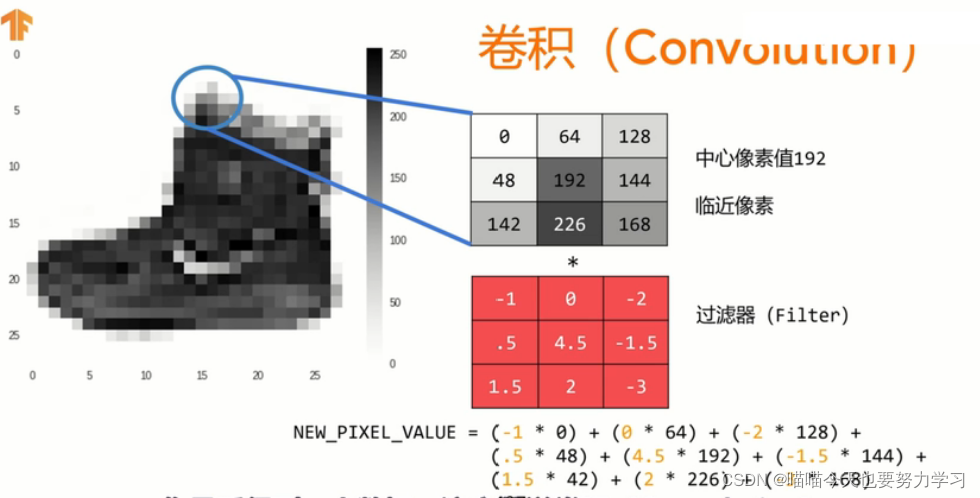

model.fit(train_images_scaled,train_labels,epochs=5,callbacks=[callbacks])卷积神经网络结合

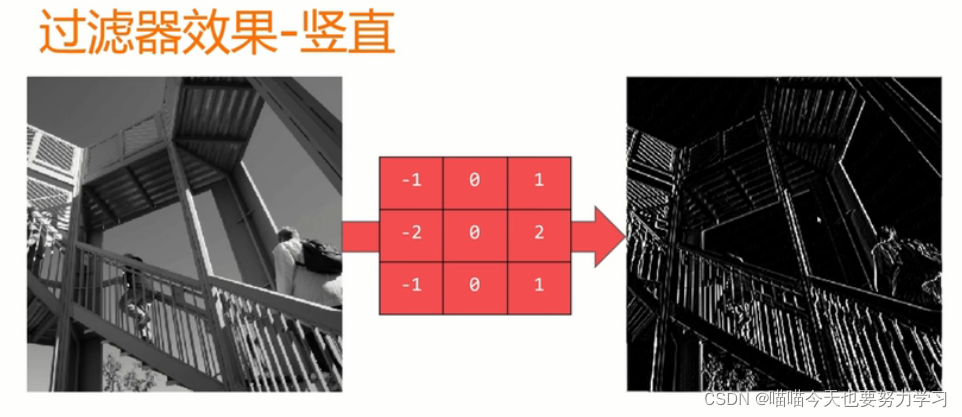

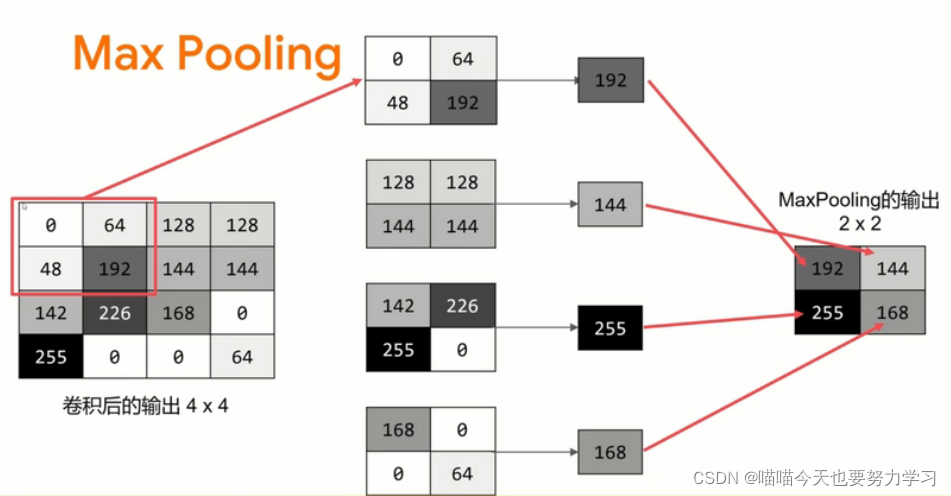

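Max pooling keeps only the largest value in each small region (here 2×2). This shrinks the feature maps while preserving the strongest feature in each area.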
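A 2×2 max pooling with stride 2, as in MaxPooling2D(2, 2), keeps the largest value in each non-overlapping 2×2 block, so a 26×26 feature map becomes 13×13. A NumPy sketch for a single-channel map; the helper max_pool_2x2 is illustrative, and it drops an odd trailing row or column, matching the default 'valid' padding.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 on a 2-D array; drops an odd last row/column."""
    h, w = feature_map.shape
    h2, w2 = h // 2, w // 2
    trimmed = feature_map[: h2 * 2, : w2 * 2]
    # Group pixels into 2x2 blocks, then take the max inside each block
    blocks = trimmed.reshape(h2, 2, w2, 2)
    return blocks.max(axis=(1, 3))

x = np.array([[1, 3, 2, 0],
              [4, 2, 1, 5],
              [0, 1, 7, 2],
              [6, 2, 3, 1]])
print(max_pool_2x2(x))  # each entry is the max of one 2x2 block: [[4, 5], [6, 7]]
```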

Convolutional neural network code

import tensorflow as tf

import numpy as np

from tensorflow import kerasfashion_mnist = keras.datasets.fashion_mnist

(train_images,train_labels),(test_images,test_labels)=fashion_mnist.load_data()

train_images_scaled=train_images/255.0

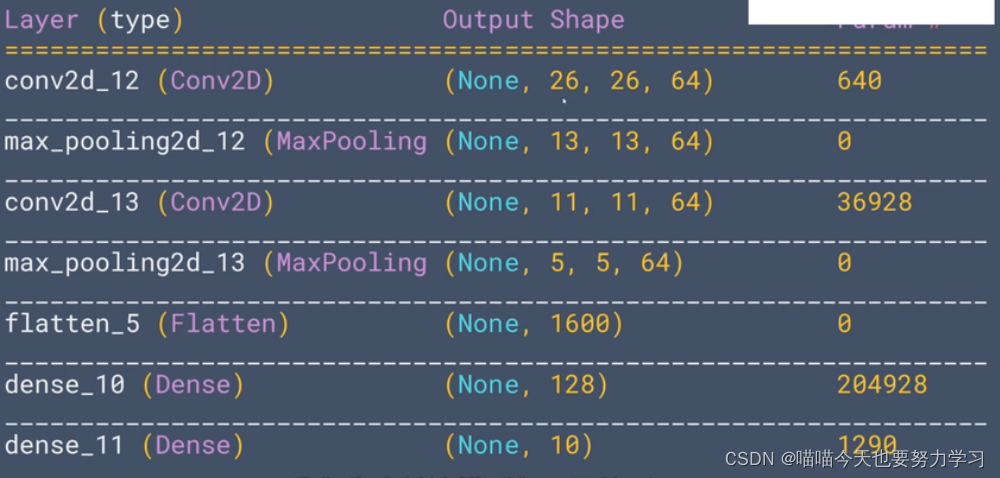

test_labels_scaled=test_labels/255.0model=tf.keras.models.Sequential([#卷积层,过滤器有64个,每个过滤器是3*3个像素,输入图片灰度值tf.keras.layers.Conv2D(64,(3,3),activation='relu',input_shape=(28,28,1)),#卷积之后增强特征,原来的输出会小一半tf.keras.layers.MaxPooling2D(2,2),tf.keras.layers.Conv2D(64,(3,3),activation='relu'),tf.keras.layers.MaxPooling2D(2,2),tf.keras.layers.Flatten(),tf.keras.layers.Dense(128,activation='relu'),tf.keras.layers.Dense(10,activation='softmax')

])

model.compile(optimizer="adam",loss="sparse_categorical_crossentropy",metrics=['accuracy'])

#如果有维度问题可以加reshape

model.fit(train_images_scaled.reshape(-1,28,28,1),train_labels,epochs=5)

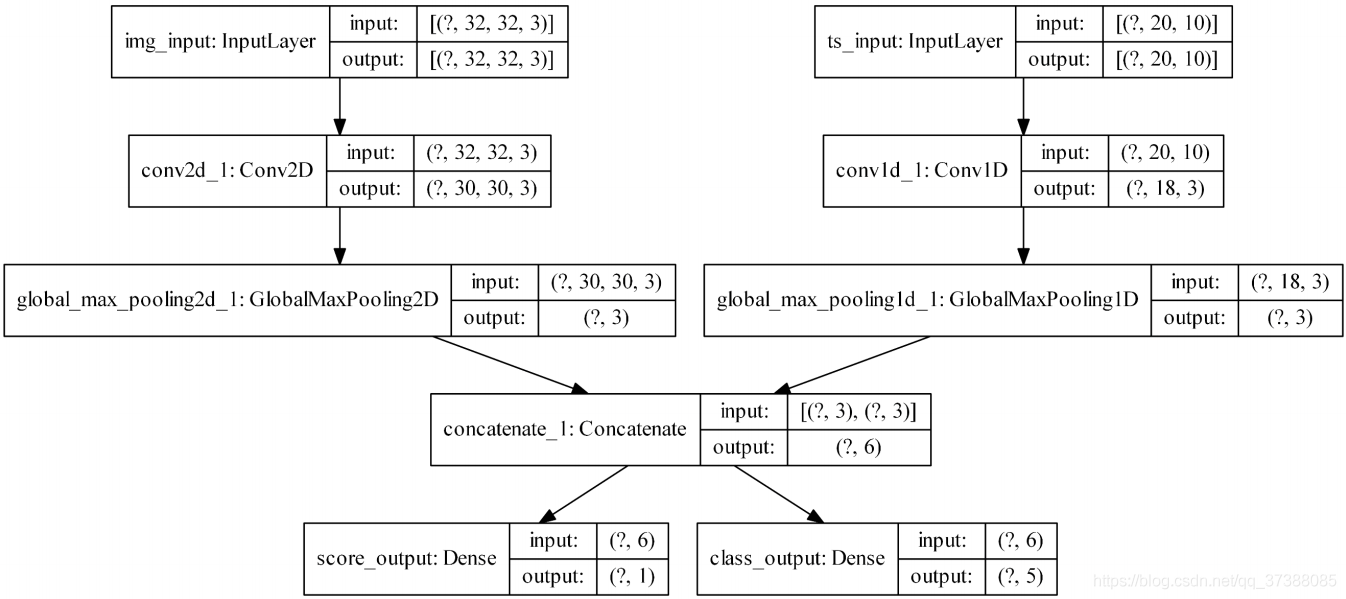

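Use model.summary() to view the structure of the network.

The original image is 28×28. A 3×3 filter shrinks it to 26×26, and because the layer has 64 filters, one input image becomes 64 feature maps. Max pooling (2×2) then halves the width and height, keeping 1/4 of the values (13×13). The later layers follow the same pattern.

Parameters of the first convolutional layer: (3×3 + 1) × 64 = 640, that is, 9 weights plus 1 bias for each of the 64 filters.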
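The same arithmetic generalizes: with 'valid' padding and stride 1, a k×k filter shrinks an n×n input to (n - k + 1)×(n - k + 1), and a Conv2D layer with f filters over c input channels has (k×k×c + 1)×f parameters. The sketch below checks both formulas against the network above and includes a naive single-filter convolution (strictly a cross-correlation, which is what Conv2D computes) to show where 26×26 comes from. The helper names conv2d_valid and conv_params are illustrative.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Naive 'valid' cross-correlation of a 2-D image with a 2-D kernel (stride 1)."""
    n_h, n_w = image.shape
    k_h, k_w = kernel.shape
    out = np.zeros((n_h - k_h + 1, n_w - k_w + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + k_h, j:j + k_w] * kernel)
    return out

def conv_params(k, in_channels, filters):
    # k*k*in_channels weights per filter, plus one bias per filter
    return (k * k * in_channels + 1) * filters

image = np.random.rand(28, 28)
feature_map = conv2d_valid(image, np.ones((3, 3)))
print(feature_map.shape)        # (26, 26)
print(conv_params(3, 1, 64))    # 640: first Conv2D, one grayscale input channel
print(conv_params(3, 64, 64))   # 36928: second Conv2D sees 64 input channels
```

Continuing through the network: 13×13 becomes 11×11 after the second convolution and 5×5 after the second pooling (odd sizes round down), so Flatten produces 5 × 5 × 64 = 1,600 values.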

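```python
import matplotlib.pyplot as plt

# Inspect the output of each layer: build a model that returns every layer's activations
layer_outputs = [layer.output for layer in model.layers]
activation_model = tf.keras.models.Model(inputs=model.input, outputs=layer_outputs)
pred = activation_model.predict(test_images[0].reshape(1, 28, 28, 1) / 255.0)

# View the small image produced by one filter:
# layer 0 (the first Conv2D), image 0 of the batch, filter (channel) 2
plt.imshow(pred[0][0, :, :, 2])
plt.show()
```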

项目实战

准备训练数据,构建模型,训练模型,优化参数

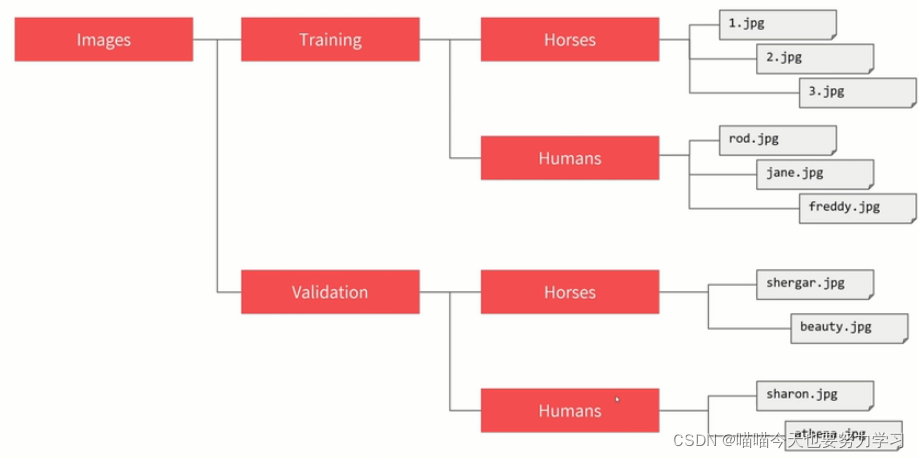

数据结构

真实数据的特点

图片尺寸大小不一,需要裁剪成一样大小

数据量比较大,不能一下子装入内存

经常需要修改数据
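Because real datasets rarely fit in memory, loaders such as Keras's flow_from_directory read images batch by batch from a folder that has one subfolder per class, assigning label indices to the subfolder names in alphanumeric order. The sketch below imitates only the bookkeeping part of that idea with the standard library: it lists the files of each class and yields (file path, label) batches lazily, leaving out image decoding, resizing and the file-type filtering a real loader does. list_labeled_files and iter_batches are hypothetical helpers, not part of Keras.

```python
import os
import tempfile

def list_labeled_files(root):
    """Map each class subfolder (sorted by name) to an integer label and list its files."""
    class_names = sorted(d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d)))
    class_indices = {name: i for i, name in enumerate(class_names)}
    samples = []
    for name in class_names:
        folder = os.path.join(root, name)
        for fname in sorted(os.listdir(folder)):
            samples.append((os.path.join(folder, fname), class_indices[name]))
    return class_indices, samples

def iter_batches(samples, batch_size):
    """Yield successive batches; a real loader would read only the current batch's images."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]

# Usage with a throwaway folder layout: humans/ and horses/ with empty placeholder files
with tempfile.TemporaryDirectory() as root:
    for cls, n in [("humans", 2), ("horses", 3)]:
        os.makedirs(os.path.join(root, cls))
        for k in range(n):
            open(os.path.join(root, cls, f"{k}.png"), "wb").close()
    class_indices, samples = list_labeled_files(root)
    batch_sizes = [len(batch) for batch in iter_batches(samples, batch_size=2)]

print(class_indices)  # {'horses': 0, 'humans': 1}
print(batch_sizes)    # [2, 2, 1]
```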

Loading the data

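```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Create two data generators that rescale pixel values into the range 0-1
train_datagen = ImageDataGenerator(rescale=1/255)
validation_datagen = ImageDataGenerator(rescale=1/255)

# Point to the training data folder
train_generator = train_datagen.flow_from_directory(
    'E:/tf神经网络练习/horse-or-human/',  # folder containing the training data
    target_size=(150, 150),  # output image size; must match the model's input_shape
    batch_size=32,
    class_mode='binary',  # binary classification
)

# Point to the validation data folder
validation_generator = validation_datagen.flow_from_directory(
    'E:/tf神经网络练习/validation-horse-or-human',  # folder containing the validation data
    target_size=(150, 150),  # output image size; must match the model's input_shape
    batch_size=32,
    class_mode='binary',  # binary classification
)
```

Model and training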

```python
import tensorflow as tf

model = tf.keras.models.Sequential([
    tf.keras.layers.Conv2D(16, (3, 3), activation='relu', input_shape=(150, 150, 3)),
    # Pooling after each convolution halves the width and height of the feature maps
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(512, activation='relu'),
    # One sigmoid output: the probability of the class with label 1
    tf.keras.layers.Dense(1, activation='sigmoid'),
])

model.compile(loss="binary_crossentropy", optimizer="adam", metrics=['acc'])
history = model.fit(train_generator, epochs=15, verbose=1,
                    validation_data=validation_generator, validation_steps=8)
```

Tuning the model's hyperparameters

```python
import tensorflow as tf
import keras_tuner as kt  # pip install keras-tuner; older releases were imported as kerastuner

hp = kt.HyperParameters()

def build_model(hp):
    model = tf.keras.models.Sequential()
    # Tune the number of filters in the first convolutional layer
    model.add(tf.keras.layers.Conv2D(hp.Choice('num_filters_layer0', values=[16, 64], default=16),
                                     (3, 3), activation='relu', input_shape=(150, 150, 3)))
    model.add(tf.keras.layers.MaxPooling2D(2, 2))
    # Tune how many extra convolutional layers (1 to 3) to add, and their filter counts
    for i in range(hp.Int("num_conv_layers", 1, 3)):
        model.add(tf.keras.layers.Conv2D(hp.Choice(f'num_filters_layer{i + 1}', values=[16, 64], default=16),
                                         (3, 3), activation='relu'))
        model.add(tf.keras.layers.MaxPooling2D(2, 2))
    model.add(tf.keras.layers.Conv2D(64, (3, 3), activation='relu'))
    model.add(tf.keras.layers.MaxPooling2D(2, 2))
    model.add(tf.keras.layers.Flatten())
    # Tune the number of neurons in the hidden dense layer
    model.add(tf.keras.layers.Dense(hp.Int("hidden_units", 128, 512, step=32), activation='relu'))
    model.add(tf.keras.layers.Dense(1, activation='sigmoid'))
    model.compile(loss="binary_crossentropy", optimizer="adam", metrics=['acc'])
    return model

tuner = kt.Hyperband(
    build_model,
    objective='val_acc',
    max_epochs=15,
    directory='horse_human_params',
    hyperparameters=hp,
    project_name='my_horse_human_project',
)

# Run the search (the tuning progress is printed as it goes)
tuner.search(train_generator, epochs=10, validation_data=validation_generator)
best_hps = tuner.get_best_hyperparameters(1)[0]
print(best_hps.values)

# Build the model from the best hyperparameters found
model = tuner.hypermodel.build(best_hps)
model.summary()
```
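Hyperband's basic building block is successive halving: train many configurations on a small budget, keep the best fraction, give the survivors a larger budget, and repeat until one remains. Hyperband itself runs several such brackets with different starting budgets. The sketch below shows one bracket of successive halving over a search space shaped like build_model's, with factor 3 (also keras_tuner's default for Hyperband). evaluate is a hypothetical stand-in for "train for N epochs and return the validation accuracy", and its scoring formula is invented purely for illustration.

```python
import itertools
import random

# Search space modeled on build_model above
SPACE = {
    "num_filters_layer0": [16, 64],
    "num_conv_layers": [1, 2, 3],
    "hidden_units": list(range(128, 513, 32)),
}

def evaluate(config, epochs):
    """Hypothetical stand-in for training `epochs` epochs and returning val accuracy."""
    quality = config["hidden_units"] / 512 + config["num_conv_layers"] / 3
    return quality * (1 - 0.5 ** epochs)  # more epochs -> closer to the config's ceiling

def successive_halving(configs, min_epochs=1, factor=3):
    epochs = min_epochs
    while len(configs) > 1:
        ranked = sorted(configs, key=lambda c: evaluate(c, epochs), reverse=True)
        configs = ranked[: max(1, len(configs) // factor)]  # keep the top 1/factor
        epochs *= factor  # survivors get a bigger training budget
    return configs[0]

all_configs = [dict(zip(SPACE, values)) for values in itertools.product(*SPACE.values())]
random.seed(0)
candidates = random.sample(all_configs, 27)  # 27 -> 9 -> 3 -> 1 with factor 3
best = successive_halving(candidates)
print(len(all_configs), best)
```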
