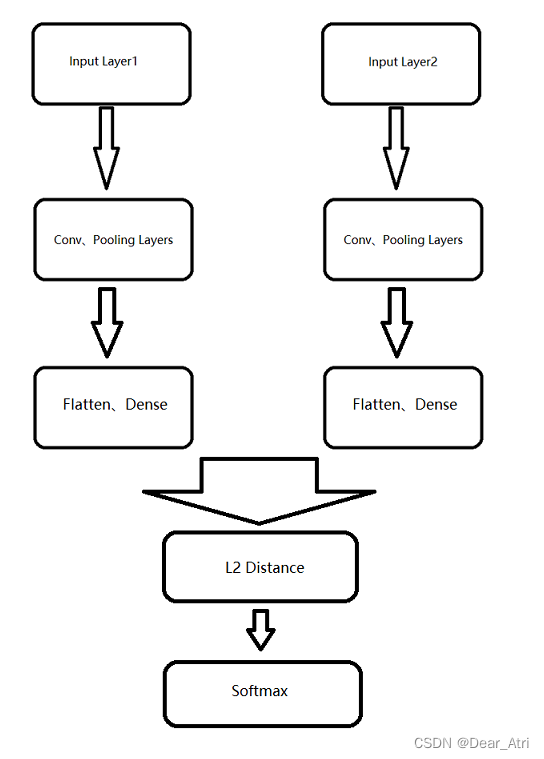

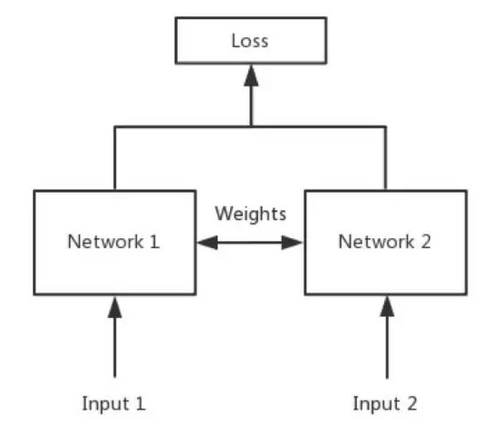

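Basic idea: we need a model that scores how similar two targets are, so that a detected target can be compared against a reference (known) target. I only tested it on a handful of images and the results were mediocre. For RKNN, use as many quantization images as possible.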
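The sketch below shows roughly how such a model turns two images into one score. As far as I can tell from `nets/siamese.py` in the Siamese-pytorch repo, both images go through a shared VGG feature extractor. The absolute difference of the two flattened feature vectors then passes through fully connected layers that output a single logit, and `predict.py` applies a sigmoid to that logit to get the similarity. The sketch replaces the VGG features with short made-up vectors. It also replaces the fully connected stack with a single hypothetical linear layer (`weights`, `bias`). It only illustrates the data flow, not the trained model.

```python
import math


def sigmoid(x: float) -> float:
    """Map a raw logit to a similarity score in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def similarity(emb1, emb2, weights, bias):
    """Toy Siamese head: |e1 - e2| -> one linear layer -> sigmoid.

    emb1/emb2 stand in for the flattened VGG features of the two images;
    weights/bias are hypothetical stand-ins for the trained FC layers.
    """
    if not (len(emb1) == len(emb2) == len(weights)):
        raise ValueError("embedding and weight sizes must match")
    diff = [abs(a - b) for a, b in zip(emb1, emb2)]
    logit = sum(w * d for w, d in zip(weights, diff)) + bias
    return sigmoid(logit)


if __name__ == "__main__":
    w = [-2.0, -2.0, -2.0]  # negative weights: a larger difference lowers the score
    b = 4.0
    print(similarity([0.1, 0.5, 0.9], [0.1, 0.5, 0.9], w, b))  # identical inputs -> sigmoid(4)
    print(similarity([0.1, 0.5, 0.9], [0.9, 0.0, 0.1], w, b))  # different inputs -> lower score
```

Identical inputs give a zero difference vector, so the score depends only on the bias. This is why the sigmoid output of a trained model sits close to 1 for matching pairs.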

Link: https://pan.baidu.com/s/1NFjnCBh5RqJXDxEjl9TzHg?pwd=xev4 Extraction code: xev4

https://github.com/bubbliiiing/Siamese-pytorch.git

1. Testing the images

/usr/bin/python3.8 /home/ubuntu/Siamese-pytorch/predict.py

Loading weights into state dict...

model_data/Omniglot_vgg.pth model loaded.

Configurations:

----------------------------------------------------------------------

| keys | values|

----------------------------------------------------------------------

| model_path | model_data/Omniglot_vgg.pth|

| input_shape | [105, 105]|

| letterbox_image | False|

| cuda | True|

----------------------------------------------------------------------

Input image_1 filename:/home/ubuntu/Siamese-pytorch/img/0.jpeg

Input image_2 filename:/home/ubuntu/Siamese-pytorch/img/1.jpeg

Test result

Converting to an ONNX model

import matplotlib.pyplot as plt

import numpy as np

import onnxruntime

import torch

import cv2

from PIL import Image

from nets.siamese import Siamese as siamese

from torch.autograd import Variable

from onnxruntime.datasets import get_example

from PIL import Imagefrom utils.utils_aug import center_crop, resizedevice = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

def letterbox_image(image, size, letterbox_image):w, h = sizeiw, ih = image.sizeif letterbox_image:'''resize image with unchanged aspect ratio using padding'''scale = min(w/iw, h/ih)nw = int(iw*scale)nh = int(ih*scale)image = image.resize((nw,nh), Image.BICUBIC)new_image = Image.new('RGB', size, (128,128,128))new_image.paste(image, ((w-nw)//2, (h-nh)//2))else:if h == w:new_image = resize(image, h)else:new_image = resize(image, [h ,w])new_image = center_crop(new_image, [h ,w])return new_imagedef cvtColor(image):if len(np.shape(image)) == 3 and np.shape(image)[2] == 3:return imageelse:image = image.convert('RGB')return image

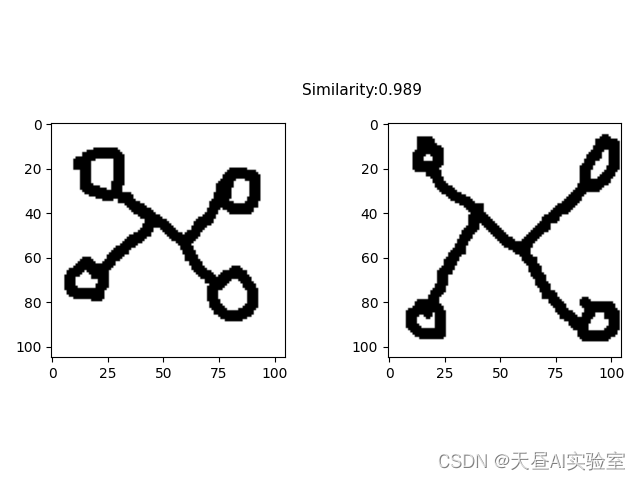

def preprocess_input(x):x /= 255.0return xif __name__ == "__main__":image_1=Image.open("/home/ubuntu/Siamese-pytorch/img/0.jpeg")image_2 = Image.open("/home/ubuntu/Siamese-pytorch/img/1.jpeg")image_1_ = cvtColor(image_1)image_2_ = cvtColor(image_2)# ---------------------------------------------------## 对输入图像进行不失真的resize# ---------------------------------------------------#image_1 = letterbox_image(image_1_, [105, 105],False)image_2 = letterbox_image(image_2_, [105, 105],False)# ---------------------------------------------------------## 归一化+添加上batch_size维度# ---------------------------------------------------------#photo_1 = preprocess_input(np.array(image_1, np.float32))photo_2 = preprocess_input(np.array(image_2, np.float32))photo_1 = torch.from_numpy(np.expand_dims(np.transpose(photo_1, (2, 0, 1)), 0)).type(torch.FloatTensor).to(device)photo_2 = torch.from_numpy(np.expand_dims(np.transpose(photo_2, (2, 0, 1)), 0)).type(torch.FloatTensor).to(device)print('Loading weights into state dict...')model = siamese([105, 105])model.load_state_dict(torch.load("/home/ubuntu/Siamese-pytorch/model_data/Omniglot_vgg.pth", map_location=device))net = model.eval()net = net.cuda()dummy_input = [photo_1, photo_2]input_names = ["in1", "in2"]output_names = ["output"]torch.onnx.export(net,dummy_input,"/home/ubuntu/Siamese-pytorch/Siamese.onnx",verbose=True,input_names=input_names,output_names=output_names,keep_initializers_as_inputs=False,opset_version=12,)example_model = get_example("/home/ubuntu/Siamese-pytorch/Siamese.onnx")session = onnxruntime.InferenceSession(example_model)# get the name of the first input of the modelinput_name0 = session.get_inputs()[0].nameinput_name1 = session.get_inputs()[1].name# print('onnx Input Name:', input_name)output = session.run([], {input_name0: dummy_input[0].data.cpu().numpy(),input_name1: dummy_input[1].data.cpu().numpy()})output = torch.nn.Sigmoid()(torch.Tensor(output))#output = 
torch.nn.Sigmoid()(output)score=output[0].tolist()[0][0]plt.subplot(1, 2, 1)plt.imshow(np.array(image_1_))plt.subplot(1, 2, 2)plt.imshow(np.array(image_2_))plt.text(-12, -12, 'Similarity:%.3f' %score, ha='center', va='bottom', fontsize=11)plt.show()

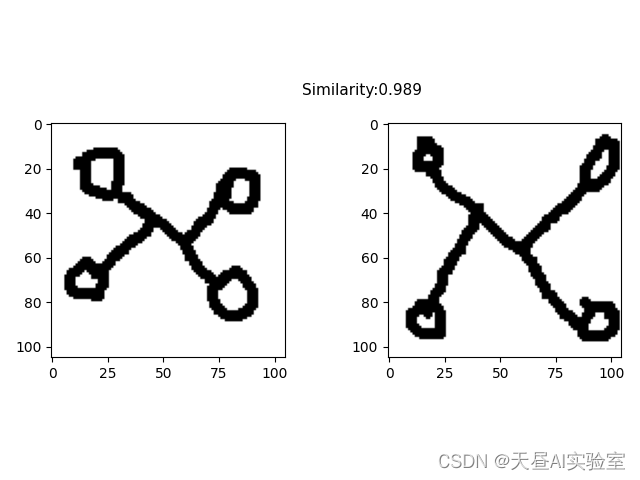

Test result
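The C++ ports in the following sections reimplement the `letterbox_image(..., False)` path by hand, so it helps to pin down its geometry first. The sketch assumes that `resize` and `center_crop` in `utils/utils_aug.py` behave like the classic torchvision helpers. Under that assumption, the shorter side is scaled to `size` with `int()` truncation, and the crop offset is `int(round((w - size) / 2))`. `resize_shorter_side` and `center_crop_box` are hypothetical helper names used only for illustration.

```python
def resize_shorter_side(w: int, h: int, size: int):
    """(width, height) after scaling the shorter side to `size` (torchvision-style, int truncation)."""
    if (w <= h and w == size) or (h <= w and h == size):
        return w, h  # no resize needed, but a center crop may still be needed
    if w < h:
        return size, int(size * h / w)
    return int(size * w / h), size


def center_crop_box(w: int, h: int, size: int):
    """(left, top, right, bottom) of a size x size center crop, torchvision-style rounding."""
    left = int(round((w - size) / 2.0))
    top = int(round((h - size) / 2.0))
    return left, top, left + size, top + size


if __name__ == "__main__":
    for w, h in [(200, 100), (105, 300), (640, 480)]:
        rw, rh = resize_shorter_side(w, h, 105)
        print((w, h), "->", (rw, rh), "crop", center_crop_box(rw, rh, 105))
```

One subtlety concerns rounding. Python 3's `round()` rounds halves to even, so an offset of 52.5 pixels becomes 52. C++ `std::round` rounds halves away from zero and gives 53. A C++ crop can therefore be shifted by one pixel relative to PyTorch, which is one small source of score differences between backends.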

2. Converting to an ncnn model

ubuntu@ubuntu:~/ncnn/build/install/bin$ ./onnx2ncnn /home/ubuntu/Siamese-pytorch/Siamese.onnx /home/ubuntu/Siamese-pytorch/Siamese.param /home/ubuntu/Siamese-pytorch/Siamese.bin

CMakeLists.txt:

cmake_minimum_required(VERSION 3.16)

project(siamsese)

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -fopenmp ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fopenmp ")

set(CMAKE_CXX_STANDARD 11)

include_directories(${CMAKE_SOURCE_DIR}/include)

include_directories(${CMAKE_SOURCE_DIR}/include/ncnn)

include_directories(${CMAKE_SOURCE_DIR})

find_package(OpenCV REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})

# import the prebuilt static ncnn library

add_library(libncnn STATIC IMPORTED)

set_target_properties(libncnn PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libncnn.a)

add_executable(siamsese main.cpp)

target_link_libraries(siamsese ${OpenCV_LIBS} libncnn)

main.cpp:

#include <algorithm>
#include <cmath>
#include <cstdio>

#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>

#include "net.h"

// Crop the w x h region whose top-left corner is (x, y), clamped to the image.
// .clone() makes the result continuous, which from_pixels() relies on (it assumes stride = cols * 3).
void crop_img(const cv::Mat &img, cv::Mat &dst, int x, int y, int w, int h) {
    int crop_x1 = std::max(0, x);
    int crop_y1 = std::max(0, y);
    int crop_x2 = std::min(img.cols, x + w);
    int crop_y2 = std::min(img.rows, y + h);
    // cv::Mat::operator() takes (rowRange, colRange)
    dst = img(cv::Range(crop_y1, crop_y2), cv::Range(crop_x1, crop_x2)).clone();
}

// C++ port of the Python letterbox_image(..., letterbox_image=False):
// resize the shorter side to `size`, then center-crop to size x size.
void letterbox_image(const cv::Mat &image, cv::Mat &image_dst, int size) {
    int w = image.cols;
    int h = image.rows;
    cv::Mat temp;
    if (std::min(w, h) == size) {
        temp = image;  // shorter side already matches, but the crop is still needed
    } else if (w < h) {
        cv::resize(image, temp, cv::Size(size, int(size * h / (float) w)), 0, 0, cv::INTER_LINEAR);
    } else {
        cv::resize(image, temp, cv::Size(int(size * w / (float) h), size), 0, 0, cv::INTER_LINEAR);
    }
    int x = int(std::round((temp.cols - size) / 2.0));
    int y = int(std::round((temp.rows - size) / 2.0));
    crop_img(temp, image_dst, x, y, size, size);
}

void pretty_print(const ncnn::Mat &m) {
    for (int q = 0; q < m.c; q++) {
        const float *ptr = m.channel(q);
        for (int z = 0; z < m.d; z++) {
            for (int y = 0; y < m.h; y++) {
                for (int x = 0; x < m.w; x++) {
                    printf("%f ", ptr[x]);
                }
                ptr += m.w;
                printf("\n");
            }
            printf("\n");
        }
        printf("------------------------\n");
    }
}

static inline float sigmoid(float x) {
    return 1.f / (1.f + std::exp(-x));
}

int main() {
    cv::Mat image_1 = cv::imread("/home/ubuntu/Siamese-pytorch/img/Angelic_01.png");
    cv::Mat image_2 = cv::imread("/home/ubuntu/Siamese-pytorch/img/Angelic_02.png");
    if (image_1.empty() || image_2.empty()) {
        fprintf(stderr, "failed to read the input images\n");
        return -1;
    }
    int target_size = 105;
    cv::Mat image_dst_1, image_dst_2;
    letterbox_image(image_1, image_dst_1, target_size);
    letterbox_image(image_2, image_dst_2, target_size);
    cv::imwrite("a.jpg", image_dst_1);  // dump the preprocessed inputs for debugging
    cv::imwrite("b.jpg", image_dst_2);

    // BGR -> RGB (the model was trained on RGB), then scale to [0, 1]: (x - 0) * (1 / 255)
    ncnn::Mat in_1 = ncnn::Mat::from_pixels(image_dst_1.data, ncnn::Mat::PIXEL_BGR2RGB, image_dst_1.cols, image_dst_1.rows);
    ncnn::Mat in_2 = ncnn::Mat::from_pixels(image_dst_2.data, ncnn::Mat::PIXEL_BGR2RGB, image_dst_2.cols, image_dst_2.rows);
    const float mean[3] = {0.f, 0.f, 0.f};
    const float norm[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};
    in_1.substract_mean_normalize(mean, norm);
    in_2.substract_mean_normalize(mean, norm);
    // pretty_print(in_1);

    ncnn::Net net;
    if (net.load_param("/home/ubuntu/Siamese-pytorch/Siamese.param") != 0 ||
        net.load_model("/home/ubuntu/Siamese-pytorch/Siamese.bin") != 0) {
        fprintf(stderr, "failed to load Siamese.param / Siamese.bin\n");
        return -1;
    }
    ncnn::Extractor ex = net.create_extractor();
    ex.set_light_mode(true);
    ex.set_num_threads(4);
    // blob names match input_names / output_names passed to torch.onnx.export
    ex.input("in1", in_1);
    ex.input("in2", in_2);
    ncnn::Mat out;
    ex.extract("output", out);
    out = out.reshape(out.h * out.w * out.c);
    fprintf(stderr, "output shape: %d %d %d %d\n", out.dims, out.h, out.w, out.c);
    printf("cls_scores=%f\n", out[0]);  // raw logit
    float score = sigmoid(out[0]);      // similarity in (0, 1)
    printf("cls_scores=%f\n", score);
    return 0;
}
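The sketch below shows in plain Python what `from_pixels(..., PIXEL_BGR2RGB, ...)` followed by `substract_mean_normalize(mean, norm)` does to the pixels. ncnn computes `(x - mean) * norm` per channel, so `mean = 0` and `norm = 1/255` reproduce `preprocess_input()` (`x /= 255.0`) from the PyTorch script. The function name and list-based layout are illustrative. The sketch assumes a continuous buffer of `w*h*3` bytes, which is what the 4-argument `from_pixels` overload expects, since it uses `w * 3` as the row stride.

```python
def bgr_hwc_to_rgb_chw(pixels, w, h, mean, norm):
    """Emulate from_pixels(PIXEL_BGR2RGB) + substract_mean_normalize.

    pixels: flat list of uint8 values in OpenCV's interleaved BGR order (H x W x C).
    Returns three planes (R, G, B), each a flat list of h*w floats: (x - mean[c]) * norm[c].
    """
    if len(pixels) != w * h * 3:
        raise ValueError("expected w*h*3 bytes (a continuous cv::Mat)")
    planes = [[0.0] * (w * h) for _ in range(3)]
    for i in range(w * h):
        b, g, r = pixels[3 * i: 3 * i + 3]
        for c, v in enumerate((r, g, b)):  # BGR -> RGB channel swap
            planes[c][i] = (v - mean[c]) * norm[c]
    return planes


if __name__ == "__main__":
    bgr = [10, 20, 30, 255, 0, 128]  # a 2x1 image: two BGR pixels
    r, g, b = bgr_hwc_to_rgb_chw(bgr, 2, 1, [0, 0, 0], [1 / 255] * 3)
    print(r, g, b)
```

Passing a non-continuous ROI (for example a column crop that was not cloned) to the 4-argument `from_pixels` would read the wrong rows. For that reason, the crop helper above clones its result.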

Test result

/home/ubuntu/demo/cmake-build-debug/siamsese

cls_scores=4.522670

cls_scores=0.989257

output shape: 1 1 1 1

Process finished with exit code 0
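A quick way to check the C++ post-processing is to apply the sigmoid to the printed logit by hand; it should reproduce the second `cls_scores` line. The sketch also adds the inverse (logit) function, in case you want to threshold the raw network output directly. A similarity threshold `p` corresponds to a logit threshold `ln(p / (1 - p))`, so 0.5 maps to 0. The helper names are illustrative.

```python
import math


def sigmoid(x: float) -> float:
    """Logit -> similarity score in (0, 1), same formula as the C++ sigmoid()."""
    return 1.0 / (1.0 + math.exp(-x))


def logit(p: float) -> float:
    """Inverse of sigmoid: similarity threshold -> raw-logit threshold."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must be in (0, 1)")
    return math.log(p / (1.0 - p))


if __name__ == "__main__":
    raw = 4.522670  # first cls_scores line printed by the ncnn demo
    print(f"score={sigmoid(raw):.6f}")  # compare with the second cls_scores line
    print(f"logit threshold for a 0.9 similarity: {logit(0.9):.4f}")
```

Comparing logits against a precomputed threshold avoids calling `exp` on the device, and it gives the same decision because the sigmoid is monotonic.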

3. Converting to an MNN model

ubuntu@ubuntu:~/MNN/build$ ./MNNConvert -f ONNX --modelFile /home/ubuntu/Siamese-pytorch/Siamese.onnx --MNNModel /home/ubuntu/Siamese-pytorch/Siamese.mnn --bizCode MNN

Start to Convert Other Model Format To MNN Model...

[14:37:19] /home/ubuntu/MNN/tools/converter/source/onnx/onnxConverter.cpp:40: ONNX Model ir version: 6

Start to Optimize the MNN Net...

The Convolution use shared weight, may increase the model size

inputTensors : [ in1, in2, ]

outputTensors: [ output, ]

Converted Success!

CMakeLists.txt:

cmake_minimum_required(VERSION 3.10)

project(untiled2)

set(CMAKE_CXX_STANDARD 11)

include_directories(${CMAKE_SOURCE_DIR}/include)

include_directories(${CMAKE_SOURCE_DIR}/include/MNN)

find_package(OpenCV REQUIRED)

add_library(libmnn SHARED IMPORTED)

set_target_properties(libmnn PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libMNN.so)

add_executable(untiled2 main.cpp)

target_link_libraries(untiled2 ${OpenCV_LIBS} libmnn)

Test code (main.cpp):

#include <algorithm>
#include <cmath>
#include <iostream>
#include <memory>
#include <vector>

#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc.hpp>

#include <MNN/Interpreter.hpp>
#include <MNN/ImageProcess.hpp>

// Crop the w x h region whose top-left corner is (x, y), clamped to the image.
// .clone() returns a continuous buffer.
void crop_img(const cv::Mat &img, cv::Mat &dst, int x, int y, int w, int h) {
    int crop_x1 = std::max(0, x);
    int crop_y1 = std::max(0, y);
    int crop_x2 = std::min(img.cols, x + w);
    int crop_y2 = std::min(img.rows, y + h);
    // cv::Mat::operator() takes (rowRange, colRange)
    dst = img(cv::Range(crop_y1, crop_y2), cv::Range(crop_x1, crop_x2)).clone();
}

// C++ port of the Python letterbox_image(..., letterbox_image=False):
// resize the shorter side to `size`, then center-crop to size x size.
void letterbox_image(const cv::Mat &image, cv::Mat &image_dst, int size) {
    int w = image.cols;
    int h = image.rows;
    cv::Mat temp;
    if (std::min(w, h) == size) {
        temp = image;  // shorter side already matches, but the crop is still needed
    } else if (w < h) {
        cv::resize(image, temp, cv::Size(size, int(size * h / (float) w)), 0, 0, cv::INTER_LINEAR);
    } else {
        cv::resize(image, temp, cv::Size(int(size * w / (float) h), size), 0, 0, cv::INTER_LINEAR);
    }
    int x = int(std::round((temp.cols - size) / 2.0));
    int y = int(std::round((temp.rows - size) / 2.0));
    crop_img(temp, image_dst, x, y, size, size);
}

inline float sigmoid(float x) {
    return 1.f / (1.f + std::exp(-x));
}

int main(int argc, char **argv) {
    cv::Mat image_1 = cv::imread("/home/ubuntu/Siamese-pytorch/img/Angelic_01.png");
    cv::Mat image_2 = cv::imread("/home/ubuntu/Siamese-pytorch/img/Angelic_02.png");
    if (image_1.empty() || image_2.empty()) {
        std::cerr << "failed to read the input images" << std::endl;
        return -1;
    }
    int target_size = 105;
    cv::Mat image_dst_1, image_dst_2;
    letterbox_image(image_1, image_dst_1, target_size);
    letterbox_image(image_2, image_dst_2, target_size);

    // MNN inference
    std::shared_ptr<MNN::Interpreter> mnnNet(
            MNN::Interpreter::createFromFile("/home/ubuntu/Siamese-pytorch/Siamese.mnn"));
    if (!mnnNet) {
        std::cerr << "failed to load Siamese.mnn" << std::endl;
        return -1;
    }
    MNN::ScheduleConfig netConfig;
    netConfig.type = MNN_FORWARD_CPU;
    netConfig.numThread = 4;
    auto session = mnnNet->createSession(netConfig);
    auto input_1 = mnnNet->getSessionInput(session, "in1");
    auto input_2 = mnnNet->getSessionInput(session, "in2");
    mnnNet->resizeTensor(input_1, {1, 3, target_size, target_size});
    mnnNet->resizeTensor(input_2, {1, 3, target_size, target_size});
    mnnNet->resizeSession(session);

    // ImageProcess computes (x - mean) * normal: BGR -> RGB and scale to [0, 1], like preprocess_input()
    const float mean_vals[3] = {0.f, 0.f, 0.f};
    const float norm_255[3] = {1.f / 255, 1.f / 255, 1.f / 255};
    std::shared_ptr<MNN::CV::ImageProcess> pretreat(
            MNN::CV::ImageProcess::create(MNN::CV::BGR, MNN::CV::RGB, mean_vals, 3, norm_255, 3));
    // Feed the letterboxed 105x105 images (image_dst_*), not the original images
    pretreat->convert(image_dst_1.data, target_size, target_size, image_dst_1.step[0], input_1);
    pretreat->convert(image_dst_2.data, target_size, target_size, image_dst_2.step[0], input_2);
    mnnNet->runSession(session);

    auto output_tensor = mnnNet->getSessionOutput(session, "output");
    MNN::Tensor output_host(output_tensor, output_tensor->getDimensionType());
    output_tensor->copyToHostTensor(&output_host);
    float value = output_host.host<float>()[0];  // raw logit
    std::cout << value << std::endl;
    float score = sigmoid(value);                // similarity in (0, 1)
    std::cout << score << std::endl;

    mnnNet->releaseSession(session);
    mnnNet->releaseModel();
    return 0;
}

4. Converting to an RKNN model

from rknn.api import RKNN

ONNX_MODEL = '/home/ubuntu/Siamese-pytorch/Siamese.onnx'
RKNN_MODEL = '/home/ubuntu/Siamese-pytorch/Siamese.rknn'

if __name__ == '__main__':
    # Create RKNN object
    rknn = RKNN(verbose=True)

    # pre-process config: one mean/std/reorder entry per input (in1, in2);
    # (x - 0) / 255 reproduces preprocess_input() from the PyTorch script
    print('--> config model')
    rknn.config(mean_values=[[0, 0, 0], [0, 0, 0]],
                std_values=[[255, 255, 255], [255, 255, 255]],
                reorder_channel='0 1 2#0 1 2',
                target_platform=['rk1808', 'rk3399pro'],
                quantized_dtype='asymmetric_affine-u8',
                batch_size=1, optimization_level=3, output_optimize=1)
    print('done')

    print('--> Loading model')
    ret = rknn.load_onnx(model=ONNX_MODEL)
    if ret != 0:
        print('Load model failed!')
        exit(ret)
    print('done')

    # Build model (the quantization images are listed in dataset.txt)
    print('--> Building model')
    ret = rknn.build(do_quantization=True, dataset='dataset.txt')  # , pre_compile=True
    if ret != 0:
        print('Build Siamese failed!')
        exit(ret)
    print('done')

    # Export rknn model
    print('--> Export RKNN model')
    ret = rknn.export_rknn(RKNN_MODEL)
    if ret != 0:
        print('Export Siamese.rknn failed!')
        exit(ret)
    print('done')
    rknn.release()

Write dataset.txt like this: one image pair per line, one file per input in the order in1 in2. The more images, the better:

Angelic_01.png Angelic_02.png

img.png img.png

0.jpeg 1.jpeg

Atem_01.png Atl_01.png

00.jpeg 11.png

CMakeLists.txt:

cmake_minimum_required(VERSION 3.16)

project(untitled10)

set(CMAKE_CXX_FLAGS "-std=c++11")

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -fopenmp ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fopenmp")

include_directories(${CMAKE_SOURCE_DIR})

include_directories(${CMAKE_SOURCE_DIR}/include)

find_package(OpenCV REQUIRED)

#message(STATUS ${OpenCV_INCLUDE_DIRS})

# add the OpenCV header directories

include_directories(${OpenCV_INCLUDE_DIRS})

# import the RKNN runtime library (OpenCV is linked in target_link_libraries below)

add_library(librknn_api SHARED IMPORTED)

set_target_properties(librknn_api PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib64/librknn_api.so)

add_executable(untitled10 main.cpp)

target_link_libraries(untitled10 ${OpenCV_LIBS} librknn_api)

Source code (main.cpp):

#include <algorithm>
#include <cmath>
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <stdint.h>
#include <vector>

#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>

#include "rknn_api.h"

// Crop the w x h region whose top-left corner is (x, y), clamped to the image.
// .clone() returns a continuous buffer, which is what rknn_inputs_set() reads.
void crop_img(const cv::Mat &img, cv::Mat &dst, int x, int y, int w, int h) {
    int crop_x1 = std::max(0, x);
    int crop_y1 = std::max(0, y);
    int crop_x2 = std::min(img.cols, x + w);
    int crop_y2 = std::min(img.rows, y + h);
    // cv::Mat::operator() takes (rowRange, colRange)
    dst = img(cv::Range(crop_y1, crop_y2), cv::Range(crop_x1, crop_x2)).clone();
}

// C++ port of the Python letterbox_image(..., letterbox_image=False):
// resize the shorter side to `size`, then center-crop to size x size.
void letterbox_image(const cv::Mat &image, cv::Mat &image_dst, int size) {
    int w = image.cols;
    int h = image.rows;
    cv::Mat temp;
    if (std::min(w, h) == size) {
        temp = image;  // shorter side already matches, but the crop is still needed
    } else if (w < h) {
        cv::resize(image, temp, cv::Size(size, int(size * h / (float) w)), 0, 0, cv::INTER_LINEAR);
    } else {
        cv::resize(image, temp, cv::Size(int(size * w / (float) h), size), 0, 0, cv::INTER_LINEAR);
    }
    int x = int(std::round((temp.cols - size) / 2.0));
    int y = int(std::round((temp.rows - size) / 2.0));
    crop_img(temp, image_dst, x, y, size, size);
}

void printRKNNTensor(rknn_tensor_attr *attr) {
    // dims are printed in reverse order (RKNN API 1.x stores them innermost-first)
    printf("index=%d name=%s n_dims=%d dims=[%d %d %d %d] n_elems=%d size=%d "
           "fmt=%d type=%d qnt_type=%d fl=%d zp=%d scale=%f\n",
           attr->index, attr->name, attr->n_dims, attr->dims[3], attr->dims[2],
           attr->dims[1], attr->dims[0], attr->n_elems, attr->size, attr->fmt, attr->type,
           attr->qnt_type, attr->fl, attr->zp, attr->scale);
}

static inline float sigmoid(float x) {
    return 1.f / (1.f + std::exp(-x));
}

int main() {
    cv::Mat image_1 = cv::imread("../Angelic_01.jpeg");
    cv::Mat image_2 = cv::imread("../Angelic_02.jpeg");
    if (image_1.empty() || image_2.empty()) {
        printf("failed to read the input images\n");
        return -1;
    }
    int target_size = 105;
    cv::Mat image_dst_1, image_dst_2;
    letterbox_image(image_1, image_dst_1, target_size);
    letterbox_image(image_2, image_dst_2, target_size);
    // OpenCV loads BGR; the model was trained on RGB (the ncnn/MNN versions swap channels too)
    cv::cvtColor(image_dst_1, image_dst_1, cv::COLOR_BGR2RGB);
    cv::cvtColor(image_dst_2, image_dst_2, cv::COLOR_BGR2RGB);

    const char *model_path = "../Siamese.rknn";
    // Load model
    FILE *fp = fopen(model_path, "rb");
    if (fp == NULL) {
        printf("fopen %s fail!\n", model_path);
        return -1;
    }
    fseek(fp, 0, SEEK_END);
    long model_len = ftell(fp);
    void *model = malloc(model_len);
    fseek(fp, 0, SEEK_SET);
    if (model_len != (long) fread(model, 1, model_len, fp)) {
        printf("fread %s fail!\n", model_path);
        fclose(fp);
        free(model);
        return -1;
    }
    fclose(fp);

    rknn_context ctx = 0;
    int ret = rknn_init(&ctx, model, model_len, 0);
    if (ret < 0) {
        printf("rknn_init fail! ret=%d\n", ret);
        free(model);
        return -1;
    }

    /* Query sdk version */
    rknn_sdk_version version;
    ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &version, sizeof(rknn_sdk_version));
    if (ret < 0) {
        printf("rknn_query(SDK_VERSION) error ret=%d\n", ret);
        return -1;
    }
    printf("sdk version: %s driver version: %s\n", version.api_version, version.drv_version);

    /* Get input, output attr */
    rknn_input_output_num io_num;
    ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));
    if (ret < 0) {
        printf("rknn_query(IN_OUT_NUM) error ret=%d\n", ret);
        return -1;
    }
    printf("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);

    std::vector<rknn_tensor_attr> input_attrs(io_num.n_input);  // value-initialized (zeroed)
    for (uint32_t i = 0; i < io_num.n_input; i++) {
        input_attrs[i].index = i;
        ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &input_attrs[i], sizeof(rknn_tensor_attr));
        if (ret < 0) {
            printf("rknn_query(INPUT_ATTR) error ret=%d\n", ret);
            return -1;
        }
        printRKNNTensor(&input_attrs[i]);
    }
    std::vector<rknn_tensor_attr> output_attrs(io_num.n_output);
    for (uint32_t i = 0; i < io_num.n_output; i++) {
        output_attrs[i].index = i;
        ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &output_attrs[i], sizeof(rknn_tensor_attr));
        if (ret < 0) {
            printf("rknn_query(OUTPUT_ATTR) error ret=%d\n", ret);
            return -1;
        }
        printRKNNTensor(&output_attrs[i]);
    }

    int input_channel = 3;
    int input_width = 0;
    int input_height = 0;
    if (input_attrs[0].fmt == RKNN_TENSOR_NCHW) {
        printf("model is NCHW input fmt\n");
        input_width = input_attrs[0].dims[0];
        input_height = input_attrs[0].dims[1];
    } else {
        printf("model is NHWC input fmt\n");
        input_width = input_attrs[0].dims[1];
        input_height = input_attrs[0].dims[2];
    }
    printf("input_width=%d input_height=%d\n", input_width, input_height);
    printf("model input height=%d, width=%d, channel=%d\n", input_height, input_width, input_channel);

    /* Init input tensors: cv::Mat data is interleaved (H x W x C), so the buffer fmt is NHWC;
       the runtime converts it to the model's own layout */
    rknn_input inputs[2];
    memset(inputs, 0, sizeof(inputs));
    inputs[0].index = 0;
    inputs[0].buf = image_dst_1.data;
    inputs[0].type = RKNN_TENSOR_UINT8;
    inputs[0].size = input_width * input_height * input_channel;
    inputs[0].fmt = RKNN_TENSOR_NHWC;
    inputs[0].pass_through = 0;
    inputs[1].index = 1;
    inputs[1].buf = image_dst_2.data;
    inputs[1].type = RKNN_TENSOR_UINT8;
    inputs[1].size = input_width * input_height * input_channel;
    inputs[1].fmt = RKNN_TENSOR_NHWC;
    inputs[1].pass_through = 0;

    /* Init output tensors */
    std::vector<rknn_output> outputs(io_num.n_output);  // value-initialized (zeroed)
    for (auto &o : outputs) {
        o.want_float = 1;  // let the runtime dequantize the u8 output to float
    }

    printf("img.cols: %d, img.rows: %d\n", image_dst_1.cols, image_dst_1.rows);
    ret = rknn_inputs_set(ctx, io_num.n_input, inputs);
    if (ret < 0) {
        printf("rknn_inputs_set error ret=%d\n", ret);
        return -1;
    }
    ret = rknn_run(ctx, NULL);
    if (ret < 0) {
        printf("rknn_run error ret=%d\n", ret);
        return -1;
    }
    ret = rknn_outputs_get(ctx, io_num.n_output, outputs.data(), NULL);
    if (ret < 0) {
        printf("rknn_outputs_get error ret=%d\n", ret);
        return -1;
    }

    // The Gemm output has n_dims=2, so dims[2] and dims[3] are unused and stay 0
    int shape_b = 0, shape_c = 0, shape_w = 0, shape_h = 0;
    for (uint32_t i = 0; i < io_num.n_output; ++i) {
        shape_b = output_attrs[i].dims[3];
        shape_c = output_attrs[i].dims[2];
        shape_h = output_attrs[i].dims[1];
        shape_w = output_attrs[i].dims[0];
    }
    printf("batch=%d channel=%d width=%d height= %d\n", shape_b, shape_c, shape_w, shape_h);

    float *output = (float *) outputs[0].buf;
    printf("cls_scores=%f\n", output[0]);  // raw logit
    float score = sigmoid(output[0]);      // similarity in (0, 1)
    printf("cls_scores=%f\n", score);

    rknn_outputs_release(ctx, io_num.n_output, outputs.data());
    rknn_destroy(ctx);
    free(model);
    return 0;
}
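The index juggling in `printRKNNTensor` and in the width/height query comes from how RKNN API 1.x (the API version in the log that follows) fills `rknn_tensor_attr.dims`. As I understand it, the array is stored innermost-first, so an NCHW input of shape `[1, 3, 105, 105]` arrives as `dims = [105, 105, 3, 1]`. The Python sketch mirrors that convention. Treat the layout comments as an assumption to verify against your own `rknn_query` output; the helper names are hypothetical. The same convention explains the `batch=0 channel=0` line in the log. The output tensor has only `n_dims=2`, so `dims[2]` and `dims[3]` are simply unused zeros.

```python
def rknn1_shape(dims, n_dims):
    """Reverse the first n_dims entries of attr.dims (assumed innermost-first) into N..W order."""
    return list(reversed(dims[:n_dims]))


def rknn1_input_hw(dims, fmt):
    """(height, width) of an input tensor, mirroring the C++ NCHW/NHWC branches."""
    if fmt == "NCHW":  # assumed dims = [W, H, C, N]
        return dims[1], dims[0]
    if fmt == "NHWC":  # assumed dims = [C, W, H, N]
        return dims[2], dims[1]
    raise ValueError(f"unsupported fmt: {fmt}")


if __name__ == "__main__":
    print(rknn1_shape([105, 105, 3, 1], 4))  # input in1 as reported in the log
    print(rknn1_shape([1, 1, 0, 0], 2))      # the 2-D Gemm output
    print(rknn1_input_hw([105, 105, 3, 1], "NCHW"))
```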

Test result

/tmp/tmp.E4ciUSTTsf/cmake-build-debug/untitled10

D RKNNAPI: ==============================================

D RKNNAPI: RKNN VERSION:

D RKNNAPI: API: 1.6.1 (00c4d8b build: 2021-03-15 16:31:37)

D RKNNAPI: DRV: 1.7.1 (0cfd4a1 build: 2021-12-10 09:43:11)

D RKNNAPI: ==============================================

sdk version: 1.6.1 (00c4d8b build: 2021-03-15 16:31:37) driver version: 1.7.1 (0cfd4a1 build: 2021-12-10 09:43:11)

model input num: 2, output num: 1

index=0 name=in1_69 n_dims=4 dims=[1 3 105 105] n_elems=33075 size=33075 fmt=0 type=3 qnt_type=2 fl=0 zp=0 scale=0.003922

index=1 name=in2_70 n_dims=4 dims=[1 3 105 105] n_elems=33075 size=33075 fmt=0 type=3 qnt_type=2 fl=0 zp=0 scale=0.003922

index=0 name=Gemm_Gemm_67/out0_0 n_dims=2 dims=[0 0 1 1] n_elems=1 size=1 fmt=0 type=3 qnt_type=2 fl=0 zp=0 scale=0.043770

model is NCHW input fmt

input_width=105 input_height=105

model input height=105, width=105, channel=3

img.cols: 105, img.rows: 105

batch=0 channel=0 width=1 height= 1

cls_scores=11.161281

cls_scores=0.999986

Process finished with exit code 0
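Two numbers in this log deserve a closer look. The input `scale=0.003922` is 1/255, which is consistent with `std_values=255` in `rknn.config`. The output has `scale=0.043770` and `zp=0`, so the largest logit a u8 output can represent is 255 × 0.04377 ≈ 11.1613. The printed logit 11.161281 matches that value to the printed precision. This suggests the output is saturated at the top of the u8 range rather than being a meaningful 11.16. The sketch below assumes that RKNN's `asymmetric_affine-u8` uses the standard affine mapping `x = (q - zp) * scale`; the `zp`/`scale` fields in the log point that way. The function names are hypothetical.

```python
def quantize_u8(x: float, zp: int, scale: float) -> int:
    """Asymmetric affine u8 (assumed): q = clip(round(x / scale) + zp, 0, 255)."""
    return max(0, min(255, int(round(x / scale)) + zp))


def dequantize_u8(q: int, zp: int, scale: float) -> float:
    """Inverse mapping: x = (q - zp) * scale."""
    return (q - zp) * scale


if __name__ == "__main__":
    out_zp, out_scale = 0, 0.043770  # output tensor attributes from the log
    print("largest representable logit:", dequantize_u8(255, out_zp, out_scale))
    for raw in (4.52267, 11.161281, 20.0):
        q = quantize_u8(raw, out_zp, out_scale)
        print(raw, "->", q, "->", dequantize_u8(q, out_zp, out_scale))
```

With this mapping, every logit above about 11.16 collapses to the same code 255. If that is what is happening here, scores near 1.0 stop being comparable between image pairs. A larger and more varied set of quantization images in `dataset.txt`, as recommended at the top of this post, is the first thing to try.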

The rest is a matter of training the model on data from your own scenario.