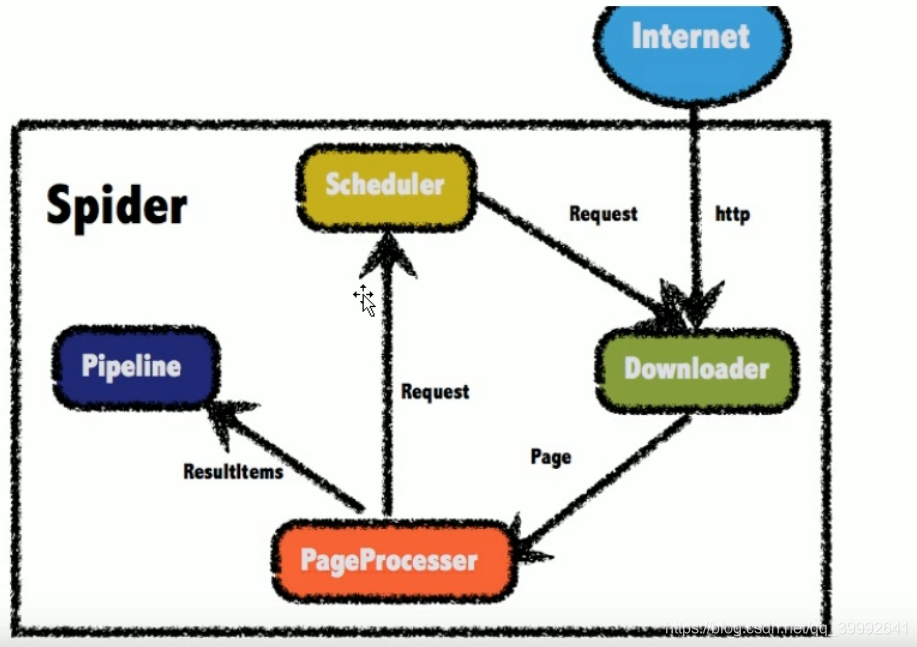

WebMagic框架

webmagic结构分为Downloader,pageProcessor,Scheduler,pipeline四大组件 并由splider将他们组织起来 这四大组件对应着爬虫生命周期中的下载 处理 管理 和持久化等功能,

依赖

依赖

<dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-data-jpa</artifactId></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-web</artifactId></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-web-services</artifactId></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-devtools</artifactId><scope>runtime</scope><optional>true</optional></dependency><dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId><scope>runtime</scope></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-test</artifactId><scope>test</scope></dependency><dependency><groupId>org.apache.commons</groupId><artifactId>commons-lang3</artifactId><version>3.7</version></dependency><dependency><groupId>us.codecraft</groupId><artifactId>webmagic-core</artifactId><version>0.7.3</version></dependency><!-- https://mvnrepository.com/artifact/us.codecraft/webmagic-extension --><dependency><groupId>us.codecraft</groupId><artifactId>webmagic-extension</artifactId><version>0.7.3</version></dependency><!--布隆过滤器--><!-- https://mvnrepository.com/artifact/com.google.guava/guava --><dependency><groupId>com.google.guava</groupId><artifactId>guava</artifactId><version>27.1-jre</version></dependency>

<dependency><groupId>us.codecraft</groupId><artifactId>webmagic-core</artifactId><version>0.7.3</version></dependency>

数据库采用的是springdata JPA

数据库采用的是springdata JPA

配置文件

spring.jpa.database=mysql

spring.jpa.show-sql=false

spring.jpa.hibernate.ddl-auto=update

spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver

spring.datasource.url=jdbc:mysql://localhost:3306/movies?serverTimezone=UTC

spring.datasource.username=root

spring.datasource.password=123123代码是建立在spring boot之上的 需要开启定时任务

爬虫主要代码

@Component

public class JobProcesser implements PageProcessor {private String url = "https://search.51job.com/list/090200,000000,0000,38,9,99,JAVA,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=";//解析页面@Overridepublic void process(Page page) {//解析页面 获取招聘信息详情的url地址List<Selectable> list = page.getHtml().css("div#resultList div.el").nodes();

//判断获取到的集合是否为空 如果为空表示这是招聘详情页 如果不为空表示这是列表页if (list.size() == 0) {

// 如果为空 表示这是招聘详情页 解析页面获取招聘信息 保存数据this.saveJobInfo(page);} else {

// 如果不为空 解析详情页的url地址 放到任务队列中for (Selectable selectable:list){String jobInfoUrl = selectable.links().toString();page.addTargetRequest(jobInfoUrl); //把获取到的url地址放到任务队列中}

// 获取下一页的urlString bkurl = page.getHtml().css("div.p_in li.bk").nodes().get(1).links().toString();

// 把url放到任务队列中page.addTargetRequest(bkurl);}}//解析页面获取招聘信息 保存数据private void saveJobInfo(Page page) {

// 创建对象Job job = new Job();// 解析页面Html html = page.getHtml();// 获取数据job.setCompanyName(String.valueOf(html.css("div.cn p.cname a","text")));job.setCompanyAddr(Jsoup.parse(html.css("div.bmsg").nodes().get(1).toString()).text() );job.setCompanyInfo(Jsoup.parse(html.css("div.tmsg").toString()).text());job.setJobName(html.css("div.cn h1","text").toString());job.setJobAddr(html.css("div.cn p.msg","title").toString());job.setJobInfo(Jsoup.parse(html.css("div.job_msg").toString()).text());job.setUrl(page.getUrl().toString());job.setSalaryMax(Jsoup.parse(html.css("div.cn strong").toString()).text());job.setSalaryMin("");String time = html.css("div.cn p.msg","title").toString();job.setTime(time.substring(time.length()-8));

// 把结果保存起来page.putField("jobinfo",job);}private Site site = Site.me().setCharset("gbk").setTimeOut(10 * 1000) //超时时间.setRetrySleepTime(3000).setRetryTimes(3);@Overridepublic Site getSite() {return site;}

@Autowired

SpringDataPipeline springDataPipeline;// initialDelay 当任务启动后 等多久执行方法

// fixedDelay 每隔多久执行一次@Scheduled(initialDelay = 1000, fixedDelay = 100 * 1000)public void process() {Spider.create(new JobProcesser()).addUrl(url).setScheduler(new QueueScheduler().setDuplicateRemover(new BloomFilterDuplicateRemover(100000))).thread(10).addPipeline(springDataPipeline).run();}

}

其他代码就是对数据库的增删查改了