1. Introduction

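The original GAN (see the earlier post "GAN 简介与代码实战" on the 天竺街潜水的八角 CSDN blog) can in theory approximate the real data distribution completely, but it gives you little control over what is generated: small images come out fine, while large images may be incoherent. We therefore need to constrain the GAN so that it generates the images we actually want, and this is where CGAN comes in. For a more detailed treatment, see the paper: Conditional Generative Adversarial Nets.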

2. Model structure

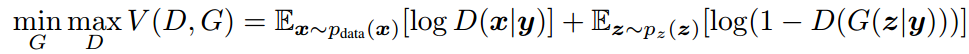

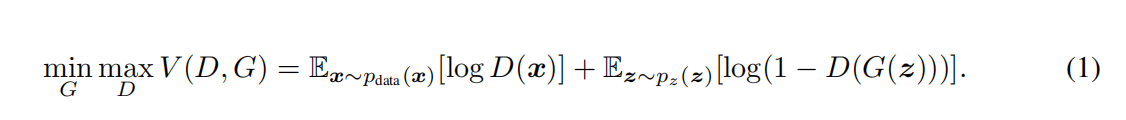

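Formula 1 is the loss function of the original GAN. Formula 2 differs from formula 1 only by the added condition y, which can be a class label, the part of an image that needs to be inpainted (for example, an animal), and so on.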
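For readers who cannot see the images, the two objectives can be written out as follows. This assumes the images show the standard forms from the GAN and CGAN papers; the symbol names (p_data, p_z, V) follow those papers rather than anything stated in this post.

```latex
% Formula 1: original GAN objective
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]

% Formula 2: CGAN objective, with both D and G conditioned on y
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x \mid y)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z \mid y))\big)\big]
```

The only change between the two is that D and G each receive y as an extra input.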

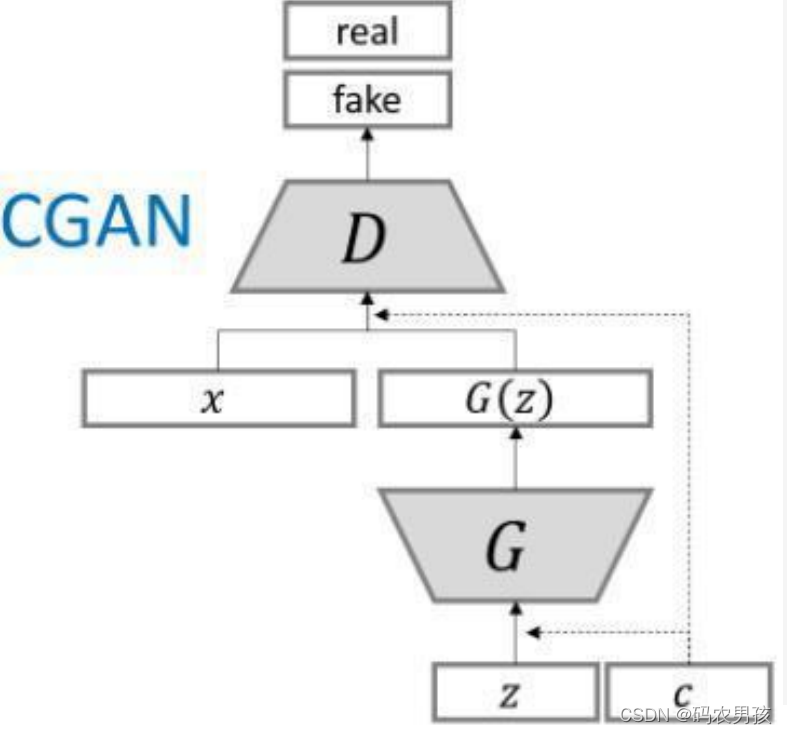

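From formula 2 alone, it is hard to picture how y is fed into the network as a condition. The architecture figure above makes it clear: the condition y is concatenated (concat) with the image to be judged. Note that the Keras code in section 4 uses a variant of this idea: it multiplies the input element-wise by a learned label embedding instead of concatenating.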
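The concatenation idea can be sketched in a few lines of plain Python. This is only an illustration, not the code used later in this post: the helper names `one_hot` and `concat_condition` are made up here, and the sizes (28x28 image, 100-dim noise, 10 classes) are assumed to match the MNIST setup in section 4.

```python
def one_hot(label, num_classes):
    """Return a one-hot list encoding of an integer class label."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def concat_condition(features, label, num_classes=10):
    """Append the one-hot condition y to a flat feature vector."""
    return list(features) + one_hot(label, num_classes)


# Discriminator input: a flattened 28x28 image plus the 10-way label.
flat_image = [0.0] * (28 * 28)
d_input = concat_condition(flat_image, label=3)

# Generator input: a 100-dim noise vector plus the same label.
noise = [0.0] * 100
g_input = concat_condition(noise, label=3)

print(len(d_input), len(g_input))  # 794 110
```

The first dense layer of each network then simply takes the wider vector, so nothing else in the architecture has to change.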

3. Model characteristics

Conditioning the model on extra information y makes it possible to direct the data generation process.

4. Code implementation (Keras)

    # Written against the Keras 2.x API (LeakyReLU(alpha=...), Dense(input_dim=...)).
    import os

    import numpy as np
    import matplotlib.pyplot as plt
    from keras.datasets import mnist
    from keras.layers import (Input, Dense, Reshape, Flatten, Dropout, multiply,
                              BatchNormalization, Embedding, LeakyReLU)
    from keras.models import Sequential, Model
    from keras.optimizers import Adam


    class CGAN():
        def __init__(self):
            # Input shape
            self.img_rows = 28
            self.img_cols = 28
            self.channels = 1
            self.img_shape = (self.img_rows, self.img_cols, self.channels)
            self.num_classes = 10
            self.latent_dim = 100

            optimizer = Adam(0.0002, 0.5)

            # Build and compile the discriminator
            self.discriminator = self.build_discriminator()
            self.discriminator.compile(loss=['binary_crossentropy'],
                                       optimizer=optimizer,
                                       metrics=['accuracy'])

            # Build the generator
            self.generator = self.build_generator()

            # The generator takes noise and the target label as input
            # and generates the corresponding digit of that label
            noise = Input(shape=(self.latent_dim,))
            label = Input(shape=(1,))
            img = self.generator([noise, label])

            # For the combined model we will only train the generator
            self.discriminator.trainable = False

            # The discriminator takes the generated image and its label as input
            # and determines whether the pair looks real
            valid = self.discriminator([img, label])

            # The combined model (stacked generator and discriminator)
            # trains the generator to fool the discriminator
            self.combined = Model([noise, label], valid)
            self.combined.compile(loss=['binary_crossentropy'],
                                  optimizer=optimizer)

        def build_generator(self):
            model = Sequential()
            model.add(Dense(256, input_dim=self.latent_dim))
            model.add(LeakyReLU(alpha=0.2))
            model.add(BatchNormalization(momentum=0.8))
            model.add(Dense(512))
            model.add(LeakyReLU(alpha=0.2))
            model.add(BatchNormalization(momentum=0.8))
            model.add(Dense(1024))
            model.add(LeakyReLU(alpha=0.2))
            model.add(BatchNormalization(momentum=0.8))
            model.add(Dense(np.prod(self.img_shape), activation='tanh'))
            model.add(Reshape(self.img_shape))
            model.summary()

            noise = Input(shape=(self.latent_dim,))
            label = Input(shape=(1,), dtype='int32')
            # Embed the label into a latent_dim vector and multiply it
            # element-wise with the noise to condition the generator
            label_embedding = Flatten()(Embedding(self.num_classes, self.latent_dim)(label))
            model_input = multiply([noise, label_embedding])
            img = model(model_input)

            return Model([noise, label], img)

        def build_discriminator(self):
            model = Sequential()
            model.add(Dense(512, input_dim=np.prod(self.img_shape)))
            model.add(LeakyReLU(alpha=0.2))
            model.add(Dense(512))
            model.add(LeakyReLU(alpha=0.2))
            model.add(Dropout(0.4))
            model.add(Dense(512))
            model.add(LeakyReLU(alpha=0.2))
            model.add(Dropout(0.4))
            model.add(Dense(1, activation='sigmoid'))
            model.summary()

            img = Input(shape=self.img_shape)
            label = Input(shape=(1,), dtype='int32')
            # Embed the label to the flattened image size and multiply it
            # element-wise with the image to condition the discriminator
            label_embedding = Flatten()(Embedding(self.num_classes, np.prod(self.img_shape))(label))
            flat_img = Flatten()(img)
            model_input = multiply([flat_img, label_embedding])
            validity = model(model_input)

            return Model([img, label], validity)

        def train(self, epochs, batch_size=128, sample_interval=50):
            # Load the dataset
            (X_train, y_train), (_, _) = mnist.load_data()

            # Configure input: scale pixels to [-1, 1] and add a channel axis
            X_train = (X_train.astype(np.float32) - 127.5) / 127.5
            X_train = np.expand_dims(X_train, axis=3)
            y_train = y_train.reshape(-1, 1)

            # Adversarial ground truths
            valid = np.ones((batch_size, 1))
            fake = np.zeros((batch_size, 1))

            for epoch in range(epochs):
                # ---------------------
                #  Train Discriminator
                # ---------------------

                # Select a random batch of images
                idx = np.random.randint(0, X_train.shape[0], batch_size)
                imgs, labels = X_train[idx], y_train[idx]

                # Sample noise as generator input
                noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

                # Generate a batch of new images
                gen_imgs = self.generator.predict([noise, labels])

                # Train the discriminator
                d_loss_real = self.discriminator.train_on_batch([imgs, labels], valid)
                d_loss_fake = self.discriminator.train_on_batch([gen_imgs, labels], fake)
                d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

                # ---------------------
                #  Train Generator
                # ---------------------

                # Condition on randomly sampled labels
                sampled_labels = np.random.randint(0, self.num_classes, batch_size).reshape(-1, 1)

                # Train the generator
                g_loss = self.combined.train_on_batch([noise, sampled_labels], valid)

                # Print the progress
                print("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]"
                      % (epoch, d_loss[0], 100 * d_loss[1], g_loss))

                # If at save interval => save generated image samples
                if epoch % sample_interval == 0:
                    self.sample_images(epoch)

        def sample_images(self, epoch):
            r, c = 2, 5
            noise = np.random.normal(0, 1, (r * c, self.latent_dim))
            # One sample for each digit 0-9
            sampled_labels = np.arange(0, 10).reshape(-1, 1)
            gen_imgs = self.generator.predict([noise, sampled_labels])

            # Rescale images from [-1, 1] to [0, 1]
            gen_imgs = 0.5 * gen_imgs + 0.5

            fig, axs = plt.subplots(r, c)
            cnt = 0
            for i in range(r):
                for j in range(c):
                    axs[i, j].imshow(gen_imgs[cnt, :, :, 0], cmap='gray')
                    axs[i, j].set_title("Digit: %d" % sampled_labels[cnt, 0])
                    axs[i, j].axis('off')
                    cnt += 1
            # Make sure the output directory exists before saving
            os.makedirs("images", exist_ok=True)
            fig.savefig("images/%d.png" % epoch)
            plt.close()
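The generator and discriminator above do not concatenate the label. Each passes it through an `Embedding` layer and multiplies the result element-wise with the noise (or the flattened image). Below is a rough plain-Python sketch of that one step. The helper names `make_embedding` and `multiply_condition` are hypothetical, and the embedding table is filled with fixed random numbers purely for illustration, whereas in Keras it is a trainable weight matrix.

```python
import random


def make_embedding(num_classes, dim, seed=0):
    """Stand-in for a trained Embedding layer: one dim-sized row per class."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dim)]
            for _ in range(num_classes)]


def multiply_condition(vector, label, embedding):
    """Look up the label's embedding row and multiply it element-wise."""
    row = embedding[label]
    if len(row) != len(vector):
        raise ValueError("embedding width must match the input vector")
    return [v * e for v, e in zip(vector, row)]


latent_dim, num_classes = 100, 10
embedding = make_embedding(num_classes, latent_dim)

rng = random.Random(1)
noise = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]

# The same noise vector conditioned on two different digits
g_input_7 = multiply_condition(noise, 7, embedding)
g_input_2 = multiply_condition(noise, 2, embedding)

print(len(g_input_7))  # 100
```

Unlike concatenation, this keeps the input width unchanged (100 for the generator, 784 for the discriminator), which is why the `Sequential` bodies in the code above still use `input_dim=self.latent_dim` and `input_dim=np.prod(self.img_shape)`.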